#1. A Lack of Indexes

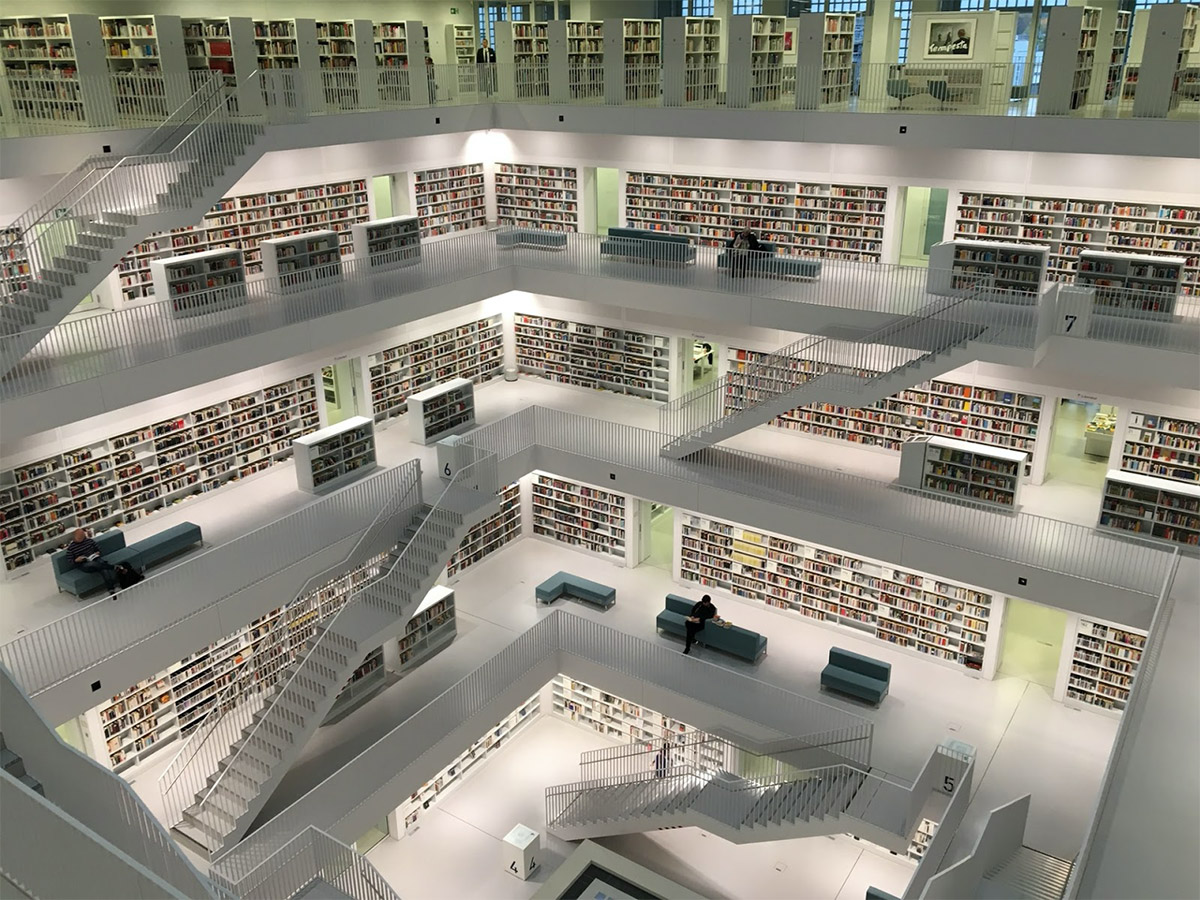

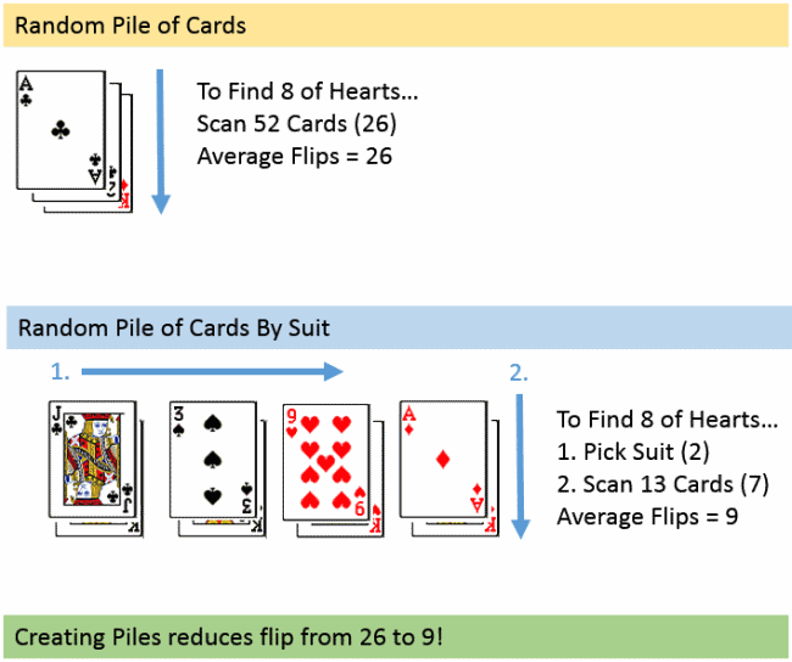

Database indexes let queries efficiently retrieve data from a database. These indexes work similarly to the index at the end of a book: you can look at 10 pages to find the information that you need rather than searching through the full 1,000 pages.

How Indexes Increase Efficiency – Source: Essential SQL

You should use database indexes in many situations, such as:

- Foreign Keys – Indexing foreign keys lets you efficiently query relationships, such as a “belongs to” or “has many” relationship.

- Sorted Values – Indexing columns that are frequently used for sorting makes it easier to retrieve sorted data, rather than sorting the rows again in every “order by” or “group by” query.

- Uniqueness – Indexing unique values in a database enables you to efficiently look up information and instantly validate uniqueness.

Database indexes can dramatically improve the performance of large and unorganized datasets, but that doesn't mean that you should use them in every situation. After all, database indexes are separate data structures that take up additional space and must be updated whenever the indexed columns are written, so they are not free by any means.
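As a rough illustration of the book-index analogy, the sketch below uses Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN` to compare how the same lookup is planned before and after an index is created. The `orders` table, its columns, and the index name `idx_orders_customer` are hypothetical. Other databases expose similar information through their own `EXPLAIN` commands, and the exact plan text varies between versions.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return the textual plan steps SQLite reports for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each row holds the human-readable description.
    return [row[-1] for row in rows]


def plans_before_and_after_index():
    conn = sqlite3.connect(":memory:")
    # Hypothetical table used only for this illustration.
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
    )
    conn.executemany(
        "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
        [(i % 100, i * 1.5) for i in range(1000)],
    )
    lookup = "SELECT * FROM orders WHERE customer_id = ?"

    before = query_plan(conn, lookup, (42,))  # expected: a full table scan
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    after = query_plan(conn, lookup, (42,))   # expected: a search using the index
    conn.close()
    return before, after


if __name__ == "__main__":
    before, after = plans_before_and_after_index()
    print("without index:", before)
    print("with index:   ", after)
```

The plan only shows how the database intends to run the query; measuring actual timings on realistic data volumes is still needed before deciding whether an index is worth its write and storage cost.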

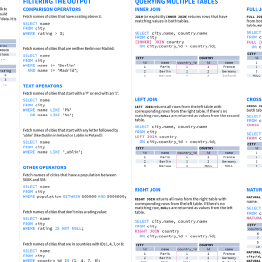

#2. Inefficient Querying

Most developers aren't database experts, and the code they write can lead to significant performance issues. One of the most frequent problems is the so-called N+1 query, an insidious cause of performance bottlenecks in web applications.

For example, suppose you want to query the database for a list of items.

You might write the following iterator in PHP:

The problem with this approach is that you're executing many separate queries rather than combining them into a single query. It's much faster to execute one query with 100 results than 100 queries with a single result.

The solution is batching queries:

N+1 queries can be difficult to detect because they don't become noticeable until the number of queries grows. You may be testing on a development machine where the loop issues only a handful of queries without an issue, but as soon as you push the code to production, you could see dramatic performance issues under production loads.
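A minimal Python sketch of the two patterns described above, using the built-in `sqlite3` module rather than PHP. The `posts` and `authors` tables and both function names are hypothetical; the point is only the number of round trips each approach makes.

```python
import sqlite3


def make_db(n=100):
    """Build an in-memory database with n authors and one post per author."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE posts (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
        """
    )
    conn.executemany(
        "INSERT INTO authors VALUES (?, ?)",
        [(i, f"author-{i}") for i in range(1, n + 1)],
    )
    conn.executemany(
        "INSERT INTO posts VALUES (?, ?, ?)",
        [(i, i, f"post-{i}") for i in range(1, n + 1)],
    )
    return conn


def titles_n_plus_one(conn):
    """One query for the list, then one extra query per row (N+1)."""
    queries = 1
    posts = conn.execute("SELECT author_id, title FROM posts ORDER BY id").fetchall()
    result = []
    for author_id, title in posts:
        row = conn.execute(
            "SELECT name FROM authors WHERE id = ?", (author_id,)
        ).fetchone()
        queries += 1
        result.append((title, row[0]))
    return result, queries


def titles_batched(conn):
    """The same data fetched with a single joined query."""
    rows = conn.execute(
        """
        SELECT p.title, a.name
        FROM posts AS p
        JOIN authors AS a ON a.id = p.author_id
        ORDER BY p.id
        """
    ).fetchall()
    return rows, 1


if __name__ == "__main__":
    conn = make_db()
    print("N+1 queries issued:    ", titles_n_plus_one(conn)[1])
    print("batched queries issued:", titles_batched(conn)[1])
```

With an in-memory database the difference in wall-clock time is small; over a network connection each extra round trip adds latency, which is where the pattern becomes expensive.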

#3. Improper Data Types

Databases can store nearly any type of data, but it's important to choose the correct data type for the job to avoid performance issues. For instance, it’s usually a good idea to select the data type with the smallest size and best performance for a given task.

There are a few best practices to keep in mind:

- Size – Use the smallest data size that will accommodate the largest possible value. For example, you should use `tinyint` instead of `int` if you're only storing small values.

- Keys – Primary keys should use simple `integer` or `string` data types rather than `float` or `datetime` to avoid unnecessary overhead.

- NULL – Avoid NULL values in fixed-length columns since NULL consumes the same space as an input value would. These values can add up quickly in large columns.

- Numbers – Avoid storing numbers as strings even if they aren't used in mathematical operations. For example, a ZIP code or phone number integer is smaller than a string.
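To put a rough number on the size guideline above, the following back-of-the-envelope sketch compares the raw payload of a 1-byte and a 4-byte integer column. It assumes fixed-width storage and ignores per-row overhead, alignment, compression, and indexes, all of which vary by database engine, so treat the result as an order-of-magnitude estimate only.

```python
import struct

# Standard fixed-width sizes in bytes: "b" is a 1-byte signed integer,
# "i" is a 4-byte signed integer when the "<" (standard size) prefix is used.
TINYINT_BYTES = struct.calcsize("<b")
INT_BYTES = struct.calcsize("<i")


def column_bytes(rows, bytes_per_value):
    """Raw payload size of one fixed-width column across all rows."""
    return rows * bytes_per_value


def bytes_saved(rows):
    """Payload saved by storing a small value in 1 byte instead of 4."""
    return column_bytes(rows, INT_BYTES) - column_bytes(rows, TINYINT_BYTES)


if __name__ == "__main__":
    rows = 10_000_000
    print(f"{bytes_saved(rows) / 1_000_000:.0f} MB saved across {rows:,} rows")
```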

There are also several complex data types that can add value in certain circumstances but come with gotchas.

For example, PostgreSQL’s ENUM data type makes it easy to include an enumerable list in a single database item. While ENUM is great for reducing join complexity and storage space, long lists of values, or variable values, can introduce performance issues since they make database queries a lot more expensive.

How to Spot Problems

The problem with inefficient database queries is that they’re difficult to detect until it's too late. For example, a developer may experience very little lag with a small sample database on a local machine, but a production-level number of simultaneous queries on a much larger database could grind the entire application to a halt.

Load tests are the best way to avoid these problems by simulating production-level traffic before pushing any code to production users. While most load tests simulate traffic using protocols, the best platforms spin up real browser instances for the most accurate performance results.
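The idea of simulating concurrent traffic can be sketched in a few lines of Python. This is a deliberately simple, function-level harness and not the real-browser testing described above: `action` is a hypothetical callable standing in for one request, and the request count, concurrency, and nearest-rank percentile method are assumptions chosen for illustration.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(action):
    """Run one request and return how long it took in seconds."""
    start = time.perf_counter()
    action()
    return time.perf_counter() - start


def run_load(action, total_requests=200, concurrency=20):
    """Issue total_requests calls across a thread pool; return sorted latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(lambda _: timed_call(action), range(total_requests)))
    return sorted(latencies)


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


if __name__ == "__main__":
    # Stand-in workload; replace with a call to the system under test.
    latencies = run_load(lambda: time.sleep(0.01), total_requests=100, concurrency=10)
    print(f"p95 latency: {percentile(latencies, 95) * 1000:.1f} ms")
```

A pipeline step could compare the reported percentile against an agreed threshold and fail the build when it is exceeded.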

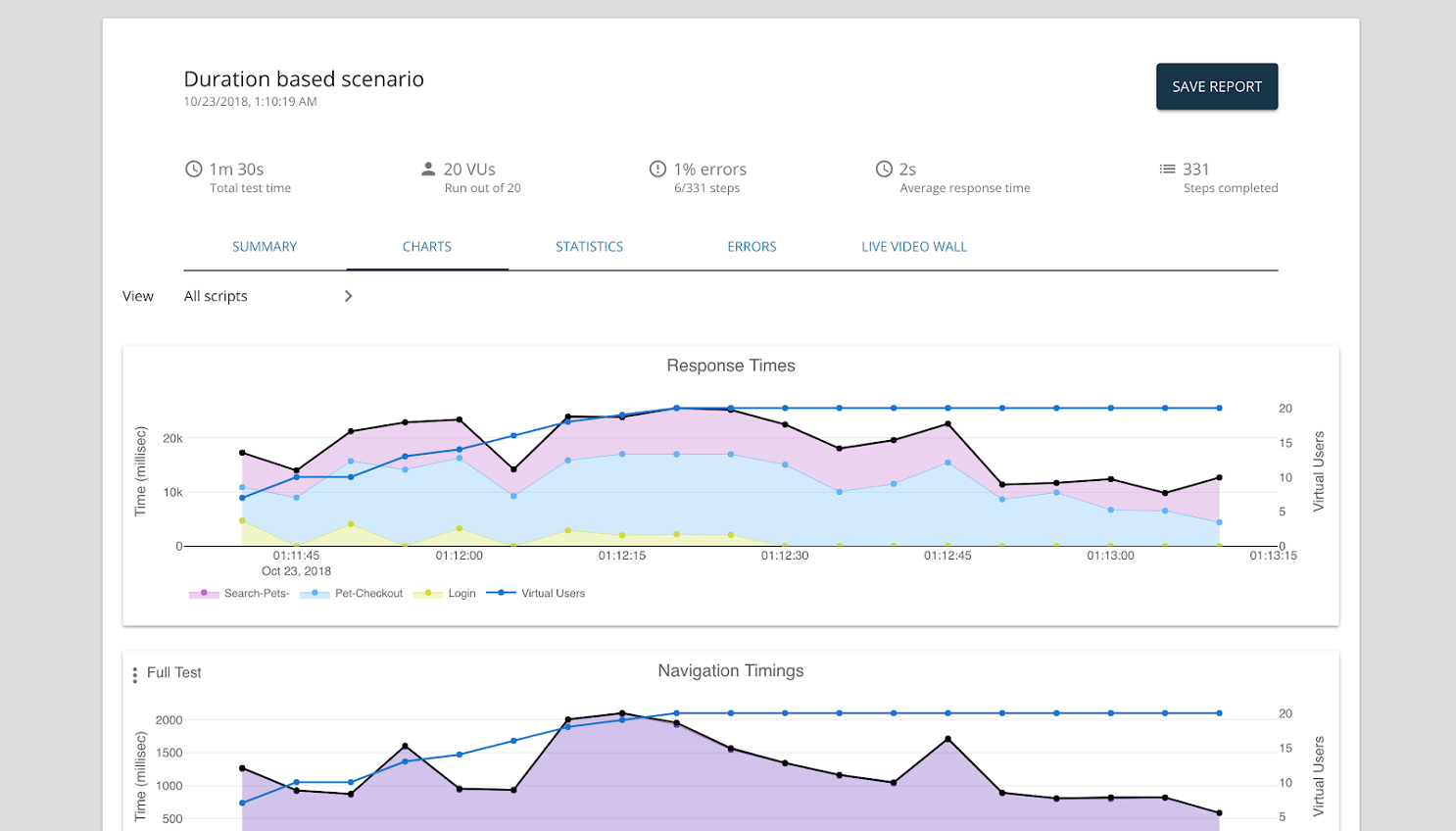

LoadNinja’s In-Depth Analytics – Source: LoadNinja

LoadNinja enables you to record and instantly replay load tests in minutes rather than hours. These tests are run across tens of thousands of real browsers in the cloud to generate a realistic load. Engineers can then access step times, browser resources, and other actionable metrics.


Load tests are only helpful if they're run in advance of a production deployment. Using a continuous integration (CI) and deployment (CD) process, you can ensure that load tests run with each merge into the master or pre-production branch well before the final push to production.

It’s an Easy Fix

Database performance issues are a common cause of web application bottlenecks. Most of these problems boil down to a lack of indexing, inefficient queries, and the misuse of data types, which can all be easily fixed. The challenge is identifying them before they reach production.

Load testing is the best way to ensure database bottlenecks – and other performance issues – are identified before they reach production users. Using this solution, you can easily incorporate these tests into your CI/CD pipeline and make fixes before they become costly problems.


5 Common Database Management Challenges & How to Solve Them

Since nearly every application or tool in your tech stack connects to a database, it’s no surprise that 57% of organizations find themselves constantly managing database challenges.

Storing and accessing huge volumes of data poses problems when teams are responsible for managing the security, reliability, and uptime of multiple databases in a hybrid IT environment on top of their day-to-day tasks. Yet, these teams often run into the same issues across their tech stack and may not even recognize it.

Here are the 5 most common database challenges your team should watch out for and how to solve them.

1. Managing Scalability with Growing Data Volumes

As data volumes continue growing at an average of 63% per month, organizations often don’t have their databases set up to effectively scale.

Not only are individual tools and applications delivering larger datasets into databases, but there’s also a good chance your data is being updated and queried more frequently. Your queries are getting more complex, your data is more distributed, and you’re defining more relationships between your data. Many relational databases aren’t designed to support all of these factors.

Even if your database is designed to scale with your data needs, you may need to pay to manage and query your increasing amount of data. Vertical scaling can only go so far before memory upgrade costs become untenable.

Something all organizations should consider is whether you’re actually using the data you’re storing. Create retention policies that reduce the amount of data you store as you scale. For example, you can decrease the amount of data you store by erasing transient data in permanent storage, allowing you to better leverage the storage you have available.
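A retention policy of this kind can be as simple as a scheduled delete. The sketch below uses Python's built-in `sqlite3` module; the function name, the table and column names passed to it, and the assumption that timestamps are stored as ISO-8601 UTC strings in one consistent format (so that string comparison matches chronological order) are all hypothetical.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def purge_expired(conn, table, timestamp_column, keep_days, now=None):
    """Delete rows older than keep_days and return how many were removed."""
    now = now or datetime.now(timezone.utc)
    cutoff = (now - timedelta(days=keep_days)).isoformat()
    # Identifiers cannot be bound as parameters, so only pass trusted,
    # hard-coded table and column names to this function.
    cursor = conn.execute(
        f"DELETE FROM {table} WHERE {timestamp_column} < ?", (cutoff,)
    )
    conn.commit()
    return cursor.rowcount
```

For example, `purge_expired(conn, "session_events", "created_at", keep_days=30)` would remove transient session rows older than 30 days from a hypothetical `session_events` table.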

2. Maintaining Database Performance

Slow database performance isn’t just inconvenient for your team; it can also stall applications and impact your end-users. Providing the best experience for employees and customers is a must, so it’s crucial to solve database performance issues quickly.

Beyond scalability issues, high latency in databases is often related to slow read/write speeds. Caching to a remote host is one solution to support scaling your databases that don’t need to be updated frequently. This is a great way to offload the database, particularly if some of your data only needs to be accessed in read-only mode.
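The caching idea can be sketched as a small read-through cache with a time-to-live. This in-process version only stands in for a cache on a remote host; the class name, the `loader` callable (which would run the real database query), and the TTL value are assumptions for illustration.

```python
import time


class TTLCache:
    """Read-through cache: serve stored values until they are ttl_seconds old."""

    def __init__(self, loader, ttl_seconds, clock=time.monotonic):
        self._loader = loader      # hypothetical function that queries the database
        self._ttl = ttl_seconds
        self._clock = clock        # injectable so the expiry logic can be tested
        self._entries = {}         # key -> (time stored, value)

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]        # fresh hit: the database is not touched
        value = self._loader(key)  # miss or expired: go to the database
        self._entries[key] = (now, value)
        return value
```

This suits data that is read often and changes rarely; frequently updated data needs an invalidation strategy that a plain TTL does not provide.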

You should also focus on improving query performance. In some cases, that may involve creating indexes to retrieve data more efficiently. In others, that may involve leveraging more skilled employees with more experience working with databases. Otherwise, inexperienced users can create unexpected performance bottlenecks.

3. Database Access Concerns

Even if your organization sets up and regularly monitors database security, you may continue running into security issues based on your access permissions.

Embracing a least-privilege approach is a must if you’re experiencing database security issues. Reducing the number of people with access using role-based access control, attribute-based access control, or a combination of the two reduces the likelihood of insider threats, phishing or malware attacks, and human error that impacts your data quality. Limit access to users with the right skills to maintain peak performance.
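As a minimal sketch of role-based, deny-by-default access checks, the snippet below maps roles to the database and action pairs they may use. The role names, database names, and the `is_allowed` helper are hypothetical and do not represent any particular access platform.

```python
# Hypothetical role definitions: each role lists the (database, action)
# pairs it is explicitly granted. Anything not listed is denied.
ROLE_PERMISSIONS = {
    "analyst": {("sales_db", "read")},
    "developer": {
        ("sales_db", "read"),
        ("staging_db", "read"),
        ("staging_db", "write"),
    },
    "dba": {
        ("sales_db", "read"),
        ("sales_db", "write"),
        ("staging_db", "read"),
        ("staging_db", "write"),
    },
}


def is_allowed(roles, database, action):
    """Allow only if at least one of the user's roles grants the pair."""
    return any(
        (database, action) in ROLE_PERMISSIONS.get(role, set()) for role in roles
    )
```

Unknown roles and unlisted actions fall through to a denial, which is the behaviour a least-privilege approach relies on.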

Thankfully, you don’t have to manage access independently for every database. A robust infrastructure access platform can help you manage what access is appropriate across multiple databases based on roles and functions.

4. Misconfigured or Incomplete Security

There’s no doubt that misconfigured security poses a significant risk to databases, particularly in cloud environments. Often, incomplete cloud security without encryption can expose your data to external attacks. Yet, when you’re managing multiple databases, it’s easy to overlook correct configuration or security patches.

Newly deployed or updated databases are particularly at risk for attacks. Regularly monitoring and upgrading databases can enhance security, but those efforts fall short if your database isn’t properly encrypted. Some databases have encryption on by default, so query your database to confirm that either transparent data encryption (TDE) or tablespace encryption (TSE) is enabled.

Plus, poor database configuration or implementation can lead to both intentional data loss—through unauthorized access or exporting—and unintentional data loss through corruption or incomplete logs. Activating logging features helps organizations keep better track of their data, discover and triage data issues, and remediate lost data incidents. Tracking data movement, traces, and logs with full-stack observability tools gives your team the visibility needed to monitor databases and identify threats before sensitive data is at risk.

5. Data Integration and Quality Problems

Without data standardization, your organization can experience integration issues within your database. Finding and aggregating data for queries is especially difficult when data types and formats aren’t aligned across all sources. Plus, data silos across your organization may leave datasets incomplete, resulting in poor queries that both create performance issues and waste company time, resources, and money.

Not all data integration tools are created equal. Leverage platforms and tools that let your organization create rules to standardize your data for each source before it’s integrated into your data pipeline. From there, use the same standardization processes for the existing data in your database and employ automation to limit redundant or incomplete data.

It’s also important to ensure all your sources are integrated seamlessly and regularly into your database. Automation plays a crucial role in data integration, and many tools can push data into your database in real time or at frequent intervals. However, you may still want to set up integration frequency for different sources, since real-time updates for all data can impact performance if your solution isn’t prepared to support your data.
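Per-source standardization rules might look like the following sketch. The source names, field names, and date formats are hypothetical; the point is that each source declares how its records map onto one shared shape before they enter the pipeline.

```python
from datetime import datetime

# Hypothetical rules: where each source keeps its fields and how it formats dates.
SOURCE_RULES = {
    "crm": {
        "email_field": "Email",
        "date_field": "SignupDate",
        "date_format": "%d/%m/%Y",
    },
    "billing": {
        "email_field": "email_address",
        "date_field": "created",
        "date_format": "%Y-%m-%d",
    },
}


def standardize(source, record):
    """Map a raw record from a known source onto the shared schema."""
    rule = SOURCE_RULES[source]
    email = record[rule["email_field"]].strip().lower()
    signup = datetime.strptime(
        record[rule["date_field"]].strip(), rule["date_format"]
    ).date()
    return {"email": email, "signup_date": signup.isoformat()}
```

Records that are missing a field or carry an unparseable date raise an error here, which makes incomplete data visible at the boundary instead of inside later queries.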

Managing Database Challenges with Confidence

With more data comes more database challenges. But, with the right tools and preparation, your organization doesn’t have to constantly focus on mitigating database issues. For instance, adopting a modern access control solution like strongDM, where workflows are streamlined for DBAs or developers, will go a long way towards ensuring easy, secure access to databases.

At the end of the day, by overcoming these five common challenges, your organization can keep data quality high, improve its security posture, and maintain data accessibility for your organization.


Problem solving database or data warehouse production issues with PRIDESTES DEPLOY Principle

This article talks about a golden rule called PRIDESTES DEPLOY to sort out any SQL BI (business intelligence) related issue and it is particularly useful for resolving database, data warehouse or even reporting related issues where the database is built through modern tools like Azure Data Studio.

I am coining a new term perhaps never used before, but it is practiced generally in almost every IT environment where teams are busy resolving issues round the clock.

The PRIDESTES DEPLOY principle is not the solution itself; it is rather a key behind a solution that may be related to any sensitive environment, including the Production environment.

About PRIDESTES DEPLOY

After actively taking part in numerous professional scenarios to resolve production issues in database or data warehouse business intelligence solutions, I have been inclined to formalize the principle that has helped us and can help other professionals and teams. Many already use it, so this is just a polite reminder.

I call it PRIDESTES DEPLOY; someone else may call it something different. As long as we follow it in accordance with its essence, it is a fast-track way to find either a solution or the cause that can facilitate the solution.

Let us explore this in detail.

What is PRIDESTES DEPLOY?

PRIDESTES DEPLOY is an acronym and a principle that can be adopted in order to take steps in resolving an issue related to any IT framework including database or data warehouse-related issues.

What does PRIDESTES DEPLOY Stand for?

PRIDESTES DEPLOY stands for the following things:

P: Preliminary Analysis

R: Replicating the Problem

I: Identifying the cause

DES: Designing the Solution/Fix

TES: Testing the Solution/Fix

DEPLOY: Deploying the Solution/Fix

Preliminary Analysis (P)

As the name indicates any problem-solving story begins with getting to know the IT or SQL/BI-based problem and the best way to understand the nature of the problem and factors surrounding it is to analyze the issue.

Preliminary analysis suggests that the first step is always to analyze the problem domain by doing some checks to understand exactly what the problem is.

So, the P in PRIDESTES DEPLOY encourages the solution developer to start their work by first analysing the problem itself, and this requires performing preliminary analysis.

Please remember that the most important part of preliminary analysis is analysing the source that reported the problem, such as a database issue reported by a customer, to gather as much information as possible. However, this phase is quite challenging.

In a typical environment, the source reporting the problem can be one of the following:

- A customer can simply raise a support ticket about a database/data warehouse/reporting problem they are facing

- Post-deployment checks by you or other team members can sometimes reveal a potential issue

- Any automated checks can also raise an issue provided they are configured or designed to contain enough information for the preliminary analysis by the respective developer/analyst

No matter which source reported the issue, your preliminary analysis should always target the most important piece of information found in the reported problem in order to gather precise information and speed up the problem-solving task.

There is one more important point, which concerns the assignment of the preliminary analysis task. For example, a Power BI related issue must first be assigned to the Power BI team of the organization so that they can pass it on to the relevant expert, often the developer, for preliminary analysis.

Please remember, the preliminary analysis must focus on the following:

- What is the problem?

- A rough idea of solving the problem

- Information about next steps

- Information about the best person who can resolve the issue

Professional Tip

Finally, if you are an automation fan, I suggest that any work item raised by the customer should automatically trigger preliminary analysis once it is assigned to the most appropriate team. Automating the preliminary analysis further, for example by analysing the problem statement and extracting more information, is a plus but not always required.

Replicating the Problem (R)

The R in PRIDESTES DEPLOY refers to “Replicating the Problem”. Now, this is a very crucial step and should never be skipped, ignored, or underestimated unless you have a genuine reason to do so.

In the world of problem solvers, replicating a problem successfully is half the solution, and sometimes it is the solution: once you know what went wrong, you just need to build the fix. However, please stay focussed, as replicating a problem, especially a production issue, is not child’s play; a very solid understanding of and experience in the related field is required to replicate a reported problem.

For example, if a customer reports an error in a Power BI report then you have to replicate the exact error to understand what might have caused the error.

Replicating a database or data warehouse related issue requires a fair amount of effort and time, even when you have done your homework, because you need some means of replicating the problem. Whether a production issue should be replicated in Production (Prod) or in a QA or Test environment is a matter of much discussion.

Please bear in mind that sometimes Production issues can only be replicated in Production, which is why they are rightly called Production issues. In that case, please have a solid strategy for replicating the issue.

For example, if a customer says their record has failed to save in the database, then you have to try to save the exact record (row) into the database and prepare to see the error. Also keep an eye on the underlying database structure and the objects (procedures) taking part in saving the record, because the problem often lies there.
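Replaying the exact failing row against a test copy of the table can be sketched as follows, using Python's built-in `sqlite3` module. The `customers` table, its constraints, and the helper names are hypothetical; in practice the statement would be whatever the application or stored procedure actually runs.

```python
import sqlite3


def make_test_db():
    """Create a test copy of the (hypothetical) table involved in the report."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE)"
    )
    conn.execute("INSERT INTO customers VALUES (1, 'ann@example.com')")
    conn.commit()
    return conn


def try_save(conn, row):
    """Attempt the reported insert; return None on success or the error text."""
    try:
        with conn:  # commits on success, rolls back if the insert fails
            conn.execute(
                "INSERT INTO customers (id, email) VALUES (:id, :email)", row
            )
        return None
    except sqlite3.IntegrityError as exc:
        return str(exc)


if __name__ == "__main__":
    conn = make_test_db()
    # The exact record the customer reported as failing to save.
    print(try_save(conn, {"id": 2, "email": "ann@example.com"}))
```

The captured error text points at the constraint involved, which narrows down which part of the underlying structure to inspect next.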

Please remember that, for Power BI error handling, it is always handy to have a live test workspace with a test user to replicate any problem reported by customers.

Identifying the cause (I)

Now, what is a problem without identifying the cause?

For example, suppose a team informs you (a self-discovered production error) that for some strange reason duplicates are generated in the final FACT table. Unless you know the exact reason, removing the duplicates from the current result set is not going to keep the system calm, as this may happen again.

That’s why we must identify the actual cause of the issue to resolve it completely, and the preceding steps (preliminary analysis and replicating the problem) can help a lot in identifying the cause. In my opinion, this is a very demanding step, as it can test the patience of the developer assigned to resolve the production issue, who may have to run numerous tests to find the exact cause.
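A first step in the duplicate scenario above is usually to find which business keys are duplicated, since that often hints at the load step responsible. The sketch below uses Python's built-in `sqlite3` module; the `fact_sales` table and the choice of `order_id` plus `product_id` as the business key are assumptions for illustration.

```python
import sqlite3

# Group by the (assumed) business key and keep only keys that occur more than once.
DUPLICATE_CHECK = """
    SELECT order_id, product_id, COUNT(*) AS copies
    FROM fact_sales
    GROUP BY order_id, product_id
    HAVING COUNT(*) > 1
    ORDER BY order_id, product_id
"""


def find_duplicates(conn):
    """Return (order_id, product_id, copies) for every duplicated key."""
    return conn.execute(DUPLICATE_CHECK).fetchall()


def make_sample_fact_table():
    """Small hypothetical fact table with one duplicated row."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fact_sales (order_id INTEGER, product_id INTEGER, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?)",
        [(1, 10, 9.99), (2, 10, 9.99), (2, 10, 9.99), (3, 11, 4.50)],
    )
    return conn
```

Knowing which keys repeat does not explain why, but it gives the tests that follow a concrete set of rows to trace back through the load process.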

However, experience and a solid background in the area (such as databases, data warehouses or reporting) can be handy here. If you have spent over a decade resolving such issues, identifying the cause may turn out to be a piece of cake, but every Production issue can be unique and challenging in itself, even if you have seen something similar before and know the cause.

Please remember that being fully aware of the underlying architecture and the processes involved in a reporting solution (when handling a reporting issue reported by the customer) can help you identify the cause of an issue, although you should be prepared to run a couple of stringent tests to get to the point.

Designing the Solution/Fix (DES)

Finally, designing the solution or fix is proof that you have worked hard in the previous steps.

You cannot perform well in this phase if you have not done much in the previous phases. That is why, in order to design the solution, you must have done the right amount of preliminary analysis, followed by replicating the problem and identifying the cause.

If you know the cause, you can fix the problem, and to fix the problem, you design the solution.

Sometimes designing the solution is as simple as modifying a small stored procedure so that it behaves correctly for the special use case that caused the system error. You modify the stored procedure in a way that addresses the reported issue without breaking the existing code.

On some other days, designing a solution can be adding a new data workflow to handle archiving requirements that are not correctly handled by the existing data warehouse architecture.

Designing the fix or solution in a traditional professional problem-solving scenario ultimately means developing it with the help of tools and technologies. It also requires you to define how your solution/fix fits into the current database or data warehouse architecture, including the data movement activities needed to achieve the desired goal. That may mean using some form of data integration service, such as Azure Data Factory or Integration Services Projects (SSIS Packages), to extract, transform and load data.

Testing the Solution/Fix (TES)

Designing the solution is not enough; it has to be tested against its requirements. Testing is a skill in itself, and I have often seen developers spot an issue in their code even before a tester picks it up. However, testing is also a very broad area.

You have to be pretty specific in this step. It is somewhat similar to unit testing, provided you understand that the unit being tested can be as big as a report showing wrong figures, which you then narrow down to the object (table) behind that error or the workflow (data loading activity) that keeps the object active.

Testing your fix simply means checking from the user perspective whether the problem has been resolved. You can do your part of the testing in this step and then hand it over to the team of professionals responsible for testing the whole module.

If it is a small issue, then I suggest the person who is developing the fix should test it first, provided they understand the bigger picture. For example, sometimes a business intelligence developer/analyst is smart enough to know that fixing this piece of the puzzle solves the mystery, even though they are not completely aware of all the other components that interact with the data warehouse, such as data models, Power BI reports or real-time analysis in Excel.

Deploying the Solution/Fix (DEPLOY)

Finally, you have to deploy the solution or the fix that you have worked so hard on.

Again, a deployment can range from a small piece of code, such as a stored procedure modification, to a fully functional process consisting of several objects and sub-processes, but the ultimate goal remains a smooth deployment to the Production server.

Considering modern-day tools and technologies, the deployment can be completely automated, for example with Azure DevOps Build and Release pipelines. However, nothing stops you from using a simpler or even manual way of deploying an object to the Production system (with the help of the teams responsible for finally pushing it to the server), as long as you have a predefined strategy that is acceptable, workable and shareable.

If your data warehouse (database) is managed by a SQL database project, then you can simply deploy a small fix, such as a modified stored procedure or view that addresses the issue, to Dev (the development database) using a publish script or even a schema compare tool. From there (after successful unit testing), you can let the next team handle it, or you can also deploy to Test and UAT yourself. This applies whether you have a very sophisticated, fully managed deployment strategy or just a manual checklist that is shared with other team members and is as solid as an automated deployment strategy.

I am not against automated builds and releases, but I don’t recommend that a sole developer overcomplicate supporting tasks rather than focussing on the main objective, which is expected to be delivered as soon as possible.

A Word of Advice

We all know that practice makes perfect, but I read in an interesting article that sometimes practice does not make perfect: if you are not doing things correctly, then you are mastering doing things incorrectly.

In other words, surround yourself with experts in your field and build a knowledge-based understanding of your area of expertise through knowledge-sharing sessions or reputable courses. Find time to learn from credible sources and to implement what you learn. Most importantly, be aware of standard practices and of how to encourage others to follow them.

Once you have a solid foundation and experience, you can find your own ways. Feel free to experiment with PRIDESTES DEPLOY and amend it according to your level of comfort, but without compromising the standards. Please keep in mind that without dedication and commitment the journey is difficult, so be proactive and be ready to improve and to embrace new technologies and tools that can help you solve Production issues. At the same time, work on the methodology, even if it is just a few dry steps that help you, your team and your organization solve real-time production issues efficiently and effectively.


DBmarlin Blog


Top 10 causes of database performance problems and how to troubleshoot

When a client tells you that their database is running slowly, it can be impossible to know where to start. It’s just like the old joke when someone asks for directions and is given the answer that ‘you don’t want to start here!’—you really don’t want to start diagnosing this issue without knowing where to look.

Ideally, you’d start with a monitoring tool, such as DBmarlin, already in place that has been building up a history of performance data so you can see the trend over time. This means that you can start to overlay today’s performance with your baseline performance and start to unpick what today’s issues really are.

Here are my top 10 things to look for:

- Which users are affected? - Is this a single user issue or are all users affected?

- Changes - Have there been any software releases or schema changes on the system today?

- Disk space - Are any of your filesystems or drives full?

- Redo/Archive logs - Has your log production jumped?

- CPU - Has your database CPU consumption jumped?

- Disk I/O - Is your database suddenly doing far more I/O than before?

- SQL - What are your top resource-consuming queries?

- Locks - Have you any blocking locks?

- Object sizes - Have any key tables or indexes suddenly jumped in size?

- Sessions - Has the number of sessions suddenly spiked?

Taking these in turn, let’s expand a bit.

Which users are affected? Is this a single user issue or are all users affected?

- If this is a single user problem, not only is it easier to identify what is going on by talking to them, but also in reality this is a lower priority than something affecting all users. So, prioritise accordingly and grab a nice cup of coffee if you can. ☕️

- If all users are affected, then it’s a much bigger problem and you might be firefighting the problem under pressure. In this case, ensuring that you have all the information readily available and presented clearly before the event will ease the stress.

- DBmarlin lets you drill down into Users, Clients, Sessions, Programs and Database or Schema so you can easily see who is affected.

Releases - have there been any software releases or changes on the system today?

- Knowing about the software releases is key to understanding variable system performance, especially in today’s world of agile and rapid release cycles.

- Have any new database objects been dropped in?

- Have your key queries changed execution paths because of new objects or changes in object size? (i.e. have 3,000 row tables become 3,000,000 overnight?)

- DBmarlin can help you here with its change tracking function showing changes along the timeline on the landing page for your database.

Disk - are any of your filesystems or drives full?

- It may seem obvious, but servers do not react well to having key filesystems full, and neither do databases. This doesn’t just include tablespace and transaction log areas; audit file space is also required, and trace files may reveal that the app is suddenly core dumping and causing unexpected issues.

- Server root mounts and drives

- Software mounts and drives

- Online transaction/redo log areas

- Archived transaction/redo log areas

- Database Temporary locations

- Audit trail locations

- Trace file locations
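A quick way to sweep the locations listed above is a small script built on Python's standard `shutil.disk_usage`. The function names and the 90% threshold are assumptions, and the paths you pass in are whatever mount points or drives your database actually uses.

```python
import shutil


def usage_percent(path):
    """Percentage of the filesystem containing path that is in use."""
    usage = shutil.disk_usage(path)
    if usage.total == 0:
        return 0.0
    return 100 * usage.used / usage.total


def nearly_full(paths, threshold_percent=90):
    """Return (path, percent used) for every location at or above the threshold."""
    report = []
    for path in paths:
        percent = usage_percent(path)
        if percent >= threshold_percent:
            report.append((path, round(percent, 1)))
    return report


if __name__ == "__main__":
    # Replace "." with your data, log, temp, audit and trace locations.
    for path, percent in nearly_full(["."], threshold_percent=0):
        print(f"{path}: {percent}% used")
```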

Transaction logs - has your log production jumped?

- An unexpected increase in transaction load can stress the transaction log system and cause issues with archived/saved logs too.

- Oracle Redo/Archive logs

- MS-SQL Transaction Log

- PostgreSQL WAL logs

- MySQL binary logs

- Ensure your logs are sized correctly for the transaction throughput so that log switches do not affect your system.

CPU - has your database CPU consumption jumped?

- Knowing your system’s performance during key times of day is critical to diagnosing issues.

- DBmarlin’s Time Comparison feature allows you to easily see Total DB Time differences between two periods. By default it will compare the last hour with the previous one allowing you to see if something has just happened.

Disk - is your database suddenly doing far more I/O than before?

- Again - having an historical view is key to troubleshooting.

- DBmarlin’s Time Comparison feature allows you to easily see the Top Waits in your database and what has changed. Again - by default, it will compare the last hour with the previous one allowing you to see if something has just happened.

SQL - what are your top consuming queries?

- Being able to quickly determine what your top resource-consuming queries are is critical to getting to the bottom of that issue.

- DBmarlin shows you a graphical representation of what is occurring now on the landing page of your database and, once again, the Time Comparison feature allows you to pick a baseline time, such as this hour last week, when everything was peachy.

- Blocking locks can quickly hang up your key applications.

- DBmarlin can help start you in the right direction by showing the wait time on the Wait Events pie chart on your database’s landing page.

Object sizes - have any key tables or indexes suddenly jumped in size?

- Keeping a record of the size of your database objects is a subject all on its own. Being able to track them over time allows you to see if there has been a sudden increase, altering your execution plans and killing the performance of your application’s key queries.

- DBmarlin’s Change History facility allows you to see if a release could be causing your current issue. After selecting the change you are interested in, examine the Statements to find the ones with multiple execution plans, and use the Execution Plans drop-down to compare the plans.

- Being able to see at a glance if you are being hit by an application server that has an issue is of real value here.

- On DBmarlin’s database landing page, the DB Time will be the first thing you see in the top left corner indicating the trend. If you see it rocketing upwards, change the data of the pie chart to Sessions and Clients to identify the source of the issue.

So in summary, troubleshooting is rarely a simple exercise, but it can be made a lot easier if you have a structured approach supported by a tool which visualises database performance. Having been involved in many major incidents over the years, I have seen that the technical teams will usually communicate well, reach a common understanding of the issues and decide on next steps. Even so, I have often found it very useful to have pictures to show ‘The Management’ as a way of increasing their understanding of complex issues and gaining their buy-in as to where focus should be put.

As with standalone tuning exercises, don’t try to boil the ocean when fixing things - you are here to restore service. Having an historical comparison available to show you ‘what good looks like’ is incredibly useful here.

And - when you’ve restored service - stop!

Ready to try DBmarlin?

If you would like to find out more about DBmarlin and why we think it is special, try one of the links below.

- Get hands-on without an installation at play.dbmarlin.com

- Download DBmarlin from www.dbmarlin.com, with one FREE standard edition license, which is free forever for 1 target database.

- Follow the latest news on our LinkedIn Community at linkedin.com/showcase/dbmarlin

- Join our community on Slack at join-community.dbmarlin.com

27 Sep 2021

- Database Performance

- #Application Performance

Real World Problem Solving with SQL

- Tutorial: Real World Problem Solving with SQL

- Description: Examples of how to use SQL to solve real problems, as discussed in the database@home event. https://asktom.oracle.com/pls/apex/asktom.search?oh=8141

- Tags: match_recognize, sum, analytic functions

- Area: SQL Analytics

- Contributor: Chris Saxon (Oracle)

- Created: Tuesday May 05, 2020

Prerequisite SQL

Predicting stock shortages.

To start, we'll build a prediction engine, estimating when shops will run out of fireworks to sell. This will use data from tables storing the shops, orders received, and daily and hourly sales predictions:

Calculating Running Totals

By adding the OVER clause to SUM, you can calculate running totals. This has three clauses:

- Partition by - split the data set up into separate groups

- Order by - sort the data, defining the order totals are calculated

- Window clause - which rows to include in the total

This returns the cumulative sales for each shop by date:

The clauses in this SUM work as follows:

- partition by sales.shopid calculates the running total for each shop separately

- order by sales.saleshour sorts the rows by hour, so this gives the cumulative sales by date

- rows between unbounded preceding and current row sums the sales for this row and all previous rows
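As a rough, runnable illustration of these three clauses, the sketch below uses Python's built-in sqlite3 module as a stand-in for Oracle Database (window functions need SQLite 3.25 or later). The `sales` table layout, the `qty` column and the sample rows are assumptions made for the example; only `shopid` and `saleshour` come from the text above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table sales (shopid integer, saleshour text, qty integer);
    insert into sales values
      (1, '2020-11-05 09:00', 5),
      (1, '2020-11-05 10:00', 7),
      (1, '2020-11-05 11:00', 3),
      (2, '2020-11-05 09:00', 4),
      (2, '2020-11-05 10:00', 6);
""")

running_totals = conn.execute("""
    select shopid, saleshour, qty,
           sum(qty) over (
             partition by shopid          -- restart the total for each shop
             order by saleshour           -- accumulate in time order
             rows between unbounded preceding and current row
           ) as cumulative_qty
    from sales
    order by shopid, saleshour
""").fetchall()

for row in running_totals:
    print(row)
```

The total restarts at the first row of shop 2 because of the partition by clause.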

Creating Hourly Sales Predictions

The budget figures are daily. To combine these with hourly sales, we need to convert them to hourly figures.

This query does this by cross joining the day and hour budget tables. Then multiplying the daily budget by the hourly percentage to give the expected sales for that hour:
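A minimal sketch of that cross join, again using sqlite3 in place of Oracle. The table names `daily_budget` and `hourly_budget`, their columns, and the choice to store the percentage as a number from 0 to 100 are all assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table daily_budget (shopid integer, budget_date text, budget_qty real);
    create table hourly_budget (budget_hour integer, pct real);
    insert into daily_budget values (1, '2020-11-05', 200), (2, '2020-11-05', 100);
    insert into hourly_budget values (9, 25), (10, 50), (11, 25);
""")

hourly_forecast = conn.execute("""
    select d.shopid, d.budget_date, h.budget_hour,
           d.budget_qty * h.pct / 100 as expected_qty  -- daily figure scaled by the hour's share
    from daily_budget d
    cross join hourly_budget h                         -- every day paired with every hour
    order by d.shopid, h.budget_hour
""").fetchall()

for row in hourly_forecast:
    print(row)
```

Each daily row is repeated once per hour, so the expected quantities for a shop add back up to its daily budget when the percentages total 100.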

Finding When Predicted Sales Exceed Stock Level

We can now combine the hourly actual and predicted sales figures. We want to include actual figures up to the time of the last recorded sale. After this, the query should use the expected figures.

This query returns the real or projected sales figures. Then computes the running total of sales of this combined figure:

It also predicts the remaining stock at each time by subtracting the running total of hourly sales (real or predicted) from the starting stock. Notice that this has this window clause:

This means include all the previous rows and exclude the current row. This is because we want to return the expected stock at the start of the hour. Including the current row returns the expected level at the end of the hour.
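To see the difference between the two windows, here is a small sketch using sqlite3 as a stand-in for Oracle. It assumes the window in question is `rows between unbounded preceding and 1 preceding`, which matches the description above; the `hourly_sales` table and the starting stock of 100 are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table hourly_sales (sales_hour integer, qty integer);
    insert into hourly_sales values (9, 30), (10, 50), (11, 40);
""")

STARTING_STOCK = 100  # assumed opening stock level

stock_levels = conn.execute("""
    select sales_hour, qty,
           -- previous rows only: stock at the start of the hour
           ? - coalesce(sum(qty) over (
                 order by sales_hour
                 rows between unbounded preceding and 1 preceding
               ), 0) as stock_at_start,
           -- previous rows plus this one: stock at the end of the hour
           ? - sum(qty) over (
                 order by sales_hour
                 rows between unbounded preceding and current row
               ) as stock_at_end
    from hourly_sales
    order by sales_hour
""", (STARTING_STOCK, STARTING_STOCK)).fetchall()

for row in stock_levels:
    print(row)
```

The window is empty for the first row, so its sum is null; the coalesce turns that into zero sales before the first hour.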

Predicting Exact Time of Zero Stock

The previous query returned every hour and the expected stock level. To find the time stock is expected to run out, find the last date where the remaining stock is greater than zero:

To estimate the exact time stock will run out, we can take the ratio of remaining stock to expected sales for the hour it's due to run out. The query does this with these functions:

This uses the KEEP clause to return the value for stock and quantity for the maximum HOUR. This is necessary because STOCKNEM & QTYNUM are not in the GROUP BY or in an aggregate function.
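The arithmetic behind that estimate can be sketched in a few lines of Python. This assumes sales are spread evenly across the hour, so the fraction of the hour that passes before stock hits zero is the stock at the start of the hour divided by the sales expected in it. The function and parameter names are hypothetical.

```python
from datetime import datetime, timedelta


def estimated_stockout(hour_start: datetime, stock_at_start: float,
                       expected_qty: float) -> datetime:
    """Interpolate within the hour, assuming sales arrive at a constant rate."""
    if expected_qty <= 0:
        raise ValueError("expected_qty must be positive")
    fraction_of_hour = stock_at_start / expected_qty
    return hour_start + timedelta(hours=fraction_of_hour)


# 20 units left at 11:00 with 40 expected to sell during that hour
stockout = estimated_stockout(datetime(2020, 11, 5, 11, 0),
                              stock_at_start=20, expected_qty=40)
print(stockout)
```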

This technique of calculating the previous running total up to some limit has many other applications. These include:

- Stock picking algorithms

- Calculating SLA breach times for support tickets

We'll look at how you can use this to implement stock picking algorithms next.

Stock Picking Routines

Next we'll look at how to use SQL to find which stock location to get inventory from to fulfil orders. This will use these tables:

The algorithm needs to list all the locations stock pickers need to choose from. There must be enough locations for the sum of the quantities in stock to reach the ordered quantity of that product.

Get the Cumulative Quantity of Stock Picked

The algorithm needs to keep selecting locations until the total quantity picked reaches the ordered quantity. As with the previous problem, this is possible with a running SUM.

This query gets the cumulative selected quantity for the current row and the previous quantity:

To filter this to those locations needed to fulfil the order, we need all the rows where the previous picked quantity is less than the ordered quantity.
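A minimal sketch of that filter, using sqlite3 as a stand-in for Oracle. The `inventory` table, its columns, the oldest-stock-first ordering and the ordered quantity of 30 are assumptions for illustration, not the tutorial's own schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table inventory (location text, product text, qty integer, purchased text);
    insert into inventory values
      ('A-01', 'widget', 10, '2020-01-10'),
      ('B-07', 'widget', 25, '2020-02-15'),
      ('C-03', 'widget', 40, '2020-03-20'),
      ('D-11', 'widget', 15, '2020-04-25');
""")

ORDERED_QTY = 30  # assumed order size

picks = conn.execute("""
    with running as (
      select location, qty,
             -- quantity already covered by the locations visited before this one
             coalesce(sum(qty) over (
               order by purchased
               rows between unbounded preceding and 1 preceding
             ), 0) as prev_picked_qty
      from inventory
      where product = 'widget'
    )
    select location, qty, prev_picked_qty
    from running
    where prev_picked_qty < ?      -- still short of the order before this location
    order by prev_picked_qty
""", (ORDERED_QTY,)).fetchall()

for row in picks:
    print(row)
```

Changing the `order by purchased` inside the window to another column, such as quantity, is what switches between picking strategies.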

Try Different Stock Picking Algorithms

Like the previous problem, the SQL needs to keep adding rows to the result until the running total for the previous row is at least the ordered quantity.

This starts the SQL to find all the needed locations. You can implement different stock picking algorithms by changing the ORDER BY for the running total. Experiment by replacing /* TODO */ with different columns to see what effect this has on the locations chosen:

Row Numbering Methods

Whichever algorithm you decide to use to select stock, it may choose a poor route for the stock picker to walk around the warehouse. This can lead to them walking back down an aisle when it would be better to continue up to the top of the aisle, then walk back down the next one.

One way to do this is to number the aisles needed. Then walk up the odds and back down the evens. This means locations in the same aisle need the same aisle number. We want to assign these numbers!

Oracle Database has three row numbering functions:

- Rank - An Olympic ranking system. Rows with the same sort key have the same rank. After ties there is a gap in the ranks: the numbering resumes from the row's position in the results.

- Dense_Rank - Like RANK, this sets the rank to be the same for rows with the same sort key. But this has no gaps in the sequence.

- Row_Number - This gives unique consecutive values.

This compares the different ranking functions:
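As a rough illustration (not the tutorial's own query), the sketch below runs all three functions side by side in sqlite3, which implements RANK, DENSE_RANK and ROW_NUMBER with the same semantics. The `results` table and its rows are invented for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table results (athlete text, score integer);
    insert into results values ('Ann', 10), ('Bob', 9), ('Cat', 9), ('Dan', 8);
""")

rankings = conn.execute("""
    select athlete, score,
           rank()       over (order by score desc)          as rk,
           dense_rank() over (order by score desc)          as dense_rk,
           row_number() over (order by score desc, athlete) as rn
    from results
    order by score desc, athlete
""").fetchall()

for row in rankings:
    print(row)
```

Bob and Cat tie on score. RANK gives both of them 2 and then jumps to 4 for Dan, DENSE_RANK gives Dan 3, and ROW_NUMBER never repeats a value.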

Change Stock Picking Route

To improve the routing algorithm, we want to give locations row numbers. Locations on the same aisle must have the same rank. We can then alternate the route up and down the aisles by sorting:

- By ascending position for odd aisles

- By descending position for even aisles

To do this locations in the same aisle must have the same rank. And there must be no gaps in the ranks. Thus DENSE_RANK is the correct function to use here. This sorts by warehouse number, then aisle:

To alternate ascending/descending sorts, take this rank modulo two. Return the position when it's one and negate the position when it's zero:

The complete query for this is:
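A minimal sketch of the whole routing idea, using sqlite3 as a stand-in for Oracle. The `picks` table, its columns and the sample locations are assumptions, and this is an illustration of the technique rather than the tutorial's original query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    create table picks (warehouse integer, aisle text, position integer);
    insert into picks values
      (1, 'A', 3), (1, 'A', 12),
      (1, 'C', 5), (1, 'C', 20),
      (1, 'D', 2), (1, 'D', 9);
""")

route = conn.execute("""
    with numbered as (
      select warehouse, aisle, position,
             -- same number for every location in an aisle, with no gaps
             dense_rank() over (order by warehouse, aisle) as aisle_no
      from picks
    )
    select aisle, position
    from numbered
    order by aisle_no,
             -- odd aisles ascending, even aisles descending
             case when aisle_no % 2 = 1 then position else -position end
""").fetchall()

for row in route:
    print(row)
```

No stock is needed from aisle B here, so aisle C becomes aisle number two and is walked downwards. With RANK instead of DENSE_RANK, aisle C would be numbered 3 (odd) and aisle D 5 (odd), so both would be walked in the same direction.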

Finding Consecutive Rows - Tabibitosan

For the third problem we're searching for consecutive dates in this running log:

The goal is to split these rows into groups of consecutive dates. For each group, return the start date and number of days in it.

There's a trick you can use to do this:

- Assign unique, consecutive numbers sorted by date to each row

- Subtract this row number from the date

After applying this method, consecutive dates will have the same value:

You can then summarise these data by grouping by the expression above and returning min, max, and counts to find start, end, and numbers of rows:

This technique is referred to as the Tabibitosan method.
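The same trick can be sketched outside the database. The Python below is only an illustration of the idea: the function name `consecutive_runs` and the sample dates are assumptions, and removing duplicate dates first is a simplification added for the example.

```python
from datetime import date, timedelta
from itertools import groupby


def consecutive_runs(run_dates):
    """Group dates into runs of consecutive days.

    Returns a list of (start_date, end_date, number_of_days) tuples.
    """
    ordered = sorted(set(run_dates))
    # Date minus row number is constant within a run of consecutive days
    keyed = [(d - timedelta(days=rn), d) for rn, d in enumerate(ordered, start=1)]
    runs = []
    for _, members in groupby(keyed, key=lambda pair: pair[0]):
        days = [d for _, d in members]
        runs.append((days[0], days[-1], len(days)))
    return runs


log = [date(2021, 1, 1), date(2021, 1, 2), date(2021, 1, 3),
       date(2021, 1, 6), date(2021, 1, 7), date(2021, 1, 10)]
runs = consecutive_runs(log)
for run in runs:
    print(run)
```

The first three dates all map to 31 December 2020 once their row numbers are subtracted, which is what puts them in the same group.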

Finding Consecutive Rows - Pattern Matching

Added in Oracle Database 12c, the row pattern matching clause, MATCH_RECOGNIZE, offers another way to solve this problem.

To do this, you need to define pattern variables: criteria for rows to meet. Then create a regular expression using these variables.

To find consecutive dates, you need to look for rows where the current date equals the previous date plus one. This pattern variable does this:

To search for a series of consecutive rows, use this pattern:

This matches one instance of INIT followed by any number of CONSECUTIVE.

But what's this INIT variable? It has no definition!

Undefined variables are "always true", so INIT matches any row. This enables the pattern to match the first row in the data set. Without it, CONSECUTIVE will always be false for that row, because its previous RUN_DATE is null.

This query returns the first date and number of rows in each group:

By default MATCH_RECOGNIZE returns one row per group. To make it easier to see what's going on, this query adds the clause:

This returns all the matched rows. In the MEASURES clause it also adds the CLASSIFIER function. This returns the name of the pattern variable this row matches:
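MATCH_RECOGNIZE is not available outside Oracle Database, so the sketch below only emulates in plain Python what an INIT followed by any number of CONSECUTIVE pattern reports for each row. The function name `classify` and the sample dates are assumptions, and the loop imitates the matching behaviour rather than how the database implements it.

```python
from datetime import date, timedelta


def classify(run_dates):
    """Label each date the way an INIT-then-CONSECUTIVE pattern would.

    Returns (match_number, classifier, run_date) tuples.
    """
    labelled = []
    match_number = 0
    previous = None
    for current in sorted(run_dates):
        if previous is not None and current == previous + timedelta(days=1):
            classifier = "CONSECUTIVE"
        else:
            # INIT has no condition, so it accepts whichever row starts a match
            match_number += 1
            classifier = "INIT"
        labelled.append((match_number, classifier, current))
        previous = current
    return labelled


labelled = classify([date(2021, 1, 1), date(2021, 1, 2), date(2021, 1, 4)])
for row in labelled:
    print(row)
```

Every group starts with a row labelled INIT, including groups that contain only one date.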

Counting Number of Child Nodes in a Tree

To finish, we'll build an organization tree using the classic EMP table:

We want to augment this by adding the total number of reports each person has. I.e. count the number of nodes in the tree below this one.

You can do this with the following query:

The subquery calculates the hierarchy for every row in the table. So it queries EMP once for each row in the table. This leads to a huge amount of extra work. You can see this by getting its execution plan:

Note the FULL TABLE SCAN at line three happens 14 times, reading a total of 196 rows. As you add more rows to EMP, this query will scale terribly.

Counting Child Nodes - Pattern Matching

You can overcome the performance issues for the previous query with this algorithm:

- Create the hierarchy returning the rows using depth first search.

- Add a row number to the results showing the order in which rows are returned.

- Walk through the tree in this order.

- For each row, count the number of rows after it which are at a lower depth (the LEVEL is greater).

This uses the fact that when using depth-first search, all the children of a node will be at a lower depth. The next node that is the same depth or higher as the current is not a child.
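The counting step can be sketched in plain Python. This assumes the rows have already been produced in depth-first order and that only each row's LEVEL is needed. The function name and the sample tree are hypothetical.

```python
def count_descendants(levels):
    """levels[i] is the depth of the i-th row in depth-first order (root = 1).

    Returns the number of nodes below each row.
    """
    counts = []
    for i, level in enumerate(levels):
        below = 0
        for later in levels[i + 1:]:
            if later <= level:  # same depth or higher: this subtree has ended
                break
            below += 1
        counts.append(below)
    return counts


# Hypothetical tree: the root has two children; the first child has two of its own
levels = [1, 2, 3, 3, 2]
descendant_counts = count_descendants(levels)
print(descendant_counts)
```

The scan for each row stops at the first later row that is not deeper, which is the same stopping condition the pattern relies on.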

You can implement this in MATCH_RECOGNIZE with these clauses:

Like searching for consecutive rows, this starts with an always-true variable, then looks for zero or more rows which are at a greater depth.

A key difference is this clause:

After matching the pattern for the first row, this instructs the database to repeat the process for the second row in the data set. Then the third, fourth, etc.

This contrasts with the default behaviour for MATCH_RECOGNIZE: after completing a pattern, continue the search from the row after the last matched row. Because all rows are descendants of the root, the default would match every row in the first pass and have nothing left to match, so it would only return the count for KING!

All together this gives:

Notice that now the pattern only reads EMP once, for a total of 14 rows read. This scales significantly better than using a subquery!

Additional Information

- Database on OTN

- SQL and PL/SQL Discussion forums

- Oracle Database

- Download Oracle Database


How to diagnose and troubleshoot database performance problems

Database performance problems can wreak havoc with web site performance and cost your customers lots of money. Diagnosing problems is much easier when you rely on this systematic approach.

- Hilary Cotter

Service provider takeaway: Database service providers should follow an established methodology when troubleshooting database performance problems.

Relational database management systems (RDBMSes), such as SQL Server, Oracle, DB2 and Sybase, are highly scalable and capable of responding to thousands of requests per second. Mission-critical applications are dependent on highly responsive database systems to provide their clients with sub-second performance. Unfortunately, performance problems on a database have the potential to drive your customer's Web users to another site, causing significant financial losses to the company.

It is essential that service providers use a proven methodology to diagnose and troubleshoot performance problems in customers' databases. Following a methodology enables you to approach the diagnosis of problems in an orderly, logical manner, which increases the chances that you'll find the exact cause of the problem quickly and accurately. Failure to use a methodology will result in the service provider attempting to solve symptoms with no clear understanding of what the underlying problem is, and whether the solution offered has solved the problem.

