DoD Peer Reviewed Medical Research Program

Discovery Award

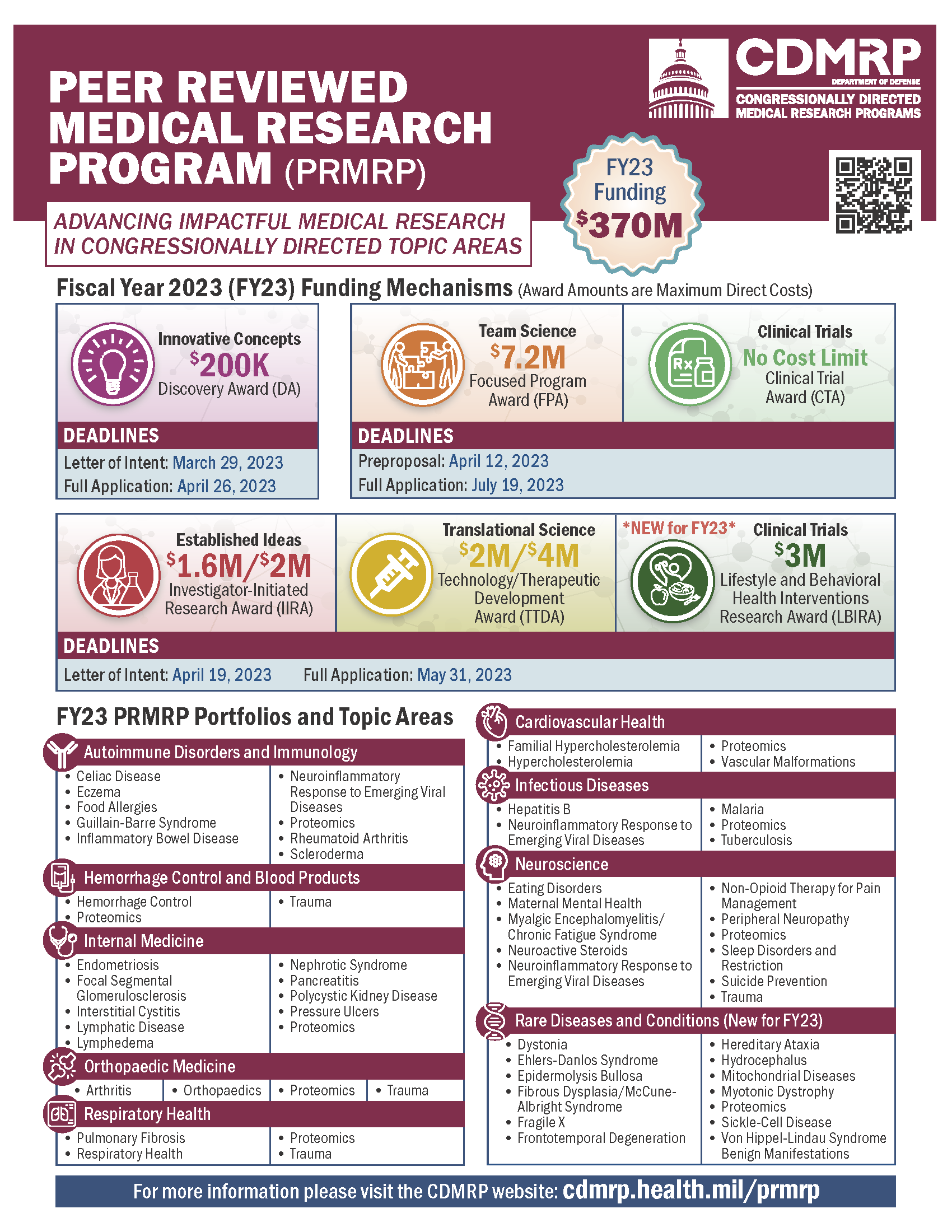

Amount of funding: Direct Costs budgeted for the entire period of performance will not exceed $200,000.

Purpose: The intent of the PRMRP DA is to support innovative, non-incremental, high-risk/potentially high-reward research that will provide new insights, paradigms, technologies, or applications. Studies supported by this award are expected to lay the groundwork for future avenues of scientific investigation. The proposed research project should include a well-formulated, testable hypothesis based on a sound scientific rationale and study design. This award mechanism may not be used to conduct clinical trials; however, non-interventional clinical research studies are allowed.

PI Eligibility: Independent investigators - Faculty with PI eligibility and CE faculty (with an approved CE faculty PI waiver obtained through their RPM in RMG prior to the pre-application/letter of intent) may be the PI or Partnering PI.

Stanford eligibility clarification: Fellows are not eligible. Even though the guidelines indicate that a "postdoctoral fellow or clinical fellow" may serve as PI, the application and guidelines do not require a mentor. This award is therefore considered a research grant, for which the Stanford PIship policy permits only faculty to apply. Because it is not a mentored career development award, Instructors, Clinical Instructors, Academic Staff-Research (i.e., research associates), Postdocs, and Clinical Fellows are not eligible (they may not submit career development PI waivers).

Timeline:

- Pre-Application (Letter of Intent) Submission Deadline: March 29, 2023 via eBRAP. Please include your RPM’s name as business official in the pre-application.

- Institutional representative (RPM/RMG or CGO/OSR) Deadline: April 19, 2023

- Application Submission Deadline: April 26, 2023 via grants.gov

Guidelines: https://cdmrp.army.mil/funding/prmrp

Focused Program Award

Amount of funding: Direct Costs budgeted for the entire period of performance will not exceed $7.2M.

Purpose: The FY23 PRMRP Focused Program Award (FPA) is intended to optimize research and accelerate solutions to a critical question related to one of the congressionally directed FY23 PRMRP Topic Areas and one of the FY23 PRMRP Strategic Goals through a synergistic, multidisciplinary research program.

Lead PI Eligibility: Stanford Full Professor with PI eligibility. The PI is required to devote a minimum of 20% effort to this award.

Project Leader Eligibility: Must be at or above the level of Assistant Professor with PI eligibility, or a CE Assistant Professor (with an approved CE faculty PI waiver obtained through their RPM in RMG prior to the pre-application/letter of intent).

Not eligible: Instructors, Clinical Instructors, Academic Staff-Research (i.e., research associates), and Postdocs are not eligible for this RFP because Stanford does not consider them to hold independent positions.

Timeline:

- REQUIRED Pre-Application (Pre-Proposal) Deadline: April 12, 2023 via eBRAP. Please include your RPM’s name as business official in the pre-application.

- Institutional representative (RPM/RMG or CGO/OSR) Deadline: July 12, 2023

- Application Submission Deadline: July 19, 2023 via grants.gov

Guidelines: https://cdmrp.army.mil/funding/prmrp

Investigator-Initiated Research Award

Amount of funding: Direct Costs budgeted for the entire period of performance will not exceed $1.6M. With the Partnering PI Option, Direct Costs budgeted for the entire period of performance will not exceed $2M.

Purpose: The PRMRP Investigator-Initiated Research Award (IIRA) is intended to support studies that will make an important contribution toward research and/or patient care for a disease or condition related to one of the FY23 PRMRP Topic Areas and one of the FY23 PRMRP Strategic Goals.

PI Eligibility: Independent investigators - Faculty with PI eligibility and CE faculty (with an approved CE faculty PI waiver obtained through their RPM in RMG prior to the pre-application/letter of intent) may be the PI or Partnering PI.

Not eligible: Instructors, Clinical Instructors, Academic Staff-Research (i.e., research associates), and Postdocs are not eligible for this RFP because Stanford does not consider them to hold independent positions.

Timeline:

- Pre-Application (Letter of Intent) Submission Deadline: April 19, 2023 via eBRAP. Please include your RPM’s name as business official in the pre-application.

- Institutional representative (RPM/RMG or CGO/OSR) Deadline: May 23, 2023

- Application Submission Deadline: May 31, 2023 via grants.gov

Guidelines: https://cdmrp.army.mil/funding/prmrp

Technology/Therapeutic Development Award

Amount of funding: Funding Level 1 Direct Costs will not exceed $2M. Funding Level 2 Direct Costs will not exceed $4M.

Purpose: The PRMRP Technology/Therapeutic Development Award (TTDA) is a product-driven award mechanism intended to provide support for the translation of promising preclinical findings into products for clinical applications, including prevention, detection, diagnosis, treatment, or quality of life, for a disease or condition related to one of the FY23 PRMRP Topic Areas and one of the FY23 PRMRP Strategic Goals. Products in development should be responsive to the health care needs of military Service Members, Veterans, and/or beneficiaries. This award mechanism may not be used to conduct clinical trials.

Eligibility: Independent investigators - Faculty with PI eligibility and CE faculty (with an approved CE faculty PI waiver obtained through their RPM in RMG prior to the pre-application/letter of intent) may be the PI.

Not eligible: Instructors, Clinical Instructors, Postdoctoral Fellows, Clinical Fellows, and Academic Staff-Research (i.e., research associates) are not eligible because Stanford does not consider them to hold independent or faculty-level positions.

Timeline:

- Pre-Application (Letter of Intent) Submission Deadline: April 19, 2023 via eBRAP. Please include your RPM’s name as business official in the pre-application.

- Institutional representative (RPM/RMG or CGO/OSR) Deadline: May 23, 2023

- Application Submission Deadline: May 31, 2023 via grants.gov

Guidelines: https://cdmrp.army.mil/funding/prmrp

Clinical Trial Award

Amount of funding: Direct Costs budgeted for the entire period of performance will not exceed $500,000.

Purpose: The FY23 PRMRP Clinical Trial Award (CTA) supports the rapid implementation of clinical trials with the potential to have a significant impact on a disease or condition addressed in one of the congressionally directed FY23 PRMRP Topic Areas and FY23 PRMRP Strategic Goals. Clinical trials may be designed to evaluate promising new products, pharmacologic agents (drugs or biologics), devices, clinical guidance, and/or emerging approaches and technologies. Proposed projects may range from small proof-of-concept trials (e.g., pilot, first in human, phase 0) that demonstrate feasibility or inform the design of more advanced trials, through large-scale trials that determine efficacy in relevant patient populations.

PI Eligibility: Independent investigators - Faculty with PI eligibility and CE faculty (with an approved CE faculty PI waiver obtained through their RPM in RMG prior to the pre-application/letter of intent) may be the PI or Partnering PI.

Not eligible: Instructors, Clinical Instructors, Academic Staff-Research (i.e., research associates), and Postdocs are not eligible for this RFP because Stanford does not consider them to hold independent positions.

Timeline:

- REQUIRED Pre-Application (Pre-Proposal) Deadline: April 12, 2023 via eBRAP. Please include your RPM’s name as business official in the pre-application.

- Institutional representative (RPM/RMG or CGO/OSR) Deadline: July 12, 2023

- Application Submission Deadline: July 19, 2023 via grants.gov

Guidelines: https://cdmrp.army.mil/funding/prmrp

Lifestyle and Behavioral Health Interventions Research Award

Amount of funding: Direct Costs for the entire period of performance will not exceed $3M.

Purpose: The FY23 PRMRP Lifestyle and Behavioral Health Interventions Research Award (LBIRA) supports clinical research and/or clinical trials using a combination of scientific disciplines including behavioral health, psychology, psychometrics, biostatistics and epidemiology, surveillance, and public health. Applications are required to address and provide a solution to one of the congressionally directed FY23 PRMRP Topic Areas and FY23 PRMRP Strategic Goals.

Eligibility: Independent investigators - Faculty with PI eligibility and CE faculty (with an approved CE faculty PI waiver obtained through their RPM in RMG prior to the pre-application/letter of intent) may be the PI.

Not eligible: Instructors, Clinical Instructors, Postdoctoral Fellows, Clinical Fellows, and Academic Staff-Research (i.e., research associates) are not eligible because Stanford does not consider them to hold independent or faculty-level positions.

Timeline:

- Pre-Application (Letter of Intent) Submission Deadline: April 19, 2023 via eBRAP. Please include your RPM’s name as business official in the pre-application.

- Institutional representative (RPM/RMG or CGO/OSR) Deadline: May 23, 2023

- Application Submission Deadline: May 31, 2023 via grants.gov

Guidelines: https://cdmrp.army.mil/funding/prmrp

Additional Information

Please include your institutional official's name (RPM/RMG or CGO/OSR) as business official in the pre-application/LOI.

eBRAP Funding Opportunities and Forms (including the General Application Instructions).

Funding Opportunity: DOD CDMRP Releases FY 2024 Peer Reviewed Medical Research Program Solicitations

Lewis-Burke Associates has provided campus with a report about the Department of Defense (DOD) Congressionally Directed Medical Research Programs (CDMRP) FY24 Peer Reviewed Medical Research Program (PRMRP) solicitations. For FY 2024, Congress has allocated $370 million for PRMRP in over forty topic areas. The PRMRP aims to support medical research projects of clear scientific merit that lead to clear and impactful advances in the health care of service members, veterans, and beneficiaries. Those interested should carefully review the submission requirements for each funding mechanism and the “Strategic Goals” of each FY 2024 PRMRP topic area in each solicitation.

Peer Reviewed Medical Research Program (PRMRP)

The Peer Reviewed Medical Research Program (PRMRP), established in fiscal year 1999 (FY99), has supported research across the full range of science and medicine, with an underlying goal of enhancing the health, care, and well-being of military Service Members, Veterans, retirees, and their family members.

FY2023 funding mechanisms have been announced! They include:

- Clinical Trial Award (CTA)

- Discovery Award (DA)

- Focused Program Award (FPA)

- Investigator-Initiated Research Award (IIRA)

- Lifestyle and Behavioral Health Interventions Research Award (LBIRA)

- Technology/Therapeutic Development Award (TTDA)

Letter of Intent is Due: April 19th, 2023

Full Applications are Due: May 31st, 2023

Learn more about this opportunity at https://cdmrp.health.mil/funding/prmrp

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

National Academies of Sciences, Engineering, and Medicine; Health and Medicine Division; Board on the Health of Select Populations; Committee on the Evaluation of Research Management by DoD Congressionally Directed Medical Research Programs (CDMRP). Evaluation of the Congressionally Directed Medical Research Programs Review Process. Washington (DC): National Academies Press (US); 2016 Dec 19.

Evaluation of the Congressionally Directed Medical Research Programs Review Process.

5 Peer Review

A robust peer review process is critical to the Congressionally Directed Medical Research Programs' (CDMRP's) mission of funding innovative health-related research. Peer review is used throughout the scientific research community, both for determining funding decisions for research applications and for publications on research outcomes. The purpose of peer review is for the proposed research to be assessed for scientific and technical merit by experts in the same field who are free from conflicts of interest (COIs) or bias and, in some instances, by lay people who might be affected by the results of the proposed study. The process should be transparent to the applicant as well as to the research and stakeholder communities in order to ensure that each application receives a competent, thorough, timely, and fair review. The peer review process is the standard by which the most meritorious science is developed, assessed, and distributed.

This chapter describes the process of assigning peer reviewers to panels and applications; the criteria and scoring used by peer reviewers; activities that occur prior to, during, and after the peer review meeting; and the quality assurance procedures used by CDMRP to ensure that the applications are scored and critiqued correctly. Peer review occurs after CDMRP has received full applications in response to the programmatic panel activities described in Chapter 4 (see Figure 5-1). Peer review panels are responsible for conducting both the scientific and technical merit review and an impact assessment of all of the full applications submitted to each CDMRP research program.

Figure 5-1. The CDMRP review cycle. The yellow circle and box show the steps in the cycle that are discussed in this chapter. * As needed.

PRE-MEETING ACTIVITIES

As described in Chapter 3, the selection of peer reviewers begins shortly after the award mechanisms are chosen by the programmatic panel during the vision setting meeting. The peer review support contractor works with the CDMRP program manager to determine the number of peer review panels that will be required and the types of expertise that will be necessary to review the anticipated applications.

Panel and Application Assignments

Depending on the program and the number of award mechanisms and anticipated applications, multiple peer review panels—tailored to the specific expertise required by the research program and award mechanism—may be needed for a single funding opportunity or topic area (for example, if several applications propose to investigate a specific protein group or metabolic pathway). In general, a peer review panel reviews applications for a single award mechanism (or a group of similar types of mechanisms). Because the review criteria differ to some extent by mechanism, having multiple panels allows peer reviewers to focus on the specific review criteria and programmatic intent of an award mechanism (Kaime et al., 2010). For example, in 2014 there were 249 separate review panels (and 3,195 peer reviewers) across 23 programs. Large programs, such as the Peer Reviewed Medical Research Program and the Breast Cancer Research Program, had the most peer review panels (85 and 38, respectively), and small programs, such as the Duchenne Muscular Dystrophy Research Program and the Multiple Sclerosis Research Program, had only one panel each (Salzer, 2016c).

The number of peer review panels and the mechanism(s) or topic(s) they cover are discussed by the integrated program team and inform the CDMRP program manager's task order assumptions regarding the types and number of reviewers to be recruited (Salzer, 2016a). As mentioned in Chapter 3, the peer review contractor begins recruiting potential reviewers when the program announcement is publicly released. The committee notes that in its scanning of program announcements for 2016, there appear to have been about 5–6 months between the release of the program announcement and the peer review meeting.

In general, a single panel reviews a maximum of 60 applications; there is no minimum number of applications a panel may review (CDMRP, 2011). Scientific review officers (SROs) and consumer review administrators assign, respectively, scientist and consumer reviewers to specific panels (Kaime et al., 2010).

Each application is assigned to at least two scientist reviewers (a primary reviewer and a secondary reviewer) and one consumer reviewer, but reviewers are responsible for being familiar with all applications to be discussed and reviewed by their panel. Each scientist reviewer is generally assigned six to eight applications, but this may vary depending on the program and the guidance provided by the program manager.

One to four consumer reviewers serve on each panel, depending on the number of applications and the number of panels for the program. Each consumer reviewer is assigned at most 20 applications, as these reviewers are required to review only selected sections of an application, such as the impact statement and lay abstract. Any consumer who has a scientific background is assigned to a panel that is reviewing applications outside his or her area of expertise to avoid confusion between the “science” and “advocacy” roles of the panel member (CDMRP, 2016f). A specialty reviewer may also be assigned to review and assess specific sections of applications, in addition to the two scientist reviewers (CDMRP, 2011). For example, for a clinical trial application, a biostatistician specialty reviewer would review the statistical analysis plan.

The criteria used to assign specific reviewers to an application include COIs (discussed in Chapter 3) and expertise. Applicants also assign primary and secondary research classification codes to their applications; the codes can be used to assign applications to peer review panels, inform recruitment of peer reviewers, and balance panel workloads. Panel membership is confidential until the end of the review process for that funding year, when the names and affiliations of all peer reviewers for each research program are posted to the CDMRP website (CDMRP, 2016g).

Panel Expertise

Primary peer reviewers are assigned to applications on the basis of their expertise (CDMRP, 2011). Once applications are assigned to a panel, all scientist reviewers indicate their level of expertise for reviewing individual applications using an adjectival rating scale of high, medium, low, or none, based on review of the title and abstract. The scale and description are as follows (Salzer, 2016c):

- High: You are able to review the application with little or no need to make use of background material or the relevant literature. You have likely published in areas closely related to the science/topics presented in the application.

- Medium: You have most of the knowledge to review the application although it would require some review of relevant literature to fill in details or increase familiarity with the system employed. You may employ similar methodologies in your own work, or study similar molecules, processes, and/or topics, but you may need to review the literature for recent advances pertinent to the application.

- Low: You understand the broad concepts but are unfamiliar with the specific system or other details, and reviewing the application would require considerable preparation.

- None: You have only superficial or no familiarity with the concepts and systems described in the application.

The committee finds these definitions of expertise and experience to be very helpful and likely to be quite informative in assigning applications for review. There does not appear to be a similar process at the National Institutes of Health (NIH).

The committee assumes that the SRO uses the reviewers' responses to confirm their expertise and to assign primary, secondary, and specialty reviewers (if applicable). In general, only reviewers who have indicated high or medium expertise for an application are assigned to be primary or secondary reviewers for that application; the primary reviewer for any application must have high expertise (Salzer, 2016d). The peer review contractor also ensures that all reviewers on a panel have a minimum level of expertise.
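The expertise rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not CDMRP's or the contractor's actual procedure: the names `Expertise`, `eligible_roles`, and `pick_reviewers` are hypothetical, the tie-break by reviewer name is arbitrary, and COI screening and workload balancing are deliberately left out.

```python
from enum import IntEnum


class Expertise(IntEnum):
    """Self-reported adjectival rating, ordered so that higher means more expert."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def eligible_roles(rating):
    """Roles a reviewer may hold for one application, per the rule in the text.

    Primary requires HIGH expertise; secondary requires HIGH or MEDIUM.
    """
    roles = []
    if rating == Expertise.HIGH:
        roles.append("primary")
    if rating >= Expertise.MEDIUM:
        roles.append("secondary")
    return roles


def pick_reviewers(ratings):
    """Pick a (primary, secondary) pair from {reviewer_name: Expertise}.

    Returns None when no valid pair exists. Ordering by rating and then by
    name is an assumption made only to keep the sketch deterministic.
    """
    ranked = sorted(ratings, key=lambda name: (-ratings[name], name))
    primaries = [n for n in ranked if "primary" in eligible_roles(ratings[n])]
    if not primaries:
        return None
    primary = primaries[0]
    secondaries = [
        n for n in ranked
        if n != primary and "secondary" in eligible_roles(ratings[n])
    ]
    if not secondaries:
        return None
    return primary, secondaries[0]
```

For example, with one high, one medium, and one low self-rating, the high-rated reviewer becomes primary and the medium-rated reviewer secondary; a panel with no high-rated reviewer for an application yields no valid assignment under this rule.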

Preliminary Critiques and Initial Scores

Timing of the preliminary reviews.

In general, it appears that the time between the deadline for the submission of a full application and the peer review meeting is about 2 months, although for some programs or awards this may extend to about 3 months. CDMRP states that approximately 40 hours of pre-meeting preparation over 4–6 weeks is required for peer review. This time includes registration, training, reviewing assigned applications, and writing critiques and comments for assigned applications. CDMRP states that peer reviewers typically have 3–4 weeks to review their assigned applications, although review time may vary by program, or if additional reviewers are added to the panel at a later time. In response to the committee's solicitation of input, several peer reviewers stated that they would like more time to review and critique their assigned applications.

Preliminary critiques and scores are submitted to the electronic biomedical research application portal (eBRAP), after which the contractor SRO and the CDMRP program manager review them. Applications, preliminary critiques, and preliminary scores are also available electronically to all other panel members (not just assigned reviewers), except those who have a COI with a particular application.

CDMRP reports that peer reviewers then have 4–5 weeks to review all preliminary critiques and scores assigned to their panel before the panel meeting (Salzer, 2016c). This length of time is intended to allow the reviewers to become familiar with all the critiques before the meeting, so that the discussion at the meeting can be focused and the reviewers who were not assigned a specific application will have enough time to be informed about it and contribute to the discussion.

Peer Review Criteria

Each application is evaluated according to the peer review criteria published in the program announcement. Usually, two sets of scores are given during peer review. The first set consists of scores on evaluation criteria such as impact, research strategy and feasibility, and the transition plan; each of these criteria receives numeric scores from the primary and secondary reviewers. Other criteria, such as environment, budget, and application presentation, are also evaluated but do not receive numeric scores. The individual criteria are not given different weights, but they are generally presented in order of decreasing importance (Kaime et al., 2010). The scale uses whole numbers from 1 (deficient) to 10 (outstanding) (see Table 5-1).

Table 5-1. CDMRP Evaluation Criteria Scoring Scale.

The second score given is the overall score, which represents an overall assessment of the application's merit. Overall scores range from 1.0 (outstanding) to 5.0 (deficient) (see Table 5-2). Some award mechanisms use only an overall score, whereas others use only adjectival scores instead of numeric ones (Salzer, 2016b). Reviewers are instructed to base the overall score on the evaluation criterion scores, but they may also consider additional criteria that are not individually scored, such as budget and application presentation (Kaime et al., 2010).

Table 5-2. CDMRP Overall Scoring Scale.

As can be seen from Tables 5-1 and 5-2, the scale used to score evaluation criteria is different from the scale for the overall score. The overall and criterion scores are not combined or otherwise mathematically manipulated to connect them, but the overall score is expected to correspond to the individual criterion scores (for example, if the individual criteria receive scores in the excellent range, the overall score should also be in the excellent range) (MOMRP, 2014). The use of two different scales to score the application is deliberate and intended to discourage averaging evaluation criterion scores into an overall score (Kaime et al., 2010). However, several peer and programmatic reviewers who responded to the committee's solicitation of input noted that the use of the two scales was confusing. The committee finds that because CDMRP funding announcements dictate the importance of each criterion for the overall score, it would be easier for reviewers to appropriately consider each criterion if the same scale were used for both overall and criterion scores.

The committee notes that in 2009 the NIH, which reviews tens of thousands of applications per year, moved to a 9-point, whole-number scale (see Table 5-3), which may be used for both criterion scoring and the overall score (NIH, 2015b). Several other agencies that conduct peer review have adopted NIH's revised scoring system. The new scoring includes additional guidance for the category descriptors. For example, an application that is given a score of 1 has a descriptor of “exceptional,” which corresponds to “exceptionally strong with essentially no weaknesses.” Major, moderate, and minor weaknesses are further defined (NIH, 2015b). The NIH 9-point scoring system is based on the specific psychometric properties presented with that scale. CDMRP's use of such a 9-point scale would better reflect current peer review practices and help experts who review for multiple funding agencies be more comfortable with the CDMRP scoring system.

Table 5-3. NIH Peer Review Scoring Scale.

Reviewer Roles

Scientist reviewers evaluate an entire application; consumer reviewers are required to critique only specific sections—the most important of which is the “impact statement”—but they may read and critique other sections if they choose. The impact statement describes the potential of the proposed research to address the goal of the program and the potential effects it will have on the scientific research community or people who have or are survivors of the health condition. Directions for preparing an impact statement are included in each program announcement, but the specific content varies by award mechanism (CDMRP, 2016f). Specialty scientist reviewers are responsible for critiquing and scoring only designated sections of the applications, such as the research plan or statistical design, but, as with consumer reviewers, they may critique additional sections of the application if they choose.

Each peer reviewer writes a preliminary critique on the strengths and weaknesses of his or her assigned applications and scores each criterion; the data are entered directly into eBRAP. Specialty or ad hoc peer reviewers provide criterion scores for those specific areas that they are charged with reviewing (for example, the statistical plan for a biostatistician specialty reviewer). Each assigned reviewer, including specialty reviewers, also provides an overall score (Salzer, 2016b). The committee is concerned about having reviewers who may have read only a portion of an application provide an overall score for it. However, the committee recognizes that the preliminary peer review scores, including the overall scores, can be revised by a reviewer during the full panel discussion of an application.

THE PEER REVIEW MEETING

There are five possible formats for conducting scientific peer review: onsite, in-person peer review panels; online/virtual peer review panels; teleconference peer review panels; video teleconference peer review panels; and online/electronic individual peer reviews. The peer review format to be used is decided by the program manager and included in the task order assumptions. An onsite peer review meeting lasts approximately 2–3 days, whereas teleconference or videoconference panels generally meet over 1–3 afternoons. In 2014, across 23 CDMRP research programs, there were 124 in-person panels, 23 videoconferences, 41 teleconferences, and 61 online conferences (49 of the online conferences were held by the Peer Reviewed Medical Research Program alone) (Salzer, 2016c). The majority of the programs had only in-person meetings or in-person meetings plus another meeting format, a few programs had only teleconferences, and none of the programs used only videoconferences or online meetings. Among peer reviewers who responded to the committee's solicitation of input, many stated that the in-person panel meetings were far superior to meetings held by teleconference or another meeting format.

When possible and depending on program size, most or all peer review panels for a given program are scheduled to take place in consecutive sessions. At the start of peer review panel meetings, the program manager presents a plenary briefing for all reviewers, which includes an overview of CDMRP, the history and background of the program, the award mechanisms and their intent, the goals and expectations of the review process, and a summary of how the peer review deliverables inform the programmatic review panel. Program managers observe the performance of the panel as a whole as well as the performance of the individual peer reviewers, the panel chair, and the scientific review officers. Program managers also ensure that the panel discussions are consistent with the program announcement. For large programs with multiple peer review panels, the program manager may request that the program's science officer(s) attend to provide additional oversight (Salzer, 2016b).

During the meeting, the chair calls each individual application for discussion. Chairs are responsible for being familiar with all applications assigned to their panel and their preliminary critiques and scores. Each peer review panel must maintain a quorum of at least 80% of all panel members. The discussion of an application begins with the primary reviewer summarizing its goals, strengths, and weaknesses, followed by additional comments from the secondary and specialty peer reviewers, if applicable, and consumer reviewers. For in-person meetings, the chair facilitates further discussion of the application between the assigned reviewers and the other panel members (Kaime et al., 2010).

All panel members assign a final score to each application following deliberations on it (Kaime et al., 2010). Specialty peer reviewers are considered equal voting members of the panel for applications that they reviewed, but their input is limited to their assigned applications and not the panel's entire portfolio (Salzer, 2016b). The overall scores provided by the voting panel members are averaged to produce the overall score for the application, which is provided to the applicant along with the summary statement (MOMRP, 2014).
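The averaging step can be illustrated with a short Python sketch. It assumes a plain arithmetic mean over the voting members' overall scores on the 1.0 to 5.0 scale described earlier; the function name `panel_overall_score` is hypothetical, and the source does not state how (or whether) the result is rounded, so no rounding is applied here.

```python
from statistics import mean


def panel_overall_score(member_scores):
    """Average the overall scores given by voting panel members.

    Scores are assumed to lie on the overall scale of 1.0 (outstanding)
    to 5.0 (deficient). Rounding is intentionally omitted because the
    source does not specify it.
    """
    scores = list(member_scores)
    if not scores:
        raise ValueError("at least one voting member score is required")
    for score in scores:
        if not 1.0 <= score <= 5.0:
            raise ValueError(f"overall score out of range: {score}")
    return mean(scores)
```

Because lower overall scores are better on this scale, a lower panel average indicates a stronger application.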

Reviewers may revise their preliminary critiques, if necessary, and complete the standardized application evaluation forms following the general discussion of the application. The panel chair then gives an oral summary of the discussion, including any reviewer's revisions. Panel members must finalize their scores immediately after the discussion of each application, and critiques may be modified at any time during the meeting and up to 1 hour after the meeting is concluded (Salzer, 2016d). The discussion is incorporated into the final summary statement for the application (discussed under Post-Meeting Activities and Deliverables). All panel reviewers are given an opportunity to comment on the chair's oral summary (Salzer, 2016b).

Programs that handle large volumes of applications may use an expedited review process ( Kaime et al., 2010 ). This process is a form of review triage and was instituted to decrease the cost and increase the efficiency of the peer review processes ( Salzer, 2016c ). In this process, all applications are reviewed in the pre-meeting phase by assigned peer reviewers as previously described. Pre-meeting scores are collated by the peer review contractor, who then sends the reviewers' scores (overall and criteria scores) for all applications to the CDMRP program manager. The program manager then reviews the scores and selects the range of scores (and thus applications) that will be assigned for discussion as well as those that will not be discussed at the plenary peer review meeting. Typically, the scoring threshold for discussion is the top 10% (although this could be as much as 40%) of applications. Applications designated for expedited review are not discussed at the plenary peer review meeting unless the application is championed. An expedited application may be championed by any member of the peer review panel and will immediately be added to the docket for full panel discussion ( Salzer, 2016c ).
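The triage step described above can be sketched as follows: rank applications by pre-meeting score, keep the top share for discussion, and add any championed application back to the docket. This is an illustrative sketch under stated assumptions, not the contractor's procedure. In particular, the function name, the use of a fixed percentage cutoff (the text says the program manager selects a score range), and the `lower_is_better` flag are assumptions; the source does not state the direction of the scoring scale.

```python
def split_docket(pre_scores, top_percent=10, championed=(), lower_is_better=True):
    """Split applications into (discussed, expedited) lists.

    pre_scores: {application_id: pre-meeting overall score} (hypothetical layout).
    top_percent: share of applications sent to full discussion; the text cites
        10% as typical and up to 40%.
    championed: expedited applications that a panel member asked to discuss.
    lower_is_better: assumed score direction; not stated in the source.
    """
    ranked = sorted(pre_scores, key=pre_scores.get, reverse=not lower_is_better)
    # Ceiling division so that a small panel docket still discusses at least
    # one application whenever top_percent > 0.
    cutoff = -(-len(ranked) * top_percent // 100)
    discuss = set(ranked[:cutoff]) | (set(championed) & set(ranked))
    discussed = [app for app in ranked if app in discuss]
    expedited = [app for app in ranked if app not in discuss]
    return discussed, expedited


scores = {"A": 1.2, "B": 2.5, "C": 1.8, "D": 3.1, "E": 2.0,
          "F": 4.0, "G": 2.2, "H": 3.5, "I": 2.9, "J": 1.5}
discussed, expedited = split_docket(scores, top_percent=10, championed={"H"})
print(discussed)  # ['A', 'H']
print(expedited)  # ['J', 'C', 'E', 'G', 'B', 'I', 'D', 'F']
```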

POST-MEETING ACTIVITIES AND DELIVERABLES

The main outcome of a peer review meeting is a summary statement for each application. Summary statements are used to help inform the deliberations of the programmatic panel when making funding recommendations (see Chapter 6 ). After the review process is complete and funding decisions have been made, the summary statements are provided as feedback to the respective principal investigators.

Summary Statements

Following a peer review panel meeting, the peer review contractor generates an overall debriefing report that has a summary of all comments made by the reviewers during the panel discussion. For each application that was reviewed by a panel, the SRO prepares a multi-page summary statement that consists of

- identifying information for the application;

- an overview of the proposed research which may include the specific aims;

- the average overall score from all panel members who participated in the peer review meeting (with standard deviation so that the applicant can see how much variability there was in panel scores);

- the average criterion-based scores (standard deviation may be provided);

- for each criterion section, the assigned reviewer's written critiques of the application's strengths and weaknesses, including specialty reviews;

- any panel discussion notes captured by the SRO during the panel meeting, such as comments from panel members who were not assigned to the application or the chair's oral summary; and

- for unscored criteria, such as budget and application presentation, a summary of the strengths and weaknesses noted by assigned scientist reviewers and any relevant panel discussion notes ( Salzer, 2016b ).

The SRO does not change or alter the reviewers' written critiques except for formatting, spelling, typographical errors, etc. The SRO drafts all summary statements, and the program manager reviews them ( Salzer, 2016b ).

After the meeting, the CDMRP program manager receives a final scoring report as well as administrative and budgetary notes ( Salzer, 2016b ). Program managers review the summary statements and a final scoring report and may request rewrites from reviewers or the contractor if, for example, the critiques and summary do not match the scores or a summary statement is inadequate. If there are any actions that need to be completed prior to programmatic review, such as clarifying eligibility issues, the program manager will act on them before the programmatic review meeting ( Salzer, 2016b ).

Quality Assurance and Control of the Peer Review Process

The CDMRP program manager reviews all deliverables and evaluates contractor performance according to the individual contract's Quality Assurance Surveillance Plan. Contractor performance is reviewed and evaluated on a quarterly basis. Through the evaluations, CDMRP is able to provide feedback on the members of the peer review panels. All comments are sent to the contracting officer's representative, who gathers them and sends them to the contracting officer. Any discrepancies or deficiencies in the Quality Assurance Surveillance Plan are discussed with the contractor, and a resolution is sought ( Salzer, 2016a ).

For each funding cycle, the U.S. Army Medical Research and Materiel Command (USAMRMC) evaluates a random sample of 20 applications across all programs to assess whether reviewer expertise was appropriate for the application and to compare the information with the reviewer's self-assessment of their areas of expertise ( CDMRP, 2015c ). However, this random sample equates to less than one application to be reviewed per program. No additional information was provided on this evaluation process.

In addition to the USAMRMC evaluation, the program manager reviews at least 10% of the draft summary statements from each panel to assess the reviewers' evaluation of applications and to ensure that the summary statements accurately capture the panel's discussions ( Salzer, 2016b , d ). Summary statements are not chosen randomly; factors that may flag a summary for review include large changes in pre- versus post-discussion scores, applications with high scores, applications for which the panel had disagreements, and applications considered to be “high-profile” ( Salzer, 2016d ). Summary statements are reviewed to ensure that there is concordance among the evaluation criteria, overall scores, and the written critiques; that key issues of the panel discussion were captured; and that the critiques are appropriate for each criterion. If an issue that may have affected the peer review outcome is identified, the program manager may request a new peer review for the application. Other assessments that do not directly affect a peer review outcome are given as feedback to the contractor ( Salzer, 2016d ).
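One way to picture the non-random selection described above is to flag draft summary statements and then make sure at least 10% of a panel's drafts are reviewed. The sketch below is purely illustrative: the class, field names, the 1.0-point score-shift threshold, and the rule of padding with unflagged drafts to reach the minimum are all assumptions, and the "high scores" flag mentioned in the text is omitted because no threshold is given.

```python
from dataclasses import dataclass


@dataclass
class DraftSummary:
    # All field names are hypothetical.
    app_id: str
    pre_score: float
    post_score: float
    panel_disagreement: bool = False
    high_profile: bool = False


def pick_for_qa(summaries, min_percent=10, score_shift=1.0):
    """Return application ids whose draft summaries should be reviewed."""
    def flag_count(s):
        return sum([
            abs(s.post_score - s.pre_score) >= score_shift,  # large pre/post change
            s.panel_disagreement,
            s.high_profile,
        ])

    # Stable sort: most-flagged first, original order preserved among ties.
    ranked = sorted(summaries, key=flag_count, reverse=True)
    minimum = -(-len(ranked) * min_percent // 100)  # ceiling of min_percent
    flagged = [s for s in ranked if flag_count(s) > 0]
    # Review every flagged draft; pad with unflagged drafts if below the minimum.
    chosen = flagged if len(flagged) >= minimum else ranked[:minimum]
    return [s.app_id for s in chosen]


drafts = [DraftSummary(f"APP-{i}", 2.0, 2.0) for i in range(1, 11)]
drafts[3].panel_disagreement = True   # APP-4
drafts[6].post_score = 3.5            # APP-7: score moved by 1.5
print(pick_for_qa(drafts))
```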

A mechanism for the quality assurance of peer reviewers is the post-meeting evaluation by the CDMRP program manager with support from the contractor's scientific review manager and SRO. The goal of this evaluation is to identify reviewers and chairs who should be asked to serve again, should their expertise be needed. Panel members are also evaluated to identify those who might be good chairs in future review cycles. There is no standardized form or criteria for peer reviewer evaluations ( Salzer, 2016c ), and no examples of program-specific evaluations were provided. The performance assessments of individual peer reviewers consider expertise, the ability to communicate ideas and rationale, group interactions, the ability to present and debate an opposing view in a professional manner, strong critique writing skills, and adherence to policies on nondisclosure and confidentiality ( Salzer, 2016c ). As part of the quality assurance check of summary statements, the overall quality of each reviewer's product is evaluated to be sure that there is a match between scores and written critiques, that there is solid reasoning, and that reviewers have demonstrated an understanding of how to judge each criterion ( Salzer, 2016d ). It is unclear to the committee whether the evaluation also includes any feedback from the peer reviewer, such as whether the panel had the appropriate expertise. Because program needs and award mechanisms may change from year to year, there is no guarantee that a particular expertise will be required each year, so even reviewers or chairs who are exemplary may not be invited to participate in subsequent panels if their expertise is not needed ( Salzer, 2016b ).

Post-Peer Review Survey

Following the peer review meetings, scientist and consumer reviewers complete an online survey and evaluation form to provide feedback on the experience, the process, and the areas that could use improvement ( Salzer, 2016b ). CDMRP informed the committee that the survey includes reviewer demographics; satisfaction with the process, including whether consumers are engaged; an evaluation of pre-meeting support (including logistics, webinars, and review guidance) and the technological interfaces used for review; and whether reviewers have recently submitted an application to another CDMRP research program or to another award mechanism in the same research program. Scores and comments are compiled and used by the peer review contractor and CDMRP program managers to assess the peer review process and to evaluate the program announcement for future modifications—for example, to clarify overall intent of the award, focus areas, or peer review criteria. Other aspects may be discussed at the program's subsequent vision setting meeting ( Salzer, 2016a ).

CDMRP stated that program managers have an opportunity to add program-specific or award mechanism–specific questions as needed to the post-review survey. For example, Box 5-1 shows the questions that were added to the post-peer review survey for the Amyotrophic Lateral Sclerosis Research Program at CDMRP's request in 2014 and 2015.

Questions Added by CDMRP to the Post-Peer Review Survey for the Amyotrophic Lateral Sclerosis Research Program.

The committee requested a copy of the post-peer review survey as well as the aggregated responses from a sample program. However, neither the survey nor the aggregate sample results were provided because the post-peer review survey is owned by and considered a deliverable by the peer review support contractor.

The consumer reviewers meet separately as a group at the end of the meeting to debrief on their experience and provide feedback to CDMRP through the consumer review administrator on how the experience could be improved ( CDMRP, 2016f ). Questionnaires are used to evaluate the mentor program and other consumer aspects of the CDMRP program. The results of the questionnaires were not available to the committee, but individual testimony from consumers who attended the committee's open sessions as well as consumers and scientists who responded to the committee's solicitation of input reported that consumers' input to the peer review process was helpful.

National Academies of Sciences, Engineering, and Medicine; Health and Medicine Division; Board on the Health of Select Populations; Committee on the Evaluation of Research Management by DoD Congressionally Directed Medical Research Programs (CDMRP). Evaluation of the Congressionally Directed Medical Research Programs Review Process. Washington (DC): National Academies Press (US); 2016 Dec 19. 5, Peer Review.


Senate Appropriations Committee Approves FY2024 Funding for CDMRP

by crdassociates

July 28, 2023

Yesterday, by an overwhelming bipartisan vote of 27-1, the Senate Committee on Appropriations approved its version of the fiscal year 2024 Defense Appropriations Act. This legislation provides funding for the Congressionally Directed Medical Research Programs (CDMRP) consistent with levels provided in previous years by the Committee. Specifically, the bill provides funding for a number of cancer programs, including breast cancer ($130 million), prostate cancer ($75 million), ovarian cancer ($15 million), and rare cancers ($17.5 million). While some of the cancer programs were proposed for cuts below the fiscal year 2023 levels, they will likely be restored in conference to their previous levels as proposed in the House version of the Defense Appropriations Act.

The Senate version of the bill also provides $370 million for the Peer Reviewed Medical Research Program, the same as appropriated in FY23, for 47 eligible conditions. The bill also holds the Peer Reviewed Cancer Research Program flat at $130 million.

The Senate bill will likely come to the Senate floor in December. Meanwhile, the House bill’s floor fate has yet to be determined. However, in past years, Congress has found a way to enact a Defense Appropriations Act, and typically provides the higher levels of funding for programs where there are discrepancies between the House and Senate versions.

A summary of the bill is available HERE .

Bill text, as amended is available HERE .

The bill report, as amended, is available HERE .

Adopted amendments are available HERE .


LATEST NEWS

June 26, 2023

House Appropriations Committee approves FY24 Defense Appropriations Act

On June 22, the House Committee on Appropriations approved its version of the fiscal year 2024 Defense Appropriations Act. Approved 34 to 24 along party lines, the bill would provide $826.45 billion for defense spending in the fiscal year starting October 1, staying within spending caps negotiated in the debt ceiling agreement.

The House Committee mark largely includes funding for the Congressionally Directed Medical Research Programs (CDMRPs) at existing fiscal year 2023 levels. The bill provides $10 million to create a new arthritis research program. Arthritis is currently an eligible condition in the Peer Reviewed Medical Research Program (PRMRP).

The House version of the bill will likely be brought to the House floor for consideration in July. The House may choose to first consider the fiscal year 2024 National Defense Authorization Act when it reconvenes on July 11.

In a related development, on June 22, the Senate Committee on Appropriations approved allocations for its versions of the fiscal year 2024 appropriations bills, including $823.3 billion for defense spending.

_______________________

November 22, 2022

The Defense Health Research Consortium and dozens of its affiliated members today sent a letter to House and Senate leadership, calling on them to “work toward the enactment of the fiscal year 2023 Defense Appropriations Act, to ensure full funding levels for the Defense Health Research Programs, including the Congressionally Directed Medical Research Programs (CDMRP).”

November 16, 2022

Rep. Ken Calvert has won his re-election race and will serve as chair of HAC-D

End-of-Year Outlook: What’s Left on the Congressional Agenda

Congress returns to Washington next week with a full agenda before adjourning for the year. Here is a look at what issues they may consider:

FY 2023 Appropriations

One benefit of the close elections is that the path to finishing fiscal year (FY) 2023 appropriations may have gotten easier, as Republicans will not have the leverage to punt the spending package into the new Congress. The current CR expires on December 16th, and Democrats in the House and Senate intend to negotiate and enact an omnibus spending package by then, or by the end of the calendar year. An omnibus would likely include supplemental Ukraine funding, disaster relief, mental health authorizations, and other priorities.

The $31.4 trillion debt limit will need to be raised before the end of 2023. Influential Republicans have described the debt limit as a tool they will use to extract major spending cuts, despite the risk of crashing the economy. Therefore, Democrats and moderate Republicans are discussing the possibility of including a debt limit increase, or abolishing the debt limit, during the lame duck. President Biden opposes the abolishment of the debt limit, claiming it would be “irresponsible.” It is unclear whether there will be enough votes to attach this proposal to the omnibus. However, it would protect the economy under President Biden while solving the issue for the next Congress by taking it out of their hands.

Congress Passes Another Continuing Resolution to Extend Fiscal Deadline Through December 18

December 11, 2020

The Senate passed a one-week continuing resolution (CR) late Friday afternoon via voice vote, sending it to President Trump for his signature just hours before the midnight deadline. The President signed the bill Friday evening to keep the government open for another week while lawmakers work to reach an agreement on a spending package before the new December 18 deadline.

Lawmakers and staff worked over the weekend to finalize and file an omnibus package by COB today, but sources tell us lawmakers are still uncertain whether it is possible. Rumors are circulating that House Appropriations Committee staff have drafted a three-month CR that they could rely on if a final spending package isn’t ready by early this week. However, appropriators in both chambers are outwardly optimistic, including Senate Majority Leader Mitch McConnell (R-KY), who told reporters he is hopeful that progress on these items will produce a final bill this week.

Senate Passes FY 21 NDAA Conference Report

The Senate this afternoon passed H.R. 6395, the $740.5 billion FY 21 NDAA conference report, by a vote of 84 to 13.

President Trump had threatened to veto the bill because it excluded language repealing a legal shield for tech companies and included bill language that calls for renaming military bases named after Confederate soldiers. The House passed the bill earlier this week by a strong vote of 335-78.

Congress Passes Continuing Resolution, Extending Fiscal Deadline

September 30, 2020

Today, Congress passed the continuing resolution (CR) to extend the fiscal deadline through December 11. According to a person familiar with the planning, President Trump will sign the CR on Thursday, but there won’t be a lapse in appropriations because of his intent to sign the measure, which the Senate cleared Wednesday on an 84-10 vote, several days after the House voted 359 to 57 to approve the bipartisan bill.

House Democrats Unveil a Short-term Spending Bill

September 22, 2020

House Appropriations Committee Chair Nita Lowey (D-NY) introduced a short-term CR to extend Fiscal Year (FY) 2020 funding beyond the September 30 fiscal deadline until December 11.

The Democrats reportedly introduced the bill on their own without support of the White House or House or Senate Republicans.

The Latest on the CR Negotiations

September 15, 2020

We are hearing the House plans to file a continuing resolution on Friday and take it up on the floor next week. How long the CR will last is still unknown, but it seems that Speaker Pelosi is in favor of February or March. Senate Majority Leader McConnell and Treasury Secretary Mnuchin unsurprisingly prefer that the CR expire in December.

House Approves Fiscal Year 2021 Defense Appropriations Act

July 31, 2020

The House has approved the Fiscal Year 2021 Defense Appropriations Act, as part of a larger minibus package. There were no amendments that would have adversely impacted the CDMRPs. Now on to the Senate, which may not act on this until after the election.

The House has sent its members home for August but will call them back with 24-hour notice if there is a deal on the next COVID-19 relief package.



Pre-Med 101: What is the MSAR?


The MSAR is pure pre-med gold, not to be confused with MRSA, which is bad. MSAR is an acronym for Medical School Admissions Requirements. It is published by the AAMC and updated each year. There is a fee to access the online resource. One of the benefits of the AAMC Fee Assistance Program is a complimentary two-year subscription.

Is the MSAR worth it? Here are five highlights of the MSAR that our applicants have found useful:

There is a filter to easily identify medical schools that accept applications from international and Canadian applicants. Thank goodness!

In addition to reporting up-to-date median GPAs and MCAT scores for each school, the MSAR shows the 10th to 90th percentiles (outer band) and the 25th to 75th percentiles (inner band). So helpful! Even better, you can filter this data for in- and out-of-state accepted applicants and all matriculants. Oooh.

Speaking of in- and out-of-state applicants…for each school you can see how many in- and out-of-state applicants and international applicants there were and how many within each group were interviewed. Pure gold.

Medical schools seek students that are a good “mission fit” for their class. The MSAR shares the mission statement of each school. One school may seek to “transform” medicine while another seeks to “enhance delivery;” one might talk a great deal about “worldwide” health while another talks more about “community-based” care. So easy to locate these and compare.

“Average graduate indebtedness.” Knowing the average graduate indebtedness at a school doesn’t tell you everything you need to know about financial aid at a school or what your financial obligation might be, but…it’s interesting and good to know what these numbers look like. The “Tuition, Aid & Debt” section is a terrific place to start thinking about financial considerations and how financial aid might differ from your undergraduate experience (along with the AAMC Paying for Med School resource).

- Open access

- Published: 01 June 2024

Do the teaching, practice and assessment of clinical communication skills align?

- Sari Puspa Dewi ORCID: orcid.org/0000-0001-5353-9526 1 , 2 , 3 ,

- Amanda Wilson ORCID: orcid.org/0000-0001-5681-9611 4 ,

- Robbert Duvivier ORCID: orcid.org/0000-0001-8282-1715 2 , 5 , 6 ,

- Brian Kelly ORCID: orcid.org/0000-0003-4460-1134 2 &

- Conor Gilligan ORCID: orcid.org/0000-0001-5493-4309 2 , 7

BMC Medical Education volume 24, Article number: 609 (2024)

Evidence indicates that communication skills learnt in the classroom are often not readily transferable to the assessment methods applied or to the clinical environment. An observational study was conducted to objectively evaluate students’ communication skills in different learning environments. The study sought to investigate the extent to which the communication skills demonstrated by students in classroom, clinical, and assessment settings align.

A mixed methods study was conducted to observe and evaluate students during the fourth year of a five-year medical program. Participants were videorecorded during structured classroom ‘interactional skills’ sessions, as well as clinical encounters with real patients and an OSCE station calling upon communication skills. The Calgary Cambridge Observational Guides were used to evaluate students in the different settings.

This study observed 28 students and findings revealed that while in the classroom students were able to practise a broad range of communication skills, in contrast in the clinical environment, information-gathering and relationship-building with patients became the focus of their encounters with patients. In the OSCEs, limited time and high-pressure scenarios caused the students to rush to complete the task which focussed solely on information-gathering and/or explanation, diminishing opportunity for rapport-building with the patient.

These findings indicate a poor alignment that can develop between the skills practiced across learning environments. Further research is needed to investigate the development and application of students’ skills over the long term to understand supports for and barriers to effective teaching and learning of communication skills in different learning environments.


Doctors’ communication skills are among the most essential elements of effective patient care [ 1 , 2 ]. Studies show a clear link between the quality of explanations provided to patients and their health outcomes, including reduced pain, enhanced quality of life, improved emotional health, symptom alleviation, and better adherence to treatment plans [ 1 , 3 , 4 ]. Recognizing its significance, medical councils and accreditation bodies worldwide prioritize effective communication as a core competency for healthcare practitioners [ 5 , 6 , 7 ]. Experts agree that communication skills can be effectively taught and learnt [ 8 , 9 , 10 , 11 , 12 , 13 ]. Patients treated by doctors who have undergone communication skills training exhibit 1.62 times higher treatment adherence [ 4 ]. Furthermore, doctors trained in communication have better patient interactive processes and outcomes (information gathered, signs and symptoms relieved, and patient satisfaction) compared to those without such training [ 14 ].

Diverse medical consultation models have emerged, drawing from a spectrum of frameworks that prioritize tasks, processes, outcomes, clinical competencies, the doctor-patient relationship, patients’ perspective of illness, or a combination of these elements [ 15 ]. These models serve as frameworks conducive to structuring the teaching and learning of communication skills, delineating both content and pedagogical approaches. Numerous research endeavours have assessed these models’ applicability in clinical and educational settings [ 16 , 17 ]. Medical schools can leverage these models to articulate learning objectives and tailor teaching strategies within their curricula accordingly. Furthermore, the models can serve as a framework for evaluating communication skills and the effectiveness of training interventions. The adoption of such models across these diverse educational contexts, however, appears to be inconsistent.

Communication skills teaching and learning typically commences in classroom or simulation settings before students transition to clinical practice. Classroom sessions involve simulation-based role-play exercises with peers and simulated patients [ 18 , 19 ], while clinical environments offer opportunities for real patient interactions in various healthcare settings [ 20 ]. This structured learning process aims to develop students’ ability to conduct effective, patient-centered medical consultations across diverse clinical scenarios and handle challenging situations [ 20 , 21 ]. Students’ ability to communicate with patients is commonly assessed using an Objective Structured Clinical Examination (OSCE) [ 22 ], in which students are observed during an interaction with a simulated patient in a set time and evaluated using a standardised rating form.

Constructive alignment, a crucial concept in education, refers to “aligning teaching methods, as well as assessment, to the intended learning outcomes” [ 23 ]. In medical education, this alignment ensures that students achieve desired learning outcomes related to communication skills necessary for delivering patient-centered care in diverse contexts [ 24 ]. Communication skills include the process of exchanging messages and demonstrating empathic behaviour while interacting with patients and colleagues to deliver patient-centred care in a range of contexts [ 25 ]. Learning clinical communication skills is complex and nuanced but should be subjected to the scrutiny and planning associated with constructive alignment along with other curricula elements. Both learning and applying clinical communication skills often require students to be creative and flexible in applying their skills to different contexts and patient conditions [ 19 ], and to be committed to ongoing development and improvement of their communication in practice [ 26 , 27 ].

The achievement of such constructive alignment, however, remains an elusive goal in many medical schools, with challenges in aligning the communication skills learnt, modelled, and applied in different learning environments and assessment contexts [ 28 , 29 , 30 , 31 , 32 ]. Evidence indicates that the skills, suggested structures, and processes learnt in the classroom are not always transferred to the clinical environment [ 20 , 33 , 34 ]. Differences in learning processes exist between settings, particularly in the transition from classroom to clinical environment, and are associated with heavy workloads, different teaching and assessment methods, students’ uncertainty about their role, and adaptation to a more self-directed learning style [ 35 ].

Although structured approaches to medical consultations, such as the Calgary-Cambridge Observational Guides (CCOG) [ 19 ], are often taught by classroom educators, teaching, feedback and modelling in the clinical environment often do not align with them [ 20 , 36 ]. Further, these structures are not always reflected in the rating schemes used to assess OSCE performance [ 37 ], or in the structure of OSCE stations in which communication is not the only skill being assessed. OSCE stations differ in purpose and focus, from those designed to assess communication as it is integrated within broader clinical tasks to those which focus more specifically on communication [ 38 ], but time-limited OSCE stations rarely reflect the true entirety of a complex clinical task [ 39 ]. Recent reviews indicate that OSCEs remain widely used and generally apply good assessment practices, such as blueprinting to curricula and the use of valid and reliable instruments [ 38 , 39 ], but their ability to reflect authentic clinical tasks is less clear.

An observational study was conducted to objectively evaluate students’ communication skills in different learning environments. The study aimed to explore the extent to which the communication skills demonstrated by students in classroom, clinical, and assessment settings align.

Study design

A concurrent triangulation mixed methods study was conducted to observe and evaluate students during the fourth year of a five-year medical program. Concurrent triangulation designs leverage both qualitative and quantitative data collection to enhance the accuracy of defining relationships between variables of interest [ 40 ]. Video-recordings were employed during structured classroom ‘interactional skills’ sessions (ISS) or workshops, clinical encounters with patients and an OSCE station requiring communication skills. The use of video recording aimed to ensure the objectivity of data collection and eliminate researcher-participant interaction biases [ 41 ]. As communication skills comprise verbal and non-verbal behaviour, video-recording is considered the most suitable method to capture this behaviour alongside its contextual setting [ 42 ]. Additionally, field notes were taken during each observation to provide further context during data analysis. This study was approved by both the University and Health District Human Research Ethics Committees (H-2018-0152 and 2018/PID00638).

Study sites and participants

This study was undertaken in a five-year undergraduate medical program which includes a structured communication skills curriculum grounded in the principles delineated in the Calgary-Cambridge guide to the medical interview. The curriculum is structured to introduce students to communication micro-skills in the context of classroom-based simulation early in the program, with ongoing opportunities to practise and master these throughout the program during both classroom sessions and application in clinical practice. Interactional skills workshops (classroom) occur throughout the program to align with other curricular elements and clinical rotations. Beginning in the third year, students engage in regular interaction with real patients across various clinical contexts, complemented by continued participation in scheduled interactional skills workshops within the classroom environment. For example, during the Women’s, Adolescent’s, and Children’s Health (WACH) rotation, students attend four structured classroom workshops tailored to the communication skills pertinent to specific clinical scenarios: 1) Addressing sensitive issues with adolescents, 2) Partnership with parents, 3) Prenatal screening, and 4) Cervical screening discussions with Aboriginal and Torres Strait Islander women. Evaluation of communication skills is conducted using OSCEs at pivotal points throughout the program.

For the purpose of this study, all fourth-year medical students were invited to participate during their 12-week WACH rotation. This rotation spans clinical placements across five clinical schools in the medical school footprint. The WACH rotation uses a blended learning approach that combines didactic and clinical components. Students are afforded opportunities to apply communication skills acquired in classroom settings to clinical settings and are assessed in a multi-station OSCE at the end of the rotation. Participation invitations were extended to all students actively enrolled in the course during the designated study period.

Study procedure

Students received an email invitation from the school administration at the beginning of the rotation, and the study was also briefly described during a lecture prior to clinical placement. Students who consented were observed during an interactive workshop session involving role-play with simulated patients, one real patient encounter, and one end-of-semester OSCE station related to communication skills. As part of this rotation, students attended four ISS workshops focusing on communication skills required in specific situations. They were also expected to keep a record of experience and achievement towards their core clinical competencies, including history-taking and patient communication tasks. Skills were assessed in a multi-station OSCE at the end of semester. Participating students received an AU$20 gift voucher in appreciation of their time.

Context of the observation

In-class activities were directly observed and video-recorded, with equipment set up to record role-play interactions between consenting students and a simulated patient. Sessions included eight to twelve students and began with discussion of the topic before students were invited to practise skills with simulated patients.

The learning process typically commenced with an introductory overview of the topic, followed by a discussion of students’ clinical rotation experiences. Subsequently, the session advanced to simulated scenarios, wherein various students engaged in role-playing activities. The classroom facilitator initiated each role-play by delineating the scenario and ensuring that students were adequately briefed on their roles and the context before inviting volunteers to interact with the simulated patient. The length of time each student spent in the ‘hot-seat’ engaged in a role-play varied depending on the nature of the session, the facilitator style, and the section of the scenario each student was role-playing.

Clinical educators or health behaviour scientists facilitated the workshops, guided by facilitator instructions which encouraged application of agenda-led, outcome-based analysis of the role-play experiences [ 19 ]. As part of the learning process, some students started the role-play at a mid-point of the consultation, picking up from where previous students paused. They continued the conversation whenever previous students left the role. Therefore, not all micro-skills in the CCOG could be observed in every student’s role-play.

The clinical observations were scheduled for times and locations convenient for the participants, either with a clinical supervisor during unstructured clinical time or during self-study, usually in Internal Medicine, Paediatrics, or Obstetrics and Gynaecology wards. In this setting, the students aimed to independently take a complete medical history from a patient. Stable and cooperative patients were identified by attending physicians or nurses, who sought initial consent for students to approach them. When approaching patients, students also sought permission to have the consultation recorded for the purpose of the study. The researcher was available to explain the study to the patients if needed. After each real patient encounter was observed, a structured debriefing was conducted and recorded for use in the analysis.

One station in which communication was directly assessed (included as one or more items in the marking schema) was observed during an end-of-semester OSCE. Each student had a maximum of eight minutes to respond to a clinical task, after two minutes of reading and preparation time. Students interacted with a simulated patient and examiner based on the task given. In this study, students were observed in three end-of-semester OSCEs with three different cases. All cases related to the women’s health clinical rotation. The first case required the student to discuss a pregnancy test result with a female patient. The second asked the student to discuss contraceptive options with a teenage girl. The third required the student to discuss urinary incontinence due to uterine prolapse with a female patient. Each simulated patient was trained to present with specific symptoms in response to the students’ questioning. The examiners observed the students and rated their performance based on pre-determined marking criteria. The OSCEs were video recorded without the researcher present.

Outcome measures/instruments

Students’ communication skills in each context were independently observed and rated by two observers using the CCOG [ 19 ]. This evaluation tool has good validity and reliability for evaluating communication skills across a range of settings [ 17 , 19 , 43 ], with moderate intraclass correlation coefficients for each item, ranging from 0.05 to 0.57 [ 43 ]. The CCOG evaluates six essential communication skills tasks (initiating the session, gathering information, providing structure, building relationships, explanation and planning, and closing the session) as well as overall performance in interpersonal communication [ 19 ]. Each task consists of two to four micro-skills. Not all tasks could be applied to each observation and setting, depending on the presenting complaint, the purpose of the encounter, and the patient context [ 19 ]. Each student’s performance of micro-skills was evaluated using a 3-point Likert scale: “0” (did not perform the skill), “1” (skill was partially performed or not performed well), “2” (skill performed well), and “NA” (not applicable). Overall performance was evaluated using a 9-point Likert scale (1–3 = unsatisfactory, 4–6 = satisfactory, and 7–9 = superior) [ 44 ].
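The rating scheme described above can be illustrated with a short Python sketch. It is not the authors' scoring procedure: the function names are hypothetical, and averaging the applicable micro-skill ratings within a task while skipping "NA" items is an assumption made only for illustration.

```python
from statistics import mean
from typing import Optional, Sequence

# "NA" (not applicable) is represented here as None; this encoding is an assumption.
NA = None


def task_mean(ratings: Sequence[Optional[int]]) -> Optional[float]:
    """Average the 0/1/2 micro-skill ratings of one task, skipping NA items."""
    applicable = [r for r in ratings if r is not None]
    for r in applicable:
        if r not in (0, 1, 2):
            raise ValueError(f"micro-skill rating must be 0, 1, 2 or NA, got {r!r}")
    # A task with no applicable micro-skills has no score.
    return float(mean(applicable)) if applicable else None


def overall_band(score: int) -> str:
    """Map an overall 9-point rating to its band (1-3, 4-6, 7-9)."""
    if not 1 <= score <= 9:
        raise ValueError("overall rating must be between 1 and 9")
    if score <= 3:
        return "unsatisfactory"
    if score <= 6:
        return "satisfactory"
    return "superior"


# Hypothetical ratings for one student on one task with four micro-skills.
print(task_mean([2, 1, NA, 2]))
print(overall_band(4))
```

Representing "NA" separately from "0" matters in this sketch: a micro-skill that could not be observed, for example because a student joined a role-play mid-consultation, is excluded rather than counted as not performed.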

During observations, the researcher took field notes which included the context, time and setting of the observation, number and type of attendees (students, facilitator, simulated or real patient), how the sessions occurred, interactions among attendees, and critical reflections of the researcher.

Analysis method

This study implemented a combination of descriptive quantitative and qualitative approaches. This method uses qualitative data to support and enable a deeper understanding and interpretation of the quantitative data. A concurrent triangulation method was used to analyse data collected from observations. For quantitative data, three researchers independently rated a sample of the recordings and reached agreement on ratings before scoring was completed by the lead author. This process ensured that the ratings were representative of the meaning of the task and confirmed that the rating of the data was consistent. The researchers discussed the scores to check for consistency and inter-rater reliability, and Cohen’s kappa was calculated [ 45 ] as 0.88 (SE = 0.12; CI 95% = 0.65–1.00). SPSS Statistics for Windows (IBM SPSS Statistics for Windows, Version 26.0. Armonk, NY, USA) was used to calculate descriptive statistics for demographic variables and scoring. Analysis of variance was conducted to analyse the mean difference between each setting.
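As an illustration of the agreement statistic reported above, the following Python sketch computes Cohen's kappa for two raters and a normal-approximation (Wald) interval from a kappa and its standard error. The ratings are hypothetical, the function names are illustrative, and the choice of a Wald interval truncated at 1 is an assumption; the study does not state how its interval was derived, and its analysis was run in SPSS.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa: chance-corrected agreement between two raters."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Proportion of items on which the raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance from each rater's marginal frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


def wald_interval(estimate: float, se: float, z: float = 1.96,
                  lower: float = -1.0, upper: float = 1.0) -> Tuple[float, float]:
    """Normal-approximation interval, truncated to the valid range of kappa."""
    return max(lower, estimate - z * se), min(upper, estimate + z * se)


# Hypothetical micro-skill ratings (0, 1, 2) from two raters on six items.
rater_a = [2, 2, 1, 0, 2, 1]
rater_b = [2, 2, 1, 0, 1, 1]
print(cohens_kappa(rater_a, rater_b))

# Interval implied by the rounded values reported in the text.
print(wald_interval(0.88, 0.12))
```

With the rounded values 0.88 and 0.12, this approximation gives a lower bound of about 0.645 and an upper bound truncated at 1.00, which is close to the reported 0.65–1.00. The small difference would be consistent with rounding of the published kappa and standard error.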

Field notes of observation and video-observation were used to support the description of the findings from quantitative data. Criteria from the CCOG were used to identify themes and provide additional explanatory detail capturing the meaning of the data. An iterative process was conducted, with frequent discussions among the researchers to ensure agreement and consistency in the analysis. A reflection on how these data might influence the research questions and findings, as well as the theoretical interest of the study, accompanied this process.
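The comparison of mean scores between settings named in the analysis method can be sketched as a one-way analysis of variance. The Python example below is a minimal sketch under stated assumptions: the scores are hypothetical, the function name is illustrative, and treating the three settings as independent groups is a simplification, since the text does not specify the ANOVA model and some students were observed in more than one setting.

```python
from statistics import mean
from typing import Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return the F statistic and its (between, within) degrees of freedom."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    if df_within <= 0 or ss_within == 0:
        raise ValueError("F is undefined without within-group variation")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical overall (9-point) scores in the three settings.
classroom = [4, 5, 4, 3]
clinical = [5, 4, 4, 5]
osce = [4, 4, 5, 4]
f_stat, df_b, df_w = one_way_anova([classroom, clinical, osce])
print(f_stat, df_b, df_w)
```

Converting the F statistic to a p-value requires the F distribution, which statistical packages provide; the sketch stops at the statistic to stay within the standard library.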

Thirty-three students initially agreed to participate; 14 students were observed in all three settings and 14 students had incomplete observations. Five students withdrew from this study – one due to moving clinical schools and being unable to arrange observation and four students withdrew after one observation was conducted. These withdrawals were associated with challenges scheduling further observations and other undisclosed personal reasons. A total of 63 unique observations were included in the final analysis. Table 1 summarises the demographic characteristics of the participants and the number of observations in each setting. Table 2 provides observation time in each setting, and Table 3 shows the average score of students’ performance on each communication skill task.

The overall quantitative performance of students did not differ significantly across settings. The average scores for overall performance in classroom, clinical and OSCE settings were 4.2, 4.3 and 4.2, respectively, corresponding to performances that were satisfactory and appropriate for the students’ study level. Nine of 14 students (64%) with complete observations received the same classification (satisfactory) for all settings. Further analysis showed that performances in the separate components were not statistically significantly different across settings, except for providing structure and closing the session ( p = 0.005 and p = 0.02, respectively). Key differences were found, however, in specific areas of the communication micro-skills across learning environments, and this was probably due to the different opportunities to demonstrate skills. (See Supplementary material for more detailed micro-skills scores.)

We explored the observations from both quantitative and qualitative perspectives, considering both scores and ratings on the CCOG, and descriptions of the observations themselves. Students started the consultation by establishing initial rapport and identifying the reason(s) for the consultation. In the classroom, some students did not perform these tasks as thoroughly as would be expected in a real clinical encounter, in part because many began the role-play as a follow-on from a peer. In the clinical environment, students had longer and unhurried discussions with patients, and the tasks associated with initiating the session were performed well. During the OSCE, students often rushed to enter the room, sanitise their hands, and greet the patient before they had even reached their chair.