Type 1 and Type 2 Errors in Statistics

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty). Because a p -value is based on probabilities, there is always a chance of making an incorrect conclusion regarding accepting or rejecting the null hypothesis ( H 0 ).

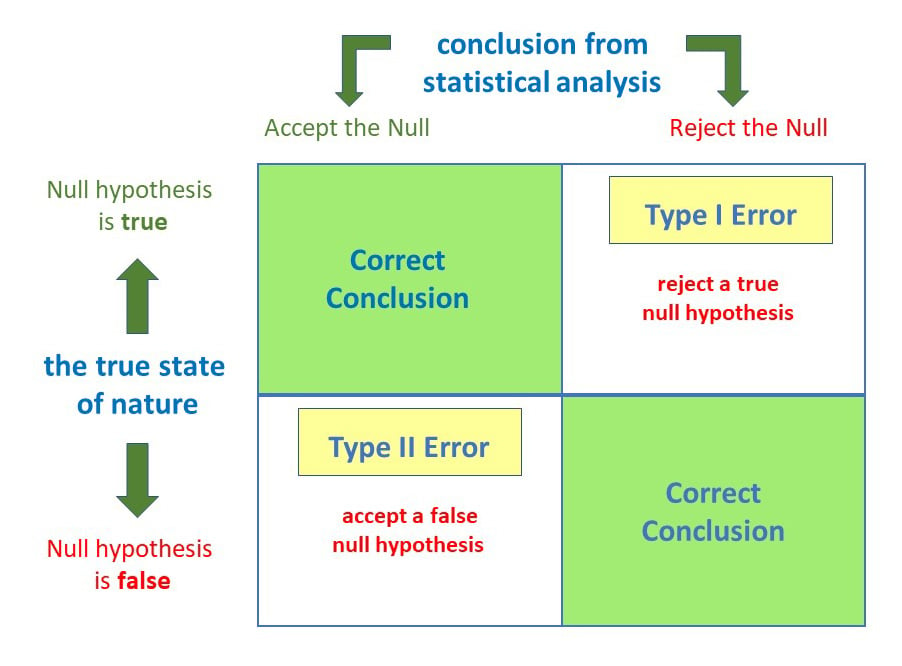

Anytime we make a decision using statistics, there are four possible outcomes, with two representing correct decisions and two representing errors.

The chances of committing these two types of errors trade off against each other: for a given sample size and effect size, decreasing the type I error rate increases the type II error rate, and vice versa.

As the significance level (α) increases, it becomes easier to reject the null hypothesis, decreasing the chance of missing a real effect (Type II error, β). If the significance level (α) goes down, it becomes harder to reject the null hypothesis, increasing the chance of missing an effect while reducing the risk of falsely finding one (Type I error).
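The trade-off described above can be made concrete with a small calculation. The sketch below assumes a one-sided, one-sample z-test with known unit variance; the function name `type_ii_error`, the effect size of 0.5 standard deviations, and the sample size of 20 are illustrative choices, not values from the article.

```python
from statistics import NormalDist


def type_ii_error(alpha: float, effect_size: float, n: int) -> float:
    """Beta for a one-sided, one-sample z-test with known unit variance.

    effect_size is the true mean shift in standard-deviation units
    (a hypothetical value chosen for illustration).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)   # rejection threshold under H0
    shift = effect_size * n ** 0.5           # mean of the test statistic under H1
    return std_normal.cdf(z_crit - shift)    # P(fail to reject | H1 is true)


# Lowering alpha raises beta when the effect size and sample size stay fixed.
for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error(alpha, effect_size=0.5, n=20)
    print(f"alpha={alpha:.2f}  beta={beta:.3f}  power={1 - beta:.3f}")
```

Under these assumptions, each step down in α moves the rejection threshold further out, so a larger share of real effects falls short of it.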

Type I error

A type 1 error is also known as a false positive and occurs when a researcher incorrectly rejects a true null hypothesis. Simply put, it’s a false alarm.

This means that you report that your findings are significant when they have occurred by chance.

The probability of making a type 1 error is represented by your alpha level (α), the p-value threshold below which you reject the null hypothesis.

An alpha level of 0.05 indicates that you are willing to accept a 5% chance of rejecting the null hypothesis when it is actually true.

You can reduce your risk of committing a type 1 error by setting a lower alpha level (like α = 0.01). For example, an alpha level of 0.01 would mean there is a 1% chance of committing a Type I error when the null hypothesis is true.

However, using a lower value for alpha means that you will be less likely to detect a true difference if one really exists (thus risking a type II error).
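A simulation is one way to see what the alpha level controls. The sketch below repeatedly draws samples from a population in which the null hypothesis is true (mean 0, known standard deviation 1) and counts how often a two-sided z-test rejects it. The function name `false_positive_rate`, the sample size, the number of trials, and the seed are all assumptions made for illustration.

```python
import random
from statistics import NormalDist


def false_positive_rate(alpha: float, n: int = 30, trials: int = 10_000, seed: int = 0) -> float:
    """Estimate how often a two-sided z-test rejects a null hypothesis that is true."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # H0 is true: mean is 0
        z = (sum(sample) / n) * n ** 0.5                   # z statistic, sigma known to be 1
        p_value = 2 * (1 - std_normal.cdf(abs(z)))         # two-sided p-value
        if p_value < alpha:
            rejections += 1                                # a false alarm
    return rejections / trials


for alpha in (0.05, 0.01):
    print(f"alpha={alpha:.2f}  estimated Type I error rate={false_positive_rate(alpha):.4f}")
```

In this idealized setting the estimated false-positive rate should land close to whichever α is chosen, which is why lowering α lowers the Type I error risk.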

Scenario: Drug Efficacy Study

Imagine a pharmaceutical company is testing a new drug, named “MediCure”, to determine if it’s more effective than a placebo at reducing fever. They run an experiment with two groups: one receives MediCure, and the other receives a placebo.

- Null Hypothesis (H0) : MediCure is no more effective at reducing fever than the placebo.

- Alternative Hypothesis (H1) : MediCure is more effective at reducing fever than the placebo.

After conducting the study and analyzing the results, the researchers found a p-value of 0.04.

If they use an alpha (α) level of 0.05, this p-value is considered statistically significant, leading them to reject the null hypothesis and conclude that MediCure is more effective than the placebo.

However, suppose MediCure has no actual effect, and the observed difference was due to random variation or some confounding factor. In this case, the researchers have incorrectly rejected a true null hypothesis.

Error : The researchers have made a Type 1 error by concluding that MediCure is more effective when it isn’t.

Implications

Resource Allocation : Making a Type I error can lead to wastage of resources. If a business believes a new strategy is effective when it’s not (based on a Type I error), they might allocate significant financial and human resources toward that ineffective strategy.

Unnecessary Interventions : In medical trials, a Type I error might lead to the belief that a new treatment is effective when it isn’t. As a result, patients might undergo unnecessary treatments, risking potential side effects without any benefit.

Reputation and Credibility : For researchers, making repeated Type I errors can harm their professional reputation. If they frequently claim groundbreaking results that are later refuted, their credibility in the scientific community might diminish.

Type II error

A type 2 error (or false negative) happens when you fail to reject the null hypothesis when it should actually be rejected.

Here, a researcher concludes there is not a significant effect when actually there really is.

The probability of making a type II error is called Beta (β), which is related to the power of the statistical test (power = 1- β). You can decrease your risk of committing a type II error by ensuring your test has enough power.

You can do this by ensuring your sample size is large enough to detect a practical difference when one truly exists.
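As a rough illustration of how sample size is tied to power, the sketch below uses the standard normal-approximation formula for comparing two group means with a two-sided test. The function name `n_per_group` and the standardized effect size of 0.5 are hypothetical; a real study would plug in its own expected effect and might use a t-based or software-based calculation instead.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per group (two-sided test, two equal groups).

    effect_size is the expected difference in means divided by the common
    standard deviation. Uses the normal approximation
    n = 2 * ((z_(1 - alpha/2) + z_(power)) / effect_size) ** 2.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value for the chosen alpha
    z_power = std_normal.inv_cdf(power)          # quantile matching the desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


print(n_per_group(0.5, power=0.80))  # 63 per group under this approximation
print(n_per_group(0.5, power=0.90))  # 85 per group under this approximation
```

Asking for more power, a smaller α, or the ability to detect a smaller effect all push the required sample size up.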

Scenario: Efficacy of a New Teaching Method

Educational psychologists are investigating the potential benefits of a new interactive teaching method, named “EduInteract”, which utilizes virtual reality (VR) technology to teach history to middle school students.

They hypothesize that this method will lead to better retention and understanding compared to the traditional textbook-based approach.

- Null Hypothesis (H0) : The EduInteract VR teaching method does not result in significantly better retention and understanding of history content than the traditional textbook method.

- Alternative Hypothesis (H1) : The EduInteract VR teaching method results in significantly better retention and understanding of history content than the traditional textbook method.

The researchers designed an experiment where one group of students learns a history module using the EduInteract VR method, while a control group learns the same module using a traditional textbook.

After a week, the students’ retention and understanding are tested using a standardized assessment.

Upon analyzing the results, the psychologists found a p-value of 0.06. Using an alpha (α) level of 0.05, this p-value isn’t statistically significant.

Therefore, they fail to reject the null hypothesis and conclude that the EduInteract VR method isn’t more effective than the traditional textbook approach.

However, let’s assume that in the real world, the EduInteract VR truly enhances retention and understanding, but the study failed to detect this benefit due to reasons like small sample size, variability in students’ prior knowledge, or perhaps the assessment wasn’t sensitive enough to detect the nuances of VR-based learning.

Error : By concluding that the EduInteract VR method isn’t more effective than the traditional method when it is, the researchers have made a Type 2 error.

This could prevent schools from adopting a potentially superior teaching method that might benefit students’ learning experiences.

Missed Opportunities : A Type II error can lead to missed opportunities for improvement or innovation. For example, in education, if a more effective teaching method is overlooked because of a Type II error, students might miss out on a better learning experience.

Potential Risks : In healthcare, a Type II error might mean overlooking a harmful side effect of a medication because the research didn’t detect its harmful impacts. As a result, patients might continue using a harmful treatment.

Stagnation : In the business world, making a Type II error can result in continued investment in outdated or less efficient methods. This can lead to stagnation and the inability to compete effectively in the marketplace.

How do Type I and Type II errors relate to psychological research and experiments?

Type I errors are like false alarms, while Type II errors are like missed opportunities. Both errors can impact the validity and reliability of psychological findings, so researchers strive to minimize them to draw accurate conclusions from their studies.

How does sample size influence the likelihood of Type I and Type II errors in psychological research?

Sample size in psychological research influences the likelihood of Type I and Type II errors differently. The Type I error rate is set by the alpha level the researchers choose, so a larger sample size does not by itself make it less likely that they will mistakenly find a significant effect when there isn’t one.

What a larger sample size does is increase the chances of detecting true effects, reducing the likelihood of Type II errors.
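A short calculation can show how sample size enters each error rate. The sketch below assumes a two-sided, one-sample z-test with known unit variance; `rejection_probability` is a hypothetical helper, and the effect size of 0.3 is an arbitrary illustrative value. With an effect size of 0 the null hypothesis is true, so the rejection probability is the Type I error rate; with a non-zero effect size it is the power.

```python
from statistics import NormalDist


def rejection_probability(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """P(reject H0) for a two-sided, one-sample z-test with known unit variance."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * n ** 0.5  # mean of the z statistic given the true effect
    # Probability the statistic lands in the upper or the lower rejection region.
    return std_normal.cdf(-z_crit + shift) + std_normal.cdf(-z_crit - shift)


for n in (10, 50, 200):
    type_i = rejection_probability(0.0, n)  # null true: false-positive rate
    power = rejection_probability(0.3, n)   # null false: chance of detecting the effect
    print(f"n={n:<4} Type I rate={type_i:.3f}  power={power:.3f}  beta={1 - power:.3f}")
```

In this idealized setting the false-positive rate stays at α for every n, while power climbs (and β falls) as n grows.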

Are there any ethical implications associated with Type I and Type II errors in psychological research?

Yes, there are ethical implications associated with Type I and Type II errors in psychological research.

Type I errors may lead to false positive findings, resulting in misleading conclusions and potentially wasting resources on ineffective interventions. This can harm individuals who are falsely diagnosed or receive unnecessary treatments.

Type II errors, on the other hand, may result in missed opportunities to identify important effects or relationships, leading to a lack of appropriate interventions or support. This can also have negative consequences for individuals who genuinely require assistance.

Therefore, minimizing these errors is crucial for ethical research and ensuring the well-being of participants.



scientific hypothesis


scientific hypothesis, an idea that proposes a tentative explanation about a phenomenon or a narrow set of phenomena observed in the natural world. The two primary features of a scientific hypothesis are falsifiability and testability, which are reflected in an “If…then” statement summarizing the idea and in the ability to be supported or refuted through observation and experimentation. The notion of the scientific hypothesis as both falsifiable and testable was advanced in the mid-20th century by Austrian-born British philosopher Karl Popper.

The formulation and testing of a hypothesis is part of the scientific method, the approach scientists use when attempting to understand and test ideas about natural phenomena. The generation of a hypothesis frequently is described as a creative process and is based on existing scientific knowledge, intuition, or experience. Therefore, although scientific hypotheses commonly are described as educated guesses, they actually are more informed than a guess. In addition, scientists generally strive to develop simple hypotheses, since these are easier to test relative to hypotheses that involve many different variables and potential outcomes. Such complex hypotheses may be developed as scientific models (see scientific modeling).

Depending on the results of scientific evaluation, a hypothesis typically is either rejected as false or accepted as true. However, because a hypothesis inherently is falsifiable, even hypotheses supported by scientific evidence and accepted as true are susceptible to rejection later, when new evidence has become available. In some instances, rather than rejecting a hypothesis because it has been falsified by new evidence, scientists simply adapt the existing idea to accommodate the new information. In this sense a hypothesis is never incorrect but only incomplete.

The investigation of scientific hypotheses is an important component in the development of scientific theory. Hence, hypotheses differ fundamentally from theories; whereas the former is a specific tentative explanation and serves as the main tool by which scientists gather data, the latter is a broad general explanation that incorporates data from many different scientific investigations undertaken to explore hypotheses.

Countless hypotheses have been developed and tested throughout the history of science. Several examples include the idea that living organisms develop from nonliving matter, which formed the basis of spontaneous generation, a hypothesis that ultimately was disproved (first in 1668, with the experiments of Italian physician Francesco Redi, and later in 1859, with the experiments of French chemist and microbiologist Louis Pasteur); the concept proposed in the late 19th century that microorganisms cause certain diseases (now known as germ theory); and the notion that oceanic crust forms along submarine mountain zones and spreads laterally away from them (seafloor spreading hypothesis).


An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors

Priya Ranganathan

1 Department of Anesthesiology, Critical Care and Pain, Tata Memorial Hospital, Mumbai, Maharashtra, India

2 Department of Surgical Oncology, Tata Memorial Centre, Mumbai, Maharashtra, India

The second article in this series on biostatistics covers the concepts of sample, population, research hypotheses and statistical errors.

How to cite this article

Ranganathan P, Pramesh CS. An Introduction to Statistics: Understanding Hypothesis Testing and Statistical Errors. Indian J Crit Care Med 2019;23(Suppl 3):S230–S231.

Two papers quoted in this issue of the Indian Journal of Critical Care Medicine report the results of studies that aim to prove that a new intervention is better than (superior to) an existing treatment. In the ABLE study, the investigators wanted to show that transfusion of fresh red blood cells would be superior to standard-issue red cells in reducing 90-day mortality in ICU patients (1). The PROPPR study was designed to prove that transfusion of a lower ratio of plasma and platelets to red cells would be superior to a higher ratio in decreasing 24-hour and 30-day mortality in critically ill patients (2). These studies are known as superiority studies (as opposed to noninferiority or equivalence studies which will be discussed in a subsequent article).

SAMPLE VERSUS POPULATION

A sample represents a group of participants selected from the entire population. Since studies cannot be carried out on entire populations, researchers choose samples, which are representative of the population. This is similar to walking into a grocery store and examining a few grains of rice or wheat before purchasing an entire bag; we assume that the few grains that we select (the sample) are representative of the entire sack of grains (the population).

The results of the study are then extrapolated to generate inferences about the population. We do this using a process known as hypothesis testing. This means that the results of the study may not always be identical to the results we would expect to find in the population; i.e., there is the possibility that the study results may be erroneous.
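The gap between a sample and its population can be seen in a toy simulation. Everything in the sketch below is made up for illustration: the simulated population (normally distributed values with mean 120 and standard deviation 15), the seed, and the helper name `sample_mean`.

```python
import random

# A hypothetical population of 100,000 measurements (values invented for illustration).
population_rng = random.Random(42)
population = [population_rng.gauss(120.0, 15.0) for _ in range(100_000)]
population_mean = sum(population) / len(population)


def sample_mean(values: list, n: int, rng: random.Random) -> float:
    """Mean of a simple random sample of size n drawn without replacement."""
    sample = rng.sample(values, n)
    return sum(sample) / n


rng = random.Random(7)
for n in (10, 100, 2500):
    estimate = sample_mean(population, n, rng)
    print(f"n={n:<5} sample mean={estimate:.2f}  population mean={population_mean:.2f}")
```

Each sample mean is only an estimate of the population mean; larger samples tend to land closer to it, but no finite sample is guaranteed to match it exactly.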

HYPOTHESIS TESTING

A clinical trial begins with an assumption or belief, and then proceeds to either prove or disprove this assumption. In statistical terms, this belief or assumption is known as a hypothesis. Counterintuitively, what the researcher believes in (or is trying to prove) is called the “alternate” hypothesis, and the opposite is called the “null” hypothesis; every study has a null hypothesis and an alternate hypothesis. For superiority studies, the alternate hypothesis states that one treatment (usually the new or experimental treatment) is superior to the other; the null hypothesis states that there is no difference between the treatments (the treatments are equal). For example, in the ABLE study, we start by stating the null hypothesis—there is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs. We then state the alternate hypothesis—There is a difference between groups receiving fresh RBCs and standard-issue RBCs. It is important to note that we have stated that the groups are different, without specifying which group will be better than the other. This is known as a two-tailed hypothesis and it allows us to test for superiority on either side (using a two-sided test). This is because, when we start a study, we are not 100% certain that the new treatment can only be better than the standard treatment—it could be worse, and if it is so, the study should pick it up as well. One tailed hypothesis and one-sided statistical testing is done for non-inferiority studies, which will be discussed in a subsequent paper in this series.
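The difference between one-sided and two-sided testing can be sketched numerically. The example below assumes the test statistic is a z score that follows a standard normal distribution under the null hypothesis; the function name `p_values` and the example z scores are illustrative assumptions.

```python
from statistics import NormalDist


def p_values(z: float) -> dict:
    """One-sided and two-sided p-values for a z statistic (standard normal under H0)."""
    std_normal = NormalDist()
    upper_tail = 1 - std_normal.cdf(z)  # evidence that the new treatment is better
    lower_tail = std_normal.cdf(z)      # evidence that the new treatment is worse
    return {
        "two_sided": 2 * min(upper_tail, lower_tail),  # a difference in either direction
        "greater": upper_tail,
        "less": lower_tail,
    }


# A positive and a negative z score of the same size give the same two-sided p-value.
for z in (1.96, -1.96):
    result = p_values(z)
    print(z, {name: round(value, 4) for name, value in result.items()})
```

A two-sided test treats a large difference in either direction as evidence against the null hypothesis, so it can pick up a new treatment that is worse as well as one that is better.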

STATISTICAL ERRORS

There are two possibilities to consider when interpreting the results of a superiority study. The first possibility is that there is truly no difference between the treatments but the study finds that they are different. This is called a Type-1 error or false-positive error or alpha error. This means falsely rejecting the null hypothesis.

The second possibility is that there is a difference between the treatments and the study does not pick up this difference. This is called a Type 2 error or false-negative error or beta error. This means falsely accepting the null hypothesis.

The power of the study is the ability to detect a difference between groups and is the converse of the beta error; i.e., power = 1-beta error. Alpha and beta errors are finalized when the protocol is written and form the basis for sample size calculation for the study. In an ideal world, we would not like any error in the results of our study; however, we would need to do the study in the entire population (infinite sample size) to be able to get a 0% alpha and beta error. These two errors enable us to do studies with realistic sample sizes, with the compromise that there is a small possibility that the results may not always reflect the truth. The basis for this will be discussed in a subsequent paper in this series dealing with sample size calculation.

Conventionally, type 1 or alpha error is set at 5%. This means, that at the end of the study, if there is a difference between groups, we want to be 95% certain that this is a true difference and allow only a 5% probability that this difference has occurred by chance (false positive). Type 2 or beta error is usually set between 10% and 20%; therefore, the power of the study is 90% or 80%. This means that if there is a difference between groups, we want to be 80% (or 90%) certain that the study will detect that difference. For example, in the ABLE study, sample size was calculated with a type 1 error of 5% (two-sided) and power of 90% (type 2 error of 10%) (1).

Table 1 gives a summary of the two types of statistical errors with an example.

Table 1: Statistical errors

(a) Types of statistical errors

| Null hypothesis is actually | Study finds: null hypothesis is true | Study finds: null hypothesis is false |
|---|---|---|
| True | Correct results! | Falsely rejecting null hypothesis - Type I error |
| False | Falsely accepting null hypothesis - Type II error | Correct results! |

(b) Possible statistical errors in the ABLE trial

| Truth | Study finds: there is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs | Study finds: there is a difference in mortality between groups receiving fresh RBCs and standard-issue RBCs |
|---|---|---|
| There is no difference in mortality between groups receiving fresh RBCs and standard-issue RBCs | Correct results! | Falsely rejecting null hypothesis - Type I error |
| There is a difference in mortality between groups receiving fresh RBCs and standard-issue RBCs | Falsely accepting null hypothesis - Type II error | Correct results! |

In the next article in this series, we will look at the meaning and interpretation of ‘ p ’ value and confidence intervals for hypothesis testing.

Source of support: Nil

Conflict of interest: None


6a.1 - Introduction to Hypothesis Testing

Basic Terms

The first step in hypothesis testing is to set up two competing hypotheses. The hypotheses are the most important aspect. If the hypotheses are incorrect, your conclusion will also be incorrect.

The two hypotheses are named the null hypothesis and the alternative hypothesis.

The goal of hypothesis testing is to see if there is enough evidence against the null hypothesis. In other words, to see if there is enough evidence to reject the null hypothesis. If there is not enough evidence, then we fail to reject the null hypothesis.

Consider the following example where we set up these hypotheses.

Example 6-1

A man, Mr. Orangejuice, goes to trial and is tried for the murder of his ex-wife. He is either guilty or innocent. Set up the null and alternative hypotheses for this example.

Putting this in a hypothesis testing framework, the hypotheses being tested are:

- The man is guilty

- The man is innocent

Let's set up the null and alternative hypotheses.

\(H_0\colon \) Mr. Orangejuice is innocent

\(H_a\colon \) Mr. Orangejuice is guilty

Remember that we assume the null hypothesis is true and try to see if we have evidence against the null. Therefore, it makes sense in this example to assume the man is innocent and test to see if there is evidence that he is guilty.

The Logic of Hypothesis Testing

We want to know the answer to a research question. We determine our null and alternative hypotheses. Now it is time to make a decision.

The decision is either going to be...

- reject the null hypothesis or...

- fail to reject the null hypothesis.

Consider the following table. The table shows the decision/conclusion of the hypothesis test and the unknown "reality", or truth. We do not know if the null is true or if it is false. If the null is false and we reject it, then we made the correct decision. If the null hypothesis is true and we fail to reject it, then we made the correct decision.

| Decision | Reality: \(H_0\) is true | Reality: \(H_0\) is false |
|---|---|---|
| Reject \(H_0\) (conclude \(H_a\)) | | Correct decision |
| Fail to reject \(H_0\) | Correct decision | |

So what happens when we do not make the correct decision?

When doing hypothesis testing, two types of mistakes may be made and we call them Type I error and Type II error. If we reject the null hypothesis when it is true, then we made a type I error. If the null hypothesis is false and we failed to reject it, we made another error called a Type II error.

| Decision | Reality: \(H_0\) is true | Reality: \(H_0\) is false |
|---|---|---|
| Reject \(H_0\) (conclude \(H_a\)) | Type I error | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Type II error |

Types of errors

The “reality”, or truth, about the null hypothesis is unknown and therefore we do not know if we have made the correct decision or if we committed an error. We can, however, define the likelihood of these events.

\(\alpha\) (the probability of a Type I error) and \(\beta\) (the probability of a Type II error) are probabilities of committing an error, so we want these values to be low. However, for a fixed sample size we cannot decrease both. As \(\alpha\) decreases, \(\beta\) increases.
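The four cells of the decision table above can also be written as a small lookup. The sketch below is only an illustration; the function name `classify_outcome` and its argument names are made up here. In practice the first argument is never known, which is exactly why the error probabilities are needed.

```python
def classify_outcome(null_is_true: bool, reject_null: bool) -> str:
    """Name the outcome of a hypothesis test, given the (normally unknown) reality."""
    if reject_null:
        # Rejecting a true null is a false positive.
        return "Type I error" if null_is_true else "Correct decision"
    # Failing to reject a false null is a false negative.
    return "Correct decision" if null_is_true else "Type II error"


for null_is_true in (True, False):
    for reject_null in (True, False):
        outcome = classify_outcome(null_is_true, reject_null)
        print(f"H0 true={null_is_true!s:<5} reject H0={reject_null!s:<5} -> {outcome}")
```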

Example 6-1 Cont'd...

A man, Mr. Orangejuice, goes to trial and is tried for the murder of his ex-wife. He is either guilty or not guilty. We found before that...

- \( H_0\colon \) Mr. Orangejuice is innocent

- \( H_a\colon \) Mr. Orangejuice is guilty

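Interpret Type I error, \(\alpha \), Type II error, \(\beta \).

A Type I error would be rejecting the null hypothesis when it is true: finding Mr. Orangejuice guilty when he is innocent. A Type II error would be failing to reject the null hypothesis when it is false: letting him go free when he is guilty. Here, the Type I error (putting an innocent man in jail) is the more serious error. Ethically, it is more serious to put an innocent man in jail than to let a guilty man go free. So to minimize the probability of a type I error we would choose a smaller significance level.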

Try it!

An inspector has to choose between certifying a building as safe or saying that the building is not safe. There are two hypotheses:

- Building is safe

- Building is not safe

Set up the null and alternative hypotheses. Interpret Type I and Type II error.

\( H_0\colon\) Building is not safe vs \(H_a\colon \) Building is safe

| Decision | Reality: \(H_0\) is true | Reality: \(H_0\) is false |
|---|---|---|
| Reject \(H_0\) (conclude \(H_a\)) | Reject "building is not safe" when it is not safe (Type I Error) | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Failing to reject "building is not safe" when it is safe (Type II Error) |

Power and \(\beta \) are complements of each other. Therefore, they have an inverse relationship, i.e. as one increases, the other decreases.


How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question which is then explored through background research. At this point, researchers then begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this guess is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

In many cases, researchers might draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high-stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low-stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes take falsifiability to mean that a claim is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and one dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than students who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on exactly what they are studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.

By Kendra Cherry, MSEd

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

What 'Fail to Reject' Means in a Hypothesis Test

- Ph.D., Mathematics, Purdue University

- M.S., Mathematics, Purdue University

- B.A., Mathematics, Physics, and Chemistry, Anderson University

In statistics, scientists can perform a number of different significance tests to determine if there is a relationship between two phenomena. One of the first they usually perform is a null hypothesis test. In short, the null hypothesis states that there is no meaningful relationship between two measured phenomena. After performing a test, scientists can:

- Reject the null hypothesis (meaning there is a definite, consequential relationship between the two phenomena), or

- Fail to reject the null hypothesis (meaning the test has not identified a consequential relationship between the two phenomena)

Key Takeaways: The Null Hypothesis

• In a test of significance, the null hypothesis states that there is no meaningful relationship between two measured phenomena.

• By comparing the null hypothesis to an alternative hypothesis, scientists can either reject or fail to reject the null hypothesis.

• The null hypothesis cannot be positively proven. Rather, all that scientists can determine from a test of significance is that the evidence collected does or does not disprove the null hypothesis.

It is important to note that a failure to reject does not mean that the null hypothesis is true—only that the test did not prove it to be false. In some cases, depending on the experiment, a relationship may exist between two phenomena that is not identified by the experiment. In such cases, new experiments must be designed to rule out alternative hypotheses.

Null vs. Alternative Hypothesis

The null hypothesis is considered the default in a scientific experiment. In contrast, an alternative hypothesis is one that claims that there is a meaningful relationship between two phenomena. These two competing hypotheses can be compared by performing a statistical hypothesis test, which determines whether there is a statistically significant relationship between the data.

For example, scientists studying the water quality of a stream may wish to determine whether a certain chemical affects the acidity of the water. The null hypothesis—that the chemical has no effect on the water quality—can be tested by measuring the pH level of two water samples, one of which contains some of the chemical and one of which has been left untouched. If the sample with the added chemical is measurably more or less acidic—as determined through statistical analysis—it is a reason to reject the null hypothesis. If the sample's acidity is unchanged, it is a reason to not reject the null hypothesis.

When scientists design experiments, they attempt to find evidence for the alternative hypothesis. They do not try to prove that the null hypothesis is true. The null hypothesis is assumed to be an accurate statement until contrary evidence proves otherwise. As a result, a test of significance does not produce any evidence pertaining to the truth of the null hypothesis.

Failing to Reject vs. Accept

In an experiment, the null hypothesis and the alternative hypothesis should be carefully formulated such that one and only one of these statements is true. If the collected data supports the alternative hypothesis, then the null hypothesis can be rejected as false. However, if the data does not support the alternative hypothesis, this does not mean that the null hypothesis is true. All it means is that the null hypothesis has not been disproven—hence the term "failure to reject." A "failure to reject" a hypothesis should not be confused with acceptance.

In mathematics, negations are typically formed by simply placing the word “not” in the correct place. Using this convention, tests of significance allow scientists to either reject or not reject the null hypothesis. It sometimes takes a moment to realize that “not rejecting” is not the same as "accepting."

Null Hypothesis Example

In many ways, the philosophy behind a test of significance is similar to that of a trial. At the beginning of the proceedings, when the defendant enters a plea of “not guilty,” it is analogous to the statement of the null hypothesis. While the defendant may indeed be innocent, there is no plea of “innocent” to be formally made in court. The alternative hypothesis of “guilty” is what the prosecutor attempts to demonstrate.

The presumption at the outset of the trial is that the defendant is innocent. In theory, there is no need for the defendant to prove that he or she is innocent. The burden of proof is on the prosecuting attorney, who must marshal enough evidence to convince the jury that the defendant is guilty beyond a reasonable doubt. Likewise, in a test of significance, a scientist can only reject the null hypothesis by providing evidence for the alternative hypothesis.

If there is not enough evidence in a trial to demonstrate guilt, then the defendant is declared “not guilty.” This claim has nothing to do with innocence; it merely reflects the fact that the prosecution failed to provide enough evidence of guilt. In a similar way, a failure to reject the null hypothesis in a significance test does not mean that the null hypothesis is true. It only means that the scientist was unable to provide enough evidence for the alternative hypothesis.

For example, scientists testing the effects of a certain pesticide on crop yields might design an experiment in which some crops are left untreated and others are treated with varying amounts of pesticide. Any result in which the crop yields varied based on pesticide exposure—assuming all other variables are equal—would provide strong evidence for the alternative hypothesis (that the pesticide does affect crop yields). As a result, the scientists would have reason to reject the null hypothesis.

Statistics By Jim

Making statistics intuitive

Null Hypothesis: Definition, Rejecting & Examples

By Jim Frost

What is a Null Hypothesis?

The null hypothesis in statistics states that there is no difference between groups or no relationship between variables. It is one of two mutually exclusive hypotheses about a population in a hypothesis test.

- Null Hypothesis H₀: No effect exists in the population.

- Alternative Hypothesis Hₐ: The effect exists in the population.

In every study or experiment, researchers assess an effect or relationship. This effect can be the effectiveness of a new drug, building material, or other intervention that has benefits. There is a benefit or connection that the researchers hope to identify. Unfortunately, no effect may exist. In statistics, we call this lack of an effect the null hypothesis. Researchers assume that this notion of no effect is correct until they have enough evidence to suggest otherwise, similar to how a trial presumes innocence.

In this context, the analysts don’t necessarily believe the null hypothesis is correct. In fact, they typically want to reject it because that leads to more exciting finds about an effect or relationship. The new vaccine works!

You can think of it as the default theory that requires sufficiently strong evidence to reject. Like a prosecutor, researchers must collect sufficient evidence to overturn the presumption of no effect. Investigators must work hard to set up a study and a data collection system to obtain evidence that can reject the null hypothesis.

Null Hypothesis Examples

Null hypotheses start as research questions that the investigator rephrases as a statement indicating there is no effect or relationship.

| Research Question | Null Hypothesis |
|---|---|
| Does the vaccine prevent infections? | The vaccine does not affect the infection rate. |
| Does the new additive increase product strength? | The additive does not affect mean product strength. |
| Does the exercise intervention increase bone mineral density? | The intervention does not affect bone mineral density. |
| As screen time increases, does test performance decrease? | There is no relationship between screen time and test performance. |

After reading these examples, you might think they’re a bit boring and pointless. However, the key is to remember that the null hypothesis defines the condition that the researchers need to discredit before suggesting an effect exists.

Let’s see how you reject the null hypothesis and get to those more exciting findings!

When to Reject the Null Hypothesis

So, you want to reject the null hypothesis, but how and when can you do that? To start, you’ll need to perform a statistical test on your data. The following is an overview of performing a study that uses a hypothesis test.

The first step is to devise a research question and the appropriate null hypothesis. After that, the investigators need to formulate an experimental design and data collection procedures that will allow them to gather data that can answer the research question. Then they collect the data. For more information about designing a scientific study that uses statistics, read my post 5 Steps for Conducting Studies with Statistics.

After data collection is complete, statistics and hypothesis testing enter the picture. Hypothesis testing takes your sample data and evaluates how consistent they are with the null hypothesis. The p-value is a crucial part of the statistical results because it quantifies how strongly the sample data contradict the null hypothesis.

When the sample data provide sufficient evidence, you can reject the null hypothesis. In a hypothesis test, this process involves comparing the p-value to your significance level.

Rejecting the Null Hypothesis

Reject the null hypothesis when the p-value is less than or equal to your significance level. Your sample data favor the alternative hypothesis, which suggests that the effect exists in the population. For a mnemonic device, remember—when the p-value is low, the null must go!

When you can reject the null hypothesis, your results are statistically significant. Learn more about Statistical Significance: Definition & Meaning.

Failing to Reject the Null Hypothesis

Conversely, when the p-value is greater than your significance level, you fail to reject the null hypothesis. The sample data provide insufficient evidence to conclude that the effect exists in the population. When the p-value is high, the null must fly!

Note that failing to reject the null is not the same as proving it. For more information about the difference, read my post about Failing to Reject the Null.
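The decision rule described in this section can be sketched in a few lines of Python. This is only an illustration: the function name `decide` and the default significance level of 0.05 are assumptions made for the example, not part of any statistics library.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the hypothesis-test decision for a given p-value.

    `alpha` is the significance level chosen before the study;
    0.05 is a common convention, assumed here only for illustration.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    # Reject when the p-value is less than or equal to alpha.
    if p_value <= alpha:
        return "reject H0"
    # Otherwise we fail to reject, which is not the same as accepting H0.
    return "fail to reject H0"


print(decide(0.03))  # reject H0
print(decide(0.20))  # fail to reject H0
```

Note that the wording of the second outcome is deliberate: the function never returns "accept H0".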

That’s a very general look at the process. But I hope you can see how the path to more exciting findings depends on being able to rule out the less exciting null hypothesis that states there’s nothing to see here!

Let’s move on to learning how to write the null hypothesis for different types of effects, relationships, and tests.

How to Write a Null Hypothesis

The null hypothesis varies by the type of statistic and hypothesis test. Remember that inferential statistics use samples to draw conclusions about populations. Consequently, when you write a null hypothesis, it must make a claim about the relevant population parameter. Further, that claim usually indicates that the effect does not exist in the population. Below are typical examples of writing a null hypothesis for various parameters and hypothesis tests.

Group Means

T-tests and ANOVA assess the differences between group means. For these tests, the null hypothesis states that there is no difference between group means in the population. In other words, the experimental conditions that define the groups do not affect the mean outcome. Mu (µ) is the population parameter for the mean, and you’ll need to include it in the statement for this type of study.

For example, an experiment compares the mean bone density changes for a new osteoporosis medication. The control group does not receive the medicine, while the treatment group does. The null states that the mean bone density changes for the control and treatment groups are equal.

- Null Hypothesis H₀: Group means are equal in the population: µ₁ = µ₂, or µ₁ – µ₂ = 0.

- Alternative Hypothesis Hₐ: Group means are not equal in the population: µ₁ ≠ µ₂, or µ₁ – µ₂ ≠ 0.
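As a rough sketch of how the null hypothesis of equal group means might be tested, the following uses a permutation test rather than the t-test mentioned above, because it needs only the Python standard library. The function name, the number of resamples, and the bone density values are all made up for illustration.

```python
import random
from statistics import mean


def permutation_test_means(group_a, group_b, n_resamples=10_000, seed=0):
    """Two-sided permutation test of H0: the group means are equal.

    Returns the observed difference in sample means and an approximate p-value.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        # Under H0 the group labels are interchangeable, so shuffle them.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one correction keeps the estimate away from exactly zero.
    p_value = (extreme + 1) / (n_resamples + 1)
    return observed, p_value


# Hypothetical bone density changes (percent), invented for this example.
control = [0.1, -0.3, 0.2, 0.0, -0.1, 0.3, -0.2, 0.1]
treatment = [1.2, 0.9, 1.5, 1.1, 0.8, 1.4, 1.0, 1.3]

diff, p = permutation_test_means(treatment, control)
print(f"difference in means = {diff:.4f}, approximate p-value = {p:.4f}")
```

A small p-value here would be a reason to reject the null hypothesis of equal means; with real data, a t-test from a statistics package is the more conventional choice.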

Group Proportions

Proportions tests assess the differences between group proportions. For these tests, the null hypothesis states that there is no difference between group proportions. Again, the experimental conditions did not affect the proportion of events in the groups. P is the population proportion parameter that you’ll need to include.

For example, a vaccine experiment compares the infection rate in the treatment group to the control group. The treatment group receives the vaccine, while the control group does not. The null states that the infection rates for the control and treatment groups are equal.

- Null Hypothesis H₀: Group proportions are equal in the population: p₁ = p₂.

- Alternative Hypothesis Hₐ: Group proportions are not equal in the population: p₁ ≠ p₂.
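One common way to test these hypotheses is a two-proportion z-test with a pooled standard error. The sketch below assumes samples large enough for the normal approximation to be reasonable; the function name and the infection counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(events_1, n_1, events_2, n_2):
    """Two-sided z-test of H0: p1 = p2 using the pooled proportion.

    Relies on the normal approximation, so it assumes reasonably large samples.
    """
    p1_hat = events_1 / n_1
    p2_hat = events_2 / n_2
    # Under H0 both groups share one proportion, estimated by pooling.
    pooled = (events_1 + events_2) / (n_1 + n_2)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    z = (p1_hat - p2_hat) / std_err
    # Two-sided p-value: area in both tails beyond |z|.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 60 of 500 infected (control), 30 of 500 infected (vaccine).
z, p = two_proportion_z_test(60, 500, 30, 500)
print(f"z = {z:.3f}, p-value = {p:.4f}")
```

If the p-value is at or below the chosen significance level, the data favor the alternative that the infection rates differ.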

Correlation and Regression Coefficients

Some studies assess the relationship between two continuous variables rather than differences between groups.

In these studies, analysts often use either correlation or regression analysis. For these tests, the null states that there is no relationship between the variables. Specifically, it says that the correlation or regression coefficient is zero. As one variable increases, there is no tendency for the other variable to increase or decrease. Rho (ρ) is the population correlation parameter and beta (β) is the regression coefficient parameter.

For example, a study assesses the relationship between screen time and test performance. The null states that there is no correlation between this pair of variables. As screen time increases, test performance does not tend to increase or decrease.

- Null Hypothesis H₀: The correlation in the population is zero: ρ = 0.

- Alternative Hypothesis Hₐ: The correlation in the population is not zero: ρ ≠ 0.
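The null hypothesis ρ = 0 can be checked in several ways. The sketch below computes the sample Pearson correlation by hand and estimates a two-sided p-value by shuffling one variable, which is a permutation approach chosen here because it needs no external packages. The helper names and the screen time data are invented for illustration.

```python
import random
from math import sqrt


def pearson_r(x, y):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def permutation_test_correlation(x, y, n_resamples=10_000, seed=0):
    """Two-sided permutation test of H0: no correlation between x and y."""
    rng = random.Random(seed)
    observed = pearson_r(x, y)
    shuffled = list(y)
    extreme = 0
    for _ in range(n_resamples):
        # Shuffling y breaks any pairing with x, mimicking H0.
        rng.shuffle(shuffled)
        if abs(pearson_r(x, shuffled)) >= abs(observed):
            extreme += 1
    return observed, (extreme + 1) / (n_resamples + 1)


# Hypothetical data: daily screen time (hours) and test scores.
screen_time = [1, 2, 3, 4, 5, 6, 7, 8]
test_score = [88, 85, 84, 80, 78, 75, 71, 70]

r, p = permutation_test_correlation(screen_time, test_score)
print(f"r = {r:.3f}, approximate p-value = {p:.4f}")
```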

For all these cases, the analysts define the hypotheses before the study. After collecting the data, they perform a hypothesis test to determine whether they can reject the null hypothesis.

The preceding examples are all for two-tailed hypothesis tests. To learn about one-tailed tests and how to write a null hypothesis for them, read my post One-Tailed vs. Two-Tailed Tests.

Reader Interactions

January 11, 2024 at 2:57 pm

Thanks for the reply.

January 10, 2024 at 1:23 pm

Hi Jim, In your comment you state that equivalence test null and alternate hypotheses are reversed. For hypothesis tests of data fits to a probability distribution, the null hypothesis is that the probability distribution fits the data. Is this correct?

January 10, 2024 at 2:15 pm

Those are two separate things, equivalence testing and normality tests. But, yes, you’re correct for both.

Hypotheses are switched for equivalence testing. You need to “work” (i.e., collect a large sample of good quality data) to be able to reject the null that the groups are different to be able to conclude they’re the same.

With typical hypothesis tests, if you have low quality data and a low sample size, you’ll fail to reject the null that they’re the same, concluding they’re equivalent. But that’s more a statement about the low quality and small sample size than anything to do with the groups being equal.

So, equivalence testing makes you work to obtain a finding that the groups are the same (at least within some amount you define as a trivial difference).

For normality testing, and other distribution tests, the null states that the data follow the distribution (normal or whatever). If you reject the null, you have sufficient evidence to conclude that your sample data don’t follow the probability distribution. That’s a rare case where you hope to fail to reject the null. And it suffers from the problem I describe above where you might fail to reject the null simply because you have a small sample size. In that case, you’d conclude the data follow the probability distribution but it’s more that you don’t have enough data for the test to register the deviation. In this scenario, if you had a larger sample size, you’d reject the null and conclude it doesn’t follow that distribution.

I don’t know of any equivalence testing type approach for distribution fit tests where you’d need to work to show the data follow a distribution, although I haven’t looked for one either!

February 20, 2022 at 9:26 pm

Is a null hypothesis regularly (always) stated in the negative? “there is no” or “does not”

February 23, 2022 at 9:21 pm

Typically, the null hypothesis includes an equal sign. The null hypothesis states that the population parameter equals a particular value. That value is usually one that represents no effect. In the case of a one-sided hypothesis test, the null still contains an equal sign but it’s “greater than or equal to” or “less than or equal to.” If you wanted to translate the null hypothesis from its native mathematical expression, you could use the expression “there is no effect.” But the mathematical form more specifically states what it’s testing.

It’s the alternative hypothesis that typically contains does not equal.

There are some exceptions. For example, in an equivalence test where the researchers want to show that two things are equal, the null hypothesis states that they’re not equal.

In short, the null hypothesis states the condition that the researchers hope to reject. They need to work hard to set up an experiment and data collection that’ll gather enough evidence to be able to reject the null condition.

February 15, 2022 at 9:32 am

Dear sir I always read your notes on Research methods.. Kindly tell is there any available Book on all these..wonderfull Urgent

9.1: Null and Alternative Hypotheses

The actual test begins by considering two hypotheses. They are called the null hypothesis and the alternative hypothesis. These hypotheses contain opposing viewpoints.

\(H_0\): The null hypothesis: It is a statement of no difference between the variables; they are not related. This can often be considered the status quo, and as a result, if you cannot accept the null, some action is required.

\(H_a\): The alternative hypothesis: It is a claim about the population that is contradictory to \(H_0\) and what we conclude when we reject \(H_0\). This is usually what the researcher is trying to prove.

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options for a decision. They are "reject \(H_0\)" if the sample information favors the alternative hypothesis or "do not reject \(H_0\)" or "decline to reject \(H_0\)" if the sample information is insufficient to reject the null hypothesis.

| \(H_0\) | \(H_a\) |
|---|---|
| equal (=) | not equal \((\neq)\) or greater than (>) or less than (<) |
| greater than or equal to \((\geq)\) | less than (<) |
| less than or equal to \((\leq)\) | more than (>) |

\(H_{0}\) always has a symbol with an equal in it. \(H_{a}\) never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers (including one of the co-authors in research work) use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.

Example \(\PageIndex{1}\)

- \(H_{0}\): No more than 30% of the registered voters in Santa Clara County voted in the primary election. \(p \leq 0.30\)

- \(H_{a}\): More than 30% of the registered voters in Santa Clara County voted in the primary election. \(p > 0.30\)
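To make the one-sided form of these hypotheses concrete, here is a minimal sketch of a right-tailed one-sample proportion z-test. It assumes the normal approximation holds; the function name and the survey counts are hypothetical and do not come from the example above.

```python
from math import sqrt
from statistics import NormalDist


def right_tailed_proportion_test(successes, n, p0):
    """z-test of H0: p <= p0 against Ha: p > p0 (normal approximation)."""
    p_hat = successes / n
    # The standard error is evaluated at the boundary value p0 from H0.
    std_err = sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / std_err
    # Only the upper tail counts as evidence for Ha: p > p0.
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


# Hypothetical sample: 170 of 500 surveyed registered voters say they voted.
z, p = right_tailed_proportion_test(170, 500, 0.30)
print(f"z = {z:.3f}, p-value = {p:.4f}")
```

Because the alternative uses ">", a sample proportion below 0.30 can never lead to rejecting \(H_0\) in this test, however far below it falls.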

Exercise \(\PageIndex{1}\)

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25%. State the null and alternative hypotheses.

Answer

- \(H_{0}\): The drug reduces cholesterol by 25%. \(p = 0.25\)

- \(H_{a}\): The drug does not reduce cholesterol by 25%. \(p \neq 0.25\)

Example \(\PageIndex{2}\)

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are:

- \(H_{0}: \mu = 2.0\)

- \(H_{a}: \mu \neq 2.0\)

Exercise \(\PageIndex{2}\)

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol \((=, \neq, \geq, <, \leq, >)\) for the null and alternative hypotheses.

- \(H_{0}: \mu \_ 66\)

- \(H_{a}: \mu \_ 66\)

Answer

- \(H_{0}: \mu = 66\)

- \(H_{a}: \mu \neq 66\)

Example \(\PageIndex{3}\)

We want to test if college students take less than five years to graduate from college, on the average. The null and alternative hypotheses are:

- \(H_{0}: \mu \geq 5\)

- \(H_{a}: \mu < 5\)
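The symbol in \(H_{a}\) determines which tail of the distribution counts as evidence against \(H_{0}\): Example 3 is left-tailed, a "greater than" alternative is right-tailed, and a "not equal" alternative is two-tailed. The Python sketch below illustrates this under the assumption that the test statistic follows a standard normal distribution (the text does not specify a test); the function name `p_value_from_z` and the value z = -2.0 are hypothetical.

```python
from statistics import NormalDist


def p_value_from_z(z: float, alternative: str) -> float:
    """Convert a z statistic to a p-value for the tail implied by the H_a symbol."""
    cdf = NormalDist().cdf  # standard normal cumulative distribution function
    if alternative == "<":   # left-tailed, as in H_a: mu < 5
        return cdf(z)
    if alternative == ">":   # right-tailed
        return 1 - cdf(z)
    if alternative == "≠":   # two-tailed
        return 2 * (1 - cdf(abs(z)))
    raise ValueError(f"unknown alternative symbol {alternative!r}")


# Hypothetical statistic: the sample mean lies 2 standard errors below 5 years.
print(p_value_from_z(-2.0, "<"))
```

With the same z, the two-tailed p-value is twice the left-tailed one, which is why the direction of \(H_{a}\) should be fixed before looking at the data.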

Exercise \(\PageIndex{3}\)

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol \((=, \neq, \geq, <, \leq, >)\) for the null and alternative hypotheses.

- \(H_{0}: \mu \_ 45\)

- \(H_{a}: \mu \_ 45\)

Answer

- \(H_{0}: \mu \geq 45\)

- \(H_{a}: \mu < 45\)

Example \(\PageIndex{4}\)

In an issue of U.S. News and World Report, an article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third pass. The same article stated that 6.6% of U.S. students take advanced placement exams and 4.4% pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6%. State the null and alternative hypotheses.

- \(H_{0}: p \leq 0.066\)

- \(H_{a}: p > 0.066\)
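To show how hypotheses like those in Example 4 could be tested, here is a minimal Python sketch of a right-tailed one-proportion z-test using the normal approximation. The sample figures (80 of 1,000 students) are invented for illustration and are not from the article; the function name `one_proportion_z_test` is also hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def one_proportion_z_test(successes: int, n: int, p0: float) -> tuple[float, float]:
    """Right-tailed z-test of H_0: p <= p0 against H_a: p > p0."""
    p_hat = successes / n                    # sample proportion
    std_error = sqrt(p0 * (1 - p0) / n)      # standard error under the null value p0
    z = (p_hat - p0) / std_error
    p_value = 1 - NormalDist().cdf(z)        # upper-tail area
    return z, p_value


# Hypothetical sample: 80 of 1,000 surveyed U.S. students took an AP exam.
z, p_value = one_proportion_z_test(successes=80, n=1000, p0=0.066)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

The standard error uses the null value 0.066 rather than the sample proportion, because the p-value is computed assuming \(H_{0}\) is true.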

Exercise \(\PageIndex{4}\)

On a state driver’s test, about 40% pass the test on the first try. We want to test if more than 40% pass on the first try. Fill in the correct symbol (\(=, \neq, \geq, <, \leq, >\)) for the null and alternative hypotheses.

- \(H_{0}: p \_ 0.40\)

- \(H_{a}: p \_ 0.40\)

Answer

- \(H_{0}: p = 0.40\)

- \(H_{a}: p > 0.40\)

COLLABORATIVE EXERCISE

Bring to class a newspaper, some news magazines, and some Internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.

In a hypothesis test, sample data is evaluated in order to arrive at a decision about some type of claim. If certain conditions about the sample are satisfied, then the claim can be evaluated for a population. In a hypothesis test, we:

- Evaluate the null hypothesis, typically denoted with \(H_{0}\). The null is not rejected unless the hypothesis test shows otherwise. The null statement must always contain some form of equality \((=, \leq \text{ or } \geq)\).

- Always write the alternative hypothesis, typically denoted with \(H_{a}\) or \(H_{1}\), using less than, greater than, or not equals symbols, i.e., \((\neq, > \text{ or } <)\).

- If we reject the null hypothesis, then we can assume there is enough evidence to support the alternative hypothesis.

- Never state that a claim is proven true or false. Keep in mind the underlying fact that hypothesis testing is based on probability laws; therefore, we can talk only in terms of non-absolute certainties.

Formula Review

\(H_{0}\) and \(H_{a}\) are contradictory.

| \(H_{0}\) has: | equal \((=)\) | greater than or equal to \((\geq)\) | less than or equal to \((\leq)\) |
|---|---|---|---|
| \(H_{a}\) has: | not equal \((\neq)\) or greater than \((>)\) or less than \((<)\) | less than \((<)\) | greater than \((>)\) |

- If \(\alpha \leq p\)-value, then do not reject \(H_{0}\).

- If \(\alpha > p\)-value, then reject \(H_{0}\).
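The two rules above amount to a single comparison. A minimal Python sketch follows; the function name `decide` and the default \(\alpha = 0.05\) are illustrative assumptions, not values prescribed by the text.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the rule: reject H_0 only when alpha is greater than the p-value."""
    if not 0 <= p_value <= 1:
        raise ValueError("p_value must be between 0 and 1")
    if not 0 < alpha < 1:
        raise ValueError("alpha must be strictly between 0 and 1")
    return "reject H0" if alpha > p_value else "do not reject H0"


print(decide(0.03))        # alpha (0.05) exceeds the p-value
print(decide(0.03, 0.01))  # a stricter alpha no longer exceeds it
```

Note that under this rule a p-value exactly equal to \(\alpha\) leads to not rejecting \(H_{0}\).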

\(\alpha\) is chosen in advance: its value is set before the hypothesis test starts. The \(p\)-value is calculated from the data.



The Difference Between Hypothesis and Theory

A hypothesis is an assumption, an idea that is proposed for the sake of argument so that it can be tested to see if it might be true.

In the scientific method, the hypothesis is constructed before any applicable research has been done, apart from a basic background review. You ask a question, read up on what has been studied before, and then form a hypothesis.

A hypothesis is usually tentative; it's an assumption or suggestion made strictly for the objective of being tested.

A theory, in contrast, is a principle that has been formed as an attempt to explain things that have already been substantiated by data. It is used in the names of a number of principles accepted in the scientific community, such as the Big Bang Theory. Because of the rigors of experimentation and control, it is understood to be more likely to be true than a hypothesis is.

In non-scientific use, however, hypothesis and theory are often used interchangeably to mean simply an idea, speculation, or hunch, with theory being the more common choice.

Since this casual use does away with the distinctions upheld by the scientific community, hypothesis and theory are prone to being wrongly interpreted even when they are encountered in scientific contexts—or at least, contexts that allude to scientific study without making the critical distinction that scientists employ when weighing hypotheses and theories.

The most common occurrence is when theory is interpreted—and sometimes even gleefully seized upon—to mean something having less truth value than other scientific principles. (The word law applies to principles so firmly established that they are almost never questioned, such as the law of gravity.)

This mistake is one of projection: since we use theory in general to mean something lightly speculated, then it's implied that scientists must be talking about the same level of uncertainty when they use theory to refer to their well-tested and reasoned principles.

The distinction has come to the forefront particularly on occasions when the content of science curricula in schools has been challenged, notably when a school board in Georgia put stickers on textbooks stating that evolution was "a theory, not a fact, regarding the origin of living things." As Kenneth R. Miller, a cell biologist at Brown University, has said, a theory "doesn’t mean a hunch or a guess. A theory is a system of explanations that ties together a whole bunch of facts. It not only explains those facts, but predicts what you ought to find from other observations and experiments."

While theories are never completely infallible, they form the basis of scientific reasoning because, as Miller said, "to the best of our ability, we’ve tested them, and they’ve held up."

Synonyms

- proposition

- supposition

hypothesis, theory, law mean a formula derived by inference from scientific data that explains a principle operating in nature.

hypothesis implies insufficient evidence to provide more than a tentative explanation.

theory implies a greater range of evidence and greater likelihood of truth.

law implies a statement of order and relation in nature that has been found to be invariable under the same conditions.


Word History

Greek, from hypotithenai to put under, suppose, from hypo- + tithenai to put

First known use: 1641

Phrases Containing hypothesis

- counter-hypothesis

- nebular hypothesis

- null hypothesis

- planetesimal hypothesis

- Whorfian hypothesis


Cite this Entry

“Hypothesis.” Merriam-Webster.com Dictionary, Merriam-Webster, https://www.merriam-webster.com/dictionary/hypothesis. Accessed 23 Jun. 2024.


Null & Alternative Hypotheses | Definitions, Templates & Examples

Published on May 6, 2022 by Shaun Turney. Revised on June 22, 2023.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (\(H_{0}\)): There’s no effect in the population.

- Alternative hypothesis (\(H_{a}\) or \(H_{1}\)): There’s an effect in the population.


The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”:

- The null hypothesis (\(H_{0}\)) answers “No, there’s no effect in the population.”

- The alternative hypothesis (\(H_{a}\)) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample. It’s critical for your research to write strong hypotheses.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.


The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (\(p \leq \alpha\)), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept. Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect,” “no difference,” or “no relationship.” When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

You can never know with complete certainty whether there is an effect in the population. Some percentage of the time, your inference about the population will be incorrect. When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s a type II error.
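The idea that an inference is wrong some percentage of the time can be checked by simulation. The Python sketch below is illustrative only and rests on assumptions that are not from the text: normally distributed data with a known standard deviation of 1, a two-sided z-test of \(H_{0}: \mu = 0\), and arbitrary settings (n = 30, 10,000 trials, a fixed seed). Because the null hypothesis is true in every simulated sample, each rejection is a type I error, and the rejection rate should land near \(\alpha\).

```python
import random
from math import sqrt
from statistics import NormalDist, fmean


def type_i_error_rate(alpha: float = 0.05, n: int = 30,
                      trials: int = 10_000, seed: int = 0) -> float:
    """Estimate how often a true null hypothesis (mu = 0) is rejected."""
    rng = random.Random(seed)       # fixed seed so the run is repeatable
    std_normal = NormalDist()
    rejections = 0
    for _ in range(trials):
        # Draw a sample for which H_0 is true: mean 0, standard deviation 1.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) * sqrt(n)  # (sample mean - 0) / (1 / sqrt(n))
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        if p_value <= alpha:         # reject when p <= alpha
            rejections += 1
    return rejections / trials


print(type_i_error_rate())
```

Lowering `alpha` in this sketch lowers the simulated false-positive rate, at the cost of making real effects harder to detect.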

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

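| Research question | General null hypothesis (\(H_{0}\)) | Test-specific null hypothesis (\(H_{0}\)) |
|---|---|---|
| Does tooth flossing affect the number of cavities? | Tooth flossing has no effect on the number of cavities. | Two-sample t test: The mean number of cavities per person does not differ between the flossing group (\(\mu_{1}\)) and the non-flossing group (\(\mu_{2}\)) in the population; \(\mu_{1} = \mu_{2}\). |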