Center for Hearing Loss Help

Help for your hearing loss, tinnitus and other ear conditions

FREE Subscription to: Hearing Loss Help

Your email address will never be rented, traded or sold!

Help, I’ve Memorized the Word List!—Understanding Hearing Loss Speech Testing

Question : Whenever I have my hearing tested, it seems the audiologist uses the same list of words each time. I can understand this for people who have never been tested before, but when I get my hearing tested regularly, isn’t this sort of ridiculous? I mean, I’ve half-memorized the word list so I’m not getting fair test results, am I?—D. P.

Answer : I understand your concerns, but your questions reflect a fundamental misunderstanding of the whole audiometric testing process. When you go to your audiologist for a complete audiological evaluation, your audiologist performs a whole battery of tests. The speech audiometry portion of your audiological evaluation consists of not just one, but two “word” tests—the Speech Reception Threshold (SRT) test and the Speech Discrimination (SD) or Word Recognition (WR) test. The SRT and the SD/WR tests are entirely different tests—each with totally different objectives. Unfortunately, many people somehow “smoosh” these two tests together in their minds, hence the confusion and concern expressed in your questions. Let me explain.

Speech Reception Threshold (SRT) Testing

The purpose of the Speech Reception Threshold (SRT) test, sometimes called the speech-recognition threshold test, is to determine the softest level at which you just begin to recognize speech. That’s it. It has nothing to do with speech discrimination.

Audiologists determine your Speech Reception Threshold by asking you to repeat a list of easy-to-distinguish, familiar spondee words. (Spondee words are two-syllable words that have equal stress on each syllable. You’ll notice that when you repeat a spondee, you speak each syllable at the same volume and take the same length of time saying each syllable.) Here are examples of some of the spondee words used in SRT testing: baseball, cowboy, railroad, hotdog, ice cream, airplane, outside and cupcake.

When you take the SRT test, your audiologist will tell you, “Say the word ‘baseball.’ Say the word ‘cowboy.’ Say the word ‘hotdog.'” etc. As she does this, she varies the volume to find the softest sound level in decibels at which you can just hear and correctly repeat 50% of these words. This level is your SRT score expressed in decibels (dB). You will have a separate SRT test for each ear. 1

If your SRT is 5 dB (normal), you can understand speech perfectly at 21 feet and still catch some words at over 100 feet. If you have a mild hearing loss—or example a SRT of 30 dB—you could only hear perfectly at 1 foot but could still hear some words at 18 feet. If your SRT is 60 dB (a moderately severe hearing loss), you would need the speaker to be only 1 inch from your ear in order to hear perfectly and within 1 foot to still hear some of the words correctly. 2

Now, to address your concerns about memorizing the words in the word lists. For the record, you should be familiar with all the spondee words in the list before testing commences because this familiarization results in an SRT that is 4 to 5 dB better than that obtained if you didn’t know them. 3

Bet you didn’t know this, but there are actually two standardized spondee word lists—not just one. Each list consists of 18 words. These words are “phonetically dissimilar and homogeneous in terms of intelligibility.” 3 That is just a fancy-pants way of saying that all these words sound different, yet are equally easy to understand. Spondees are an excellent choice for determining your Speech Reception Threshold because they are so easy to understand at faint hearing levels. 3

Let me emphasize again, you are supposed to know these words. They are not supposed to be a secret. However, this doesn’t mean that because you know a number of the words on this list, that you have memorized them. In fact, it would be difficult since there are two different SRT word lists, and your audiologist may go from top to bottom, or bottom to top, or in random order. Thus, although you are generally familiar with these words, and can even recite a number of them from memory, you still don’t know the exact order you’ll be hearing them so you cannot cheat. Thus, you can set your mind at rest. Your SRT scores are still perfectly valid even though the words “railroad,” “cowboy” and “hotdog” are almost certainly going to be in one of those lists.

Why is knowing your Speech Reception Threshold important? In addition to determining the softest level at which you can hear and repeat words, your SRT is also used to confirm the results of your pure-tone threshold test. For example, there is a high correlation between your SRT results and the average of your pure-tone thresholds at 500, 1000, and 2000 Hz. In fact, your SRT and 3-frequency average should be within 6 dB of each other. 4 Furthermore, your Speech Reception Threshold determines the appropriate gain needed when selecting the right hearing aid for your hearing loss. 4

Discrimination Testing

The purpose of Speech Discrimination (SD) testing (also called Word Recognition (WR) testing) is to determine how well you hear and understand speech when the volume is set at your Most Comfortable Level (MCL).

To do this, your audiologist says a series of 50 single-syllable phonetically-balanced (PB) words. (Phonetically-balanced just means that the percent of time any given sound appears on the list is equal to its occurrence in the English language. 5 For this test, your audiologist will say, “Say the word ‘come.’ Say the word ‘high.’ Say the word ‘chew.’ Say the word ‘knees,'” and so on. You repeat back what you think you hear.

During this test, your audiologist keeps her voice (or a recording on tape or CD) at the same loudness throughout. Each correct response is worth 2%. She records the percentage of the words you correctly repeat for each ear.

For the best results, the Speech Discrimination word list is typically read at 40 decibels above your SRT level (although it may range from 25-50 dB above your SRT level, depending on how you perceive sound). 4

Your Speech Discrimination score is an important indicator of how much difficulty you will have communicating and how well you may do if you wear a hearing aid. If your speech discrimination is poor, speech will sound garbled to you.

For example, a Speech Discrimination score of 100% indicates that you heard and repeated every word correctly. If your score was 0%, it means that you cannot understand speech no matter how loud it is 1 —speech will be just so much gibberish to you. Scores over 90% are excellent and are considered to be normal. Scores below 90% indicate a problem with word recognition. 3 If your score is under 50%, your word discrimination is poor. This indicates that you will have significant trouble following a conversation, even when it is loud enough for you to hear. 2 Thus, hearing aids will only be of very limited benefit in helping you understand speech. If your speech discrimination falls below 40%, you may be eligible for a cochlear implant.

Incidentally, people with conductive hearing losses frequently show excellent speech discrimination scores when the volume is set at their most comfortable level. On the other hand, people with sensorineural hearing losses typically have poorer discrimination scores. People with problems in the auditory parts of their brains tend to have even poorer speech discrimination scores, even though they may have normal auditory pure-tone thresholds. 3

Unlike the SRT word lists (railroad, cowboy, hotdog, etc.), where everyone seems to remember some of the words, I have never come across a person who has memorized any of the words in the speech discrimination lists. In fact, as the initial questions indicated, people mistakenly think the SRT words are used for speech discrimination. This is just not true. In truth, the SD words are so unprepossessing and “plain Jane” that few can even remember one of them! They just don’t stick in your mind!

For example, one Speech Discrimination list includes words such as: “are,” “bar,” “clove,” “dike,” “fraud,” “hive,” “nook,” “pants,” “rat,” “slip,” “there,” “use,” “wheat” and 37 others. How many of you remember any of these words?

One person asked, “Why aren’t there several different word lists to use?” Then our audiologists would find out if we really heard them.”

Surprise! This is what they already do. There are not just one, but many phonetically-balanced word lists already in existence. In case you are interested, the Harvard Psycho-Acoustic Laboratory developed the original PB word lists. There are 20 of these lists, collectively referred to as the PB-50 lists. These 20 PB-50 lists each contain 50 single-syllable words comprising a total of 1,000 different monosyllabic words . Several years later, the Central Institute for the Deaf (CID) developed four 50-word W-22 word lists, using 120 of the same words used in the PB-50 lists and adding 80 other common words. Furthermore, Northwestern University developed yet four more word lists 6 (devised from a grouping of 200 consonant-nucleus-consonant (CNC] words). These lists are called the Northwestern University Test No. 6 (NU-6). 4

As you now understand, your audiologist has a pool of over 1,000 words in 28 different lists from which to choose, thus making memorizing them most difficult. (In actual practice, audiologists use the W-22 and NU-6 lists much more commonly than the original PB-50 lists.) In addition to choosing any one of the many speech discrimination word lists available to her, your audiologist may go from top to bottom, or bottom to top so you still don’t know what word is coming next. Thus you can rest assured that your word discrimination test is valid. Your memory has not influenced any of the results.

In addition to determining how well you recognize speech, Speech Discrimination testing has another use. Your audiologist uses it to verify that your hearing aids are really helping you. She does this by testing you first without your hearing aids on to set the baseline Speech Discrimination score. Then she tests you with your hearing aids on. If your hearing aids are really helping you understand speech better, your Speech Discrimination scores should be significantly higher than without them on. If the scores are lower, your audiologist needs to “tweak” them or try different hearing aids.

To Guess or Not To Guess, That is the Question

One person asked, “Do audiologists want us to guess at the word if we are not sure what the word is? Wouldn’t it be better to say what we really hear instead of trying to guess what the word is?”

Good question. Your audiologist wants you to just repeat what you hear. If you hear what sounds like a proper word, say it. If it is gibberish and not any word you know, either repeat the gibberish sounds you heard, or just say that you don’t have a clue what the word is supposed to be. If you don’t say anything when you are not completely certain of the word, you are skewing the results because sometimes you will hear the word correctly, but by not repeating what you thought you heard, you are deliberately lowering your discrimination score.

Another person remarked, “I remember a while back; when I was taking the word test, there was a word that sounded like the 4 letter word that begins with an ‘F’. I knew it wasn’t that word, and I would not say ‘F***.'” In that case, if you are not comfortable repeating what you “heard,” just say it sounded like a “dirty” word and you don’t want to repeat it. You audiologist will know you got it wrong as there are no “dirty” words on the PB lists.

____________________

1 ASHA. 2005. http://www.asha.org/public/hearing/testing/assess.htm .

2 Olsson, Robert. 1996. Do You Have a Hearing Loss? In: How-To Guide For Families Coping With Hearing Loss. http://www.earinfo.com . p. 3.

3 Pachigolla, Ravi, MD & Jeffery T. Vrabec, MD. 2000. Assessment of Peripheral and Central Auditory Function. Dept. of Otolaryngology. UTMB. http://www.utmb.edu/otoref/Grnds/Auditory-assessment/200003/Auditory_Assess_200003.htm .

4 Smoski, Walter J. Ph.D. Associate Professor, Department of Speech Pathology and Audiology, Illinois State University. http://www.emedicine.com/ent/topic371.htm .

5 Audiologic Consultants. 2005. http://www.audiologicconsultants.com/hearing_evaluations.htm

6 Thompson, Sadi. 2002. Comparison of Word Familiarity: Conversational Words v. NU-6 list Words. Dept. Sp. Ed. & Comm. Dis. University of Nebraska-Lincoln. Lincoln, NE. http://www.audiologyonline.com/articles/arc_disp.asp?id=350 .

February 24, 2016 at 10:54 PM

Thanks for the explanation. It is really very helpful.

September 2, 2023 at 8:01 AM

Great article and very well written.

My question concerns the doubling of the SRT decibel. In my case, that meant the discrimination testing was at 80 decibels. I scored 80R/70L. But I was wondering how accurate this is, as I can’t think of anyone who actually speaks routinely at that volume!

September 2, 2023 at 8:52 AM

Discrimination (word recognition) testing is always done at your most comfortable listening level. If it were done at the level people speak, you wouldn’t hear much of anything. The idea is to see how well you can understand speech WHEN YOU CAN COMFORTABLY HEAR IT.

This tells the audiologist whether hearing aids will help you–when the sounds are amplified to your most comfortable level. In your case (and mine too) hearing aids will help you a lot (at least in quiet conditions). But, if you’re like me, you’ll still want to speechread to fill in words that you miss.

Leave a Reply Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

Ototoxic Drugs Exposed

Learn More | Add to Cart—Printed | Add to Cart—eBook

Contacta HLD3 Hearing Loop System

Learn More | Add to Cart

Say Good Bye to Meniere’s Disease

Severe Meniere’s disease is not something you ever want to experience. In this book, not only will you learn what it’s like to have Meniere’s disease, but you will learn about the latest discovery of the underlying cause of Meniere’s disease and the simple treatment that let’s you wave good bye to your Meniere’s disease.

© Copyright Center for Hearing Loss Help 2024 · Help for your hearing loss, tinnitus and other ear conditions ™

Center for Hearing Loss Help, Neil G. Bauman, Ph.D. 1013 Ridgeway Drive, Lynden, WA 98264-1057 USA Email: neil@hearinglosshelp.com · Phone: 360-778-1266 (M-F 9:00 AM-5:00 PM PST) · FAX: 360-389-5226

"The wages of sin is death, but the gift of God is eternal life [which also includes perfect hearing] through Jesus Christ our Lord." [Romans 6:23]

"But know this, in the last days perilous times will come" [2 Timothy 3:1]. "For there will be famines, pestilences, and [severe] earthquakes in various places" [Matthew 24:7], "distress of nations, the sea and the waves roaring"—tsunamis, hurricanes—Luke 21:25, but this is good news if you have put your trust in the Lord Jesus Christ, for "when these things begin to happen, lift up your heads [and rejoice] because your redemption draws near" [Luke 21:28].

- Audiometers

- Hearing Aid Test Systems

- Newborn Hearing Screening

- TeleAudiology

- Occupational Health - Industrial

- Otoacoustic Emissions - OAE

- Sound Rooms - Suites

- System Software

- Tympanometry - Middle Ear Analyzers

- Vestibular - Balance

- Vision Screeners

- VRA - COR Systems

- Calibration Services

- Repair Services

- Preventative Maintenance Services

- Download Center

- National Conferences

- Pre-recorded Webinars

Am I Doing it Right? Word Recognition Test Edition

Word recognition testing evaluates a patient's ability to identify one-syllable words presented above their hearing threshold, providing a Word Recognition Score (WRS). Word recognition testing is a routine part of a comprehensive hearing assessment and in an ENT practice, the WRS can be an indication of a more serious problem that needs further testing. Unfortunately, the common way WRS is measured may give inaccurate results. Let's talk about why that is.

The Problems with Word Recognition Testing

One of the key variables to consider when performing word recognition testing is at what level to present the words. A common practice is setting the loudness of the words at 30 to 40 dB above the patient's Speech Reception Threshold (SRT), known as "SRT + 30 or +40 dB." Some also set the level to the patient's subjective "Most Comfortable Level" (MCL). Clinicians often use one of these methods, assuming it ensures optimal performance. However, some research suggests that utilizing this method may not capture the maximum performance (PBmax) for those with hearing loss. Consequently, this approach does not necessarily guarantee the most accurate WRS measurement. This fact prompts the question: Does this traditional method align with best practices and evidence-based approaches? Let's explore this further.

Audibility and Speech Audiometry

Audibility significantly impacts word recognition scores. Using only the SRT +30 dB or +40 dB method or MCL may not be the best strategy, as the audiogram configuration can affect whether words are presented at an effective level. Studies suggest that raising the presentation level could enhance WRS in some instances. Since the SRT +30 dB or 40 dB method or MCL does not offer the best results, let's explore two methods supported by current research.

Alternative Word Recognition Methods Explained

In a 2009 study, Guthrie and Mackersie compared various presentation levels to find the one that maximized word recognition in people with different hearing loss patterns. The Uncomfortable Loudness Level – 5 dB (UCL-5dB) method and the 2KHz Sensation Level method were found to have the highest average scores. Let's look at each of these methods separately.

Uncomfortable Loudness Level – 5dB (UCL-5dB)

The Uncomfortable Loudness Level (UCL) refers to the intensity level at which sounds become uncomfortably loud for an individual. In the UCL-5 dB method, the presentation level for word recognition testing is set to 5 decibels below the measured UCL. This method aims to find the test subject's most comfortable and effective presentation level. While UCL-5 may be louder than the patient prefers, setting the presentation level slightly below the uncomfortable loudness threshold ensures that the speech stimuli are audible without causing discomfort. Patients with mild to moderate and moderately severe hearing loss obtained their best WRS at this level.

2KHz Sensation Level (SL)

The 2KHz Sensation Level method for WRS testing involves determining the presentation level for speech stimuli based on the individual's hearing threshold at 2,000 Hertz (2KHz). Once determined, the words are presented at a variable Sensation Level (SL) relative to the patient's hearing threshold at 2KHz. In this case, SL is the difference between the hearing threshold at 2KHz and the loudness level of the words for the word recognition test. The 2KHz Sensation Level (SL) method is convenient for busy clinics as it avoids the need to measure the UCL. This method involves using variable SL values determined in the following way:

- 2KHz threshold <50dB HL: 25dB SL

- 2KHz threshold 50-55dB HL: 20dB SL

- 2KHz threshold 60-65dB HL: 15dB SL

- 2KHz threshold 70-75dB HL: 10dB SL

This method tailors the presentation level to the individual's specific frequency sensitivity. It allows for a more personalized and precise word recognition testing approach, considering the individual's hearing characteristics at a specific frequency of 2 kHz. Ensuring the accuracy of WRS test results is crucial by employing research-backed methods. Another important aspect of best practice is carefully selecting the word list and ensuring the appropriate number of words is used during the test. Let's delve deeper into exploring WRS test materials.

WRS Test Materials

Nu-6 and cid w-22.

These are two standardized word lists and the most used lists when performing the WR test. Each is comprised of 50 words; however, many hearing care professionals present only half of the list to save valuable clinical time. This approach may be invalid and unreliable. Why?

Research has shown that the words comprising the first half of these standardized lists are more difficult to understand than the second. Rintleman showed that the average difference between ears when presenting the first half to one ear and the second half to another was 16%. A solution lies in using re-ordered lists by difficulty, offering a screening approach. Hurley and Sells' 2003 study provides a valid alternative, potentially saving substantial testing time.

Auditec NU-6 Ordered by Difficulty

Hornsby and Mueller (2013) propose using the Hurley and Sells Auditec NU-6 Ordered by Difficulty list (Version II) via recorded word lists. The list comprises four sets of 50 words each. The examiner can conclude the test after the initial 10 or 25 words or complete all 50 words. Studies have shown that approximately 25% of patients require only a 10-word list. Instructions accompany the list.

Interpreting Word Recognition Test Results

Otolaryngologists often compare the WRS between ears to determine if additional testing is needed to rule out certain medical conditions. The American Academy of Otolaryngology recommends referring patients for further testing when the WRS between ears shows an asymmetry of 15% or greater. This asymmetry is not significant enough to warrant a referral for additional testing. What constitutes a significant difference? Thornton and Raffin (1978) developed a statistical model against which the WRS can be plotted to determine what is statistically significant based on "critical difference tables." Linda Thibodeau developed the SPRINT chart to aid in interpreting WR tests. It utilizes data from Thornton and Raffin and that obtained by Dubno et al. This chart helps evaluate the asymmetry and determine if a WRS is close to PBMax.

Word Recognition Testing Best Practice

Here are some dos and don'ts from an Audiology Online presentation by Muellar and Hornsby.

- Always use recorded materials, such as the shortened-interval Auditec recording of the NU-6.

- Choose a presentation level to maximize audibility without causing loudness discomfort, utilizing either UCL-5 or the 2KHz –SL method.

- Use the Thornton and Raffin data, incorporated into Thibodeau's SPRINT chart, to determine when a difference is significant.

- Use the Judy Dubno data in Thibodeau's SPRINT chart to determine when findings are "normal."

- Utilize the Ordered-by-Difficulty version of the Auditec NU-6 and employ the 10-word and 25-word screenings.

Word Recognition Testing Don'ts

- Avoid live-voice testing.

- Steer clear of using a presentation of SRT+30 or SRT+40.

- Avoid making random guesses regarding when two scores are different.

- Refrain from conducting one-half list per ear testing unless using the Ordered-by-Difficulty screening.

In conclusion, adopting modern methods and evidence-based practices can improve the accuracy, efficiency, and validity of word recognition testing. Staying informed and using best practices is crucial for mastering this critical aspect of hearing assessments and giving your patients the best possible care.

Other Good Reads: A Quick Guide to Speech Audiometry Using the GSI Pello Audiometer

Follow us on Social!

If you haven't already, make sure to subscribe to our newsletter to keep up-to-date with our latest resources and product information.

- Word Recognition Test Edition >

e3 Diagnostics represents

- Expertise: Personalized consultation

- Excellence: World-leading technology and service

- Everywhere: Relationships that go beyond a sale

Corporate Office

(800) 323-4371

info @e3diagnostics.com

MON - FRI: 8am-5pm CST

Find Local Office

Shop Online

Browse our audiology supplies web shop today and stock up on products from the industry’s best brands!

© 2013-2024 e3 Diagnostics. All Rights Reserved. [email protected] | Privacy Policy | Terms of Use | 3333 N Kennicott Ave., Arlington Heights, IL. 60004

- Audiometers

- Tympanometers

- Hearing Aid Fitting

- Research Systems

- Research Unit

- ACT Research

- Our History

- Distributors

- Sustainability

- Environmental Sustainability

Training in Speech Audiometry

- Why Perform Functional Hearing Tests?

- Performing aided speech testing to validate pediatric hearing devices

Speech Audiometry: An Introduction

Description, table of contents, what is speech audiometry, why perform speech audiometry.

- Contraindications and considerations

Audiometers that can perform speech audiometry

How to perform speech audiometry, results interpretation, calibration for speech audiometry.

Speech audiometry is an umbrella term used to describe a collection of audiometric tests using speech as the stimulus. You can perform speech audiometry by presenting speech to the subject in both quiet and in the presence of noise (e.g. speech babble or speech noise). The latter is speech-in-noise testing and is beyond the scope of this article.

Speech audiometry is a core test in the audiologist’s test battery because pure tone audiometry (the primary test of hearing sensitivity) is a limited predictor of a person’s ability to recognize speech. Improving an individual’s access to speech sounds is often the main motivation for fitting them with a hearing aid. Therefore, it is important to understand how a person with hearing loss recognizes or discriminates speech before fitting them with amplification, and speech audiometry provides a method of doing this.

A decrease in hearing sensitivity, as measured by pure tone audiometry, results in greater difficulty understanding speech. However, the literature also shows that two individuals of the same age with similar audiograms can have quite different speech recognition scores. Therefore, by performing speech audiometry, an audiologist can determine how well a person can access speech information.

Acquiring this information is key in the diagnostic process. For instance, it can assist in differentiating between different types of hearing loss. You can also use information from speech audiometry in the (re)habilitation process. For example, the results can guide you toward the appropriate amplification technology, such as directional microphones or remote microphone devices. Speech audiometry can also provide the audiologist with a prediction of how well a subject will hear with their new hearing aids. You can use this information to set realistic expectations and help with other aspects of the counseling process.

Below are some more examples of how you can use the results obtained from speech testing.

Identify need for further testing

Based on the results from speech recognition testing, it may be appropriate to perform further testing to get more information on the nature of the hearing loss. An example could be to perform a TEN test to detect a dead region or to perform the Audible Contrast Threshold (ACT™) test .

Inform amplification decisions

You can use the results from speech audiometry to determine whether binaural amplification is the most appropriate fitting approach or if you should consider alternatives such as CROS aids.

You can use the results obtained through speech audiometry to discuss and manage the amplification expectations of patients and their communication partners.

Unexpected asymmetric speech discrimination, significant roll-over , or particularly poor speech discrimination may warrant further investigation by a medical professional.

Non-organic hearing loss

You can use speech testing to cross-check the results from pure tone audiometry for suspected non‑organic hearing loss.

Contraindications and considerations when performing speech audiometry

Before speech audiometry, it is important that you perform pure tone audiometry and otoscopy. Results from these procedures can reveal contraindications to performing speech audiometry.

Otoscopic findings

Speech testing using headphones or inserts is generally contraindicated when the ear canal is occluded with:

- Foreign body

- Or infective otitis externa

In these situations, you can perform bone conduction speech testing or sound field testing.

Audiometric findings

Speech audiometry can be challenging to perform in subjects with severe-to-profound hearing losses as well as asymmetrical hearing losses where the level of stimulation and/or masking noise required is beyond the limits of the audiometer or the patient's uncomfortable loudness levels (ULLs).

Subject variables

Depending on the age or language ability of the subject, complex words may not be suitable. This is particularly true for young children and adults with learning disabilities or other complex presentations such as dementia and reduced cognitive function.

You should also perform speech audiometry in a language which is native to your patient. Speech recognition testing may not be suitable for patients with expressive speech difficulties. However, in these situations, speech detection testing should be possible.

Before we discuss speech audiometry in more detail, let’s briefly consider the instrumentation to deliver the speech stimuli. As speech audiometry plays a significant role in diagnostic audiometry, many audiometers include – or have the option to include – speech testing capabilities.

Table 1 outlines which audiometers from Interacoustics can perform speech audiometry.

| Clinical audiometer | |

| Diagnostic audiometer | |

| Diagnostic audiometer | |

| Equinox 2.0 | PC-based audiometer |

| Portable audiometer | |

| Hearing aid fitting system | |

| Hearing aid fitting system |

Table 1: Audiometers from Interacoustics that can perform speech audiometry.

Because speech audiometry uses speech as the stimulus and languages are different across the globe, the way in which speech audiometry is implemented varies depending on the country where the test is being performed. For the purposes of this article, we will start with addressing how to measure speech in quiet using the international organization of standards ISO 8252-3:2022 as the reference to describe the terminology and processes encompassing speech audiometry. We will describe two tests: speech detection testing and speech recognition testing.

Speech detection testing

In speech detection testing, you ask the subject to identify when they hear speech (not necessarily understand). It is the most basic form of speech testing because understanding is not required. However, it is not commonly performed. In this test, words are normally presented to the ear(s) through headphones (monaural or binaural testing) or through a loudspeaker (binaural testing).

Speech detection threshold (SDT)

Here, the tester will present speech at varying intensity levels and the patient identifies when they can detect speech. The goal is to identify the level at which the patient detects speech in 50% of the trials. This is the speech detection threshold. It is important not to confuse this with the speech discrimination threshold. The speech discrimination threshold looks at a person’s ability to recognize speech and we will explain it later in this article.

The speech detection threshold has been found to correlate well with the pure tone average, which is calculated from pure tone audiometry. Because of this, the main application of speech detection testing in the clinical setting is confirmation of the audiogram.

Speech recognition testing

In speech recognition testing, also known as speech discrimination testing, the subject must not only detect the speech, but also correctly recognize the word or words presented. This is the most popular form of speech testing and provides insights into how a person with hearing loss can discriminate speech in ideal conditions.

Across the globe, the methods of obtaining this information are different and this often leads to confusion about speech recognition testing. Despite there being differences in the way speech recognition testing is performed, there are some core calculations and test parameters which are used globally.

Speech recognition testing: Calculations

There are two main calculations in speech recognition testing.

1. Speech recognition threshold (SRT)

This is the level in dB HL at which the patient recognizes 50% of the test material correctly. This level will differ depending on the test material used. Some references describe the SRT as the speech discrimination threshold or SDT. This can be confusing because the acronym SDT belongs to the speech detection threshold. For this reason, we will not use the term discrimination but instead continue with the term speech recognition threshold.

2. Word recognition score (WRS)

In word recognition testing, you present a list of phonetically balanced words to the subject at a single intensity and ask them to repeat the words they hear. You score if the patient repeats these words correctly or incorrectly. This score, expressed as a percentage of correct words, is calculated by dividing the number of words correctly identified by the total number of words presented.

In some countries, multiple word recognition scores are recorded at various intensities and plotted on a graph. In other countries, a single word recognition score is performed using a level based on the SRT (usually presented 20 to 40 dB louder than the SRT).

Speech recognition testing: Parameters

Before completing a speech recognition test, there are several parameters to consider.

1. Test transducer

You can perform speech recognition testing using air conduction, bone conduction, and speakers in a sound-field setup.

2. Types of words

Speech recognition testing can be performed using a variety of different words or sentences. Some countries use monosyllabic words such as ‘boat’ or ‘cat’ whereas other countries prefer to use spondee words such as ‘baseball’ or ‘cowboy’. These words are then combined with other words to create a phonetically balanced list of words called a word list.

3. Number of words

The number of words in a word list can impact the score. If there are too few words in the list, then there is a risk that not enough data points are acquired to accurately calculate the word recognition score. However, too many words may lead to increased test times and patient fatigue. Word lists often consist of 10 to 25 words.

You can either score words as whole words or by the number of phonemes they contain.

An example of scoring can be illustrated by the word ‘boat’. When scoring using whole words, anything other than the word ‘boat’ would result in an incorrect score.

However, in phoneme scoring, the word ‘boat’ is broken down into its individual phonemes: /b/, /oa/, and /t/. Each phoneme is then scored as a point, meaning that the word boat has a maximum score of 3. An example could be that a patient mishears the word ‘boat’ and reports the word to be ‘float’. With phoneme scoring, 2 points would be awarded for this answer whereas in word scoring, the word float would be marked as incorrect.

5. Delivery of material

Modern audiometers have the functionality of storing word lists digitally onto the hardware of the device so that you can deliver a calibrated speech signal the same way each time you test a patient. This is different from the older methods of testing using live voice or a CD recording of the speech material. Using digitally stored and calibrated speech material in .wav files provides the most reliable and repeatable results as the delivery of the speech is not influenced by the tester.

6. Aided or unaided

You can perform speech recognition testing either aided or unaided. When performing aided measurements, the stimulus is usually played through a loudspeaker and the test is recorded binaurally.

Global examples of how speech recognition testing is performed and reported

Below are examples of how speech recognition testing is performed in the US and the UK. This will show how speech testing varies across the globe.

Speech recognition testing in the US: Speech tables

In the US, the SRT and WRS are usually performed as two separate tests using different word lists for each test. The results are displayed in tables called speech tables.

The SRT is the first speech test which is performed and typically uses spondee words (a word with two equally stressed syllables, such as ‘hotdog’) as the stimulus. During this test, you present spondee words to the patient at different intensities and a bracketing technique establishes the threshold at where the patient correctly identifies 50% of the words.

In the below video, we can see how an SRT is performed using spondee words.

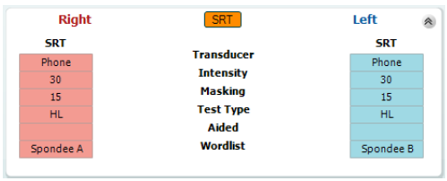

Below, you can see a table showing the results from an SRT test (Figure 1). Here, we can see that the SRT has been measured in each ear. The table shows the intensity at which the SRT was found as well as the transducer, word list, and the level at which masking noise was presented (if applicable). Here we see an unaided SRT of 30 dB HL in both the left and right ears.

Once you have established the intensity of the SRT in dB HL, you can use it to calculate the intensity to present the next list of words to measure the WRS. In WRS testing, it is common to start at an intensity of between 20 dB and 40 dB louder than the speech recognition threshold and to use a different word list from the SRT. The word lists most commonly used in the US for WRS are the NU-6 and CID-W22 word lists.

In word recognition score testing, you present an entire word list to the test subject at a single intensity and score each word based on whether the subject can correctly repeat it or not. The results are reported as a percentage.

The video below demonstrates how to perform the word recognition score.

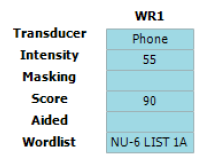

Below is an image of a speech table showing the word recognition score in the left ear using the NU‑6 word list at an intensity of 55 dB HL (Figure 2). Here we can see that the patient in this example scored 90%, indicating good speech recognition at moderate intensities.

Speech recognition testing in the UK: Speech audiogram

In the UK, speech recognition testing is performed with the goal of obtaining a speech audiogram. A speech audiogram is a graphical representation of how well an individual can discriminate speech across a variety of intensities (Figure 3).

In the UK, the most common method of recording a speech audiogram is to present several different word lists to the subject at varying intensities and calculate multiple word recognition scores. The AB (Arthur Boothroyd) word lists are the most used lists. The initial list is presented around 20 to 30 dB sensation level with subsequent lists performed at quieter intensities before finally increasing the sensation level to determine how well the patient can recognize words at louder intensities.

The speech audiogram is made up of plotting the WRS at each intensity on a graph displaying word recognition score in % as a function of intensity in dB HL. The following video explains how it is performed.

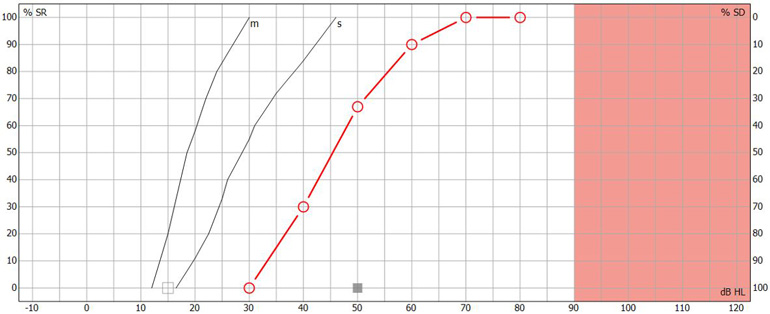

Below is an image of a completed speech audiogram (Figure 4). There are several components.

Point A on the graph shows the intensity in dB HL where the person identified 50% of the speech material correctly. This is the speech recognition threshold or SRT.

Point B on the graph shows the maximum speech recognition score which informs the clinician of the maximum score the subject obtained.

Point C on the graph shows the reference speech recognition curve; this is specific to the test material used (e.g., AB words) and method of presentation (e.g., headphones), and shows a curve which describes the median speech recognition scores at multiple intensities for a group of normal hearing individuals.

Having this displayed on a single graph can provide a quick and easy way to determine and analyze the ability of the person to hear speech and compare their results to a normative group. Lastly, you can use the speech audiogram to identify roll-over. Roll-over occurs when the speech recognition deteriorates at loud intensities and can be a sign of retro-cochlear hearing loss. We will discuss this further in the interpretation section.

Masking in speech recognition testing

Just like in audiometry, cross hearing can also occur in speech audiometry. Therefore, it is important to mask the non-test ear when testing monaurally. Masking is important because word recognition testing is usually performed at supra-threshold levels. Speech encompasses a wide spectrum of frequencies, so the use of narrowband noise as a masking stimulus is not appropriate, and you need to modify the masking noise for speech audiometry. In speech audiometry, speech noise is typically used to mask the non-test ear.

There are several approaches to calculating required masking noise level. An equation by Coles and Priede (1975) suggests one approach which applies to all types of hearing loss (sensorineural, conductive, and mixed):

- Masking level = D S plus max ABG NT minus 40 plus E M

It considers the following factors.

1. Dial setting

D S is the level of dial setting in dB HL for presentation of speech to the test ear.

2. Air-bone gap

Max ABG NT is the maximum air-bone gap between 250 to 4000 Hz in the non‑test ear.

3. Interaural attenuation

Interaural attenuation: The value of 40 comes from the minimum interaural attenuation for masking in audiometry using headphones (for insert earphones, this would be 55 dB).

4. Effective masking

E M is effective masking. Modern audiometers are calibrated in E M , so you don’t need to include this in the calculation. However, if you are using an old audiometer calibrated to an older calibration standard, then you should calculate the E M .

You can calculate it by measuring the difference in the speech dial setting presented to normal listeners at a level that yields a score of 95% in quiet and the noise dial setting presented to the same ear that yields a score less than 10%.

You can use the results from speech audiometry for many purposes. The below section describes these applications.

1. Cross-check against pure tone audiometry results

The cross-check principle in audiology states that no auditory test result should be accepted and used in the diagnosis of hearing loss until you confirm or cross-check it by one or more independent measures (Hall J. W., 3rd, 2016). Speech-in-quiet testing serves this purpose for the pure tone audiogram.

The following scores and their descriptions identify how well the speech detection threshold and the pure tone average correlate (Table 2).

| 6 dB or less | Good |

| 7 to 12 dB | Adequate |

| 13 dB or more | Poor |

Table 2: Correlation between speech detection threshold and pure tone average.

If there is a poor correlation between the speech detection threshold and the pure tone average, it warrants further investigation to determine the underlying cause or to identify if there was a technical error in the recordings of one of the tests.

2. Detect asymmetries between ears

Another core use of speech audiometry in quiet is to determine the symmetry between the two ears and whether it is appropriate to fit binaural amplification. Significant differences between ears can occur when there are two different etiologies causing hearing loss.

An example of this could be a patient with sensorineural hearing loss who then also contracts unilateral Meniere’s disease . In this example, it would be important to understand if there are significant differences in the word recognition scores between the two ears. If there are significant differences, then it may not be appropriate for you to fit binaural amplification, where other forms of amplification such as contralateral routing of sound (CROS) devices may be more appropriate.

3. Identify if further testing is required

The results from speech audiometry in quiet can identify whether further testing is required. This could be highlighted in several ways.

One example could be a severe difference in the SRT and the pure tone average. Another example could be significant asymmetries between the two ears. Lastly, very poor speech recognition scores in quiet might also be a red flag for further testing.

In these examples, the clinician might decide to perform a test to detect the presence of cochlear dead regions such as the TEN test or an ACT test to get more information.

4. Detect retro-cochlear hearing loss

In subjects with retro-cochlear causes of hearing loss, speech recognition can begin to deteriorate as sounds are made louder. This is called ‘roll-over’ and is calculated by the following equation:

- Roll-over index = (maximum score minus minimum score) divided by maximum score

If roll-over is detected at a certain value (the value is dependent on the word list chosen for testing but is commonly larger than 0.4), then it is considered to be a sign of retro-cochlear pathology. This could then have an influence on the fitting strategy for patients exhibiting these results.

It is important to note however that as the cross-check principle states, you should interpret any roll-over with caution and you should perform additional tests such as acoustic reflexes , the reflex decay test, or auditory brainstem response measurements to confirm the presence of a retro-cochlear lesion.

5. Predict success with amplification

The maximum speech recognition score is a useful measure which you can use to predict whether a person will benefit from hearing aids. More recent, and advanced tests such as the ACT test combined with the Acceptable Noise Level (ANL) test offer good alternatives to predicting hearing success with amplification.

Just like in pure tone audiometry, the stimuli which are presented during speech audiometry require annual calibration by a specialized technician ster. Checking of the transducers of the audiometer to determine if the speech stimulus contains any distortions or level abnormalities should also be performed daily. This process replicates the daily checks a clinicians would do for pure tone audiometry. If speech is being presented using a sound field setup, then you can use a sound level meter to check if the material is being presented at the correct level.

The next level of calibration depends on how the speech material is delivered to the audiometer. Speech material can be presented in many ways including live voice, CD, or installed WAV files on the audiometer. Speech being presented as live voice cannot be calibrated but instead requires the clinician to use the VU meter on the audiometer (which indicates the level of the signal being presented) to determine if they are speaking at the correct intensity. Speech material on a CD requires daily checks and is also performed using the VU meter on the audiometer. Here, a speech calibration tone track on the CD is used, and the VU meter is adjusted accordingly to the desired level as determined by the manufacturer of the speech material.

The most reliable way to deliver a speech stimulus is through a WAV file. By presenting through a WAV file, you can skip the daily tone-based calibration as this method allows you to calibrate the speech material as part of the annual calibration process. This saves the clinician time and ensures the stimulus is calibrated to the same standard as the pure tones in their audiometer. To calibrate the WAV file stimulus, the speech material is calibrated against a speech calibration tone. This is stored on the audiometer. Typically, a 1000 Hz speech tone is used for the calibration and the calibration process is the same as for a 1000 Hz pure tone calibration.

Lastly, if the speech is being presented through the sound field, a calibration professional should perform an annual sound field speaker calibration using an external free field microphone aimed directly at the speaker from the position of the patient’s head.

Coles, R. R., & Priede, V. M. (1975). Masking of the non-test ear in speech audiometry . The Journal of laryngology and otology , 89 (3), 217–226.

Graham, J. Baguley, D. (2009). Ballantyne's Deafness, 7th Edition. Whiley Blackwell.

Hall J. W., 3rd (2016). Crosscheck Principle in Pediatric Audiology Today: A 40-Year Perspective . Journal of audiology & otology , 20 (2), 59–67.

Katz, J. (2009). Handbook of Clinical Audiology. Wolters Kluwer.

Killion, M. C., Niquette, P. A., Gudmundsen, G. I., Revit, L. J., & Banerjee, S. (2004). Development of a quick speech-in-noise test for measuring signal-to-noise ratio loss in normal-hearing and hearing-impaired listeners . The Journal of the Acoustical Society of America , 116 (4), 2395–2405.

Stach, B.A (1998). Clinical Audiology: An Introduction, Cengage Learning.

Popular Academy Advancements

What is nhl-to-ehl correction, getting started: assr, what is the ce-chirp® family of stimuli, nhl-to-ehl correction for abr stimuli.

- Find a distributor

- Customer stories

- Made Magazine

- ABR equipment

- OAE devices

- Hearing aid fitting systems

- Balance testing equipment

Certificates

- Privacy policy

- Cookie Policy

0845 123 5342

Soundbyte Solutions

Automated Speech Test Systems

- Description

- Specification

- Customisation

- Customisation Samples

- Calibration

- Parrotplus RFQ

- Parrotplus Add Tests RFQ

- Spare Parts RFQ

- Accessories RFQ

- Equipment Repair Form

- Parrot Downloads

- ParrotPlus Downloads

- Phoenix Downloads

- Testimonials

- McCormick Toy Test

- English as an Additional Language Toy Test

- Manchester Picture Test

- Manchester Junior Word List

AB Short Word List

- BKB Sentence Test

Related Pages

The AB short word list test first devised by Arthur Boothroyd in 1968, is widely used in the UK as a speech recognition test and for rehabilitation. The Parrot AB test consists of 8 word lists, with each list containing 10 words. Each word has three phonemes constructed as consonant – vowel – consonant with 30 phonemes, 10 vowels and 20 consonants present in each list. The new Parrot AB combines the digital reliability of the Parrot with the benefits of the AB short word list. These include:

- The large number of words which diminishes the effects of learning factors

- 80 separate words have been recorded on the Parrot: these can be played at differing sound levels at the touch of a button

- There is a high inter list equivalence as the recognition score is based on the phonemes correct out of 30

We use a subset (8 lists) of the original 15 word lists. The lists that have been selected as being the most relevant (after consultation with a wide range of audiology professionals), are: 2,5,6,8,11,13,14,15.

| List 1 (2) | List 2 (5) | List 3 (6) | List 4 (8) | List 5 (11) | List 6 (13) | List 7 (14) | List 8 (15) |

|---|---|---|---|---|---|---|---|

| Fish | Fib | Fill | Bath | Man | Kiss | Wish | Hug |

| Duck | Thatch | Catch | Hum | Hip | Buzz | Dutch | Dish |

| Gap | Sum | Thumb | Dip | Thug | Hash | Jam | Ban |

| Cheese | Heel | Heap | Five | Ride | Thieve | Heath | Rage |

| Rail | Wide | Wise | Ways | Siege | Gate | Laze | Chief |

| Hive | Rake | Rave | Reach | Veil | Wife | Bike | Pies |

| Bone | Goes | Goat | Joke | Chose | Pole | Rove | Wet |

| Wedge | Shop | Shone | Noose | Shoot | Wretch | Pet | Cove |

| Moss | Vet | Bed | Got | Web | Dodge | Fog | Loose |

| Tooth | June | Juice | Shell | Cough | Moon | Soon | Moth |

The child should repeat the word exactly as they hear it. If they are not sure of the word they should guess it, and if only part of a word is heard, then they should say that part. Present all the words in the list at the same sound level. Make a note of the result of each presentation on the results sheet. The test can be conducted aided and/or unaided. Use a different word list for each test.

Three points are assigned to each word in the AB word lists, one for each phoneme. Only when a phoneme is entirely correct, does it obtain a score of 1. For example:

Test Word MAN Response PAN

YUU Score = 2

Test Word SHOOT Response SHOOTS

UUY Score = 2 as an addition counts as an error

For each word list a percentage score is obtained by calculating the points awarded out of 30. Therefore, if a child scores 20 in one list presentation, the percentage score is (20/30) x 100% = 66%.

Quick Links

- Accessories

The Discrimination Score is the percentage of words repeated correctly: Discrimination % at HL = 100 x Number of Correct Responses/Number of Trials.

WRS = Word Recognition Score, SRS = Speech Reception Score, Speech Discrimination Score. These terms are interchangeable and describe the patient’s capability to correctly repeat a list of phonetically balanced (PB) words at a comfortable level. The score is a percentage of correct responses and indicates the patient’s ability to understand speech.

Word Recognition Score (WRS)

WRS, or word recognition score, is a type of speech audiometry that is designed to measure speech understanding. Sometimes it is called word discrimination. The words used are common and phonetically balanced and typically presented at a level that is comfortable for the patient. The results of WRS can be used to help set realistic expectations and formulate a treatment plan.

Speech In Noise Test

Speech in noise testing is a critical component to a comprehensive hearing evaluation. When you test a patient's ability to understand speech in a "real world setting" like background noise, the results influence the diagnosis, the recommendations, and the patient's understanding of their own hearing loss.

Auditory Processing

Sometimes, a patient's brain has trouble making sense of auditory information. This is called an auditory processing disorder. It's not always clear that this lack of understanding is a hearing issue, so it requires a very specialized battery of speech tests to identify what kind of processing disorder exists and develop recommendations to improve the listening and understanding for the patient.

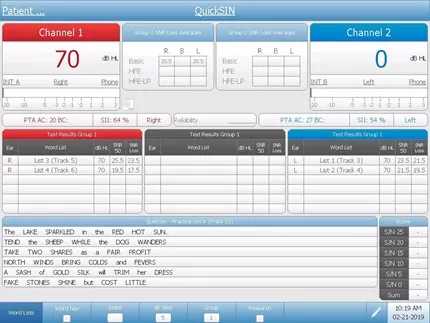

QuickSIN is a quick sentence in noise test that quantifies how a patient hears in noise. The patient repeats sentences that are embedded in different levels of restaurant noise and the result is an SNR loss - or Signal To Noise ratio loss. Taking a few additional minutes to measure the SNR loss of every patient seen in your clinic provides valuable insights on the overall status of the patient' s auditory system and allows you to counsel more effectively about communication in real-world situations. Using the Quick SIN to make important decisions about hearing loss treatment and rehabilitation is a key differentiator for clinicians who strive to provide patient-centered care.

BKB-SIN is a sentence in noise test that quantifies how patients hear in noise. The patient repeats sentences that are embedded in different levels of restaurant noise an the result is an SNR loss - or signal to noise ratio loss. This test is designed to evaluate patients of many ages and has normative corrections for children and adults. Taking a few additional minutes to measure the SNR loss of every patient seen in your clinic is a key differentiator for clinicians who strive to provide patient-centered care.

- Education >

- Testing Guides >

- Speech Audiometry >

GRASON-STADLER

- Our Approach

- Cookie Policy

- AMTAS Pro

Corporate Headquarters 10395 West 70th St. Eden Prairie, MN 55344

General Inquires +1 800-700-2282 +1 952-278-4402 [email protected] (US) [email protected] (International)

Technical Support Hardware: +1 877-722-4490 Software: +1 952-278-4456

DISTRIBUTOR LOGIN

- GSI Extranet

- Request an Account

- Forgot Password?

Get Connected

- Distributor Locator

© Copyright 2024

American Speech-Language-Hearing Association

- Certification

- Publications

- Continuing Education

- Practice Management

- Audiologists

- Speech-Language Pathologists

- Academic & Faculty

- Audiology & SLP Assistants

- Speech Testing

Types of Tests

- Auditory Brainstem Response (ABR)

- Otoacoustic Emissions (OAEs)

- Pure-Tone Testing

- Tests of the Middle Ear

About Speech Testing

An audiologist may do a number of tests to check your hearing. Speech testing will look at how well you listen to and repeat words. One test is the speech reception threshold, or SRT.

The SRT is for older children and adults who can talk. The results are compared to pure-tone test results to help identify hearing loss.

How Speech Testing Is Done

The audiologist will say words to you through headphones, and you will repeat the words. The audiologist will record the softest speech you can repeat. You may also need to repeat words that you hear at a louder level. This is done to test word recognition.

Speech testing may happen in a quiet or noisy place. People who have hearing loss often say that they have the most trouble hearing in noisy places. So, it is helpful to test how well you hear in noise.

Learn more about hearing testing .

To find an audiologist near you, visit ProFind .

In the Public Section

- Hearing & Balance

- Speech, Language & Swallowing

- About Health Insurance

- Adding Speech & Hearing Benefits

- Advocacy & Outreach

- Find a Professional

- Advertising Disclaimer

- Advertise with us

ASHA Corporate Partners

- Become A Corporate Partner

The American Speech-Language-Hearing Association (ASHA) is the national professional, scientific, and credentialing association for 234,000 members, certificate holders, and affiliates who are audiologists; speech-language pathologists; speech, language, and hearing scientists; audiology and speech-language pathology assistants; and students.

- All ASHA Websites

- Work at ASHA

- Marketing Solutions

Information For

Get involved.

- ASHA Community

- Become a Mentor

- Become a Volunteer

- Special Interest Groups (SIGs)

Connect With ASHA

American Speech-Language-Hearing Association 2200 Research Blvd., Rockville, MD 20850 Members: 800-498-2071 Non-Member: 800-638-8255

MORE WAYS TO CONNECT

Media Resources

- Press Queries

Site Help | A–Z Topic Index | Privacy Statement | Terms of Use © 1997- American Speech-Language-Hearing Association

Masks Strongly Recommended but Not Required in Maryland

Respiratory viruses continue to circulate in Maryland, so masking remains strongly recommended when you visit Johns Hopkins Medicine clinical locations in Maryland. To protect your loved one, please do not visit if you are sick or have a COVID-19 positive test result. Get more resources on masking and COVID-19 precautions .

- Vaccines

- Masking Guidelines

- Visitor Guidelines

Speech Audiometry

Speech audiometry involves two different tests:

One checks how loud speech needs to be for you to hear it.

The other checks how clearly you can understand and distinguish different words when you hear them spoken.

What Happens During the Test

The tests take 10-15 minutes. You are seated in a sound booth and wear headphones. You will hear a recording of a list of common words spoken at different volumes, and be asked to repeat those words.

Your audiologist will ask you to repeat a list of words to determine your speech reception threshold (SRT) or the lowest volume at which you can hear and recognize speech.

Then, the audiologist will measure speech discrimination — also called word recognition ability. He or she will either say words to you or you will listen to a recording, and then you will be asked to repeat the words. The audiologist will measure your ability to understand speech at a comfortable listening level.

Getting Speech Audiology Test Results

The audiologist will share your test results with you at the completion of testing. Speech discrimination ability is typically measured as a percentage score.

Find a Doctor

Specializing In:

- Sudden Hearing Loss

- Hearing Aids

- Hearing Disorders

- Hearing Loss

- Hearing Restoration

- Implantable Hearing Devices

- Cochlear Implantation

Find a Treatment Center

- Otolaryngology-Head and Neck Surgery

Find Additional Treatment Centers at:

- Howard County Medical Center

- Sibley Memorial Hospital

- Suburban Hospital

Request an Appointment

The Hidden Risks of Hearing Loss

Hearing Loss in Children

What Is an Audiologist

Related Topics

- Aging and Hearing

This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Improve recognition accuracy with phrase list

- 1 contributor

A phrase list is a list of words or phrases provided ahead of time to help improve their recognition. Adding a phrase to a phrase list increases its importance, thus making it more likely to be recognized.

Examples of phrases include:

- Geographical locations

- Words or acronyms unique to your industry or organization

Phrase lists are simple and lightweight:

- Just-in-time : A phrase list is provided just before starting the speech recognition, eliminating the need to train a custom model.

- Lightweight : You don't need a large data set. Provide a word or phrase to boost its recognition.

For supported phrase list locales, see Language and voice support for the Speech service .

You can use phrase lists with the Speech Studio , Speech SDK , or Speech Command Line Interface (CLI) . The Batch transcription API doesn't support phrase lists.

You can use phrase lists with both standard and custom speech . There are some situations where training a custom model that includes phrases is likely the best option to improve accuracy. For example, in the following cases you would use custom speech:

- If you need to use a large list of phrases. A phrase list shouldn't have more than 500 phrases.

- If you need a phrase list for languages that aren't currently supported.

Try it in Speech Studio

You can use Speech Studio to test how phrase list would help improve recognition for your audio. To implement a phrase list with your application in production, you use the Speech SDK or Speech CLI.

For example, let's say that you want the Speech service to recognize this sentence: "Hi Rehaan, I'm Jessie from Contoso bank."

You might find that a phrase is incorrectly recognized as: "Hi everyone , I'm Jesse from can't do so bank ."

In the previous scenario, you would want to add "Rehaan", "Jessie", and "Contoso" to your phrase list. Then the names should be recognized correctly.

Now try Speech Studio to see how phrase list can improve recognition accuracy.

You may be prompted to select your Azure subscription and Speech resource, and then acknowledge billing for your region.

- Go to Real-time Speech to text in Speech Studio .

- You test speech recognition by uploading an audio file or recording audio with a microphone. For example, select record audio with a microphone and then say "Hi Rehaan, I'm Jessie from Contoso bank. " Then select the red button to stop recording.

- You should see the transcription result in the Test results text box. If "Rehaan", "Jessie", or "Contoso" were recognized incorrectly, you can add the terms to a phrase list in the next step.

- Select Show advanced options and turn on Phrase list .

- Use the microphone to test recognition again. Otherwise you can select the retry arrow next to your audio file to re-run your audio. The terms "Rehaan", "Jessie", or "Contoso" should be recognized.

Implement phrase list

With the Speech SDK you can add phrases individually and then run speech recognition.

With the Speech CLI you can include a phrase list in-line or with a text file along with the recognize command.

Try recognition from a microphone or an audio file.

You can also add a phrase list using a text file that contains one phrase per line.

Allowed characters include locale-specific letters and digits, white space characters, and special characters such as +, -, $, :, (, ), {, }, _, ., ?, @, \, ’, &, #, %, ^, *, `, <, >, ;, /. Other special characters are removed internally from the phrase.

Check out more options to improve recognition accuracy.

Custom speech

Was this page helpful?

Additional resources

Toggle Menu

Tous droits réservés © NeurOreille (loi sur la propriété intellectuelle 85-660 du 3 juillet 1985). Ce produit ne peut être copié ou utilisé dans un but lucratif.

Journey into the world of hearing

Speech audiometry

Authors: Benjamin Chaix Rebecca Lewis Contributors: Diane Lazard Sam Irving

Facebook Twitter Google+

Speech audiometry is routinely carried out in the clinic. It is complementary to pure tone audiometry, which only gives an indication of absolute perceptual thresholds of tonal sounds (peripheral function), whereas speech audiometry determines speech intelligibility and discrimination (between phonemes). It is of major importance during hearing aid fitting and for diagnosis of certain retrocochlear pathologies (tumour of the auditory nerve, auditory neuropathy, etc.) and tests both peripheral and central systems.

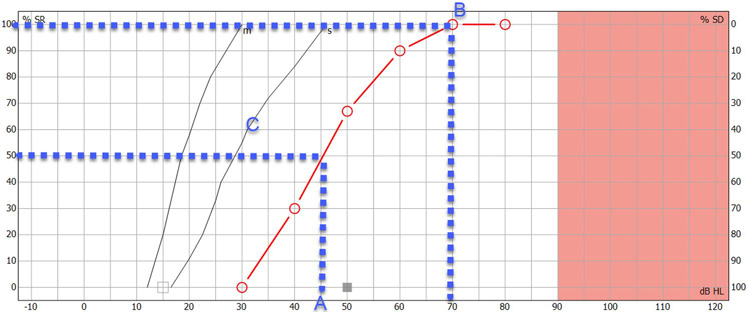

Speech audiogram

Normal hearing and hearing impaired subjects.

The speech recognition threshold (SRT) is the lowest level at which a person can identify a sound from a closed set list of disyllabic words.

The word recognition score (WRS) testrequires a list of single syllable words unknown to the patient to be presented at the speech recognition threshold + 30 dBHL. The number of correct words is scored out of the number of presented words to give the WRS. A score of 85-100% correct is considered normal when pure tone thresholds are normal (A), but it is common for WRS to decrease with increasing sensorineural hearing loss.

The curve 'B', on the other hand, indicates hypoacusis (a slight hearing impairment), and 'C' indicates a profound loss of speech intelligibility with distortion occurring at intensities greater than 80 dB HL.

It is important to distinguish between WRS, which gives an indication of speech comprehension, and SRT, which is the ability to distinguish phonemes.

Phonetic materials and testing conditions

Various tests can be carried out using lists of sentences, monosyllabic or dissyllabic words, or logatomes (words with no meaning, also known as pseudowords). Dissyllabic words require mental substitution (identification by context), the others do not.

A few examples

|

|

| ||

|

Laud Boat Pool Nag Limb Shout Sub Vine Dime Goose |

Pick Room Nice Said Fail South White Keep Dead Loaf | Greyhound Schoolboy Inkwell Whitewash Pancake Mousetrap Eardrum Headlight Birthday Duck pond | |

The test stimuli can be presented through headphones to test each ear separately, or in freefield in a sound attenuated booth to allow binaural hearing to be tested with and without hearing aids or cochlear implants. Test material is adapted to the individual's age and language ability.

What you need to remember

In the case of a conductive hearing loss:

- the response curve has a normal 'S' shape, there is no deformation

- there is a shift to the right compared to the reference (normal threshold)

- there is an increase in the threshold of intelligibility

In the case of sensorineural hearing loss:

- there is an increased intelligibility threshold

- the curve can appear normal except in the higher intensity regions, where deformations indicate distortions

Phonetic testing is also carried out routinely in the clinic (especially in the case of rehabilitation after cochlear implantation). It is relatively long to carry out, but enables the evaluation of the real social and linguistic handicaps experienced by hearing impaired individuals. Cochlear deficits are tested using the “CNC (Consonant Nucleus Consonant) Test” (short words requiring little mental recruitment - errors are apparent on each phoneme and not over the complete word) and central deficits are tested with speech in noise tests, such as the “HINT (Hearing In Noise Test)” or “QuickSIN (Quick Speech In Noise)” tests, which are sentences carried out in noise.

Speech audiometry generally confirms pure tone audiometry results, and provides insight to the perceptual abilities of the individual. The intelligibility threshold is generally equivalent to the average of the intensity of frequencies 500, 1000 and 2000 Hz, determined by tonal audiometry (conversational frequencies). In the case of mismatch between the results of these tests, the diagnostic test used, equipment calibration or the reliability of the responses should be called into question.

Finally, remember that speech audiometry is a more sensitive indicator than pure tone audiometry in many cases, including rehabilitation after cochlear implantation.

Last update: 16/04/2020 8:57 pm

Connexion | Powered by eZPublish - Ligams

Loading metrics

Open Access

Peer-reviewed

Research Article

Language models outperform cloze predictability in a cognitive model of reading

Roles Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Visualization, Writing – original draft, Writing – review & editing

* E-mail: [email protected]

Affiliation Department of Education, Vrije Universiteit Amsterdam, and LEARN! Research Institute, Amsterdam, The Netherlands

Roles Conceptualization, Methodology, Supervision, Writing – review & editing

Affiliation Department of Experimental and Applied Psychology, Vrije Universiteit Amsterdam, Amsterdam, The Netherlands

Roles Conceptualization, Formal analysis, Funding acquisition, Methodology, Project administration, Software, Validation, Writing – review & editing

- Adrielli Tina Lopes Rego,

- Joshua Snell,

- Martijn Meeter

- Published: September 25, 2024

- https://doi.org/10.1371/journal.pcbi.1012117

- Peer Review

- Reader Comments

This is an uncorrected proof.

Although word predictability is commonly considered an important factor in reading, sophisticated accounts of predictability in theories of reading are lacking. Computational models of reading traditionally use cloze norming as a proxy of word predictability, but what cloze norms precisely capture remains unclear. This study investigates whether large language models (LLMs) can fill this gap. Contextual predictions are implemented via a novel parallel-graded mechanism, where all predicted words at a given position are pre-activated as a function of contextual certainty, which varies dynamically as text processing unfolds. Through reading simulations with OB1-reader, a cognitive model of word recognition and eye-movement control in reading, we compare the model’s fit to eye-movement data when using predictability values derived from a cloze task against those derived from LLMs (GPT-2 and LLaMA). Root Mean Square Error between simulated and human eye movements indicates that LLM predictability provides a better fit than cloze. This is the first study to use LLMs to augment a cognitive model of reading with higher-order language processing while proposing a mechanism on the interplay between word predictability and eye movements.

Author summary

Reading comprehension is a crucial skill that is highly predictive of later success in education. One aspect of efficient reading is our ability to predict what is coming next in the text based on the current context. Although we know predictions take place during reading, the mechanism through which contextual facilitation affects oculomotor behaviour in reading is not yet well-understood. Here, we model this mechanism and test different measures of predictability (computational vs. empirical) by simulating eye movements with a cognitive model of reading. Our results suggest that, when implemented with our novel mechanism, a computational measure of predictability provides better fits to eye movements in reading than a traditional empirical measure. With this model, we scrutinize how predictions about upcoming input affects eye movements in reading, and how computational approaches to measuring predictability may support theory testing. Modelling aspects of reading comprehension and testing them against human behaviour contributes to the effort of advancing theory building in reading research. In the longer term, more understanding of reading comprehension may help improve reading pedagogies, diagnoses and treatments.

Citation: Lopes Rego AT, Snell J, Meeter M (2024) Language models outperform cloze predictability in a cognitive model of reading. PLoS Comput Biol 20(9): e1012117. https://doi.org/10.1371/journal.pcbi.1012117

Editor: Ronald van den Berg, Stockholm University, SWEDEN

Received: April 26, 2024; Accepted: September 9, 2024; Published: September 25, 2024

Copyright: © 2024 Lopes Rego et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All the relevant data and source code used to produce the results and analyses presented in this manuscript are available on a Github repository at https://github.com/dritlopes/OB1-reader-model .

Funding: This study was supported by the Nederlandse Organisatie voor Wetenschappelijk Onderzoek (NWO) Open Competition-SSH (Social Sciences and Humanities) ( https://www.nwo.nl ), 406.21.GO.019 to MM. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors declare that no competing interests exist.

Introduction

Humans can read remarkably efficiently. What underlies efficient reading has been subject of considerable interest in psycholinguistic research. A prominent hypothesis is that we can generally keep up with the rapid pace of language input because language processing is predictive, i.e., as reading unfolds, the reader anticipates some information about the upcoming input [ 1 – 3 ]. Despite general agreement that this is the case, it remains unclear how to best operationalize contextual predictions [ 3 , 4 ]. In current models of reading [ 5 – 9 ]), the influence of prior context on word recognition is operationalized using cloze norming, which is the proportion of participants that complete a textual sequence by answering a given word. However, cloze norming has both theoretical and practical limitations, which are outlined below [ 4 , 10 , 11 ]. To address these concerns, in the present work we explore the use of Large Language Models (LLMs) as an alternative means to account for contextual predictions in computational models of reading. In the remainder of this section, we discuss the limitations of the current implementation of contextual predictions in models of reading, which includes the use of cloze norming, as well as the potential benefits of LLM outputs as a proxy of word predictability. We also offer a novel parsimonious account of how these predictions gradually unfold during text processing.