The Real Story of Stuxnet

How Kaspersky Lab tracked down the malware that stymied Iran’s nuclear-fuel enrichment program

Update 19 January 2024: The fabled story of the 2007 Stuxnet computer virus continues to fascinate readers, tech enthusiasts, journalists, and lovers of a good whodunnit mystery. So much so that the Dutch newspaper De Volkskrant published the results of a two-year investigative effort to get to the bottom of this sabotage cyberattack that was allegedly carried out by United States and Israeli forces—possibly in league with intelligence agencies in both countries—against Iran’s nuclear program.

Because the original article is in Dutch, computer translation software plus the work of security journalists, for instance at Security Week, can at least give adept readers a glimpse of the tangled web of deceit woven in this international tale of high-stakes nuclear espionage. According to the new Dutch reporting, the mystery of how the Stuxnet malware reached the Iranian centrifuges in the first place has now been compounded. Many previous reports, including the story that follows, assert that a USB thumb drive provided the vector to infect the Iranian systems. The De Volkskrant report, however, states that Stuxnet was instead loaded into a water pump near the Iranian Natanz nuclear facility.

Yet, as Security Week has found, researcher Ralph Langner has independently determined that “a water pump cannot carry a copy of Stuxnet,” as he wrote on Twitter/X on 8 January. That, plus some counterclaims about De Volkskrant’s estimated $1 billion to $2 billion price tag on the effort (far too high, according to some critics), leaves the controversies and details far from settled. Though it is now 11 years since David Kushner’s compelling account of the cyberworm for Spectrum, below, the story remains the kind of technothriller that keeps the curious wanting to know more and inspires the eager to dig deeper still into this enduring mystery. (IEEE Spectrum)

Original article from 26 February 2013 follows:

Computer cables snake across the floor. Cryptic flowcharts are scrawled across various whiteboards adorning the walls. A life-size Batman doll stands in the hall. This office might seem no different than any other geeky workplace, but in fact it’s the front line of a war—a cyberwar, where most battles play out not in remote jungles or deserts but in suburban office parks like this one. As a senior researcher for Kaspersky Lab, a leading computer security firm based in Moscow, Roel Schouwenberg spends his days (and many nights) here at the lab’s U.S. headquarters in Woburn, Mass., battling the most insidious digital weapons ever, capable of crippling water supplies, power plants, banks, and the very infrastructure that once seemed invulnerable to attack.

Recognition of such threats exploded in June 2010 with the discovery of Stuxnet, a 500-kilobyte computer worm that infected the software of at least 14 industrial sites in Iran, including a uranium-enrichment plant. Although a computer virus relies on an unwitting victim to install it, a worm spreads on its own, often over a computer network.

This worm was an unprecedentedly masterful and malicious piece of code that attacked in three phases. First, it targeted Microsoft Windows machines and networks, repeatedly replicating itself. Then it sought out Siemens Step7 software, which is also Windows-based and used to program industrial control systems that operate equipment, such as centrifuges. Finally, it compromised the programmable logic controllers. The worm’s authors could thus spy on the industrial systems and even cause the fast-spinning centrifuges to tear themselves apart, unbeknownst to the human operators at the plant. (Iran has not confirmed reports that Stuxnet destroyed some of its centrifuges.)

Stuxnet could spread stealthily between computers running Windows—even those not connected to the Internet. If a worker stuck a USB thumb drive into an infected machine, Stuxnet could, well, worm its way onto it, then spread onto the next machine that read that USB drive. Because someone could unsuspectingly infect a machine this way, letting the worm proliferate over local area networks, experts feared that the malware had perhaps gone wild across the world.

In October 2012, U.S. defense secretary Leon Panetta warned that the United States was vulnerable to a “cyber Pearl Harbor” that could derail trains, poison water supplies, and cripple power grids. The next month, Chevron confirmed the speculation by becoming the first U.S. corporation to admit that Stuxnet had spread across its machines.

Although the authors of Stuxnet haven’t been officially identified, the size and sophistication of the worm have led experts to believe that it could have been created only with the sponsorship of a nation-state, and although no one’s owned up to it, leaks to the press from officials in the United States and Israel strongly suggest that those two countries did the deed. Since the discovery of Stuxnet, Schouwenberg and other computer-security engineers have been fighting off other weaponized viruses, such as Duqu, Flame, and Gauss, an onslaught that shows no signs of abating.

This marks a turning point in geopolitical conflicts, when the apocalyptic scenarios once only imagined in movies like Live Free or Die Hard have finally become plausible. “Fiction suddenly became reality,” Schouwenberg says. But the hero fighting against this isn’t Bruce Willis; he’s a scruffy 27-year-old with a ponytail. Schouwenberg tells me, “We are here to save the world.” The question is: Does the Kaspersky Lab have what it takes?

Viruses weren’t always this malicious. In the 1990s, when Schouwenberg was just a geeky teen in the Netherlands, malware was typically the work of pranksters and hackers, people looking to crash your machine or scrawl graffiti on your AOL home page.

After discovering a computer virus on his own, the 14-year-old Schouwenberg contacted Kaspersky Lab, one of the leading antivirus companies. Such companies are judged in part on how many viruses they are first to detect, and Kaspersky was considered among the best. But with its success came controversy. Some accused Kaspersky of having ties with the Russian government, accusations the company has denied.

A few years after that first overture, Schouwenberg e-mailed founder Eugene Kaspersky, asking him whether he should study math in college if he wanted to be a security specialist. Kaspersky replied by offering the 17-year-old a job, which he took. After spending four years working for the company in the Netherlands, he went to the Boston area. There, Schouwenberg learned that an engineer needs specific skills to fight malware. Because most viruses are written for Windows, reverse engineering them requires knowledge of x86 assembly language.

Over the next decade, Schouwenberg was witness to the most significant change ever in the industry. The manual detection of viruses gave way to automated methods designed to find as many as 250 000 new malware files each day. At first, banks faced the most significant threats, and the specter of state-against-state cyberwars still seemed distant. “It wasn’t in the conversation,” says Liam O’Murchu, an analyst for Symantec Corp., a computer-security company in Mountain View, Calif.

All that changed in June 2010, when a Belarusian malware-detection firm got a request from a client to determine why its machines were rebooting over and over again. The malware was signed by a digital certificate to make it appear that it had come from a reliable company. This feat caught the attention of the antivirus community, whose automated-detection programs couldn’t handle such a threat. This was the first sighting of Stuxnet in the wild.

The danger posed by forged signatures was so frightening that computer-security specialists began quietly sharing their findings over e-mail and on private online forums. That’s not unusual. “Information sharing [in the] computer-security industry can only be categorized as extraordinary,” adds Mikko H. Hypponen, chief research officer for F-Secure, a security firm in Helsinki, Finland. “I can’t think of any other IT sector where there is such extensive cooperation between competitors.” Still, companies do compete—for example, to be the first to identify a key feature of a cyberweapon and then cash in on the public-relations boon that results.

Before they knew what targets Stuxnet had been designed to go after, the researchers at Kaspersky and other security firms began reverse engineering the code, picking up clues along the way: the number of infections, the fraction of infections in Iran, and the references to Siemens industrial programs, which are used at power plants.

Schouwenberg was most impressed by Stuxnet’s having performed not just one but four zero-day exploits, hacks that take advantage of vulnerabilities previously unknown to the white-hat community. “It’s not just a groundbreaking number; they all complement each other beautifully,” he says. “The LNK [a file shortcut in Microsoft Windows] vulnerability is used to spread via USB sticks. The shared print-spooler vulnerability is used to spread in networks with shared printers, which is extremely common in Internet Connection Sharing networks. The other two vulnerabilities have to do with privilege escalation, designed to gain system-level privileges even when computers have been thoroughly locked down. It’s just brilliantly executed.”

Schouwenberg and his colleagues at Kaspersky soon concluded that the code was too sophisticated to be the brainchild of a ragtag group of black-hat hackers. Schouwenberg believes that a team of 10 people would have needed at least two or three years to create it. The question was, who was responsible?

It soon became clear, in the code itself as well as from field reports, that Stuxnet had been specifically designed to subvert Siemens systems running centrifuges in Iran’s nuclear-enrichment program. The Kaspersky analysts then realized that financial gain had not been the objective. It was a politically motivated attack. “At that point there was no doubt that this was nation-state sponsored,” Schouwenberg says. This phenomenon caught most computer-security specialists by surprise. “We’re all engineers here; we look at code,” says Symantec’s O’Murchu. “This was the first real threat we’ve seen where it had real-world political ramifications. That was something we had to come to terms with.”

Milestones in Malware

Creeper, an experimental self-replicating viral program, is written by Bob Thomas at Bolt, Beranek and Newman. It infected DEC PDP-10 computers running the Tenex operating system. Creeper gained access via the ARPANET, the predecessor of the Internet, and copied itself to the remote system, where the message “I’m the creeper, catch me if you can!” was displayed. The Reaper program was later created to delete Creeper.

Elk Cloner, written for Apple II systems and created by Richard Skrenta, led to the first large-scale computer virus outbreak in history.

The Brain boot sector virus (aka Pakistani flu), the first IBM PC–compatible virus, is released and causes an epidemic. It was created in Lahore, Pakistan, by 19-year-old Basit Farooq Alvi and his brother, Amjad Farooq Alvi.

The Morris worm, created by Robert Tappan Morris, infects DEC VAX and Sun machines running BSD Unix connected to the Internet. It becomes the first worm to spread extensively “in the wild.”

Michelangelo is hyped by computer-security executive John McAfee, who predicted that on 6 March the virus would wipe out information on millions of computers; actual damage was minimal.

The SQL Slammer worm (aka Sapphire worm) attacks vulnerabilities in the Microsoft Structured Query Language Server and Microsoft SQL Server Data Engine and becomes the fastest spreading worm of all time, crashing the Internet within 15 minutes of release.

The Stuxnet worm is detected. It is the first worm known to attack SCADA (supervisory control and data acquisition) systems.

The Duqu worm is discovered. Unlike Stuxnet, to which it seems to be related, it was designed to gather information rather than to interfere with industrial operations.

Flame is discovered and found to be used in cyberespionage in Iran and other Middle Eastern countries.

In May 2012, Kaspersky Lab received a request from the International Telecommunication Union, the United Nations agency that manages information and communication technologies, to study a piece of malware that had supposedly destroyed files from oil-company computers in Iran. By now, Schouwenberg and his peers were already on the lookout for variants of the Stuxnet virus. They knew that in September 2011, Hungarian researchers had uncovered Duqu, which had been designed to steal information about industrial control systems.

While pursuing the U.N.’s request, Kaspersky’s automated system identified another Stuxnet variant. At first, Schouwenberg and his team concluded that the system had made a mistake, because the newly discovered malware showed no obvious similarities to Stuxnet. But after diving into the code more deeply, they found traces of another file, called Flame, that were evident in the early iterations of Stuxnet. At first, Flame and Stuxnet had been considered totally independent, but now the researchers realized that Flame was actually a precursor to Stuxnet that had somehow gone undetected.

Flame was 20 megabytes in total, or some 40 times as big as Stuxnet. Security specialists realized, as Schouwenberg puts it, that “this could be nation-state again.”

To analyze Flame, Kaspersky used a technique it calls the “sinkhole.” This entailed taking control of Flame’s command-and-control server domain so that when Flame tried to communicate with the server in its home base, it actually sent information to Kaspersky’s server instead. It was difficult to determine who owned Flame’s servers. “With all the available stolen credit cards and Internet proxies,” Schouwenberg says, “it’s really quite easy for attackers to become invisible.”
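The sinkhole idea can be illustrated with a toy resolver. This is only a minimal sketch, not Kaspersky's tooling: the domain names, the addresses (taken from ranges reserved for documentation), and the `resolve` and `log_checkin` helpers are all hypothetical. A real sinkhole is established by taking over a domain's registration or DNS records, not in application code.

```python
# Toy model of DNS sinkholing. All names and addresses are hypothetical
# placeholders, not real Flame infrastructure.

SINKHOLE_IP = "192.0.2.10"  # researcher-controlled server (assumed)

# Ordinary DNS view: domain -> address it would normally resolve to.
dns_records = {
    "updates.example.net": "198.51.100.7",  # pretend command-and-control domain
    "news.example.org": "203.0.113.5",      # unrelated benign domain
}

# Domains whose resolution the researchers now control.
sinkholed = {"updates.example.net"}


def resolve(domain: str) -> str:
    """Return the address a machine would receive when looking up a domain."""
    if domain in sinkholed:
        return SINKHOLE_IP
    return dns_records[domain]


def log_checkin(log: list, client_ip: str, domain: str) -> None:
    """Record a check-in only if the lookup landed on the sinkhole."""
    if resolve(domain) == SINKHOLE_IP:
        log.append((client_ip, domain))


checkins = []
log_checkin(checkins, "10.0.0.23", "updates.example.net")  # infected machine
log_checkin(checkins, "10.0.0.42", "news.example.org")     # ordinary traffic
```

After these two calls, `checkins` holds only the first machine. That is the point of the technique: infected hosts reveal themselves by contacting the redirected domain.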

While Stuxnet was meant to destroy things, Flame’s purpose was merely to spy on people. Spread over USB sticks, it could infect printers shared over the same network. Once Flame had compromised a machine, it could stealthily search for keywords on top-secret PDF files, then make and transmit a summary of the document—all without being detected.

Indeed, Flame’s designers went “to great lengths to avoid detection by security software,” says Schouwenberg. He offers an example: Flame didn’t simply transmit the information it harvested all at once to its command-and-control server, because network managers might notice that sudden outflow. “Data’s sent off in smaller chunks to avoid hogging available bandwidth for too long,” he says.

Most impressively, Flame could exchange data with any Bluetooth-enabled device. In fact, the attackers could steal information or install other malware not only within Bluetooth’s standard 30-meter range but also farther out. A “Bluetooth rifle,” a directional antenna linked to a Bluetooth-enabled computer (plans for which are readily available online), could do the job from nearly 2 kilometers away.

But the most worrisome thing about Flame was how it got onto machines in the first place: via an update to the Windows 7 operating system. A user would think she was simply downloading a legitimate patch from Microsoft, only to install Flame instead. “Flame spreading through Windows updates is more significant than Flame itself,” says Schouwenberg, who estimates that there are perhaps only 10 programmers in the world capable of engineering such behavior. “It’s a technical feat that’s nothing short of amazing, because it broke world-class encryption,” says F-Secure’s Hypponen. “You need a supercomputer and loads of scientists to do this.”

If the U.S. government was indeed behind the worm, this circumvention of Microsoft’s encryption could create some tension between the company and its largest customer, the Feds. “I’m guessing Microsoft had a phone call between Bill Gates, Steve Ballmer, and Barack Obama,” says Hypponen. “I would have liked to listen to that call.”

While reverse engineering Flame, Schouwenberg and his team fine-tuned their “similarity algorithms”—essentially, their detection code—to search for variants built on the same platform. In July, they found Gauss. Its purpose, too, was cybersurveillance.

Carried from one computer to another on a USB stick, Gauss would steal files and gather passwords, targeting Lebanese bank credentials for unknown reasons. (Experts speculate that this was either to monitor transactions or siphon money from certain accounts.) “The USB module grabs information from the system—next to the encrypted payload—and stores this information on the USB stick itself,” Schouwenberg explains. “When this USB stick is then inserted into a Gauss-infected machine, Gauss grabs the gathered data from the USB stick and sends it to the command-and-control server.”

Just as Kaspersky’s engineers were tricking Gauss into communicating with their own servers, those very servers suddenly went down, leading the engineers to think that the malware’s authors were quickly covering their tracks. Kaspersky had already gathered enough information to protect its clients against Gauss, but the moment was chilling. “We’re not sure if we did something and the hackers were onto us,” Schouwenberg says.

The implications of Flame and Stuxnet go beyond state-sponsored cyberattacks. “Regular cybercriminals look at something that Stuxnet is doing and say, that’s a great idea, let’s copy that,” Schouwenberg says.

“The takeaway is that nation-states are spending millions of dollars of development for these types of cybertools, and this is a trend that will simply increase in the future,” says Jeffrey Carr, the founder and CEO of Taia Global, a security firm in McLean, Va. Although Stuxnet may have temporarily slowed the enrichment program in Iran, it did not achieve its end goal. “Whoever spent millions of dollars on Stuxnet, Flame, Duqu, and so on—all that money is sort of wasted. That malware is now out in the public spaces and can be reverse engineered,” says Carr.

Hackers can simply reuse specific components and technology available online for their own attacks. Criminals might use cyberespionage to, say, steal customer data from a bank or simply wreak havoc as part of an elaborate prank. “There’s a lot of talk about nations trying to attack us, but we are in a situation where we are vulnerable to an army of 14-year-olds who have two weeks’ training,” says Schouwenberg.

The vulnerability is great, particularly that of industrial machines. All it takes is the right Google search terms to find a way into the systems of U.S. water utilities, for instance. “What we see is that a lot of industrial control systems are hooked up to the Internet,” says Schouwenberg, “and they don’t change the default password, so if you know the right keywords you can find these control panels.”
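From the defender's side, the default-password problem described here is straightforward to audit. The following is a minimal sketch under stated assumptions: the device inventory, the `Device` type, and the list of vendor defaults are all hypothetical and do not come from any real product. A real audit would not keep passwords in plaintext like this toy does.

```python
from dataclasses import dataclass

# Hypothetical vendor default logins; a real audit would take these from
# the vendors' own documentation.
KNOWN_DEFAULTS = {("admin", "admin"), ("admin", "1234"), ("operator", "operator")}


@dataclass(frozen=True)
class Device:
    name: str
    username: str
    password: str
    internet_facing: bool


def risky_devices(inventory):
    """Return names of Internet-facing devices still using a default login."""
    return [
        d.name
        for d in inventory
        if d.internet_facing and (d.username, d.password) in KNOWN_DEFAULTS
    ]


# Hypothetical inventory for illustration.
inventory = [
    Device("pump-panel-1", "admin", "admin", internet_facing=True),
    Device("pump-panel-2", "admin", "x9!Lq#27", internet_facing=True),
    Device("hmi-lab", "operator", "operator", internet_facing=False),
]

flagged = risky_devices(inventory)
```

Only `pump-panel-1` is flagged here. It combines the two conditions from the quote: it is reachable from the Internet and its password was never changed.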

Companies have been slow to invest the resources required to update industrial controls. Kaspersky has found critical-infrastructure companies running 30-year-old operating systems. In Washington, politicians have been calling for laws to require such companies to maintain better security practices. One cybersecurity bill, however, was stymied in August on the grounds that it would be too costly for businesses. “To fully provide the necessary protection in our democracy, cybersecurity must be passed by the Congress,” Panetta recently said. “Without it, we are and we will be vulnerable.”

In the meantime, virus hunters at Kaspersky and elsewhere will keep up the fight. “The stakes are just getting higher and higher and higher,” Schouwenberg says. “I’m very curious to see what will happen 10, 20 years down the line. How will history look at the decisions we’ve made?”


How Digital Detectives Deciphered Stuxnet, the Most Menacing Malware in History

Kim Zetter, wired.com - Jul 11, 2011 2:47 pm UTC

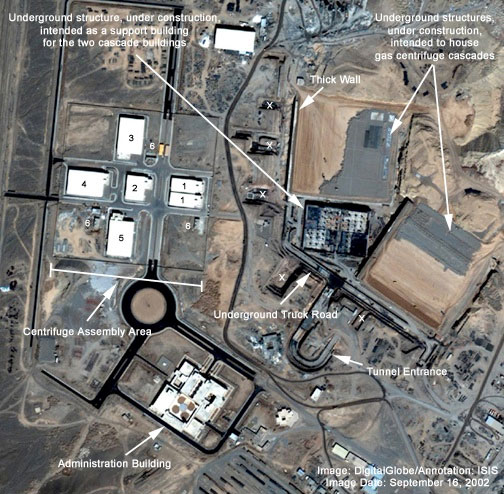

It was January 2010, and investigators with the International Atomic Energy Agency had just completed an inspection at the uranium enrichment plant outside Natanz in central Iran, when they realized that something was off within the cascade rooms where thousands of centrifuges were enriching uranium.

Natanz technicians in white lab coats, gloves, and blue booties were scurrying in and out of the "clean" cascade rooms, hauling out unwieldy centrifuges one by one, each sheathed in shiny silver cylindrical casings.

Any time workers at the plant decommissioned damaged or otherwise unusable centrifuges, they were required to line them up for IAEA inspection to verify that no radioactive material was being smuggled out in the devices before they were removed. The technicians had been doing so for more than a month.

Normally Iran replaced up to 10 percent of its centrifuges a year, due to material defects and other issues. With about 8,700 centrifuges installed at Natanz at the time, it would have been normal to decommission about 800 over the course of the year.

But when the IAEA later reviewed footage from surveillance cameras installed outside the cascade rooms to monitor Iran’s enrichment program, they were stunned as they counted the numbers. The workers had been replacing the units at an incredible rate—later estimates would indicate between 1,000 and 2,000 centrifuges were swapped out over a few months.
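The size of the anomaly is easier to see as arithmetic. The sketch below uses only the figures quoted above, plus one labeled assumption: the article says "a few months," which is taken here to mean three.

```python
INSTALLED = 8_700            # centrifuges installed at Natanz, per the article
NORMAL_ANNUAL_PERCENT = 10   # "up to 10 percent" replaced in a normal year

expected_per_year = INSTALLED * NORMAL_ANNUAL_PERCENT / 100  # 870; "about 800" in the text
expected_per_month = expected_per_year / 12                  # 72.5

ASSUMED_MONTHS = 3  # assumption: the article only says "a few months"


def excess_factor(observed: int, months: float = ASSUMED_MONTHS) -> float:
    """How many times the normal monthly rate an observed count represents."""
    return (observed / months) / expected_per_month


low = excess_factor(1_000)   # lower estimate of units swapped out
high = excess_factor(2_000)  # upper estimate of units swapped out
```

Under that three-month assumption, the replacement rate works out to roughly 4.6 to 9.2 times the normal pace. A longer window would shrink the factor proportionally.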

The question was, why?

Iran wasn't required to disclose the reason for replacing the centrifuges and, officially, the inspectors had no right to ask. Their mandate was to monitor what happened to nuclear material at the plant, not keep track of equipment failures. But it was clear that something had damaged the centrifuges.

What the inspectors didn't know was that the answer they were seeking was hidden all around them, buried in the disk space and memory of Natanz's computers. Months earlier, in June 2009, someone had silently unleashed a sophisticated and destructive digital worm that had been slithering its way through computers in Iran with just one aim — to sabotage the country’s uranium enrichment program and prevent President Mahmoud Ahmadinejad from building a nuclear weapon.

But it would be nearly a year before the inspectors would learn of this. The answer would come only after dozens of computer security researchers around the world would spend months deconstructing what would come to be known as the most complex malware ever written—a piece of software that would ultimately make history as the world’s first real cyberweapon.

On June 17, 2010, Sergey Ulasen was in his office in Belarus sifting through e-mail when a report caught his eye. A computer belonging to a customer in Iran was caught in a reboot loop—shutting down and restarting repeatedly despite efforts by operators to take control of it. It appeared the machine was infected with a virus.

Ulasen heads an antivirus division of a small computer security firm in Minsk called VirusBlokAda. Once a specialized offshoot of computer science, computer security has grown into a multibillion-dollar industry over the last decade, keeping pace with an explosion in sophisticated hack attacks and evolving viruses, Trojan horses, and spyware programs.

The best security specialists, like Bruce Schneier, Dan Kaminsky, and Charlie Miller, are considered rock stars among their peers, and top companies like Symantec, McAfee, and Kaspersky have become household names, protecting everything from grandmothers' laptops to sensitive military networks.

VirusBlokAda, however, was neither a rock star nor a household name. It was an obscure company that even few in the security industry had heard of. But that would shortly change.

"If I turn up dead and I committed suicide on Monday, I just want to tell you guys, I'm not suicidal."—Liam O Murchu

Ulasen's research team got hold of the virus infecting their client's computer and realized it was using a “zero-day” exploit to spread. Zero-days are the hacking world’s most potent weapons: They exploit vulnerabilities in software that are yet unknown to the software maker or antivirus vendors. They’re also exceedingly rare; it takes considerable skill and persistence to find such vulnerabilities and exploit them. Out of more than 12 million pieces of malware that antivirus researchers discover each year, fewer than a dozen use a zero-day exploit.

In this case, the exploit allowed the virus to cleverly spread from one computer to another via infected USB sticks. The vulnerability was in the way Windows Explorer, a fundamental component of Microsoft Windows, handled LNK (shortcut) files. When an infected USB stick was inserted into a computer, as Explorer automatically scanned the contents of the stick, the exploit code awakened and surreptitiously dropped a large, partially encrypted file onto the computer, like a military transport plane dropping camouflaged soldiers into target territory.

It was an ingenious exploit that seemed obvious in retrospect, since it attacked such a ubiquitous function. It was also one, researchers would soon learn to their surprise, that had been used before.

VirusBlokAda contacted Microsoft to report the vulnerability, and on July 12, as the software giant was preparing a patch, VirusBlokAda went public with the discovery in a post to a security forum. Three days later, security blogger Brian Krebs picked up the story, and antivirus companies around the world scrambled to grab samples of the malware—dubbed Stuxnet by Microsoft from a combination of file names (.stub and MrxNet.sys) found in the code.

As the computer security industry rumbled into action, decrypting and deconstructing Stuxnet, more assessments filtered out.

It turned out the code had been launched into the wild as early as a year before, in June 2009, and its mysterious creator had updated and refined it over time, releasing three different versions. Notably, one of the virus’s driver files used a valid signed certificate stolen from RealTek Semiconductor, a hardware maker in Taiwan, in order to fool systems into thinking the malware was a trusted program from RealTek.

Internet authorities quickly revoked the certificate. But another Stuxnet driver was found using a second certificate, this one stolen from JMicron Technology, a circuit maker in Taiwan that was—coincidentally or not—headquartered in the same business park as RealTek. Had the attackers physically broken into the companies to steal the certificates? Or had they remotely hacked them to swipe the company’s digital certificate-signing keys? No one knew.
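The sequence described here, a signed driver that is trusted until its certificate is revoked, can be modeled in a few lines. This is a simplified sketch and not how Windows driver signing actually works: the file names, serial numbers, `Driver` type, and `is_trusted` check are hypothetical, and the `signature_valid` flag stands in for real cryptographic verification of a certificate chain.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Driver:
    filename: str
    cert_serial: str        # serial of the certificate that signed the file
    signature_valid: bool   # stand-in for a real cryptographic check


def is_trusted(driver: Driver, revoked: set) -> bool:
    """Trust a driver only if its signature verifies and its cert is not revoked."""
    return driver.signature_valid and driver.cert_serial not in revoked


revoked_serials = set()
first = Driver("driver_a.sys", cert_serial="CERT-1", signature_valid=True)
second = Driver("driver_b.sys", cert_serial="CERT-2", signature_valid=True)

# Signed with a stolen but genuine key, so the signature verifies.
before = is_trusted(first, revoked_serials)

# The first certificate is revoked once the theft is discovered.
revoked_serials.add("CERT-1")
after = is_trusted(first, revoked_serials)

# A driver signed with a second stolen certificate still passes.
second_after = is_trusted(second, revoked_serials)
```

The model shows why revocation alone did not end the problem. Revoking one certificate blocks only the files signed with it, so a second stolen key restores the malware's apparent legitimacy.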

“We rarely see such professional operations,” wrote ESET, a security firm that found one of the certificates, on its blog. "This shows [the attackers] have significant resources."

In other ways, though, Stuxnet seemed routine and unambitious in its aims. Experts determined that the virus was designed to target Simatic WinCC Step7 software, an industrial control system made by the German conglomerate Siemens that was used to program controllers that drive motors, valves and switches in everything from food factories and automobile assembly lines to gas pipelines and water treatment plants.

Although this was new in itself—control systems aren’t a traditional hacker target, because there’s no obvious financial gain in hacking them—what Stuxnet did to the Simatic systems wasn't new. It appeared to be simply stealing configuration and design data from the systems, presumably to allow a competitor to duplicate a factory's production layout. Stuxnet looked like just another case of industrial espionage.

Antivirus companies added signatures for various versions of the malware to their detection engines, and then for the most part moved on to other things.

The story of Stuxnet might have ended there. But a few researchers weren’t quite ready to let it go.

The Story Behind the Stuxnet Virus

Computer security experts are often surprised at which stories get picked up by the mainstream media. Sometimes it makes no sense. Why this particular data breach, vulnerability, or worm and not others? Sometimes it's obvious. In the case of Stuxnet, there's a great story.

As the story goes, the Stuxnet worm was designed and released by a government--the U.S. and Israel are the most common suspects--specifically to attack the Bushehr nuclear power plant in Iran. How could anyone not report that? It combines computer attacks, nuclear power, spy agencies and a country that's a pariah to much of the world. The only problem with the story is that it's almost entirely speculation.

Here's what we do know: Stuxnet is an Internet worm that infects Windows computers. It primarily spreads via USB sticks, which allows it to get into computers and networks not normally connected to the Internet. Once inside a network, it uses a variety of mechanisms to propagate to other machines within that network and gain privilege once it has infected those machines. These mechanisms include both known and patched vulnerabilities, and four "zero-day exploits": vulnerabilities that were unknown and unpatched when the worm was released. (All the infection vulnerabilities have since been patched.)

Stuxnet doesn't actually do anything on those infected Windows computers, because they're not the real target. What Stuxnet looks for is a particular model of Programmable Logic Controller (PLC) made by Siemens (the press often refers to these as SCADA systems, which is technically incorrect). These are small embedded industrial control systems that run all sorts of automated processes: on factory floors, in chemical plants, in oil refineries, at pipelines--and, yes, in nuclear power plants. These PLCs are often controlled by computers, and Stuxnet looks for Siemens SIMATIC WinCC/Step 7 controller software.

If it doesn't find one, it does nothing. If it does, it infects it using yet another unknown and unpatched vulnerability, this one in the controller software. Then it reads and changes particular bits of data in the controlled PLCs. It's impossible to predict the effects of this without knowing what the PLC is doing and how it is programmed, and that programming can be unique based on the application. But the changes are very specific, leading many to believe that Stuxnet is targeting a specific PLC, or a specific group of PLCs, performing a specific function in a specific location--and that Stuxnet's authors knew exactly what they were targeting.

It's already infected more than 50,000 Windows computers, and Siemens has reported 14 infected control systems, many in Germany. (These numbers were certainly out of date as soon as I typed them.) We don't know of any physical damage Stuxnet has caused, although there are rumors that it was responsible for the failure of India's INSAT-4B satellite in July. We believe that it did infect the Bushehr plant.

All the anti-virus programs detect and remove Stuxnet from Windows systems.

Stuxnet was first discovered in late June, although there's speculation that it was released a year earlier. As worms go, it's very complex and got more complex over time. In addition to the multiple vulnerabilities that it exploits, it installs its own driver into Windows. These have to be signed, of course, but Stuxnet used a stolen legitimate certificate. Interestingly, the stolen certificate was revoked on July 16, and a Stuxnet variant with a different stolen certificate was discovered on July 17.

Over time the attackers swapped out modules that didn't work and replaced them with new ones--perhaps as Stuxnet made its way to its intended target. Those certificates first appeared in January. USB propagation, in March.

Stuxnet has two ways to update itself. It checks back to two control servers, one in Malaysia and the other in Denmark, but also uses a peer-to-peer update system: When two Stuxnet infections encounter each other, they compare versions and make sure they both have the most recent one. It also has a kill date of June 24, 2012. On that date, the worm will stop spreading and delete itself.
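The peer-to-peer update rule and the kill date described above reduce to two small checks. The sketch below is a toy simulation only: it assumes versions can be compared as plain integers, and the names `Peer`, `reconcile`, and `is_expired` are hypothetical illustrations, not anything taken from Stuxnet's code.

```python
from dataclasses import dataclass
from datetime import date

# Kill date as reported in the article.
KILL_DATE = date(2012, 6, 24)


@dataclass
class Peer:
    """A simulated node that only tracks which version it holds (hypothetical)."""
    name: str
    version: int


def reconcile(a: Peer, b: Peer) -> int:
    """Bring both peers to the newer of their two versions and return it."""
    newest = max(a.version, b.version)
    a.version = newest
    b.version = newest
    return newest


def is_expired(today: date) -> bool:
    """True on or after the kill date, when the worm is said to stop spreading."""
    return today >= KILL_DATE


if __name__ == "__main__":
    older, newer = Peer("A", 3), Peer("B", 5)
    print(reconcile(older, newer))          # both peers now hold version 5
    print(is_expired(date(2010, 10, 7)))    # False
    print(is_expired(date(2012, 6, 24)))    # True
```

The "newest version wins" rule means an update only has to reach one infected machine on an isolated network for it to propagate to the rest, which is presumably why it complements the two control servers.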

We don't know who wrote Stuxnet. We don't know why. We don't know what the target is, or if Stuxnet reached it. But you can see why there is so much speculation that it was created by a government.

Stuxnet doesn't act like a criminal worm. It doesn't spread indiscriminately. It doesn't steal credit card information or account login credentials. It doesn't herd infected computers into a botnet. It uses multiple zero-day vulnerabilities. A criminal group would be smarter to create different worm variants and use one in each. Stuxnet performs sabotage. It doesn't threaten sabotage, like a criminal organization intent on extortion might.

Stuxnet was expensive to create. Estimates are that it took 8 to 10 people six months to write. There's also the lab setup--surely any organization that goes to all this trouble would test the thing before releasing it--and the intelligence gathering to know exactly how to target it. Additionally, zero-day exploits are valuable. They're hard to find, and they can only be used once. Whoever wrote Stuxnet was willing to spend a lot of money to ensure that whatever job it was intended to do would be done.

None of this points to the Bushehr nuclear power plant in Iran, though. Best I can tell, this rumor was started by Ralph Langner, a security researcher from Germany. He labeled his theory "highly speculative," and based it primarily on the facts that Iran had an unusually high number of infections (the rumor that it had the most infections of any country seems not to be true), that the Bushehr nuclear plant is a juicy target, and that some of the other countries with high infection rates--India, Indonesia, and Pakistan--are countries where the same Russian contractor involved in Bushehr is also involved. This rumor moved into the computer press and then into the mainstream press, where it became the accepted story, without any of the original caveats.

Once a theory takes hold, though, it's easy to find more evidence. The word "myrtus" appears in the worm: an artifact that the compiler left, possibly by accident. That's the myrtle plant. Of course, that doesn't mean that druids wrote Stuxnet. According to the story, it refers to Queen Esther, also known as Hadassah; she saved the Persian Jews from genocide in the 4th century B.C. "Hadassah" means "myrtle" in Hebrew.

Stuxnet also sets a registry value of "19790509" to alert new copies of Stuxnet that the computer has already been infected. It's rather obviously a date, but instead of looking at the gazillion things--large and small--that happened on that date, the story insists it refers to the date Persian Jew Habib Elghanain was executed in Tehran for spying for Israel.
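Reading the eight digits as a date is itself an assumption about their layout. A minimal sketch, where `parse_marker` is a hypothetical helper name and the year-month-day layout is the usual guess rather than anything confirmed by the worm's authors:

```python
from datetime import date, datetime

MARKER = "19790509"  # registry value quoted in the article


def parse_marker(marker: str, layout: str = "%Y%m%d") -> date:
    """Interpret the digit string as a calendar date under the given layout."""
    return datetime.strptime(marker, layout).date()


if __name__ == "__main__":
    # Year-month-day, the reading the story relies on.
    print(parse_marker(MARKER).isoformat())            # 1979-05-09
    # Year-day-month parses just as cleanly and gives a different day.
    print(parse_marker(MARKER, "%Y%d%m").isoformat())  # 1979-09-05
```

Both layouts yield a valid date, which underlines how much interpretation goes into the step from "a number in the registry" to "a reference to a specific event."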

Sure, these markers could point to Israel as the author. On the other hand, Stuxnet's authors were uncommonly thorough about not leaving clues in their code; the markers could have been deliberately planted by someone who wanted to frame Israel. Or they could have been deliberately planted by Israel, who wanted us to think they were planted by someone who wanted to frame Israel. Once you start walking down this road, it's impossible to know when to stop.

Another number found in Stuxnet is 0xDEADF007. Perhaps that means "Dead Fool" or "Dead Foot," a term that refers to an airplane engine failure. Perhaps this means Stuxnet is trying to cause the targeted system to fail. Or perhaps not. Still, a targeted worm designed to cause a specific sabotage seems to be the most likely explanation.
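The two readings of the constant come from "hexspeak," where hex digits are read as look-alike letters. The sketch below assumes two substitution tables (0 as O, and 7 as either T or L); the `hexspeak` function name and the tables are illustrative choices, not something documented in the worm.

```python
HEX_CONSTANT = 0xDEADF007


def hexspeak(value: int, substitutions: dict) -> str:
    """Render an integer as uppercase hex, then swap digits for look-alike letters."""
    digits = f"{value:X}"
    return digits.translate(str.maketrans(substitutions))


if __name__ == "__main__":
    print(HEX_CONSTANT)                                   # 3735941127
    print(hexspeak(HEX_CONSTANT, {"0": "O", "7": "T"}))   # DEADFOOT
    print(hexspeak(HEX_CONSTANT, {"0": "O", "7": "L"}))   # DEADFOOL
```

The same 32-bit value supports either reading depending on which letter is assigned to 7, so the constant alone cannot settle what, if anything, was meant.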

If that's the case, why is Stuxnet so sloppily targeted? Why doesn't Stuxnet erase itself when it realizes it's not in the targeted network? When it infects a network via USB stick, it's supposed to only spread to three additional computers and to erase itself after 21 days--but it doesn't do that. A mistake in programming, or a feature in the code not enabled? Maybe we're not supposed to reverse engineer the target. By allowing Stuxnet to spread globally, its authors committed collateral damage worldwide. From a foreign policy perspective, that seems dumb. But maybe Stuxnet's authors didn't care.

My guess is that Stuxnet's authors, and its target, will forever remain a mystery.

Bruce Schneier is a security technologist and the chief security technology officer of computer security firm BT. Read more of his writing at www.schneier.com.

Stanford University

The Center for International Security and Cooperation is a center of the Freeman Spogli Institute for International Studies.

Stuxnet: The world's first cyber weapon

- By Joshua Alvarez

The United States has thrust itself and the world into the era of cyber warfare, Kim Zetter, an award-winning cybersecurity journalist for WIRED magazine, told a Stanford audience. Zetter discussed her book “Countdown to Zero Day,” which details the discovery and unraveling of Stuxnet, the world’s first cyber weapon.

Stuxnet was the name given to a highly complex digital malware that targeted, and physically damaged, Iran’s clandestine nuclear program from 2007 until its cover was blown in 2010 by computer security researchers. The malware targeted the computer systems controlling physical infrastructure such as centrifuges and gas valves.

Reports following its discovery attributed the creation and deployment of Stuxnet to the United States and Israel. The New York Times quoted anonymous U.S. officials claiming responsibility for Stuxnet.

Zetter began reporting on the cyber weapon in 2010.

“When the first news came out, I didn’t think much of it,” Zetter told a CISAC seminar on Monday. The title of her book refers to a “zero-day attack,” which exploits a previously unknown vulnerability in a computer application or operating system.

“Watching the Symantec researchers unravel Stuxnet, I knew what fascinated me was the process and brilliance of the researchers. The detective story is what pulled me in.”

Zetter’s book follows computer security researchers from around the world as they discover and disassemble Stuxnet over the course of months, much longer than any time spent on typical malware. The realization that Stuxnet was the world’s first cyber weapon sent shock waves throughout the tech community, yet did not create as much of a stir in mainstream society.

“It’s funny because a lot of people still don’t know Stuxnet or haven’t even heard of it,” Zetter said. “The recent vandalization of Sony seems to have finally gotten people’s attention. It was not a case of true cyber warfare, but I'm glad that my book came out right before it happened because its perception as a nation-state attack has led to interest in all nation-state attacks, including Stuxnet. The Snowden leaks also put cyber warfare on the map.”

“Countdown to Zero Day” also places Stuxnet in political context. The first version of Stuxnet was built and unleashed by the Bush administration in 2007, according to Zetter. Iran accelerated its enrichment process in 2008, leading to fears it would have enough uranium to build a bomb by 2010. President Barack Obama inherited the program; he not only continued it, but accelerated it. Another, more aggressive version of Stuxnet was unleashed in June 2009 and again in 2010. Obama gave the order to unleash Stuxnet while publicly demanding that Iran open itself up to negotiations.

The effectiveness of the world’s first cyber weapon remains a subject of debate. The most optimistic assessment of Stuxnet is that it delayed and slowed Iran’s uranium development enough to dissuade Israel from unilaterally striking the country, and it afforded time for intelligence and diplomatic efforts. Stuxnet contributed to dissension and frustration among the upper ranks of Iran’s government (the head of Iran’s nuclear program was replaced) and bought time for harsh economic sanctions to impact the Iranian public.

“Stuxnet actually had very little effect on Iran’s nuclear program,” said Zetter. “It was premature, it could have had a much bigger effect had the attackers waited.” Iran still made a net gain in their uranium stockpile while being attacked and they are updating their centrifuges, which would make Stuxnet obsolete.

The more unsettling parts of Zetter’s book catalog security vulnerabilities in America’s public infrastructure, which could easily be victim to a Stuxnet-style attack, and consider the implications of the era Stuxnet heralded. For example, in 2001 hackers attacked California ISO, a nonprofit corporation that manages the transmission system for moving electricity throughout most of California. More recently, Zetter writes, in 2011 a security research team “penetrated the remote-access system for a Southern California water plant and was able to take control of equipment the facility used for adding chemicals to drinking water.”

The Obama administration has publicly announced that shoring up infrastructure security is a top priority. Zetter finds this ironic, because unleashing Stuxnet has opened the U.S. up to attacks using the same malware.

“When you launch a cyber weapon, you don’t just send the weapon to your enemies, you send the intellectual property that created it and the ability to launch the weapon back against you,” writes Zetter. “Marcus Ranum, one of the early innovators of the computer firewall, called Stuxnet ‘a stone thrown by people who live in a glass house.’”

More broadly, Stuxnet heralded an era of cyber warfare that could prove to be more destructive than the nuclear era. For Zetter there is also irony to the use of cyber weapons to combat nuclear weapons. She quotes Kennette Benedict, the executive director of the “Bulletin of the Atomic Scientists,” pointing out, “that the first acknowledged military use of cyber warfare is ostensibly to prevent the spread of nuclear weapons. A new age of mass destruction will begin in an effort to close a chapter from the first age of mass destruction.”

Zetter has similar fears.

“The U.S. lost the moral high ground from where it could tell other countries to not use digital weapons to resolve disputes,” Zetter said. “No one has been killed by a cyber attack, but I think it’s only a matter of time.”

Joshua Alvarez was a 2012 CISAC Honors Student.

Stuxnet Worm Attack on Iranian Nuclear Facilities

Michael Holloway

July 16, 2015

Submitted as coursework for PH241, Stanford University, Winter 2015

Stuxnet Background

Fig. 1: Diagram of the connection between the Siemens Step7 software and the programmable logic controllers of a nuclear reactor.

The Stuxnet worm first emerged during the summer of 2010. Stuxnet was a 500-kilobyte computer worm that infiltrated numerous computer systems. [1] The worm operated in three steps. First, it analyzed and targeted Windows networks and computer systems. Having infiltrated these machines, the worm began to replicate itself continually. [1] Next, the worm infiltrated the Windows-based Siemens Step7 software. This Siemens software system was and continues to be prevalent in industrial computing networks, such as nuclear enrichment facilities. Lastly, by compromising the Step7 software, the worm gained access to the industrial programmable logic controllers. [1] This connection is illustrated in Fig. 1. This final step gave the worm's creators access to crucial industrial information as well as the ability to operate various machinery at the individual industrial sites. [2] The replication process discussed above is what made the worm so prevalent. It was so invasive that if a USB drive was plugged into an affected system, the worm would infiltrate the drive and spread to any computing system that the drive was subsequently plugged into. [2]

Stuxnet Effect on Iran

Over fifteen Iranian facilities were attacked and infiltrated by the Stuxnet worm. It is believed that this attack was initiated by a random worker's USB drive. One of the affected industrial facilities was the Natanz nuclear facility. [1] The first signs that an issue existed in the nuclear facility's computer system appeared in 2010. Inspectors from the International Atomic Energy Agency visited the Natanz facility and observed that an unusual number of uranium-enriching centrifuges were breaking. [3] The cause of these failures was unknown at the time. Later in 2010, Iranian technicians contracted computer security specialists in Belarus to examine their computer systems. [3] This security firm eventually discovered multiple malicious files on the Iranian computer systems. It was subsequently revealed that these malicious files were the Stuxnet worm. [3] Although Iran has not released specific details regarding the effects of the attack, it is currently estimated that the Stuxnet worm destroyed 984 uranium-enriching centrifuges. By current estimates, this constituted a 30% decrease in enrichment efficiency. [1]

Stuxnet Worm Origin Speculation

Many in the media have speculated on who designed the Stuxnet worm and who was responsible for using it to attack Iran's nuclear facility. It is currently agreed that this worm was designed as a cyber weapon to attack Iran's nuclear development program. [1] However, the designers of the worm are still unknown. [4] Many experts suggest that the Stuxnet worm attack on the Iranian nuclear facilities was a joint operation between the United States and Israel. Edward Snowden, the NSA whistleblower, said in 2013 that this was the case. [4] Despite this speculation, there is still no concrete evidence as to who designed the original cyber weapon.

© Michael Holloway. The author grants permission to copy, distribute and display this work in unaltered form, with attribution to the author, for noncommercial purposes only. All other rights, including commercial rights, are reserved to the author.

[1] W. Broad, J. Markoff, and D. Sanger, "Israeli Test on Worm Called Crucial in Iran Nuclear Delay," New York Times, 15 Jan 11.

[2] D. Kushner, "The Real Story of Stuxnet," IEEE Spectrum 53, No. 3, 48 (2013).

[3] B. Kesler, "The Vulnerability of Nuclear Facilities to Cyber Attack," Strategic Insights 10, 15 (2011).

[4] K. Zetter, Countdown to Zero Day: Stuxnet and the Launch of the World's First Digital Weapon (Crown, 2014).

[5] J. Grayson, "Stuxnet and Iran's Nuclear Program," Physics 241, 7 Mar 11.

Schneier on Security

The Story Behind The Stuxnet Virus

- Bruce Schneier

- October 7, 2010

Stuxnet: A Digital Staff Ride

James Long | 03.08.19

At the risk of stating the obvious, the era of cyber war is here. Russia’s use of cyber capabilities in Ukraine to deny communications and locate Ukrainian military units for destruction by artillery demonstrates cyber’s battlefield utility. And it has become an assumed truth that in any future conflict, the United States’ adversaries will pursue asymmetric strategies to mitigate traditional US advantages in overwhelming firepower. As US military forces around the world expand their digital footprints, cyberattacks will grow in frequency and sophistication. As a result, commanders at all levels must take defensive measures or risk being exposed to what the 2018 Department of Defense Cyber Strategy described as “urgent and unacceptable risk to the Nation.”

The creation and growth of the US Army’s cyber branch speaks to institutional acceptance of cyber operations in modern warfare, but many leaders remain ignorant about the nature of cyberattacks and best practices to protect their formations. The Army’s preferred use of historical case studies to inform current tactics, techniques, and procedures runs into a problem here, given the dearth of such cyber case studies. One of the few is Stuxnet, the first recognized cyberattack to physically destroy key infrastructure—Iranian enrichment centrifuges in Natanz. So how should an analysis of it influence the way Army leaders think about cyber?

Stuxnet, for the purposes of this analysis, is a collective term for the malware’s multiple permutations from 2007 to 2009. Lockheed Martin has created a model it calls the “Cyber Kill Chain,” which offers a useful framework within which to analyze Stuxnet. The model deconstructs cyberattacks into kill chains, composed of a fluid sequence of reconnaissance, weaponization, delivery, exploitation, installation, command and control, and actions on objectives. This analysis allows US commanders to learn how hackers exploited Iranian vulnerabilities to compromise vital security infrastructure, allowing them to guard against similar threats in multi-domain environments.
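For readers who want to tag events or notes by phase, the seven phases named above can be written down as an ordered enumeration. This is only a minimal sketch: the class and function names are hypothetical, and the strictly linear ordering is a simplification of what the model itself describes as a fluid sequence.

```python
from enum import IntEnum
from typing import Optional


class KillChainPhase(IntEnum):
    """The seven phases in the order the article lists them."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


def next_phase(phase: KillChainPhase) -> Optional[KillChainPhase]:
    """Return the phase that follows, or None after the final phase."""
    if phase is KillChainPhase.ACTIONS_ON_OBJECTIVES:
        return None
    return KillChainPhase(phase + 1)


if __name__ == "__main__":
    for phase in KillChainPhase:
        print(phase.value, phase.name.replace("_", " ").title())
```

An ordered type makes it easy to ask a defender's question such as "what is the earliest phase at which this activity could have been detected?" by comparing phase values.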

The Stuxnet Kill Chain

Reconnaissance

Successful kill chains precisely exploit vulnerable centers of gravity within target networks to inflict disproportionate impact. Open-source intelligence, from press releases to social media, is combined with social network engineering to identify targets and reveal entry points.

Stuxnet’s developers likely targeted the Iranian nuclear program’s enrichment centrifuges because enrichment is capital-intensive and a prerequisite for weapons development. Enrichment centrifuges are bundled into cascades whose operations are precisely regulated by industrial control systems (ICSs) that monitor and control particle movements, presenting network-control and mechanical vulnerabilities. The omnipresence of ICSs in key infrastructure, and their role as the insurance against problems, underscores the potency of Stuxnet attacking this vulnerability.

Reconnaissance efforts were likely aided by Iranian press releases and photographs of key leader visits, which were intended to generate internal support for the program but also revealed technical equipment information. These media releases could be complemented by inspection reports from the International Atomic Energy Agency describing the program’s scale and the types of enrichment equipment employed.

These sources would have helped identify Iranian enrichment centrifuges as designs that came from A.Q. Khan’s P-1 centrifuge (Pakistan’s first generation), comparable to Libyan and North Korean models. The P-1’s cascades connect centrifuges spinning at hypersonic speeds with pipes separating uranium isotopes. Natanz was the heart of the enrichment program and contained over six thousand centrifuges organized into 164 cascades in a series of stages. The centrifuge count expanded to 7,052 by June 2009, including 4,092 enriching gas within eighteen cascades in unit A24 plus another twelve in A26. Iranian expansion in centrifuge volume was complemented by technological improvements as output from three thousand IR-1 systems was replaced with only twelve hundred IR-2 centrifuges, producing 839 kilograms of low-enriched uranium—enough to produce two nuclear weapons.
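Taking the paragraph's figures at face value, two implied ratios can be worked out directly. The sketch below assumes that the twelve hundred IR-2 machines matched the output of the three thousand IR-1 machines they replaced, and that the 839 kilograms split evenly across two weapons; both are readings of the sentence above, not independent data, and the function names are hypothetical.

```python
IR1_REPLACED = 3000      # IR-1 centrifuges, as stated in the article
IR2_REPLACING = 1200     # IR-2 centrifuges, as stated in the article
LEU_KG = 839             # low-enriched uranium, as stated in the article
WEAPONS = 2              # weapons that stockpile is said to suffice for


def per_machine_output_ratio(old_count: int, new_count: int) -> float:
    """Implied output of one new machine relative to one old machine."""
    return old_count / new_count


def leu_per_weapon(total_kg: float, weapons: int) -> float:
    """Implied low-enriched uranium per weapon, assuming an even split."""
    return total_kg / weapons


if __name__ == "__main__":
    print(per_machine_output_ratio(IR1_REPLACED, IR2_REPLACING))  # 2.5
    print(leu_per_weapon(LEU_KG, WEAPONS))                        # 419.5
```

On this reading, each IR-2 would do the work of about two and a half IR-1 machines, which is the sense in which the expansion was technological as well as numerical.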

Analysis of open-source intelligence could also have revealed the particular systems, manufactured by the German company Siemens, that Iran employed to operate centrifuges, creating the initial target and subsequent transition to researching exploitable vulnerabilities.

Weaponization

Once the Siemens control system had been chosen as the target, exploring centrifuge vulnerabilities was essential for turning the systems responsible for safe plant operations into weapons. Research into vulnerabilities of industrial control systems was undertaken by a Department of Homeland Security program with the Idaho National Laboratory, which had run the 2007 Aurora Project. That project discovered that malware could cause physical damage to equipment like the large turbines used in the power grid by forcing valve releases and other components out of synchronization. In 2008 the Idaho National Laboratory conducted extensive vulnerability testing of Siemens ICSs, finding vulnerabilities later exploited by Stuxnet.

These vulnerabilities could have been used to develop the malware through tests at the Israeli nuclear enrichment facility at Dimona. What emerged was a “dual warhead” virus that destroyed centrifuges by hyper-accelerating rotations and avoided detection through a “man in the middle” attack showing false readings to mechanical control stations. The 500-kilobyte malware had tailored engagement criteria for a three-phase attack: first it would replicate within Microsoft networks, then target specific Siemens software used in ICSs, before finally focusing on programmable logic controllers.

Introducing the dual warhead’s malicious payload into Natanz required overcoming the air gap that intentionally isolated the facility from the internet, a measure designed to prevent direct-attack strategies like spear phishing. Hackers gained indirect access by attacking third-party contractors that had weaker digital security and access to Natanz. The virus lay dormant in the contractors’ systems before automatically writing itself onto USB drives that were inserted into their computers and later carried into Natanz.

Multiple third-party hosts could have played this role, but Behpajooh was a prime candidate, as the company worked on ICSs, was located near Iran’s Nuclear Technology Center, and had been targeted by US federal investigators for illegal procurement activities.

Exploitation

After accessing Natanz through USB drives, Stuxnet circumvented existing malware-detection systems protecting enrichment infrastructure through zero-day exploits (exploits of previously undisclosed vulnerabilities) of Microsoft systems, including faking digital security certificates and spreading between computers connected to networked hardware like printers. This enabled rapid network exploitation.

Installation

After exploiting security gaps, Stuxnet infected computers by worming across networks and mass-replicating through self-installation, avoiding detection by remaining dormant until finding files indicating the host was associated with centrifuge control systems.

Command and Control

While malware often operates as an extension of an external attacker’s commands, the complex security breaches required to access Natanz forced Stuxnet to operate semi-independently to prevent network security from detecting signals reaching outside Natanz. Hackers mitigated some command limitations by writing the code to automatically update on contact with any Stuxnet code with a more recent date stamp.

Actions on Objectives

After accessing Siemens computers, Stuxnet gathered operational intelligence on network baselines before attacking centrifuges. When it did attack, it used the dual-purpose warhead to send “accurate” false reports to scientists monitoring the centrifuges while manipulating cascade rotational frequency until the centrifuges self-destructed. By 2009, Stuxnet had destroyed an estimated 984 centrifuges and decreased enrichment efficiency by 30 percent, setting the enrichment program back years and causing significant delays in establishing the Bushehr Nuclear Power Plant.

The virus was discovered after Iranian scientists contacted security specialists at Kaspersky Lab in Belarus to diagnose Microsoft operating system errors that were causing computers to continuously reboot. Stuxnet’s sophistication led experts to believe development required nation-state support, and Kaspersky warned that it would “lead to the creation of a new arms race in the world.” As security experts studied the code, they found the zero-day attacks, including false digital certificates that bypassed screening methods used by most anti-virus programs, which led cybersecurity experts like Germany’s Ralph Langner to fear that the virus could be reverse-engineered to target civilian infrastructure.

Lessons learned

1. Respect open-source intelligence

Social media provides troves of open-source intelligence, from Vice News tracking Russian troop movements to vulnerabilities in dating apps like Tinder that enable phone hacking. Hackers can use open-source intelligence to identify and target individuals whose compromise enables the breach of entire social networks, through strategies like using a target’s phone to transmit malware. These efforts can be complemented by social-media mining programs using artificial intelligence-enabled chat bots so sophisticated that a Stanford experiment found students could not differentiate them from actual teaching assistants.

The power of information operations fed by social-media exploitation was studied by NATO’s Strategic Communications Center of Excellence, using a “red-teamed” social-media campaign to interfere with an international security cooperation exercise. The team spent sixty dollars and created fake Facebook groups to investigate what they could find out about a military exercise just from open-source data, including details of the participants, and whether they could use this data to influence the participants’ behavior.

The effort identified 150 specific soldiers, found the location of several battalions, tracked troop movements, and compelled service members to engage in “undesirable” behavior, including leaving their positions against orders. Other experiments replicated these results to varying degrees, using bot personalities like “Robin Sage” to penetrate the social networks of the Joint Chiefs of Staff, the chief information officer of the National Security Agency, a senior congressional staffer, and others.

Risks posed by open-source intelligence are especially acute for grey-zone operations. Russia passed a law forbidding military personnel from posting photos or other sources of geolocation data on the internet after investigative journalists revealed “covert” military actions via social media posts. The United States has also experienced operational-security breaches, as when a twenty-year-old Australian student identified military base locations by looking at heat maps produced by a fitness-tracking app showing run routes scattered in global hot spots including Iraq and Syria. These challenges will grow as more devices get connected, requiring leaders to mitigate threats from poor data management or risk jeopardizing the safety of their soldiers.

2. There is no perfect security solution

While air gaps are the gold standard for protecting key systems, overreliance on perimeter defense systems can trigger underinvestment in internal security, resulting in defenseless core systems. Cybersecurity expert Leigh-Anne Galloway notes that the outcome is that it is “often only at the point of exfiltration that an organization will realize they have a compromise,” as illustrated by Stuxnet’s detection only after it caused Microsoft malfunctions.

Failure to aggressively enforce security protocols, such as barring external devices from the facility and disabling USB device reading, risks negating the benefit of air gaps in preventing third-party-enabled breaches. Even with effective perimeter security protocols, passive security measures must be complemented by redundant measures such as training, internal audits, and active detection through threat hunting and penetration testing.

3. Change the culture dominating cyber operations

Avoiding cyber discussions to maintain security risks exposing commanders to vulnerabilities through ignorance. While operational sources and methods must be protected, it may be time to challenge the norm of secrecy, arguably a vestige of military cyber’s emergence from organizations like the NSA. While high classification levels help avoid detection in espionage operations and computer network exploitation, they are less relevant in overt computer network attacks. This tension is described by retired Rear Admiral William Leigher, the former director of warfare integration for information dominance:

If you [are] collecting intelligence, it’s foreign espionage. You don’t want to get caught. The measure of success is: “collect intelligence and don’t get caught.” If you’re going to war, I would argue that the measure of performance is what we do has to have the characteristics of a legal weapon.

Educating military leaders can be accomplished by importing civilian sector case studies, creating modern staff rides of the digital battlefield. This approach has traditionally been used with historic battlefields to educate commanders on how terrain shapes decision making and battle outcomes. Indeed, the analysis in this article is a sort of “digital staff ride,” but the much larger number of private-sector case studies (like the Target hack, where vulnerable third-party vendors were exploited to access information from forty million credit cards) makes them worthy of study as well.

4. Protect your baselines

Many computer systems, like ICSs, predictably run the same applications over time, creating “baseline” operational patterns. These baselines identify software, files, and processes, and deviations can help detect an attack using methods like monitoring file integrity and endpoint detection (continuous monitoring of network events for analysis, which works even if malware uses unprecedented zero-day exploits like Stuxnet did). Monitoring can also detect malware reaching back to external command nodes for operational guidance; while this method would not have detected Stuxnet, it is capable of uncovering more common malware attacks. “Whitelisting” can further protect systems by explicitly listing all programs permitted to run on a given system and denying all others.
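The file-integrity and whitelisting ideas above can be illustrated with a minimal Python sketch. It is not drawn from any particular product; the function names (`snapshot`, `compare`, `is_allowed`) and the choice of SHA-256 digests are assumptions made for illustration. It records a digest for every file under a directory, reports deviations from that baseline, and permits a program only if its digest appears on an explicit list.

```python
import hashlib
from pathlib import Path


def hash_file(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root: Path) -> dict[str, str]:
    """Map each file under root (by relative path) to its digest."""
    return {
        p.relative_to(root).as_posix(): hash_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def compare(baseline: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Report files added, removed, or modified relative to the baseline."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "modified": sorted(
            name
            for name in baseline.keys() & current.keys()
            if baseline[name] != current[name]
        ),
    }


def is_allowed(path: Path, allowed_digests: set[str]) -> bool:
    """Allowlist check: permit a program only if its digest is listed."""
    return hash_file(path) in allowed_digests
```

In practice the baseline would be captured while the system is known to be clean and stored where an intruder cannot rewrite it; a baseline taken after compromise simply records the compromise as normal.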

Data analysis amplifies the impact of these strategies by integrating sources like physical access and network activity logs to find deviations like “superman” reports, where a user appears to hop between different geographic locations in moments, caused by the use of a virtual private network to avoid revealing the user’s actual location. These tools can also reveal non-logical access data that could be a sign of an intrusion or attack.
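A “superman” check of this kind can be sketched in a few lines of Python. The `Login` record, the `superman_events` function, and the 1,000 km/h threshold are all hypothetical choices for illustration, and the sketch assumes each login has already been resolved to a latitude and longitude. It computes the great-circle distance between a user’s consecutive logins and flags pairs whose implied travel speed is implausible.

```python
import math
from dataclasses import dataclass
from datetime import datetime

EARTH_RADIUS_KM = 6371.0
MAX_PLAUSIBLE_KMH = 1000.0  # assumed threshold, roughly airliner speed


@dataclass(frozen=True)
class Login:
    user: str
    when: datetime
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def superman_events(logins, max_kmh: float = MAX_PLAUSIBLE_KMH):
    """Return consecutive login pairs per user whose implied speed exceeds max_kmh."""
    flagged = []
    last_seen = {}
    for event in sorted(logins, key=lambda e: e.when):
        previous = last_seen.get(event.user)
        if previous is not None:
            hours = (event.when - previous.when).total_seconds() / 3600
            distance = haversine_km(previous.lat, previous.lon, event.lat, event.lon)
            # Zero elapsed time with nonzero distance counts as infinitely fast.
            if hours > 0:
                speed = distance / hours
            else:
                speed = math.inf if distance > 0 else 0.0
            if speed > max_kmh:
                flagged.append((previous, event))
        last_seen[event.user] = event
    return flagged
```

A flagged pair is a prompt for investigation, not proof of intrusion: as the paragraph above notes, legitimate VPN use produces the same pattern, so results are more useful when combined with physical access logs.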

5. Train effectively

Current Army digital-security training focuses on basic cyber awareness, and while training fundamental security practices like not opening files from unknown addresses and avoiding cloned social-media pages is important, it is not sufficient.

While hacker exploits constantly change, training leaders to respond to breaches and maintaining current incident response plans are vital to guarding against cyber threats. Commanders can develop these plans using resources like the National Institute of Standards and Technology’s Guide to Test, Training, and Exercise Programs for IT Plans and Capabilities. The guide, and industry best practices, recommend executing wargames and table-top exercises to stress decision making and internal systems to find capability gaps, especially at the lowest tactical levels, which are often neglected in the Army’s current wargames. These exercises should include quantifiable tests to stress and validate system response capabilities, training that delineates responsibilities and ensures accountability for network users and administrators, and regular execution of well-developed emergency response plans to ensure accuracy and feasibility to protect vital internal infrastructure.

6. Invest before it becomes necessary

Strong executive action is needed to close cyber capability gaps, and not confronting that challenge at the national level invites strategic risks. The failure to develop a collective cybersecurity strategy at the national level was identified as an issue as early as 2002, when former CIA Director James Woolsey wrote to President Bush calling for aggressive investment in cyber-defense to “avoid a national disaster.” The issue was revisited by cybersecurity experts in the aftermath of the Aurora Project, which estimated a cyberattack against the United States would cost “$700 billion. . . the equivalent of 40 to 50 large hurricanes striking all at once.”

Despite these risks, there has been comparatively little investment in enhancing security infrastructure, primarily because, as one analysis explains, “there is no obvious incentive for any utility operator to take any of the relatively simple costs necessary to defend against it.” This extends past traditional utilities and into core elements of the national economy, as underscored by the story of a Chinese company’s planting of tiny chips on motherboards that would enable infiltration of networks that used the servers. Those servers could be found in Department of Defense and Central Intelligence Agency data centers, along with over thirty major US companies. In the absence of strong executive leadership and direct regulation, these lapses within supply chains and internal communication systems will endure and present enormous risks to national security.

While the White House’s 2018 National Cyber Strategy is progress, given it is the “first fully articulated cyber strategy for the United States since 2003,” implementation and execution require continued leadership. The plan acknowledged omnipresent cyber threats from rogue and near-peer rivals and called for offensive strength through a “Cyber Deterrence Initiative,” intended “to create the structures of deterrence that will demonstrate to adversaries that the cost of their engaging in operations against us is higher than they want to bear,” according to National Security Advisor John Bolton. Digitally literate and effective government leadership is required to execute the plan’s four pillars: enhancing resilience, developing a vibrant digital economy and work force, aggressively pursuing cyber threats, and advancing America’s interests in cyberspace.

Army leaders must take ownership of cybersecurity within the Multi-Domain Operations concept, including education and strategy development, since expanding military networks increase attack probability. Studying Stuxnet, and understanding the digital terrain hackers exploit to attack key infrastructure, allows military leaders to safeguard against these threats.