Hypothesis Testing - Analysis of Variance (ANOVA)

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

This module will continue the discussion of hypothesis testing, where a specific statement or hypothesis is generated about a population parameter, and sample statistics are used to assess the likelihood that the hypothesis is true. The hypothesis is based on available information and the investigator's belief about the population parameters. The specific test considered here is called analysis of variance (ANOVA) and is a test of hypothesis that is appropriate to compare means of a continuous variable in two or more independent comparison groups. For example, in some clinical trials there are more than two comparison groups. In a clinical trial to evaluate a new medication for asthma, investigators might compare an experimental medication to a placebo and to a standard treatment (i.e., a medication currently being used). In an observational study such as the Framingham Heart Study, it might be of interest to compare mean blood pressure or mean cholesterol levels in persons who are underweight, normal weight, overweight and obese.

The technique to test for a difference in more than two independent means is an extension of the two independent samples procedure discussed previously which applies when there are exactly two independent comparison groups. The ANOVA technique applies when there are two or more than two independent groups. The ANOVA procedure is used to compare the means of the comparison groups and is conducted using the same five step approach used in the scenarios discussed in previous sections. Because there are more than two groups, however, the computation of the test statistic is more involved. The test statistic must take into account the sample sizes, sample means and sample standard deviations in each of the comparison groups.

If one is examining the means observed among, say, three groups, it might be tempting to perform three separate group-to-group comparisons. This approach is incorrect because each comparison fails to take into account the total data, and because it increases the likelihood of incorrectly concluding that there are statistically significant differences, since each comparison adds to the probability of a type I error. Analysis of variance avoids these problems by asking a more global question, i.e., whether there are significant differences among the groups, without addressing differences between any two groups in particular (although there are additional tests that can do this if the analysis of variance indicates that there are differences among the groups).
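The inflation of the type I error rate can be made concrete with a small sketch. It assumes, purely for illustration, that the pairwise tests are independent (in practice they share data, so the figure is approximate); the function name `familywise_error_rate` is hypothetical and not part of the module.

```python
from math import comb


def familywise_error_rate(k_groups: int, alpha: float = 0.05) -> float:
    """Chance of at least one type I error across all pairwise tests.

    Assumes the pairwise tests are independent; in practice they share
    data, so this is only an approximation.
    """
    n_comparisons = comb(k_groups, 2)  # number of distinct pairs of groups
    return 1.0 - (1.0 - alpha) ** n_comparisons


for k in (3, 4, 5):
    print(k, comb(k, 2), round(familywise_error_rate(k), 3))
# 3 groups -> 3 comparisons -> about 0.143
# 4 groups -> 6 comparisons -> about 0.265
# 5 groups -> 10 comparisons -> about 0.401
```

With three groups, each tested at α=0.05, the chance of at least one false positive is already close to 14% under this simplification.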

The fundamental strategy of ANOVA is to systematically examine variability within groups being compared and also examine variability among the groups being compared.

Learning Objectives

After completing this module, the student will be able to:

- Perform analysis of variance by hand

- Appropriately interpret results of analysis of variance tests

- Distinguish between one and two factor analysis of variance tests

- Identify the appropriate hypothesis testing procedure based on type of outcome variable and number of samples

The ANOVA Approach

Consider an example with four independent groups and a continuous outcome measure. The independent groups might be defined by a particular characteristic of the participants such as BMI (e.g., underweight, normal weight, overweight, obese) or by the investigator (e.g., randomizing participants to one of four competing treatments, call them A, B, C and D). Suppose that the outcome is systolic blood pressure, and we wish to test whether there is a statistically significant difference in mean systolic blood pressures among the four groups. The sample data are organized as follows:

The hypotheses of interest in an ANOVA are as follows:

- $H_0: \mu_1 = \mu_2 = \mu_3 = \dots = \mu_k$

- $H_1$: Means are not all equal.

where k = the number of independent comparison groups.

In this example, the hypotheses are:

- $H_0: \mu_1 = \mu_2 = \mu_3 = \mu_4$

- $H_1$: The means are not all equal.

The null hypothesis in ANOVA is always that there is no difference in means. The research or alternative hypothesis is always that the means are not all equal and is usually written in words rather than in mathematical symbols. The research hypothesis captures any difference in means and includes, for example, the situation where all four means are unequal, where one is different from the other three, where two are different, and so on. The alternative hypothesis, as shown above, captures all possible situations other than the equality of all means specified in the null hypothesis.

Test Statistic for ANOVA

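The test statistic for testing $H_0: \mu_1 = \mu_2 = \dots = \mu_k$ is:

$$F = \frac{MSB}{MSE} = \frac{\sum_j n_j (\bar{X}_j - \bar{X})^2 / (k-1)}{\sum\sum (X - \bar{X}_j)^2 / (N-k)}$$

where $n_j$ and $\bar{X}_j$ are the sample size and sample mean in group j, $\bar{X}$ is the overall mean, and N is the total number of observations. The critical value is found in a table of probability values for the F distribution with degrees of freedom $df_1 = k-1$ and $df_2 = N-k$. The table can be found in "Other Resources" on the left side of the pages.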

NOTE: The test statistic F assumes equal variability in the k populations (i.e., the population variances are equal, or $\sigma_1^2 = \sigma_2^2 = \dots = \sigma_k^2$). This means that the outcome is equally variable in each of the comparison populations. This assumption is the same as that assumed for appropriate use of the test statistic to test equality of two independent means. It is possible to assess the likelihood that the assumption of equal variances is true and the test can be conducted in most statistical computing packages. If the variability in the k comparison groups is not similar, then alternative techniques must be used.

The F statistic is computed by taking the ratio of what is called the "between treatment" variability to the "residual or error" variability. This is where the name of the procedure originates. In analysis of variance we are testing for a difference in means ($H_0$: means are all equal versus $H_1$: means are not all equal) by evaluating variability in the data. The numerator captures between treatment variability (i.e., differences among the sample means) and the denominator contains an estimate of the variability in the outcome. The test statistic is a measure that allows us to assess whether the differences among the sample means (numerator) are more than would be expected by chance if the null hypothesis is true. Recall that in the two independent samples test, the test statistic was computed by taking the ratio of the difference in sample means (numerator) to the variability in the outcome (estimated by $S_p$).

The decision rule for the F test in ANOVA is set up in a similar way to decision rules we established for t tests. The decision rule again depends on the level of significance and the degrees of freedom. The F statistic has two degrees of freedom. These are denoted $df_1$ and $df_2$, and called the numerator and denominator degrees of freedom, respectively. The degrees of freedom are defined as follows:

$df_1 = k-1$ and $df_2 = N-k$,

where k is the number of comparison groups and N is the total number of observations in the analysis. If the null hypothesis is true, the between treatment variation (numerator) will be similar in size to the residual or error variation (denominator) and the F statistic will be small (close to 1). If the null hypothesis is false, then the F statistic will tend to be large. The rejection region for the F test is always in the upper (right-hand) tail of the distribution as shown below.

Rejection Region for F Test with α=0.05, $df_1 = 3$ and $df_2 = 36$ (k=4, N=40)

For the scenario depicted here, the decision rule is: Reject $H_0$ if F > 2.87.
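The critical value in a decision rule like this one is normally read from an F table or from a statistics package. As a rough sketch of where the number comes from, the code below approximates the upper-tail probability of the F distribution by numerical integration and then searches for the cutoff by bisection. It uses only the Python standard library; the function names `f_upper_tail` and `f_critical` and the step counts are illustrative choices, not part of the module.

```python
from math import exp, lgamma, log


def f_upper_tail(f_value: float, d1: int, d2: int, n_steps: int = 4000) -> float:
    """Approximate P(F > f_value) for an F(d1, d2) variable.

    Uses the identity P(F > f) = I_y(d2/2, d1/2) with y = d2 / (d2 + d1*f),
    where I is the regularized incomplete beta function, evaluated here
    with a simple midpoint rule.
    """
    a, b = d2 / 2, d1 / 2
    y = d2 / (d2 + d1 * f_value)
    log_beta = lgamma(a) + lgamma(b) - lgamma(a + b)
    h = y / n_steps
    total = 0.0
    for i in range(n_steps):
        t = (i + 0.5) * h  # midpoint of the i-th slice of [0, y]
        total += exp((a - 1) * log(t) + (b - 1) * log(1 - t) - log_beta)
    return total * h


def f_critical(alpha: float, d1: int, d2: int) -> float:
    """Find the value c with P(F > c) = alpha by bisection."""
    lo, hi = 0.0, 1000.0
    for _ in range(40):
        mid = (lo + hi) / 2
        if f_upper_tail(mid, d1, d2) > alpha:
            lo = mid  # tail still too heavy, move the cutoff right
        else:
            hi = mid
    return (lo + hi) / 2


# Scenario from the text: alpha = 0.05, df1 = 3, df2 = 36.
print(round(f_critical(0.05, 3, 36), 2))  # about 2.87
```

The same function can be called with other degrees of freedom, for example `f_critical(0.05, 3, 16)` for a study with k=4 groups and N=20 observations.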

The ANOVA Procedure

We will next illustrate the ANOVA procedure using the five step approach. Because the computation of the test statistic is involved, the computations are often organized in an ANOVA table. The ANOVA table breaks down the components of variation in the data into variation between treatments and error or residual variation. Statistical computing packages also produce ANOVA tables as part of their standard output for ANOVA, and the ANOVA table is set up as follows:

where

- X = individual observation,

- k = the number of treatments or independent comparison groups, and

- N = total number of observations or total sample size.

The ANOVA table above is organized as follows.

- The first column is entitled "Source of Variation" and delineates the between treatment and error or residual variation. The total variation is the sum of the between treatment and error variation.

- The second column is entitled "Sums of Squares (SS)". The between treatment sums of squares is

$$SSB = \sum_j n_j (\bar{X}_j - \bar{X})^2$$

and is computed by summing the squared differences between each treatment (or group) mean and the overall mean. The squared differences are weighted by the sample sizes per group ($n_j$). The error sums of squares is:

$$SSE = \sum\sum (X - \bar{X}_j)^2$$

and is computed by summing the squared differences between each observation and its group mean (i.e., the squared differences between each observation in group 1 and the group 1 mean, the squared differences between each observation in group 2 and the group 2 mean, and so on). The double summation ($\sum\sum$) indicates summation of the squared differences within each treatment and then summation of these totals across treatments to produce a single value. (This will be illustrated in the following examples). The total sums of squares is:

$$SST = \sum\sum (X - \bar{X})^2$$

and is computed by summing the squared differences between each observation and the overall sample mean. In an ANOVA, data are organized by comparison or treatment groups. If all of the data were pooled into a single sample, SST would reflect the numerator of the sample variance computed on the pooled or total sample. SST does not figure into the F statistic directly. However, SST = SSB + SSE, thus if two sums of squares are known, the third can be computed from the other two.

- The third column contains degrees of freedom. The between treatment degrees of freedom is $df_1 = k-1$. The error degrees of freedom is $df_2 = N-k$. The total degrees of freedom is N-1 (and it is also true that (k-1) + (N-k) = N-1).

- The fourth column contains "Mean Squares (MS)" which are computed by dividing sums of squares (SS) by degrees of freedom (df), row by row. Specifically, MSB=SSB/(k-1) and MSE=SSE/(N-k). Dividing SST/(N-1) produces the variance of the total sample. The F statistic is in the rightmost column of the ANOVA table and is computed by taking the ratio of MSB/MSE.
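The columns described above can be sketched in a few lines of code. The example below is a minimal illustration that uses small hypothetical data (three made-up groups of unequal size, not taken from any study in this module) to compute SSB, SSE and SST, confirm that SST = SSB + SSE, and print the rows of an ANOVA table. The function name `sums_of_squares` is an assumption made for this sketch.

```python
def sums_of_squares(groups):
    """Return (SSB, SSE, SST) for a list of groups of observations."""
    all_obs = [x for g in groups for x in g]
    grand_mean = sum(all_obs) / len(all_obs)
    ssb = 0.0
    sse = 0.0
    for g in groups:
        group_mean = sum(g) / len(g)
        # Between: squared distance of the group mean from the overall
        # mean, weighted by the group size n_j.
        ssb += len(g) * (group_mean - grand_mean) ** 2
        # Error: squared distance of each observation from its group mean.
        sse += sum((x - group_mean) ** 2 for x in g)
    sst = sum((x - grand_mean) ** 2 for x in all_obs)
    return ssb, sse, sst


# Hypothetical toy data, for illustration only.
groups = [[4, 5, 6], [7, 9], [1, 2, 3, 2]]
ssb, sse, sst = sums_of_squares(groups)

k = len(groups)
n_total = sum(len(g) for g in groups)
msb = ssb / (k - 1)
mse = sse / (n_total - k)

print(f"{'Source':<10}{'SS':>8}{'df':>5}{'MS':>8}{'F':>8}")
print(f"{'Between':<10}{ssb:>8.2f}{k - 1:>5}{msb:>8.2f}{msb / mse:>8.2f}")
print(f"{'Error':<10}{sse:>8.2f}{n_total - k:>5}{mse:>8.2f}")
print(f"{'Total':<10}{sst:>8.2f}{n_total - 1:>5}")
```

For these toy numbers the sums of squares come out to SSB = 50, SSE = 6 and SST = 56, so the partition SST = SSB + SSE can be checked by eye.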

A clinical trial is run to compare weight loss programs and participants are randomly assigned to one of the comparison programs and are counseled on the details of the assigned program. Participants follow the assigned program for 8 weeks. The outcome of interest is weight loss, defined as the difference in weight measured at the start of the study (baseline) and weight measured at the end of the study (8 weeks), measured in pounds.

Three popular weight loss programs are considered. The first is a low calorie diet. The second is a low fat diet and the third is a low carbohydrate diet. For comparison purposes, a fourth group is considered as a control group. Participants in the fourth group are told that they are participating in a study of healthy behaviors with weight loss only one component of interest. The control group is included here to assess the placebo effect (i.e., weight loss due to simply participating in the study). A total of twenty patients agree to participate in the study and are randomly assigned to one of the four diet groups. Weights are measured at baseline and patients are counseled on the proper implementation of the assigned diet (with the exception of the control group). After 8 weeks, each patient's weight is again measured and the difference in weights is computed by subtracting the 8 week weight from the baseline weight. Positive differences indicate weight losses and negative differences indicate weight gains. For interpretation purposes, we refer to the differences in weights as weight losses and the observed weight losses are shown below.

Is there a statistically significant difference in the mean weight loss among the four diets? We will run the ANOVA using the five-step approach.

- Step 1. Set up hypotheses and determine level of significance

$H_0: \mu_1 = \mu_2 = \mu_3 = \mu_4$

$H_1$: Means are not all equal

α = 0.05

- Step 2. Select the appropriate test statistic.

The test statistic is the F statistic for ANOVA, F=MSB/MSE.

- Step 3. Set up decision rule.

The appropriate critical value can be found in a table of probabilities for the F distribution (see "Other Resources"). In order to determine the critical value of F we need degrees of freedom, $df_1 = k-1$ and $df_2 = N-k$. In this example, $df_1 = k-1 = 4-1 = 3$ and $df_2 = N-k = 20-4 = 16$. The critical value is 3.24 and the decision rule is as follows: Reject $H_0$ if F > 3.24.

- Step 4. Compute the test statistic.

To organize our computations we complete the ANOVA table. In order to compute the sums of squares we must first compute the sample means for each group and the overall mean based on the total sample.

We can now compute

$$SSB = \sum_j n_j (\bar{X}_j - \bar{X})^2$$

So, in this case:

Next we compute,

$$SSE = \sum\sum (X - \bar{X}_j)^2$$

SSE requires computing the squared differences between each observation and its group mean. We will compute SSE in parts. For the participants in the low calorie diet:

For the participants in the low fat diet:

For the participants in the low carbohydrate diet:

For the participants in the control group:

We can now construct the ANOVA table.

- Step 5. Conclusion.

We reject $H_0$ because 8.43 > 3.24. We have statistically significant evidence at α=0.05 to show that there is a difference in mean weight loss among the four diets.

ANOVA is a test that provides a global assessment of a statistical difference in more than two independent means. In this example, we find that there is a statistically significant difference in mean weight loss among the four diets considered. In addition to reporting the results of the statistical test of hypothesis (i.e., that there is a statistically significant difference in mean weight losses at α=0.05), investigators should also report the observed sample means to facilitate interpretation of the results. In this example, participants in the low calorie diet lost an average of 6.6 pounds over 8 weeks, as compared to 3.0 and 3.4 pounds in the low fat and low carbohydrate groups, respectively. Participants in the control group lost an average of 1.2 pounds which could be called the placebo effect because these participants were not participating in an active arm of the trial specifically targeted for weight loss. Are the observed weight losses clinically meaningful?
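The group means reported above are enough to retrace the numerator of the F statistic. The sketch below assumes that the 20 participants were split evenly (5 per group), which the text does not state explicitly here, and works from the rounded means, so its results may differ slightly from a table computed on the raw data. The variable names are illustrative.

```python
# Group means as reported in the text (pounds lost over 8 weeks).
group_means = {
    "low calorie": 6.6,
    "low fat": 3.0,
    "low carbohydrate": 3.4,
    "control": 1.2,
}
n_per_group = 5  # ASSUMPTION: 20 participants split evenly over 4 groups

k = len(group_means)
# With equal group sizes the overall mean is the mean of the group means.
overall_mean = sum(group_means.values()) / k

# Between-treatment sum of squares and mean square.
ssb = sum(n_per_group * (m - overall_mean) ** 2 for m in group_means.values())
msb = ssb / (k - 1)

# The text reports F = 8.43, which implies the error mean square.
reported_f = 8.43
implied_mse = msb / reported_f

print(round(overall_mean, 2), round(ssb, 2), round(msb, 2), round(implied_mse, 2))
```

Under these assumptions the overall mean is about 3.55 pounds, SSB is about 75.75, MSB is about 25.25, and the reported F of 8.43 corresponds to an error mean square of roughly 3.0.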

Another ANOVA Example

Calcium is an essential mineral that regulates the heart and is important for blood clotting and for building healthy bones. The National Osteoporosis Foundation recommends a daily calcium intake of 1000-1200 mg/day for adult men and women. While calcium is contained in some foods, most adults do not get enough calcium in their diets and take supplements. Unfortunately some of the supplements have side effects such as gastric distress, making them difficult for some patients to take on a regular basis.

A study is designed to test whether there is a difference in mean daily calcium intake in adults with normal bone density, adults with osteopenia (a low bone density which may lead to osteoporosis) and adults with osteoporosis. Adults 60 years of age with normal bone density, osteopenia and osteoporosis are selected at random from hospital records and invited to participate in the study. Each participant's daily calcium intake is measured based on reported food intake and supplements. The data are shown below.

Is there a statistically significant difference in mean calcium intake in patients with normal bone density as compared to patients with osteopenia and osteoporosis? We will run the ANOVA using the five-step approach.

$H_0: \mu_1 = \mu_2 = \mu_3$

$H_1$: Means are not all equal

α = 0.05

In order to determine the critical value of F we need degrees of freedom, $df_1 = k-1$ and $df_2 = N-k$. In this example, $df_1 = k-1 = 3-1 = 2$ and $df_2 = N-k = 18-3 = 15$. The critical value is 3.68 and the decision rule is as follows: Reject $H_0$ if F > 3.68.

To organize our computations we will complete the ANOVA table. In order to compute the sums of squares we must first compute the sample means for each group and the overall mean.

If we pool all N=18 observations, the overall mean is 817.8.

We can now compute:

$$SSB = \sum_j n_j (\bar{X}_j - \bar{X})^2$$

Substituting:

SSE requires computing the squared differences between each observation and its group mean. We will compute SSE in parts. For the participants with normal bone density:

For participants with osteopenia:

For participants with osteoporosis:

We do not reject $H_0$ because 1.395 < 3.68. We do not have statistically significant evidence at α=0.05 to show that there is a difference in mean calcium intake in patients with normal bone density as compared to osteopenia and osteoporosis. Are the differences in mean calcium intake clinically meaningful? If so, what might account for the lack of statistical significance?
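When there are exactly three groups the numerator degrees of freedom equal 2, and in that special case the upper-tail probability of the F distribution has a simple closed form, $P(F > f) = (1 + 2f/df_2)^{-df_2/2}$. The sketch below uses that identity to recover both the critical value and an approximate p-value for this example. The function names are hypothetical, and the shortcut does not apply to other numerator degrees of freedom.

```python
def f_upper_tail_df1_2(f_value: float, df2: int) -> float:
    """P(F > f_value) when the numerator degrees of freedom equal 2."""
    return (1 + 2 * f_value / df2) ** (-df2 / 2)


def f_critical_df1_2(alpha: float, df2: int) -> float:
    """Cutoff c with P(F > c) = alpha, obtained by inverting the tail formula."""
    return (df2 / 2) * (alpha ** (-2 / df2) - 1)


df2 = 18 - 3       # N - k for the calcium example
f_observed = 1.395  # test statistic reported in the text

critical = f_critical_df1_2(0.05, df2)
p_value = f_upper_tail_df1_2(f_observed, df2)

print(round(critical, 2))  # about 3.68, matching the decision rule
print(round(p_value, 3))   # roughly 0.28, well above 0.05
```

The p-value of roughly 0.28 leads to the same decision as comparing 1.395 to the critical value: the null hypothesis is not rejected.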

One-Way ANOVA in R

The video below by Mike Marin demonstrates how to perform analysis of variance in R. It also covers some other statistical issues, but the initial part of the video will be useful to you.

Two-Factor ANOVA

The ANOVA tests described above are called one-factor ANOVAs. There is one treatment or grouping factor with k > 2 levels and we wish to compare the means across the different categories of this factor. The factor might represent different diets, different classifications of risk for disease (e.g., osteoporosis), different medical treatments, different age groups, or different racial/ethnic groups. There are situations where it may be of interest to compare means of a continuous outcome across two or more factors. For example, suppose a clinical trial is designed to compare five different treatments for joint pain in patients with osteoarthritis. Investigators might also hypothesize that there are differences in the outcome by sex. This is an example of a two-factor ANOVA where the factors are treatment (with 5 levels) and sex (with 2 levels). In the two-factor ANOVA, investigators can assess whether there are differences in means due to the treatment, by sex or whether there is a difference in outcomes by the combination or interaction of treatment and sex. Higher order ANOVAs are conducted in the same way as one-factor ANOVAs presented here and the computations are again organized in ANOVA tables with more rows to distinguish the different sources of variation (e.g., between treatments, between men and women). The following example illustrates the approach.

Consider a version of the clinical trial outlined above in which three competing treatments for joint pain are compared in terms of their mean time to pain relief in patients with osteoarthritis. Because investigators hypothesize that there may be a difference in time to pain relief in men versus women, they randomly assign 15 participating men to one of the three competing treatments and randomly assign 15 participating women to one of the three competing treatments (i.e., stratified randomization). Participating men and women do not know to which treatment they are assigned. They are instructed to take the assigned medication when they experience joint pain and to record the time, in minutes, until the pain subsides. The data (times to pain relief) are shown below and are organized by the assigned treatment and sex of the participant.

Table of Time to Pain Relief by Treatment and Sex

The analysis in two-factor ANOVA is similar to that illustrated above for one-factor ANOVA. The computations are again organized in an ANOVA table, but the total variation is partitioned into that due to the main effect of treatment, the main effect of sex and the interaction effect. The results of the analysis are shown below (and were generated with a statistical computing package - here we focus on interpretation).

ANOVA Table for Two-Factor ANOVA

There are 4 statistical tests in the ANOVA table above. The first test is an overall test to assess whether there is a difference among the 6 cell means (cells are defined by treatment and sex). The F statistic is 20.7 and is highly statistically significant with p=0.0001. When the overall test is significant, focus then turns to the factors that may be driving the significance (in this example, treatment, sex or the interaction between the two). The next three statistical tests assess the significance of the main effect of treatment, the main effect of sex and the interaction effect. In this example, there is a highly significant main effect of treatment (p=0.0001) and a highly significant main effect of sex (p=0.0001). The interaction between the two does not reach statistical significance (p=0.91). The table below contains the mean times to pain relief in each of the treatments for men and women (Note that each sample mean is computed on the 5 observations measured under that experimental condition).

Mean Time to Pain Relief by Treatment and Gender

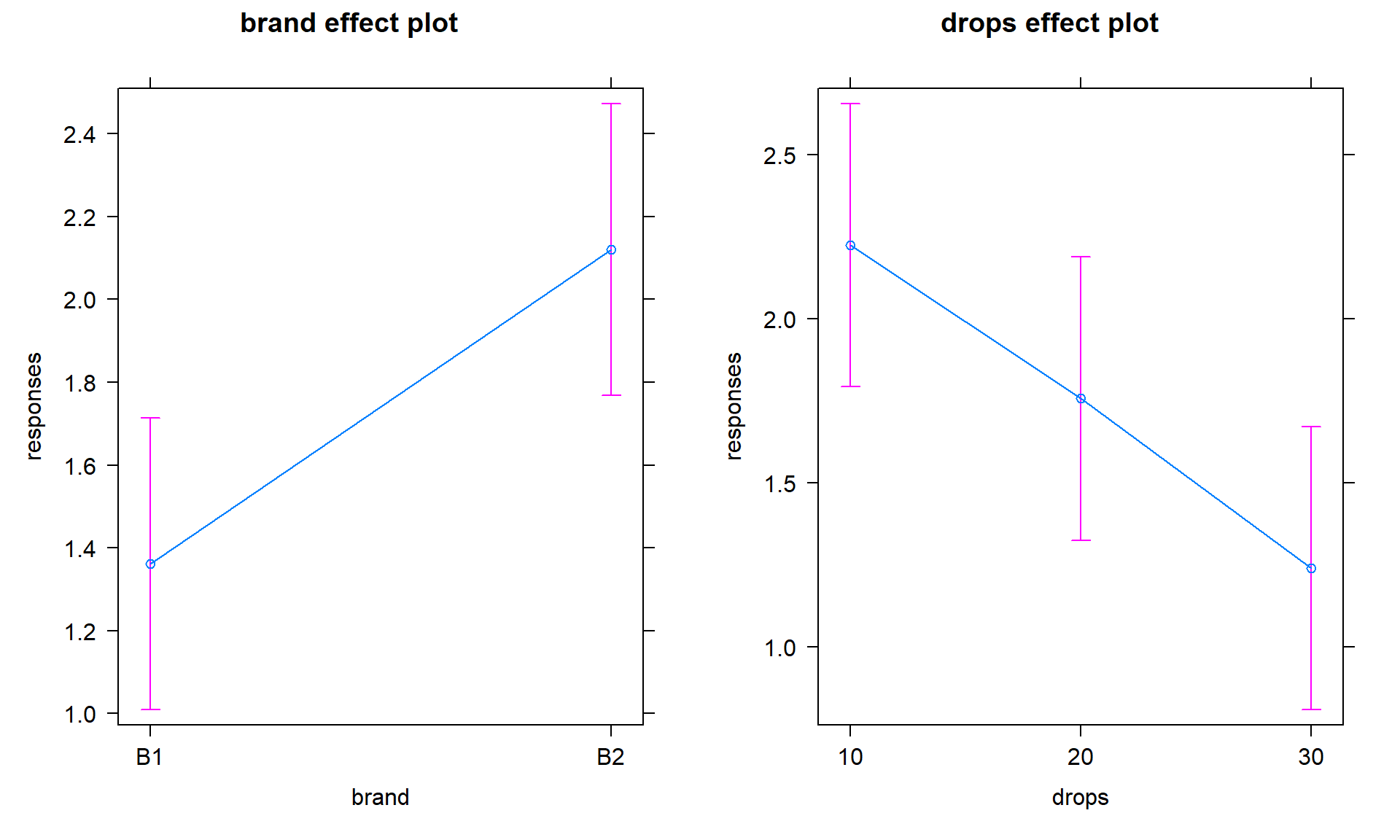

Treatment A appears to be the most efficacious treatment for both men and women. The mean times to relief are lower in Treatment A for both men and women and highest in Treatment C for both men and women. Across all treatments, women report longer times to pain relief (See below).

Notice that there is the same pattern of time to pain relief across treatments in both men and women (treatment effect). There is also a sex effect - specifically, time to pain relief is longer in women in every treatment.
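The four tests described for this design each have their own degrees of freedom. As a minimal sketch, the function below partitions the degrees of freedom for a balanced two-factor design using the standard formulas; the values plugged in (3 treatments, 2 sexes, 5 observations per cell) come from the study description, while the function name `two_factor_df` is an assumption made for this illustration.

```python
def two_factor_df(levels_a: int, levels_b: int, n_per_cell: int) -> dict:
    """Degrees of freedom for a balanced two-factor ANOVA with interaction."""
    n_total = levels_a * levels_b * n_per_cell
    df = {
        "factor A": levels_a - 1,
        "factor B": levels_b - 1,
        "interaction": (levels_a - 1) * (levels_b - 1),
        "error": n_total - levels_a * levels_b,
        "total": n_total - 1,
    }
    # The overall (model) test compares all cell means at once.
    df["model"] = df["factor A"] + df["factor B"] + df["interaction"]
    return df


# Treatment has 3 levels, sex has 2 levels, 5 participants per cell.
for source, value in two_factor_df(3, 2, 5).items():
    print(f"{source:<12}{value:>4}")
```

For this trial the overall test has 5 numerator degrees of freedom (6 cell means minus 1), split into 2 for treatment, 1 for sex and 2 for the interaction, with 24 error degrees of freedom.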

Suppose that the same clinical trial is replicated in a second clinical site and the following data are observed.

Table - Time to Pain Relief by Treatment and Sex - Clinical Site 2

The ANOVA table for the data measured in clinical site 2 is shown below.

Table - Summary of Two-Factor ANOVA - Clinical Site 2

Notice that the overall test is significant (F=19.4, p=0.0001), there is a significant treatment effect, sex effect and a highly significant interaction effect. The table below contains the mean times to relief in each of the treatments for men and women.

Table - Mean Time to Pain Relief by Treatment and Gender - Clinical Site 2

Notice that now the differences in mean time to pain relief among the treatments depend on sex. Among men, the mean time to pain relief is highest in Treatment A and lowest in Treatment C. Among women, the reverse is true. This is an interaction effect (see below).

Notice above that the treatment effect varies depending on sex. Thus, we cannot summarize an overall treatment effect (in men, treatment C is best, in women, treatment A is best).
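One informal way to see an interaction in a table of cell means is to check whether the gap between women and men stays the same across treatments. The sketch below does this for two sets of cell means that are entirely hypothetical (they are not the study data, which are not reproduced here): one with parallel patterns and one where the ordering reverses by sex. It is a descriptive check only, not a replacement for the F test of the interaction term.

```python
def sex_gaps(cell_means: dict) -> dict:
    """Women-minus-men gap in mean time to relief for each treatment."""
    return {t: women - men for t, (men, women) in cell_means.items()}


def gap_spread(cell_means: dict) -> float:
    """Range of the gaps; 0 means the profiles are perfectly parallel."""
    gaps = sex_gaps(cell_means).values()
    return max(gaps) - min(gaps)


# HYPOTHETICAL cell means (minutes), written as (men, women).
parallel = {"A": (10, 20), "B": (15, 25), "C": (20, 30)}
crossing = {"A": (30, 10), "B": (20, 20), "C": (10, 30)}

print(sex_gaps(parallel), gap_spread(parallel))
print(sex_gaps(crossing), gap_spread(crossing))
```

In the parallel case the gap is the same in every treatment, which is what main effects without interaction look like. In the crossing case the gap changes sign across treatments, the pattern described above for the second clinical site.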

When interaction effects are present, some investigators do not examine main effects (i.e., do not test for treatment effect because the effect of treatment depends on sex). This issue is complex and is discussed in more detail in a later module.

ANOVA (Analysis of variance) – Formulas, Types, and Examples

Analysis of Variance (ANOVA)

Analysis of Variance (ANOVA) is a statistical method used to test differences between two or more means. It is similar to the t-test, but the t-test is generally used for comparing two means, while ANOVA is used when you have more than two means to compare.

ANOVA is based on comparing the variance (or variation) between the data samples to the variation within each particular sample. If the between-group variance is high and the within-group variance is low, this provides evidence that the means of the groups are significantly different.

ANOVA Terminology

When discussing ANOVA, there are several key terms to understand:

- Factor : This is another term for the independent variable in your analysis. In a one-way ANOVA, there is one factor, while in a two-way ANOVA, there are two factors.

- Levels : These are the different groups or categories within a factor. For example, if the factor is ‘diet’ the levels might be ‘low fat’, ‘medium fat’, and ‘high fat’.

- Response Variable : This is the dependent variable or the outcome that you are measuring.

- Within-group Variance : This is the variance or spread of scores within each level of your factor.

- Between-group Variance : This is the variance or spread of scores between the different levels of your factor.

- Grand Mean : This is the overall mean when you consider all the data together, regardless of the factor level.

- Treatment Sums of Squares (SS) : This represents the between-group variability. It is the sum of the squared differences between the group means and the grand mean, with each squared difference weighted by the number of observations in that group.

- Error Sums of Squares (SS) : This represents the within-group variability. It’s the sum of the squared differences between each observation and its group mean.

- Total Sums of Squares (SS) : This is the sum of the Treatment SS and the Error SS. It represents the total variability in the data.

- Degrees of Freedom (df) : The degrees of freedom are the number of values that have the freedom to vary when computing a statistic. For example, if you have ‘n’ observations in one group, then the degrees of freedom for that group is ‘n-1’.

- Mean Square (MS) : Mean Square is the average squared deviation and is calculated by dividing the sum of squares by the corresponding degrees of freedom.

- F-Ratio : This is the test statistic for ANOVAs, and it’s the ratio of the between-group variance to the within-group variance. If the between-group variance is significantly larger than the within-group variance, the F-ratio will be large and likely significant.

- Null Hypothesis (H0) : This is the hypothesis that there is no difference between the group means.

- Alternative Hypothesis (H1) : This is the hypothesis that there is a difference between at least two of the group means.

- p-value : This is the probability of obtaining a test statistic at least as extreme as the one that was actually observed, assuming that the null hypothesis is true. If the p-value is less than the significance level (usually 0.05), then the null hypothesis is rejected in favor of the alternative hypothesis.

- Post-hoc tests : These are follow-up tests conducted after an ANOVA when the null hypothesis is rejected, to determine which specific groups’ means (levels) are different from each other. Examples include Tukey’s HSD, Scheffe, Bonferroni, among others.

Types of ANOVA

Types of ANOVA are as follows:

One-way (or one-factor) ANOVA

This is the simplest type of ANOVA, which involves one independent variable . For example, comparing the effect of different types of diet (vegetarian, pescatarian, omnivore) on cholesterol level.

Two-way (or two-factor) ANOVA

This involves two independent variables. This allows for testing the effect of each independent variable on the dependent variable , as well as testing if there’s an interaction effect between the independent variables on the dependent variable.

Repeated Measures ANOVA

This is used when the same subjects are measured multiple times under different conditions, or at different points in time. This type of ANOVA is often used in longitudinal studies.

Mixed Design ANOVA

This combines features of both between-subjects (independent groups) and within-subjects (repeated measures) designs. In this model, one factor is a between-subjects variable and the other is a within-subjects variable.

Multivariate Analysis of Variance (MANOVA)

This is used when there are two or more dependent variables. It tests whether changes in the independent variable(s) correspond to changes in the dependent variables.

Analysis of Covariance (ANCOVA)

This combines ANOVA and regression. ANCOVA tests whether certain factors have an effect on the outcome variable after removing the variance for which quantitative covariates (interval variables) account. This allows the comparison of one variable outcome between groups, while statistically controlling for the effect of other continuous variables that are not of primary interest.

Nested ANOVA

This model is used when the groups can be clustered into categories. For example, if you were comparing students’ performance from different classrooms and different schools, “classroom” could be nested within “school.”

ANOVA Formulas

ANOVA Formulas are as follows:

Sum of Squares Total (SST)

This represents the total variability in the data. It is the sum of the squared differences between each observation and the overall mean.

$$SST = \sum_i (y_i - \bar{y})^2$$

- $y_i$ represents each individual data point

- $\bar{y}$ represents the grand mean (mean of all observations)

Sum of Squares Within (SSW)

This represents the variability within each group or factor level. It is the sum of the squared differences between each observation and its group mean.

$$SSW = \sum_i \sum_j (y_{ij} - \bar{y}_i)^2$$

- $y_{ij}$ represents each individual data point within a group

- $\bar{y}_i$ represents the mean of the ith group

Sum of Squares Between (SSB)

This represents the variability between the groups. It is the sum of the squared differences between the group means and the grand mean, multiplied by the number of observations in each group.

$$SSB = \sum_i n_i (\bar{y}_i - \bar{y})^2$$

- $n_i$ represents the number of observations in each group

- $\bar{y}$ represents the grand mean

Degrees of Freedom

The degrees of freedom are the number of values that have the freedom to vary when calculating a statistic.

For within groups (dfW): $df_W = N - k$

For between groups (dfB): $df_B = k - 1$

For total (dfT): $df_T = N - 1$

- N represents the total number of observations

- k represents the number of groups

Mean Squares

Mean squares are the sum of squares divided by the respective degrees of freedom.

Mean Squares Between (MSB): $MSB = SSB / df_B$

Mean Squares Within (MSW): $MSW = SSW / df_W$

F-Statistic

The F-statistic is used to test whether the variability between the groups is significantly greater than the variability within the groups.

$$F = \frac{MSB}{MSW}$$

If the F-statistic is significantly higher than what would be expected by chance, we reject the null hypothesis that all group means are equal.

Examples of ANOVA

Example 1:

Suppose a psychologist wants to test the effect of three different types of exercise (yoga, aerobic exercise, and weight training) on stress reduction. The dependent variable is the stress level, which can be measured using a stress rating scale.

Here are hypothetical stress ratings for a group of participants after they followed each of the exercise regimes for a period:

- Yoga: [3, 2, 2, 1, 2, 2, 3, 2, 1, 2]

- Aerobic Exercise: [2, 3, 3, 2, 3, 2, 3, 3, 2, 2]

- Weight Training: [4, 4, 5, 5, 4, 5, 4, 5, 4, 5]

The psychologist wants to determine if there is a statistically significant difference in stress levels between these different types of exercise.

To conduct the ANOVA:

1. State the hypotheses:

- Null Hypothesis (H0): There is no difference in mean stress levels between the three types of exercise.

- Alternative Hypothesis (H1): There is a difference in mean stress levels between at least two of the types of exercise.

2. Calculate the ANOVA statistics:

- Compute the Sum of Squares Between (SSB), Sum of Squares Within (SSW), and Sum of Squares Total (SST).

- Calculate the Degrees of Freedom (dfB, dfW, dfT).

- Calculate the Mean Squares Between (MSB) and Mean Squares Within (MSW).

- Compute the F-statistic (F = MSB / MSW).

3. Check the p-value associated with the calculated F-statistic.

- If the p-value is less than the chosen significance level (often 0.05), then we reject the null hypothesis in favor of the alternative hypothesis. This suggests there is a statistically significant difference in mean stress levels between the three exercise types.

4. Post-hoc tests

- If we reject the null hypothesis, we conduct a post-hoc test to determine which specific groups’ means (exercise types) are different from each other.
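The steps above can be sketched directly on the hypothetical stress ratings listed in this example. The code computes SSB, SSW, the degrees of freedom and the F-statistic with the standard library only. Because there are three groups, the numerator degrees of freedom equal 2, so the p-value can be obtained from the closed-form tail probability that holds in that special case; for other designs a statistics package would normally be used instead. The function name `one_way_anova` is an assumption made for this sketch.

```python
def one_way_anova(groups):
    """Return (SSB, SSW, dfB, dfW, F) for a list of groups."""
    all_obs = [x for g in groups for x in g]
    n_total, k = len(all_obs), len(groups)
    grand_mean = sum(all_obs) / n_total
    means = [sum(g) / len(g) for g in groups]
    ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ssw = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_b, df_w = k - 1, n_total - k
    f_stat = (ssb / df_b) / (ssw / df_w)
    return ssb, ssw, df_b, df_w, f_stat


# Hypothetical stress ratings from the example.
yoga = [3, 2, 2, 1, 2, 2, 3, 2, 1, 2]
aerobic = [2, 3, 3, 2, 3, 2, 3, 3, 2, 2]
weights = [4, 4, 5, 5, 4, 5, 4, 5, 4, 5]

ssb, ssw, df_b, df_w, f_stat = one_way_anova([yoga, aerobic, weights])

# Closed-form upper tail, valid only because df_b == 2 here.
p_value = (1 + 2 * f_stat / df_w) ** (-df_w / 2)

print(ssb, ssw, df_b, df_w)
print(round(f_stat, 2), p_value < 0.05)
```

For these ratings SSB = 35 and SSW = 9 with 2 and 27 degrees of freedom, giving F = 52.5 and a p-value far below 0.05, so the null hypothesis of equal mean stress levels would be rejected and a post-hoc test would follow.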

Example 2:

Suppose an agricultural scientist wants to compare the yield of three varieties of wheat. The scientist randomly selects four fields for each variety and plants them. After harvest, the yield from each field is measured in bushels. Here are the hypothetical yields:

The scientist wants to know if the differences in yields are due to the different varieties or just random variation.

Here’s how to apply the one-way ANOVA to this situation:

- Null Hypothesis (H0): The means of the three populations are equal.

- Alternative Hypothesis (H1): At least one population mean is different.

- Calculate the Degrees of Freedom (dfB for between groups, dfW for within groups, dfT for total).

- If the p-value is less than the chosen significance level (often 0.05), then we reject the null hypothesis in favor of the alternative hypothesis. This would suggest there is a statistically significant difference in mean yields among the three varieties.

- If we reject the null hypothesis, we conduct a post-hoc test to determine which specific groups’ means (wheat varieties) are different from each other.

How to Conduct ANOVA

Conducting an Analysis of Variance (ANOVA) involves several steps. Here’s a general guideline on how to perform it:

- Null Hypothesis (H0): The means of all groups are equal.

- Alternative Hypothesis (H1): At least one group mean is different from the others.

- The significance level (often denoted as α) is usually set at 0.05. This means that you accept a 5% probability of rejecting the null hypothesis when it is actually true (a Type I error).

- Data should be collected for each group under study. Make sure that the data meet the assumptions of an ANOVA: normality, independence, and homogeneity of variances.

- Calculate the Degrees of Freedom (df) for each sum of squares (dfB, dfW, dfT).

- Compute the Mean Squares Between (MSB) and Mean Squares Within (MSW) by dividing the sum of squares by the corresponding degrees of freedom.

- Compute the F-statistic as the ratio of MSB to MSW.

- Determine the critical F-value from the F-distribution table using dfB and dfW.

- If the calculated F-statistic is greater than the critical F-value, reject the null hypothesis.

- If the p-value associated with the calculated F-statistic is smaller than the significance level (0.05 typically), you reject the null hypothesis.

- If you rejected the null hypothesis, you can conduct post-hoc tests (like Tukey’s HSD) to determine which specific groups’ means (if you have more than two groups) are different from each other.

- Regardless of the result, report your findings in a clear, understandable manner. This typically includes reporting the test statistic, p-value, and whether the null hypothesis was rejected.

When to use ANOVA

ANOVA (Analysis of Variance) is used when you have three or more groups and you want to compare their means to see if they are significantly different from each other. It is a statistical method that is used in a variety of research scenarios. Here are some examples of when you might use ANOVA:

- Comparing Groups : If you want to compare the performance of more than two groups, for example, testing the effectiveness of different teaching methods on student performance.

- Evaluating Interactions : In a two-way or factorial ANOVA, you can test for an interaction effect. This means you are not only interested in the effect of each individual factor, but also whether the effect of one factor depends on the level of another factor.

- Repeated Measures : If you have measured the same subjects under different conditions or at different time points, you can use repeated measures ANOVA to compare the means of these repeated measures while accounting for the correlation between measures from the same subject.

- Experimental Designs : ANOVA is often used in experimental research designs when subjects are randomly assigned to different conditions and the goal is to compare the means of the conditions.

Here are the assumptions that must be met to use ANOVA:

- Normality: The dependent variable should be approximately normally distributed within each group (equivalently, the residuals should be approximately normal).

- Homogeneity of Variances : The variances of the groups you are comparing should be roughly equal. This assumption can be tested using Levene’s test or Bartlett’s test.

- Independence : The observations should be independent of each other. This assumption is met if the data is collected appropriately with no related groups (e.g., twins, matched pairs, repeated measures).
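As a rough illustration of checking the homogeneity of variances assumption, the sketch below compares the largest and smallest group standard deviations. This is an informal screen, not Levene's or Bartlett's test; the threshold of 2 is a common rule of thumb that we assume here, and the data are the hypothetical stress ratings from the exercise example earlier in this article.

```python
import statistics

# Hypothetical stress ratings from the exercise example
groups = {
    "yoga": [3, 2, 2, 1, 2, 2, 3, 2, 1, 2],
    "aerobic": [2, 3, 3, 2, 3, 2, 3, 3, 2, 2],
    "weights": [4, 4, 5, 5, 4, 5, 4, 5, 4, 5],
}

# Sample standard deviation of each group
sds = {name: statistics.stdev(values) for name, values in groups.items()}

# Ratio of the largest to the smallest standard deviation
sd_ratio = max(sds.values()) / min(sds.values())

# Rule-of-thumb threshold (an assumption, not a formal test)
roughly_equal = sd_ratio < 2

for name, sd in sds.items():
    print(f"{name}: sd = {sd:.3f}")
print(f"largest/smallest sd ratio = {sd_ratio:.3f}, roughly equal: {roughly_equal}")
```

A formal test such as Levene's is still the better choice when the ratio is borderline or the groups are small.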

Applications of ANOVA

The Analysis of Variance (ANOVA) is a powerful statistical technique that is used widely across various fields and industries. Here are some of its key applications:

Agriculture

ANOVA is commonly used in agricultural research to compare the effectiveness of different types of fertilizers, crop varieties, or farming methods. For example, an agricultural researcher could use ANOVA to determine if there are significant differences in the yields of several varieties of wheat under the same conditions.

Manufacturing and Quality Control

ANOVA is used to determine if different manufacturing processes or machines produce different levels of product quality. For instance, an engineer might use it to test whether there are differences in the strength of a product based on the machine that produced it.

Marketing Research

Marketers often use ANOVA to test the effectiveness of different advertising strategies. For example, a marketer could use ANOVA to determine whether different marketing messages have a significant impact on consumer purchase intentions.

Healthcare and Medicine

In medical research, ANOVA can be used to compare the effectiveness of different treatments or drugs. For example, a medical researcher could use ANOVA to test whether there are significant differences in recovery times for patients who receive different types of therapy.

Education

ANOVA is used in educational research to compare the effectiveness of different teaching methods or educational interventions. For example, an educator could use it to test whether students perform significantly differently when taught with different teaching methods.

Psychology and Social Sciences

Psychologists and social scientists use ANOVA to compare group means on various psychological and social variables. For example, a psychologist could use it to determine if there are significant differences in stress levels among individuals in different occupations.

Biology and Environmental Sciences

Biologists and environmental scientists use ANOVA to compare different biological and environmental conditions. For example, an environmental scientist could use it to determine if there are significant differences in the levels of a pollutant in different bodies of water.

Advantages of ANOVA

Here are some advantages of using ANOVA:

Comparing Multiple Groups: One of the key advantages of ANOVA is the ability to compare the means of three or more groups in a single test. This makes it more flexible than the t-test, which is limited to comparing only two groups.

Control of Type I Error: When comparing multiple groups, the chance of making a Type I error (false positive) increases with the number of comparisons. One of the strengths of ANOVA is that it tests all groups with a single omnibus F-test, which keeps the Type I error rate at the chosen significance level. This is in contrast to performing multiple pairwise t-tests, which can inflate the overall Type I error rate.

Testing Interactions: In factorial ANOVA, you can test not only the main effect of each factor, but also the interaction effect between factors. This can provide valuable insights into how different factors or variables interact with each other.

Handling Continuous and Categorical Variables: ANOVA can handle both continuous and categorical variables. The dependent variable is continuous and the independent variables are categorical.

Robustness: ANOVA is considered robust to violations of the normality assumption when group sizes are equal. This means that even if your data do not perfectly meet the normality assumption, you might still get valid results.

Provides Detailed Analysis: ANOVA provides a detailed breakdown of variances and interactions between variables which can be useful in understanding the underlying factors affecting the outcome.

Capability to Handle Complex Experimental Designs: Advanced types of ANOVA (like repeated measures ANOVA, MANOVA, etc.) can handle more complex experimental designs, including those where measurements are taken on the same subjects over time, or when you want to analyze multiple dependent variables at once.
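The Type I error point in the list above can be made concrete with a short calculation. The sketch below assumes the pairwise tests are independent, which is only an approximation because pairwise t-tests on the same groups share data; the function name is our own.

```python
from math import comb

def familywise_error_rate(alpha, n_tests):
    """Probability of at least one false positive across n_tests
    independent tests, each run at significance level alpha."""
    return 1 - (1 - alpha) ** n_tests

for k in (3, 4, 5):
    n_pairs = comb(k, 2)  # number of pairwise comparisons among k groups
    rate = familywise_error_rate(0.05, n_pairs)
    print(f"{k} groups -> {n_pairs} pairwise tests -> error rate {rate:.3f}")
# 3 groups -> 3 pairwise tests -> error rate 0.143
# 4 groups -> 6 pairwise tests -> error rate 0.265
# 5 groups -> 10 pairwise tests -> error rate 0.401
```

With only three groups, running every pairwise t-test at 0.05 already pushes the chance of at least one false positive to roughly 14%, which is the inflation a single omnibus F-test avoids.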

Disadvantages of ANOVA

Some limitations or disadvantages that are important to consider:

Assumptions: ANOVA relies on several assumptions including normality (the data follows a normal distribution), independence (the observations are independent of each other), and homogeneity of variances (the variances of the groups are roughly equal). If these assumptions are violated, the results of the ANOVA may not be valid.

Sensitivity to Outliers: ANOVA can be sensitive to outliers. A single extreme value in one group can affect the sum of squares and consequently influence the F-statistic and the overall result of the test.

Dichotomous Variables: ANOVA is not suitable for dichotomous dependent variables (outcomes that can take only two values, like yes/no). It is used to compare the means of groups for a continuous dependent variable; a two-level categorical variable is acceptable as an independent variable.

Lack of Specificity: Although ANOVA can tell you that there is a significant difference between groups, it doesn’t tell you which specific groups are significantly different from each other. You need to carry out further post-hoc tests (like Tukey’s HSD or Bonferroni) for these pairwise comparisons.

Complexity with Multiple Factors: When dealing with multiple factors and interactions in factorial ANOVA, interpretation can become complex. The presence of interaction effects can make main effects difficult to interpret.

Requires Larger Sample Sizes: To detect an effect of a certain size, ANOVA generally requires larger sample sizes than a t-test.

Equal Group Sizes: While not always a strict requirement, ANOVA is most powerful and its assumptions are most likely to be met when groups are of equal or similar sizes.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Understanding the Null Hypothesis for ANOVA Models

A one-way ANOVA is used to determine if there is a statistically significant difference between the means of three or more independent groups.

A one-way ANOVA uses the following null and alternative hypotheses:

- \(H_0\): \(\mu_1 = \mu_2 = \mu_3 = \dots = \mu_k\) (all of the group means are equal)

- \(H_A\): At least one group mean is different from the rest

To decide if we should reject or fail to reject the null hypothesis, we must refer to the p-value in the output of the ANOVA table.

If the p-value is less than some significance level (e.g. 0.05) then we can reject the null hypothesis and conclude that not all group means are equal.

A two-way ANOVA is used to determine whether or not there is a statistically significant difference between the means of three or more independent groups that have been split on two variables (sometimes called “factors”).

A two-way ANOVA tests three null hypotheses at the same time:

- All group means are equal at each level of the first variable

- All group means are equal at each level of the second variable

- There is no interaction effect between the two variables

To decide if we should reject or fail to reject each null hypothesis, we must refer to the p-values in the output of the two-way ANOVA table.
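One common way to write these three null hypotheses is in terms of an effects model. The notation below is our own assumption for illustration and is not taken from the article: \(\alpha_i\) is the effect of level \(i\) of the first variable (with \(a\) levels), \(\beta_j\) is the effect of level \(j\) of the second variable (with \(b\) levels), \((\alpha\beta)_{ij}\) is their interaction, and \(\varepsilon_{ijk}\) is random error for the \(k\)-th observation in cell \((i, j)\).

\[
Y_{ijk} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij} + \varepsilon_{ijk}
\]

\[
H_0^{(A)}: \alpha_1 = \dots = \alpha_a = 0, \qquad
H_0^{(B)}: \beta_1 = \dots = \beta_b = 0, \qquad
H_0^{(AB)}: (\alpha\beta)_{ij} = 0 \ \text{for all } i, j
\]

Each hypothesis gets its own F-statistic and p-value in the two-way ANOVA table, which is why the output is read row by row.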

The following examples show how to decide to reject or fail to reject the null hypothesis in both a one-way ANOVA and two-way ANOVA.

Example 1: One-Way ANOVA

Suppose we want to know whether or not three different exam prep programs lead to different mean scores on a certain exam. To test this, we recruit 30 students to participate in a study and split them into three groups.

The students in each group are randomly assigned to use one of the three exam prep programs for the next three weeks to prepare for an exam. At the end of the three weeks, all of the students take the same exam.

The exam scores for each group are shown below:

When we enter these values into the One-Way ANOVA Calculator, we receive the following ANOVA table as the output:

Notice that the p-value is 0.11385.

For this particular example, we would use the following null and alternative hypotheses:

- \(H_0\): \(\mu_1 = \mu_2 = \mu_3\) (the mean exam score for each group is equal)

- \(H_A\): At least one group mean is different from the rest

Since the p-value from the ANOVA table is not less than 0.05, we fail to reject the null hypothesis.

This means we don’t have sufficient evidence to say that there is a statistically significant difference between the mean exam scores of the three groups.

Example 2: Two-Way ANOVA

Suppose a botanist wants to know whether or not plant growth is influenced by sunlight exposure and watering frequency.

She plants 40 seeds and lets them grow for two months under different conditions for sunlight exposure and watering frequency. After two months, she records the height of each plant. The results are shown below:

In the table above, we see that there were five plants grown under each combination of conditions.

For example, there were five plants grown with daily watering and no sunlight and their heights after two months were 4.8 inches, 4.4 inches, 3.2 inches, 3.9 inches, and 4.4 inches:

She performs a two-way ANOVA in Excel and ends up with the following output:

We can see the following p-values in the output of the two-way ANOVA table:

- The p-value for watering frequency is 0.975975. This is not statistically significant at a significance level of 0.05.

- The p-value for sunlight exposure is 3.9E-8 (0.000000039). This is statistically significant at a significance level of 0.05.

- The p-value for the interaction between watering frequency and sunlight exposure is 0.310898. This is not statistically significant at a significance level of 0.05.

These results indicate that sunlight exposure is the only factor that has a statistically significant effect on plant height.

And because there is no interaction effect, the effect of sunlight exposure is consistent across each level of watering frequency.

That is, whether a plant is watered daily or weekly has no impact on how sunlight exposure affects a plant.

Additional Resources

The following tutorials provide additional information about ANOVA models:

- How to Interpret the F-Value and P-Value in ANOVA

- How to Calculate Sum of Squares in ANOVA

- What Does a High F Value Mean in ANOVA?

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

2 Replies to “Understanding the Null Hypothesis for ANOVA Models”

Hi, I’m a student at Stellenbosch University majoring in Conservation Ecology and Entomology and we are currently busy doing stats. I am still at a very entry level of stats understanding, so pages like these are of huge help. I wanted to ask, why is the sum of squares (treatment) for the one way ANOVA so high? I calculated it by hand and got a much lower number, could you please help point out if and where I went wrong?

As I understand it, SSB (treatment) is calculated by finding the mean of each group and the grand mean, and then calculating the sum of squares like this: GM = 85.5 x1 = 83.4 x2 = 89.3 x3 = 84.7

SSB = (85.5 – 83.4)^2 + (85.5 – 89.3)^2 + (85.5 – 84.7)^2 = 18.65 DF = 2

I would appreciate any help, thank you so much!

Hi Theo, here is the calculation written out as it would be typed in Python.

### Sum of Squares Between Groups (SSB)

In a one-way ANOVA, the sum of squares between groups (SSB) measures how much the group means vary around the grand mean, with each group weighted by its number of observations. It is calculated as follows:

1. **Calculate the group means**:

```python
mean_group1 = 83.4
mean_group2 = 89.3
mean_group3 = 84.7
```

2. **Calculate the grand mean**:

```python
grand_mean = 85.5
```

3. **Calculate the sum of squares between groups (SSB)**, assuming each group has `n` observations:

```python
n = 10  # Number of observations in each group

ssb = n * ((mean_group1 - grand_mean)**2
           + (mean_group2 - grand_mean)**2
           + (mean_group3 - grand_mean)**2)
```

### Example Calculation

For simplicity, let's assume each group has 10 observations:

```python
n = 10

ssb = n * ((83.4 - 85.5)**2 + (89.3 - 85.5)**2 + (84.7 - 85.5)**2)
```

Now calculate each term:

```python
term1 = (83.4 - 85.5)**2  # (-2.1)**2, about 4.41
term2 = (89.3 - 85.5)**2  # (3.8)**2, about 14.44
term3 = (84.7 - 85.5)**2  # (-0.8)**2, about 0.64
```

Sum these squared differences:

```python
sum_of_squared_diffs = term1 + term2 + term3  # 4.41 + 14.44 + 0.64, about 19.49
ssb = n * sum_of_squared_diffs                # 10 * 19.49, about 194.9
```

So, the sum of squares between groups (SSB) is 194.9, assuming each group has 10 observations.

### Degrees of Freedom (DF)

The degrees of freedom for SSB is the number of groups `k` minus one. For three groups:

```python
k = 3
df_between = k - 1  # 3 - 1 = 2
```

### Summary

- **SSB** should consider the number of observations in each group.
- **DF** is the number of groups minus one.

By ensuring you include the number of observations per group in your SSB calculation, you can get the correct SSB value.

What Is An ANOVA Test In Statistics: Analysis Of Variance

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

An ANOVA test is a statistical test used to determine if there is a statistically significant difference between two or more categorical groups. It tests for differences between the group means by comparing the variance between groups with the variance within groups.

Another key part of ANOVA is that it splits the independent variable into two or more groups.

For example, one or more groups might be expected to influence the dependent variable, while the other group is used as a control group and is not expected to influence the dependent variable.

Assumptions of ANOVA

The assumptions of the ANOVA test are the same as the general assumptions for any parametric test:

- An ANOVA can only be conducted if there is no relationship between the subjects in each sample. This means that subjects in the first group cannot also be in the second group (e.g., independent samples/between groups).

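- The different groups/levels should ideally have equal sample sizes. A balanced design makes the test more robust to unequal variances, although a one-way ANOVA does not strictly require it.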

- An ANOVA can only be conducted if the dependent variable is normally distributed so that the middle scores are the most frequent and the extreme scores are the least frequent.

- Population variances must be equal (i.e., homoscedastic). Homogeneity of variance means that the deviation of scores (measured by the range or standard deviation, for example) is similar between populations.

Types of ANOVA Tests

There are different types of ANOVA tests. The two most common are a “One-Way” and a “Two-Way.”

The difference between these two types depends on the number of independent variables in your test.

One-way ANOVA

A one-way ANOVA (analysis of variance) has one categorical independent variable (also known as a factor) and a normally distributed continuous (i.e., interval or ratio level) dependent variable.

The independent variable divides cases into two or more mutually exclusive levels, categories, or groups.

The one-way ANOVA tests for differences in the means of the dependent variable, broken down by the levels of the independent variable.

An example of a one-way ANOVA includes testing a therapeutic intervention (CBT, medication, placebo) on the incidence of depression in a clinical sample.

Note: Both the One-Way ANOVA and the Independent Samples t-Test can compare the means for two groups. However, only the One-Way ANOVA can compare the means across three or more groups.

Two-way (factorial) ANOVA

A two-way ANOVA (analysis of variance) has two categorical independent variables (also known as factors) and a normally distributed continuous (i.e., interval or ratio level) dependent variable.

The independent variables divide cases into two or more mutually exclusive levels, categories, or groups. A two-way ANOVA is the simplest kind of factorial ANOVA; designs with more than two factors are also factorial.

An example of a factorial ANOVA is testing the effects of social contact (high, medium, low), job status (employed, self-employed, unemployed, retired), and family history (no family history, some family history) on the incidence of depression in a population. Because it has three factors, this particular design is a three-way factorial ANOVA.

What are “Groups” or “Levels”?

In ANOVA, “groups” or “levels” refer to the different categories of the independent variable being compared.

For example, if the independent variable is “eggs,” the levels might be Non-Organic, Organic, and Free Range Organic. The dependent variable could then be the price per dozen eggs.

ANOVA F-value

The test statistic for an ANOVA is denoted as F. The formula for ANOVA is F = variance caused by treatment/variance due to random chance.

The ANOVA F value can tell you if there is a significant difference between the levels of the independent variable, when p < .05. A higher F value means that the variation between group means is large relative to the variation within groups, which is stronger evidence that the treatment has an effect.

Note that the ANOVA alone does not tell us specifically which means were different from one another. To determine that, we would need to follow up with multiple comparisons (or post-hoc) tests.

When the initial F test indicates that significant differences exist between group means, post hoc tests are useful for determining which specific means are significantly different when you do not have specific hypotheses that you wish to test.

Post hoc tests compare each pair of means (like t-tests), but unlike t-tests, they correct the significance estimate to account for the multiple comparisons.
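As a rough illustration of how such a correction works, the sketch below applies the Bonferroni adjustment, one of the simplest options, to the three-group therapy example mentioned earlier (CBT, medication, placebo). The group labels come from that example; the function and variable names are our own, and other post hoc procedures such as Tukey's HSD adjust in a different way.

```python
from itertools import combinations

groups = ["CBT", "medication", "placebo"]
alpha = 0.05  # overall significance level

# Every pair of groups gets its own comparison
pairs = list(combinations(groups, 2))
n_comparisons = len(pairs)

# Bonferroni: split the overall alpha evenly across the comparisons
alpha_per_test = alpha / n_comparisons

for first, second in pairs:
    print(f"{first} vs {second}: significant only if p < {alpha_per_test:.4f}")
```

With three groups there are three pairwise comparisons, so each one has to reach p < 0.0167 rather than p < 0.05 to count as significant.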

What Does “Replication” Mean?

Replication requires a study to be repeated with different subjects and experimenters. This would enable an analyst to confirm a prior study by testing the same hypothesis with a new sample.

How to run an ANOVA?

For large datasets, it is best to run an ANOVA in statistical software such as R or Stata. Let’s refer to our Egg example above.

Non-Organic, Organic, and Free-Range Organic Eggs would be coded as the three levels of a categorical variable (for example, labelled 1, 2, 3, where the numbers are labels rather than quantities). They would serve as our independent treatment variable, while the price per dozen eggs would serve as the dependent variable. Other extraneous variables may include “Brand Name” or “Laid Egg Date.”

Using data and the aov() command in R, we could then determine the impact Egg Type has on the price per dozen eggs.

ANOVA vs. t-test?

T-tests and ANOVA tests are both statistical techniques used to compare differences in means and spreads of the distributions across populations.

The t-test determines whether two populations are statistically different from each other, whereas ANOVA tests are used when an individual wants to test more than two levels within an independent variable.

Referring back to our egg example, testing Non-Organic vs. Organic would require a t-test while adding in Free Range as a third option demands ANOVA.

Rather than generate a t-statistic, ANOVA results in an F-statistic to determine statistical significance.

What does ANOVA stand for?

ANOVA stands for Analysis of Variance. It’s a statistical method to analyze differences among group means in a sample. ANOVA tests the hypothesis that the means of two or more populations are equal, generalizing the t-test to more than two groups.

It’s commonly used in experiments where various factors’ effects are compared. It can also handle complex experiments with factors that have different numbers of levels.

When to use ANOVA?

ANOVA should be used when one independent variable has three or more levels (categories or groups). It’s designed to compare the means of these multiple groups.

What does an ANOVA test tell you?

An ANOVA test tells you if there are significant differences between the means of three or more groups. If the test result is significant, it suggests that at least one group’s mean differs from the others. It does not, however, specify which groups are different from each other.

Why do you use chi-square instead of ANOVA?

You use the chi-square test instead of ANOVA when dealing with categorical data to test associations or independence between two categorical variables. In contrast, ANOVA is used for continuous data to compare the means of three or more groups.

Module 13: F-Distribution and One-Way ANOVA

One-Way ANOVA

Learning Outcomes

- Conduct and interpret one-way ANOVA

The purpose of a one-way ANOVA test is to determine the existence of a statistically significant difference among several group means. The test actually uses variances to help determine if the means are equal or not. In order to perform a one-way ANOVA test, there are five basic assumptions to be fulfilled:

- Each population from which a sample is taken is assumed to be normal.

- All samples are randomly selected and independent.

- The populations are assumed to have equal standard deviations (or variances).

- The factor is a categorical variable.

- The response is a numerical variable.

The Null and Alternative Hypotheses

The null hypothesis is simply that all the group population means are the same. The alternative hypothesis is that at least one pair of means is different. For example, if there are k groups:

\(H_0: \mu_1 = \mu_2 = \mu_3 = \dots = \mu_k\)

\(H_a\): At least two of the group means \(\mu_1, \mu_2, \mu_3, \dots, \mu_k\) are not equal.

The graphs, a set of box plots representing the distribution of values with the group means indicated by a horizontal line through the box, help in the understanding of the hypothesis test. In the first graph (red box plots), \(H_0: \mu_1 = \mu_2 = \mu_3\) and the three populations have the same distribution if the null hypothesis is true. The variance of the combined data is approximately the same as the variance of each of the populations.

If the null hypothesis is false, then the variance of the combined data is larger which is caused by the different means as shown in the second graph (green box plots).

(b) \(H_0\) is not true. Not all of the means are the same; the differences are too large to be due to random variation.
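The effect described above, where differing group means inflate the variance of the combined data, can be sketched with two small made-up data sets. The numbers and function name below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
import statistics

def combined_and_group_variances(groups):
    """Return the variance of all values pooled together and the
    list of variances computed separately within each group."""
    combined = [x for group in groups for x in group]
    return (statistics.pvariance(combined),
            [statistics.pvariance(group) for group in groups])

# Case (a): all three groups share the same mean
equal_means = [[1, 2, 3], [1, 2, 3], [1, 2, 3]]
# Case (b): same spread within each group, but the means differ
shifted_means = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]

combined_a, within_a = combined_and_group_variances(equal_means)
combined_b, within_b = combined_and_group_variances(shifted_means)

print(f"(a) combined = {combined_a:.3f}, within = {within_a[0]:.3f}")
print(f"(b) combined = {combined_b:.3f}, within = {within_b[0]:.3f}")
# (a) combined = 0.667, within = 0.667
# (b) combined = 6.667, within = 0.667
```

In case (a) the combined variance matches the within-group variance, while in case (b) it is ten times larger even though each group is exactly as spread out as before. That gap is what the F-statistic measures.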

Concept Review

Analysis of variance extends the comparison of two groups to several, each a level of a categorical variable (factor). Samples from each group are independent, and must be randomly selected from normal populations with equal variances. We test the null hypothesis of equal means of the response in every group versus the alternative hypothesis of one or more group means being different from the others. A one-way ANOVA hypothesis test determines if several population means are equal. The distribution for the test is the F distribution with two different degrees of freedom.

- OpenStax, Statistics, One-Way ANOVA. Located at : http://cnx.org/contents/[email protected] . License : CC BY: Attribution

- Introductory Statistics . Authored by : Barbara Illowski, Susan Dean. Provided by : Open Stax. Located at : http://cnx.org/contents/[email protected] . License : CC BY: Attribution . License Terms : Download for free at http://cnx.org/contents/[email protected]

- Completing a simple ANOVA table. Authored by : masterskills. Located at : https://youtu.be/OXA-bw9tGfo . License : All Rights Reserved . License Terms : Standard YouTube License

ANOVA Test is used to analyze the differences among the means of various groups using certain estimation procedures. ANOVA means analysis of variance. ANOVA test is a statistical significance test that is used to check whether the null hypothesis can be rejected or not during hypothesis testing.

An ANOVA test can be either one-way or two-way depending upon the number of independent variables. In this article, we will learn more about an ANOVA test, the one-way ANOVA and two-way ANOVA, its formulas and see certain associated examples.

What is ANOVA Test?

ANOVA test, in its simplest form, is used to check whether the means of three or more populations are equal or not. The ANOVA test applies when there are more than two independent groups. The goal of the ANOVA test is to check for variability within the groups as well as the variability among the groups. The ANOVA test statistic is given by the F test.

ANOVA Test Definition

ANOVA test can be defined as a type of test used in hypothesis testing to compare whether the means of two or more groups are equal or not. This test is used to check if the null hypothesis can be rejected or not depending upon the statistical significance exhibited by the parameters. The decision is made by comparing the ANOVA test statistic with the critical value.

ANOVA Test Example

Suppose it needs to be determined if consumption of a certain type of tea will result in a mean weight loss. Let there be three groups using three types of tea - green tea, earl grey tea, and jasmine tea. Thus, to compare if there was any mean weight loss exhibited by a certain group, the ANOVA test (one way) will be used.

Suppose a survey was conducted to check if there is an interaction between income and gender with anxiety level at job interviews. To conduct such a test a two-way ANOVA will be used.

ANOVA Formula

There are several components to the ANOVA formula. The best way to solve a problem on an ANOVA test is by organizing the formulas into an ANOVA table. The ANOVA formulas are given below.

Sum of squares between groups, SSB = \(\sum n_{j}(\overline{X}_{j}-\overline{X})^{2}\). Here, \(\overline{X}_{j}\) is the mean of the j th group, \(\overline{X}\) is the overall mean and \(n_{j}\) is the sample size of the j th group.

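Overall mean, \(\overline{X}\) = \(\frac{\sum\sum X}{N}\), the sum of all observations divided by the total number of observations N. When every group has the same sample size, this equals the mean of the k group means: \(\overline{X}\) = \(\frac{\overline{X}_{1} + \overline{X}_{2} + \overline{X}_{3} + ... + \overline{X}_{k}}{k}\)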

Sum of squares of errors, SSE = \(\sum\sum(X-\overline{X}_{j})^{2}\). Here, X refers to each data point in the j th group.

Total sum of squares, SST = SSB + SSE

Degrees of freedom between groups, \(df_{1}\) = k - 1. Here, k denotes the number of groups.

Degrees of freedom of errors, \(df_{2}\) = N - k, where N denotes the total number of observations across k groups.

Total degrees of freedom, \(df_{3}\) = N - 1.

Mean squares between groups, MSB = SSB / (k - 1)

Mean squares of errors, MSE = SSE / (N - k)

ANOVA test statistic, f = MSB / MSE

Critical Value at \(\alpha\) = F(\(\alpha\), k - 1, N - k)
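
The formulas above can be sketched as a short Python function. This is a minimal sketch rather than a reference implementation: the function name `one_way_anova`, the dictionary keys, and the sample data are hypothetical and do not come from the article. It uses only the standard library and takes the raw observations of each group.

```python
def one_way_anova(groups):
    """Compute the one way ANOVA quantities from raw observations.

    `groups` is a list of lists, one inner list of numbers per group.
    """
    k = len(groups)                              # number of groups
    n_total = sum(len(g) for g in groups)        # N, total observations
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # SSB: each group's size times its squared distance from the overall mean
    ssb = sum(len(g) * (m - grand_mean) ** 2
              for g, m in zip(groups, group_means))
    # SSE: squared distance of every data point from its own group mean
    sse = sum((x - m) ** 2
              for g, m in zip(groups, group_means) for x in g)

    df1, df2 = k - 1, n_total - k
    msb, mse = ssb / df1, sse / df2
    return {"SSB": ssb, "SSE": sse, "SST": ssb + sse,
            "df1": df1, "df2": df2, "MSB": msb, "MSE": mse,
            "f": msb / mse}


# Hypothetical data for illustration only
result = one_way_anova([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(result)
```

The resulting f value would then be compared with the critical value F(\(\alpha\), k - 1, N - k) taken from an f table.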

ANOVA Table

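The ANOVA formulas can be arranged systematically in the form of a table with one row per source of variation. Using the formulas above, the rows can be summarized as follows:

- Between groups: sum of squares = SSB, degrees of freedom = k - 1, mean squares = MSB = SSB / (k - 1), f = MSB / MSE.

- Error: sum of squares = SSE, degrees of freedom = N - k, mean squares = MSE = SSE / (N - k).

- Total: sum of squares = SST, degrees of freedom = N - 1.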
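
As an illustration, the rows of such a table can be built and printed with a few lines of Python. This is only a sketch: the helper names `anova_table` and `render`, the column widths, and the input values are assumptions made for the example, not part of the article.

```python
def anova_table(ssb, sse, k, n_total):
    """Return the rows of a one way ANOVA table as tuples of
    (source, sum of squares, degrees of freedom, mean squares, f)."""
    df1, df2 = k - 1, n_total - k
    msb, mse = ssb / df1, sse / df2
    return [
        ("Between groups", ssb, df1, msb, msb / mse),
        ("Error", sse, df2, mse, None),
        ("Total", ssb + sse, n_total - 1, None, None),
    ]


def render(rows):
    """Format the rows as aligned plain text; empty cells stay blank."""
    lines = ["{:<16}{:>10}{:>6}{:>10}{:>8}".format(
        "Source", "SS", "df", "MS", "f")]
    for source, ss, df, ms, f_stat in rows:
        ms_text = "" if ms is None else f"{ms:.2f}"
        f_text = "" if f_stat is None else f"{f_stat:.2f}"
        lines.append(
            f"{source:<16}{ss:>10.2f}{df:>6d}{ms_text:>10}{f_text:>8}")
    return "\n".join(lines)


# Hypothetical sums of squares for 3 groups and 9 observations
rows = anova_table(ssb=26.0, sse=6.0, k=3, n_total=9)
print(render(rows))
```

Only the between groups row carries an f value, because the statistic is the ratio of that row's mean squares to the error row's mean squares.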

One Way ANOVA

The one way ANOVA test is used to determine whether there is any difference between the means of three or more groups. A one way ANOVA will have only one independent variable. The hypothesis for a one way ANOVA test can be set up as follows:

Null Hypothesis, \(H_{0}\): \(\mu_{1}\) = \(\mu_{2}\) = \(\mu_{3}\) = ... = \(\mu_{k}\)

Alternative Hypothesis, \(H_{1}\): The means are not all equal

Decision Rule: If test statistic > critical value then reject the null hypothesis and conclude that the means of at least two groups differ significantly.

The steps to perform the one way ANOVA test are given below:

- Step 1: Calculate the mean for each group.

- Step 2: Calculate the total mean. This is done by adding all the observations and dividing by the total number of observations. When every group has the same sample size, this equals the average of the group means.

- Step 3: Calculate the SSB.

- Step 4: Calculate the between groups degrees of freedom.

- Step 5: Calculate the SSE.

- Step 6: Calculate the degrees of freedom of errors.

- Step 7: Determine the MSB and the MSE.

- Step 8: Find the f test statistic.

- Step 9: Using the f table for the specified level of significance, \(\alpha\), find the critical value. This is given by F(\(\alpha\), \(df_{1}\), \(df_{2}\)).

- Step 10: If f > F then reject the null hypothesis.

Limitations of One Way ANOVA Test

The one way ANOVA is an omnibus test. This means that it determines whether there is a statistically significant difference among the means of the groups as a whole. However, it cannot identify which specific groups differ from one another. Thus, to find the groups whose means differ, a post hoc test needs to be conducted.

Two Way ANOVA

The two way ANOVA has two independent variables. Thus, it can be thought of as an extension of the one way ANOVA, in which only one independent variable affects the dependent variable. A two way ANOVA test is used to check the main effect of each independent variable and to see if there is an interaction effect between them. To examine a main effect, each factor is considered separately, as in a one way ANOVA. To check the interaction effect, both factors are considered at the same time. There are certain assumptions made for a two way ANOVA test. These are given as follows:

- The samples drawn from the population must be independent.

- The population should be approximately normally distributed.

- The groups should have the same sample size.

- The population variances are equal.

Suppose in the two way ANOVA example, as mentioned above, the income groups are low, middle, high. The gender groups are female, male, and transgender. Then there will be 9 treatment groups and the three hypotheses can be set up as follows:

\(H_{01}\): All income groups have equal mean anxiety.

\(H_{11}\): Not all income groups have equal mean anxiety.

\(H_{02}\): All gender groups have equal mean anxiety.

\(H_{12}\): Not all gender groups have equal mean anxiety.

\(H_{03}\): Interaction effect does not exist.

\(H_{13}\): Interaction effect exists.
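
The three f statistics that correspond to these three pairs of hypotheses can be sketched in Python. The article does not give the two way formulas, so this sketch assumes the standard textbook decomposition for a balanced design (the same number of observations in every treatment group, at least two per group). The function name `two_way_anova`, the dictionary keys, and the data are hypothetical.

```python
def two_way_anova(cells):
    """Balanced two way ANOVA with interaction.

    `cells[i][j]` holds the observations for level i of factor A and
    level j of factor B. Every cell must have the same size n >= 2.
    """
    a, b, n = len(cells), len(cells[0]), len(cells[0][0])
    if any(len(row) != b for row in cells) or \
            any(len(c) != n for row in cells for c in row) or n < 2:
        raise ValueError("balanced design with n >= 2 per cell required")

    def mean(values):
        return sum(values) / len(values)

    cell_means = [[mean(c) for c in row] for row in cells]
    a_means = [mean(row) for row in cell_means]
    b_means = [mean([cell_means[i][j] for i in range(a)]) for j in range(b)]
    grand = mean(a_means)  # valid because the design is balanced

    ss_a = b * n * sum((m - grand) ** 2 for m in a_means)
    ss_b = a * n * sum((m - grand) ** 2 for m in b_means)
    ss_ab = n * sum(
        (cell_means[i][j] - a_means[i] - b_means[j] + grand) ** 2
        for i in range(a) for j in range(b))
    ss_e = sum((x - cell_means[i][j]) ** 2
               for i in range(a) for j in range(b) for x in cells[i][j])

    # Error mean squares: the denominator of all three f statistics
    ms_e = ss_e / (a * b * (n - 1))
    return {
        "f_A": (ss_a / (a - 1)) / ms_e,                # main effect of A
        "f_B": (ss_b / (b - 1)) / ms_e,                # main effect of B
        "f_AB": (ss_ab / ((a - 1) * (b - 1))) / ms_e,  # interaction
    }


# Hypothetical 2 x 2 design with two observations per treatment group
stats = two_way_anova([[[1, 3], [5, 7]], [[2, 4], [10, 12]]])
print(stats)
```

Each f value would be compared with its own critical value, using a - 1, b - 1, or (a - 1)(b - 1) numerator degrees of freedom and ab(n - 1) denominator degrees of freedom.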

Important Notes on ANOVA Test

- The ANOVA test is used to check whether the means of three or more groups differ by comparing estimates of the variance.

- An ANOVA table is used to summarize the results of an ANOVA test.

- There are two types of ANOVA tests - one way ANOVA and two way ANOVA.

- One way ANOVA has only one independent variable while a two way ANOVA has two independent variables.

Examples on ANOVA Test

Example 1: Three types of fertilizers are used on three groups of plants for 5 weeks. We want to check if there is a difference in the mean growth of each group. Using the data given below apply a one way ANOVA test at the 0.05 significance level.

\(H_{0}\): \(\mu_{1}\) = \(\mu_{2}\) = \(\mu_{3}\)

\(H_{1}\): The means are not all equal

Total mean, \(\overline{X}\) = 8

\(n_{1}\) = \(n_{2}\) = \(n_{3}\) = 6, k = 3

SSB = \(6(5 - 8)^{2}\) + \(6(9 - 8)^{2}\) + \(6(10 - 8)^{2}\) = 54 + 6 + 24 = 84

\(df_{1}\) = k - 1 = 2

SSE = 16 + 24 + 28 = 68

\(df_{2}\) = N - k = 18 - 3 = 15

MSB = SSB / \(df_{1}\) = 84 / 2 = 42

MSE = SSE / \(df_{2}\) = 68 / 15 = 4.53

ANOVA test statistic, f = MSB / MSE = 42 / (68 / 15) = 9.26

Using the f table at \(\alpha\) = 0.05 the critical value is given as F(0.05, 2, 15) = 3.68

As f > F, the null hypothesis is rejected and it can be concluded that there is a difference in the mean growth of the plants.

Answer: Reject the null hypothesis
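
The arithmetic of Example 1 can be checked from the summary values alone. The sketch below is illustrative: the function name `anova_from_summary` is hypothetical, and the inputs are the group means (5, 9, 10), group sizes (6 each), and within-group sums of squares (16, 24, 28) that appear in the calculation above.

```python
def anova_from_summary(means, sizes, within_ss):
    """One way ANOVA from per-group means, sizes, and within-group
    sums of squared deviations."""
    k, n_total = len(means), sum(sizes)
    # Overall mean, weighting each group mean by its sample size
    grand_mean = sum(n * m for n, m in zip(sizes, means)) / n_total
    ssb = sum(n * (m - grand_mean) ** 2 for n, m in zip(sizes, means))
    sse = sum(within_ss)
    msb = ssb / (k - 1)
    mse = sse / (n_total - k)
    return {"mean": grand_mean, "SSB": ssb, "SSE": sse,
            "MSB": msb, "MSE": mse, "f": msb / mse}


example_1 = anova_from_summary(means=[5, 9, 10],
                               sizes=[6, 6, 6],
                               within_ss=[16, 24, 28])
critical_value = 3.68  # F(0.05, 2, 15), read from the f table
print(example_1)
print("Reject the null hypothesis:", example_1["f"] > critical_value)
```

Dividing MSB by the unrounded MSE gives an f value of about 9.26, which is well above the critical value of 3.68, so the decision to reject the null hypothesis holds.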

Example 2: A trial was run to check the effects of different diets. Positive numbers indicate weight loss and negative numbers indicate weight gain. Check if there is an average difference in the weight of people following different diets using an ANOVA Table.

\(H_{0}\): \(\mu_{1}\) = \(\mu_{2}\) = \(\mu_{3}\) = \(\mu_{4}\)

Total mean, \(\overline{X}\) = 3.6

\(n_{1}\) = \(n_{2}\) = \(n_{3}\) = \(n_{4}\) = 5, k = 4

SSB = \(n_{1}(\overline{X}_{1}-\overline{X})^{2}\) + \(n_{2}(\overline{X}_{2}-\overline{X})^{2}\) + \(n_{3}(\overline{X}_{3}-\overline{X})^{2}\) + \(n_{4}(\overline{X}_{4}-\overline{X})^{2}\)

SSE = 21.4 + 10 + 5.4 + 10.6 = 47.4

The ANOVA Table can be constructed as follows:

As no significance level is specified, \(\alpha\) = 0.05 is chosen.

F(0.05, 3, 16) = 3.24

As 8.43 > 3.24, the null hypothesis is rejected and it can be concluded that the mean weight loss differs among the diets.

Example 3: Determine if there is a difference in the mean daily calcium intake for people with normal bone density, osteopenia, and osteoporosis at a 0.05 alpha level. The data was recorded as follows:

Using the ANOVA test the hypothesis is set up as follows:

\(H_{0}\): \(\mu_{1}\) = \(\mu_{2}\) = \(\mu_{3}\)

\(H_{1}\): The means are not all equal

Total mean, \(\overline{X}\) = 817.8

SSB = \(n_{1}(\overline{X}_{1}-\overline{X})^{2}\) + \(n_{2}(\overline{X}_{2}-\overline{X})^{2}\) + \(n_{3}(\overline{X}_{3}-\overline{X})^{2}\)

= 152,477.7

SSE = 130,083.3 + 240,000 + 449,750 = 819,833.3

MSB = SSB / (k - 1) = 152,477.7 / 2 = 76,238.85

MSE = SSE / (N - k) = 819,833.3 / 15 = 54,655.55

ANOVA test statistic, f = MSB / MSE = 76,238.85 / 54,655.55 = 1.395

Using the F table the critical value is F(0.05, 2, 15) = 3.68

As 1.395 < 3.68, the null hypothesis cannot be rejected and it is concluded that there is not enough evidence to show that the mean daily calcium intake of the three groups is different.

Answer: Do not reject the null hypothesis

FAQs on ANOVA Test

What is an ANOVA Test in Statistics?

ANOVA test in statistics refers to a hypothesis test that analyzes the variances of three or more populations to determine if the means are different or not.

How to Set Up the Hypothesis for an ANOVA Test?

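In an ANOVA test the equality of the means of different groups has to be examined. Thus, the hypothesis is set up as follows:

\(H_{0}\): \(\mu_{1}\) = \(\mu_{2}\) = \(\mu_{3}\) = ... = \(\mu_{k}\)

\(H_{1}\): The means are not all equal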

What is the Formula for the ANOVA Test Statistic?

The ANOVA test uses the F statistic. The formula for the test statistic is given as F = mean squares between groups (MSB) / mean squares of errors (MSE).

What is an ANOVA Table?

An ANOVA table is a table that is used to summarize the findings of an ANOVA test. There are 5 columns that consist of the source of variation, the sum of squares, degrees of freedom, mean squares, and the f statistic respectively.

How to Perform an ANOVA Test?

The steps to perform an ANOVA test are as follows:

- Set up the hypothesis.

- Find the means of each group and then determine the overall mean.

- Find the SSB and the corresponding degrees of freedom.

- Determine the SSE and the degrees of freedom.

- Find the MSB and the MSE.

- Divide the MSB by the MSE to find the test statistic.

- Compare the test statistic with the critical value to determine statistical significance.

What is a One Way ANOVA?

One way ANOVA is a type of ANOVA test that is conducted when there is only one independent variable. It is used to compare the means of the various test groups. Such a test can only indicate whether the means differ significantly overall; it cannot determine which groups have the differing means.

What is a Two Way ANOVA?

A two way ANOVA is an extension of a one way ANOVA and is conducted when there are two independent variables. It is used to find the main effect as well as the interaction effect of the different factors.

13.3: One-Factor ANOVA

- Rice University

Learning Objectives

- State what null hypothesis is tested by ANOVA

- State the assumptions made of ANOVA

- Describe the uses of ANOVA

- Discuss the process of ANOVA

Analysis of Variance (ANOVA) is a statistical method used to test differences between two or more means. It may seem odd that the technique is called "Analysis of Variance" rather than "Analysis of Means." As you will see, the name is appropriate because inferences about means are made by analyzing variance.

ANOVA is used to test general rather than specific differences among means.

This section shows how ANOVA can be used to analyze a one-factor between-subjects design. We will use as our main example the "Smiles and Leniency" case study. In this study there were four conditions with \(34\) subjects in each condition. There was one score per subject. The effect of different types of smiles on the leniency shown to a person was investigated. Four different types of smiles (neutral, false, felt, miserable) were investigated.

The null hypothesis tested by ANOVA is that the population means for all conditions are the same. This can be expressed as follows:

\[H_0: \mu _1 = \mu _2 = ... = \mu _k\]

where \(H_0\) is the null hypothesis and \(k\) is the number of conditions. In the "Smiles and Leniency" study, \(k = 4\) and the null hypothesis is

\[H_0: \mu _{false} = \mu _{felt} = \mu _{miserable} = \mu _{neutral}\]

If the null hypothesis is rejected, then it can be concluded that at least one of the population means is different from at least one other population mean.