ChatGPT Cheating: What to Do When It Happens

The latest version of ChatGPT has only been around for a few months. But Aaron Romoslawski, the assistant principal at a Michigan high school, has already seen a handful of students trying to pass off writing produced by the artificial-intelligence-powered tool as their own work.

The signs are almost always obvious, Romoslawski said. Typically, a student will have been turning in work of a certain quality throughout the year, and then “suddenly, we’re seeing these much higher quality assignments pop up out of nowhere,” he said.

Romoslawski and his colleagues don’t start with a punitive response, however. “We see it as an opportunity to have a conversation.”

Those “don’t let the robot do your homework” talks are becoming all too common in schools these days. More than a quarter of K-12 teachers have caught their students cheating using ChatGPT, according to a recent survey by study.com, an online learning platform.

What’s the best way for educators to handle this high-tech form of plagiarism? Here are six tips drawn from educators and experts, including a handy guide created by CommonLit and Quill, two education technology nonprofits focused on building students’ literacy skills.

1. Make your expectations very clear

Students need to know what exactly constitutes cheating, whether AI tools are involved or not.

“Every school or district needs to put stakes in the ground [on a] policy around academic dishonesty, and what that means specifically,” said Michelle Brown, the founder and CEO of CommonLit. Schools can decide how much or how little students can rely on AI to make cosmetic changes or do research, she said, and should make that clear to students. She recommended “the heart of the policy [be] about allowing students to do intellectually rigorous work.”

2. Talk to students about AI in general and ChatGPT in particular

If it appears a student may have passed off ChatGPT’s work as their own, sit down with them one on one, CommonLit and Quill recommend. Then talk about the tool and AI in general. Questions could include: Have you heard of ChatGPT? What are other students saying about it? What do you think it should be used for? Discuss the promises—and potential pitfalls—of artificial intelligence.

“One of the big concerns right now is that teachers want to encourage curiosity about AI,” said Peter Gault, Quill’s founder and executive director. Strict discipline at this point “doesn’t sit right with teachers where there’s a lot of natural curiosity here.”

Romoslawski uses that approach. And so far, he hasn’t had a student try to use ChatGPT on an assignment twice. “We’ve gotten to the point where it’s a conversation and students are redoing the assignment in their own words,” he said.

3. If students use ChatGPT for an assignment, they must attribute what material they used from it

If students are allowed to use ChatGPT or another AI tool for research or other help, let them know how and why they should credit that information, Brown said. Since users can’t link back to a ChatGPT response, she suggested students share the prompt they used to generate the information in their citation.

When Romoslawski and his colleagues suspect a student used ChatGPT to complete an assignment when they weren’t supposed to, he also brings up citation, in part as a way into the conversation.

“We ask the students ‘did you use any resources that you don’t cite?’” he said. “And often, the student says ‘yes.’ And so, then it creates a conversation about how to properly cite and attribute and why we do that.”

4. Ask students directly if they used ChatGPT

Don’t beat around the bush if you suspect a student may have used AI to cheat. Ask them in a very straightforward way if they did, CommonLit and Quill say.

If students say “yes,” Romoslawski likes to get a sense of why. “More often than not, the student was just struggling on the assignment. They had a roadblock. They didn’t know what to do,” he said. “They were crunched for time, because we’re a high-achieving high school and our students are taking some pretty rigorous courses. This was their third homework assignment of the night and they just wanted to get through it.”

If the student says “no,” but you still suspect them of cheating, ask if they got other help with the assignment. If they still say “no,” explain your concerns by pointing out differences between the work they turned in and their previous writing, CommonLit and Quill suggest.

5. Don’t rely on ChatGPT detectors alone to determine if there was cheating

There are a number of tools (including one from OpenAI, ChatGPT’s developer) that purport to be able to distinguish an AI-crafted story or essay from one written by a human. But most of these detectors don’t publish their accuracy rates. And those that do are ineffective about 10 to 20 percent of the time.

“You can’t fully rely on that as the sole proof of academic dishonesty,” Brown said.

6. Make it clear why learning to write on your own is important

Students in general, and particularly students who take advantage of AI to cheat, need to understand what they are missing out on when they take a technology-enabled shortcut. Educators should try to persuade students that learning to write on their own will help them reason and think, or be critical to future job success, Gault said.

But others will need a more immediate incentive. The strongest argument one teacher came up with, according to Quill’s Gault? Tell students that learning to write will make them more persuasive, and therefore, “you can convince your parents to do what you want.”

A version of this article appeared in the March 08, 2023 edition of Education Week as ChatGPT Cheating: What to Do When It Happens


The ultimate homework cheat? How teachers are facing up to ChatGPT

ChatGPT took the internet by storm when it launched in late 2022, impressing by generating stories, poems, coding solutions, and beyond. Its potential to answer questions has seen New York City's education board ban it from schools - but could it really provide a homework shortcut?

By Tom Acres, technology reporter

Monday 9 January 2023 13:11, UK

"Have I seen this somewhere before?"

It's a question teachers have had to ask themselves while marking assignments since time immemorial.

But never mind students trawling through Wikipedia, or perusing SparkNotes for some Great Gatsby analysis, the back end of 2022 saw another challenge emerge for schools: ChatGPT.

The online chatbot, which can generate realistic responses on a whim, took the world by storm with its ability to do everything from solving computer bugs to helping write a Sky News article about itself.

Last week, concerned about cheating students, America's largest education department banned it.

New York City's teaching authority said while it could offer "quick and easy answers to questions, it does not build critical-thinking and problem-solving skills, which are essential for academic and lifelong success".

Of course, that's not going to stop pupils using it at home - but could they really use it as a homework shortcut?


Teachers vs ChatGPT - round one

First up, Sky News asked a secondary school science teacher from Essex, who was not familiar with the bot, to feed ChatGPT a homework question.

Galaxies contain billions of stars. Compare the formation and life cycles of stars with a similar mass to the Sun to stars with a much greater mass than the Sun.

It's fair to say that ChatGPT let the mask slip almost immediately, as you can see in the images below.

Asking ChatGPT to answer the same question "to secondary school standard" prompted another detailed response.

The teacher's assessment?

"Well, this is definitely more detailed than any of my students. It does go beyond what you'd expect for GCSE, so I would be very suspicious if someone submitted it. I would assume that they'd copied and pasted from somewhere."

Teachers vs ChatGPT - round two

Next was a Kent primary school teacher, also unfamiliar with ChatGPT, who gave it a recent homework task.

Research a famous Londoner and write a biography of their lives, including their childhood and their career achievements.

No problem, said ChatGPT, though it's fair to say that any nine-year-old who submitted the answer below is either being fast-tracked to university or going straight into a lunchtime detention.

"Even just glancing at that, I'd say they copied it straight off the internet," said the teacher.

"No 11-year-old knows the word tumultuous."

'Key decisions' facing schools

So just as copying straight from a more familiar website is going to set alarm bells ringing for teachers, so too would lifting verbatim from ChatGPT.

But pupils are among the most internet-savvy people around, and ChatGPT's ability to instantly churn out seemingly textbook-level responses will still need to be monitored, teachers say.

Jane Basnett, director of digital learning at Downe House School in Berkshire, told Sky News the chatbot presented schools with some "key decisions" to make.

"As with all technology, schools have to teach students how to use technology properly," she said.

"So, with ChatGPT, students need to have the knowledge to know whether the work produced is any good, which is why we need to teach students to be discerning."


Given its rapid emergence, Ms Basnett is already exploring how her school's anti-plagiarism systems will cope with auto-generated essays.

But just as teachers must consider teaching students about the benefits and pitfalls of using AI, Ms Basnett said her colleagues should also be open to its potential.

"ChatGPT is incredibly powerful and as a teacher I can see some benefits," she said.

"For example, I can type in a request to create a series of lessons on a particular grammar point, and it will create a lesson for me. It would take a teacher to analyse the created lesson and amend it, because the suggested lesson, whilst not bad, was not ideal. But, the key elements were there and it could be really useful.

"I could imagine using a created essay from ChatGPT and working through it with my students to examine the merits and faults of the essay."


Dr Peter Van der Putten, assistant professor of AI at Leiden University in the Netherlands, said institutions which chose to prohibit or ignore the technology would only be burying their head in the sand.

"It's there, just how like Google is there," said Dr Van der Putten.

"You can write it into your policies for preventing plagiarism, but it's a reality that the tool exists.

"Sometimes you do need to embrace these things, but be very clear about when you don't want it to be used."

'Bull****er on steroids'

For students and teachers alike, it's an opportunity to improve their digital literacy.

While it has proved its worth when tasked with being creative, such as to problem-solve or come up with ideas, true comprehension and understanding remain beyond it.

Developer OpenAI acknowledges answers can be "overly verbose" and even "incorrect or nonsensical", despite sounding legitimate in most cases, like some sort of desperate, underprepared job interviewee.

As Dr Van der Putten says, ChatGPT is often little more than a "bull*****er on steroids".

Teaching students about those limitations is the best way to ensure they don't over-rely on it - even in a pinch.


Universities, schools react to student use of generative AI programs including ChatGPT

Uni student Daniel hesitates when asked if he has used ChatGPT to cheat on assignments before.

His answer is "no", but the 22-year-old feels the need to explain it further.

"I don't think it's cheating," he said.

"As long as you accredit it and use it for like a foundation for your assignment I think it's fine."

Schools and universities have been scrambling to keep up since ChatGPT and other generative AI language programs were released in late 2022.

University student Lan Lang, 18, said quite a few people used generative AI for assessments such as English assignments.

"I do get Chat to like explain stuff to me if teachers don't really explain it that well," Lan Lang said.

She said she used AI detection software on her work.

"We put it through Turnitin, which just basically detects if you've used AI, or if you've copied off anyone else's work," she said.

Caught out in schools

High school teacher Ryan Miller said he wasn't seeing a lot of generative AI used in the Year 12 and Year 8 classes he taught but understood from colleagues other age groups were using it.

"What I hear, when I'm in the staff room, is that a lot of Year 9s, 10s, [and] 11s are pushing the boundaries," Mr Miller said.

He said Year 12 students tended to be more careful after being warned at the start of the year and constantly reminded of consequences.

"Basically, they're told if their work is seen to be made ... predominantly with AI, that it won't be assessed," he said.

Mr Miller said Year 8s, being a little newer to the school, hadn't used it as much.

He said teachers tended to give students a warning if they were detected using generative AI.

"And nine times out of 10 they'll probably own up to it and say, 'Yeah, look, it wasn't ... 100 per cent my own work'," he said.

He said students would rewrite the work so it could be assessed again.

"But it's sort of a one warning per kid, per year for most teachers, I think," he said.

Fellow teacher Hugh Kinnane said generative AI was probably "pretty rife" in assignment work.

He said he most regularly saw it cropping up with students who were trying to avoid doing any work.

"And then it's a last-minute job," he said.

Drawing the line

University of Adelaide Deputy Vice-Chancellor Academic Jennie Shaw said while her university embraced the use of AI, it could still be used to cheat.

"So we're saying, of course, that is not allowed," Dr Shaw said.

She said generative AI was included in academic integrity modules for first-year students.

"We make it really clear to students what is OK and what is not OK," she said.

Dr Shaw said there were instances when students were encouraged to use generative AI and then critique the quality of its answer.

"What we are asking our students and our staff to do is to reference when they do use it," she said.

She said it was a requirement that as much content as possible was checked by similarity detection software.

According to the website of Turnitin, whose software is used by the University of Adelaide and many other universities across Australia to detect AI-generated content, the company is committed to a false positive rate of less than 1 per cent to ensure no students are falsely accused of misconduct.
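
To put a rate like that in concrete terms, the short sketch below multiplies a false positive rate by a number of honestly written submissions. The function name and the submission counts are illustrative assumptions, not Turnitin figures, and the 1 per cent value is used only as the upper bound quoted above.

```python
def expected_false_flags(honest_submissions: int, false_positive_rate: float) -> float:
    """Expected number of honestly written submissions wrongly flagged as AI-generated."""
    if honest_submissions < 0:
        raise ValueError("honest_submissions must be non-negative")
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return honest_submissions * false_positive_rate


if __name__ == "__main__":
    # Hypothetical cohort sizes, chosen only for illustration.
    for count in (200, 5_000, 40_000):
        flagged = expected_false_flags(count, 0.01)  # 0.01 = the 1 per cent upper bound
        print(f"{count} honest submissions -> up to about {flagged:.0f} wrongly flagged")
```

Even a small rate can translate into a noticeable number of students once it is applied across a whole institution, which is why the raw percentage alone says little about how often an individual accusation is mistaken.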

AI arms race

The software has put students at the centre of a battle for superiority between programs generating answers for their assignments and those designed to catch them out.

And according to Australian Institute for Machine Learning senior lecturer Feras Dayoub, some are getting caught in the crossfire.

He said companies that created AI chatbots were trying to be undetectable while companies that created AI detection software wanted to detect everything.

"There will be a lot of false positives," Dr Dayoub said.

He said it could be an unpleasant experience for the student if the detector was wrong.

University student Ethan, 19, said single words were sometimes highlighted in his Turnitin submissions.

"It can be a bit inaccurate," Ethan said.

Dr Shaw said she understood the detection software had its faults.

"We would find probably two thirds of anything they pick up saying there's some unacceptably high levels of similarity here is often just picking up patterns in language," she said.

"I know some universities have chosen to turn it off because it does turn up lots of false positives.

"We're choosing to use it at this point."

Changing education

The Department of Education released a nationwide framework in December last year for the use of generative AI in schools.

Dr Shaw said the technology was changing the way teachers taught and students learned.

"But we still need students to have deep knowledge," she said.

"We need them to know how to use the tools in their profession.

"And again, one of those in many professions will now be generative AI, and we need them to be able to call out when it's wrong."

Dr Dayoub said he would prefer a future in which there was no need for detectors because people had changed the way they taught and assessed.

He said another option would be to take a stricter approach, where students did the work themselves and there would be no help.

"In that case you need the detectors so there will be a huge market for these detectors and it will become a race," he said.

"I don't like that future."


'Everybody is cheating': Why this teacher has adopted an open ChatGPT policy

Mary Louise Kelly

Not all educators are shying away from artificial intelligence in the classroom. Jeff Pachoud/AFP via Getty Images

Ethan Mollick has a message for the humans and the machines: can't we all just get along?

After all, we are now officially in an A.I. world and we're going to have to share it, reasons the associate professor at the University of Pennsylvania's prestigious Wharton School.

"This was a sudden change, right? There is a lot of good stuff that we are going to have to do differently, but I think we could solve the problems of how we teach people to write in a world with ChatGPT," Mollick told NPR.

Ever since the chatbot ChatGPT launched in November, educators have raised concerns it could facilitate cheating.

Some school districts have banned access to the bot, and not without reason. The artificial intelligence tool from the company OpenAI can compose poetry. It can write computer code. It can maybe even pass an MBA exam.

One Wharton professor recently fed the chatbot the final exam questions for a core MBA course and found that, despite some surprising math errors, he would have given it a B or a B-minus in the class.


And yet, not all educators are shying away from the bot.

This year, Mollick is not only allowing his students to use ChatGPT, they are required to. And he has formally adopted an A.I. policy into his syllabus for the first time.

He teaches classes in entrepreneurship and innovation, and said the early indications were the move was going great.

"The truth is, I probably couldn't have stopped them even if I didn't require it," Mollick said.

This week he ran a session where students were asked to come up with ideas for their class project. Almost everyone had ChatGPT running and was asking it to generate projects, then interrogating the bot's ideas with further prompts.

"And the ideas so far are great, partially as a result of that set of interactions," Mollick said.

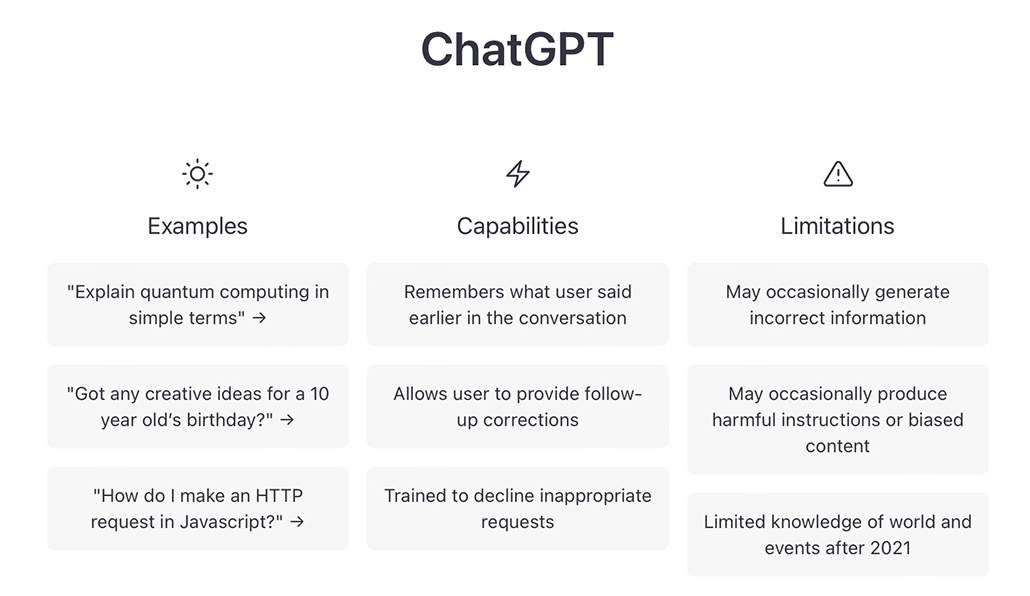

Users experimenting with the chatbot are warned before testing the tool that ChatGPT "may occasionally generate incorrect or misleading information." OpenAI/Screenshot by NPR

He readily admits he alternates between enthusiasm and anxiety about how artificial intelligence can change assessments in the classroom, but he believes educators need to move with the times.

"We taught people how to do math in a world with calculators," he said. Now the challenge is for educators to teach students how the world has changed again, and how they can adapt to that.

Mollick's new policy states that using A.I. is an "emerging skill"; that it can be wrong and students should check its results against other sources; and that they will be responsible for any errors or omissions provided by the tool.

And, perhaps most importantly, students need to acknowledge when and how they have used it.

"Failure to do so is in violation of academic honesty policies," the policy reads.


Mollick isn't the first to try to put guardrails in place for a post-ChatGPT world.

Earlier this month, 22-year-old Princeton student Edward Tian created an app to detect if something had been written by a machine. Named GPTZero, it was so popular that when he launched it, the app crashed from overuse.

"Humans deserve to know when something is written by a human or written by a machine," Tian told NPR of his motivation.

Mollick agrees, but isn't convinced that educators can ever truly stop cheating.

He cites a survey of Stanford students that found many had already used ChatGPT in their final exams, and he points to estimates that thousands of people in places like Kenya are writing essays on behalf of students abroad.

"I think everybody is cheating ... I mean, it's happening. So what I'm asking students to do is just be honest with me," he said. "Tell me what they use ChatGPT for, tell me what they used as prompts to get it to do what they want, and that's all I'm asking from them. We're in a world where this is happening, but now it's just going to be at an even grander scale."

"I don't think human nature changes as a result of ChatGPT. I think capability did."

The radio interview with Ethan Mollick was produced by Gabe O'Connor and edited by Christopher Intagliata.

Faced with criticism it's a haven for cheaters, ChatGPT adds tool to catch them

Launch of text classifier follows weeks of criticism.


The maker of ChatGPT is trying to curb its reputation as a freewheeling cheating machine with a new tool that can help teachers detect if a student or artificial intelligence wrote that homework.

The new AI Text Classifier launched Tuesday by OpenAI follows a weeks-long discussion at schools and colleges over fears that ChatGPT's ability to write just about anything on command could fuel academic dishonesty and hinder learning.

OpenAI cautions that its new tool — like others already available — is not foolproof. The method for detecting AI-written text "is imperfect and it will be wrong sometimes," said Jan Leike, head of OpenAI's alignment team tasked to make its systems safer.

"Because of that, it shouldn't be solely relied upon when making decisions," Leike said.

Teenagers and college students were among the millions of people who began experimenting with ChatGPT after it launched Nov. 30 as a free application on OpenAI's website. And while many found ways to use it creatively and harmlessly, the ease with which it could answer take-home test questions and assist with other assignments sparked a panic among some educators.

By the time schools opened for the new year, New York City, Los Angeles and other big public school districts began to block its use in classrooms and on school devices.


The Seattle Public Schools district initially blocked ChatGPT on all school devices in December but then opened access to educators who want to use it as a teaching tool, said Tim Robinson, the district spokesman.

"We can't afford to ignore it," Robinson said.

The district is also discussing possibly expanding the use of ChatGPT into classrooms to let teachers use it to train students to be better critical thinkers and to let students use the application as a "personal tutor" or to help generate new ideas when working on an assignment, Robinson said.

School districts around the country say they are seeing the conversation around ChatGPT evolve quickly.

"The initial reaction was 'OMG, how are we going to stem the tide of all the cheating that will happen with ChatGPT?'" said Devin Page, a technology specialist with the Calvert County Public School District in Maryland. Now there is a growing realization that "this is the future" and blocking it is not the solution, he said.

"I think we would be naive if we were not aware of the dangers this tool poses, but we also would fail to serve our students if we ban them and us from using it for all its potential power," said Page, who thinks districts like his own will eventually unblock ChatGPT, especially once the company's detection service is in place.

OpenAI emphasized the limitations of its detection tool in a blog post Tuesday, but said that in addition to deterring plagiarism, it could help to detect automated disinformation campaigns and other misuse of AI to mimic humans.

The longer a passage of text, the better the tool is at detecting if an AI or human wrote something. Type in any text — a college admissions essay, or a literary analysis of Ralph Ellison's Invisible Man — and the tool will label it as either "very unlikely, unlikely, unclear if it is, possibly, or likely" AI-generated.

But much like ChatGPT itself, which was trained on a huge trove of digitized books, newspapers and online writings but often confidently spits out falsehoods or nonsense, it's not easy to interpret how it came up with a result.

"We don't fundamentally know what kind of pattern it pays attention to, or how it works internally," Leike said. "There's really not much we could say at this point about how the classifier actually works."

Higher education institutions around the world also have begun debating responsible use of AI technology. Sciences Po, one of France's most prestigious universities, prohibited its use last week and warned that anyone found surreptitiously using ChatGPT and other AI tools to produce written or oral work could be banned from Sciences Po and other institutions.

In response to the backlash, OpenAI said it has been working for several weeks to craft new guidelines to help educators.

"Like many other technologies, it may be that one district decides that it's inappropriate for use in their classrooms," said OpenAI policy researcher Lama Ahmad. "We don't really push them one way or another. We just want to give them the information that they need to be able to make the right decisions for them."


It's an unusually public role for the research-oriented San Francisco startup, now backed by billions of dollars in investment from its partner Microsoft and facing growing interest from the public and governments.

France's digital economy minister Jean-Noel Barrot recently met in California with OpenAI executives, including CEO Sam Altman, and a week later told an audience at the World Economic Forum in Davos, Switzerland that he was optimistic about the technology. But the government minister — a former professor at the Massachusetts Institute of Technology and the French business school HEC in Paris — said there are also difficult ethical questions that will need to be addressed.

"So if you're in the law faculty, there is room for concern because obviously ChatGPT, among other tools, will be able to deliver exams that are relatively impressive," he said. "If you are in the economics faculty, then you're fine because ChatGPT will have a hard time finding or delivering something that is expected when you are in a graduate-level economics faculty."

He said it will be increasingly important for users to understand the basics of how these systems work so they know what biases might exist.

3 ways to use ChatGPT to help students learn – and not cheat

Professor of Educational Psychology and Learning Technologies, The Ohio State University

Professor of Educational Psychology and Quantitative Research, Evaluation, and Measurement, The Ohio State University

Disclosure statement

The authors do not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and have disclosed no relevant affiliations beyond their academic appointment.

The Ohio State University provides funding as a founding partner of The Conversation US.


Since ChatGPT can engage in conversation and generate essays, computer code, charts and graphs that closely resemble those created by humans, educators worry students may use it to cheat. A growing number of school districts across the country have decided to block access to ChatGPT on computers and networks.

As professors of educational psychology and educational technology, we’ve found that the main reason students cheat is their academic motivation. For example, sometimes students are just motivated to get a high grade, whereas other times they are motivated to learn all that they can about a topic.

The decision to cheat or not, therefore, often relates to how academic assignments and tests are constructed and assessed, not to the availability of technological shortcuts. When they have the opportunity to rewrite an essay or retake a test if they don’t do well initially, students are less likely to cheat.

We believe teachers can use ChatGPT to increase their students’ motivation for learning and actually prevent cheating. Here are three strategies for doing that.

1. Treat ChatGPT as a learning partner

Our research demonstrates that students are more likely to cheat when assignments are designed in ways that encourage them to outperform their classmates. In contrast, students are less likely to cheat when teachers assign academic tasks that prompt them to work collaboratively and to focus on mastering content instead of getting a good grade.

Treating ChatGPT as a learning partner can help teachers shift the focus among their students from competition and performance to collaboration and mastery.

For example, a science teacher can assign students to work with ChatGPT to design a hydroponic vegetable garden. In this scenario, students could engage with ChatGPT to discuss the growing requirements for vegetables, brainstorm design ideas for a hydroponic system and analyze pros and cons of the design.

These activities are designed to promote mastery of content as they focus on the processes of learning rather than just the final grade.

2. Use ChatGPT to boost confidence

Research shows that when students feel confident that they can successfully do the work assigned to them, they are less likely to cheat. And an important way to boost students’ confidence is to provide them with opportunities to experience success.

ChatGPT can facilitate such experiences by offering students individualized support and breaking down complex problems into smaller challenges or tasks.

For example, suppose students are asked to attempt to design a hypothetical vehicle that can use gasoline more efficiently than a traditional car. Students who struggle with the project – and might be inclined to cheat – can use ChatGPT to break down the larger problem into smaller tasks. ChatGPT might suggest they first develop an overall concept for the vehicle before determining the size and weight of the vehicle and deciding what type of fuel will be used. Teachers could also ask students to compare the steps suggested by ChatGPT with steps that are recommended by other sources.

3. Prompt ChatGPT to give supportive feedback

It is well documented that personalized feedback supports students’ positive emotions, including self-confidence.

ChatGPT can be directed to deliver feedback using positive, empathetic and encouraging language. For example, if a student completes a math problem incorrectly, instead of merely telling the student “You are wrong and the correct answer is …,” ChatGPT may initiate a conversation with the student. Here’s a real response generated by ChatGPT: “Your answer is not correct, but it’s completely normal to encounter occasional errors or misconceptions along the way. Don’t be discouraged by this small setback; you’re on the right track! I’m here to support you and answer any questions you may have. You’re doing great!”

This will help students feel supported and understood while receiving feedback for improvement. Teachers can easily show students how to direct ChatGPT to provide them such feedback.

We believe that when teachers use ChatGPT and other AI chatbots thoughtfully – and also encourage students to use these tools responsibly in their schoolwork – students have an incentive to learn more and cheat less.



ChatGPT Cheating Unveiled: Navigating the AI Landscape in Education

Artificial Intelligence (AI) has rapidly integrated into our everyday lives, revolutionizing various fields, including education. This integration has brought along numerous benefits, making processes more efficient and accessible. However, alongside these advancements come significant challenges and ethical dilemmas. A prominent issue that has emerged in the academic sector is the use of AI tools like ChatGPT for cheating, commonly referred to as ChatGPT cheating.

Understanding the risks and consequences of ChatGPT cheating is crucial for students, educators, and AI enthusiasts alike. The question “Is using AI cheating?” often arises in academic discussions. AI tools have the potential to assist students in their learning journey, but when misused, they can compromise academic integrity. AI cheating is becoming increasingly prevalent as more students discover the ease with which AI can produce essays, solve problems, and generate content.

In this blog post, we will delve into what ChatGPT is and how it works in an academic context. ChatGPT, a sophisticated language model, can generate human-like text based on the input it receives. While it can be a valuable educational tool, its allure for students lies in the possibility of using it to complete assignments and exams effortlessly, which amounts to using AI to cheat.

The impacts of ChatGPT cheating are far-reaching. For students, reliance on AI for academic tasks can hinder the development of critical thinking and problem-solving skills. For educators, detecting AI-generated work poses a significant challenge, complicating the assessment process. Furthermore, widespread AI cheating can undermine the value of academic qualifications and erode trust in educational institutions.

Ultimately, the question “Is using AI cheating?” underscores the need for a balanced approach to AI in education. While AI tools like ChatGPT offer substantial benefits, it is imperative to address the ethical concerns and develop strategies to prevent their misuse. By fostering an environment of academic integrity and promoting responsible AI usage, we can harness the potential of AI without compromising the principles of education.

What is ChatGPT?

ChatGPT is an advanced AI language model developed by OpenAI, designed to understand and generate human-like text based on user prompts. It has been trained on diverse datasets, which allows it to provide coherent and contextually relevant responses. Unlike traditional search engines that retrieve information from indexed web pages, ChatGPT generates text by predicting the most suitable words to follow a given input, making its interactions more conversational and fluid.

A common question that arises is “Is using AI cheating?” The debate focuses on whether using tools like ChatGPT constitutes cheating in various contexts. While some argue that relying on ChatGPT for tasks like writing or problem-solving could be seen as ChatGPT cheating, others believe that it enhances productivity and offers new ways to interact with technology.

Key features of ChatGPT include natural language processing (NLP) capabilities, the ability to engage in extended conversations, and the capacity to provide detailed, nuanced responses. These features make ChatGPT a powerful tool, offering a more interactive and personalized user experience compared to traditional resources. The ethical considerations around AI cheating continue to evolve as the technology becomes more integrated into daily life.

How ChatGPT Works in an Academic Context

ChatGPT leverages natural language processing (NLP) to effectively process and comprehend user inputs, which allows it to generate relevant and contextually appropriate responses. Its extensive knowledge base is derived from vast amounts of training data, enabling it to provide information on a wide range of topics. This makes ChatGPT an incredibly useful tool for students who interact with it through prompts and queries, asking questions or seeking assistance with various academic tasks.

In an academic setting, ChatGPT offers a multitude of benefits. It can assist with essay writing, research, problem-solving, and even exam preparation. For instance, a student struggling to draft an essay can use ChatGPT to generate coherent text that serves as either a primary draft or an inspiration for their own writing. Similarly, students facing difficulty with research can use the tool to gather summaries of complex topics or to generate a list of scholarly articles on the subject matter. ChatGPT's ability to produce well-structured and informative responses makes it a valuable asset for quick, efficient academic assistance.

However, the convenience and capabilities of ChatGPT also introduce significant ethical considerations. The term "ChatGPT cheating" has emerged as a growing concern among educators and academic institutions. When used unethically, ChatGPT can facilitate academic dishonesty. For example, a student might misuse this AI tool to generate entire essays or solve complex problems without contributing their own effort, effectively engaging in AI cheating. This unethical use undermines the educational process, which is designed to develop critical thinking and problem-solving skills.

Moreover, the ease with which ChatGPT can produce high-quality text makes it tempting for students to rely on the tool rather than putting in the work to understand the material. This misuse can lead to a superficial understanding of the subject matter and can be detrimental to a student's overall educational experience. The risk of AI cheating is not limited to essay writing alone; students might also use the tool to get answers for take-home exams or other assignments, bypassing the learning process entirely.

Educational institutions are becoming increasingly aware of the potential for ChatGPT cheating and are taking steps to mitigate this risk. Some are incorporating stricter plagiarism detection measures, while others are focusing on educating students about the ethical use of AI tools. It's important for students to understand that while ChatGPT can be a valuable academic aid, it should be used responsibly and ethically.

In conclusion, ChatGPT has the potential to be a remarkable tool for enhancing the academic experience. However, the risks associated with AI cheating cannot be ignored. Both students and educators must work together to ensure that this technology is used to support learning and intellectual growth, rather than to undermine it.

The Allure of ChatGPT for Students

The ease of use and quick response time of ChatGPT make it an attractive option for students. With just a few clicks, they can generate essays, answer homework questions, and gain insights on complex topics. The perceived anonymity of interacting with an AI tool further adds to its allure, as students may believe they can avoid detection. However, this convenience comes at a cost. Relying on ChatGPT for academic tasks can hinder the development of critical thinking, research skills, and independent problem-solving abilities. It's essential for students to recognize the difference between legitimate use and cheating.

ChatGPT has revolutionized the way students approach their studies by providing instant answers and solutions. This AI tool can be especially helpful when students face tight deadlines or need quick clarification on a difficult topic. The efficiency and accessibility of ChatGPT are undeniably appealing. Yet, it's crucial to consider the implications of such reliance. Utilizing ChatGPT to complete assignments can easily cross the line into AI cheating. When students depend too heavily on this technology, they risk missing out on the educational experiences that build essential skills.

AI cheating through ChatGPT is becoming a growing concern in the academic world. Educators are increasingly worried about students using AI tools to complete their work, bypassing the learning process. This form of cheating undermines the educational system's integrity and the value of genuine learning. When students use ChatGPT to generate essays or solve problems without understanding the underlying concepts, they cheat themselves out of valuable educational opportunities.

Moreover, the issue of AI cheating with ChatGPT raises ethical questions. Is it fair for students to submit AI-generated work as their own? While the technology can offer guidance and support, it should not replace the effort required to learn and master new material. Educators must address these ethical concerns by setting clear guidelines on the acceptable use of AI tools like ChatGPT.

In conclusion, ChatGPT offers incredible benefits for students, providing quick answers and easing the workload. However, it's essential to distinguish between legitimate use and AI cheating. Over-reliance on this technology can hinder the development of critical thinking and problem-solving skills, ultimately impacting a student's educational journey. Both students and educators must navigate the fine line between leveraging AI for learning and falling into the trap of AI cheating. By recognizing and addressing these challenges, we can ensure that the use of ChatGPT and similar tools enhances education rather than detracts from it.

Defining Academic Integrity in the Age of AI

Academic integrity traditionally focuses on honesty, originality, and the avoidance of plagiarism. However, the advent of AI blurs these lines, making it challenging to define what constitutes cheating. While using AI as a tool is acceptable, passing off AI-generated content as one's own work violates academic integrity.

Institutions are grappling with these new challenges, revising policies to address the use of AI in academics. Clear guidelines and education on ethical AI use are vital to maintaining academic standards and integrity in this evolving landscape.

Risks of Using ChatGPT for Academic Cheating

The risks of using ChatGPT for cheating are multifaceted. In the short term, students risk detection by instructors or plagiarism detection software, leading to academic penalties such as course failure, suspension, or expulsion. The long-term consequences are even more severe, including skill deficits, knowledge gaps, and ethical compromises that can have lasting professional repercussions.

Students who rely on ChatGPT for academic tasks may struggle to develop essential skills, affecting their personal and professional growth. Additionally, forming habits of dishonesty can impact their future career opportunities and ethical standards in their chosen fields.

Consequences of ChatGPT Cheating

The consequences of ChatGPT cheating extend beyond individual students. For educational institutions, widespread cheating can damage academic reputation, lead to accreditation challenges, and necessitate increased resource allocation for detection and prevention measures. This erosion of trust in academic credentials can have broader societal impacts, affecting workforce preparedness and ethical standards in professional fields.

Individuals who cheat using ChatGPT may experience poor learning outcomes, stunted personal development, and limited future opportunities. Educational institutions face the challenge of maintaining their integrity and credibility, while society at large grapples with the implications of diminished trust in educational credentials.

Detection Methods for ChatGPT-Generated Content

Detecting ChatGPT-generated content is a growing concern for educators. Linguistic analysis tools and AI-powered detection software can help identify AI-generated text by analyzing patterns and inconsistencies. Instructors can also employ strategies such as oral examinations and in-class writing assignments to verify student knowledge and authenticity.

These detection methods are crucial in upholding academic integrity and ensuring that students are genuinely acquiring the skills and knowledge they need for their future endeavors.

Alternatives to Cheating with ChatGPT

Rather than resorting to cheating, students can use AI tools like ChatGPT for legitimate educational purposes. ChatGPT can be a valuable brainstorming tool, helping students refine research questions or generate practice problems. Developing critical thinking and research skills through proper use of AI can enhance learning and academic performance.

Seeking academic support, such as tutoring, writing centers, and office hours, provides students with the help they need without compromising their integrity. Encouraging the ethical use of AI tools can foster a culture of honesty and excellence in education.

In conclusion, while ChatGPT offers incredible potential for enhancing educational experiences, its misuse for cheating undermines academic integrity and personal growth. Understanding the risks and consequences of ChatGPT cheating is essential for students, educators, and AI enthusiasts alike.

Maintaining academic integrity in the age of AI requires clear guidelines, ethical education, and robust detection methods. By using AI tools responsibly, students can enhance their learning experiences and prepare themselves for future success. For those seeking further guidance, exploring ethical AI use and academic support services can provide valuable insights and resources.



Science News Explores

Think twice before using ChatGPT for help with homework

This new AI tool talks a lot like a person — but still makes mistakes

ChatGPT is impressive and can be quite useful. It can help people write text, for instance, and code. However, “it’s not magic,” says Casey Fiesler. In fact, it often seems intelligent and confident while making mistakes — and sometimes parroting biases.

Glenn Harvey


By Kathryn Hulick

February 16, 2023 at 6:30 am

“We need to talk,” Brett Vogelsinger said. A student had just asked for feedback on an essay. One paragraph stood out. Vogelsinger, a 9th-grade English teacher in Doylestown, Pa., realized that the student hadn’t written the piece himself. He had used ChatGPT. It’s a new artificial intelligence (AI) tool. It answers questions. It writes code. And it can generate long essays and stories.

The company OpenAI made ChatGPT available for free at the end of November 2022. Within a week, it had more than a million users. Other tech companies are racing to put out similar tools. Google launched Bard in early February. The AI company Anthropic is testing a new chatbot named Claude. And another AI company, DeepMind, is working on a bot called Sparrow.

ChatGPT marks the beginning of a new wave of AI that will disrupt education. Whether that’s a good or bad thing remains to be seen.

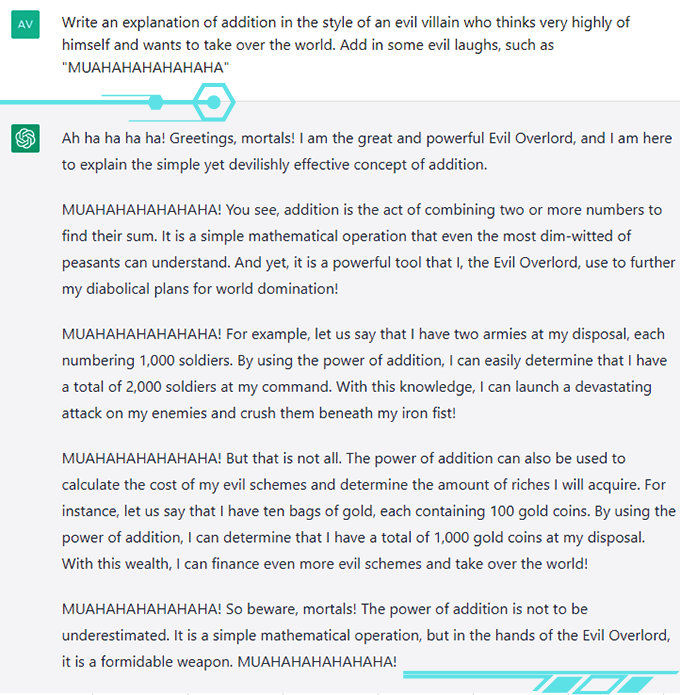

Some people have been using ChatGPT out of curiosity or for entertainment. I asked it to invent a silly excuse for not doing homework in the style of a medieval proclamation. In less than a second, it offered me: “Hark! Thy servant was beset by a horde of mischievous leprechauns, who didst steal mine quill and parchment, rendering me unable to complete mine homework.”

But students can also use it to cheat. When Stanford University’s student-run newspaper polled students at the university, 17 percent said they had used ChatGPT on assignments or exams during the end of 2022. Some admitted to submitting the chatbot’s writing as their own. For now, these students and others are probably getting away with cheating.

And that’s because ChatGPT does an excellent job. “It can outperform a lot of middle-school kids,” Vogelsinger says. He probably wouldn’t have known his student used it — except for one thing. “He copied and pasted the prompt,” says Vogelsinger.

This essay was still a work in progress. So Vogelsinger didn’t see this as cheating. Instead, he saw an opportunity. Now, the student is working with the AI to write that essay. It’s helping the student develop his writing and research skills.

“We’re color-coding,” says Vogelsinger. The parts the student writes are in green. Those parts that ChatGPT writes are in blue. Vogelsinger is helping the student pick and choose only a few sentences from the AI to keep. He’s allowing other students to collaborate with the tool as well. Most aren’t using it regularly, but a few kids really like it. Vogelsinger thinks it has helped them get started and to focus their ideas.

This story had a happy ending.

But at many schools and universities, educators are struggling with how to handle ChatGPT and other tools like it. In early January, New York City public schools banned ChatGPT on their devices and networks. They were worried about cheating. They also were concerned that the tool’s answers might not be accurate or safe. Many other school systems in the United States and elsewhere have followed suit.


But some experts suspect that bots like ChatGPT could also be a great help to learners and workers everywhere. Like calculators for math or Google for facts, an AI chatbot makes something that once took time and effort much simpler and faster. With this tool, anyone can generate well-formed sentences and paragraphs — even entire pieces of writing.

How could a tool like this change the way we teach and learn?

The good, the bad and the weird

ChatGPT has wowed its users. “It’s so much more realistic than I thought a robot could be,” says Avani Rao. This high school sophomore lives in California. She hasn’t used the bot to do homework. But for fun, she’s prompted it to say creative or silly things. She asked it to explain addition, for instance, in the voice of an evil villain. Its answer is highly entertaining.

Tools like ChatGPT could help create a more equitable world for people who are trying to work in a second language or who struggle with composing sentences. Students could use ChatGPT like a coach to help improve their writing and grammar. Or it could explain difficult subjects. “It really will tutor you,” says Vogelsinger, who had one student come to him excited that ChatGPT had clearly outlined a concept from science class.

Teachers could use ChatGPT to help create lesson plans or activities — ones personalized to the needs or goals of specific students.

Several podcasts have had ChatGPT as a “guest” on the show. In 2023, two people are going to use an AI-powered chatbot like a lawyer. It will tell them what to say during their appearances in traffic court. The company that developed the bot is paying them to test the new tech. Their vision is a world in which legal help might be free.


Xiaoming Zhai tested ChatGPT to see if it could write an academic paper. Zhai is an expert in science education at the University of Georgia in Athens. He was impressed with how easy it was to summarize knowledge and generate good writing using the tool. “It’s really amazing,” he says.

All of this sounds great. Still, some really big problems exist.

Most worryingly, ChatGPT and tools like it sometimes get things very wrong. In an ad for Bard, the chatbot claimed that the James Webb Space Telescope took the very first picture of an exoplanet. That’s false. In a conversation posted on Twitter, ChatGPT said the fastest marine mammal was the peregrine falcon. A falcon, of course, is a bird and doesn’t live in the ocean.

ChatGPT can be “confidently wrong,” says Casey Fiesler. Its text, she notes, can contain “mistakes and bad information.” She is an expert in the ethics of technology at the University of Colorado Boulder. She has made multiple TikTok videos about the pitfalls of ChatGPT.

Also, for now, all of the bot’s training data came from before a date in 2021. So its knowledge is out of date.

Finally, ChatGPT does not provide sources for its information. If asked for sources, it will make them up. It’s something Fiesler revealed in another video. Zhai discovered the exact same thing. When he asked ChatGPT for citations, it gave him sources that looked correct. In fact, they were bogus.

Zhai sees the tool as an assistant. He double-checked its information and decided how to structure the paper himself. If you use ChatGPT, be honest about it and verify its information, the experts all say.

Under the hood

ChatGPT’s mistakes make more sense if you know how it works. “It doesn’t reason. It doesn’t have ideas. It doesn’t have thoughts,” explains Emily M. Bender. She is a computational linguist who works at the University of Washington in Seattle. ChatGPT may sound a lot like a person, but it’s not one. It is an AI model developed using several types of machine learning.

The primary type is a large language model. This type of model learns to predict what words will come next in a sentence or phrase. It does this by churning through vast amounts of text. It places words and phrases into a 3-D map that represents their relationships to each other. Words that tend to appear together, like peanut butter and jelly, end up closer together in this map.
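To make the idea of next-word prediction concrete, here is a minimal sketch in Python. It is not how ChatGPT works internally: it only counts which word follows which in a tiny made-up text, while a real large language model learns from vast amounts of text using a neural network. The function names and the sample sentences are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Tiny made-up training text (an assumption for this example).
corpus = (
    "peanut butter and jelly . "
    "peanut butter and jelly sandwich . "
    "peanut butter on toast . "
    "bread and jelly ."
)
model = train_bigram_model(corpus)

print(predict_next(model, "peanut"))  # the word seen most often after "peanut"
print(predict_next(model, "zebra"))   # a word the model never saw
```

Because the toy model only repeats patterns it has counted, it can produce fluent-looking word sequences without any notion of whether they are true, which is one way to picture why a much larger model can sound confident and still be wrong.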

Before ChatGPT, OpenAI had made GPT3. This very large language model came out in 2020. It had trained on text containing an estimated 300 billion words. That text came from the internet and encyclopedias. It also included dialogue transcripts, essays, exams and much more, says Sasha Luccioni. She is a researcher at the company HuggingFace in Montreal, Canada. This company builds AI tools.

OpenAI improved upon GPT3 to create GPT3.5. This time, OpenAI added a new type of machine learning. It’s known as “reinforcement learning with human feedback.” That means people checked the AI’s responses. GPT3.5 learned to give more of those types of responses in the future. It also learned not to generate hurtful, biased or inappropriate responses. GPT3.5 essentially became a people-pleaser.

During ChatGPT’s development, OpenAI added even more safety rules to the model. As a result, the chatbot will refuse to talk about certain sensitive issues or information. But this also raises another issue: Whose values are being programmed into the bot, including what it is — or is not — allowed to talk about?

OpenAI is not offering exact details about how it developed and trained ChatGPT. The company has not released its code or training data. This disappoints Luccioni. “I want to know how it works in order to help make it better,” she says.

When asked to comment on this story, OpenAI provided a statement from an unnamed spokesperson. “We made ChatGPT available as a research preview to learn from real-world use, which we believe is a critical part of developing and deploying capable, safe AI systems,” the statement said. “We are constantly incorporating feedback and lessons learned.” Indeed, some early experimenters got the bot to say biased things about race and gender. OpenAI quickly patched the tool. It no longer responds the same way.

ChatGPT is not a finished product. It’s available for free right now because OpenAI needs data from the real world. The people who are using it right now are their guinea pigs. If you use it, notes Bender, “You are working for OpenAI for free.”

Humans vs robots

How good is ChatGPT at what it does? Catherine Gao is part of one team of researchers that is putting the tool to the test.

At the top of a research article published in a journal is an abstract. It summarizes the author’s findings. Gao’s group gathered 50 real abstracts from research papers in medical journals. Then they asked ChatGPT to generate fake abstracts based on the paper titles. The team asked people who review abstracts as part of their job to identify which were which.

The reviewers mistook roughly one in every three (32 percent) of the AI-generated abstracts for human-written ones. “I was surprised by how realistic and convincing the generated abstracts were,” says Gao. She is a doctor and medical researcher at Northwestern University’s Feinberg School of Medicine in Chicago, Ill.

In another study, Will Yeadon and his colleagues tested whether AI tools could pass a college exam. Yeadon is a physics teacher at Durham University in England. He picked an exam from a course that he teaches. The test asks students to write five short essays about physics and its history. Students who take the test have an average score of 71 percent, which he says is equivalent to an A in the United States.

Yeadon used a close cousin of ChatGPT, called davinci-003. It generated 10 sets of exam answers. Afterward, he and four other teachers graded them using their typical grading standards for students. The AI also scored an average of 71 percent. Unlike the human students, however, it had no very low or very high marks. It consistently wrote well, but not excellently. For students who regularly get bad grades in writing, Yeadon says, this AI “will write a better essay than you.”

These graders knew they were looking at AI work. In a follow-up study, Yeadon plans to use work from the AI and students and not tell the graders whose work they are looking at.


Cheat-checking with AI

People may not always be able to tell if ChatGPT wrote something or not. Thankfully, other AI tools can help. These tools use machine learning to scan many examples of AI-generated text. After training this way, they can look at new text and tell you whether it was most likely composed by AI or a human.

Most free AI-detection tools were trained on older language models, so they don’t work as well for ChatGPT. Soon after ChatGPT came out, though, one college student spent his holiday break building a free tool to detect its work. It’s called GPTZero.

The company Originality.ai sells access to another up-to-date tool. Founder Jon Gillham says that in a test of 10,000 samples of text composed by GPT3, the tool tagged 94 percent of them correctly. When ChatGPT came out, his team tested a much smaller set of 20 samples that had been created by GPT3, GPT3.5 and ChatGPT. Here, Gillham says, “it tagged all of them as AI-generated. And it was 99 percent confident, on average.”

In addition, OpenAI says they are working on adding “digital watermarks” to AI-generated text. They haven’t said exactly what they mean by this. But Gillham explains one possibility. The AI ranks many different possible words when it is generating text. Say its developers told it to always choose the word ranked in third place rather than first place at specific places in its output. These words would act “like a fingerprint,” says Gillham.
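The possibility Gillham describes can be sketched in a few lines of Python. This is a toy illustration only, not OpenAI’s method, which the company has not described. The vocabulary, the made-up ranking rule and all function names are assumptions; a real system would rank words with a language model rather than by rotating a fixed list.

```python
# Toy vocabulary; the "model" below only knows these words (an assumption).
VOCAB = ["the", "cat", "sat", "on", "mat", "and", "dog", "ran"]


def rank_candidates(prev_word):
    """Stand-in for a language model: rank every word given the previous one.

    The ranking is just the vocabulary rotated by a fixed rule, so that it is
    deterministic and easy to follow by hand.
    """
    shift = (VOCAB.index(prev_word) + 1) % len(VOCAB)
    return VOCAB[shift:] + VOCAB[:shift]


def generate(start, length, watermark_every=None):
    """Generate `length` words. At every `watermark_every`-th step, pick the
    third-ranked word instead of the top-ranked one."""
    words = [start]
    for step in range(1, length):
        ranked = rank_candidates(words[-1])
        marked_step = watermark_every and step % watermark_every == 0
        words.append(ranked[2] if marked_step else ranked[0])
    return words


def watermark_score(words, watermark_every):
    """Fraction of the marked positions that hold the third-ranked word."""
    hits = total = 0
    for step in range(1, len(words)):
        if step % watermark_every == 0:
            total += 1
            if words[step] == rank_candidates(words[step - 1])[2]:
                hits += 1
    return hits / total if total else 0.0


plain = generate("the", 9)
marked = generate("the", 9, watermark_every=3)

print(" ".join(plain), watermark_score(plain, 3))
print(" ".join(marked), watermark_score(marked, 3))
```

A checker that knows the ranking rule and which positions are marked can replay the text and count how often the third-ranked word shows up there. A high score suggests the “fingerprint” is present, while ordinary text should rarely match by chance.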

The future of writing

Tools like ChatGPT are only going to improve with time. As they get better, people will have to adjust to a world in which computers can write for us. We’ve made these sorts of adjustments before. As high-school student Rao points out, Google was once seen as a threat to education because it made it possible to instantly look up any fact. We adapted by coming up with teaching and testing materials that don’t require students to memorize things.

Now that AI can generate essays, stories and code, teachers may once again have to rethink how they teach and test. That might mean preventing students from using AI. They could do this by making students work without access to technology. Or they might invite AI into the writing process, as Vogelsinger is doing. Concludes Rao, “We might have to shift our point of view about what’s cheating and what isn’t.”

Students will still have to learn to write without AI’s help. Kids still learn to do basic math even though they have calculators. Learning how math works helps us learn to think about math problems. In the same way, learning to write helps us learn to think about and express ideas.

Rao thinks that AI will not replace human-generated stories, articles and other texts. Why? She says: “The reason those things exist is not only because we want to read it but because we want to write it.” People will always want to make their voices heard. ChatGPT is a tool that could enhance and support our voices — as long as we use it with care.

Correction: Gillham’s comment on the 20 samples that his team tested has been corrected to show how confident his team’s AI-detection tool was in identifying text that had been AI-generated (not in how accurately it detected AI-generated text).



Is Using ChatGPT Cheating?

Published on June 29, 2023 by Eoghan Ryan. Revised on September 14, 2023.

Using ChatGPT and other AI tools to cheat is academically dishonest and can have severe consequences.

However, using these tools is not always academically dishonest. It’s important to understand how to use these tools correctly and ethically to complement your research and writing skills. You can learn more about how to use AI tools responsibly on our AI writing resources page.


Table of contents

- How can ChatGPT be used to cheat?

- What are the risks of using ChatGPT to cheat?

- How to use ChatGPT without cheating

- Frequently asked questions

How can ChatGPT be used to cheat?

ChatGPT and other AI tools can be used to cheat in various ways. This can be intentional or unintentional and can vary in severity. Some examples of the ways in which ChatGPT can be used to cheat include:

- AI-assisted plagiarism: Passing off AI-generated text as your own work (e.g., essays, homework assignments, take-home exams)

- Plagiarism: Having the tool rephrase content from another source and passing it off as your own work

- Self-plagiarism: Having the tool rewrite a paper you previously submitted with the intention of resubmitting it

- Data fabrication: Using ChatGPT to generate false data and presenting them as genuine findings to support your research

Using ChatGPT in these ways is academically dishonest and very likely to be prohibited by your university. Even if your guidelines don’t explicitly mention ChatGPT, actions like data fabrication are academically dishonest regardless of what tools are used.


What are the risks of using ChatGPT to cheat?

ChatGPT does not solve all academic writing problems, and using it to cheat can have various negative impacts on you and others. Cheating with ChatGPT:

- Leads to gaps in your knowledge

- Is unfair to other students who did not cheat

- Potentially damages your reputation

- May result in the publication of inaccurate or false information

- May lead to dangerous situations if it allows you to avoid learning the fundamentals in some contexts (e.g., medicine)

How to use ChatGPT without cheating

When used correctly, ChatGPT and other AI tools can be helpful resources that complement your academic writing and research skills. Below are some tips to help you use ChatGPT ethically.

Follow university guidelines

Guidelines on how ChatGPT may be used vary across universities. It’s crucial to follow your institution’s policies regarding AI writing tools and to stay up to date with any changes. Always ask your instructor if you’re unsure what is allowed in your case.

Use the tool as a source of inspiration

If allowed by your institution, use ChatGPT outputs as a source of guidance or inspiration, rather than as a substitute for coursework. For example, you can use ChatGPT to write a research paper outline or to provide feedback on your text.

You can also use ChatGPT to paraphrase or summarize text to express your ideas more clearly and to condense complex information. Alternatively, you can use Scribbr’s free paraphrasing tool or Scribbr’s free text summarizer, which are designed specifically for these purposes.

Practice information literacy skills

Information literacy skills can help you use AI tools more effectively. For example, they can help you to understand what constitutes plagiarism, critically evaluate AI-generated outputs, and make informed judgments more generally.

You should also familiarize yourself with the user guidelines for any AI tools you use and get to know their intended uses and limitations.

Be transparent about how you use the tools

If you use ChatGPT as a primary source or to help with your research or writing process, you may be required to cite it or acknowledge its contribution in some way (e.g., by providing a link to the ChatGPT conversation). Check your institution’s guidelines or ask your professor for guidance.

Frequently asked questions

Using ChatGPT in the following ways is generally considered academically dishonest:

- Passing off AI-generated content as your own work

- Having the tool rephrase plagiarized content and passing it off as your own work

- Using ChatGPT to generate false data and presenting them as genuine findings to support your research

Using ChatGPT to cheat can have serious academic consequences. It’s important that students learn how to use AI tools effectively and ethically.

Using ChatGPT to cheat is a serious offense and may have severe consequences.

However, when used correctly, ChatGPT can be a helpful resource that complements your academic writing and research skills. Some tips to use ChatGPT ethically include:

- Following your institution’s guidelines

- Understanding what constitutes plagiarism

- Being transparent about how you use the tool

No, it’s generally not a good idea to have ChatGPT write your assignments for you. First, it’s normally considered plagiarism or academic dishonesty to represent someone else’s work as your own (even if that “someone” is an AI language model). Even if you cite ChatGPT, you’ll still be penalized unless this is specifically allowed by your university. Institutions may use AI detectors to enforce these rules.

Second, ChatGPT can recombine existing texts, but it cannot really generate new knowledge. And it lacks specialist knowledge of academic topics. Therefore, it is not possible to obtain original research results, and the text produced may contain factual errors.

However, you can usually still use ChatGPT for assignments in other ways, as a source of inspiration and feedback.

Cite this Scribbr article


Ryan, E. (2023, September 14). Is Using ChatGPT Cheating? Scribbr. Retrieved June 18, 2024, from https://www.scribbr.com/ai-tools/chatgpt-cheating/


89 Percent of College Students Admit to Using ChatGPT for Homework, Study Claims

Wait, what?

TAIcher's Pet

Educators are battling a new reality: easily accessible AI that allows students to take immense shortcuts in their education — and as it turns out, many appear to already be cheating with abandon.

Online course provider Study.com asked 1,000 students over the age of 18 about the use of ChatGPT, OpenAI's blockbuster chatbot, in the classroom.

The responses were surprising. A full 89 percent said they'd used it on homework. Some 48 percent confessed they'd already made use of it to complete an at-home test or quiz. Over 50 percent said they used ChatGPT to write an essay, while 22 percent admitted to having asked ChatGPT for a paper outline.

Honestly, those numbers sound so staggeringly high that we wonder about Study.com's methodology. But if there's a throughline here, it's that AI isn't just getting pretty good — it's also already weaving itself into the fabric of society, and the results could be far-reaching.

Muscle AItrophy

At the same time, according to the study, almost three-quarters of students said they wanted ChatGPT to be banned, indicating students are equally worried about cheating becoming the norm.

Educators are also understandably worried about AI having a major impact on their students' education, and are resorting to AI-detecting apps that attempt to suss out whether a student used ChatGPT.

But as we've found out for ourselves, the current crop of tools out there, like GPTZero, are still actively being developed and are far from perfect.

Future Shock

Some are worried AI chatbots could have a disastrous effect on education.

"Just because there is a machine that will help me lift up a dumbbell doesn’t mean my muscles will develop," Western Washington University history professor Johann Neem told The Wall Street Journal. "In the same way just because there is a machine that can write an essay doesn’t mean my mind will develop."

But others argue teachers should leverage powerful technologies like ChatGPT to prepare students for a new reality.

"I hope to inspire and educate you enough that you will want to learn how to leverage these tools, not just to learn to cheat better," Weber State University professor Alex Lawrence told the WSJ, while the University of Pennsylvania's Ethan Mollick said that he expects his literature students to leverage the tech to "write more" and "better."

"This is a force multiplier for writing," Mollick added. "I expect them to use it."

READ MORE: Professors Turn to ChatGPT to Teach Students a Lesson [The Wall Street Journal]


ChatGPT just created a new tool to catch students trying to cheat using ChatGPT

The maker of ChatGPT is trying to curb its reputation as a freewheeling cheating machine with a new tool that can help teachers detect if a student or artificial intelligence wrote that homework.

The new AI Text Classifier launched Tuesday by OpenAI follows a weeks-long discussion at schools and colleges over fears that ChatGPT’s ability to write just about anything on command could fuel academic dishonesty and hinder learning.

OpenAI cautions that its new tool – like others already available – is not foolproof. The method for detecting AI-written text “is imperfect and it will be wrong sometimes,” said Jan Leike, head of OpenAI’s alignment team tasked to make its systems safer.

“Because of that, it shouldn’t be solely relied upon when making decisions,” Leike said.

Teenagers and college students were among the millions of people who began experimenting with ChatGPT after it launched Nov. 30 as a free application on OpenAI’s website. And while many found ways to use it creatively and harmlessly, the ease with which it could answer take-home test questions and assist with other assignments sparked a panic among some educators.

By the time schools opened for the new year, New York City, Los Angeles and other big public school districts began to block its use in classrooms and on school devices.

The Seattle Public Schools district initially blocked ChatGPT on all school devices in December but then opened access to educators who want to use it as a teaching tool, said Tim Robinson, the district spokesman.

“We can’t afford to ignore it,” Robinson said.

The district is also discussing possibly expanding the use of ChatGPT into classrooms to let teachers use it to train students to be better critical thinkers and to let students use the application as a “personal tutor” or to help generate new ideas when working on an assignment, Robinson said.

School districts around the country say they are seeing the conversation around ChatGPT evolve quickly.

“The initial reaction was ‘OMG, how are we going to stem the tide of all the cheating that will happen with ChatGPT,’” said Devin Page, a technology specialist with the Calvert County Public School District in Maryland. Now there is a growing realization that “this is the future” and blocking it is not the solution, he said.

“I think we would be naïve if we were not aware of the dangers this tool poses, but we also would fail to serve our students if we ban them and us from using it for all its potential power,” said Page, who thinks districts like his own will eventually unblock ChatGPT, especially once the company’s detection service is in place.

OpenAI emphasized the limitations of its detection tool in a blog post Tuesday, but said that in addition to deterring plagiarism, it could help to detect automated disinformation campaigns and other misuse of AI to mimic humans.

The longer a passage of text, the better the tool is at detecting if an AI or human wrote something. Type in any text — a college admissions essay, or a literary analysis of Ralph Ellison’s “Invisible Man” — and the tool will label it as either “very unlikely, unlikely, unclear if it is, possibly, or likely” AI-generated.

But much like ChatGPT itself, which was trained on a huge trove of digitized books, newspapers and online writings but often confidently spits out falsehoods or nonsense, it’s not easy to interpret how it came up with a result.

“We don’t fundamentally know what kind of pattern it pays attention to, or how it works internally,” Leike said. “There’s really not much we could say at this point about how the classifier actually works.”