Data Analytics Case Study: Complete Guide in 2024

What Are Data Analytics Case Study Interviews?

When you’re trying to land a data analyst job, the last thing to stand in your way is the data analytics case study interview.

One reason they’re so challenging is that case studies don’t typically have a right or wrong answer.

Instead, case study interviews require you to come up with a hypothesis for an analytics question and then produce data to support or validate your hypothesis. In other words, it’s not just about your technical skills; you’re also being tested on creative problem-solving and your ability to communicate with stakeholders.

This article provides an overview of how to answer data analytics case study interview questions. You can find an in-depth course in the data analytics learning path.

How to Solve Data Analytics Case Questions


With data analyst case questions, you will need to answer two key questions:

- What metrics should I propose?

- How do I write a SQL query to get the metrics I need?

In short, to ace a data analytics case interview, you not only need to brush up on case questions, but you also should be adept at writing all types of SQL queries and have strong data sense.

These questions are especially challenging to answer if you don’t have a framework or know how to answer them. To help you prepare, we created this step-by-step guide to answering data analytics case questions.

We show you how to use a framework to answer case questions, provide example analytics questions, and help you understand the difference between analytics case studies and product metrics case studies.

Data Analytics Cases vs Product Metrics Questions

Product case questions sometimes get lumped in with data analytics cases.

Ultimately, the type of case question you are asked will depend on the role. For example, product analysts will likely face more product-oriented questions.

Product metrics cases tend to focus on a hypothetical situation. You might be asked to:

Investigate Metrics - One of the most common types will ask you to investigate a metric, usually one that’s going up or down. For example, “Why are Facebook friend requests falling by 10 percent?”

Measure Product/Feature Success - A lot of analytics cases revolve around the measurement of product success and feature changes. For example, “We want to add X feature to product Y. What metrics would you track to make sure that’s a good idea?”

With product data cases, the key difference is that you may or may not be required to write the SQL query to find the metric.

Instead, these interviews are more theoretical and are designed to assess your product sense and ability to think about analytics problems from a product perspective. Product metrics questions may also show up in the data analyst interview, but likely only for product data analyst roles.


Data Analytics Case Study Question: Sample Solution

Let’s start with an example data analytics case question:

You’re given a table that represents search results from searches on Facebook. The query column is the search term, the position column represents each position the search result came in, and the rating column represents the human rating from 1 to 5, where 5 is high relevance, and 1 is low relevance.

Each row in the search_events table represents a single search, with the has_clicked column representing if a user clicked on a result or not. We have a hypothesis that the CTR is dependent on the search result rating.

Write a query to return data to support or disprove this hypothesis.

search_results table:

search_events table

Step 1: With Data Analytics Case Studies, Start by Making Assumptions

Hint: Start by making assumptions and thinking out loud. With this question, focus on coming up with a metric to support the hypothesis. If the question is unclear or if you think you need more information, be sure to ask.

Answer. The hypothesis is that CTR is dependent on search result rating. Therefore, we want to focus on the CTR metric, and we can assume:

- If CTR is high when search result ratings are high, and CTR is low when the search result ratings are low, then the hypothesis is correct.

- If CTR is low when the search ratings are high, or there is no proven correlation between the two, then our hypothesis is not proven.

Step 2: Provide a Solution for the Case Question

Hint: Walk the interviewer through your reasoning. Talking about the decisions you make and why you’re making them shows off your problem-solving approach.

Answer. One way we can investigate the hypothesis is to look at the results split into different search rating buckets. For example, if we measure the CTR for results rated at 1, then those rated at 2, and so on, we can identify if an increase in rating is correlated with an increase in CTR.

First, I’d write a query to get the number of results for each query in each bucket. We want to look at the distribution of results that are less than a rating threshold, which will help us see the relationship between search rating and CTR.

This CTE aggregates the number of results that are less than a certain rating threshold. Later, we can use this to see the percentage that are in each bucket. If we re-join to the search_events table, we can calculate the CTR by then grouping by each bucket.
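The query itself is not reproduced in this guide, so the following is only a minimal sketch of what the bucketing approach described above might look like, run through Python's built-in sqlite3 module. The sample rows, the bucket names, and the reading of "less than a threshold" as "rated at or below the threshold" are all assumptions made for illustration. The column names come from the question; the assumption that search_events also carries a query column to join on is not confirmed by the source.

```python
import sqlite3

# Hypothetical sample rows; the original tables are not shown in this guide.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE search_results (query TEXT, position INTEGER, rating INTEGER);
CREATE TABLE search_events (query TEXT, has_clicked INTEGER);
INSERT INTO search_results VALUES
    ('cat', 1, 1), ('cat', 2, 1),
    ('dog', 1, 2), ('dog', 2, 3),
    ('fox', 1, 5), ('fox', 2, 4);
INSERT INTO search_events VALUES
    ('cat', 0), ('cat', 0), ('cat', 1), ('cat', 0),
    ('dog', 1), ('dog', 0),
    ('fox', 1), ('fox', 1), ('fox', 1), ('fox', 0);
""")

BUCKETED_CTR_SQL = """
WITH ratings AS (
    -- Per query: how many results sit at or below each rating threshold.
    SELECT query,
           SUM(CASE WHEN rating <= 1 THEN 1 ELSE 0 END) AS n_le_1,
           SUM(CASE WHEN rating <= 2 THEN 1 ELSE 0 END) AS n_le_2,
           SUM(CASE WHEN rating <= 3 THEN 1 ELSE 0 END) AS n_le_3,
           COUNT(*) AS total_results
    FROM search_results
    GROUP BY query
)
SELECT CASE
           -- total minus count-in-bucket is 0 only if every result is in the bucket
           WHEN r.total_results - r.n_le_1 = 0 THEN 'all_rated_1'
           WHEN r.total_results - r.n_le_2 = 0 THEN 'all_rated_2_or_lower'
           WHEN r.total_results - r.n_le_3 = 0 THEN 'all_rated_3_or_lower'
           ELSE 'has_rating_above_3'
       END AS ratings_bucket,
       1.0 * SUM(e.has_clicked) / COUNT(*) AS ctr
FROM search_events AS e
JOIN ratings AS r ON r.query = e.query
GROUP BY ratings_bucket
ORDER BY ratings_bucket
"""

# Maps each ratings bucket to its click-through rate.
ctr_by_bucket = dict(conn.execute(BUCKETED_CTR_SQL).fetchall())
```

With this made-up data, CTR rises from the lowest-rated bucket to the highest, which is the pattern that would support the hypothesis. On real data the buckets could just as easily show no trend.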

Step 3: Use Analysis to Back Up Your Solution

Hint: Be prepared to justify your solution. Interviewers will follow up with questions about your reasoning, and ask why you make certain assumptions.

Answer. Using CASE WHEN statements, I assigned each query to a ratings bucket by checking whether all of its search results were rated at or below 1, 2, or 3. To do that, I subtracted the number of results within the bucket from the query’s total number of results and checked whether the difference equals 0.

I did that to get away from averages in our bucketing system. Outliers would make it more difficult to measure the effect of bad ratings. For example, if a query had one result rated 1 and another rated 5, that would equate to an average of 3. In my solution, by contrast, a query whose results are all rated at or below 1, 2, or 3 actually has bad ratings.

Product Data Case Question: Sample Solution

In product metrics interviews, you’ll likely be asked about analytics, but the discussion will be more theoretical. You’ll propose a solution to a problem, and supply the metrics you’ll use to investigate or solve it. You may or may not be required to write a SQL query to get those metrics.

We’ll start with an example product metrics case study question:

Let’s say you work for a social media company that has just done a launch in a new city. Looking at weekly metrics, you see a slow decrease in the average number of comments per user from January to March in this city.

The company has been consistently growing new users in the city from January to March.

What are some reasons why the average number of comments per user would be decreasing and what metrics would you look into?

Step 1: Ask Clarifying Questions Specific to the Case

Hint: This question is very vague. It’s all hypothetical, so we don’t know very much about users, what the product is, and how people might be interacting. Be sure you ask questions upfront about the product.

Answer: Before I jump into an answer, I’d like to ask a few questions:

- Who uses this social network? How do they interact with each other?

- Have there been any performance issues that might be causing the problem?

- What are the goals of this particular launch?

- Have there been any changes to the comment features in recent weeks?

For the sake of this example, let’s say we learn that it’s a social network similar to Facebook with a young audience, and the goals of the launch are to grow the user base. Also, there have been no performance issues and the commenting feature hasn’t been changed since launch.

Step 2: Use the Case Question to Make Assumptions

Hint: Look for clues in the question. For example, this case gives you a metric, “average number of comments per user.” Consider if the clue might be helpful in your solution. But be careful, sometimes questions are designed to throw you off track.

Answer: From the question, we can hypothesize a little bit. For example, we know that user count is increasing linearly. That means two things:

- The decreasing comments issue isn’t a result of a declining user base.

- The cause isn’t loss of platform.

We can also model out the data to help us get a better picture of the average number of comments per user metric:

- January: 10000 users, 30000 comments, 3 comments/user

- February: 20000 users, 50000 comments, 2.5 comments/user

- March: 30000 users, 60000 comments, 2 comments/user
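As a quick check on the modeled figures above, the sketch below recomputes comments per user and adds month-over-month growth for users and comments. The growth comparison is an added illustration, not part of the original answer; the user and comment counts are the ones listed above.

```python
# Figures taken from the modeled example above.
monthly = {
    "January": {"users": 10_000, "comments": 30_000},
    "February": {"users": 20_000, "comments": 50_000},
    "March": {"users": 30_000, "comments": 60_000},
}

# Average comments per user for each month.
comments_per_user = {
    month: row["comments"] / row["users"] for month, row in monthly.items()
}

# Month-over-month growth rates for users and comments.
months = list(monthly)
growth = {
    cur: {
        "users": monthly[cur]["users"] / monthly[prev]["users"] - 1,
        "comments": monthly[cur]["comments"] / monthly[prev]["comments"] - 1,
    }
    for prev, cur in zip(months, months[1:])
}
```

In this model, total comments keep growing every month, only more slowly than the user base, which is why the per-user average falls.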

One thing to note: Although this is an interesting metric, I’m not sure if it will help us solve this question. For one, average comments per user doesn’t account for churn. We might assume that during the three-month period users are churning off the platform. Let’s say the churn rate is 25% in January, 20% in February and 15% in March.

Step 3: Make a Hypothesis About the Data

Hint: Don’t worry too much about making a correct hypothesis. Instead, interviewers want to get a sense of your product intuition and see that you’re on the right track. Also, be prepared to measure your hypothesis.

Answer. I would say that average comments per user isn’t a great metric to use, because it doesn’t reveal insights into what’s really causing this issue.

That’s because it doesn’t account for active users, which are the users who are actually commenting. A better metric to investigate would be retained users and monthly active users.

What I suspect is causing the issue is that active users are commenting frequently and are responsible for the increase in comments month-to-month. New users, on the other hand, aren’t as engaged and aren’t commenting as often.

Step 4: Provide Metrics and Data Analysis

Hint: Within your solution, include key metrics that you’d like to investigate that will help you measure success.

Answer: I’d say there are a few ways we could investigate the cause of this problem, but the one I’d be most interested in would be the engagement of monthly active users.

If the growth in comments is coming from active users, that would help us understand how we’re doing at retaining users. Plus, it will also show if new users are less engaged and commenting less frequently.

One way that we could dig into this would be to segment users by their onboarding date, which would help us to visualize engagement and see how engaged some of our longest-retained users are.
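Segmenting by onboarding date can be sketched in a few lines. The rows below are entirely hypothetical (user IDs, onboarding months given as month numbers, and March comment counts are invented for illustration), and the function name comments_by_cohort is an assumption rather than anything from the original case.

```python
from collections import defaultdict

# Hypothetical rows: (user_id, onboarding_month_number, comments_in_march).
users = [
    ("u1", 1, 9), ("u2", 1, 7),
    ("u3", 2, 3), ("u4", 2, 1),
    ("u5", 3, 0), ("u6", 3, 1), ("u7", 3, 0),
]

def comments_by_cohort(rows):
    """Return {onboarding_month: (share_who_commented, avg_comments_per_user)}."""
    grouped = defaultdict(list)
    for _user_id, cohort, n_comments in rows:
        grouped[cohort].append(n_comments)
    return {
        cohort: (
            sum(1 for n in counts if n > 0) / len(counts),  # share who commented
            sum(counts) / len(counts),                      # mean comments per user
        )
        for cohort, counts in sorted(grouped.items())
    }

cohorts = comments_by_cohort(users)
```

If real data showed the same shape as this toy data, with older cohorts commenting far more than the newest one, it would point toward new-user engagement rather than churn among early users.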

If engagement of new users is the issue, that will give us some options in terms of strategies for addressing the problem. For example, we could test new onboarding or commenting features designed to generate engagement.

Step 5: Propose a Solution for the Case Question

Hint: In the majority of cases, your initial assumptions might be incorrect, or the interviewer might throw you a curveball. Be prepared to make new hypotheses or discuss the pitfalls of your analysis.

Answer. If the cause wasn’t due to a lack of engagement among new users, then I’d want to investigate active users. One potential cause would be active users commenting less. In that case, we’d know that our earliest users were churning out, and that engagement among new users was potentially growing.

Again, I think we’d want to focus on user engagement since the onboarding date. That would help us understand if we were seeing higher levels of churn among active users, and we could start to identify some solutions there.

Tip: Use a Framework to Solve Data Analytics Case Questions

Analytics case questions can be challenging, but they’re much more challenging if you don’t use a framework. Without a framework, it’s easier to get lost in your answer, to get stuck, and really lose the confidence of your interviewer. Find helpful frameworks for data analytics questions in our data analytics learning path and our product metrics learning path.

Once you have the framework down, what’s the best way to practice? Mock interviews with our coaches are very effective, as you’ll get feedback and helpful tips as you answer. You can also learn a lot by practicing P2P mock interviews with other Interview Query students. No data analytics background? Check out how to become a data analyst without a degree.

Finally, if you’re looking for sample data analytics case questions and other types of interview questions, see our guide on the top data analyst interview questions.

4 Case Study Questions for Interviewing Data Analysts at a Startup

A good data analyst has an absolute passion for data, a strong understanding of the business or product you are running, and a constant drive to find meaningful insights that help the team make better decisions.

Jan 22, 2019 . 4 min read


At Holistics, we understand the value of data in making business decisions as a Business Intelligence (BI) platform, and hiring the right data team is one of the key elements to get you there.

To get hired at a tech product startup, doing reporting alone won't distinguish a potential data analyst. A good data analyst has an absolute passion for data, a strong understanding of the business or product you are running, and a constant drive to find meaningful insights that help the team make better decisions.

That's the reason why we usually look for these characteristics below when interviewing data analyst candidates:

- Ability to adapt to a new domain quickly

- Ability to work independently to investigate and mine for interesting insights

- Product and business growth mindset

- Technical skills

In this article, I'll be sharing with you some of our case studies that reveal the potential of data analyst candidates we've hired in the last few months.

For a list of questions to ask, you can refer to this link: How to interview a data analyst candidate

1. Analyze a Dataset

- Give us top 5–10 interesting insights you could find from this dataset

Give them a dataset, and let them use your tool or any tools they are familiar with to analyze it.

Expectations

- Communication: The first thing they should do is ask the interviewers to clarify the dataset and the problems to be solved, instead of just jumping into answering the question right away.

- Strong industry knowledge, or an indication of how quickly they can adapt to a new domain.

- The insights here should not only be about charts, but also the explanation behind what we should investigate more of, or make decisions on.

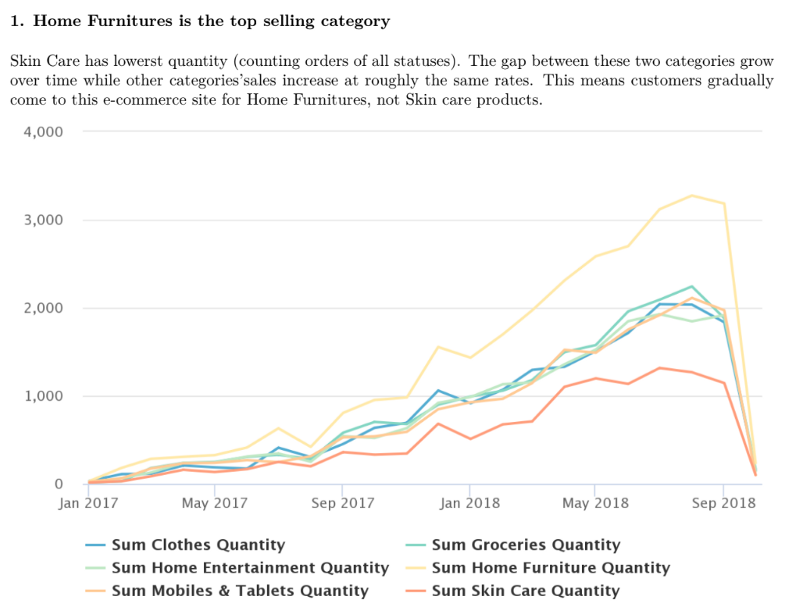

Let's take a look at some insights from our data analyst's work exploring an e-commerce dataset.

2. Product Mindset

In a product startup, the data analyst must also have the ability to understand the product as well as measure the success of the product.

- How would you improve our feature X (Search/Login/Dashboard…) using data?

- Show effort for independent research, and declaring some assumptions on what makes a feature good/bad.

- Ask/create a user flow for the feature, listing down all the possible steps that users should take to achieve that result. Let them assume they can get all the data they want, and ask what they would measure and how they will make decisions from there.

- Provide data and current insights to understand how often users actually use the feature and assess how they evaluate if it's still worth working on.

3. Business Sense

Data analysts need to be responsible not only for Product, but also for Sales, Marketing, Financial analyses, and more. Hence, they must be able to quickly adapt to any business model or distribution strategy.

- How would you increase our conversion rate?

- How would you know if a customer will upgrade or churn?

- The candidate should ask the interviewer to clarify the information, e.g. how does the company define conversion rate?

- Identify the data sources and the stages of the funnel: which data sources do we have, which others do we need, and how do we collect and consolidate the data?

- Ability to turn the data into meaningful insights that can inform business decisions. The insights will differ depending on the business model (B2B, B2C, etc.), e.g. being able to list all the factors that could affect user subscriptions (B2B).

- Ability to compare and benchmark performance against industry figures, e.g. knowing the average conversion rate of e-commerce companies.
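To make the funnel expectation concrete, here is a minimal sketch of how a candidate might compute step-by-step and overall conversion once the stages are agreed on. The stage names and counts are hypothetical, and how conversion rate is actually defined is exactly what the candidate is expected to clarify first.

```python
# Hypothetical funnel stages and counts; substitute the company's own definitions.
funnel = [
    ("visited_site", 10_000),
    ("signed_up", 1_200),
    ("started_trial", 600),
    ("paid", 150),
]

def funnel_report(stages):
    """Return (stage, step_rate, overall_rate) for each stage after the first."""
    top = stages[0][1]
    report = []
    for (_, prev_n), (name, n) in zip(stages, stages[1:]):
        # step_rate: conversion from the previous stage; overall_rate: from the top.
        report.append((name, n / prev_n, n / top))
    return report

report = funnel_report(funnel)

# The stage with the lowest step conversion is a natural place to investigate first.
weakest_step = min(report, key=lambda row: row[1])[0]
```

Looking at step rates next to the overall rate helps separate "few visitors sign up" from "few trials convert to paid", which usually call for different fixes.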

4. Metric-driven

- Top 3 metrics to define the success of this product, what, why and how would you choose?

- To answer this question, the candidates need to have basic domain knowledge of the industry or product as well as the understanding of the product's core value propositions.

- A good candidate would also ask for information on company strategy and vision.

- Depending on each product and industry, the key metrics would be different, e.g. Facebook - Daily active users (DAU), Number of users adding 7 friends in the first 10 days; Holistics - Number of reports created and viewed, Number of users invited during the trial period; Uber - Weekly Rides, First ride/passenger …

In my experience, many data analysts are only familiar with producing reports from requirements, while talented analysts are eager to understand the data deeply and produce meaningful insights that help their team make better decisions. They are definitely the players you want on your A+ team.

Finding a great data analyst is not easy. Technical skill is essential, but mindset is even more important. Therefore, list everything you need from a data analyst and trust your gut; hiring the right person will be a huge advantage for your startup.


10 Real World Data Science Case Studies Projects with Example

Top 10 Data Science Case Studies Projects with Examples and Solutions in Python to inspire your data science learning in 2023.

Data science has been a trending buzzword in recent times. With wide applications in sectors like healthcare, education, retail, transportation, media, and banking, data science is at the core of pretty much every industry out there. The possibilities are endless, from fraud analysis in the finance sector to personalized recommendations in eCommerce. We have developed ten exciting data science case studies to explain how data science is leveraged across various industries to make smarter decisions and develop innovative personalized products tailored to specific customers.


Table of Contents

- Data Science Case Studies in Retail

- Data Science Case Study Examples in Entertainment Industry

- Data Analytics Case Study Examples in Travel Industry

- Case Studies for Data Analytics in Social Media

- Real World Data Science Projects in Healthcare

- Data Analytics Case Studies in Oil and Gas

- What Is a Case Study in Data Science

- How Do You Prepare a Data Science Case Study

- 10 Most Interesting Data Science Case Studies with Examples

So, without much ado, let's get started with data science business case studies!

With humble beginnings as a simple discount retailer, Walmart today operates approximately 10,500 stores and clubs in 24 countries, along with eCommerce websites, and employs around 2.2 million people around the globe. For the fiscal year ended January 31, 2021, Walmart's total revenue was $559 billion, showing a growth of $35 billion with the expansion of the eCommerce sector. Walmart is a data-driven company that works on the principle of 'Everyday low cost' for its consumers. To achieve this goal, they heavily depend on the advances of their data science and analytics department for research and development, also known as Walmart Labs. Walmart is home to the world's largest private cloud, which can manage 2.5 petabytes of data every hour! To analyze this humongous amount of data, Walmart has created 'Data Café,' a state-of-the-art analytics hub located within its Bentonville, Arkansas headquarters. The Walmart Labs team heavily invests in building and managing technologies like cloud, data, DevOps, infrastructure, and security.

Walmart is experiencing massive digital growth as the world's largest retailer. Walmart has been leveraging Big data and advances in data science to build solutions to enhance, optimize and customize the shopping experience and serve their customers in a better way. At Walmart Labs, data scientists are focused on creating data-driven solutions that power the efficiency and effectiveness of complex supply chain management processes. Here are some of the applications of data science at Walmart:

i) Personalized Customer Shopping Experience

Walmart analyses customer preferences and shopping patterns to optimize the stocking and displaying of merchandise in their stores. Analysis of Big data also helps them understand new item sales and brand performance and decide which products to discontinue.

ii) Order Sourcing and On-Time Delivery Promise

Millions of customers view items on Walmart.com, and Walmart provides each customer a real-time estimated delivery date for the items purchased. Walmart runs a backend algorithm that estimates this based on the distance between the customer and the fulfillment center, inventory levels, and shipping methods available. The supply chain management system determines the optimum fulfillment center based on distance and inventory levels for every order. It also has to decide on the shipping method to minimize transportation costs while meeting the promised delivery date.


iii) Packing Optimization

Packing optimization, also known as box recommendation, is a daily occurrence in the shipping of items in retail and eCommerce business. Whenever items of an order, or of multiple orders placed by the same customer, are picked from the shelf and are ready for packing, Walmart's recommender system determines the best-sized box that holds all the ordered items with the least in-box space wasted, within a fixed amount of time. This is the Bin Packing Problem, a classic NP-Hard problem familiar to data scientists.
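To give a feel for the box recommendation idea, here is a deliberately simplified sketch that picks the box with the least unused volume. It is not Walmart's system: the Box type, the recommend_box function, and the sample volumes are hypothetical, and it compares total volume only, ignoring item shapes and orientation, which is where the real difficulty of bin packing lies.

```python
from typing import NamedTuple, Optional, Sequence

class Box(NamedTuple):
    name: str
    volume: float  # same unit as the item volumes, e.g. litres

def recommend_box(item_volumes: Sequence[float], boxes: Sequence[Box]) -> Optional[Box]:
    """Pick the box with the least unused volume that still holds every item.

    Simplification: compares total volume only and ignores item shapes.
    Returns None when no single box is large enough.
    """
    needed = sum(item_volumes)
    candidates = [b for b in boxes if b.volume >= needed]
    return min(candidates, key=lambda b: b.volume - needed, default=None)

boxes = [Box("small", 5.0), Box("medium", 12.0), Box("large", 30.0)]
choice = recommend_box([3.0, 4.5, 2.0], boxes)  # total 9.5 fits the medium box
too_big = recommend_box([20.0, 15.0], boxes)    # total 35.0 fits no single box
```

A production system would also need to check dimensions and split oversized orders across several boxes, which is what makes the general problem NP-Hard.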

Here is a link to a sales prediction data science case study to help you understand the applications of Data Science in the real world. Walmart Sales Forecasting Project uses historical sales data for 45 Walmart stores located in different regions. Each store contains many departments, and you must build a model to project the sales for each department in each store. This data science case study aims to create a predictive model of those department-level sales. You can also try your hand at the Inventory Demand Forecasting Data Science Project to develop a machine learning model to forecast inventory demand accurately based on historical sales data.


Amazon is an American multinational technology company based in Seattle, USA. It started as an online bookseller, but today it focuses on eCommerce, cloud computing, digital streaming, and artificial intelligence. It hosts an estimated 1,000,000,000 gigabytes of data across more than 1,400,000 servers. Through its constant innovation in data science and big data, Amazon is always ahead in understanding its customers. Here are a few data analytics case study examples at Amazon:

i) Recommendation Systems

Data science models help Amazon understand customers' needs and recommend products before the customer even searches for them; these models use collaborative filtering. Amazon uses data from 152 million customer purchases to help users decide which products to buy. The company generates 35% of its annual sales through recommendation-based systems (RBS).
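As a rough illustration of collaborative filtering in general (not Amazon's actual models), the sketch below scores items a user has not bought by their cosine similarity to items the user already owns, based purely on who bought what. The purchase data and the function names item_similarity and recommend are hypothetical.

```python
from math import sqrt

# Hypothetical purchase data: user -> set of purchased item ids.
purchases = {
    "ann": {"book", "lamp", "pen"},
    "bob": {"book", "pen"},
    "cat": {"lamp", "rug"},
    "dan": {"book", "pen", "rug"},
    "eve": {"pen", "rug"},
}

def item_similarity(a, b, data):
    """Cosine similarity between two items over binary purchase vectors."""
    buyers_a = {u for u, items in data.items() if a in items}
    buyers_b = {u for u, items in data.items() if b in items}
    if not buyers_a or not buyers_b:
        return 0.0
    return len(buyers_a & buyers_b) / sqrt(len(buyers_a) * len(buyers_b))

def recommend(user, data, top_n=2):
    """Rank items the user has not bought by summed similarity to owned items."""
    owned = data[user]
    catalog = set().union(*data.values())
    scores = {
        item: sum(item_similarity(item, o, data) for o in owned)
        for item in catalog - owned
    }
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_n]

recs = recommend("bob", purchases)
```

Here bob owns a book and a pen, and the rug ranks above the lamp because more pen buyers also bought the rug. Real systems work on far larger, sparser data and usually add weighting, implicit feedback, and offline evaluation.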

Here is a Recommender System Project to help you build a recommendation system using collaborative filtering.

ii) Retail Price Optimization

Amazon product prices are optimized based on a predictive model that determines the best price so that users do not refuse to buy it based on price. The model determines the optimal price by considering the customer's likelihood of purchasing the product and how the price will affect the customer's future buying patterns. The price for a product is determined according to your activity on the website, competitors' pricing, product availability, item preferences, order history, expected profit margin, and other factors.

Check Out this Retail Price Optimization Project to build a Dynamic Pricing Model.

iii) Fraud Detection

Being a significant eCommerce business, Amazon remains at high risk of retail fraud. As a preemptive measure, the company collects historical and real-time data for every order. It uses Machine learning algorithms to find transactions with a higher probability of being fraudulent. This proactive measure has helped the company restrict clients with an excessive number of returns of products.

You can look at this Credit Card Fraud Detection Project to implement a fraud detection model to classify fraudulent credit card transactions.


Let us explore data analytics case study examples in the entertainment industry.


Netflix started as a DVD rental service in 1997 and has since expanded into the streaming business. Headquartered in Los Gatos, California, Netflix is the largest content streaming company in the world. Currently, Netflix has over 208 million paid subscribers worldwide, and with streaming supported on thousands of smart devices, around 3 billion hours are watched every month. The secret to this massive growth and popularity of Netflix is its advanced use of data analytics and recommendation systems to provide personalized and relevant content recommendations to its users. The data is collected from over 100 billion events every day. Here are a few examples of data analysis case studies applied at Netflix:

i) Personalized Recommendation System

Netflix uses over 1300 recommendation clusters based on consumer viewing preferences to provide a personalized experience. Some of the data that Netflix collects from its users include Viewing time, platform searches for keywords, Metadata related to content abandonment, such as content pause time, rewind, rewatched. Using this data, Netflix can predict what a viewer is likely to watch and give a personalized watchlist to a user. Some of the algorithms used by the Netflix recommendation system are Personalized video Ranking, Trending now ranker, and the Continue watching now ranker.

ii) Content Development using Data Analytics

Netflix uses data science to analyze the behavior and patterns of its users to recognize themes and categories that the masses prefer to watch. This data is used to produce shows like The Umbrella Academy, Orange Is the New Black, and The Queen's Gambit. These shows seem like a huge risk but are significantly based on data analytics using parameters, which assured Netflix that they would succeed with its audience. Data analytics is helping Netflix come up with content that their viewers want to watch even before they know they want to watch it.

iii) Marketing Analytics for Campaigns

Netflix uses data analytics to find the right time to launch shows and ad campaigns to have maximum impact on the target audience. Marketing analytics helps come up with different trailers and thumbnails for different groups of viewers. For example, the House of Cards Season 5 trailer with a giant American flag was launched during the American presidential elections, as it would resonate well with the audience.

Here is a Customer Segmentation Project using association rule mining to understand the primary grouping of customers based on various parameters.


In a world where purchasing music is a thing of the past and streaming music is the current trend, Spotify has emerged as one of the most popular streaming platforms. With 320 million monthly users, around 4 billion playlists, and approximately 2 million podcasts, Spotify leads the pack among well-known streaming platforms like Apple Music, Wynk, Songza, Amazon Music, etc. The success of Spotify has mainly depended on data analytics. By analyzing massive volumes of listener data, Spotify provides real-time and personalized services to its listeners. Most of Spotify's revenue comes from paid premium subscriptions. Here are some examples of data analytics case studies used by Spotify to provide enhanced services to its listeners:

i) Personalization of Content using Recommendation Systems

Spotify uses BaRT (Bandits for Recommendations as Treatments) to generate music recommendations for its listeners in real time. BaRT ignores any song a user listens to for less than 30 seconds. The model is retrained every day to provide updated recommendations. A new patent granted to Spotify for an AI application is used to identify a user's musical tastes based on audio signals, gender, age, and accent to make better music recommendations.

Spotify creates daily playlists for its listeners based on their taste profiles. These 'Daily Mixes' contain songs the user has added to their playlists or songs by artists that the user has included in their playlists. They also include new artists and songs that the user might be unfamiliar with but that might improve the playlist. Similar to these are the weekly 'Release Radar' playlists, which contain newly released songs from artists that the listener follows or has liked before.

ii) Targeted Marketing through Customer Segmentation

Beyond using user data to enhance personalized song recommendations, Spotify uses this massive dataset for targeted ad campaigns and personalized service recommendations for its users. Spotify uses ML models to analyze listeners' behavior and group them based on music preferences, age, gender, ethnicity, etc. These insights help them create ad campaigns for a specific target audience. One of their well-known ad campaigns was the meme-inspired ads for potential target customers, which was a huge success globally.

iii) CNNs for Classification of Songs and Audio Tracks

Spotify builds audio models to evaluate the songs and tracks, which helps develop better playlists and recommendations for its users. These allow Spotify to filter new tracks based on their lyrics and rhythms and recommend them to users who like similar tracks (collaborative filtering). Spotify also uses NLP (natural language processing) to scan articles and blogs to analyze the words used to describe songs and artists. These analytical insights can help group and identify similar artists and songs and leverage them to build playlists.

Here is a Music Recommender System Project for you to start learning. We have listed another music recommendations dataset for you to use for your projects: Dataset1 . You can use this dataset of Spotify metadata to classify songs based on artist, mood, and liveliness. Plot histograms and heatmaps to get a better understanding of the dataset. Use classification algorithms like logistic regression and SVM, together with principal component analysis for dimensionality reduction, to generate valuable insights from the dataset.

Below you will find case studies for data analytics in the travel and tourism industry.

Airbnb was born in 2007 in San Francisco and has since grown to 4 million hosts and 5.6 million listings worldwide, welcoming more than 1 billion guest arrivals in almost every country across the globe. Airbnb is active in every country on the planet except Iran, Sudan, Syria, and North Korea, which is around 97.95% of the world. Treating data as the voice of its customers, Airbnb uses the large volume of customer reviews and host inputs to understand trends across communities, rate user experiences, and make informed decisions to build a better business model. The data scientists at Airbnb are developing new solutions to boost the business and find the best match between guests and hosts. Airbnb's data servers handle approximately 10 million requests a day and process around one million search queries. These analytics let Airbnb offer personalized services by matching guests and hosts for a better customer experience.

i) Recommendation Systems and Search Ranking Algorithms

Airbnb helps people find 'local experiences' in a place with the help of search algorithms that make searches and listings precise. Airbnb uses a 'listing quality score' to rank homes based on proximity to the searched location and previous guest reviews. Airbnb uses deep neural networks to build models that take the guest's earlier stays and area information into account to find a good match. The search algorithms are optimized based on guest and host preferences, rankings, pricing, and availability to understand users’ needs and provide the best match possible.

ii) Natural Language Processing for Review Analysis

Airbnb characterizes data as the voice of its customers. Customer and host reviews give direct insight into the experience, and star ratings alone are not enough to understand it quantitatively. Hence Airbnb uses natural language processing to understand reviews and the sentiments behind them. The NLP models are developed using convolutional neural networks.

Practice this Sentiment Analysis Project for analyzing product reviews to understand the basic concepts of natural language processing.

iii) Smart Pricing using Predictive Analytics

The Airbnb host community uses the service as a source of supplementary income. The vacation homes and guest houses rented to customers raise local community earnings, as Airbnb guests stay 2.4 times longer and spend approximately 2.3 times the money compared to a hotel guest. These earnings have a significant positive impact on the local neighborhood community. Airbnb uses predictive analytics to predict the prices of listings and help hosts set a competitive and optimal price. The overall profitability of an Airbnb host depends on factors like the time invested by the host and responsiveness to changing demand across seasons. The factors that affect real-time smart pricing are the location of the listing, proximity to transport options, season, and amenities available in the neighborhood of the listing.
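To make the idea of price prediction concrete, here is a minimal sketch that fits a straight line relating one of the factors listed above (proximity to transport) to nightly price. It is a toy ordinary least squares fit on invented numbers, not Airbnb's smart pricing model, which would combine many more features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical listings: distance to the nearest transit stop (km) vs nightly price
distance_km = [0.5, 1.0, 2.0, 3.0, 4.0]
price = [140.0, 130.0, 110.0, 90.0, 70.0]

slope, intercept = fit_line(distance_km, price)
suggested = intercept + slope * 1.5  # suggested price for a listing 1.5 km from transit
print(round(slope, 2), round(intercept, 2), round(suggested, 2))
```

The negative slope in this made-up data says that each extra kilometer from transit lowers the suggested price; real data would be noisier and would need a held-out set to check the fit.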

Here is a Price Prediction Project to help you understand the concept of predictive analysis, which is common in case studies for data analytics.

Uber is the biggest global taxi service provider. As of December 2018, Uber had 91 million monthly active consumers and 3.8 million drivers, and completed 14 million trips each day. Uber uses data analytics and big data-driven technologies to optimize its business processes and provide enhanced customer service. The data science team at Uber constantly explores new technologies to provide better service. Machine learning and data analytics help Uber make data-driven decisions that enable benefits like ride-sharing, dynamic price surges, better customer support, and demand forecasting. Here are some of the real-world data science projects used by Uber:

i) Dynamic Pricing for Price Surges and Demand Forecasting

Uber prices change at peak hours based on demand. Uber uses surge pricing to encourage more cab drivers to sign up with the company to meet the demand from passengers. When prices increase, the driver and the passenger are both informed about the surge in price. Uber uses a patented predictive model for price surging called 'Geosurge'. It is based on the demand for rides and the location.
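The basic intuition behind surge pricing, that prices rise when requests outnumber available drivers, can be sketched in a few lines. This is a deliberately simplified, hypothetical rule (a capped demand-to-supply ratio); it is not the Geosurge model, whose details are not described here.

```python
def surge_multiplier(ride_requests, available_drivers, cap=3.0):
    """Hypothetical surge rule: demand/supply ratio, clamped to the range [1.0, cap]."""
    if available_drivers == 0:
        return cap  # no supply at all: apply the maximum multiplier
    ratio = ride_requests / available_drivers
    return max(1.0, min(cap, ratio))


base_fare = 10.0
for requests, drivers in [(50, 100), (150, 100), (900, 100)]:
    multiplier = surge_multiplier(requests, drivers)
    print(requests, drivers, multiplier, base_fare * multiplier)
```

Clamping at 1.0 means fares never drop below the base price when supply exceeds demand, and the cap keeps extreme spikes bounded.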

ii) One-Click Chat

Uber has developed a machine learning and natural language processing solution called one-click chat, or OCC, for coordination between drivers and users. This feature anticipates responses to commonly asked questions, making it easy for drivers to respond to customer messages. Drivers can reply with the click of just one button. One-click chat is built on Uber's machine learning platform Michelangelo to perform NLP on rider chat messages and generate appropriate responses to them.

iii) Customer Retention

Failure to meet customer demand for cabs could lead to users opting for other services. Uber uses machine learning models to bridge this demand-supply gap. By using prediction models to predict the demand in any location, Uber retains its customers. Uber also uses a tier-based reward system, which segments customers into different levels based on usage. The higher the level a user achieves, the better the perks. Uber also provides personalized destination suggestions based on the user's history and frequently traveled destinations.

You can take a look at this Python Chatbot Project and build a simple chatbot application to better understand the techniques used for natural language processing. You can also practice building a demand forecasting model with this project using time series analysis. You can look at this project, which uses time series forecasting and clustering on a dataset containing geospatial data to forecast customer demand for Ola rides.
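Before reaching for a full time series model, it helps to have a baseline forecast to compare against. The sketch below uses a simple moving average, which is one of the most basic forecasting techniques and not necessarily what the linked projects use. The hourly ride counts are invented.

```python
def moving_average_forecast(history, window=3):
    """Forecast the next value as the mean of the last `window` observations."""
    if len(history) < window:
        raise ValueError("not enough history for the chosen window")
    return sum(history[-window:]) / window


# Hypothetical ride requests per hour for one pickup zone
hourly_rides = [120, 135, 150, 160, 170]
forecast = moving_average_forecast(hourly_rides)
print(forecast)
```

A more serious model should beat this baseline on held-out data before it is trusted.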

7) LinkedIn

LinkedIn is the largest professional social networking site with nearly 800 million members in more than 200 countries worldwide. Almost 40% of the users access LinkedIn daily, clocking around 1 billion interactions per month. The data science team at LinkedIn works with this massive pool of data to generate insights to build strategies, apply algorithms and statistical inferences to optimize engineering solutions, and help the company achieve its goals. Here are some of the real world data science projects at LinkedIn:

i) LinkedIn Recruiter Implements Search Algorithms and Recommendation Systems

LinkedIn Recruiter helps recruiters build and manage a talent pool to optimize the chances of hiring candidates successfully. This sophisticated product works on search and recommendation engines. LinkedIn Recruiter handles complex queries and filters on a large, constantly growing dataset, and the results delivered have to be relevant and specific. The initial search model was based on linear regression but was eventually upgraded to gradient boosted decision trees to capture non-linear relationships in the dataset. In addition to these models, LinkedIn Recruiter also uses a generalized linear mixed model to improve the results of prediction problems and give personalized results.

ii) Recommendation Systems Personalized for News Feed

The LinkedIn news feed is the heart and soul of the professional community. A member's newsfeed is a place to discover conversations among connections, career news, posts, suggestions, photos, and videos. Every time a member visits LinkedIn, machine learning algorithms identify the best exchanges to be displayed on the feed by sorting through posts and ranking the most relevant results on top. The algorithms help LinkedIn understand member preferences and help provide personalized news feeds. The algorithms used include logistic regression, gradient boosted decision trees and neural networks for recommendation systems.

iii) CNNs to Detect Inappropriate Content

Providing a safe community where people can trust one another and express themselves professionally has been a critical goal at LinkedIn. LinkedIn has invested heavily in building solutions to detect fake accounts and abusive behavior on its platform. Any form of spam, harassment, or inappropriate content is immediately flagged and taken down. This content can range from profanity to advertisements for illegal services. LinkedIn uses a machine learning model based on convolutional neural networks. This classifier is trained on a dataset containing accounts labeled as either "inappropriate" or "appropriate." The inappropriate list consists of accounts with content containing "blocklisted" phrases or words, plus a small portion of manually reviewed accounts reported by the user community.
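The labeling step described above, where accounts containing blocklisted phrases are marked "inappropriate," can be sketched as a small rule-based function that produces training labels. The phrases and profile texts below are hypothetical, and the sketch covers only the labeling, not the CNN classifier that is trained on those labels.

```python
# Hypothetical blocklist; a real one would be far larger and carefully curated.
BLOCKLIST = {"free money", "click here to win"}


def label_account(profile_text):
    """Return a training label based on whether any blocklisted phrase appears."""
    text = profile_text.lower()
    if any(phrase in text for phrase in BLOCKLIST):
        return "inappropriate"
    return "appropriate"


profiles = [
    "Senior data analyst with 8 years of experience",
    "FREE MONEY for everyone, message me now",
]
labels = [label_account(p) for p in profiles]
print(labels)
```

Rule-based labels like these are noisy, which is one reason to add manually reviewed examples, as the text notes.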

Here is a Text Classification Project to help you understand NLP basics for text classification. You can find a news recommendation system dataset to help you build a personalized news recommender system. You can also use this dataset to build a classifier using logistic regression, Naive Bayes, or Neural networks to classify toxic comments.

Pfizer is a multinational pharmaceutical company headquartered in New York, USA. It is one of the largest pharmaceutical companies globally, known for developing a wide range of medicines and vaccines in disciplines like immunology, oncology, cardiology, and neurology. Pfizer became a household name in 2020 when it was the first to have a COVID-19 vaccine authorized by the FDA. In early November 2021, the CDC approved the Pfizer vaccine for kids aged 5 to 11. Pfizer has been using machine learning and artificial intelligence to develop drugs and streamline trials, which played a massive role in developing and deploying the COVID-19 vaccine. Here are a few data analytics case studies by Pfizer:

i) Identifying Patients for Clinical Trials

Artificial intelligence and machine learning are used to streamline and optimize clinical trials to increase their efficiency. Natural language processing and exploratory data analysis of patient records can help identify suitable patients for clinical trials, including patients with distinct symptoms. They can also help examine interactions of potential trial members' specific biomarkers and predict drug interactions and side effects, which helps avoid complications. Pfizer's AI implementation helped rapidly identify signals within the noise of millions of data points across its 44,000-candidate COVID-19 clinical trial.

ii) Supply Chain and Manufacturing

Data science and machine learning techniques help pharmaceutical companies better forecast demand for vaccines and drugs and distribute them efficiently. Machine learning models can help identify efficient supply systems by automating and optimizing the production steps. This will help supply drugs customized to small pools of patients in specific gene pools. Pfizer uses machine learning to predict the maintenance cost of the equipment it uses. Predictive maintenance using AI is the next big step for pharmaceutical companies to reduce costs.

iii) Drug Development

Computer simulations of proteins, tests of their interactions, and yield analysis help researchers develop and test drugs more efficiently. In 2016, Watson Health and Pfizer announced a collaboration to use IBM Watson for Drug Discovery to help accelerate Pfizer's research in immuno-oncology, an approach to cancer treatment that uses the body's immune system to help fight cancer. Deep learning models have recently been used for bioactivity and synthesis prediction for drugs and vaccines, in addition to molecular design. Deep learning has been a revolutionary technique for drug discovery, as it factors in everything from new applications of medications to possible toxic reactions, which can save millions in drug trials.

You can create a machine learning model to predict molecular activity to help design medicine using this dataset. You may build a CNN or a deep neural network for this data analyst case study project.

9) Shell Data Analyst Case Study Project

Shell is a global group of energy and petrochemical companies with over 80,000 employees in around 70 countries. Shell uses advanced technologies and innovations to help build a sustainable energy future. Shell is going through a significant transition, aiming to become a clean energy company by 2050 as the world needs more and cleaner energy solutions. This requires substantial changes in the way energy is used. Digital technologies, including AI and machine learning, play an essential role in this transformation. Their applications include efficient exploration and energy production, more reliable manufacturing, more nimble trading, and a personalized customer experience. Using AI in various phases of the organization will help Shell achieve this goal and stay competitive in the market. Here are a few data analytics case studies in the petrochemical industry:

i) Precision Drilling

Shell is involved in the entire oil and gas supply chain, ranging from mining hydrocarbons to refining the fuel to retailing it to customers. Recently, Shell has adopted reinforcement learning to control the drilling equipment used in mining. Reinforcement learning works on a reward-based system based on the outcome of the AI model. The algorithm is designed to guide the drills as they move through the subsurface, based on historical data from drilling records, which include information such as the size of drill bits, temperatures, pressures, and knowledge of the seismic activity. This model helps the human operator understand the environment better, leading to better and faster results with minimal damage to the machinery used.

ii) Efficient Charging Terminals

Due to climate change, governments have encouraged people to switch to electric vehicles to reduce carbon dioxide emissions. However, the lack of public charging terminals has deterred people from switching to electric cars. Shell uses AI to monitor and predict the demand for terminals to provide efficient supply. Multiple vehicles charging from a single terminal may create a considerable grid load, and demand predictions can help make this process more efficient.

iii) Monitoring Service and Charging Stations

Another Shell initiative, trialed in Thailand and Singapore, is the use of computer vision cameras that watch for potentially hazardous activities, such as lighting cigarettes in the vicinity of the pumps while refueling. The model is built to process the content of the captured images and then label and classify it. The algorithm can then alert the staff and hence reduce the risk of fires. The model can be further trained to detect rash driving or thefts in the future.

Here is a project to help you understand multiclass image classification. You can use the Hourly Energy Consumption Dataset to build an energy consumption prediction model. You can use time series with XGBoost to develop your model.

10) Zomato Case Study on Data Analytics

Zomato was founded in 2010 and is currently one of the most well-known food tech companies. Zomato offers services like restaurant discovery, home delivery, online table reservation, online payments for dining, etc. Zomato partners with restaurants to provide tools to acquire more customers while also providing delivery services and easy procurement of ingredients and kitchen supplies. Currently, Zomato has over 2 lakh restaurant partners and around 1 lakh delivery partners, and it has closed over ten crore delivery orders to date. Zomato uses ML and AI to boost its business growth, drawing on the massive amount of data collected over the years from food orders and user consumption patterns. Here are a few examples of data analyst case study projects developed by the data scientists at Zomato:

i) Personalized Recommendation System for Homepage

Zomato uses data analytics to create personalized homepages for its users. Zomato uses data science to provide order personalization, like giving recommendations to the customers for specific cuisines, locations, prices, brands, etc. Restaurant recommendations are made based on a customer's past purchases, browsing history, and what other similar customers in the vicinity are ordering. This personalized recommendation system has led to a 15% improvement in order conversions and click-through rates for Zomato.
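The idea of recommending "what other similar customers are ordering" can be illustrated with a tiny overlap-based recommender. This is a toy sketch, not Zomato's system: the customers, restaurants, and scoring rule (weighting each suggestion by how many restaurants two customers share) are all assumptions.

```python
from collections import Counter

# Hypothetical order history: customer -> set of restaurants ordered from
orders = {
    "alice": {"pizza_place", "sushi_bar"},
    "bob": {"pizza_place", "taco_stand"},
    "carol": {"sushi_bar", "taco_stand", "noodle_house"},
}


def recommend(customer, top_n=2):
    """Suggest restaurants that customers with overlapping orders have tried."""
    seen = orders[customer]
    scores = Counter()
    for other, their_orders in orders.items():
        if other == customer:
            continue
        overlap = len(seen & their_orders)
        if overlap == 0:
            continue  # no shared taste, so ignore this customer
        for restaurant in their_orders - seen:
            scores[restaurant] += overlap
    return [restaurant for restaurant, _ in scores.most_common(top_n)]


print(recommend("alice"))
```

A production system would also fold in location, price, and browsing history, as the paragraph above describes.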

You can use the Restaurant Recommendation Dataset to build a restaurant recommendation system to predict what restaurants customers are most likely to order from, given the customer location, restaurant information, and customer order history.

ii) Analyzing Customer Sentiment

Zomato uses natural language processing and machine learning to understand customer sentiment from social media posts and customer reviews. These help the company gauge the inclination of its customer base towards the brand. Deep learning models analyze the sentiment of brand mentions on social networking sites like Twitter, Instagram, LinkedIn, and Facebook. These analytics give the company insights that help it build the brand and understand the target audience.

iii) Predicting Food Preparation Time (FPT)

Food preparation time is an essential variable in the estimated delivery time of an order placed by a customer on Zomato. It depends on numerous factors like the number of dishes ordered, time of day, footfall in the restaurant, day of the week, etc. Accurate prediction of the food preparation time leads to a better estimated delivery time, which makes delivery partners less likely to breach it. Zomato uses a bidirectional LSTM-based deep learning model that considers all these features and predicts the food preparation time for each order in real time.

Data scientists are companies' secret weapons when analyzing customer sentiment and behavior and using it to drive conversion, loyalty, and profits. These 10 data science case study projects with examples and solutions show you how various organizations use data science technologies to succeed and stay at the top of their field. To summarize, data science has not only accelerated the performance of companies but has also made it possible to manage and sustain that performance with ease.

FAQs on Data Analysis Case Studies

A case study in data science is an in-depth analysis of a real-world problem using data-driven approaches. It involves collecting, cleaning, and analyzing data to extract insights and solve challenges, offering practical insights into how data science techniques can address complex issues across various industries.

To create a data science case study, identify a relevant problem, define objectives, and gather suitable data. Clean and preprocess data, perform exploratory data analysis, and apply appropriate algorithms for analysis. Summarize findings, visualize results, and provide actionable recommendations, showcasing the problem-solving potential of data science techniques.

About the Author

ProjectPro is the only online platform designed to help professionals gain practical, hands-on experience in big data, data engineering, data science, and machine learning related technologies. Having over 270+ reusable project templates in data science and big data with step-by-step walkthroughs,

© 2024 Iconiq Inc.

Data Analytics Case Study Guide 2024

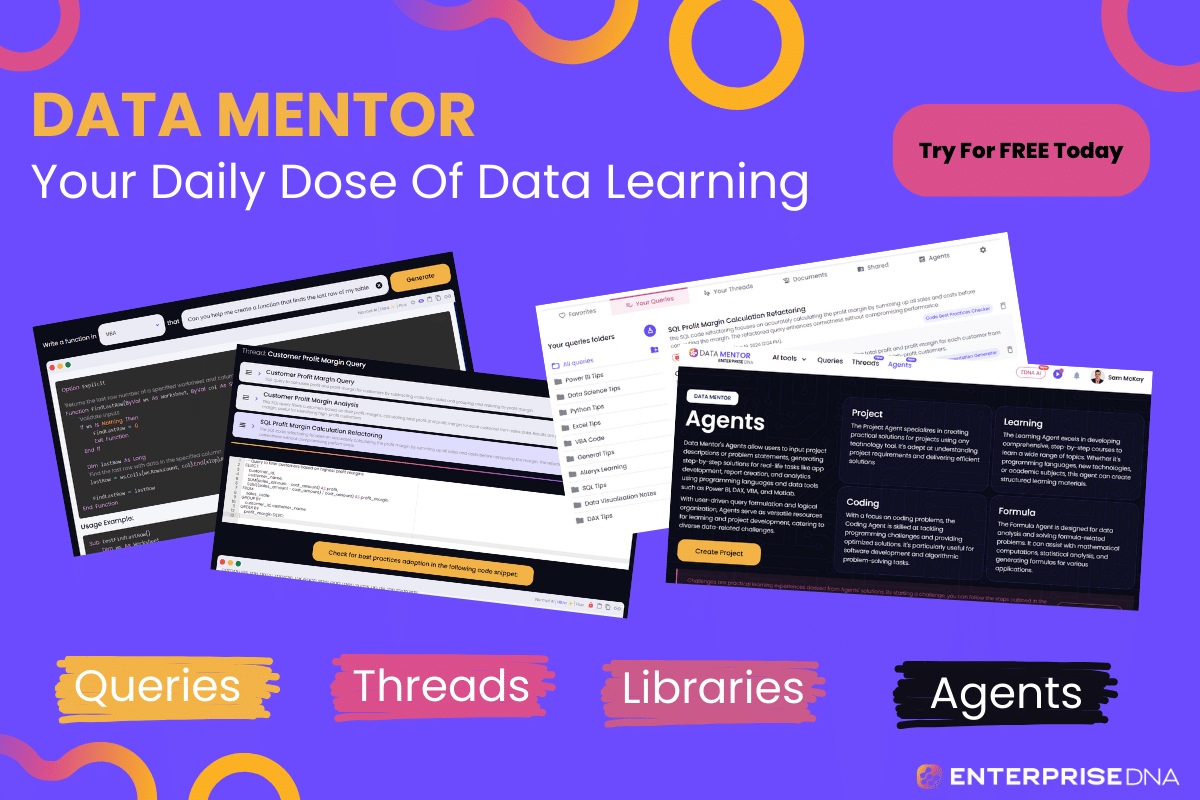

by Sam McKay, CFA | Data Analytics

Data analytics case studies reveal how businesses harness data for informed decisions and growth.

For aspiring data professionals, mastering the case study process will enhance your skills and increase your career prospects.

So, how do you approach a case study?

Use these steps to process a data analytics case study:

Understand the Problem: Grasp the core problem or question addressed in the case study.

Collect Relevant Data: Gather data from diverse sources, ensuring accuracy and completeness.

Apply Analytical Techniques: Use appropriate methods aligned with the problem statement.

Visualize Insights: Utilize visual aids to showcase patterns and key findings.

Derive Actionable Insights: Focus on deriving meaningful actions from the analysis.

This article will give you detailed steps to navigate a case study effectively and understand how it works in real-world situations.

By the end of the article, you will be better equipped to approach a data analytics case study, strengthening your analytical prowess and practical application skills.

Let’s dive in!

What is a Data Analytics Case Study?

A data analytics case study is a real or hypothetical scenario where analytics techniques are applied to solve a specific problem or explore a particular question.

It’s a practical approach that uses data analytics methods to turn data into meaningful insights. This structured method helps individuals or organizations make sense of data effectively.

Additionally, it’s a way to learn by doing, where there’s no single right or wrong answer in how you analyze the data.

So, what are the components of a case study?

Key Components of a Data Analytics Case Study

A data analytics case study comprises essential elements that structure the analytical journey:

Problem Context: A case study begins with a defined problem or question. It provides the context for the data analysis, setting the stage for exploration and investigation.

Data Collection and Sources: It involves gathering relevant data from various sources, ensuring data accuracy, completeness, and relevance to the problem at hand.

Analysis Techniques: Case studies employ different analytical methods, such as statistical analysis, machine learning algorithms, or visualization tools, to derive meaningful conclusions from the collected data.

Insights and Recommendations: The ultimate goal is to extract actionable insights from the analyzed data, offering recommendations or solutions that address the initial problem or question.

Now that you have a better understanding of what a data analytics case study is, let’s talk about why we need and use them.

Why Case Studies are Integral to Data Analytics

Case studies serve as invaluable tools in the realm of data analytics, offering multifaceted benefits that bolster an analyst’s proficiency and impact:

Real-Life Insights and Skill Enhancement: Examining case studies provides practical, real-life examples that expand knowledge and refine skills. These examples offer insights into diverse scenarios, aiding in a data analyst’s growth and expertise development.

Validation and Refinement of Analyses: Case studies demonstrate the effectiveness of data-driven decisions across industries, providing validation for analytical approaches. They showcase how organizations benefit from data analytics, which also helps analysts refine their own methodologies.

Showcasing Data Impact on Business Outcomes: These studies show how data analytics directly affects business results, like increasing revenue, reducing costs, or delivering other measurable advantages. Understanding these impacts helps articulate the value of data analytics to stakeholders and decision-makers.

Learning from Successes and Failures: By exploring a case study, analysts glean insights from others’ successes and failures, acquiring new strategies and best practices. This learning experience facilitates professional growth and the adoption of innovative approaches within their own data analytics work.

Including case studies in a data analyst’s toolkit helps gain more knowledge, improve skills, and understand how data analytics affects different industries.

Using these real-life examples boosts confidence and success, guiding analysts to make better and more impactful decisions in their organizations.

But not all case studies are the same.

Let’s talk about the different types.

Types of Data Analytics Case Studies

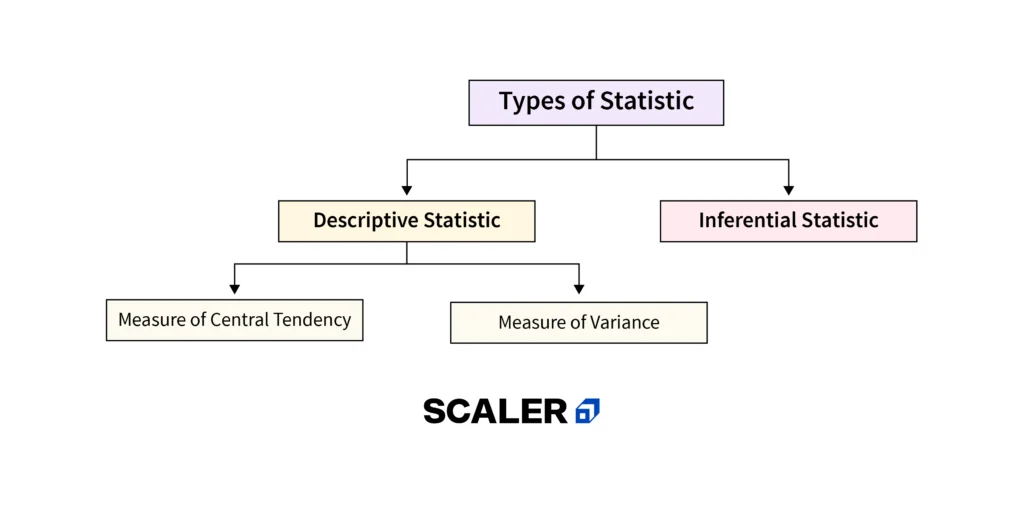

Data analytics encompasses various approaches tailored to different analytical goals:

Exploratory Case Study: These involve delving into new datasets to uncover hidden patterns and relationships, often without a predefined hypothesis. They aim to gain insights and generate hypotheses for further investigation.

Predictive Case Study: These utilize historical data to forecast future trends, behaviors, or outcomes. By applying predictive models, they help anticipate potential scenarios or developments.

Diagnostic Case Study: This type focuses on understanding the root causes or reasons behind specific events or trends observed in the data. It digs deep into the data to provide explanations for occurrences.

Prescriptive Case Study: This type goes beyond analysis and provides actionable recommendations or strategies derived from the analyzed data. It guides decision-making by suggesting optimal courses of action based on the insights gained.

Each type has a specific role in using data to find important insights, helping in decision-making, and solving problems in various situations.

Regardless of the type of case study you encounter, here are some steps to help you process them.

Roadmap to Handling a Data Analysis Case Study

Embarking on a data analytics case study requires a systematic approach, step-by-step, to derive valuable insights effectively.

Here are the steps to help you through the process:

Step 1: Understanding the Case Study Context: Immerse yourself in the intricacies of the case study. Delve into the industry context, understanding its nuances, challenges, and opportunities.

Identify the central problem or question the study aims to address. Clarify the objectives and expected outcomes, ensuring a clear understanding before diving into data analytics.

Step 2: Data Collection and Validation: Gather data from diverse sources relevant to the case study. Prioritize accuracy, completeness, and reliability during data collection. Conduct thorough validation processes to rectify inconsistencies, ensuring high-quality and trustworthy data for subsequent analysis.

Step 3: Problem Definition and Scope: Define the problem statement precisely. Articulate the objectives and limitations that shape the scope of your analysis. Identify influential variables and constraints, providing a focused framework to guide your exploration.

Step 4: Exploratory Data Analysis (EDA): Leverage exploratory techniques to gain initial insights. Visualize data distributions, patterns, and correlations, fostering a deeper understanding of the dataset. These explorations serve as a foundation for more nuanced analysis.

Step 5: Data Preprocessing and Transformation: Cleanse and preprocess the data to eliminate noise, handle missing values, and ensure consistency. Transform data formats or scales as required, preparing the dataset for further analysis.

Step 6: Data Modeling and Method Selection: Select analytical models aligning with the case study’s problem, employing statistical techniques, machine learning algorithms, or tailored predictive models.

In this phase, it’s important to develop data modeling skills. This helps create visuals of complex systems using organized data, which helps solve business problems more effectively.

Understand key data modeling concepts, utilize essential tools like SQL for database interaction, and practice building models from real-world scenarios.

Furthermore, strengthen data cleaning skills for accurate datasets, and stay updated with industry trends to ensure relevance.

Step 7: Model Evaluation and Refinement: Evaluate the performance of applied models rigorously. Iterate and refine models to enhance accuracy and reliability, ensuring alignment with the objectives and expected outcomes.

Step 8: Deriving Insights and Recommendations: Extract actionable insights from the analyzed data. Develop well-structured recommendations or solutions based on the insights uncovered, addressing the core problem or question effectively.

Step 9: Communicating Results Effectively: Present findings, insights, and recommendations clearly and concisely. Utilize visualizations and storytelling techniques to convey complex information compellingly, ensuring comprehension by stakeholders.

Step 10: Reflection and Iteration: Reflect on the entire analysis process and outcomes. Identify potential improvements and lessons learned. Embrace an iterative approach, refining methodologies for continuous enhancement and future analyses.

This step-by-step roadmap provides a structured framework for thorough and effective handling of a data analytics case study.
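As a rough illustration of how steps 4 through 7 fit together, the sketch below walks a tiny dataset through a quality check, median imputation, summary statistics, and a holdout comparison of two naive forecasting rules. Everything here is hypothetical: the daily order counts, the choice of median imputation, and the two baseline "models" are stand-ins chosen to keep the example short.

```python
import statistics

# Hypothetical raw data: daily orders, with one missing value recorded as None
daily_orders = [100, 110, None, 130, 140, 150, 160, 170]

# Step 4 (EDA): look at the data quality before touching anything
missing_count = sum(1 for v in daily_orders if v is None)

# Step 5 (preprocessing): fill the gap with the median of the observed values
observed = [v for v in daily_orders if v is not None]
median = statistics.median(observed)
cleaned = [median if v is None else v for v in daily_orders]

summary = {
    "mean": statistics.mean(cleaned),
    "stdev": statistics.stdev(cleaned),
    "min": min(cleaned),
    "max": max(cleaned),
}


def mae(actual, predicted):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Steps 6 and 7 (modeling and evaluation): hold out the last two days
train, test = cleaned[:-2], cleaned[-2:]
mean_model = [statistics.mean(train)] * len(test)   # predict the training mean
last_value_model = [train[-1]] * len(test)          # predict the last seen value

mae_mean = mae(test, mean_model)
mae_last = mae(test, last_value_model)
print(missing_count, median, summary)
print(round(mae_mean, 2), round(mae_last, 2))
```

Comparing candidate models on data they have not seen is the core of step 7; whichever rule has the lower holdout error becomes the baseline that later models need to beat.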

Once the analysis is done, a crucial step remains: presenting the case study.

Presenting Your Data Analytics Case Study

Presenting a data analytics case study is a vital part of the process. When presenting your case study, clarity and organization are paramount.

To achieve this, follow these key steps:

Structuring Your Case Study: Start by outlining relevant and accurate main points. Ensure these points align with the problem addressed and the methodologies used in your analysis.

Crafting a Narrative with Data: Start with a brief overview of the issue, then explain your method and steps, covering data collection, cleaning, statistical analysis, and advanced modeling.

Visual Representation for Clarity: Utilize various visual aids—tables, graphs, and charts—to illustrate patterns, trends, and insights. Ensure these visuals are easy to comprehend and seamlessly support your narrative.

Highlighting Key Information: Use bullet points to emphasize essential information, maintaining clarity and allowing the audience to grasp key takeaways effortlessly. Bold key terms or phrases to draw attention and reinforce important points.

Addressing Audience Queries: Anticipate and be ready to answer audience questions regarding methods, assumptions, and results. Demonstrating a profound understanding of your analysis instills confidence in your work.

Integrity and Confidence in Delivery: Maintain a neutral tone and avoid exaggerated claims about findings. Present your case study with integrity, clarity, and confidence to ensure the audience appreciates and comprehends the significance of your work.

By organizing your presentation well, telling a clear story through your analysis, and using visuals wisely, you can effectively share your data analytics case study.

This method helps people understand better, stay engaged, and draw valuable conclusions from your work.

By now, you should feel more confident about working through a case study. But as with any process, there are challenges you may encounter.

Key Challenges in Data Analytics Case Studies

A data analytics case study can present various hurdles that necessitate strategic approaches for successful navigation:

Challenge 1: Data Quality and Consistency

Challenge: Inconsistent or poor-quality data can impede analysis, leading to erroneous insights and flawed conclusions.

Solution: Implement rigorous data validation processes, ensuring accuracy, completeness, and reliability. Employ data cleansing techniques to rectify inconsistencies and enhance overall data quality.
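As a minimal sketch of what validation and cleansing might look like in Python, the example below flags duplicate keys, missing fields, and negative amounts, then keeps the first complete row for each key. The records, the field names (order_id, customer, amount), and the rules themselves are hypothetical; real checks depend on your dataset.

```python
from collections import Counter

# Hypothetical order records; field names and values are illustrative assumptions.
records = [
    {"order_id": 1, "customer": "A", "amount": 120.0},
    {"order_id": 2, "customer": "B", "amount": None},   # missing value
    {"order_id": 2, "customer": "B", "amount": 75.5},   # repeated key
    {"order_id": 3, "customer": "", "amount": -10.0},   # blank field, negative amount
]


def validate(rows, key="order_id"):
    """Return (row_index, issue) pairs describing data quality problems."""
    issues = []
    key_counts = Counter(row[key] for row in rows)
    for i, row in enumerate(rows):
        if key_counts[row[key]] > 1:
            issues.append((i, "duplicate key"))
        for field, value in row.items():
            if value is None or value == "":
                issues.append((i, f"missing {field}"))
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            issues.append((i, "negative amount"))
    return issues


def clean(rows, key="order_id"):
    """Keep the first complete, non-negative row for each key."""
    seen, kept = set(), []
    for row in rows:
        complete = all(v is not None and v != "" for v in row.values())
        if complete and row["amount"] >= 0 and row[key] not in seen:
            seen.add(row[key])
            kept.append(row)
    return kept


issues = validate(records)
cleaned = clean(records)
for index, issue in issues:
    print(f"row {index}: {issue}")
```

Reporting issues separately from cleaning keeps a record of what was wrong with the raw data, which is useful when you explain your assumptions to an interviewer or stakeholder.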

Challenge 2: Complexity and Scale of Data

Challenge: Managing vast volumes of data with diverse formats and complexities poses analytical challenges.

Solution: Utilize scalable data processing frameworks and tools capable of handling diverse data types. Implement efficient data storage and retrieval systems to manage large-scale datasets effectively.
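The idea underneath most scalable tools is to process data in a stream rather than loading everything into memory at once. The sketch below is not a distributed framework, only that streaming idea in plain Python. It assumes a hypothetical CSV with region and amount columns, and uses an in-memory string as a stand-in for a large file.

```python
import csv
import io
from collections import defaultdict

# Stand-in for a large file on disk; the columns and values are made up.
raw = io.StringIO(
    "region,amount\n"
    "north,100\n"
    "south,250\n"
    "north,50\n"
    "west,75\n"
)


def running_totals(lines, group_field, value_field):
    """Aggregate one row at a time so memory use does not grow with row count."""
    totals = defaultdict(float)
    for row in csv.DictReader(lines):
        totals[row[group_field]] += float(row[value_field])
    return dict(totals)


totals = running_totals(raw, "region", "amount")
print(totals)
```

Because only the running totals are held in memory, the same function works whether the input has four rows or many millions, as long as the number of distinct groups stays manageable.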

Challenge 3: Interpretation and Contextual Understanding

Challenge: Interpreting data without contextual understanding or domain expertise can lead to misinterpretations.

Solution: Collaborate with domain experts to contextualize data and derive relevant insights. Invest in understanding the nuances of the industry or domain under analysis to ensure accurate interpretations.

Challenge 4: Privacy and Ethical Concerns

Challenge: Balancing data access for analysis while respecting privacy and ethical boundaries poses a challenge.

Solution: Implement robust data governance frameworks that prioritize data privacy and ethical considerations. Ensure compliance with regulatory standards and ethical guidelines throughout the analysis process.

Challenge 5: Resource Limitations and Time Constraints

Challenge: Limited resources and time constraints hinder comprehensive analysis and exhaustive data exploration.

Solution: Prioritize key objectives and allocate resources efficiently. Employ agile methodologies to iteratively analyze and derive insights, focusing on the most impactful aspects within the given timeframe.

Recognizing these challenges is key; it helps data analysts adopt proactive strategies to mitigate obstacles. This enhances the effectiveness and reliability of insights derived from a data analytics case study.

Now, let’s talk about the best software tools you should use when working with case studies.

Top 5 Software Tools for Case Studies

In the realm of case studies within data analytics, leveraging the right software tools is essential.

Here are some top-notch options:

Tableau: Renowned for its data visualization prowess, Tableau transforms raw data into interactive, visually compelling representations, ideal for presenting insights within a case study.

Python and R Libraries: These flexible programming languages provide many tools for handling data, doing statistics, and working with machine learning, meeting various needs in case studies.

Microsoft Excel: A staple tool for data analytics, Excel provides a user-friendly interface for basic analytics, making it useful for initial data exploration in a case study.

SQL Databases: Structured Query Language (SQL) databases assist in managing and querying large datasets, essential for organizing case study data effectively.

Statistical Software (e.g., SPSS, SAS): Specialized statistical software enables in-depth statistical analysis, aiding in deriving precise insights from case study data.
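To illustrate how two of these tools can work together, here is a small sketch that runs a SQL query from Python using the built-in sqlite3 module. The orders table, its columns, and the rows are hypothetical, and an in-memory database stands in for a real one.

```python
import sqlite3

# In-memory database used purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 30.0), ("bob", 20.0), ("alice", 50.0)],
)

# Order count and revenue per customer, highest revenue first.
rows = conn.execute(
    """
    SELECT customer, COUNT(*) AS n_orders, SUM(amount) AS revenue
    FROM orders
    GROUP BY customer
    ORDER BY revenue DESC
    """
).fetchall()
conn.close()

for customer, n_orders, revenue in rows:
    print(customer, n_orders, revenue)
```

The same GROUP BY pattern covers many of the metrics a case question asks for, such as revenue per customer or orders per region.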

Choosing the right mix of these tools for each case study’s needs improves both the depth of the analysis and the quality of the results.

Final Thoughts

Case studies in data analytics are helpful guides. They give real-world insights, improve skills, and show how data-driven decisions work.

Using case studies helps analysts learn, be creative, and make essential decisions confidently in their data work.


Frequently Asked Questions

What are the key steps to analyzing a data analytics case study?

When analyzing a case study, you should follow these steps:

Clarify the problem: Ensure you thoroughly understand the problem statement and the scope of the analysis.

Make assumptions: Define your assumptions to establish a feasible framework for analyzing the case.

Gather context: Acquire relevant information and context to support your analysis.

Analyze the data: Perform calculations, create visualizations, and conduct statistical analysis on the data.

Provide insights: Draw conclusions and develop actionable insights based on your analysis.
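The last two steps can be sketched in a few lines of Python. The example below assumes a hypothetical question, "did daily sign-ups change after a launch?", with made-up numbers. It computes simple summary statistics and turns them into a one-sentence takeaway.

```python
from statistics import mean, median

# Hypothetical daily sign-ups for the week before and after a launch.
before = [100, 110, 90, 105, 95, 100, 100]
after = [120, 115, 125, 110, 130, 120, 120]


def summarize(values):
    """Return the basic statistics used to compare the two periods."""
    return {"mean": mean(values), "median": median(values)}


summary = {"before": summarize(before), "after": summarize(after)}

# Relative change in the average, expressed as a fraction of the baseline.
baseline = summary["before"]["mean"]
lift = (summary["after"]["mean"] - baseline) / baseline
insight = f"Average daily sign-ups changed by {lift:.0%}"
print(insight)
```

A comparison like this shows only that the averages differ. In an interview, you would also state your assumptions, for example that no other change happened during the same period, before calling it an insight.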

How can you effectively interpret results during a data scientist case study job interview?

During your next data science interview, interpret case study results succinctly and clearly. Utilize visual aids and numerical data to bolster your explanations, ensuring comprehension.

Frame the results in an audience-friendly manner, emphasizing relevance. Concentrate on deriving insights and actionable steps from the outcomes.

How do you showcase your data analyst skills in a project?

To demonstrate your skills effectively, consider these essential steps. Begin by selecting a problem that allows you to exhibit your capacity to handle real-world challenges through analysis.

Methodically document each phase, encompassing data cleaning, visualization, statistical analysis, and the interpretation of findings.

Utilize descriptive analysis techniques and effectively communicate your insights using clear visual aids and straightforward language. Ensure your project code is well-structured, with detailed comments and documentation, showcasing your proficiency in handling data in an organized manner.

Lastly, emphasize your expertise in SQL queries, programming languages, and various analytics tools throughout the project. Together, these steps demonstrate your competence as a data analyst.

Can you provide an example of a successful data analytics project using key metrics?

A prime illustration is utilizing analytics in healthcare to forecast hospital readmissions. Analysts leverage electronic health records, patient demographics, and clinical data to identify high-risk individuals.

Implementing preventive measures based on these key metrics helps curtail readmission rates, enhancing patient outcomes and cutting healthcare expenses.

This demonstrates how data analytics, driven by metrics, effectively tackles real-world challenges, yielding impactful solutions.

Why would a company invest in data analytics?

Companies invest in data analytics to gain valuable insights, enabling informed decision-making and strategic planning. This investment helps optimize operations, understand customer behavior, and stay competitive in their industry.

Ultimately, leveraging data analytics empowers companies to make smarter, data-driven choices, leading to enhanced efficiency, innovation, and growth.


Data analytics case study data files

Inventory analysis case study data files:

Beginning Inventory

Purchase Prices

Vendor Invoices

Ending Inventory

Inventory analysis case study instructor files:

Instructor guide

Phase 1 - Data Collection and Preparation

Phase 2 - Data Discovery and Visualization

Phase 3 - Introduction to Statistical Analysis


Julie Peters

University Relations leader, PwC US

© 2017 - 2024 PwC. All rights reserved. PwC refers to the PwC network and/or one or more of its member firms, each of which is a separate legal entity. Please see www.pwc.com/structure for further details.


Top 10 real-world data science case studies.

Aditya Sharma

Aditya is a content writer with 5+ years of experience writing for various industries including Marketing, SaaS, B2B, IT, and Edtech among others. You can find him watching anime or playing games when he’s not writing.

Frequently Asked Questions

Real-world data science case studies differ significantly from academic examples. While academic exercises often feature clean, well-structured data and simplified scenarios, real-world projects tackle messy, diverse data sources with practical constraints and genuine business objectives. These case studies reflect the complexities data scientists face when translating data into actionable insights in the corporate world.

Real-world data science projects come with common challenges. Data quality issues, including missing or inaccurate data, can hinder analysis. Domain expertise gaps may result in misinterpretation of results. Resource constraints might limit project scope or access to necessary tools and talent. Ethical considerations, like privacy and bias, demand careful handling.

Lastly, as data and business needs evolve, data science projects must adapt and stay relevant, posing an ongoing challenge.

Real-world data science case studies play a crucial role in helping companies make informed decisions. By analyzing their own data, businesses gain valuable insights into customer behavior, market trends, and operational efficiencies.

These insights empower data-driven strategies, aiding in more effective resource allocation, product development, and marketing efforts. Ultimately, case studies bridge the gap between data science and business decision-making, enhancing a company's ability to thrive in a competitive landscape.

Key takeaways from these case studies for organizations include the importance of cultivating a data-driven culture that values evidence-based decision-making. Investing in robust data infrastructure is essential to support data initiatives. Collaborating closely between data scientists and domain experts ensures that insights align with business goals.

Finally, continuous monitoring and refinement of data solutions are critical for maintaining relevance and effectiveness in a dynamic business environment. Embracing these principles can lead to tangible benefits and sustainable success in real-world data science endeavors.

Data science is a powerful driver of innovation and problem-solving across diverse industries. By harnessing data, organizations can uncover hidden patterns, automate repetitive tasks, optimize operations, and make informed decisions.

In healthcare, for example, data-driven diagnostics and treatment plans improve patient outcomes. In finance, predictive analytics enhances risk management. In transportation, route optimization reduces costs and emissions. Data science empowers industries to innovate and solve complex challenges in ways that were previously unimaginable.


Data Analysis Case Study: Learn From Humana’s Automated Data Analysis Project

Lillian Pierson, P.E.


Got data? Great! Looking for that perfect data analysis case study to help you get started using it? You’re in the right place.

If you’ve ever struggled to decide what to do next with your data projects, to actually find meaning in the data, or even to decide what kind of data to collect, then KEEP READING…

Deep down, you know what needs to happen. You need to initiate and execute a data strategy that really moves the needle for your organization. One that produces seriously awesome business results.

But how? You’re in the right place to find out.

As a data strategist who has worked with 10 percent of Fortune 100 companies, today I’m sharing with you a case study that demonstrates just how real businesses are making real wins with data analysis.

In the post below, we’ll look at:

- A shining data success story;

- What went on ‘under-the-hood’ to support that successful data project; and

- The exact data technologies used by the vendor, to take this project from pure strategy to pure success

If you prefer to watch this information rather than read it, it’s captured in the video below:

Here’s the url too: https://youtu.be/xMwZObIqvLQ

3 Action Items You Need To Take

To actually use the data analysis case study you’re about to get – you need to take 3 main steps. Those are:

- Reflect upon your organization as it is today (I left you some prompts below – to help you get started)

- Review winning data case collections (starting with the one I’m sharing here) and identify 5 that seem the most promising for your organization given its current set-up

- Assess your organization AND those 5 winning case collections. Based on that assessment, select the “QUICK WIN” data use case that offers your organization the most bang for its buck