Official websites use .gov

A .gov website belongs to an official government organization in the United States.

Secure .gov websites use HTTPS

A lock ( ) or https:// means you've safely connected to the .gov website. Share sensitive information only on official, secure websites.

Public Health Surveillance in Electronic Health Records: Lessons From PCORnet

ORIGINAL RESEARCH — Volume 21 — July 11, 2024

Nidhi Ghildayal, PhD 1 ; Kshema Nagavedu, MPH 1 ; Jennifer L. Wiltz, MD, MPH 2 ; Soowoo Back, MPH 1 ; Tegan K. Boehmer, PhD 3 ; Christine Draper 1 ; Adi V. Gundlapalli, MD, PhD 3 ; Casie Horgan, MPH 1 ; Keith A. Marsolo, PhD 4 ; Nik R. Mazumder, MD, MPH 5 ; Juliane Reynolds, MPH 1 ; Matthew Ritchey, DPT 3 ; Sharon Saydah, PhD 6 ; Yacob G. Tedla 7 ; Thomas W. Carton, PhD 8 ; Jason P. Block, MD, MPH 1 ( View author affiliations )

Suggested citation for this article: Ghildayal N, Nagavedu K, Wiltz JL, Back S, Boehmer TK, Draper C, et al. Public Health Surveillance in Electronic Health Records: Lessons From PCORnet. Prev Chronic Dis 2024;21:230417. DOI: http://dx.doi.org/10.5888/pcd21.230417 .

PEER REVIEWED

Introduction

Acknowledgments, author information.

What is already known on this topic?

Existing survey-based surveillance programs provide important information on the epidemiology of chronic and infectious diseases. Electronic health record (EHR) data can be used to supplement surveillance efforts.

What is added by this report?

In this study, we describe the attributes and challenges of using EHR data for disease surveillance. We describe surveillance case studies and future directions for enhancing opportunities to use EHR data for public health surveillance.

What are the implications for public health practice?

EHR data have an important role for public health surveillance both for chronic and infectious diseases, providing comprehensive information available soon after data collection. Strategic funding and financing models need to be developed, and federal, state, and local support could help establish EHRs as an important sustainable mechanism for surveillance.

PCORnet, the National Patient-Centered Clinical Research Network, is a large research network of health systems that map clinical data to a standardized data model. In 2018, we expanded existing infrastructure to facilitate use for public health surveillance. We describe benefits and challenges of using PCORnet for surveillance and describe case studies.

In 2018, infrastructure enhancements included addition of a table to store patients’ residential zip codes and expansion of a modular program to generate population health statistics across conditions. Chronic disease surveillance case studies conducted in 2019 assessed atrial fibrillation (AF) and cirrhosis. In April 2020, PCORnet established an infrastructure to support COVID-19 surveillance with institutions frequently updating their electronic health record data.

By August 2023, 53 PCORnet sites (84%) had a 5-digit zip code available on at least 95% of their patient populations. Among 148,223 newly diagnosed AF patients eligible for oral anticoagulant (OAC) therapy, 43.3% were on any OAC (17.8% warfarin, 28.5% any novel oral anticoagulant) within a year of the AF diagnosis. Among 60,268 patients with cirrhosis (2015–2019), common documented etiologies included unknown (48%), hepatitis C infection (23%), and alcohol use (22%). During October 2022 through December 2023, across 34 institutions, the proportion of COVID-19 patients who were cared for in the inpatient setting was 9.1% among 887,051 adults aged 20 years or older and 6.0% among 139,148 children younger than 20 years.

Conclusions

PCORnet provides important data that may augment traditional public health surveillance programs across diverse conditions. PCORnet affords longitudinal population health assessments among large catchments of the population with clinical, treatment, and geographic information, with capabilities to deliver rapid information needed during public health emergencies.

Electronic health records (EHRs) contain extensive longitudinal health information about patients and populations (1). Over the last decade, prompted by federal meaningful use guidelines and incentives, EHRs have become ubiquitous in health care settings (2). Because of their wide availability, EHRs are a viable option for disease surveillance and have some advantages over traditional survey-based surveillance methods, such as the National Health and Nutrition Examination Survey and the Behavioral Risk Factor Surveillance System ( Table 1 ) (3,4).

Some of the most important attributes of EHRs for surveillance include timeliness of data and availability for large populations. EHR data are collected daily through routine clinical care delivery and can be made available quickly if resources are available for processing and data curation. In contrast, large national surveillance programs typically use surveys or field data collection, followed by data processing that can lead to extensive lag times between data collection and availability. The scope of EHR data available also can provide important granular information about subgroups. For example, although retrieving metro area and small area modeling estimates via national surveillance surveys is possible, these data are often restricted for privacy reasons and, in some cases, are imputed rather than directly measured (4–6). Furthermore, the sample size of surveys limits the availability of data on rare conditions or less common subgroups of individuals, such as among racial and ethnic minority groups (4,6). Because of the availability of data on vast populations that allow for numbers large enough to stratify by even uncommon subgroups, EHRs can provide data for specific geographic regions and populations (3,6,7).

Another area of potential benefit of EHRs for surveillance is the availability of longitudinal objective, measured data, such as vital signs and laboratory values. These data allow for more accurate definitions (ie, phenotypes) of disease, such as using a combination of medication prescriptions, laboratory values, and vital signs to define chronic disease (4). Measured data can also enable an objective determination of disease severity and disease control over time, such as defining whether patients are meeting guideline control targets for diabetes or hypertension by using glycosylated hemoglobin or measured blood pressure values. These data can provide information both cross-sectionally and longitudinally in cohorts that receive care over time. National surveillance surveys typically rely on self-reported information or single vital sign or laboratory values to define disease prevalence and incidence. Lastly, EHRs can offer longitudinal information with short latency, allowing for capture of information on changing health status, in contrast to that collected through the lengthy process of repeated survey administration (7).

EHR data present some challenges, including with data quality and representativeness. Missing data also are common for myriad reasons. The fragmented health care system in the US precludes comprehensive data integration across care settings, and patients often receive care in multiple institutions with different data systems (8,9). Even when information from other health care institutions can be viewed within the health care system that serves as the medical home for a patient (eg, Care Everywhere in Epic), that information may not be captured in clinical data warehouses that can be used for surveillance. Clinical notes written in free text may not be easily translated to structured data fields, resulting in missing information on symptoms and exposures (10). Furthermore, clinical data available in EHRs do not typically include information on social determinants, quality-of-life measures, and other health behavior information that could be more readily collected through national surveys. Some social determinants data can be integrated when available geographic information can be linked to community-level data from the US Census and other data resources (11,12). Another drawback of EHR surveillance is that data may not be representative, and clinical practice patterns may differ between sites, leading to heterogeneity in data available due solely to the differential ascertainment of diagnoses, for example. Exploration of the epidemiology of disease by geography also is heavily dependent on the number of institutions per geographic area providing data. EHR data have information on patients who are seeking care, likely biasing inferences toward certain demographic groups receiving more medical care and patients who have chronic conditions, have health insurance coverage, or live in urban areas (12). However, unlike claims data that are typically limited to commercial insurance, Medicaid, or Medicare separately, EHR data are typically agnostic to payer source and have information on patients with a diverse array of insurance sources, including those who are uninsured (13) ( Table 1 ).

In this article, we discuss the use of EHR data for public health surveillance in a large national research network and present case studies of its use for chronic disease and its later adaptation for COVID-19 surveillance during a public health emergency.

PCORnet as a data source for public health surveillance

PCORnet, the National Patient-Centered Clinical Research Network, is a research infrastructure program that was established to support use of health care data for comparative effectiveness research (14). This network-of-networks includes more than 60 health care systems embedded in 8 Clinical Research Networks (https://pcornet.org/network/), with a regulatory infrastructure that prioritizes data sharing while protecting patient privacy. Data from millions of patients from different source EHR systems are harmonized locally into a standard data set, called a Common Data Model (CDM). This CDM is updated over time to incorporate new and evolving data elements and is nearly identical across all participating institutions, allowing for centralized querying and interoperability of data across sites (15). The data elements include comprehensive clinical information, including prescriptions, diagnoses, procedures, vital measures, laboratory values, and geographic information, among other data elements, from all care settings relevant for a specific health care system (eg, ambulatory, emergency department, inpatient).

Quarterly data quality reviews allow for a comprehensive assessment of conformance, completeness, plausibility, and persistence, with feedback provided on issues discovered. Data sharing across the network is accommodated by a Master Data Sharing Agreement, with further regulatory processes outlined to accommodate varied circumstances required for research and operations (14,16). PCORnet also has a “front door” mechanism for investigators to request data queries or study collaborators (17).

PCORnet has a distributed query infrastructure, and users can submit a query and obtain a coordinated response that combines data across participating health systems (16). PCORnet also is an engaged network in which investigators, informatics specialists, clinicians, patients, and other partners from sites can provide context and information regarding the data available from that site.

Reusable SAS-based tools that have been developed for PCORnet are available for querying data, with regular updates for CDM changes and to enhance functionality for new data needs. These tools are modular descriptive programs that can be quickly adapted to create and characterize cohorts with aggregate data, using tables and variables defined in the PCORnet CDM. While PCORnet has protocols allowing for the transfer of patient-level data to requestors, the availability of a reusable process for obtaining aggregate data from partners allows for assessments that can often be completed quickly. While less flexible than centralized, pooled data available for analysis, aggregate data are typically sufficient for surveillance.

PCORnet has several capabilities that foster successful public health surveillance. As a national EHR surveillance program with multiple contributing entities, PCORnet contains data on more than 30 million patients annually (16). PCORnet has broad geographic representation with most sites providing data from both inpatient and outpatient settings (https://pcornet.org/data/). The network provides access to patients with longitudinal follow-up, often over many years; populations large enough to allow for examination of subgroups, such as by race and ethnicity, geography, and multimorbidity; and opportunities to capture adequate numbers of patients with rare diseases to make important inferences about prevalence. Data captured on race are considerably more complete than those found in some other commonly used clinical data sets (18). For example, among all patients with encounters in 34 PCORnet sites during October 2022 through December 2023, race and ethnicity information was missing for 9.5% of those younger than 20 years and 8.7% of those aged 20 years or older ( Table 2 ). Race and ethnicity missingness was lower for patients with diagnostic codes for COVID-19, positive laboratory tests for SARS-CoV-2, or recent prescriptions for COVID-19 medications: 7.6% for patients younger than 20 years and 5.1% for patients aged 20 years or older (data not shown).

In 2018, PCORnet began to expand capabilities of the network to conduct EHR-based surveillance, specifically focused on chronic disease. The program was initially used for pilot projects that built capacity for geographic data capture. In March 2020, the network began exploring whether its resources, including the newly established capabilities for chronic disease surveillance, could be adapted for COVID-19 surveillance. This shift required some changes, especially to provide more timely data. PCORnet expanded its infrastructure to include the ability to frequently, up to twice monthly, refresh data. With regularly refreshed data and modular programs, data can now be available for public health professionals and researchers in a matter of weeks. Simple analyses that only require basic counts and frequencies can be provided even more quickly.

Expanded data and tools for surveillance and case studies in PCORnet

Starting in 2019 with CDM version 5.0, PCORnet incorporated a new, optional CDM table containing patient-level geographic information. This table allowed sites to include patient information on 9- and 5-digit zip code, city, state, and the start and end date for that address information. To accommodate surveillance queries in PCORnet, we developed a geographic assessment module to query this address data (16). The module allows for the characterization of a cohort based on the most recent address stratified by zip code, city, state, or Census region. Queries also can pull patient-level data with zip-code or mapped US Census Bureau’s data elements. The geographic module was piloted at several PCORnet health systems for chronic disease surveillance case studies, including atrial fibrillation (AF) and liver cirrhosis.

Starting in April 2020, select PCORnet institutions collaborated on a response to the COVID-19 pandemic that would allow for more frequent querying of data. Institutions developed a CDM that contained data for a subset of their total patient population, including only patients who had a diagnostic code for a respiratory virus or infection or a viral laboratory test for SARS-CoV-2. The inclusion criteria for this subset CDM were later expanded to include COVID-19 therapeutics and vaccines. Filtering the broader population using these criteria allowed for quicker refreshes of data, facilitating reports on data with a latency of a few weeks, in contrast to the regular quarterly updates. This process also was a more practical approach for sites, given that frequent refreshes of their complete patient population data would take extensive effort and data storage. Sites initially updated their filtered CDM biweekly and then later monthly or on request.

The PCORnet team leading this surveillance effort also changed the modular statistical programs to allow for characterization of cohorts using results of qualitative viral testing information, available mortality information (ie, typically deaths reported to the health care system or in-hospital deaths) and records of vaccinations given in the health care system or populated in EHRs from state registry linkages, when available. The statistical programs also were updated to allow for distributed advanced analytics, including the use of multiple regression models that execute behind institutions’ firewalls and return only summary model output; these results can be combined across sites using meta-analytic techniques (19). Since October 2020, PCORnet has participated in a cooperative agreement funded by the Centers for Disease Control and Prevention (CDC) to provide COVID-19 information from up to 43 PCORnet institutions on a biweekly basis.

The geographic query module was released for use in PCORnet in July 2019. Geographic data returned from queries were well distributed but contained many sparsely populated zip codes. Zip code data typically were not available retrospectively; many sites only began capturing the geographic information prospectively at the time of its CDM release. County information was added to the CDM during the release of CDM 6.1 in April 2023.

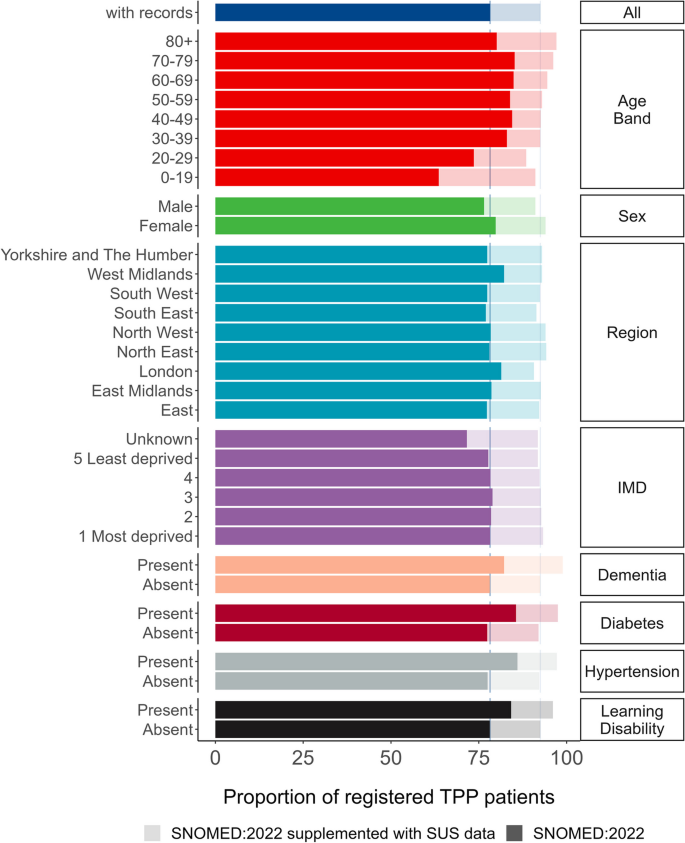

By August 2023, 62 of 63 (98%) PCORnet institutions had populated geographic information. Among sites, 59 (94%) had at least some information on 5-digit zip code, with 53 (84%) having 5-digit zip available on at least 95% of their patient population. For 9-digit zip codes, 42 (67%) sites had some information on patients, with 10 (16%) having this information populated for at least 75% of their population. The pilot projects on AF and liver cirrhosis tested the implementation of this geographic data table and use of the geographic query module.

Oral anticoagulant use

Oral anticoagulant (OAC) therapy is proven to reduce the risk of stroke and is the standard treatment for stroke risk reduction in patients with AF (20,21). Some local studies have found that about half of patients with AF at risk of stroke do not get OAC prescriptions (22–25). However, little information exists on the rate of prescriptions of OACs across US states. We used data from 4 PCORnet Clinical Research Networks (CAPriCORN, STAR, REACHnet, and ADVANCE) and investigated the OAC prescription rate in 22 states. Patients newly diagnosed with AF between January 2014 and December 2019, with a CHADSVASC score of 2 or more, no history of stroke, and known zip code were included in our analysis. The CHADSVASC score includes information on risk factors for stroke among patients with AF and is used to calculate a predicted probability of stroke; a score of 2 or more is considered high risk for stroke (26). Among 148,223 newly diagnosed AF patients eligible for an OAC, 43.3% were on any OAC, 17.8% received any warfarin, and 28.5% received any novel oral anticoagulant (NOAC) in the year following AF diagnosis. OAC prescription rates varied greatly across states, ranging from 28.4% in Virginia to 54.0% in Indiana.

OAC prescriptions continue to be low in patients with AF and vary across health systems and geographic regions. These results are consistent with findings from previous studies (22–25). Our findings provided comprehensive information on OAC use across regions but were not nationally representative. The study only examined health systems that were part of the CRNs involved in the study: 6 from CAPriCORN, 2 from REACHnet, and 1 each from STAR and ADVANCE.

Cirrhosis, irreversible damage to the liver, is a leading cause of illness and death in the US (27). Despite its importance as a major medical condition, one of the most important challenges for determining population prevalence and geographic distribution is the lack of a unified repository of patients with cirrhosis. PCORnet provided an opportunity to explore the epidemiology of cirrhosis using diagnostic codes in EHRs. In this pilot study, we included any patient aged 18 years or older with a qualifying International Classification of Diseases (ICD) code for cirrhosis (ie, ICD-9 or ICD-10) who received care at a participating center during the calendar years 2015–2018. The study included 9 health systems from 3 Clinical Research Networks, with strong overlap with the AF pilot: STAR, CAPriCORN, and REACHnet. Patient zip code was assessed as zip code of residence both within 90 days of cohort inclusion and within any prior period before inclusion.

Overall, we identified 60,268 patients with ICD codes for cirrhosis. Patients were 58% (n = 34,908) male, 57% (n = 34,458) White race, and 81% (n = 48,646) non-Hispanic ethnicity, with a mean age of 58 years. The most common etiologies for cirrhosis were hepatitis C (n = 13,882; 23%) and alcohol (n = 13,187; 22%); however, nearly half of patients (n = 29,177, 48%) did not have a clear etiology of liver disease documented in the EHR. When geographic data were restricted to a period that was within 90 days of diagnosis of cirrhosis, residential zip code was highly missing (86% missing); missingness was much lower for records of any zip code documented in the EHR before study inclusion (33% missing). This study was conducted relatively soon after the geographic information was first provided in the CDM. Because most sites populated their geographic information prospectively, missingness will improve over time, allowing for PCORnet to be effectively used for important public health surveillance of cirrhosis by geography.

COVID-19 surveillance

PCORnet was able to quickly transition to infectious disease surveillance and began reporting COVID-19 national data in April 2020. Since October 1, 2020, working with CDC, 43 PCORnet institutions have been engaged in a broad surveillance effort in which queries are conducted up to twice monthly on varied topics, with aggregate data provided to CDC in support of pandemic response. The surveillance effort has led to over 50 data queries. In a recent query, focused on the period of October 1, 2022, to December 31, 2023, 34 of the participating PCORnet institutions recorded 887,051 patients aged 20 years or older and 139,148 patients younger than 20 years who tested positive for SARS-COV-2, received a COVID-19 therapeutic, or had an ICD-10 code for COVID-19, with geographic information available by state. Among these patients, 80,712 (9%) of the patients aged 20 years or older and 8,322 (6%) of the patients younger than 20 years were cared for in the inpatient setting. We have mapped zip code data available in this population to designated geographic variables, including US Census Bureau variables for rurality and urbanicity and area deprivation index. These variables were well populated with missing data for 3%–4% of the population. Most patients lived in urban settings (88%–89%). For area deprivation index scores, 44% of patients aged 20 years or older and 51% of patients younger than 20 years were in the top 2 quartiles (ie, higher area deprivation).

This COVID-19 surveillance program has generated important information on the prevalence of post-acute sequelae of SARS-CoV-2 infection (28), disparities in uptake of COVID-19 therapeutics (18,29), cardiac complications after COVID-19 mRNA vaccines and SARS-CoV-2 infection (30), and association of uncontrolled diabetes and hypertension and severe COVID-19 (19). Information also was captured on trends in chronic and infectious disease incidence and preventive care services before and during the pandemic and the incidence of and therapeutics for mpox to support CDC’s response. The infrastructure developed for this CDC-funded project also was leveraged for other large-scale research programs, such as providing preliminary data for the National Institutes of Health RECOVER Initiative (31).

With the availability of patient-level geographic information, large populations, and comprehensive longitudinal clinical data, PCORnet and similar networks can fill in gaps for existing national surveillance infrastructure. Pilot surveillance projects provided valuable lessons for use of PCORnet infrastructure that was leveraged for the national COVID-19 public health response.

Streamlined regulatory processes are critical to accommodate efficient surveillance work. For example, we pursued individual institutional review board (IRB) approvals for each chronic disease surveillance pilot project; all IRB approvals required more than 6 months to complete. Lead sites faced difficulties in coordinating single IRBs (eg, through SMART IRB) across participating sites and ascertaining whether sites should be obtaining IRB determination for limited or nonlimited data sets. These processes were streamlined during the COVID-19 pandemic. The collaborative PCORnet CDC COVID-19 project was exempt from IRB review because it constituted public health surveillance required or authorized by a public health authority, as specified under the Common Rule. Our experience demonstrates that clear network guidance on approvals necessary for varied types of data exchange could help streamline surveillance projects. Fewer requirements should be needed for projects using only aggregate data, even with the inclusion of some geographic information (32). This guidance will be most important for surveillance projects that are not directed by a public health authority and, thus, not exempt from IRB review (33).

Implementation of the pilot projects also revealed both issues and benefits that arose from using various organizing units for geographic data. The city variable was not useful due to varied spellings. Zip code was well-populated at the 5-digit level, providing expanded geographic capabilities that go beyond other data sources, such as insurance claims. Counties can be useful in some cases, such as for states that use geographic divisions other than standard ones (eg, Utah Small Area Codes); county was added as a new geographic unit for the PCORnet CDM in 2023. Ultimately, census tracts or block groups are likely most useful because these geographic units are typically more uniform than larger zip code areas. Regulatory processes could help ease the way for networks to use this information more readily. Direct linkage to US Census Bureau and other community-level data might also preclude the need to share actual geographic identifiers.

Implications for practice

EHR-based networks have important potential for surveillance of key priority areas that align with health and public health missions. When rapidly refreshed data with short latency are required, PCORnet has shown that it can be used for COVID-19 surveillance and other infectious disease outbreaks or epidemics, with data that are available with often very short latency from the time of collection. These rapidly available data in PCORnet allowed for timely reporting of infection trends, including information on patient demographics, comorbidities, and treatments used or prescribed. Timely data can also be important for chronic disease surveillance but may not require updates as frequently as for emerging infectious diseases.

EHR data could be improved to address some of the challenges for its use in surveillance. Developing and deploying population statistical weighting schemes for data in EHRs, which have data only on patients seeking care from designated health care institutions, could help alleviate issues related to generalizability of populations (34,35). Strategic funding and financing models should be developed, and federal, state, and local support could help establish EHRs as an important sustainable mechanism for surveillance (36). The continued success of using PCORnet for large-scale surveillance also can expand its engagement of partners to ensure that data can be used most efficiently to support population health priorities (36).

Dr Block and Dr Carton were co-senior authors and contributed equally to this article. This study was funded in part by a grant from the Patient-Centered Outcomes Research Institute (PCORI 283-3709) and Cooperative Agreement number 6-NU38OT000316, funded by CDC. The authors declare no potential conflicts of interest with respect to the research, authorship, or publication of this article. No copyrighted materials, surveys, instruments, or tools were used in this research.

Corresponding Author: Jason P. Block, MD, MPH, Department of Population Medicine, Harvard Medical School, Harvard Pilgrim Health Care Institute, 401 Park Dr, Ste 401, Boston, MA 02215 ( [email protected] ).

Author Affiliations: 1 Department of Population Medicine, Harvard Medical School, Harvard Pilgrim Health Care Institute, Boston, Massachusetts. 2 National Center for Chronic Disease Prevention and Health Promotion, Centers for Disease Control and Prevention, Atlanta, Georgia. 3 Office of Public Health Data, Surveillance, and Technology, Centers for Disease Control and Prevention, Atlanta, Georgia. 4 Department of Population Health Sciences, Duke Clinical Research Institute, Duke University School of Medicine, Durham, North Carolina. 5 Department of Internal Medicine, University of Michigan Health, Ann Arbor, Michigan. 6 Coronavirus and Other Respiratory Viruses Division, Centers for Disease Control and Prevention, Atlanta, Georgia. 7 Department of Medicine, Vanderbilt University Medical Center, Nashville, Tennessee. 8 Louisiana Public Health Institute, New Orleans, Louisiana.

- Gunter TD, Terry NP. The emergence of national electronic health record architectures in the United States and Australia: models, costs, and questions. J Med Internet Res . 2005;7(1):e3. PubMed doi:10.2196/jmir.7.1.e3

- Agency for Healthcare Research and Quality. Module 17, electronic health records and meaningful use. https://www.ahrq.gov/ncepcr/tools/pf-handbook/mod17.html

- Birkhead GS, Klompas M, Shah NR. Uses of electronic health records for public health surveillance to advance public health. Annu Rev Public Health . 2015;36(1):345–359. PubMed doi:10.1146/annurev-publhealth-031914-122747

- Klompas M, Cocoros NM, Menchaca JT, Erani D, Hafer E, Herrick B, et al. . State and local chronic disease surveillance using electronic health record systems. Am J Public Health . 2017;107(9):1406–1412. PubMed doi:10.2105/AJPH.2017.303874

- Centers for Disease Control and Prevention. PLACES: local data for better health, methodology. Accessed March 28, 2024. https://www.cdc.gov/places/methodology/

- Casey JA, Schwartz BS, Stewart WF, Adler NE. Using electronic health records for population health research: a review of methods and applications. Annu Rev Public Health . 2016;37(1):61–81. PubMed doi:10.1146/annurev-publhealth-032315-021353

- McVeigh KH, Newton-Dame R, Chan PY, Thorpe LE, Schreibstein L, Tatem KS, et al. . Can electronic health records be used for population health surveillance? Validating population health metrics against established survey data. EGEMS (Wash DC) . 2016;4(1):1267. PubMed doi:10.13063/2327-9214.1267

- Eggleston EM, Weitzman ER. Innovative uses of electronic health records and social media for public health surveillance. Curr Diab Rep . 2014;14(3):468. PubMed doi:10.1007/s11892-013-0468-7

- Lenert L, Sundwall DN. Public health surveillance and meaningful use regulations: a crisis of opportunity. Am J Public Health . 2012;102(3):e1–e7. PubMed doi:10.2105/AJPH.2011.300542

- Willis SJ, Cocoros NM, Randall LM, Ochoa AM, Haney G, Hsu KK, et al. . Electronic health record use in public health infectious disease surveillance, USA, 2018–2019. Curr Infect Dis Rep . 2019;21(10):32. PubMed doi:10.1007/s11908-019-0694-5

- Elliott AF, Davidson A, Lum F, Chiang MF, Saaddine JB, Zhang X, et al. . Use of electronic health records and administrative data for public health surveillance of eye health and vision-related conditions in the United States. Am J Ophthalmol . 2012;154(6 Suppl):S63–S70. PubMed doi:10.1016/j.ajo.2011.10.002

- Birkhead GS. Successes and continued challenges of electronic health records for chronic disease surveillance. Am J Public Health . 2017;107(9):1365–1367. PubMed doi:10.2105/AJPH.2017.303938

- Huguet N, Angier H, Rdesinski R, Hoopes M, Marino M, Holderness H, et al. . Cervical and colorectal cancer screening prevalence before and after Affordable Care Act Medicaid expansion. Prev Med . 2019;124:91–97. PubMed doi:10.1016/j.ypmed.2019.05.003

- Forrest CB, McTigue KM, Hernandez AF, Cohen LW, Cruz H, Haynes K, et al. . PCORnet® 2020: current state, accomplishments, and future directions. J Clin Epidemiol . 2021;129:60–67. PubMed doi:10.1016/j.jclinepi.2020.09.036

- National Patient-Centered Clinical Research Network. PCORnet data, common data model (CDM) specification, version 6.0. Accessed March 28, 2024. https://pcornet.org/wp-content/uploads/2020/12/PCORnet-Common-Data-Model-v60-2020_10_221.pdf

- National Patient-Centered Clinical Research Network. Data. Accessed March 28, 2024. https://pcornet.org/data/

- National Patient-Centered Clinical Research Network. Front door. Accessed March 28, 2024. https://pcornet.org/front-door/

- Boehmer TK, Koumans EH, Skillen EL, Kappelman MD, Carton TW, Patel A, et al. . Racial and ethnic disparities in outpatient treatment of COVID-19 — United States, January–July 2022. MMWR Morb Mortal Wkly Rep . 2022;71(43):1359–1365. PubMed doi:10.15585/mmwr.mm7143a2

- Jackson SL, Woodruff RC, Nagavedu K, Fearrington J, Rolka DB, Twentyman E, et al. ; PCORnet Collaboration Authors. Association between hypertension and diabetes control and COVID-19 severity: National Patient-Centered Clinical Research Network, United States, March 2020 to February 2022. J Am Heart Assoc . 2023;12(21):e030240. PubMed doi:10.1161/JAHA.122.030240

- Connolly SJ, Ezekowitz MD, Yusuf S, Eikelboom J, Oldgren J, Parekh A, et al. ; RE-LY Steering Committee and Investigators. Dabigatran versus warfarin in patients with atrial fibrillation. N Engl J Med . 2009;361(12):1139–1151. PubMed doi:10.1056/NEJMoa0905561

- Patel MR, Mahaffey KW, Garg J, Pan G, Singer DE, Hacke W, et al. ; ROCKET AF Investigators. Rivaroxaban versus warfarin in nonvalvular atrial fibrillation. N Engl J Med . 2011;365(10):883–891. PubMed doi:10.1056/NEJMoa1009638

- Dentali F, Riva N, Crowther M, Turpie AG, Lip GY, Ageno W. Efficacy and safety of the novel oral anticoagulants in atrial fibrillation: a systematic review and meta-analysis of the literature. Circulation . 2012;126(20):2381–2391. PubMed doi:10.1161/CIRCULATIONAHA.112.115410

- Marzec LN, Wang J, Shah ND, Chan PS, Ting HH, Gosch KL, et al. . Influence of direct oral anticoagulants on rates of oral anticoagulation for atrial fibrillation. J Am Coll Cardiol . 2017;69(20):2475–2484. PubMed doi:10.1016/j.jacc.2017.03.540

- Essien UR, Holmes DN, Jackson LR II, Fonarow GC, Mahaffey KW, Reiffel JA, et al. . Association of race/ethnicity with oral anticoagulant use in patients with atrial fibrillation: findings from the Outcomes Registry for Better Informed Treatment of Atrial Fibrillation II. JAMA Cardiol . 2018;3(12):1174–1182. PubMed doi:10.1001/jamacardio.2018.3945

- Birman-Deych E, Radford MJ, Nilasena DS, Gage BF. Use and effectiveness of warfarin in Medicare beneficiaries with atrial fibrillation. Stroke . 2006;37(4):1070–1074. PubMed doi:10.1161/01.STR.0000208294.46968.a4

- Camm AJ, Lip GY, De Caterina R, Savelieva I, Atar D, Hohnloser SH, et al. ; ESC Committee for Practice Guidelines (CPG). 2012 Focused update of the ESC Guidelines for the Management of Atrial Fibrillation: an update of the 2010 ESC Guidelines for the Management of Atrial Fibrillation. Developed with the special contribution of the European Heart Rhythm Association. Eur Heart J . 2012;33(21):2719–2747. PubMed doi:10.1093/eurheartj/ehs253

- Centers for Disease Control and Prevention, National Center for Health Statistics. Chronic liver disease and cirrhosis. Accessed March 28, 2024. https://www.cdc.gov/nchs/fastats/liver-disease.htm

- Hernandez-Romieu AC, Carton TW, Saydah S, Azziz-Baumgartner E, Boehmer TK, Garret NY, et al. . Prevalence of select new symptoms and conditions among persons aged younger than 20 years and 20 years or older at 31 to 150 days after testing positive or negative for SARS-CoV-2. JAMA Netw Open . 2022;5(2):e2147053. PubMed doi:10.1001/jamanetworkopen.2021.47053

- Wiltz JL, Feehan AK, Molinari NM, Ladva CN, Truman BI, Hall J, et al. . Racial and ethnic disparities in receipt of medications for treatment of COVID-19 — United States, March 2020–August 2021. MMWR Morb Mortal Wkly Rep . 2022;71(3):96–102. PubMed doi:10.15585/mmwr.mm7103e1

- Block JP, Boehmer TK, Forrest CB, Carton TW, Lee GM, Ajani UA, et al. . Cardiac complications after SARS-CoV-2 infection and mRNA COVID-19 vaccination — PCORnet, United States, January 2021–January 2022. MMWR Morb Mortal Wkly Rep . 2022;71(14):517–523. PubMed doi:10.15585/mmwr.mm7114e1

- RECOVER: Researching COVID to Enhance Recovery. Building capacity for collaboration. March 28, 2024. https://recovercovid.org/infrastructure

- US Department of Health and Human Services. Standards for privacy of individually identifiable health information; final rule. Accessed March 28, 2024. https://www.hhs.gov/sites/default/files/ocr/privacy/hipaa/administrative/privacyrule/privrulepd.pdf

- US Department of Health and Human Services. Activities deemed not to be research: public health surveillance 2018 requirements. Accessed March 28, 2024. https://www.hhs.gov/ohrp/regulations-and-policy/requests-for-comments/draft-guidance-activities-deemed-not-be-research-public-health-surveillance/index.html

- Hohman KH, Martinez AK, Klompas M, Kraus EM, Li W, Carton TW, et al. Leveraging electronic health record data for timely chronic disease surveillance: the Multi-State EHR-Based Network for Disease Surveillance. J Public Health Manag Pract . 2023;29(2):162–73.

- Nasuti L, Andrews B, Li W, Wiltz J, Hohman KH, Patanian M. Using latent class analysis to inform the design of an EHR-based national chronic disease surveillance model. Chronic Illn . 2023;19(3):675–680. PubMed doi:10.1177/17423953221099043

- Patient-Centered Outcomes Research Institute. PCORnet: progress, challenges, and opportunities ahead. Accessed March 28, 2024. https://www.pcori.org/blog/pcornet-progress-challenges-and-opportunities-ahead

| Surveillance system attributes | Traditional national surveillance surveys | EHRs | ||

|---|---|---|---|---|

| Strengths | Weaknesses | Strengths | Weaknesses | |

| NA | Can take years between data collection and availability | Available soon after collected | NA | |

| In-depth availability of patient-reported data on behaviors; extensive collection of social determinants of health data | Limited sample sizes, especially for less common sociodemographic groups | Data on millions of patients provides ability to estimate disease prevalence for rare diseases, less common subgroups (Native Hawaiian/Pacific Islander, American Indian/Alaska Native), and small area geographic units and population-based cohorts | Limited availability of patient-reported data; social determinants data availability increasing but limited to insurance type and linked Census data for many EHRs | |

| Objectively measured health outcomes (vitals, laboratory values) according to study protocol | Cross-sectional or panel designs limit longitudinal follow-up | Longitudinal follow-up on patients allows tracking changes over time; data available on disease control over time | Many data are unstructured (eg, patient notes) and less available for use; structured data standardization is variable; identification of diseases often depends on use of nonspecific diagnostic codes; prescription data typically available but pharmacy dispensing may not be | |

| Nationally representative by design; typically covers entire US population with probability-based sampling strategies | Certain populations can be under-represented (eg, people without a landline telephone, the institutionalized population); characteristics of respondents may differ from nonrespondents in measured or unmeasured ways | Some research networks have data available on people in all US states and territories; patients with multiple types of insurance (commercial and government insurance) are typically available | Representative of care-seeking population, which may limit broad surveillance questions at the population level; representativeness of urban versus rural populations dependent on institutions contributing data | |

| Data collected according to study protocol; robust data completeness and curation | Telephone surveys used in some programs reliant on self-report; all surveys subject to nonresponse | Objective measures of some disease (eg, diabetes, obesity) and robust computable phenotypes of others | Missing data are common; data not collected according to a standardized protocol | |

| Infrastructure established by federal agencies to collect data; sampling and weighting strategies well validated and centrally applied by data collectors; some flexibility on adding new questions and data elements | Requires substantial resources and staff to facilitate | Data collected for routine clinical activities and only additional resources for collection required for new data elements | Data processing requires substantial resources, especially to address data quality issues that can arise; adding new data elements challenging | |

Abbreviation: NA, not applicable. a Examples: National Health and Nutrition Examination Survey (NHANES, www.cdc.gov/nchs/nhanes ), Behavioral Risk Factor Surveillance System (BRFSS, www.cdc.gov/brfss ). b Example: National Patient-Centered Clinical Research Network (PCORnet).

| Race and ethnicity | Children, adolescents, young adults (aged <20 y) | Adults (aged ≥20 y) |

|---|---|---|

| N (%) | ||

| NH American Indian/Alaska Native | 32,351 (0.4) | 100,070 (0.5) |

| NH Asian | 276,545 (3.4) | 686,624 (3.2) |

| NH Black or African American | 1,266,244 (15.7) | 2,973,069 (13.8) |

| Hispanic | 1,743,201 (21.6) | 3,246,099 (15.1) |

| NH Multiple race | 115,798 (1.4) | 54,400 (0.3) |

| NH Native Hawaiian/Other Pacific Islander | 23,348 (0.3) | 40,290 (0.2) |

| NH Other race | 233,508 (2.9) | 436,754 (2.0) |

| NH White | 3,746,223 (46.5) | 12,430,453 (57.8) |

| Missing | 768,425 (9.5) | 1,869,061 (8.7) |

Abbreviations: NH, Non-Hispanic; PCORnet, National Patient-Centered Clinical Research Network. a Includes any patients with a designated ethnicity as Hispanic, regardless of race. All racial groups had ethnicity categories of non-Hispanic or missing/other Hispanic ethnicity.

The opinions expressed by authors contributing to this journal do not necessarily reflect the opinions of the U.S. Department of Health and Human Services, the Public Health Service, the Centers for Disease Control and Prevention, or the authors’ affiliated institutions.

Exit Notification / Disclaimer Policy

- The Centers for Disease Control and Prevention (CDC) cannot attest to the accuracy of a non-federal website.

- Linking to a non-federal website does not constitute an endorsement by CDC or any of its employees of the sponsors or the information and products presented on the website.

- You will be subject to the destination website's privacy policy when you follow the link.

- CDC is not responsible for Section 508 compliance (accessibility) on other federal or private website.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Review Article

- Published: 04 July 2024

Harnessing EHR data for health research

- Alice S. Tang ORCID: orcid.org/0000-0003-4745-0714 1 ,

- Sarah R. Woldemariam 1 ,

- Silvia Miramontes 1 ,

- Beau Norgeot ORCID: orcid.org/0000-0003-2629-701X 2 ,

- Tomiko T. Oskotsky ORCID: orcid.org/0000-0001-7393-5120 1 &

- Marina Sirota ORCID: orcid.org/0000-0002-7246-6083 1 , 3

Nature Medicine ( 2024 ) Cite this article

660 Accesses

13 Altmetric

Metrics details

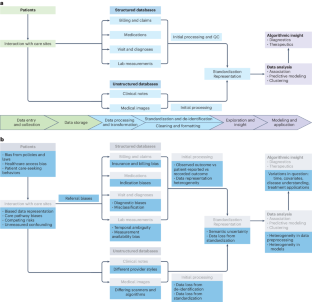

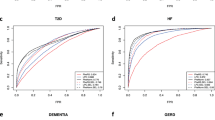

- Computational biology and bioinformatics

- Machine learning

With the increasing availability of rich, longitudinal, real-world clinical data recorded in electronic health records (EHRs) for millions of patients, there is a growing interest in leveraging these records to improve the understanding of human health and disease and translate these insights into clinical applications. However, there is also a need to consider the limitations of these data due to various biases and to understand the impact of missing information. Recognizing and addressing these limitations can inform the design and interpretation of EHR-based informatics studies that avoid confusing or incorrect conclusions, particularly when applied to population or precision medicine. Here we discuss key considerations in the design, implementation and interpretation of EHR-based informatics studies, drawing from examples in the literature across hypothesis generation, hypothesis testing and machine learning applications. We outline the growing opportunities for EHR-based informatics studies, including association studies and predictive modeling, enabled by evolving AI capabilities—while addressing limitations and potential pitfalls to avoid.

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

24,99 € / 30 days

cancel any time

Subscribe to this journal

Receive 12 print issues and online access

195,33 € per year

only 16,28 € per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

Axes of a revolution: challenges and promises of big data in healthcare

A novel method for causal structure discovery from EHR data and its application to type-2 diabetes mellitus

Quantitative disease risk scores from EHR with applications to clinical risk stratification and genetic studies

Gillum, R. F. From papyrus to the electronic tablet: a brief history of the clinical medical record with lessons for the digital age. Am. J. Med. 126 , 853–857 (2013).

Article PubMed Google Scholar

US Food and Drug Administration. Real-World Evidence. FDA https://www.fda.gov/science-research/science-and-research-special-topics/real-world-evidence/ (5 February 2023).

Office of the National Coordinator for Health Information Technology. National Trends in Hospital and Physician Adoption of Electronic Health Records. HealthIT.gov https://www.healthit.gov/data/quickstats/national-trends-hospital-and-physician-adoption-electronic-health-records/ (2021).

Liu, F. & Panagiotakos, D. Real-world data: a brief review of the methods, applications, challenges and opportunities. BMC Med. Res. Methodol. 22 , 287 (2022).

Article PubMed PubMed Central Google Scholar

Cowie, M. R. et al. Electronic health records to facilitate clinical research. Clin. Res. Cardiol. 106 , 1–9 (2017).

Kierkegaard, P. Electronic health record: wiring Europe’s healthcare. Comput. Law Secur. Rev. 27 , 503–515 (2011).

Article Google Scholar

Wen, H. -C., Chang, W. -P., Hsu, M. -H., Ho, C. -H. & Chu, C. -M. An assessment of the interoperability of electronic health record exchanges among hospitals and clinics in Taiwan. JMIR Med. Inform. 7 , e12630 (2019).

Johnson, A. E. W. et al. MIMIC-IV, a freely accessible electronic health record dataset. Sci. Data 10 , 1 (2023).

Article CAS PubMed PubMed Central Google Scholar

All of Us Research Program Investigators. The ‘All of Us’ Research Program. N. Engl. J. Med . 381 , 668–676 (2019).

Sudlow, C. et al. UK Biobank: an open access resource for identifying the causes of a wide range of complex diseases of middle and old age. PLoS Med. 12 , e1001779 (2015).

Sinha, P., Sunder, G., Bendale, P., Mantri, M. & Dande, A. Electronic Health Record: Standards, Coding Systems, Frameworks, and Infrastructures (Wiley, 2012); https://doi.org/10.1002/9781118479612

Overhage, J. M., Ryan, P. B., Reich, C. G., Hartzema, A. G. & Stang, P. E. Validation of a common data model for active safety surveillance research. J. Am. Med. Inform. Assoc. 19 , 54–60 (2012).

Murugadoss, K. et al. Building a best-in-class automated de-identification tool for electronic health records through ensemble learning. Patterns 2 , 100255 (2021).

Yogarajan, V., Pfahringer, B. & Mayo, M. A review of automatic end-to-end de-identification: is high accuracy the only metric? Appl. Artif. Intell. 34 , 251–269 (2020).

Mandl, K. D. & Perakslis, E. D. HIPAA and the leak of ‘deidentified’ EHR data. N. Engl. J. Med. 384 , 2171–2173 (2021).

Norgeot, B. et al. Protected Health Information filter (Philter): accurately and securely de-identifying free-text clinical notes. NPJ Digit. Med. 3 , 57 (2020).

Steurer, M. A. et al. Cohort study of respiratory hospital admissions, air quality and sociodemographic factors in preterm infants born in California. Paediatr. Perinat. Epidemiol. 34 , 130–138 (2020).

Costello, J. M., Steurer, M. A., Baer, R. J., Witte, J. S. & Jelliffe‐Pawlowski, L. L. Residential particulate matter, proximity to major roads, traffic density and traffic volume as risk factors for preterm birth in California. Paediatr. Perinat. Epidemiol. 36 , 70–79 (2022).

Yan, C. et al. Differences in health professionals’ engagement with electronic health records based on inpatient race and ethnicity. JAMA Netw. Open 6 , e2336383 (2023).

Lotfata, A., Moosazadeh, M., Helbich, M. & Hoseini, B. Socioeconomic and environmental determinants of asthma prevalence: a cross-sectional study at the U.S. county level using geographically weighted random forests. Int. J. Health Geogr. 22 , 18 (2023).

Li, L. et al. Identification of type 2 diabetes subgroups through topological analysis of patient similarity. Sci. Transl. Med. 7 , 311ra174 (2015).

De Freitas, J. K. et al. Phe2vec: automated disease phenotyping based on unsupervised embeddings from electronic health records. Patterns 2 , 100337 (2021).

Tang, A. S. et al. Deep phenotyping of Alzheimer’s disease leveraging electronic medical records identifies sex-specific clinical associations. Nat. Commun. 13 , 675 (2022).

Su, C. et al. Clinical subphenotypes in COVID-19: derivation, validation, prediction, temporal patterns, and interaction with social determinants of health. NPJ Digit. Med. 4 , 110 (2021).

Glicksberg, B. S. et al. PatientExploreR: an extensible application for dynamic visualization of patient clinical history from electronic health records in the OMOP common data model. Bioinformatics 35 , 4515–4518 (2019).

Huang, Z., Dong, W., Bath, P., Ji, L. & Duan, H. On mining latent treatment patterns from electronic medical records. Data Min. Knowl. Discov. 29 , 914–949 (2015).

Zaballa, O., Pérez, A., Gómez Inhiesto, E., Acaiturri Ayesta, T. & Lozano, J. A. Identifying common treatments from electronic health records with missing information. An application to breast cancer. PLoS ONE 15 , e0244004 (2020).

Lou, S. S., Liu, H., Harford, D., Lu, C. & Kannampallil, T. Characterizing the macrostructure of electronic health record work using raw audit logs: an unsupervised action embeddings approach. J. Am. Med. Inform. Assoc. 30 , 539–544 (2023).

Glicksberg, B. S. et al. Comparative analyses of population-scale phenomic data in electronic medical records reveal race-specific disease networks. Bioinformatics 32 , i101–i110 (2016).

Obermeyer, Z., Powers, B., Vogeli, C. & Mullainathan, S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 366 , 447–453 (2019).

Article CAS PubMed Google Scholar

Smith, M. A. et al. Insights into measuring health disparities using electronic health records from a statewide network of health systems: a case study. J. Clin. Transl. Sci. 7 , e54 (2023).

Swerdel, J. N., Hripcsak, G. & Ryan, P. B. PheValuator: development and evaluation of a phenotype algorithm evaluator. J. Biomed. Inform. 97 , 103258 (2019).

Denny, J. C. et al. PheWAS: demonstrating the feasibility of a phenome-wide scan to discover gene-disease associations. Bioinformatics 26 , 1205–1210 (2010).

Chen, C., Ding, S. & Wang, J. Digital health for aging populations. Nat. Med. 29 , 1623–1630 (2023).

Woldemariam, S. R., Tang, A. S., Oskotsky, T. T., Yaffe, K. & Sirota, M. Similarities and differences in Alzheimer’s dementia comorbidities in racialized populations identified from electronic medical records. Commun. Med. 3 , 50 (2023).

Austin, P. C. An introduction to propensity score methods for reducing the effects of confounding in observational studies. Multivariate Behav. Res. 46 , 399–424 (2011).

Karlin, L. et al. Use of the propensity score matching method to reduce recruitment bias in observational studies: application to the estimation of survival benefit of non-myeloablative allogeneic transplantation in patients with multiple myeloma relapsing after a first autologous transplantation. Blood 112 , 1133 (2008).

Ho, D., Imai, K., King, G. & Stuart, E. A. MatchIt: nonparametric preprocessing for parametric causal inference. J. Stat. Softw. 42 , 8 (2011).

Zhang, Z., Kim, H. J., Lonjon, G. & Zhu, Y. Balance diagnostics after propensity score matching. Ann. Transl. Med. 7 , 16 (2019).

Landi, I. et al. Deep representation learning of electronic health records to unlock patient stratification at scale. NPJ Digit. Med. 3 , 96 (2020).

Bai, W. et al. A population-based phenome-wide association study of cardiac and aortic structure and function. Nat. Med . https://doi.org/10.1038/s41591-020-1009-y (2020).

Engels, E. A. et al. Comprehensive evaluation of medical conditions associated with risk of non-Hodgkin lymphoma using medicare claims (‘MedWAS’). Cancer Epidemiol. Biomark. Prev. 25 , 1105–1113 (2016).

Article CAS Google Scholar

Bastarache, L., Denny, J. C. & Roden, D. M. Phenome-wide association studies. J. Am. Med. Assoc. 327 , 75–76 (2022).

Yazdany, J. et al. Rheumatology informatics system for effectiveness: a national informatics‐enabled registry for quality improvement. Arthritis Care Res. 68 , 1866–1873 (2016).

Nelson, C. A., Bove, R., Butte, A. J. & Baranzini, S. E. Embedding electronic health records onto a knowledge network recognizes prodromal features of multiple sclerosis and predicts diagnosis. J. Am. Med. Inform. Assoc. 29 , 424–434 (2022).

Tang, A. S. et al. Leveraging electronic health records and knowledge networks for Alzheimer’s disease prediction and sex-specific biological insights. Nat. Aging 4 , 379–395 (2024).

Mullainathan, S. & Obermeyer, Z. Diagnosing physician error: a machine learning approach to low-value health care. Q. J. Econ. 137 , 679–727 (2022).

Makin, T. R. & Orban De Xivry, J. -J. Ten common statistical mistakes to watch out for when writing or reviewing a manuscript. eLife 8 , e48175 (2019).

Carrigan, G. et al. External comparator groups derived from real-world data used in support of regulatory decision making: use cases and challenges. Curr. Epidemiol. Rep. 9 , 326–337 (2022).

Hersh, W. R. et al. Caveats for the use of operational electronic health record data in comparative effectiveness research. Med. Care 51 , S30–S37 (2013).

Rudrapatna, V. A. & Butte, A. J. Opportunities and challenges in using real-world data for health care. J. Clin. Invest. 130 , 565–574 (2020).

Belthangady, C. et al. Causal deep learning reveals the comparative effectiveness of antihyperglycemic treatments in poorly controlled diabetes. Nat. Commun. 13 , 6921 (2022).

Roger, J. et al. Leveraging electronic health records to identify risk factors for recurrent pregnancy loss across two medical centers: a case–control study. Preprint at Res. Sq. https://doi.org/10.21203/rs.3.rs-2631220/v2 (2023).

Gervasi, S. S. et al. The potential for bias in machine learning and opportunities for health insurers to address it: article examines the potential for bias in machine learning and opportunities for health insurers to address it. Health Aff. 41 , 212–218 (2022).

Sai, S. et al. Generative AI for transformative healthcare: a comprehensive study of emerging models, applications, case studies, and limitations. IEEE Access 12 , 31078–31106 (2024).

Wang, M. et al. A systematic review of automatic text summarization for biomedical literature and EHRs. J. Am. Med. Inform. Assoc. 28 , 2287–2297 (2021).

Katsoulakis, E. et al. Digital twins for health: a scoping review. NPJ Digit. Med. 7 , 77 (2024).

Thirunavukarasu, A. J. et al. Large language models in medicine. Nat. Med. 29 , 1930–1940 (2023).

Meskó, B. & Topol, E. J. The imperative for regulatory oversight of large language models (or generative AI) in healthcare. NPJ Digit. Med. 6 , 120 (2023).

Hastings, J. Preventing harm from non-conscious bias in medical generative AI. Lancet Digit. Health 6 , e2–e3 (2024).

Lett, E., Asabor, E., Beltrán, S., Cannon, A. M. & Arah, O. A. Conceptualizing, contextualizing, and operationalizing race in quantitative health sciences research. Ann. Fam. Med. 20 , 157–163 (2022).

Belonwu, S. A. et al. Sex-stratified single-cell RNA-seq analysis identifies sex-specific and cell type-specific transcriptional responses in Alzheimer’s disease across two brain regions. Mol. Neurobiol. https://doi.org/10.1007/s12035-021-02591-8 (2021).

Krumholz, A. Driving and epilepsy: a review and reappraisal. J. Am. Med. Assoc. 265 , 622–626 (1991).

Xu, J. et al. Data-driven discovery of probable Alzheimer’s disease and related dementia subphenotypes using electronic health records. Learn. Health Syst. 4 , e10246 (2020).

Vyas, D. A., Eisenstein, L. G. & Jones, D. S. Hidden in plain sight—reconsidering the use of race correction in clinical algorithms. N. Engl. J. Med. 383 , 874–882 (2020).

Dagdelen, J. et al. Structured information extraction from scientific text with large language models. Nat. Commun. 15 , 1418 (2024).

Hu, Y. et al. Improving large language models for clinical named entity recognition via prompt engineering. J. Am. Med. Inform. Assoc. 27 , ocad259 (2024).

Microsoft. microsoft/FHIR-Converter (2024).

Torfi, A., Fox, E. A. & Reddy, C. K. Differentially private synthetic medical data generation using convolutional GANs. Inf. Sci. 586 , 485–500 (2022).

Yoon, J., Jordon, J. & van der Schaar, M. GAIN: missing data imputation using generative adversarial nets. Preprint at https://arxiv.org/abs/1806.02920v1 (2018).

Shi, J., Wang, D., Tesei, G. & Norgeot, B. Generating high-fidelity privacy-conscious synthetic patient data for causal effect estimation with multiple treatments. Front. Artif. Intell. 5 , 918813 (2022).

Stuart, E. A. Matching methods for causal inference: a review and a look forward. Stat. Sci. 25 , 1–21 (2010).

Murali, L., Gopakumar, G., Viswanathan, D. M. & Nedungadi, P. Towards electronic health record-based medical knowledge graph construction, completion, and applications: a literature study. J. Biomed. Inform. 143 , 104403 (2023).

Li, Y. et al. BEHRT: transformer for electronic health records. Sci. Rep. 10 , 7155 (2020).

Guo, L. L. et al. EHR foundation models improve robustness in the presence of temporal distribution shift. Sci. Rep. 13 , 3767 (2023).

Zhu, R. et al. Clinical pharmacology applications of real‐world data and real‐world evidence in drug development and approval—an industry perspective. Clin. Pharmacol. Ther. 114 , 751–767 (2023).

Voss, E. A. et al. Accuracy of an automated knowledge base for identifying drug adverse reactions. J. Biomed. Inform. 66 , 72–81 (2017).

Taubes, A. et al. Experimental and real-world evidence supporting the computational repurposing of bumetanide for APOE4-related Alzheimer’s disease. Nat. Aging 1 , 932–947 (2021).

Gold, R. et al. Using electronic health record-based clinical decision support to provide social risk-informed care in community health centers: protocol for the design and assessment of a clinical decision support tool. JMIR Res. Protoc. 10 , e31733 (2021).

Varga, A. N. et al. Dealing with confounding in observational studies: a scoping review of methods evaluated in simulation studies with single‐point exposure. Stat. Med. 42 , 487–516 (2023).

Carrigan, G. et al. Using electronic health records to derive control arms for early phase single‐arm lung cancer trials: proof‐of‐concept in randomized controlled trials. Clin. Pharmacol. Ther. 107 , 369–377 (2020).

Infante-Rivard, C. & Cusson, A. Reflection on modern methods: selection bias—a review of recent developments. Int. J. Epidemiol. 47 , 1714–1722 (2018).

Degtiar, I. & Rose, S. A review of generalizability and transportability. Annu. Rev. Stat. Appl. 10 , 501–524 (2023).

Badhwar, A. et al. A multiomics approach to heterogeneity in Alzheimer’s disease: focused review and roadmap. Brain 143 , 1315–1331 (2020).

Stuart, E. A. & Rubin, D. B. Matching with multiple control groups with adjustment for group differences. J. Educ. Behav. Stat. 33 , 279–306 (2008).

Hernan, M. A. & Robins, J. M. Causal Inference: What If (Taylor and Francis, 2024).

Hernan, M. A. Causal knowledge as a prerequisite for confounding evaluation: an application to birth defects epidemiology. Am. J. Epidemiol. 155 , 176–184 (2002).

Dang, L. E. et al. A causal roadmap for generating high-quality real-world evidence. J. Clin. Transl. Sci. 7 , e212 (2023).

Hernán, M. A. & Robins, J. M. Using big data to emulate a target trial when a randomized trial is not available. Am. J. Epidemiol. 183 , 758–764 (2016).

Oskotsky, T. et al. Mortality risk among patients with COVID-19 prescribed selective serotonin reuptake inhibitor antidepressants. JAMA Netw. Open 4 , e2133090 (2021).

Sperry, M. M. et al. Target-agnostic drug prediction integrated with medical record analysis uncovers differential associations of statins with increased survival in COVID-19 patients. PLoS Comput. Biol. 19 , e1011050 (2023).

Amit, G. et al. Antidepressant use during pregnancy and the risk of preterm birth – a cohort study. NPJ Womens Health 2 , 5 (2024); https://doi.org/10.1038/s44294-024-00008-0

Download references

Author information

Authors and affiliations.

Bakar Computational Health Sciences Institute, University of California, San Francisco, San Francisco, CA, USA

Alice S. Tang, Sarah R. Woldemariam, Silvia Miramontes, Tomiko T. Oskotsky & Marina Sirota

Qualified Health, Palo Alto, CA, USA

Beau Norgeot

Department of Pediatrics, University of California, San Francisco, San Francisco, CA, USA

Marina Sirota

You can also search for this author in PubMed Google Scholar

Corresponding author

Correspondence to Marina Sirota .

Ethics declarations

Competing interests.

B.N. is an employee at Qualified Health. The other authors declare no competing interests.

Peer review

Peer review information.

Nature Medicine thanks Wenbo Wu and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Primary Handling Editor: Karen O’Leary, in collaboration with the Nature Medicine team.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

Reprints and permissions

About this article

Cite this article.

Tang, A.S., Woldemariam, S.R., Miramontes, S. et al. Harnessing EHR data for health research. Nat Med (2024). https://doi.org/10.1038/s41591-024-03074-8

Download citation

Received : 03 January 2024

Accepted : 17 May 2024

Published : 04 July 2024

DOI : https://doi.org/10.1038/s41591-024-03074-8

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing newsletter — what matters in science, free to your inbox daily.

- SUGGESTED TOPICS

- The Magazine

- Newsletters

- Managing Yourself

- Managing Teams

- Work-life Balance

- The Big Idea

- Data & Visuals

- Reading Lists

- Case Selections

- HBR Learning

- Topic Feeds

- Account Settings

- Email Preferences

It’s Time for a New Kind of Electronic Health Record

- John Glaser

We need to shift from reactive to preventative care.

Well before the Covid-19 pandemic struck, electronic health records were the bane of physicians’ existences. In all too many cases, EHRs seemed to create a huge amount of extra work and generate too few benefits. The pandemic has made the deficiencies of these systems glaringly apparent. This article discusses how EHRs should be transformed so they become an indispensable tool in keeping individual patients and patient populations healthy.

In these difficult times, we’ve made a number of our coronavirus articles free for all readers. To get all of HBR’s content delivered to your inbox, sign up for the Daily Alert newsletter.

The Covid-19 pandemic presents the U.S. health care system with a mind-boggling array of challenges. One of the most urgent is coping with a simultaneous glut and dearth of information . Between tracking outbreaks, staying abreast of the latest information on effective treatments and vaccine development, keeping tabs on how each patient is doing, and recognizing and documenting a seemingly endless stream of weird new symptoms, the entire medical community is being chronically overwhelmed.

- John Glaser is an executive in residence at Harvard Medical School. He previously served as the CIO of Partners Healthcare (now Mass General Brigham), a senior vice president at Cerner, and the CEO of Siemens Health Services. He is co-chair of the HL7 Advisory Council and a board member of the National Committee for Quality Assurance.

Partner Center

Certification of Health IT

Health information technology advisory committee (hitac), health equity, hti-1 final rule, hti-2 proposed rule, information blocking, interoperability, patient access to health records, clinical quality and safety, health it and health information exchange basics, health it in health care settings, health it resources, laws, regulation, and policy, onc funding opportunities, onc hitech programs, privacy, security, and hipaa, scientific initiatives, standards & technology, usability and provider burden.

Specialists Achieve Meaningful Use with Support from Kentucky’s Regional Extension Center and the Department for Medicaid Services

Vendors and communities working together: a catalyst for interoperability and exchange, successful electronic information exchange through direct pilot implementation with cerner and the lewis and clark information exchange (lacie), medallies and the direct project support secure exchange of clinical information in ehr systems, care coordination improved through health information exchange, viewing patients as partners: patient portal implementation and adoption, urban health plan in new york uses its ehr meaningfully to improve care coordination, solo family practitioner demonstrates care coordination with referring physicians, small practice monitors clinical quality through ehr system templates, rural health clinic exchanges information with hospitals and physicians for improved coordination of care, reducing vaccine preventable disease through immunization registries, quality improvement in a primary care practice.

Open Survey

EDITORIAL article

Editorial: ethical considerations in electronic data in healthcare.

- 1 Department of Computer Engineering, Faculty of Engineering, The Hashemite University, Zarqa, Jordan

- 2 College of Technological Innovation, Zayed University, Abu Dhabi, United Arab Emirates

Editorial on the Research Topic Ethical considerations in electronic data in healthcare

1 Introduction

Electronic data has revolutionized the healthcare sector in the digital age, promising enhanced patient care, streamlined operations, and groundbreaking medical research. However, this transformation has complex ethical challenges that need careful consideration. The surge in electronic health records (EHRs), big data analytics, and telemedicine raises significant questions about privacy, consent, data ownership, and equity. Integrating these technologies into our healthcare systems is crucial to navigating these ethical dilemmas thoughtfully.

This editorial explores the ethical considerations surrounding electronic data in healthcare, drawing insights from a series of articles that explore various facets of this multifaceted issue. These contributions collectively provide a comprehensive view of the challenges and propose pathways for ethically sound practices in managing electronic healthcare data.

2 Privacy and confidentiality: safeguarding patient information

One of the foremost ethical concerns is the protection of patient privacy in an era where data breaches and cyber-attacks are increasingly common. Carmichael et al. 's article, “ Personal Data Store Ecosystems in Health and Social Care ,” underscores the need for robust security measures to prevent unauthorized access to sensitive patient information. She highlights the tension between the accessibility of data for medical purposes and the imperative to protect patient confidentiality.

3 Informed consent: respecting patient autonomy

Informed consent is a cornerstone of ethical healthcare practices, but its application becomes complex with electronic data. Benevento et al. explore this Research Topic in their article, “ Measuring the willingness to share personal Health information: a systematic review .”

4 Data ownership and control: who owns the data?

The question of data ownership is another critical ethical issue. In the article, “ Brave (in a) New World: An Ethical Perspective on Chatbots for Medical Advice ,” Erren et al. examine the legal and ethical implications of data ownership in the healthcare sector. They discuss the competing interests of patients, healthcare providers, and third-party companies, and advocate for policies that prioritize patient rights.

5 Equity and access: bridging the digital divide

The digital divide presents a significant barrier to equitable healthcare. Adepoju et al. address this in their piece, “ Access to Technology, Internet Usage, and Online Health information-seeking behaviors in a racially diverse, lower-income population .” They highlight how disparities in digital access can exacerbate existing health inequalities, with marginalized communities often being the most disadvantaged. The authors advocate for policies and initiatives that promote digital literacy and provide equitable access to technology, ensuring that the benefits of electronic data in healthcare are shared broadly across all segments of society.

6 Ethical use of big data: balancing innovation and privacy

The utilization of big data in healthcare offers immense innovation potential, but it also poses significant ethical challenges. Pu et al. 's article, “ A Medical Big Data Access Control Model Based on Smart Contracts and Risk in the Blockchain Environment ,” investigates the ethical considerations of using large datasets for medical research and decision-making. He discusses the balance between the benefits of big data, such as improved patient outcomes and medical advancements, and the risks, including privacy violations and data misuse. Pu et al. emphasizes the need for ethical frameworks that guide the responsible use of big data while fostering innovation.

7 Conclusion

As we navigate the digital transformation of healthcare, it is imperative to address the ethical challenges associated with electronic data. Protecting patient privacy, ensuring informed consent, safeguarding against digital threats, promoting equity and access, and maintaining transparency and accountability are all critical components of ethical practice in this new landscape. The insights from the articles in this series highlight the complexities and propose thoughtful approaches to managing these Research Topic. The ethical considerations in healthcare data demand our attention and action. Together, these articles offer a roadmap for healthcare providers, policymakers, and technology developers to build a more ethical and inclusive healthcare system, where the promise of electronic data can be fully realized without compromising ethical standards.

8 Summary of contributing articles

1. “ Barriers and facilitators related to healthcare practitioner use of real-time prescription monitoring tools in Australia ” by Hoppe et al. :

- Using an online survey, investigate the barriers and facilitators related to healthcare practitioners' use of real-time prescription monitoring (RTPM) tools in Australia.

- Further research is needed to gain an understanding of healthcare practitioners' use of RTPM tools and how to minimize barriers and optimize use for the essential delivery of quality healthcare.

2. “ Measuring the willingness to share personal health information: a systematic review ” by Benevento et al. :

- Analyze the determinants and describe the measurement of the willingness to disclose personal health information.

- Systematic review of articles assessing willingness to share personal health information as a primary or secondary outcome.

3. “ Brave (in a) new world: an ethical perspective on chatbots for medical advice ” by Erren et al. :

- Emphasizes the significant ethical challenges associated with the use of AI chatbots in medical contexts, such as privacy and confidentiality.

- Discusses the necessity of regulating AI, particularly in the medical field, to avoid potential harms, and raises critical questions about who controls AI, how personal data is protected, and who is liable for the advice provided by AI.

4. “ Access to technology, internet usage, and online health information-seeking behaviors in a racially diverse, lower-income population ” by Adepoju et al. :

- Examines access to technology, internet usage, and online health information-seeking behaviors, in a racially diverse, lower-income population using a survey.

- Identifies the gap between technology adoption and effective use for health purposes, highlighting a critical area for improving public health efforts to leverage digital resources.

- Revealed that higher income, higher education levels, and female gender were significantly associated with increased online health information-seeking behaviors.

5. “ Personal data store ecosystems in health and social care ” by Carmichael et al. :

- Highlights the potential of personal data storage to transform health and social care through enhanced individual data control and usage.

- Points out the significant challenges that need to be addressed for their successful adoption, such as Technical and Operational Hurdles, User Engagement, and Data Governance.

6. “ A Medical Big Data Access Control Model based on Smart Contracts and Risk in the Blockchain Environment ” by Pu et al. :

- Proposes a smart contract and risk-based access control model (SCR-BAC) integrated with traditional risk-based access control and deploys risk-based access control policies in the form of smart contracts into the blockchain, thereby ensuring the protection of medical data.

- Demonstrates that the access control model effectively curbs the access behavior of malicious doctors to a certain extent and imposes a limiting effect on the internal abuse and privacy leakage of medical big data.

7. “ Large language models in physical therapy: time to adapt and adept ” by Naqvi et al. :

- Examines how large language models (LLMs) driven by deep ML can offer human-like performance but face challenges in accuracy due to vast data in Physical Therapy (PT) and rehabilitation practice.

- Urges PTs to engage in learning and shaping AI models by highlighting the need for ethical use and human supervision to address potential biases.

Through a comprehensive understanding and proactive management of these ethical issues, we can ensure that the digital revolution in healthcare is both transformative and just, benefiting all patients and society.

Author contributions

DM: Conceptualization, Investigation, Methodology, Project administration, Resources, Supervision, Writing – original draft, Writing – review & editing. MA-K: Conceptualization, Investigation, Methodology, Validation, Writing – review & editing.

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note