Better, Virtually: the Past, Present, and Future of Virtual Reality Cognitive Behavior Therapy

- Open access

- Published: 20 October 2020

- Volume 14, pages 23–46 (2021)

- Philip Lindner ORCID: orcid.org/0000-0002-3061-501X 1

Virtual reality (VR) is an immersive technology capable of creating a powerful, perceptual illusion of being present in a virtual environment. VR technology has been used in cognitive behavior therapy since the 1990s and accumulated an impressive evidence base, yet with the recent release of consumer VR platforms came a true paradigm shift in the capabilities and scalability of VR for mental health. This narrative review summarizes the past, present, and future of the field, including milestone studies and discussions on the clinical potential of alternative embodiment, gamification, avatar therapists, virtual gatherings, immersive storytelling, and more. Although the future is hard to predict, clinical VR has and will continue to be inherently intertwined with what are now rapid developments in technology, presenting both challenges and exciting opportunities to do what is not possible in the real world.

Introduction

Since its merging into a coherent therapeutic tradition in the 1980s, cognitive behavior therapy (CBT) has proven a remarkable success in treating a wide range of mental disorders, psychosomatic conditions, and many non-medical issues for which sufferers need help. A distinguishing feature of CBT, common to and prominent in both its behavioral and cognitive roots, is the emphasis on carrying out exercises designed to change behavior and/or cognitions related to some problem area (Mennin et al. 2013). This is often explicitly framed as a multi-stage process, including first providing a psychotherapeutic rationale for the exercise, and then detailed and concrete planning, controlled execution, reporting of specific outcomes, drawing lessons learned, and progressing to the next exercise. Since exercises are so central in CBT, congruent with its historical emphasis on specific as opposed to common factors (Buchholz and Abramowitz 2020), this therapeutic tradition is inherently well suited for technology-mediated delivery formats that do not rely on there being a traditional client-therapist relationship. The rapid, paradigm-shifting, and often unpredictable development and dissemination in the last decades of different consumer information technologies (everything from personal computers to portable media players, smartphones, and wearables) have allowed researchers and clinicians to explore new avenues for treatment design and delivery. A prominent success story of the merger of technology and psychotherapy is Internet-administered CBT (iCBT), which enjoys a robust evidence base with demonstrated efficacy equivalent to face-to-face treatments for mental disorders and psychosomatic conditions (Carlbring et al. 2018), and is now implemented in routine care in many countries (Titov et al. 2018).
iCBT, as it is typically packaged, is in essence a digital form of bibliotherapy: a virtual self-help book where modules replace chapters, delivered not on paper but via an online platform, with or without support from an online therapist (often through asynchronous messaging). These modules convey in writing what would otherwise be conveyed orally in the face-to-face format, covering both psychoeducation and exercises. The bibliotherapeutic roots of iCBT are apparent in that many trials adapted existing self-help books (Andersson et al. 2016 ) or have afterwards been published as such (Carlbring et al. 2001 ).

iCBT was without doubt revolutionary when it first appeared, challenging entrenched preconceptions of what psychotherapy is, offering unlimited dissemination of evidence-based treatment, and raising the scientific standard of psychotherapy research to that of the medical field (Andersson 2016). The novelty of iCBT, however, lay in the format of delivery, not the therapeutic content. With immersive technology like virtual reality (VR), it is possible to revolutionize not only how treatment is delivered but also how change-promoting experiences are designed and evaluated, by making the unrealistic a reality. This narrative review will introduce readers to VR technology and how it can be put to clinical use, and discuss the past, present, and future of VR-CBT for mental disorders. The aim is not to provide a systematic review (Freeman et al. 2017) or a meta-analysis of the field (Botella et al. 2017; Carl et al. 2018; Wechsler et al. 2019) but rather to give a historical overview and context, showing how developments in technology have fueled clinical progress. Perspectives on the future of the field will also be provided. The focus will be on VR-CBT treatments for anxiety disorders since this application dominates the extant literature, although other clinical applications will also be discussed. In addition to being a treatment tool, VR is also seeing increasing use as an experimental platform for studying psychopathology (Juvrud et al. 2018) and treatment mechanisms (Scheveneels et al. 2019); coverage of this exciting application is, however, beyond the scope of this review.

What Is Virtual Reality?

In essence, VR refers to any technology that creates a simulated experience of being present in a virtual environment that replaces the physical world (Riva et al. 2016). This sense of (virtual) presence is a key concept in VR and is what distinguishes this technology from others along the so-called mixed reality spectrum: someone playing a traditional video game, for example, or even reading a captivating book, may very well become immersed in it but is unlikely to feel physically transported to the locale depicted on their computer screen. Experiencing presence in VR is a powerful perceptual illusion, yet an illusion nonetheless: the environment may indeed prompt overt cognitions like “I know this is not real” (as individuals undergoing VR exposure therapy often think and say aloud as a safety behavior), yet this is done after the same user has already acted congruently with the environment, thereby demonstrating that it is nonetheless perceived as real (Slater 2018).

To create this perceptual illusion, special hardware is required. As will be discussed in the coming sections, until only a few years ago, such hardware was inaccessible, expensive, and required trained professionals to both develop software for and use. While it is possible to transform a (restricted) physical environment into a virtual one by projecting interactive images onto the walls, a so-called CAVE setup (Bouchard et al. 2013), developments in head-mounted display (HMD) technology have made the HMD approach the dominant one, especially with the release of consumer VR platforms that are all of the HMD kind. Modern VR HMDs come in two versions: mobile devices, either freestanding or smartphone-based, and tethered devices. Mobile devices offer simplicity of use and do not physically constrain the user, yet are computationally limited and must also be recharged. Tethered devices require a high-end computer or gaming console to run, connected via cable. It should be noted that wireless tethering is being developed and will likely be released in the years to come and that VR devices like the Oculus Quest can now run in both mobile and tethered modes, offering more computational power in the latter.

Since VR HMDs first appeared in the 1960s, these have relied on the same core principles to create a sense of presence in a virtual world: by including two 2D displays (one covering each eye, thereby also withholding the real world) showing views with offset angles corresponding to an average (or custom) interocular distance, binocular depth perception can be simulated, termed stereoscopy. In addition, the HMD continuously measures head rotation in all directions (pitch, yaw, and roll, so-called three degrees of freedom or 3DOF) using gyroscopes and adapts the visual presentation accordingly, giving the user the perceptual illusion of being able to look around the virtual environment (Scarfe and Glennerster 2019 ). Immersion and presence are both mediated by interactivity with the virtual world, and since vision is the dominant sense in humans (Posner et al. 1976 ), stereoscopy coupled with 3DOF is a simple but powerful setup to create VR. Modern VR platforms typically also include adaptive stereo sound, as well as wireless handheld controllers that can be used both for mouse-type pointing and to control virtual hands. In addition, there are now both tethered and mobile VR platforms that have 6DOF functionality: alongside rotation tracking, these devices also continuously measure movement in X, Y, and Z (positional tracking) using either cameras placed in the physical room (outside-in tracking) or integrated into the HMD (inside-out tracking) and then use this data to update the visual presentation, giving the user the ability to also physically walk around the virtual environment.
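
The principles described above (two offset eye views for stereoscopy, rotation-only tracking for 3DOF, and added positional tracking for 6DOF) can be illustrated with a minimal sketch. It is not taken from any VR platform: the `HeadPose` class, the function names, the coordinate convention (y up, user looking along negative z at zero yaw), and the 64 mm default interocular distance are all assumptions made for illustration, and only yaw is modeled (pitch and roll are omitted for brevity).

```python
import math
from dataclasses import dataclass


@dataclass
class HeadPose:
    """Head pose as it might be reported by a hypothetical tracker."""
    x: float = 0.0    # metres, to the user's right at yaw 0
    y: float = 1.7    # metres, up
    z: float = 0.0    # metres; the user looks along -z at yaw 0
    yaw: float = 0.0  # radians about the vertical axis (positive = turn left)


def eye_positions(pose, ipd=0.064):
    """Return (left_eye, right_eye) world positions for stereoscopic rendering.

    Each eye sits half an interocular distance from the head centre,
    along the head's local "right" axis.
    """
    right_axis = (math.cos(pose.yaw), 0.0, -math.sin(pose.yaw))
    half = ipd / 2.0
    centre = (pose.x, pose.y, pose.z)
    left_eye = tuple(c - half * r for c, r in zip(centre, right_axis))
    right_eye = tuple(c + half * r for c, r in zip(centre, right_axis))
    return left_eye, right_eye


def update_pose(pose, d_yaw=0.0, d_pos=(0.0, 0.0, 0.0), six_dof=False):
    """Apply one tracking update; a 3DOF device ignores positional change."""
    dx, dy, dz = d_pos if six_dof else (0.0, 0.0, 0.0)
    return HeadPose(pose.x + dx, pose.y + dy, pose.z + dz, pose.yaw + d_yaw)
```

In this sketch, a renderer would draw the scene once from each returned eye position per frame. With `six_dof=False`, physically stepping sideways leaves the virtual viewpoint where it was, which corresponds to the 3DOF limitation described in the text.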

Not unexpectedly, the history of clinical VR is intertwined with the history of VR technology. The sections that follow provide a brief historical overview of these parallel tracks of development, divided into the past (roughly 1968–2013), present (2014–2020), and future.

The Past

Recognizable VR technology has existed since the 1960s, emerging from and taking root in the burgeoning computer science scene (Sutherland 1968) and aerospace industry (Furness 1986), developing alongside advances in computational power and display technology. By the 1990s, a series of failed attempts to mass-commercialize what was still unripe VR technology into gaming products struck a hard blow to the VR field, pushing it back to the peripheries and the niche applications found there (e.g., flight simulation) for which this technology had always been valuable. It would take almost 20 years before anyone made a serious attempt at consumer VR again (see below), yet by the mid-1990s, the inherent capabilities of VR had become apparent to clinical research psychologists around the world. Remarkably, the capabilities and advantages of VR in treating anxiety disorders that were raised already in 1996 (Glantz et al. 1996) are still echoed today (Lindner et al. 2017). Since the virtual environment is built from scratch, it can be made fully controllable, flexible, adaptive, and interactive in ways not practically feasible or even possible in the real world. In treating spider phobia, for example, the therapist or patient could conveniently choose the specific type of spider to be used in exposure, linearly increase how frightening the virtual spider looks, and make its behavior adaptive to how the user behaves. Further, VR exposure will always be safer than equivalent in vivo exposure and additionally solves practical issues in providing exposure therapy: there is no need to leave the therapy room, either to perform exposure exercises or to prepare stimulus material in advance.

While the mid-to-late 1990s was not an exciting period in terms of VR technology development, the period marks the beginning of using VR for mental health purposes. Early published case reports and trials from well-funded laboratories revealed the feasibility and promise of VR exposure therapy for acrophobia (Rothbaum et al. 1995a , 1995b ), aviophobia (Rothbaum et al. 1996 ), claustrophobia (Botella et al. 1998 ), spider phobia (Carlin et al. 1997 ), and PTSD (Rothbaum et al. 1999 ). In all cases, VR was used to generate virtual equivalents of phobic stimuli to perform otherwise rather traditional exposure therapy, resulting in impressive symptom reductions considering the hardware limitations of the period. However, the unique clinical advantages of VR were put to use already at this early stage: in the claustrophobia exposure paradigm, for example, the user had the ability to move a wall of a virtual room, making it bigger or smaller (Botella et al. 1998 ). In addition to enabling a convenient, linear version of the fear hierarchy, such features also provide the user with an important sense of control over the exposure scenario (Lindner et al. 2017 ). Another early example of innovative and unique applications was a small feasibility study on using VR to treat body image disturbances (Perpiñá et al. 1999 ), an important component of eating disorders that—at least at the time—was a neglected topic in CBT protocols (Rosen 1996 ). This first VR study included several pioneering clinical components, including the possibility to change the size of different body sections of a human avatar body, with the patient’s actual body overlaid to highlight discrepancies that could then also be highlighted and discussed in traditional Socratic dialogue. This example thereby illustrates how well VR experiences can be integrated into a CBT framework, in which mapping and resolving discrepancies are an important generic component.

These first studies used VR hardware like the Division dVisor, a bulky and heavy HMD by today’s standards that included an aft counterweight just to balance the weight of the dual LCDs capable of generating around 146,000 pixels at 10 frames per second (about 1/80th of the pixels per second that a modern tethered HMD is capable of). The system additionally required a high-end computer and several peripherals to run and cost up to a hundred thousand euros depending on the setup. Not surprisingly, the heavy and bulky HMDs of the time, with their low resolutions and framerates, were prone to induce so-called cybersickness (Rebenitsch and Owen 2016): symptoms resembling motion sickness believed to be caused primarily by sensory conflict between the visual and vestibular/proprioceptor systems, although display properties in themselves also play a role (Saredakis et al. 2020). Cybersickness was a well-recognized phenomenon already in the 1990s (McCauley and Sharkey 1992) yet was much less of an issue in clinical VR than in, e.g., the aerospace industry where VR was typically (and still is) used to simulate flight, i.e., virtual movement (as per the visual system) without physical movement (as per the vestibular/proprioceptor systems). Many of the common principles for the design of VR exposure paradigms emerged at this early stage, including minimizing first-person movement to avoid inducing cybersickness by letting the feared stimuli come to the user and not the other way around, which may even have therapeutic benefits in some cases (Lindner et al. 2017).
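
The pixel-throughput comparison quoted above can be made explicit with a few lines of arithmetic. The sketch uses only the two figures given in the text (about 146,000 pixels at 10 frames per second, and the "about 1/80th" ratio); the modern figure is merely what that ratio implies and is not a measurement of any particular device. The variable names are illustrative.

```python
# Figures quoted in the text for the 1990s HMD.
old_pixels_per_frame = 146_000
old_frames_per_second = 10
old_throughput = old_pixels_per_frame * old_frames_per_second

# "About 1/80th" implies a modern throughput roughly 80 times larger.
# This is an implied value, not a specification of a real device.
implied_modern_throughput = old_throughput * 80

print(f"1990s HMD: {old_throughput:,} pixels per second")
print(f"Implied modern tethered HMD: {implied_modern_throughput:,} pixels per second")
```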

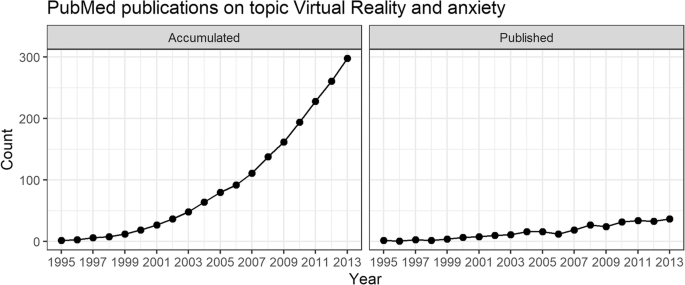

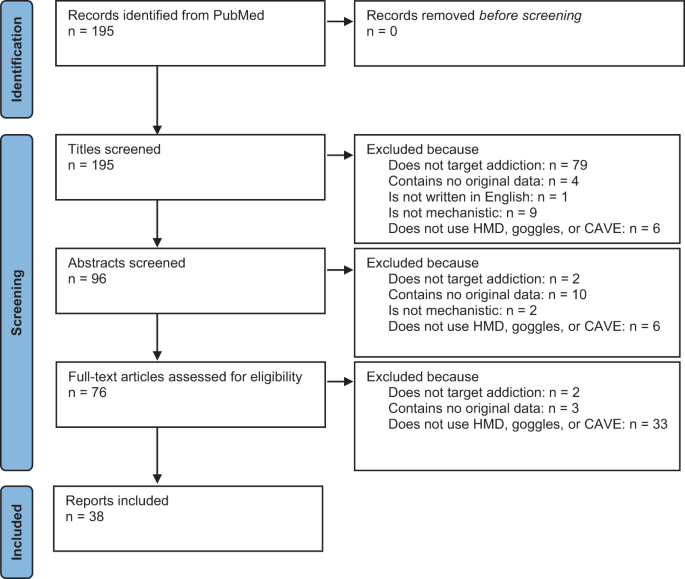

The decade or so that followed saw an exponential growth of research on clinical applications of VR, as shown in the graph of published and accumulated papers on VR and anxiety over time (Fig. 1) and as evidenced by several meta-analytic studies being published around this time (Parsons and Rizzo 2008; Powers and Emmelkamp 2008). By 2012, there were k = 21 randomized controlled trials of VR exposure therapy for anxiety disorders available for meta-analysis, showing, e.g., that VR exposure therapy outperformed waitlist control conditions, had similar outcomes to other evidence-based interventions, and had stable long-term effects (Opriş et al. 2012). Regarding the latter, it should be noted that only three studies at the time had follow-up periods of 1 year or more; studies with longer follow-up periods have since been published (Anderson et al. 2016). A landmark meta-analysis was published in 2015 showing that previously reported within- and between-group effect sizes remained when only considering trials with in vivo behavioral outcomes, revealing that fear reductions in VR do indeed translate to reduced fear also in vivo (Morina et al. 2015).

Fig. 1 VR research published up until release of consumer VR technology

In addition to establishing VR as an efficacious treatment of many anxiety disorders, the early 2000s also saw a growing research interest in the mechanisms of VR-CBT, in particular on the role of presence: does increased presence drive increased distress during VR exposure (and indirectly treatment outcomes), vice versa, or is this association bidirectional? A 2014 meta-analysis reported an overall significant correlation of r = .28 (95% CI: 0.18–0.38) between self-reported distress and presence during exposure, albeit with significant differences across sample clinical characteristics and type of VR equipment used (Ling et al. 2014). Two of the covered studies deserve special mention: a 2004 study on acrophobia exposure experimentally assigned participants to experiencing VR exposure with either a low-presence HMD or a high-presence CAVE, and despite finding the expected difference in presence scores, the groups did not differ on outcomes (although it should be noted that this contrast was low powered) (Krijn et al. 2004). A 2007 study on VR exposure for aviophobia found that while presence partially mediated the association between pre-existing anxiety and distress during exposure, presence did not moderate outcomes, suggesting that presence is a necessary but insufficient requirement for symptom reduction (Price and Anderson 2007), congruent with the 2004 study. Unfortunately, the complex associations between presence, distress, and outcomes have not become much clearer since then, although recent experimental research has begun to shed more light on this important aspect (Gromer et al. 2019). It is not unlikely that measurement error from the self-reported presence ratings contributes to this confusion: using behavioral measures of presence (e.g., a behavioral response consistent with the virtual environment, as per the very definition of presence; Slater 2018) was in fact proposed and showed promise already in the early 2000s (Freeman et al. 2000) yet did not become popular in clinical paradigms due to the more elaborate procedure and data analysis required compared to self-report ratings. With the release of consumer VR platforms, developing appropriate paradigms and analyzing data have become much more convenient, hopefully paving the way for a renaissance of behavioral measures of presence.
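
For readers less familiar with how a confidence interval around a correlation such as the r = .28 above is obtained, the following is a minimal sketch of the standard Fisher z-transformation for a single sample. It is not the pooled, weighted procedure a meta-analysis such as Ling et al. (2014) would use, and the sample size in the usage line is a hypothetical value chosen only for illustration.

```python
import math


def correlation_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for a Pearson correlation via the Fisher z-transform.

    r      -- observed correlation
    n      -- sample size (must exceed 3)
    z_crit -- critical value of the standard normal (1.96 for 95%)
    """
    z = math.atanh(r)                # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)      # standard error on the z scale
    lower = math.tanh(z - z_crit * se)
    upper = math.tanh(z + z_crit * se)
    return lower, upper


# Hypothetical single study with n = 100 participants.
low, high = correlation_ci(0.28, 100)
print(f"r = .28, n = 100: 95% CI {low:.2f} to {high:.2f}")
```

The interval narrows as n grows, which is one reason a pooled estimate across many studies can be considerably more precise than any single study.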

Finally, the first extended decade of the new millennium was also a period when researchers first began examining the contextual factors of importance to the field, including views on VR held by both patients and clinicians. One early study reported that nine out of ten spider-fearful individuals would prefer VR exposure over in vivo exposure (Garcia-Palacios et al. 2001); to what degree such a preference serves as a proxy measure for greater baseline severity and functions as a safety behavior remains unknown and has been the subject of surprisingly little research since. Two later survey studies found that clinicians have a generally favorable view of using VR clinically, although they also reported fears about required training, handling the equipment, and financial costs, and reported an overall low degree of acquaintance with the technology (Schwartzman et al. 2012; Segal et al. 2011). Recent survey research, conducted after the advent of consumer VR, indicates that these fears have now decreased, although both professional and even recreational experiences of using VR were still rare, and knowledge of VR exposure therapy still low (Lindner et al. 2019d). Together, the first three studies provided early evidence that there were no substantial human barriers to implementing VR interventions in regular clinical settings, a quest that endured into the period of modern VR.

The Present

The history of modern VR really begins in 2012, when a start-up company named Oculus (later purchased by Facebook) revived the VR field by launching a crowdfunding campaign to finance the development and release of a modern VR HMD, primarily for gaming. This happened at a critical point in time when the maturation of two related technologies converged: the rapid development of smartphones, which had begun only a few years prior, meant that high-quality flat screen technology was now readily available along with miniaturized peripheral hardware (e.g., gyroscopes). In parallel, consumer gaming computers had now grown powerful enough to render impressive graphical quality, even with the increased resolution, field of view, and refresh rate required by VR HMDs. A working prototype of the tethered Oculus Rift HMD (Development Kit 2) was shipped in 2014 and was quickly adapted for use in clinical research (Anderson et al. 2017 ; Peterson et al. 2018 ). At around the same time, the Samsung Gear VR platform was announced and soon released, making it the first mobile VR device to see widespread consumer adoption. It too quickly saw use in clinical research (Lindner et al. 2019c ; Miloff et al. 2016 ; Spiegel et al. 2019 ; Tashjian et al. 2017 ). The Gear VR platform featured a unique, since abandoned design wherein a compatible smartphone from the same brand snapped into place at the front of a simpler HMD containing only optics, a touchpad, and rotation trackers. The smartphone then served as the display and ran the VR applications. This solution was meant to lower the threshold for mass adoption by offering VR at a lower price to users that already had a powerful smartphone that could be put to use. Google used the same solution for both their simpler Cardboard VR platform (for use with nearly any smartphone) and their Gear-equivalent Daydream platform (for use with only a few compatible smartphone models). 
Although both the Gear and Cardboard platforms became relatively popular among consumers, with the extremely low-cost Cardboard solution also finding some unique clinical applications in that it allowed for unprecedented, low-cost distribution of VR for at-home use (Donker et al. 2019; Lindner et al. 2019b), these platforms have since been abandoned by both Samsung and Google without replacements. The required hardware matching was simply not cost-effective, and requiring a compatible smartphone was ultimately deemed to exclude more potential adopters than those brought on by the lowered threshold for adoption. The release and ensuing popularity of the affordably priced, mobile Oculus Go device convinced the industry that freestanding mobile VR was the future: that high-quality freestanding HMDs could be developed and released at a cost only negligibly higher than smartphone-dependent solutions. Other influential hardware releases in the last few years include the tethered HTC Vive (a competitor to the Rift platform), the tethered PlayStation VR platform, and the recent release of the mobile Oculus Quest, which provides 6DOF through inside-out tracking, allows interaction through hand gesture mapping (making hand controllers optional in many applications), and can also be run in tethered mode for increased performance.

The section below on the present state of clinical VR will not be told chronologically and is not exhaustive but rather touches upon a selection of topics of current interest in the field.

Automated Treatments

Arguably the most exciting recent development in the field of clinical VR is the rise of automated VR exposure therapy applications. Three high-quality randomized trials have been published recently, featuring three different applications: two on acrophobia with comparison against waiting-list (Donker et al. 2019; Freeman et al. 2018) and one on spider phobia examining non-inferiority against gold-standard in vivo exposure (Miloff et al. 2019). Findings from the latter spider phobia trial have since been replicated in a single-subject trial with simulated real-world conditions (Lindner et al. 2020b), and valuable usage data from one of the acrophobia trials have also been reported (Donker et al. 2020). A qualitative study on the experience of undergoing automated VR exposure therapy for spider phobia has also been published (Lindner et al. 2020c). All three trials report impressive symptom reductions, revealing the public health and clinical potential of this innovative approach to treatment. Recent advances in this field include a large, ongoing randomized controlled trial of automated VR-CBT for anxious avoidance of social situations among patients with psychosis (Freeman et al. 2019; Lambe et al. 2020).

Automation in this context means that no human therapist took part in immediate treatment delivery. Instead, these applications are designed to be freestanding and offer a complete therapeutic experience, including onboarding and psychoeducation, instructions, gamified cognitive-behavioral exercises, a virtual therapist (see below), and more, all packaged in a user-friendly interface, sometimes with an explicit, overarching narrative. The term gamification refers to the application of traditional game components, originally designed for enjoyment, to a non-gaming setting (Koivisto and Hamari 2019). Such components typically include simple game mechanics, earning points by completion of tasks, and overt reinforcement of progress through, e.g., unlockables and collecting badges. When combined with onboarding and a cohesive, progressive, and possibly interactive narrative, and with an explicit goal other than pure enjoyment, the experience may be considered a so-called serious game (Fleming et al. 2017; Laamarti et al. 2014). Findings from the first qualitative study on automated VR exposure therapy showed that even aversive experiences like exposure therapy can indeed be framed and viewed by users as a serious game with a psychotherapeutic goal (Lindner et al. 2020c). Being a high-immersion technology, the VR modality is inherently well suited for gamification, with gaming remaining the unique selling point of VR, driving consumer adoption. Gamification has long been assumed to increase compliance and thereby treatment effects by increasing both short- and long-term engagement and making aversive experiences less so. Congruently, qualitative research has shown that gamification elements are indeed perceived as attractive features by users (Faric et al. 2019a, 2019b; Lindner et al. 2020c; Tobler-Ammann et al. 2017). Empirical evidence for the presumed effects is, however, surprisingly scarce (Fleming et al. 2017; Johnson et al. 2016).
Automated VR interventions distributed as applications on ordinary digital marketplaces have the potential to reach tens or hundreds of thousands of users (Lindner et al. 2019c ), providing not only a vector for substantial public health impact but also the necessary sample sizes to use factorial designs (Chakraborty et al. 2009 ) and randomized A-B testing to disentangle the causal impact of each gamification component.
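
To make the idea of a factorial design for gamification components concrete, the sketch below assigns each user to one cell of a full 2 × 2 × 2 design, so that every component is switched on or off independently of the others. Everything in it is hypothetical: the three component names, the salt string, and the choice of a hash of the user identifier as a reproducible stand-in for random allocation are assumptions for illustration, not the method of any cited trial.

```python
import hashlib
from itertools import product

# Hypothetical gamification components to be tested independently.
COMPONENTS = ("points", "badges", "narrative")

# All on/off combinations: 2 ** 3 = 8 cells in a full factorial design.
CELLS = list(product((False, True), repeat=len(COMPONENTS)))


def assign_cell(user_id, salt="vr-factorial-v1"):
    """Deterministically map a user to one factorial cell.

    Hashing the salted identifier gives a stable, approximately uniform
    allocation, so the same user always receives the same app variant.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(CELLS)
    return dict(zip(COMPONENTS, CELLS[index]))


print(assign_cell("user-0001"))
```

With users spread across all eight cells, the main effect of each component on, e.g., engagement can be estimated by comparing the four cells where it is on with the four where it is off, which is what makes large marketplace samples attractive for this kind of design.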

Virtual Therapists

Interestingly enough, all three automated VR exposure applications mentioned above opted to include a virtual therapist of some sort (Donker et al. 2019 ; Freeman et al. 2018 ; Miloff et al. 2019 ), either voiceover or as an embodied agent. Such a feature serves many purposes: it reminds users of the therapeutic context, is a convenient and familiar way of conveying information (psychoeducation) and reinforcing progress, and adds a pleasant human touch. Little is known however about this novel addition to the VR arsenal. Early research examined working alliance towards the virtual environment itself, finding psychometric properties that suggest that the alliance concept can indeed be applied in this way (Miragall et al. 2015 ). Recently, a novel instrument has been developed specifically to measure working alliance with an embodied virtual therapist, using data from automated VR exposure therapy for spider phobia (Lindner et al. 2020b ; Miloff et al. 2019 ), showing that a relationship similar to a working alliance does seem to form with the virtual therapist and that the quality of this relationship predicted long-term improvements (Miloff et al. 2020 ). Qualitative interview research on the same VR intervention for spider phobia showed that the virtual therapist was an appreciated feature (Lindner et al. 2020c ). These findings are consistent with research on unguided iCBT and bibliotherapy, for example, showing that a relationship similar to a working alliance (but obviously not exactly the same) can develop with the therapeutic material itself (Heim et al. 2018 ). How best to make therapeutic use of virtual therapists remains an important topic for future research.

Virtual Embodiment

Virtual embodiment entails creating a perceptual illusion of being present in a body other than one’s own physical body. In VR, this is typically achieved by having the user see a virtual body positioned below the camera position (an impression which can be amplified by placing virtual mirrors in the environment) and promoting body ownership by allowing the user to move this body using hand controllers and/or 6DOF positional tracking. Building on early research (Perpina et al. 2003; Perpiñá et al. 1999), a VR full-body illusion has been shown to decrease body image disturbance in anorexia nervosa (Keizer et al. 2016). More recently, a VR body swap illusion has been used to increase self-compassion (Cebolla et al. 2019). In another recent study, participants practiced delivering compassion in one virtual body and then experienced a recorded version of this act embodied as the receiving party, leading to reduced depression and self-criticism and increased self-compassion (Falconer et al. 2016). Another innovative approach involves allowing a single user to alternate between two virtual bodies (one being Sigmund Freud) engaged in a conversation, essentially a form of semi-externalized self-dialogue. Compared to a scripted control condition, participants engaged in embodied self-dialogue reported being helped and changed to a greater degree (Slater et al. 2019). In addition to inspiring a new line of research, this type of paradigm presents a fine example of how a generic CBT technique like perspective-changing can be empowered and amplified using VR (Lindner et al. 2019a).

VR for Other Disorders

A 2017 systematic review found that at the time, most clinical VR research had been conducted on anxiety disorders (including PTSD and OCD), schizophrenia, substance use disorders, and eating disorders, with only two studies on depression (Freeman et al. 2017 ). Notably, VR interventions for autism (Didehbani et al. 2016 ; Maskey et al. 2019 ) were not covered by the systematic search, nor were gambling disorder (Bouchard et al. 2017 ), stress (Anderson et al. 2017 ; Serrano et al. 2016 ), or ADHD (Neguț et al. 2017 ). A recent survey study on attitudes towards VR among practicing CBT clinicians found that those who worked clinically with neuropsychiatric disorders, personality disorders, and psychosomatic disorders were more inclined to report that VR could be used with the respective disorder (Lindner et al. 2019d ), suggesting that novel clinical applications of VR are indeed possible. The VR field is currently expanding rapidly, including new research on innovative VR treatments for disorders that have previously received little attention like depression (Migoya-Borja et al. 2020 ; Schleider et al. 2019 ), sleep problems (Lee and Kang 2020 ), and worry (Guitard et al. 2019 ). Recent work has also studied how VR can be used for modifying cognitions (Silviu Matu 2019 ) and feared self-perceptions (Wong 2019 ), in approach-avoidance training for obesity (Kakoschke 2019 ), and to treat aggressive behavior in children (Alsem 2019 ), revealing how VR has matured into a flexible, innovative treatment tool.

The Future

The future of VR-CBT will continue to be inherently intertwined with the development of VR technology, yet the latter now develops at a pace so rapid that clinical researchers are struggling to keep up and make full use of the capabilities offered by new technologies. It has proven notoriously difficult to predict advances in technology that could in turn drive novel therapeutic applications: progress can be linear (as with, e.g., display properties like resolution and refresh rate), discrete and unexpected (as with, e.g., the development of inside-out tracking enabling 6DOF also on mobile VR HMDs), or a complex combination thereof. Nonetheless, some predictions on the future of clinical VR for mental health can be made based on obvious gaps in the extant research literature, trends in consumer VR, and recent technological advances.

Beyond Efficacy: Demonstrating Effectiveness

Clinical research can be placed along a continuum ranging from basic science, to efficacy and effectiveness trials (Wieland et al. 2017 ). The efficacy-effectiveness continuum is noteworthy since it emphasizes that study design and study aims need to be adapted to the context of the extant literature. In building an evidence base for a new intervention, one would first examine efficacy (“Does the intervention work under optimal conditions?”), and then proceed to examining effectiveness (“Does the intervention work under real-world conditions?”). In the case of VR exposure therapy for anxiety disorders, more than a dozen efficacy trials conducted over 20 years have convincingly shown that this intervention is efficacious (Carl et al. 2019 ; Fodor et al. 2018 ; Wechsler et al. 2019 ) and associated with low rates of deterioration (Fernández-Álvarez et al. 2019 ). To the author’s knowledge, only a single effectiveness trial of VR exposure therapy has been published to date: although it demonstrated feasibility and replicated the effect size from the preceding efficacy trial, the sample size was relatively small and for ethical and practical reasons, a single-case design was chosen instead of comparison with treatment-as-usual (Lindner et al. 2020a ). The lack of large, multi-arm effectiveness trials presents a substantial gap in the extant literature and should be considered a research priority in the years to come, if VR is ever to become a part of routine clinical care.

Still Awaiting Mass Adoption by Consumers

Despite VR now being an accessible and affordable consumer product (Lindner et al. 2017 ), mass adoption has yet to occur and growth remains linear rather than exponential, hindering the full public health and clinical potential of VR. The exact number of sold VR devices and active users is difficult to estimate for many reasons, yet publicly released hardware statistics from the Steam gaming platform in spring 2020 revealed that around 2% of the platform’s active user base had access to VR, equivalent to roughly 2 million users. At the end of 2019, Sony confirmed having sold more than five million units of their PlayStation VR device. If one includes simpler Cardboard-based VR HMDs that require a smartphone to run and offer only a rudimentary VR experience, there are at least twenty million VR units distributed worldwide, possibly twice that. Usage patterns among device owners will likely vary considerably, from daily to one-time use. By comparison, approximately half of the world’s population is now estimated to have access to a smartphone. Releasing mental health interventions, packaged as applications, on ordinary VR content marketplaces would allow dissemination on an almost unprecedented scale. Even in the early days of consumer VR, a first-generation VR relaxation application reached 40,000 unique users in 2 years (Lindner et al. 2019c ), a number that would likely be surpassed rapidly at time of writing. Still, until mass adoption of VR by consumers, the dissemination potential is limited by the overlap of early adopters, those experiencing mental health problems, and those who view this medium as appropriate for help-seeking.

The comparably low adoption rate also hinders some promising clinical applications, e.g., having a patient perform VR exposure tasks in-between in-vivo exposure sessions or completely by themselves using an automated intervention. Today, this would likely require the clinic to lend or rent out the specific VR equipment (sending it by mail if necessary). While this approach does indeed offer an innovative solution to a clear clinical need, the lack of interest thus far among ordinary clinics demonstrates that it also comes with potent barriers. Costs in acquiring and maintaining the equipment, as well as those relating to the logistics of distributing it, may simply outweigh the benefits it brings. Until consumer mass adoption, this approach is unlikely to be successful unless applicable health insurance models begin to incorporate and reimburse it. In the first effectiveness trial of VR exposure therapy in routine care, either the clinic was reimbursed through existing occupational healthcare contracts or the patients paid out of pocket (Lindner et al. 2020a ), in no case with any additional cost included to cover the clinic’s investment in VR. Whether such models are financially sustainable at scale remains to be evaluated.

Novel Uses of Virtual Embodiment

Arguably, clinical researchers have only begun to scratch the surface of the clinical potential of virtual embodiment, especially with regard to how such experiences can be merged with traditional, evidence-based CBT techniques. With regard to exposure therapy, for example, one could imagine allowing patients to experience the very thing they fear by embodying it, e.g., as a feared conversation partner in a virtual social scenario, allowing them to truly experience the scenario from both perspectives. Such an experience should not be less tolerable than standard exposure and has the potential to promote rapid fear reduction since one need not fear oneself. Virtual embodiment could also, for example, be used to allow individuals with substance use disorders to interact with their influenced self through embodiment of a concerned significant other, providing a potentially powerful transformative experience of the negative effects of substance misuse, the full extent of which may not otherwise be perceived by the person affected.

Full Immersion Through Innovative User Interfaces and Making Use of This Data

While research has begun to collect and analyze user engagement metrics and self-reported data from self-guided VR interventions (Donker et al. 2020 ), there has been surprisingly little research on data that offers deeper insight. The entire concept of (HMD) VR relies on continuous head rotation tracking, making rotation a suitable user interface through the use of a crosshair that fixates eye gaze and synchronizes it (at least to some degree) with head rotation. In fact, human-computer interaction research has shown that head rotation provides an adequate proxy measure of eye gaze: gaze tends to focus around a rotation-controlled crosshair, and gaze shifts above 25° are typically accompanied by subsequent head movement with a lag of 30–150 ms (Sidenmark and Gellersen 2020 ). In general, head rotation data has seen very few published clinical applications beyond its immediate role in graphical presentation, either as a way of adapting the virtual environment (e.g., prompting a “Look up!” message during public speaking exposure when the user stares at the floor as a safety behavior) or as a non-invasive, continuous measure that can be used for further analysis. A rare 2016 study demonstrated the value of this data by showing that horizontal rotation during VR exposure for public speaking anxiety correlated with distress in female participants (Won et al. 2016 ). Until the average consumer VR HMD includes proper eye tracking technology (already available in some high-grade consumer HMDs), head rotation appears to be a valuable proxy measure that can be put to greater use. This includes the possibility of using VR head rotation data as a proxy measure of other physiological variables like heart rate that indicate emotional distress, proof-of-concept of which has already been demonstrated (Noori et al. 2019 ) and is possible with related methods (Lomaliza and Park 2019 ).
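
The “Look up!” adaptation mentioned above can be illustrated with a minimal Python sketch. It assumes that the application logs head pitch as time-ordered (timestamp, degrees) pairs with negative values meaning the head is tilted downward; the function name, the −30° threshold, and the 3 s duration are hypothetical choices for illustration and are not taken from any of the cited studies.

```python
from typing import Iterable, List, Tuple


def find_look_down_episodes(
    samples: Iterable[Tuple[float, float]],
    pitch_threshold_deg: float = -30.0,  # assumed cut-off for "staring at the floor"
    min_duration_s: float = 3.0,         # assumed time below the cut-off before prompting
) -> List[float]:
    """Return the timestamps at which a "Look up!" prompt would be shown.

    samples: time-ordered (timestamp in seconds, head pitch in degrees) pairs,
    where negative pitch means the head is tilted downward.
    At most one prompt is issued per continuous look-down episode.
    """
    prompts: List[float] = []
    episode_start = None
    prompted = False
    for timestamp, pitch in samples:
        if pitch < pitch_threshold_deg:
            if episode_start is None:
                # A new look-down episode begins with this sample.
                episode_start = timestamp
                prompted = False
            if not prompted and timestamp - episode_start >= min_duration_s:
                prompts.append(timestamp)
                prompted = True
        else:
            # Head raised again: the episode ends.
            episode_start = None
    return prompts
```

The same logged samples could also be stored and analyzed afterwards as a continuous measure, which is the second use of head rotation data described above.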

The future of VR-CBT will likely also see greater use of other user interfaces that are already available technology-wise yet have seen limited use thus far in clinical applications. Embodied conversational agents, capable of instantaneous natural language processing (Provoost et al. 2017 ), may, for example, be used to include interactive, virtual therapists that the user can speak with freely without preselected options. HMDs like the Oculus Quest can now use their 6DOF cameras to track hand movements directly, bypassing the need for hand controllers to map hand movement and enabling the user to interact with the virtual environment with individual fingers. This could be used clinically for simulating touching phobic stimuli, for example (Hoffman et al. 2003 ; Tardif et al. 2019 ). 6DOF technology, although not new but now much more user-friendly, remains underutilized in clinical VR, in particular as core clinical components. VR exergames, for example, could provide a form of behavioral activation for depression (Lindner et al. 2019a ). All these discussed user interfaces rely on continuous, non-invasive measurements that provide large amounts of data. Research on how this data can be used with machine learning (Pfeiffer et al. 2020 ) to, e.g., predict clinical outcomes will likely be another topic of interest in the years to come. Collecting vast amounts of data on (proxy) gaze, motor actions and in-virtuo behaviors from VR usage, in addition to the camera mapping of physical surroundings required by inside-out tracking 6DOF, is however not without ethical aspects; privacy concerns have already been raised (Slater et al. 2020 ; Spiegel 2018 ) and must continue to be discussed within clinical research, especially since this issue will likely grow more prominent among VR users in general.

The Importance of (and Need for) Tailoring and Adaptation

Many previously studied VR paradigms have included features that allowed the user to customize the environment to fit their therapeutic needs, including the “Virtual Iraq/Afghanistan” paradigm developed for PTSD treatment that allowed users to recreate specific traumatic scenarios (Rizzo et al. 2010 ). To what degree virtual environments need to be tailored to the specific user remains an open question of great importance to the field since so-called sandbox-type paradigms require additional developmental resources, which may not be cost-effective in relation to efficacy. This question has however received surprisingly little research attention thus far. One recent study compared exposure to standardized catastrophic scenarios in VR, to imaginal exposure with personalized scenarios, and found no difference in evoked anxiety (Guitard et al. 2019 ), suggesting that perfect tailoring is at least not necessary. A study on (360° video) VR relaxation found a strong correlation between average preference ratings of different virtual nature environments and average improvement in positive mood (Gao et al. 2019 ). A related research question concerns the benefits of including adaptive virtual environments. Research on VR biofeedback paradigms (Fominykh et al. 2017 ) has demonstrated the feasibility of including such adaptive components, which could easily be combined with other CBT techniques like exposure. Having, e.g., already created a series of spider models with increasingly frightening appearances and behaviors (Miloff et al. 2019 ), it would certainly be possible with today’s technology to create an exposure task wherein the spider stimulus morphs automatically depending on heart rate or some other continuous measure of emotional distress acquired using off-the-shelf, wearable technology integrated through an API. Whether adaptive and/or tailored virtual scenarios show additional clinical benefits that warrant the extra developmental and practical resources required remains to be examined. Relating to the issue of what works for whom, more individual patient data meta-analytic research (Fernández-Álvarez et al. 2019 ) with high-resolution variables is needed to establish predictors of treatment response, non-response and negative effects (see below).
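
As a rough illustration of the heart rate-driven exposure task imagined above, the following Python sketch selects the next spider stimulus level from the current heart rate relative to a resting baseline. Everything in it is an assumption made for illustration: the function name, the five-level hierarchy, the 10% and 30% margins, and in particular the rule of stepping down when distress is high, which is a design choice and not an established clinical protocol. How the heart rate reaches the application (e.g., through a wearable's API) is left out.

```python
def next_stimulus_level(
    current_level: int,
    heart_rate_bpm: float,
    resting_bpm: float,
    max_level: int = 5,             # assumed number of spider models in the hierarchy
    calm_margin: float = 0.10,      # assumed: below +10% of resting counts as calm
    distress_margin: float = 0.30,  # assumed: above +30% of resting counts as high distress
) -> int:
    """Choose the next stimulus level (1 = least frightening spider model)."""
    relative_increase = (heart_rate_bpm - resting_bpm) / resting_bpm
    if relative_increase < calm_margin:
        # User appears calm: progress to a more frightening model, if any remain.
        return min(current_level + 1, max_level)
    if relative_increase > distress_margin:
        # High arousal: step back one level (a hypothetical safety rule).
        return max(current_level - 1, 1)
    # Moderate arousal: stay at the current level.
    return current_level
```

Called once per measurement window, such a rule would let the stimulus hierarchy follow the user's arousal without therapist input; whether doing so improves outcomes is exactly the open question raised above.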

Social VR is growing increasingly popular and refers to any application allowing two or more people to meet and directly interact in a virtual environment, typically through embodied avatars. Many VR games already feature or are explicitly built around multiplayer functionality, including virtual tennis and realistic shooter games. More generic virtual meetup applications have also begun to appear, and it is likely only a matter of time before a VR equivalent of Second Life becomes ubiquitous (Sonia Huang 2011 )—hence (presumably) Facebook’s interest in the technology. In terms of research, studies on social presence experienced with both avatars and agents (Fox et al. 2015 ) have a long and extensive history, with a recent systematic review identifying k = 152 studies investigating different factors of importance (Oh et al. 2018 ). However, clinical applications of social VR have thus far been scarce. There are a number of possible uses of social VR in CBT: social VR could be used as an immersive type of videoconferencing psychotherapy (Tarp et al. 2017 ), virtual gatherings could be used as a form of behavioral activation in depression (Lindner et al. 2019a ), a patient in non-automated VR exposure therapy would likely benefit from observing an embodied avatar therapist modeling non-phobic responses (Olsson and Phelps 2007 ; Öst 1989 ), VR could also make it convenient to perform VR exposure therapy for public speaking anxiety (Kahlon et al. 2019 ) in front of avatars instead of agents, and more.

Therapeutic Storytelling

The fact that modern consumer VR is primarily marketed, and used, as an entertainment platform hints at the potential of using this immersive technology to distribute powerful storytelling experiences that are designed to be therapeutic in themselves, i.e., going beyond the simple overarching narratives that may be included as gamification elements. Using techniques, principles, and lessons learned from the field of VR entertainment, it may be possible to develop interactive or even passive VR experiences that tell stories that have a significant and stable impact on how individuals view themselves and others, e.g., by allowing individuals to experience emotionally charged events and scenarios from different perspectives, conceptually akin to traditional cognitive rescripting exercises for early traumatic memories (Wild and Clark 2011 ). Although there is some preliminary, indirect research in support of this approach (Shin 2018 ), therapeutic effects on psychopathology have yet to be demonstrated. Of note, the idea of using storytelling therapeutically is not new to the field of clinical psychology: so-called creative bibliotherapy (patients reading selected works of fiction, as opposed to self-help material) has a long history yet has received very little research attention (Troscianko 2018 ) and can therefore not currently be considered an evidence-based treatment. Whether VR equivalents can make clinical use of storytelling remains to be evaluated, yet this approach shows prima facie potential as a low-threshold, single-session intervention that could be distributed at scale and would likely be viewed as attractive by users.

Raising Research Quality

Concerns have been raised about the research quality of trials examining VR exposure therapy for anxiety disorders, primarily the reliance on small samples, and no or questionable control conditions (Page and Coxon 2016 ). It should however be noted that meta-analytic research has found no correlation between study quality and observed effect size (McCann et al. 2014 ). The small sample sizes that characterized early (and to a lesser degree, also current) research are not unexpected given the added practical requirements in providing VR treatment, at least with the previous generation of technology: in addition to all the regular logistics required of any psychotherapy trial, such trials also required acquiring expensive hardware, developing special software and training therapists in the use of equipment that was often far from user-friendly. However, since the expected effect sizes in these studies were large (as with in vivo exposure therapy), even smaller trials may nonetheless have been well powered; further, the fact that VR allows for a greater, even full degree of standardization should decrease outcome variance and thereby sample size requirements (Lindner et al. 2020b ).
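
The relationship between expected effect size and required sample size can be made concrete with a small Python sketch. It uses the common normal-approximation formula for a two-arm comparison of means, n per group = 2(z₁₋α/₂ + z_power)² / d², where d is the standardized mean difference. The function name and the default two-sided alpha of 0.05 and power of 0.80 are illustrative assumptions, and exact t-test-based calculations give slightly larger numbers.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants needed per arm to detect a standardized
    mean difference d in a two-arm trial (two-sided test, normal approximation)."""
    z = NormalDist().inv_cdf  # quantile function of the standard normal distribution
    return ceil(2 * (z(1 - alpha / 2) + z(power)) ** 2 / d ** 2)
```

Under these assumptions, a large effect of d = 0.8 requires about 25 participants per arm, whereas a medium effect of d = 0.5 requires about 63. Because d is the mean difference divided by the outcome standard deviation, reducing outcome variance through standardization raises d and lowers the required sample size accordingly.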

The advent of modern consumer VR has resolved most of the logistic barriers to running larger clinical trials (but see below), as reflected in the larger sample sizes found in recent studies (Donker et al. 2019 ; Freeman et al. 2018 ; Miloff et al. 2019 ). Hopefully, with technological progress now having made greater sample sizes feasible, future VR research will also address the critique of suboptimal control conditions. The choice of control condition is a long-standing debate and multifaceted issue in psychotherapy research, with placebo interventions remaining the gold-standard despite being hard to implement in research on traditional psychotherapy (Gold et al. 2017 ). VR however is inherently well suited for the use of placebo interventions: therapeutic techniques may be packaged in non-traditional ways (e.g., as serious games) and the occurrence and precise extent of each component can easily be modified. Patients, in turn, are also likely to have markedly fewer preconceptions of what constitutes a VR psychological treatment, offering good grounds for (double) blinding. Future research contrasting active VR interventions against VR placebos is of special importance in VR applications for mental health problems where the immersion itself may have an effect. This includes VR pain management (Kenney and Milling 2016 ) where (sensory) distraction is often explicitly framed as the mediating mechanism (Gupta et al. 2018 ), as well as VR relaxation (Anderson et al. 2017 ) which is believed to work by evoking a strong sense of presence in a calming virtual environment (Seabrook et al. 2020 ). Research contrasting VR interventions with active components to VR placebos without active components, would be able to disentangle the specific effect of the presumed therapeutic mechanism from the nonspecific effect of using immersive technology, and would also raise the research quality of the field.

Finally, as the field of clinical VR grows and expands, research must continue to be vigilant to negative effects and aspects. VR research has a long history of studying negative effects in the form of cybersickness (Rebenitsch and Owen 2016 ), meta-analytic research has revealed low rates of deterioration in VR exposure therapy (Fernández-Álvarez et al. 2019 ), and trials have already begun (Miloff et al. 2019 ) to include and report results from broader measures of negative effects used elsewhere in psychotherapy research (Rozental et al. 2016 ). New patient groups, treatment forms and delivery modalities nonetheless continue to raise new challenges. Recent survey research suggests that ordinary clinicians do continue to see certain risks in using VR in therapy, although positive views outweigh negative (Lindner et al. 2019d ). Widespread reports of difficulties in implementing exposure therapy for PTSD in clinical settings (Waller and Turner 2016 ), for example, stress the importance of considering therapist views in efforts to disseminate new treatments. In disseminating automated VR treatments for, e.g., depression (Lindner et al. 2019a ) and phobias (Garcia-Palacios et al. 2007 ), care must also be taken to ensure that engaging with such interventions does not become a way of avoiding seeking more comprehensive help. One could however certainly imagine automated VR treatments as part of a stepped-care model, and in most cases, any treatment will be preferable to none. Research on consumer smartphone applications for mental health (Larsen et al. 2019 ; Shen et al. 2015 ) suggests that few of the consumer VR applications released on ordinary digital marketplaces in the future can be expected to be evidence-based and effective.

Conclusions

It has now been 25 years since the first mental health applications of VR technology appeared and much has happened since, both in terms of scientific progression and technological advances. The recent release of consumer VR platforms constitutes a true paradigm shift in the development and dissemination of clinical VR, which has inspired and continues to inspire a new generation of interventions grounded in a CBT framework. How the field will develop is as difficult to predict now as in the field’s infancy. A review article from 1996 on “VR psychotherapy” made a number of interesting predictions on the future of the field: while some predictions did turn out true, most ultimately did not (Glantz et al. 1996 ). At the turn of the millennium, few people would have predicted that less than 20 years later, half of the world’s population would own a device that not only lets you speak to nearly anyone on the globe, but also features instant, fast access to the Internet, more computational power than high-end computers of the day, several high-definition digital cameras and a display good enough to view movies on, all packaged in a device weighing less than 200 g, less than a centimeter thick and affordable enough that many people switch models every year. VR and other mixed reality technologies are still awaiting mass adoption, but when this does happen, there will already be a firm evidence base to inform the next generation of VR interventions for mental health. The field of VR-CBT is expanding rapidly with new publications every week, but the same constraints on time and funding prevalent elsewhere in academic research apply also here. Thus, while the field at large will hopefully continue to house an impressive breadth of research interests, individual research groups and researchers will likely find themselves at a crossroads of sorts, whether they like it or not. Do we continue exploring the efficacy of innovative clinical applications of new VR technology under optimal conditions, or is the time ripe to focus on public health dissemination and implementation in routine care? Should we focus on developing automated treatments or user-friendly VR tools for clinicians to solve practical issues and do things not possible in real life? Do we expand to include new interventions for previously neglected mental disorders, or should we aim to improve on existing interventions for disorders that VR has been shown to work with? Should we continue to create virtual equivalents of what we otherwise do in the therapy room, or is it time to leave the constraints of the real world behind and truly think outside the (real world) box? As in the mid-1990s, such decisions will shape the future of VR-CBT. Given the momentum that consumer VR has already picked up this time around, it certainly looks like consumer VR is now here to stay, and if so, so is VR-CBT.

Alsem, S. (2019). Using interactive virtual reality to treat aggressive behavior problems in children. In 9th World Congress of Behavioural and Cognitive Therapies .

Anderson, P. L., Edwards, S. M., & Goodnight, J. R. (2016). Virtual reality and exposure group therapy for social anxiety disorder: results from a 4–6 year follow-up. Cognitive Therapy and Research . https://doi.org/10.1007/s10608-016-9820-y .

Anderson, A. P., Mayer, M. D., Fellows, A. M., Cowan, D. R., Hegel, M. T., & Buckey, J. C. (2017). Relaxation with immersive natural scenes presented using virtual reality. Aerospace Medicine and Human Performance, 88 , 520–526. https://doi.org/10.3357/AMHP.4747.2017 .

Andersson, G. (2016). Internet-delivered psychological treatments. Annual Review of Clinical Psychology, 12 , 157–179. https://doi.org/10.1146/annurev-clinpsy-021815-093006 .

Andersson, E., Hedman, E., Wadström, O., Boberg, J., Andersson, E. Y., Axelsson, E., et al. (2016). Internet-based extinction therapy for worry: a randomized controlled trial. Behavior Therapy . https://doi.org/10.1016/j.beth.2016.07.003 .

Botella, C., Baños, R. M., Perpiñá, C., Villa, H., Alcañiz, M., & Rey, A. (1998). Virtual reality treatment of claustrophobia: a case report. Behaviour Research and Therapy, 36 , 239–246. https://doi.org/10.1016/S0005-7967(97)10006-7 .

Botella, C., Fernández-Álvarez, J., Guillén, V., García-Palacios, A., & Baños, R. (2017). Recent Progress in virtual reality exposure therapy for phobias: a systematic review. Current Psychiatry Reports, 19 , 42. https://doi.org/10.1007/s11920-017-0788-4 .

Bouchard, S., Bernier, F., Boivin, É., Dumoulin, S., Laforest, M., Guitard, T., et al. (2013). Empathy toward virtual humans depicting a known or unknown person expressing pain. Cyberpsychology, Behavior and Social Networking, 16 , 61–71. https://doi.org/10.1089/cyber.2012.1571 .

Bouchard, S., Robillard, G., Giroux, I., Jacques, C., Loranger, C., St-pierre, M., et al. (2017). Using virtual reality in the treatment of gambling disorder: the development of a new tool for cognitive behaviour therapy. Frontiers in Psychiatry, 8 , 1–10. https://doi.org/10.3389/fpsyt.2017.00027 .

Buchholz, J. L., & Abramowitz, J. S. (2020). The therapeutic alliance in exposure therapy for anxiety-related disorders: a critical review. Journal of Anxiety Disorders, 70 , 102194. https://doi.org/10.1016/j.janxdis.2020.102194 .

Carl, E., Stein, A. T., Levihn-Coon, A., Pogue, J. R., Rothbaum, B., Emmelkamp, P., et al. (2018). Virtual reality exposure therapy for anxiety and related disorders: a meta-analysis of randomized controlled trials. Journal of Anxiety Disorders . https://doi.org/10.1016/j.janxdis.2018.08.003 .

Carl, E., Stein, A. T., Levihn-Coon, A., Pogue, J. R., Rothbaum, B., Emmelkamp, P., et al. (2019). Virtual reality exposure therapy for anxiety and related disorders: a meta-analysis of randomized controlled trials. Journal of Anxiety Disorders, 61 , 27–36. https://doi.org/10.1016/j.janxdis.2018.08.003 .

Carlbring, P., Westling, B. E., Ljungstrand, P., Ekselius, L., & Andersson, G. (2001). Treatment of panic disorder via the internet: a randomized trial of a self-help program. Behavior Therapy, 32 , 751–764. https://doi.org/10.1016/S0005-7894(01)80019-8 .

Carlbring, P., Andersson, G., Cuijpers, P., Riper, H., & Hedman-Lagerlöf, E. (2018). Internet-based vs. face-to-face cognitive behavior therapy for psychiatric and somatic disorders: an updated systematic review and meta-analysis. Cognitive Behaviour Therapy, 47 , 1–18. https://doi.org/10.1080/16506073.2017.1401115 .

Carlin, A. S., Hoffman, H. G., & Weghorst, S. (1997). Virtual reality and tactile augmentation in the treatment of spider phobia: a case report. Behaviour Research and Therapy, 35 , 153–158. https://doi.org/10.1016/S0005-7967(96)00085-X .

Cebolla, A., Herrero, R., Ventura, S., Miragall, M., Bellosta-Batalla, M., Llorens, R., et al. (2019). Putting oneself in the body of others: a pilot study on the efficacy of an embodied virtual reality system to generate self-compassion. Frontiers in Psychology, 10 . https://doi.org/10.3389/fpsyg.2019.01521 .

Chakraborty, B., Collins, L. M., Strecher, V. J., & Murphy, S. A. (2009). Developing multicomponent interventions using fractional factorial designs. Statistics in Medicine, 28 , 2687–2708. https://doi.org/10.1002/sim.3643 .

Didehbani, N., Allen, T., Kandalaft, M., Krawczyk, D., & Chapman, S. (2016). Virtual reality social cognition training for children with high functioning autism. Computers in Human Behavior, 62 , 703–711. https://doi.org/10.1016/j.chb.2016.04.033 .

Donker, T., Cornelisz, I., van Klaveren, C., van Straten, A., Carlbring, P., Cuijpers, P., et al. (2019). Effectiveness of self-guided app-based virtual reality cognitive behavior therapy for acrophobia: a randomized clinical trial. JAMA Psychiatry, 76 , 682. https://doi.org/10.1001/jamapsychiatry.2019.0219 .

Donker, T., van Klaveren, C., Cornelisz, I., Kok, R. N., & van Gelder, J.-L. (2020). Analysis of usage data from a self-guided app-based virtual reality cognitive behavior therapy for acrophobia: a randomized controlled trial. Journal of Clinical Medicine, 9 , 1614. https://doi.org/10.3390/jcm9061614 .

Falconer, C. J., Rovira, A., King, J. A., Gilbert, P., Antley, A., Fearon, P., et al. (2016). Embodying self-compassion within virtual reality and its effects on patients with depression. BJPsych Open, 2 , 74–80. https://doi.org/10.1192/bjpo.bp.115.002147 .

Faric, N., Potts, H. W. W., Hon, A., Smith, L., Newby, K., Steptoe, A., et al. (2019a). What players of virtual reality exercise games want: thematic analysis of web-based reviews. Journal of Medical Internet Research, 21 , 1–13. https://doi.org/10.2196/13833 .

Faric, N., Yorke, E., Varnes, L., Newby, K., Potts, H. W., Smith, L., et al. (2019b). Younger adolescents’ perceptions of physical activity, Exergaming, and virtual reality: qualitative intervention development study. JMIR Serious Games, 7 , e11960. https://doi.org/10.2196/11960 .

Fernández-Álvarez, J., Rozental, A., Carlbring, P., Colombo, D., Riva, G., Anderson, P. L., et al. (2019). Deterioration rates in virtual reality therapy: an individual patient data level meta-analysis. Journal of Anxiety Disorders, 61 , 3–17. https://doi.org/10.1016/j.janxdis.2018.06.005 .

Fleming, T. M., Bavin, L., Stasiak, K., Hermansson-Webb, E., Merry, S. N., Cheek, C., et al. (2017). Serious games and gamification for mental health: current status and promising directions. Frontiers in Psychiatry, 7 . https://doi.org/10.3389/fpsyt.2016.00215 .

Fodor, L. A., Coteț, C. D., Cuijpers, P., Szamoskozi, Ș., David, D., & Cristea, I. A. (2018). The effectiveness of virtual reality based interventions for symptoms of anxiety and depression: a meta-analysis. Scientific Reports, 8 , 10323. https://doi.org/10.1038/s41598-018-28113-6 .

Fominykh, M., Prasolova-Førland, E., Stiles, T. C., Krogh, A. B., & Linde, M. (2017). Conceptual framework for therapeutic training with biofeedback in virtual reality: first evaluation of a relaxation simulator. Journal of Interactive Learning Research, 29 (1), 51–75.

Fox, J., Ahn, S. J. (G.), Janssen, J. H., Yeykelis, L., Segovia, K. Y., & Bailenson, J. N. (2015). Avatars versus agents: a meta-analysis quantifying the effect of agency on social influence. Human Computer Interaction, 30 , 401–432. https://doi.org/10.1080/07370024.2014.921494 .

Freeman, J., Avons, S. E., Meddis, R., Pearson, D. E., & IJsselsteijn, W. (2000). Using behavioral realism to estimate presence: a study of the utility of postural responses to motion stimuli. Presence Teleoperators and Virtual Environments, 9 , 149–164. https://doi.org/10.1162/105474600566691 .

Freeman, D., Reeve, S., Robinson, A., Ehlers, A., Clark, D., Spanlang, B., et al. (2017). Virtual reality in the assessment, understanding, and treatment of mental health disorders. Psychological Medicine, 47 , 2393–2400. https://doi.org/10.1017/S003329171700040X .

Freeman, D., Haselton, P., Freeman, J., Spanlang, B., Kishore, S., Albery, E., et al. (2018). Automated psychological therapy using immersive virtual reality for treatment of fear of heights: a single-blind, parallel-group, randomised controlled trial. Lancet Psychiatry, 5 , 625–632. https://doi.org/10.1016/S2215-0366(18)30226-8 .

Freeman, D., Yu, L. M., Kabir, T., Martin, J., Craven, M., Leal, J., et al. (2019). Automated virtual reality (VR) cognitive therapy for patients with psychosis: study protocol for a single-blind parallel group randomised controlled trial (gameChange). BMJ Open, 9 , 1–8. https://doi.org/10.1136/bmjopen-2019-031606 .

Furness, T. A. (1986). The super cockpit and its human factors challenges. Proceedings of the Human Factors Society Annual Meeting, 30 , 48–52. https://doi.org/10.1177/154193128603000112 .

Gao, T., Zhang, T., Zhu, L., Gao, Y., & Qiu, L. (2019). Exploring psychophysiological restoration and individual preference in the different environments based on virtual reality. International Journal of Environmental Research and Public Health, 16 , 1–14. https://doi.org/10.3390/ijerph16173102 .

Garcia-Palacios, A., Hoffman, H. G., See, S. K., Tsai, A., & Botella, C. (2001). Redefining therapeutic success with virtual reality exposure therapy. Cyberpsychology & Behavior, 4 , 341–348. https://doi.org/10.1089/109493101300210231 .

Garcia-Palacios, A., Botella, C., Hoffman, H., & Fabregat, S. (2007). Comparing acceptance and refusal rates of virtual reality exposure vs. in vivo exposure by patients with specific phobias. Cyberpsychology & Behavior, 10 , 722–724. https://doi.org/10.1089/cpb.2007.9962 .

Glantz, K., Durlach, N. I., Barnett, R. C., & Aviles, W. A. (1996). Virtual reality (VR) for psychotherapy: From the physical to the social environment. Psychotherapy: Theory, Research, Practice, Training, 33 , 464–473. https://doi.org/10.1037/0033-3204.33.3.464 .

Gold, S. M., Enck, P., Hasselmann, H., Friede, T., Hegerl, U., Mohr, D. C., et al. (2017). Control conditions for randomised trials of behavioural interventions in psychiatry: a decision framework. Lancet Psychiatry, 4 , 725–732. https://doi.org/10.1016/S2215-0366(17)30153-0 .

Gromer, D., Reinke, M., Christner, I., & Pauli, P. (2019). Causal interactive links between presence and fear in virtual reality height exposure. Frontiers in Psychology, 10 , 1–11. https://doi.org/10.3389/fpsyg.2019.00141 .

Guitard, T., Bouchard, S., Bélanger, C., & Berthiaume, M. (2019). Exposure to a standardized catastrophic scenario in virtual reality or a personalized scenario in imagination for generalized anxiety disorder. Journal of Clinical Medicine, 8 , 309. https://doi.org/10.3390/jcm8030309 .

Gupta, A., Scott, K., & Dukewich, M. (2018). Innovative technology using virtual reality in the treatment of pain: does it reduce pain via distraction, or is there more to it? Pain Medicine (United States), 19 , 151–159. https://doi.org/10.1093/pm/pnx109 .

Heim, E., Rötger, A., Lorenz, N., & Maercker, A. (2018). Working alliance with an avatar: how far can we go with internet interventions? Internet Interventions, 11 , 41–46. https://doi.org/10.1016/j.invent.2018.01.005 .

Hoffman, H. G., Garcia-Palacios, A., Carlin, A., Furness III, T. A., & Botella-Arbona, C. (2003). Interfaces that heal: Coupling real and virtual objects to treat spider phobia. International Journal of Human Computer Interaction, 16 , 283–300. https://doi.org/10.1207/S15327590IJHC1602_08 .

Johnson, D., Deterding, S., Kuhn, K.-A., Staneva, A., Stoyanov, S., & Hides, L. (2016). Gamification for health and wellbeing: a systematic review of the literature. Internet Interventions, 6 , 89–106. https://doi.org/10.1016/j.invent.2016.10.002 .

Juvrud, J., Gredebäck, G., Åhs, F., Lerin, N., Nyström, P., Kastrati, G., et al. (2018). The immersive virtual reality lab: possibilities for remote experimental manipulations of autonomic activity on a large scale. Frontiers in Neuroscience, 12 . https://doi.org/10.3389/fnins.2018.00305 .

Kahlon, S., Lindner, P., & Nordgreen, T. (2019). Virtual reality exposure therapy for adolescents with fear of public speaking: a non-randomized feasibility and pilot study. Child and Adolescent Psychiatry and Mental Health, 13 , 47. https://doi.org/10.1186/s13034-019-0307-y .

Kakoschke, N. (2019). Participatory design of a virtual reality approach-avoidance training intervention for obesity. In 9th World Congress of Behavioural and Cognitive Therapies.

Keizer, A., Van Elburg, A., Helms, R., & Dijkerman, H. C. (2016). A virtual reality full body illusion improves body image disturbance in anorexia nervosa. PLoS One, 11 , 1–21. https://doi.org/10.1371/journal.pone.0163921 .

Kenney, M. P., & Milling, L. S. (2016). The effectiveness of virtual reality distraction for reducing pain: a meta-analysis. Psychology of Consciousness: Theory, Research and Practice, 3 , 199–210. https://doi.org/10.1037/cns0000084 .

Koivisto, J., & Hamari, J. (2019). The rise of motivational information systems: a review of gamification research. International Journal of Information Management, 45 , 191–210. https://doi.org/10.1016/j.ijinfomgt.2018.10.013 .

Krijn, M., Emmelkamp, P. M. G., Biemond, R., De Wilde De Ligny, C., Schuemie, M. J., & Van Der Mast, C. A. P. G. (2004). Treatment of acrophobia in virtual reality: the role of immersion and presence. Behaviour Research and Therapy, 42 , 229–239. https://doi.org/10.1016/S0005-7967(03)00139-6 .

Laamarti, F., Eid, M., & El Saddik, A. (2014). An overview of serious games. International Journal of Computer Games Technology, 2014 . https://doi.org/10.1155/2014/358152 .

Lambe, S., Knight, I., Kabir, T., West, J., Patel, R., Lister, R., et al. (2020). Developing an automated VR cognitive treatment for psychosis: gameChange VR therapy. Journal of Behavioral and Cognitive Therapy, 30 , 33–40. https://doi.org/10.1016/j.jbct.2019.12.001 .

Larsen, M. E., Huckvale, K., Nicholas, J., Torous, J., Birrell, L., Li, E., et al. (2019). Using science to sell apps: evaluation of mental health app store quality claims. npj Digital Medicine, 2 . https://doi.org/10.1038/s41746-019-0093-1 .

Lee, S. Y., & Kang, J. (2020). Effect of virtual reality meditation on sleep quality of intensive care unit patients: a randomised controlled trial. Intensive & Critical Care Nursing, 59 , 102849. https://doi.org/10.1016/j.iccn.2020.102849 .

Lindner, P., Miloff, A., Hamilton, W., Reuterskiöld, L., Andersson, G., Powers, M. B., et al. (2017). Creating state of the art, next-generation virtual reality exposure therapies for anxiety disorders using consumer hardware platforms: design considerations and future directions. Cognitive Behaviour Therapy, 46 , 404–420. https://doi.org/10.1080/16506073.2017.1280843 .

Lindner, P., Hamilton, W., Miloff, A., & Carlbring, P. (2019a). How to treat depression with low-intensity virtual reality interventions: perspectives on translating cognitive behavioral techniques into the virtual reality modality and how to make anti-depressive use of virtual reality–unique experiences. Frontiers in Psychiatry, 10 , 1–6. https://doi.org/10.3389/fpsyt.2019.00792 .

Lindner, P., Miloff, A., Fagernäs, S., Andersen, J., Sigeman, M., Andersson, G., et al. (2019b). Therapist-led and self-led one-session virtual reality exposure therapy for public speaking anxiety with consumer hardware and software: a randomized controlled trial. Journal of Anxiety Disorders, 61 , 45–54. https://doi.org/10.1016/j.janxdis.2018.07.003 .

Lindner, P., Miloff, A., Hamilton, W., & Carlbring, P. (2019c). The potential of consumer-targeted virtual reality relaxation applications: descriptive usage, uptake and application performance statistics for a first-generation application. Frontiers in Psychology, 10 , 1–6. https://doi.org/10.3389/fpsyg.2019.00132 .

Lindner, P., Miloff, A., Zetterlund, E., Reuterskiöld, L., Andersson, G., & Carlbring, P. (2019d). Attitudes toward and familiarity with virtual reality therapy among practicing cognitive behavior therapists: a cross-sectional survey study in the era of consumer VR platforms. Frontiers in Psychology, 10 , 1–10. https://doi.org/10.3389/fpsyg.2019.00176 .

Lindner, P., Dagöö, J., Hamilton, W., Miloff, A., Andersson, G., Schill, A., et al. (2020a). Virtual reality exposure therapy for public speaking anxiety in routine care: a single-subject effectiveness trial. Cognitive Behaviour Therapy, 1–21 . https://doi.org/10.1080/16506073.2020.1795240 .

Lindner, P., Miloff, A., Bergman, C., Andersson, G., Hamilton, W., & Carlbring, P. (2020b). Gamified, automated virtual reality exposure therapy for fear of spiders: a single-subject trial under simulated real-world conditions. Frontiers in Psychiatry . https://doi.org/10.3389/fpsyt.2020.00116 .

Lindner, P., Rozental, A., Jurell, A., Reuterskiöld, L., Andersson, G., Hamilton, W., et al. (2020c). Experiences of gamified and automated virtual reality exposure therapy for spider phobia: a qualitative study. JMIR Serious Games . https://doi.org/10.2196/17807 .

Ling, Y., Nefs, H. T., Morina, N., Heynderickx, I., & Brinkman, W. P. (2014). A meta-analysis on the relationship between self-reported presence and anxiety in virtual reality exposure therapy for anxiety disorders. PLoS One, 9 , 1–12. https://doi.org/10.1371/journal.pone.0096144 .

Lomaliza, J. P., & Park, H. (2019). Improved heart-rate measurement from mobile face videos. Electronics, 8 . https://doi.org/10.3390/electronics8060663 .

Maskey, M., Rodgers, J., Grahame, V., Glod, M., Honey, E., Kinnear, J., et al. (2019). A randomised controlled feasibility trial of immersive virtual reality treatment with cognitive behaviour therapy for specific phobias in young people with autism spectrum disorder. Journal of Autism and Developmental Disorders, 49 , 1912–1927. https://doi.org/10.1007/s10803-018-3861-x .

McCann, R. A., Armstrong, C. M., Skopp, N. A., Edwards-Stewart, A., Smolenski, D. J., June, J. D., et al. (2014). Virtual reality exposure therapy for the treatment of anxiety disorders: an evaluation of research quality. Journal of Anxiety Disorders, 28 , 625–631. https://doi.org/10.1016/j.janxdis.2014.05.010 .

McCauley, M. E., & Sharkey, T. J. (1992). Cybersickness: perception of self-motion in virtual environments. Presence Teleoperators and Virtual Environments, 1 , 311–318. https://doi.org/10.1162/pres.1992.1.3.311 .

Mennin, D. S., Ellard, K. K., Fresco, D. M., & Gross, J. J. (2013). United we stand: emphasizing commonalities across cognitive-behavioral therapies. Behavior Therapy, 44 , 234–248. https://doi.org/10.1016/j.beth.2013.02.004 .

Migoya-Borja, M., Delgado-Gómez, D., Carmona-Camacho, R., Porras-Segovia, A., López-Moriñigo, J.-D., Sánchez-Alonso, M., et al. (2020). Feasibility of a virtual reality-based psychoeducational tool (VRight) for depressive patients. Cyberpsychology, Behavior and Social Networking, 23 , 246–252. https://doi.org/10.1089/cyber.2019.0497 .

Miloff, A., Lindner, P., Hamilton, W., Reuterskiöld, L., Andersson, G., & Carlbring, P. (2016). Single-session gamified virtual reality exposure therapy for spider phobia vs. traditional exposure therapy: study protocol for a randomized controlled non-inferiority trial. Trials, 17 , 60. https://doi.org/10.1186/s13063-016-1171-1 .

Miloff, A., Lindner, P., Dafgård, P., Deak, S., Garke, M., Hamilton, W., et al. (2019). Automated virtual reality exposure therapy for spider phobia vs. in-vivo one-session treatment: a randomized non-inferiority trial. Behaviour Research and Therapy, 118 , 130–140. https://doi.org/10.1016/j.brat.2019.04.004 .

Miloff, A., Carlbring, P., Hamilton, W., Andersson, G., Reuterskiöld, L., & Lindner, P. (2020). Measuring alliance toward embodied virtual therapists in the era of automated treatments with the virtual therapist alliance scale (VTAS): development and psychometric evaluation. Journal of Medical Internet Research, 22 , e16660. https://doi.org/10.2196/16660 .

Miragall, M., Baños, R. M., Cebolla, A., & Botella, C. (2015). Working alliance inventory applied to virtual and augmented reality (WAI-VAR): psychometrics and therapeutic outcomes. Frontiers in Psychology, 6 . https://doi.org/10.3389/fpsyg.2015.01531 .

Morina, N., Ijntema, H., Meyerbröker, K., & Emmelkamp, P. M. G. (2015). Can virtual reality exposure therapy gains be generalized to real-life? A meta-analysis of studies applying behavioral assessments. Behaviour Research and Therapy, 74 , 18–24. https://doi.org/10.1016/j.brat.2015.08.010 .

Neguț, A., Jurma, A. M., & David, D. (2017). Virtual-reality-based attention assessment of ADHD: ClinicaVR: classroom-CPT versus a traditional continuous performance test. Child Neuropsychology, 23 , 692–712. https://doi.org/10.1080/09297049.2016.1186617 .

Noori, F. M., Kahlon, S., Lindner, P., Nordgreen, T., Torresen, J., & Riegler, M. (2019). Heart rate prediction from head movement during virtual reality treatment for social anxiety. In 2019 International Conference on Content-Based Multimedia Indexing (CBMI) (IEEE) (pp. 1–5). https://doi.org/10.1109/CBMI.2019.8877454 .

Oh, C. S., Bailenson, J. N., & Welch, G. F. (2018). A systematic review of social presence: definition, antecedents, and implications. Frontiers in Robotics and AI, 5 , 472–475. https://doi.org/10.3389/frobt.2018.00114 .

Olsson, A., & Phelps, E. A. (2007). Social learning of fear. Nature Neuroscience, 10 , 1095–1102. https://doi.org/10.1038/nn1968 .

Opriş, D., Pintea, S., García-Palacios, A., Botella, C., Szamosközi, Ş., & David, D. (2012). Virtual reality exposure therapy in anxiety disorders: a quantitative meta-analysis. Depression and Anxiety, 29 , 85–93. https://doi.org/10.1002/da.20910 .

Öst, L. G. (1989). One-session treatment for specific phobias. Behaviour Research and Therapy, 27 , 1–7.

Page, S., & Coxon, M. (2016). Virtual reality exposure therapy for anxiety disorders: small samples and no controls? Frontiers in Psychology, 7 , 1–4. https://doi.org/10.3389/fpsyg.2016.00326 .

Parsons, T. D., & Rizzo, A. A. (2008). Affective outcomes of virtual reality exposure therapy for anxiety and specific phobias: a meta-analysis. Journal of Behavior Therapy and Experimental Psychiatry, 39 , 250–261. https://doi.org/10.1016/j.jbtep.2007.07.007 .

Perpiñá, C., Botella, C., Baños, R., Marco, H., Alcañiz, M., & Quero, S. (1999). Body image and virtual reality in eating disorders: is exposure to virtual reality more effective than the classical body image treatment? Cyberpsychology & Behavior, 2 , 149–155. https://doi.org/10.1089/cpb.1999.2.149 .

Perpiñá, C., Botella, C., & Baños, R. M. (2003). Virtual reality in eating disorders. European Eating Disorders Review, 11 , 261–278. https://doi.org/10.1002/erv.520 .

Peterson, S. M., Furuichi, E., & Ferris, D. P. (2018). Effects of virtual reality high heights exposure during beam-walking on physiological stress and cognitive loading. PLoS One, 13 , 1–17. https://doi.org/10.1371/journal.pone.0200306 .

Pfeiffer, J., Pfeiffer, T., Meißner, M., & Weiß, E. (2020). Eye-tracking-based classification of information search behavior using machine learning: evidence from experiments in physical shops and virtual reality shopping environments. Information Systems Research . https://doi.org/10.1287/isre.2019.0907 .