- How it works

Meta-Analysis – Guide with Definition, Steps & Examples

Published by Owen Ingram at April 26th, 2023 , Revised On April 26, 2023

“A meta-analysis is a formal, epidemiological, quantitative study design that uses statistical methods to generalise the findings of the selected independent studies. “

Meta-analysis and systematic review are the two most authentic strategies in research. When researchers start looking for the best available evidence concerning their research work, they are advised to begin from the top of the evidence pyramid. The evidence available in the form of meta-analysis or systematic reviews addressing important questions is significant in academics because it informs decision-making.

What is Meta-Analysis

Meta-analysis estimates the absolute effect of individual independent research studies by systematically synthesising or merging the results. Meta-analysis isn’t only about achieving a wider population by combining several smaller studies. It involves systematic methods to evaluate the inconsistencies in participants, variability (also known as heterogeneity), and findings to check how sensitive their findings are to the selected systematic review protocol.

When Should you Conduct a Meta-Analysis?

Meta-analysis has become a widely-used research method in medical sciences and other fields of work for several reasons. The technique involves summarising the results of independent systematic review studies.

The Cochrane Handbook explains that “an important step in a systematic review is the thoughtful consideration of whether it is appropriate to combine the numerical results of all, or perhaps some, of the studies. Such a meta-analysis yields an overall statistic (together with its confidence interval) that summarizes the effectiveness of an experimental intervention compared with a comparator intervention” (section 10.2).

A researcher or a practitioner should choose meta-analysis when the following outcomes are desirable.

For generating new hypotheses or ending controversies resulting from different research studies. Quantifying and evaluating the variable results and identifying the extent of conflict in literature through meta-analysis is possible.

To find research gaps left unfilled and address questions not posed by individual studies. Primary research studies involve specific types of participants and interventions. A review of these studies with variable characteristics and methodologies can allow the researcher to gauge the consistency of findings across a wider range of participants and interventions. With the help of meta-analysis, the reasons for differences in the effect can also be explored.

To provide convincing evidence. Estimating the effects with a larger sample size and interventions can provide convincing evidence. Many academic studies are based on a very small dataset, so the estimated intervention effects in isolation are not fully reliable.

Elements of a Meta-Analysis

Deeks et al. (2019), Haidilch (2010), and Grant & Booth (2009) explored the characteristics, strengths, and weaknesses of conducting the meta-analysis. They are briefly explained below.

Characteristics:

- A systematic review must be completed before conducting the meta-analysis because it provides a summary of the findings of the individual studies synthesised.

- You can only conduct a meta-analysis by synthesising studies in a systematic review.

- The studies selected for statistical analysis for the purpose of meta-analysis should be similar in terms of comparison, intervention, and population.

Strengths:

- A meta-analysis takes place after the systematic review. The end product is a comprehensive quantitative analysis that is complicated but reliable.

- It gives more value and weightage to existing studies that do not hold practical value on their own.

- Policy-makers and academicians cannot base their decisions on individual research studies. Meta-analysis provides them with a complex and solid analysis of evidence to make informed decisions.

Criticisms:

- The meta-analysis uses studies exploring similar topics. Finding similar studies for the meta-analysis can be challenging.

- When and if biases in the individual studies or those related to reporting and specific research methodologies are involved, the meta-analysis results could be misleading.

Steps of Conducting the Meta-Analysis

The process of conducting the meta-analysis has remained a topic of debate among researchers and scientists. However, the following 5-step process is widely accepted.

Step 1: Research Question

The first step in conducting clinical research involves identifying a research question and proposing a hypothesis . The potential clinical significance of the research question is then explained, and the study design and analytical plan are justified.

Step 2: Systematic Review

The purpose of a systematic review (SR) is to address a research question by identifying all relevant studies that meet the required quality standards for inclusion. While established journals typically serve as the primary source for identified studies, it is important to also consider unpublished data to avoid publication bias or the exclusion of studies with negative results.

While some meta-analyses may limit their focus to randomized controlled trials (RCTs) for the sake of obtaining the highest quality evidence, other experimental and quasi-experimental studies may be included if they meet the specific inclusion/exclusion criteria established for the review.

Step 3: Data Extraction

After selecting studies for the meta-analysis, researchers extract summary data or outcomes, as well as sample sizes and measures of data variability for both intervention and control groups. The choice of outcome measures depends on the research question and the type of study, and may include numerical or categorical measures.

For instance, numerical means may be used to report differences in scores on a questionnaire or changes in a measurement, such as blood pressure. In contrast, risk measures like odds ratios (OR) or relative risks (RR) are typically used to report differences in the probability of belonging to one category or another, such as vaginal birth versus cesarean birth.

Step 4: Standardisation and Weighting Studies

After gathering all the required data, the fourth step involves computing suitable summary measures from each study for further examination. These measures are typically referred to as Effect Sizes and indicate the difference in average scores between the control and intervention groups. For instance, it could be the variation in blood pressure changes between study participants who used drug X and those who used a placebo.

Since the units of measurement often differ across the included studies, standardization is necessary to create comparable effect size estimates. Standardization is accomplished by determining, for each study, the average score for the intervention group, subtracting the average score for the control group, and dividing the result by the relevant measure of variability in that dataset.

In some cases, the results of certain studies must carry more significance than others. Larger studies, as measured by their sample sizes, are deemed to produce more precise estimates of effect size than smaller studies. Additionally, studies with less variability in data, such as smaller standard deviation or narrower confidence intervals, are typically regarded as higher quality in study design. A weighting statistic that aims to incorporate both of these factors, known as inverse variance, is commonly employed.

Step 5: Absolute Effect Estimation

The ultimate step in conducting a meta-analysis is to choose and utilize an appropriate model for comparing Effect Sizes among diverse studies. Two popular models for this purpose are the Fixed Effects and Random Effects models. The Fixed Effects model relies on the premise that each study is evaluating a common treatment effect, implying that all studies would have estimated the same Effect Size if sample variability were equal across all studies.

Conversely, the Random Effects model posits that the true treatment effects in individual studies may vary from each other, and endeavors to consider this additional source of interstudy variation in Effect Sizes. The existence and magnitude of this latter variability is usually evaluated within the meta-analysis through a test for ‘heterogeneity.’

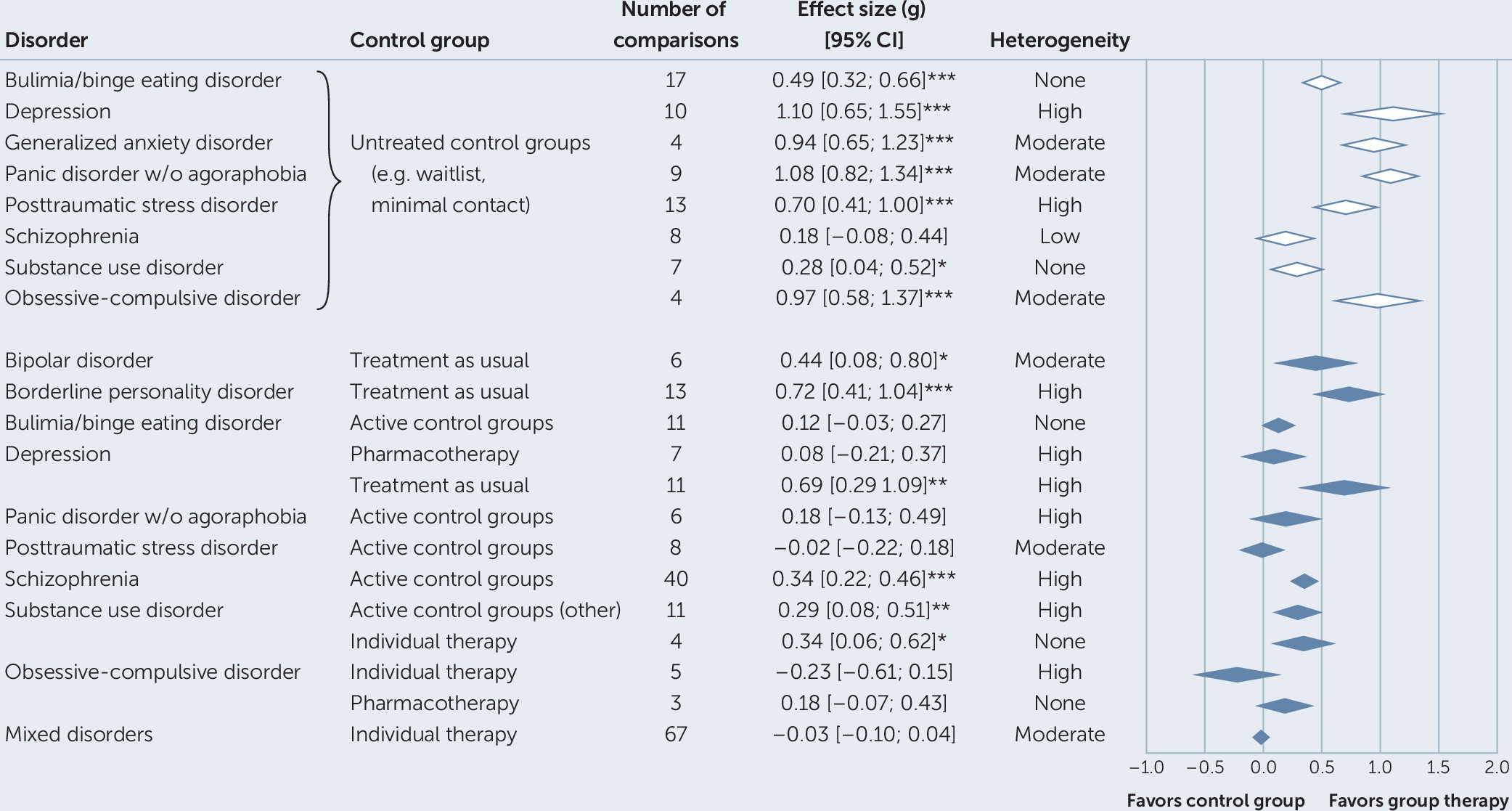

Forest Plot

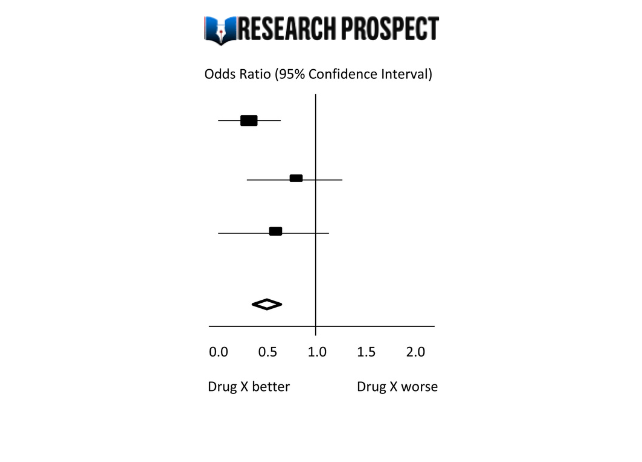

The results of a meta-analysis are often visually presented using a “Forest Plot”. This type of plot displays, for each study, included in the analysis, a horizontal line that indicates the standardized Effect Size estimate and 95% confidence interval for the risk ratio used. Figure A provides an example of a hypothetical Forest Plot in which drug X reduces the risk of death in all three studies.

However, the first study was larger than the other two, and as a result, the estimates for the smaller studies were not statistically significant. This is indicated by the lines emanating from their boxes, including the value of 1. The size of the boxes represents the relative weights assigned to each study by the meta-analysis. The combined estimate of the drug’s effect, represented by the diamond, provides a more precise estimate of the drug’s effect, with the diamond indicating both the combined risk ratio estimate and the 95% confidence interval limits.

Figure-A: Hypothetical Forest Plot

Relevance to Practice and Research

Evidence Based Nursing commentaries often include recently published systematic reviews and meta-analyses, as they can provide new insights and strengthen recommendations for effective healthcare practices. Additionally, they can identify gaps or limitations in current evidence and guide future research directions.

The quality of the data available for synthesis is a critical factor in the strength of conclusions drawn from meta-analyses, and this is influenced by the quality of individual studies and the systematic review itself. However, meta-analysis cannot overcome issues related to underpowered or poorly designed studies.

Therefore, clinicians may still encounter situations where the evidence is weak or uncertain, and where higher-quality research is required to improve clinical decision-making. While such findings can be frustrating, they remain important for informing practice and highlighting the need for further research to fill gaps in the evidence base.

Methods and Assumptions in Meta-Analysis

Ensuring the credibility of findings is imperative in all types of research, including meta-analyses. To validate the outcomes of a meta-analysis, the researcher must confirm that the research techniques used were accurate in measuring the intended variables. Typically, researchers establish the validity of a meta-analysis by testing the outcomes for homogeneity or the degree of similarity between the results of the combined studies.

Homogeneity is preferred in meta-analyses as it allows the data to be combined without needing adjustments to suit the study’s requirements. To determine homogeneity, researchers assess heterogeneity, the opposite of homogeneity. Two widely used statistical methods for evaluating heterogeneity in research results are Cochran’s-Q and I-Square, also known as I-2 Index.

Difference Between Meta-Analysis and Systematic Reviews

Meta-analysis and systematic reviews are both research methods used to synthesise evidence from multiple studies on a particular topic. However, there are some key differences between the two.

Systematic reviews involve a comprehensive and structured approach to identifying, selecting, and critically appraising all available evidence relevant to a specific research question. This process involves searching multiple databases, screening the identified studies for relevance and quality, and summarizing the findings in a narrative report.

Meta-analysis, on the other hand, involves using statistical methods to combine and analyze the data from multiple studies, with the aim of producing a quantitative summary of the overall effect size. Meta-analysis requires the studies to be similar enough in terms of their design, methodology, and outcome measures to allow for meaningful comparison and analysis.

Therefore, systematic reviews are broader in scope and summarize the findings of all studies on a topic, while meta-analyses are more focused on producing a quantitative estimate of the effect size of an intervention across multiple studies that meet certain criteria. In some cases, a systematic review may be conducted without a meta-analysis if the studies are too diverse or the quality of the data is not sufficient to allow for statistical pooling.

Software Packages For Meta-Analysis

Meta-analysis can be done through software packages, including free and paid options. One of the most commonly used software packages for meta-analysis is RevMan by the Cochrane Collaboration.

Assessing the Quality of Meta-Analysis

Assessing the quality of a meta-analysis involves evaluating the methods used to conduct the analysis and the quality of the studies included. Here are some key factors to consider:

- Study selection: The studies included in the meta-analysis should be relevant to the research question and meet predetermined criteria for quality.

- Search strategy: The search strategy should be comprehensive and transparent, including databases and search terms used to identify relevant studies.

- Study quality assessment: The quality of included studies should be assessed using appropriate tools, and this assessment should be reported in the meta-analysis.

- Data extraction: The data extraction process should be systematic and clearly reported, including any discrepancies that arose.

- Analysis methods: The meta-analysis should use appropriate statistical methods to combine the results of the included studies, and these methods should be transparently reported.

- Publication bias: The potential for publication bias should be assessed and reported in the meta-analysis, including any efforts to identify and include unpublished studies.

- Interpretation of results: The results should be interpreted in the context of the study limitations and the overall quality of the evidence.

- Sensitivity analysis: Sensitivity analysis should be conducted to evaluate the impact of study quality, inclusion criteria, and other factors on the overall results.

Overall, a high-quality meta-analysis should be transparent in its methods and clearly report the included studies’ limitations and the evidence’s overall quality.

Hire an Expert Writer

Orders completed by our expert writers are

- Formally drafted in an academic style

- Free Amendments and 100% Plagiarism Free – or your money back!

- 100% Confidential and Timely Delivery!

- Free anti-plagiarism report

- Appreciated by thousands of clients. Check client reviews

Examples of Meta-Analysis

- STANLEY T.D. et JARRELL S.B. (1989), « Meta-regression analysis : a quantitative method of literature surveys », Journal of Economics Surveys, vol. 3, n°2, pp. 161-170.

- DATTA D.K., PINCHES G.E. et NARAYANAN V.K. (1992), « Factors influencing wealth creation from mergers and acquisitions : a meta-analysis », Strategic Management Journal, Vol. 13, pp. 67-84.

- GLASS G. (1983), « Synthesising empirical research : Meta-analysis » in S.A. Ward and L.J. Reed (Eds), Knowledge structure and use : Implications for synthesis and interpretation, Philadelphia : Temple University Press.

- WOLF F.M. (1986), Meta-analysis : Quantitative methods for research synthesis, Sage University Paper n°59.

- HUNTER J.E., SCHMIDT F.L. et JACKSON G.B. (1982), « Meta-analysis : cumulating research findings across studies », Beverly Hills, CA : Sage.

Frequently Asked Questions

What is a meta-analysis in research.

Meta-analysis is a statistical method used to combine results from multiple studies on a specific topic. By pooling data from various sources, meta-analysis can provide a more precise estimate of the effect size of a treatment or intervention and identify areas for future research.

Why is meta-analysis important?

Meta-analysis is important because it combines and summarizes results from multiple studies to provide a more precise and reliable estimate of the effect of a treatment or intervention. This helps clinicians and policymakers make evidence-based decisions and identify areas for further research.

What is an example of a meta-analysis?

A meta-analysis of studies evaluating physical exercise’s effect on depression in adults is an example. Researchers gathered data from 49 studies involving a total of 2669 participants. The studies used different types of exercise and measures of depression, which made it difficult to compare the results.

Through meta-analysis, the researchers calculated an overall effect size and determined that exercise was associated with a statistically significant reduction in depression symptoms. The study also identified that moderate-intensity aerobic exercise, performed three to five times per week, was the most effective. The meta-analysis provided a more comprehensive understanding of the impact of exercise on depression than any single study could provide.

What is the definition of meta-analysis in clinical research?

Meta-analysis in clinical research is a statistical technique that combines data from multiple independent studies on a particular topic to generate a summary or “meta” estimate of the effect of a particular intervention or exposure.

This type of analysis allows researchers to synthesise the results of multiple studies, potentially increasing the statistical power and providing more precise estimates of treatment effects. Meta-analyses are commonly used in clinical research to evaluate the effectiveness and safety of medical interventions and to inform clinical practice guidelines.

Is meta-analysis qualitative or quantitative?

Meta-analysis is a quantitative method used to combine and analyze data from multiple studies. It involves the statistical synthesis of results from individual studies to obtain a pooled estimate of the effect size of a particular intervention or treatment. Therefore, meta-analysis is considered a quantitative approach to research synthesis.

You May Also Like

Descriptive research is carried out to describe current issues, programs, and provides information about the issue through surveys and various fact-finding methods.

A hypothesis is a research question that has to be proved correct or incorrect through hypothesis testing – a scientific approach to test a hypothesis.

Textual analysis is the method of analysing and understanding the text. We need to look carefully at the text to identify the writer’s context and message.

USEFUL LINKS

LEARNING RESOURCES

COMPANY DETAILS

- How It Works

- Open access

- Published: 01 August 2019

A step by step guide for conducting a systematic review and meta-analysis with simulation data

- Gehad Mohamed Tawfik 1 , 2 ,

- Kadek Agus Surya Dila 2 , 3 ,

- Muawia Yousif Fadlelmola Mohamed 2 , 4 ,

- Dao Ngoc Hien Tam 2 , 5 ,

- Nguyen Dang Kien 2 , 6 ,

- Ali Mahmoud Ahmed 2 , 7 &

- Nguyen Tien Huy 8 , 9 , 10

Tropical Medicine and Health volume 47 , Article number: 46 ( 2019 ) Cite this article

820k Accesses

316 Citations

94 Altmetric

Metrics details

The massive abundance of studies relating to tropical medicine and health has increased strikingly over the last few decades. In the field of tropical medicine and health, a well-conducted systematic review and meta-analysis (SR/MA) is considered a feasible solution for keeping clinicians abreast of current evidence-based medicine. Understanding of SR/MA steps is of paramount importance for its conduction. It is not easy to be done as there are obstacles that could face the researcher. To solve those hindrances, this methodology study aimed to provide a step-by-step approach mainly for beginners and junior researchers, in the field of tropical medicine and other health care fields, on how to properly conduct a SR/MA, in which all the steps here depicts our experience and expertise combined with the already well-known and accepted international guidance.

We suggest that all steps of SR/MA should be done independently by 2–3 reviewers’ discussion, to ensure data quality and accuracy.

SR/MA steps include the development of research question, forming criteria, search strategy, searching databases, protocol registration, title, abstract, full-text screening, manual searching, extracting data, quality assessment, data checking, statistical analysis, double data checking, and manuscript writing.

Introduction

The amount of studies published in the biomedical literature, especially tropical medicine and health, has increased strikingly over the last few decades. This massive abundance of literature makes clinical medicine increasingly complex, and knowledge from various researches is often needed to inform a particular clinical decision. However, available studies are often heterogeneous with regard to their design, operational quality, and subjects under study and may handle the research question in a different way, which adds to the complexity of evidence and conclusion synthesis [ 1 ].

Systematic review and meta-analyses (SR/MAs) have a high level of evidence as represented by the evidence-based pyramid. Therefore, a well-conducted SR/MA is considered a feasible solution in keeping health clinicians ahead regarding contemporary evidence-based medicine.

Differing from a systematic review, unsystematic narrative review tends to be descriptive, in which the authors select frequently articles based on their point of view which leads to its poor quality. A systematic review, on the other hand, is defined as a review using a systematic method to summarize evidence on questions with a detailed and comprehensive plan of study. Furthermore, despite the increasing guidelines for effectively conducting a systematic review, we found that basic steps often start from framing question, then identifying relevant work which consists of criteria development and search for articles, appraise the quality of included studies, summarize the evidence, and interpret the results [ 2 , 3 ]. However, those simple steps are not easy to be reached in reality. There are many troubles that a researcher could be struggled with which has no detailed indication.

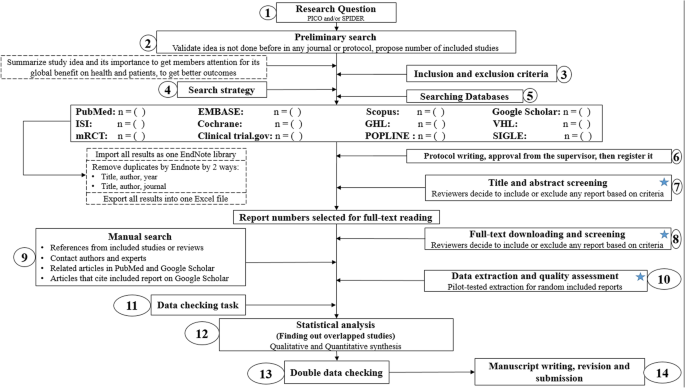

Conducting a SR/MA in tropical medicine and health may be difficult especially for young researchers; therefore, understanding of its essential steps is crucial. It is not easy to be done as there are obstacles that could face the researcher. To solve those hindrances, we recommend a flow diagram (Fig. 1 ) which illustrates a detailed and step-by-step the stages for SR/MA studies. This methodology study aimed to provide a step-by-step approach mainly for beginners and junior researchers, in the field of tropical medicine and other health care fields, on how to properly and succinctly conduct a SR/MA; all the steps here depicts our experience and expertise combined with the already well known and accepted international guidance.

Detailed flow diagram guideline for systematic review and meta-analysis steps. Note : Star icon refers to “2–3 reviewers screen independently”

Methods and results

Detailed steps for conducting any systematic review and meta-analysis.

We searched the methods reported in published SR/MA in tropical medicine and other healthcare fields besides the published guidelines like Cochrane guidelines {Higgins, 2011 #7} [ 4 ] to collect the best low-bias method for each step of SR/MA conduction steps. Furthermore, we used guidelines that we apply in studies for all SR/MA steps. We combined these methods in order to conclude and conduct a detailed flow diagram that shows the SR/MA steps how being conducted.

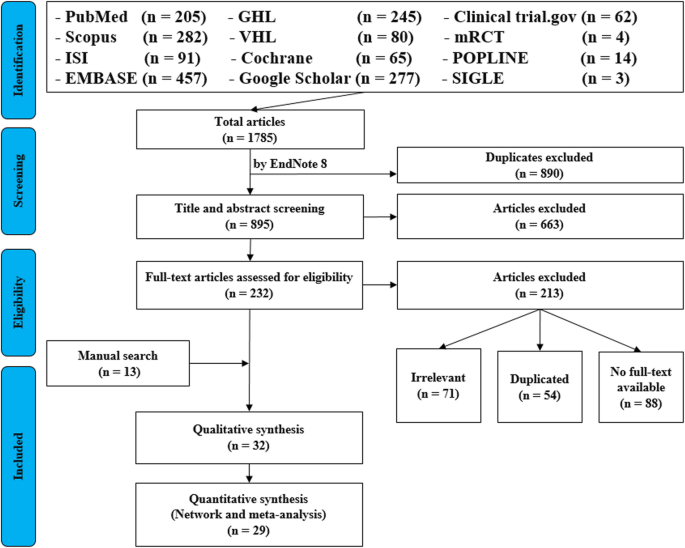

Any SR/MA must follow the widely accepted Preferred Reporting Items for Systematic Review and Meta-analysis statement (PRISMA checklist 2009) (Additional file 5 : Table S1) [ 5 ].

We proposed our methods according to a valid explanatory simulation example choosing the topic of “evaluating safety of Ebola vaccine,” as it is known that Ebola is a very rare tropical disease but fatal. All the explained methods feature the standards followed internationally, with our compiled experience in the conduct of SR beside it, which we think proved some validity. This is a SR under conduct by a couple of researchers teaming in a research group, moreover, as the outbreak of Ebola which took place (2013–2016) in Africa resulted in a significant mortality and morbidity. Furthermore, since there are many published and ongoing trials assessing the safety of Ebola vaccines, we thought this would provide a great opportunity to tackle this hotly debated issue. Moreover, Ebola started to fire again and new fatal outbreak appeared in the Democratic Republic of Congo since August 2018, which caused infection to more than 1000 people according to the World Health Organization, and 629 people have been killed till now. Hence, it is considered the second worst Ebola outbreak, after the first one in West Africa in 2014 , which infected more than 26,000 and killed about 11,300 people along outbreak course.

Research question and objectives

Like other study designs, the research question of SR/MA should be feasible, interesting, novel, ethical, and relevant. Therefore, a clear, logical, and well-defined research question should be formulated. Usually, two common tools are used: PICO or SPIDER. PICO (Population, Intervention, Comparison, Outcome) is used mostly in quantitative evidence synthesis. Authors demonstrated that PICO holds more sensitivity than the more specific SPIDER approach [ 6 ]. SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) was proposed as a method for qualitative and mixed methods search.

We here recommend a combined approach of using either one or both the SPIDER and PICO tools to retrieve a comprehensive search depending on time and resources limitations. When we apply this to our assumed research topic, being of qualitative nature, the use of SPIDER approach is more valid.

PICO is usually used for systematic review and meta-analysis of clinical trial study. For the observational study (without intervention or comparator), in many tropical and epidemiological questions, it is usually enough to use P (Patient) and O (outcome) only to formulate a research question. We must indicate clearly the population (P), then intervention (I) or exposure. Next, it is necessary to compare (C) the indicated intervention with other interventions, i.e., placebo. Finally, we need to clarify which are our relevant outcomes.

To facilitate comprehension, we choose the Ebola virus disease (EVD) as an example. Currently, the vaccine for EVD is being developed and under phase I, II, and III clinical trials; we want to know whether this vaccine is safe and can induce sufficient immunogenicity to the subjects.

An example of a research question for SR/MA based on PICO for this issue is as follows: How is the safety and immunogenicity of Ebola vaccine in human? (P: healthy subjects (human), I: vaccination, C: placebo, O: safety or adverse effects)

Preliminary research and idea validation

We recommend a preliminary search to identify relevant articles, ensure the validity of the proposed idea, avoid duplication of previously addressed questions, and assure that we have enough articles for conducting its analysis. Moreover, themes should focus on relevant and important health-care issues, consider global needs and values, reflect the current science, and be consistent with the adopted review methods. Gaining familiarity with a deep understanding of the study field through relevant videos and discussions is of paramount importance for better retrieval of results. If we ignore this step, our study could be canceled whenever we find out a similar study published before. This means we are wasting our time to deal with a problem that has been tackled for a long time.

To do this, we can start by doing a simple search in PubMed or Google Scholar with search terms Ebola AND vaccine. While doing this step, we identify a systematic review and meta-analysis of determinant factors influencing antibody response from vaccination of Ebola vaccine in non-human primate and human [ 7 ], which is a relevant paper to read to get a deeper insight and identify gaps for better formulation of our research question or purpose. We can still conduct systematic review and meta-analysis of Ebola vaccine because we evaluate safety as a different outcome and different population (only human).

Inclusion and exclusion criteria

Eligibility criteria are based on the PICO approach, study design, and date. Exclusion criteria mostly are unrelated, duplicated, unavailable full texts, or abstract-only papers. These exclusions should be stated in advance to refrain the researcher from bias. The inclusion criteria would be articles with the target patients, investigated interventions, or the comparison between two studied interventions. Briefly, it would be articles which contain information answering our research question. But the most important is that it should be clear and sufficient information, including positive or negative, to answer the question.

For the topic we have chosen, we can make inclusion criteria: (1) any clinical trial evaluating the safety of Ebola vaccine and (2) no restriction regarding country, patient age, race, gender, publication language, and date. Exclusion criteria are as follows: (1) study of Ebola vaccine in non-human subjects or in vitro studies; (2) study with data not reliably extracted, duplicate, or overlapping data; (3) abstract-only papers as preceding papers, conference, editorial, and author response theses and books; (4) articles without available full text available; and (5) case reports, case series, and systematic review studies. The PRISMA flow diagram template that is used in SR/MA studies can be found in Fig. 2 .

PRISMA flow diagram of studies’ screening and selection

Search strategy

A standard search strategy is used in PubMed, then later it is modified according to each specific database to get the best relevant results. The basic search strategy is built based on the research question formulation (i.e., PICO or PICOS). Search strategies are constructed to include free-text terms (e.g., in the title and abstract) and any appropriate subject indexing (e.g., MeSH) expected to retrieve eligible studies, with the help of an expert in the review topic field or an information specialist. Additionally, we advise not to use terms for the Outcomes as their inclusion might hinder the database being searched to retrieve eligible studies because the used outcome is not mentioned obviously in the articles.

The improvement of the search term is made while doing a trial search and looking for another relevant term within each concept from retrieved papers. To search for a clinical trial, we can use these descriptors in PubMed: “clinical trial”[Publication Type] OR “clinical trials as topic”[MeSH terms] OR “clinical trial”[All Fields]. After some rounds of trial and refinement of search term, we formulate the final search term for PubMed as follows: (ebola OR ebola virus OR ebola virus disease OR EVD) AND (vaccine OR vaccination OR vaccinated OR immunization) AND (“clinical trial”[Publication Type] OR “clinical trials as topic”[MeSH Terms] OR “clinical trial”[All Fields]). Because the study for this topic is limited, we do not include outcome term (safety and immunogenicity) in the search term to capture more studies.

Search databases, import all results to a library, and exporting to an excel sheet

According to the AMSTAR guidelines, at least two databases have to be searched in the SR/MA [ 8 ], but as you increase the number of searched databases, you get much yield and more accurate and comprehensive results. The ordering of the databases depends mostly on the review questions; being in a study of clinical trials, you will rely mostly on Cochrane, mRCTs, or International Clinical Trials Registry Platform (ICTRP). Here, we propose 12 databases (PubMed, Scopus, Web of Science, EMBASE, GHL, VHL, Cochrane, Google Scholar, Clinical trials.gov , mRCTs, POPLINE, and SIGLE), which help to cover almost all published articles in tropical medicine and other health-related fields. Among those databases, POPLINE focuses on reproductive health. Researchers should consider to choose relevant database according to the research topic. Some databases do not support the use of Boolean or quotation; otherwise, there are some databases that have special searching way. Therefore, we need to modify the initial search terms for each database to get appreciated results; therefore, manipulation guides for each online database searches are presented in Additional file 5 : Table S2. The detailed search strategy for each database is found in Additional file 5 : Table S3. The search term that we created in PubMed needs customization based on a specific characteristic of the database. An example for Google Scholar advanced search for our topic is as follows:

With all of the words: ebola virus

With at least one of the words: vaccine vaccination vaccinated immunization

Where my words occur: in the title of the article

With all of the words: EVD

Finally, all records are collected into one Endnote library in order to delete duplicates and then to it export into an excel sheet. Using remove duplicating function with two options is mandatory. All references which have (1) the same title and author, and published in the same year, and (2) the same title and author, and published in the same journal, would be deleted. References remaining after this step should be exported to an excel file with essential information for screening. These could be the authors’ names, publication year, journal, DOI, URL link, and abstract.

Protocol writing and registration

Protocol registration at an early stage guarantees transparency in the research process and protects from duplication problems. Besides, it is considered a documented proof of team plan of action, research question, eligibility criteria, intervention/exposure, quality assessment, and pre-analysis plan. It is recommended that researchers send it to the principal investigator (PI) to revise it, then upload it to registry sites. There are many registry sites available for SR/MA like those proposed by Cochrane and Campbell collaborations; however, we recommend registering the protocol into PROSPERO as it is easier. The layout of a protocol template, according to PROSPERO, can be found in Additional file 5 : File S1.

Title and abstract screening

Decisions to select retrieved articles for further assessment are based on eligibility criteria, to minimize the chance of including non-relevant articles. According to the Cochrane guidance, two reviewers are a must to do this step, but as for beginners and junior researchers, this might be tiresome; thus, we propose based on our experience that at least three reviewers should work independently to reduce the chance of error, particularly in teams with a large number of authors to add more scrutiny and ensure proper conduct. Mostly, the quality with three reviewers would be better than two, as two only would have different opinions from each other, so they cannot decide, while the third opinion is crucial. And here are some examples of systematic reviews which we conducted following the same strategy (by a different group of researchers in our research group) and published successfully, and they feature relevant ideas to tropical medicine and disease [ 9 , 10 , 11 ].

In this step, duplications will be removed manually whenever the reviewers find them out. When there is a doubt about an article decision, the team should be inclusive rather than exclusive, until the main leader or PI makes a decision after discussion and consensus. All excluded records should be given exclusion reasons.

Full text downloading and screening

Many search engines provide links for free to access full-text articles. In case not found, we can search in some research websites as ResearchGate, which offer an option of direct full-text request from authors. Additionally, exploring archives of wanted journals, or contacting PI to purchase it if available. Similarly, 2–3 reviewers work independently to decide about included full texts according to eligibility criteria, with reporting exclusion reasons of articles. In case any disagreement has occurred, the final decision has to be made by discussion.

Manual search

One has to exhaust all possibilities to reduce bias by performing an explicit hand-searching for retrieval of reports that may have been dropped from first search [ 12 ]. We apply five methods to make manual searching: searching references from included studies/reviews, contacting authors and experts, and looking at related articles/cited articles in PubMed and Google Scholar.

We describe here three consecutive methods to increase and refine the yield of manual searching: firstly, searching reference lists of included articles; secondly, performing what is known as citation tracking in which the reviewers track all the articles that cite each one of the included articles, and this might involve electronic searching of databases; and thirdly, similar to the citation tracking, we follow all “related to” or “similar” articles. Each of the abovementioned methods can be performed by 2–3 independent reviewers, and all the possible relevant article must undergo further scrutiny against the inclusion criteria, after following the same records yielded from electronic databases, i.e., title/abstract and full-text screening.

We propose an independent reviewing by assigning each member of the teams a “tag” and a distinct method, to compile all the results at the end for comparison of differences and discussion and to maximize the retrieval and minimize the bias. Similarly, the number of included articles has to be stated before addition to the overall included records.

Data extraction and quality assessment

This step entitles data collection from included full-texts in a structured extraction excel sheet, which is previously pilot-tested for extraction using some random studies. We recommend extracting both adjusted and non-adjusted data because it gives the most allowed confounding factor to be used in the analysis by pooling them later [ 13 ]. The process of extraction should be executed by 2–3 independent reviewers. Mostly, the sheet is classified into the study and patient characteristics, outcomes, and quality assessment (QA) tool.

Data presented in graphs should be extracted by software tools such as Web plot digitizer [ 14 ]. Most of the equations that can be used in extraction prior to analysis and estimation of standard deviation (SD) from other variables is found inside Additional file 5 : File S2 with their references as Hozo et al. [ 15 ], Xiang et al. [ 16 ], and Rijkom et al. [ 17 ]. A variety of tools are available for the QA, depending on the design: ROB-2 Cochrane tool for randomized controlled trials [ 18 ] which is presented as Additional file 1 : Figure S1 and Additional file 2 : Figure S2—from a previous published article data—[ 19 ], NIH tool for observational and cross-sectional studies [ 20 ], ROBINS-I tool for non-randomize trials [ 21 ], QUADAS-2 tool for diagnostic studies, QUIPS tool for prognostic studies, CARE tool for case reports, and ToxRtool for in vivo and in vitro studies. We recommend that 2–3 reviewers independently assess the quality of the studies and add to the data extraction form before the inclusion into the analysis to reduce the risk of bias. In the NIH tool for observational studies—cohort and cross-sectional—as in this EBOLA case, to evaluate the risk of bias, reviewers should rate each of the 14 items into dichotomous variables: yes, no, or not applicable. An overall score is calculated by adding all the items scores as yes equals one, while no and NA equals zero. A score will be given for every paper to classify them as poor, fair, or good conducted studies, where a score from 0–5 was considered poor, 6–9 as fair, and 10–14 as good.

In the EBOLA case example above, authors can extract the following information: name of authors, country of patients, year of publication, study design (case report, cohort study, or clinical trial or RCT), sample size, the infected point of time after EBOLA infection, follow-up interval after vaccination time, efficacy, safety, adverse effects after vaccinations, and QA sheet (Additional file 6 : Data S1).

Data checking

Due to the expected human error and bias, we recommend a data checking step, in which every included article is compared with its counterpart in an extraction sheet by evidence photos, to detect mistakes in data. We advise assigning articles to 2–3 independent reviewers, ideally not the ones who performed the extraction of those articles. When resources are limited, each reviewer is assigned a different article than the one he extracted in the previous stage.

Statistical analysis

Investigators use different methods for combining and summarizing findings of included studies. Before analysis, there is an important step called cleaning of data in the extraction sheet, where the analyst organizes extraction sheet data in a form that can be read by analytical software. The analysis consists of 2 types namely qualitative and quantitative analysis. Qualitative analysis mostly describes data in SR studies, while quantitative analysis consists of two main types: MA and network meta-analysis (NMA). Subgroup, sensitivity, cumulative analyses, and meta-regression are appropriate for testing whether the results are consistent or not and investigating the effect of certain confounders on the outcome and finding the best predictors. Publication bias should be assessed to investigate the presence of missing studies which can affect the summary.

To illustrate basic meta-analysis, we provide an imaginary data for the research question about Ebola vaccine safety (in terms of adverse events, 14 days after injection) and immunogenicity (Ebola virus antibodies rise in geometric mean titer, 6 months after injection). Assuming that from searching and data extraction, we decided to do an analysis to evaluate Ebola vaccine “A” safety and immunogenicity. Other Ebola vaccines were not meta-analyzed because of the limited number of studies (instead, it will be included for narrative review). The imaginary data for vaccine safety meta-analysis can be accessed in Additional file 7 : Data S2. To do the meta-analysis, we can use free software, such as RevMan [ 22 ] or R package meta [ 23 ]. In this example, we will use the R package meta. The tutorial of meta package can be accessed through “General Package for Meta-Analysis” tutorial pdf [ 23 ]. The R codes and its guidance for meta-analysis done can be found in Additional file 5 : File S3.

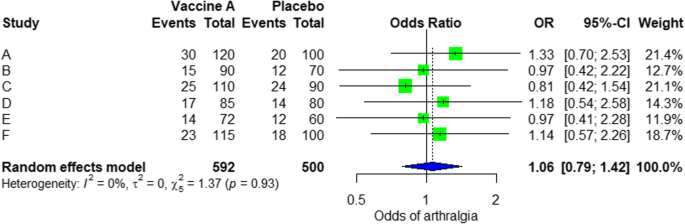

For the analysis, we assume that the study is heterogenous in nature; therefore, we choose a random effect model. We did an analysis on the safety of Ebola vaccine A. From the data table, we can see some adverse events occurring after intramuscular injection of vaccine A to the subject of the study. Suppose that we include six studies that fulfill our inclusion criteria. We can do a meta-analysis for each of the adverse events extracted from the studies, for example, arthralgia, from the results of random effect meta-analysis using the R meta package.

From the results shown in Additional file 3 : Figure S3, we can see that the odds ratio (OR) of arthralgia is 1.06 (0.79; 1.42), p value = 0.71, which means that there is no association between the intramuscular injection of Ebola vaccine A and arthralgia, as the OR is almost one, and besides, the P value is insignificant as it is > 0.05.

In the meta-analysis, we can also visualize the results in a forest plot. It is shown in Fig. 3 an example of a forest plot from the simulated analysis.

Random effect model forest plot for comparison of vaccine A versus placebo

From the forest plot, we can see six studies (A to F) and their respective OR (95% CI). The green box represents the effect size (in this case, OR) of each study. The bigger the box means the study weighted more (i.e., bigger sample size). The blue diamond shape represents the pooled OR of the six studies. We can see the blue diamond cross the vertical line OR = 1, which indicates no significance for the association as the diamond almost equalized in both sides. We can confirm this also from the 95% confidence interval that includes one and the p value > 0.05.

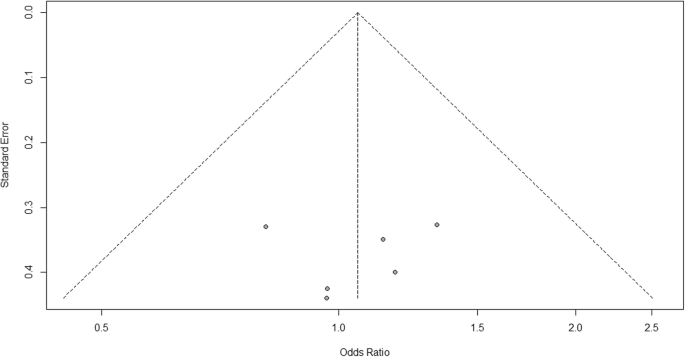

For heterogeneity, we see that I 2 = 0%, which means no heterogeneity is detected; the study is relatively homogenous (it is rare in the real study). To evaluate publication bias related to the meta-analysis of adverse events of arthralgia, we can use the metabias function from the R meta package (Additional file 4 : Figure S4) and visualization using a funnel plot. The results of publication bias are demonstrated in Fig. 4 . We see that the p value associated with this test is 0.74, indicating symmetry of the funnel plot. We can confirm it by looking at the funnel plot.

Publication bias funnel plot for comparison of vaccine A versus placebo

Looking at the funnel plot, the number of studies at the left and right side of the funnel plot is the same; therefore, the plot is symmetry, indicating no publication bias detected.

Sensitivity analysis is a procedure used to discover how different values of an independent variable will influence the significance of a particular dependent variable by removing one study from MA. If all included study p values are < 0.05, hence, removing any study will not change the significant association. It is only performed when there is a significant association, so if the p value of MA done is 0.7—more than one—the sensitivity analysis is not needed for this case study example. If there are 2 studies with p value > 0.05, removing any of the two studies will result in a loss of the significance.

Double data checking

For more assurance on the quality of results, the analyzed data should be rechecked from full-text data by evidence photos, to allow an obvious check for the PI of the study.

Manuscript writing, revision, and submission to a journal

Writing based on four scientific sections: introduction, methods, results, and discussion, mostly with a conclusion. Performing a characteristic table for study and patient characteristics is a mandatory step which can be found as a template in Additional file 5 : Table S3.

After finishing the manuscript writing, characteristics table, and PRISMA flow diagram, the team should send it to the PI to revise it well and reply to his comments and, finally, choose a suitable journal for the manuscript which fits with considerable impact factor and fitting field. We need to pay attention by reading the author guidelines of journals before submitting the manuscript.

The role of evidence-based medicine in biomedical research is rapidly growing. SR/MAs are also increasing in the medical literature. This paper has sought to provide a comprehensive approach to enable reviewers to produce high-quality SR/MAs. We hope that readers could gain general knowledge about how to conduct a SR/MA and have the confidence to perform one, although this kind of study requires complex steps compared to narrative reviews.

Having the basic steps for conduction of MA, there are many advanced steps that are applied for certain specific purposes. One of these steps is meta-regression which is performed to investigate the association of any confounder and the results of the MA. Furthermore, there are other types rather than the standard MA like NMA and MA. In NMA, we investigate the difference between several comparisons when there were not enough data to enable standard meta-analysis. It uses both direct and indirect comparisons to conclude what is the best between the competitors. On the other hand, mega MA or MA of patients tend to summarize the results of independent studies by using its individual subject data. As a more detailed analysis can be done, it is useful in conducting repeated measure analysis and time-to-event analysis. Moreover, it can perform analysis of variance and multiple regression analysis; however, it requires homogenous dataset and it is time-consuming in conduct [ 24 ].

Conclusions

Systematic review/meta-analysis steps include development of research question and its validation, forming criteria, search strategy, searching databases, importing all results to a library and exporting to an excel sheet, protocol writing and registration, title and abstract screening, full-text screening, manual searching, extracting data and assessing its quality, data checking, conducting statistical analysis, double data checking, manuscript writing, revising, and submitting to a journal.

Availability of data and materials

Not applicable.

Abbreviations

Network meta-analysis

Principal investigator

Population, Intervention, Comparison, Outcome

Preferred Reporting Items for Systematic Review and Meta-analysis statement

Quality assessment

Sample, Phenomenon of Interest, Design, Evaluation, Research type

Systematic review and meta-analyses

Bello A, Wiebe N, Garg A, Tonelli M. Evidence-based decision-making 2: systematic reviews and meta-analysis. Methods Mol Biol (Clifton, NJ). 2015;1281:397–416.

Article Google Scholar

Khan KS, Kunz R, Kleijnen J, Antes G. Five steps to conducting a systematic review. J R Soc Med. 2003;96(3):118–21.

Rys P, Wladysiuk M, Skrzekowska-Baran I, Malecki MT. Review articles, systematic reviews and meta-analyses: which can be trusted? Polskie Archiwum Medycyny Wewnetrznej. 2009;119(3):148–56.

PubMed Google Scholar

Higgins JPT, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. 2011.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009;339:b2535.

Methley AM, Campbell S, Chew-Graham C, McNally R, Cheraghi-Sohi S. PICO, PICOS and SPIDER: a comparison study of specificity and sensitivity in three search tools for qualitative systematic reviews. BMC Health Serv Res. 2014;14:579.

Gross L, Lhomme E, Pasin C, Richert L, Thiebaut R. Ebola vaccine development: systematic review of pre-clinical and clinical studies, and meta-analysis of determinants of antibody response variability after vaccination. Int J Infect Dis. 2018;74:83–96.

Article CAS Google Scholar

Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, ... Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358:j4008.

Giang HTN, Banno K, Minh LHN, Trinh LT, Loc LT, Eltobgy A, et al. Dengue hemophagocytic syndrome: a systematic review and meta-analysis on epidemiology, clinical signs, outcomes, and risk factors. Rev Med Virol. 2018;28(6):e2005.

Morra ME, Altibi AMA, Iqtadar S, Minh LHN, Elawady SS, Hallab A, et al. Definitions for warning signs and signs of severe dengue according to the WHO 2009 classification: systematic review of literature. Rev Med Virol. 2018;28(4):e1979.

Morra ME, Van Thanh L, Kamel MG, Ghazy AA, Altibi AMA, Dat LM, et al. Clinical outcomes of current medical approaches for Middle East respiratory syndrome: a systematic review and meta-analysis. Rev Med Virol. 2018;28(3):e1977.

Vassar M, Atakpo P, Kash MJ. Manual search approaches used by systematic reviewers in dermatology. Journal of the Medical Library Association: JMLA. 2016;104(4):302.

Naunheim MR, Remenschneider AK, Scangas GA, Bunting GW, Deschler DG. The effect of initial tracheoesophageal voice prosthesis size on postoperative complications and voice outcomes. Ann Otol Rhinol Laryngol. 2016;125(6):478–84.

Rohatgi AJaiWa. Web Plot Digitizer. ht tp. 2014;2.

Hozo SP, Djulbegovic B, Hozo I. Estimating the mean and variance from the median, range, and the size of a sample. BMC Med Res Methodol. 2005;5(1):13.

Wan X, Wang W, Liu J, Tong T. Estimating the sample mean and standard deviation from the sample size, median, range and/or interquartile range. BMC Med Res Methodol. 2014;14(1):135.

Van Rijkom HM, Truin GJ, Van’t Hof MA. A meta-analysis of clinical studies on the caries-inhibiting effect of fluoride gel treatment. Carries Res. 1998;32(2):83–92.

Higgins JP, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD, et al. The Cochrane Collaboration's tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Tawfik GM, Tieu TM, Ghozy S, Makram OM, Samuel P, Abdelaal A, et al. Speech efficacy, safety and factors affecting lifetime of voice prostheses in patients with laryngeal cancer: a systematic review and network meta-analysis of randomized controlled trials. J Clin Oncol. 2018;36(15_suppl):e18031-e.

Wannemuehler TJ, Lobo BC, Johnson JD, Deig CR, Ting JY, Gregory RL. Vibratory stimulus reduces in vitro biofilm formation on tracheoesophageal voice prostheses. Laryngoscope. 2016;126(12):2752–7.

Sterne JAC, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355.

RevMan The Cochrane Collaboration %J Copenhagen TNCCTCC. Review Manager (RevMan). 5.0. 2008.

Schwarzer GJRn. meta: An R package for meta-analysis. 2007;7(3):40-45.

Google Scholar

Simms LLH. Meta-analysis versus mega-analysis: is there a difference? Oral budesonide for the maintenance of remission in Crohn’s disease: Faculty of Graduate Studies, University of Western Ontario; 1998.

Download references

Acknowledgements

This study was conducted (in part) at the Joint Usage/Research Center on Tropical Disease, Institute of Tropical Medicine, Nagasaki University, Japan.

Author information

Authors and affiliations.

Faculty of Medicine, Ain Shams University, Cairo, Egypt

Gehad Mohamed Tawfik

Online research Club http://www.onlineresearchclub.org/

Gehad Mohamed Tawfik, Kadek Agus Surya Dila, Muawia Yousif Fadlelmola Mohamed, Dao Ngoc Hien Tam, Nguyen Dang Kien & Ali Mahmoud Ahmed

Pratama Giri Emas Hospital, Singaraja-Amlapura street, Giri Emas village, Sawan subdistrict, Singaraja City, Buleleng, Bali, 81171, Indonesia

Kadek Agus Surya Dila

Faculty of Medicine, University of Khartoum, Khartoum, Sudan

Muawia Yousif Fadlelmola Mohamed

Nanogen Pharmaceutical Biotechnology Joint Stock Company, Ho Chi Minh City, Vietnam

Dao Ngoc Hien Tam

Department of Obstetrics and Gynecology, Thai Binh University of Medicine and Pharmacy, Thai Binh, Vietnam

Nguyen Dang Kien

Faculty of Medicine, Al-Azhar University, Cairo, Egypt

Ali Mahmoud Ahmed

Evidence Based Medicine Research Group & Faculty of Applied Sciences, Ton Duc Thang University, Ho Chi Minh City, 70000, Vietnam

Nguyen Tien Huy

Faculty of Applied Sciences, Ton Duc Thang University, Ho Chi Minh City, 70000, Vietnam

Department of Clinical Product Development, Institute of Tropical Medicine (NEKKEN), Leading Graduate School Program, and Graduate School of Biomedical Sciences, Nagasaki University, 1-12-4 Sakamoto, Nagasaki, 852-8523, Japan

You can also search for this author in PubMed Google Scholar

Contributions

NTH and GMT were responsible for the idea and its design. The figure was done by GMT. All authors contributed to the manuscript writing and approval of the final version.

Corresponding author

Correspondence to Nguyen Tien Huy .

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

The authors declare that they have no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:.

Figure S1. Risk of bias assessment graph of included randomized controlled trials. (TIF 20 kb)

Additional file 2:

Figure S2. Risk of bias assessment summary. (TIF 69 kb)

Additional file 3:

Figure S3. Arthralgia results of random effect meta-analysis using R meta package. (TIF 20 kb)

Additional file 4:

Figure S4. Arthralgia linear regression test of funnel plot asymmetry using R meta package. (TIF 13 kb)

Additional file 5:

Table S1. PRISMA 2009 Checklist. Table S2. Manipulation guides for online database searches. Table S3. Detailed search strategy for twelve database searches. Table S4. Baseline characteristics of the patients in the included studies. File S1. PROSPERO protocol template file. File S2. Extraction equations that can be used prior to analysis to get missed variables. File S3. R codes and its guidance for meta-analysis done for comparison between EBOLA vaccine A and placebo. (DOCX 49 kb)

Additional file 6:

Data S1. Extraction and quality assessment data sheets for EBOLA case example. (XLSX 1368 kb)

Additional file 7:

Data S2. Imaginary data for EBOLA case example. (XLSX 10 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

Reprints and permissions

About this article

Cite this article.

Tawfik, G.M., Dila, K.A.S., Mohamed, M.Y.F. et al. A step by step guide for conducting a systematic review and meta-analysis with simulation data. Trop Med Health 47 , 46 (2019). https://doi.org/10.1186/s41182-019-0165-6

Download citation

Received : 30 January 2019

Accepted : 24 May 2019

Published : 01 August 2019

DOI : https://doi.org/10.1186/s41182-019-0165-6

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

Tropical Medicine and Health

ISSN: 1349-4147

- Submission enquiries: Access here and click Contact Us

- General enquiries: [email protected]

Systematic Reviews and Meta Analysis

- Getting Started

- Guides and Standards

- Review Protocols

- Databases and Sources

- Randomized Controlled Trials

- Controlled Clinical Trials

- Observational Designs

- Tests of Diagnostic Accuracy

- Software and Tools

- Where do I get all those articles?

- Collaborations

- EPI 233/528

- Countway Mediated Search

- Risk of Bias (RoB)

Systematic review Q & A

What is a systematic review.

A systematic review is guided filtering and synthesis of all available evidence addressing a specific, focused research question, generally about a specific intervention or exposure. The use of standardized, systematic methods and pre-selected eligibility criteria reduce the risk of bias in identifying, selecting and analyzing relevant studies. A well-designed systematic review includes clear objectives, pre-selected criteria for identifying eligible studies, an explicit methodology, a thorough and reproducible search of the literature, an assessment of the validity or risk of bias of each included study, and a systematic synthesis, analysis and presentation of the findings of the included studies. A systematic review may include a meta-analysis.

For details about carrying out systematic reviews, see the Guides and Standards section of this guide.

Is my research topic appropriate for systematic review methods?

A systematic review is best deployed to test a specific hypothesis about a healthcare or public health intervention or exposure. By focusing on a single intervention or a few specific interventions for a particular condition, the investigator can ensure a manageable results set. Moreover, examining a single or small set of related interventions, exposures, or outcomes, will simplify the assessment of studies and the synthesis of the findings.

Systematic reviews are poor tools for hypothesis generation: for instance, to determine what interventions have been used to increase the awareness and acceptability of a vaccine or to investigate the ways that predictive analytics have been used in health care management. In the first case, we don't know what interventions to search for and so have to screen all the articles about awareness and acceptability. In the second, there is no agreed on set of methods that make up predictive analytics, and health care management is far too broad. The search will necessarily be incomplete, vague and very large all at the same time. In most cases, reviews without clearly and exactly specified populations, interventions, exposures, and outcomes will produce results sets that quickly outstrip the resources of a small team and offer no consistent way to assess and synthesize findings from the studies that are identified.

If not a systematic review, then what?

You might consider performing a scoping review . This framework allows iterative searching over a reduced number of data sources and no requirement to assess individual studies for risk of bias. The framework includes built-in mechanisms to adjust the analysis as the work progresses and more is learned about the topic. A scoping review won't help you limit the number of records you'll need to screen (broad questions lead to large results sets) but may give you means of dealing with a large set of results.

This tool can help you decide what kind of review is right for your question.

Can my student complete a systematic review during her summer project?

Probably not. Systematic reviews are a lot of work. Including creating the protocol, building and running a quality search, collecting all the papers, evaluating the studies that meet the inclusion criteria and extracting and analyzing the summary data, a well done review can require dozens to hundreds of hours of work that can span several months. Moreover, a systematic review requires subject expertise, statistical support and a librarian to help design and run the search. Be aware that librarians sometimes have queues for their search time. It may take several weeks to complete and run a search. Moreover, all guidelines for carrying out systematic reviews recommend that at least two subject experts screen the studies identified in the search. The first round of screening can consume 1 hour per screener for every 100-200 records. A systematic review is a labor-intensive team effort.

How can I know if my topic has been been reviewed already?

Before starting out on a systematic review, check to see if someone has done it already. In PubMed you can use the systematic review subset to limit to a broad group of papers that is enriched for systematic reviews. You can invoke the subset by selecting if from the Article Types filters to the left of your PubMed results, or you can append AND systematic[sb] to your search. For example:

"neoadjuvant chemotherapy" AND systematic[sb]

The systematic review subset is very noisy, however. To quickly focus on systematic reviews (knowing that you may be missing some), simply search for the word systematic in the title:

"neoadjuvant chemotherapy" AND systematic[ti]

Any PRISMA-compliant systematic review will be captured by this method since including the words "systematic review" in the title is a requirement of the PRISMA checklist. Cochrane systematic reviews do not include 'systematic' in the title, however. It's worth checking the Cochrane Database of Systematic Reviews independently.

You can also search for protocols that will indicate that another group has set out on a similar project. Many investigators will register their protocols in PROSPERO , a registry of review protocols. Other published protocols as well as Cochrane Review protocols appear in the Cochrane Methodology Register, a part of the Cochrane Library .

- Next: Guides and Standards >>

- Last Updated: Feb 26, 2024 3:17 PM

- URL: https://guides.library.harvard.edu/meta-analysis

| | |

- Open access

- Published: 03 March 2017

Meta-evaluation of meta-analysis: ten appraisal questions for biologists

- Shinichi Nakagawa 1 , 2 ,

- Daniel W. A. Noble 1 ,

- Alistair M. Senior 3 , 4 &

- Malgorzata Lagisz 1

BMC Biology volume 15 , Article number: 18 ( 2017 ) Cite this article

43k Accesses

329 Citations

97 Altmetric

Metrics details

Meta-analysis is a statistical procedure for analyzing the combined data from different studies, and can be a major source of concise up-to-date information. The overall conclusions of a meta-analysis, however, depend heavily on the quality of the meta-analytic process, and an appropriate evaluation of the quality of meta-analysis (meta-evaluation) can be challenging. We outline ten questions biologists can ask to critically appraise a meta-analysis. These questions could also act as simple and accessible guidelines for the authors of meta-analyses. We focus on meta-analyses using non-human species, which we term ‘biological’ meta-analysis. Our ten questions are aimed at enabling a biologist to evaluate whether a biological meta-analysis embodies ‘mega-enlightenment’, a ‘mega-mistake’, or something in between.

Meta-analyses can be important and informative, but are they all?

Last year saw 40 years since the coining of the term ‘meta-analysis’ by Gene Glass in 1976 [ 1 , 2 ]. Meta-analyses, in which data from multiple studies are combined to evaluate an overall effect, or effect size, were first introduced to the medical and social sciences, where humans are the main species of interest [ 3 , 4 , 5 ]. Decades later, meta-analysis has infiltrated different areas of biological sciences [ 6 ], including ecology, evolutionary biology, conservation biology, and physiology. Here non-human species, or even ecosystems, are the main focus [ 7 , 8 , 9 , 10 , 11 , 12 ]. Despite this somewhat later arrival, interest in meta-analysis has been rapidly increasing in biological sciences. We have argued that the remarkable surge in interest over the last several years may indicate that meta-analysis is superseding traditional (narrative) reviews as a more objective and informative way of summarizing biological topics [ 8 ].

It is likely that the majority of us (biologists) have never conducted a meta-analysis. Chances are, however, that almost all of us have read at least one. Meta-analysis can not only provide quantitative information (such as overall effects and consistency among studies), but also qualitative information (such as dominant research trends and current knowledge gaps). In contrast to that of many medical and social scientists [ 3 , 5 ], the training of a biologist does not typically include meta-analysis [ 13 ] and, consequently, it may be difficult for a biologist to evaluate and interpret a meta-analysis. As with original research studies, the quality of meta-analyses vary immensely. For example, recent reviews have revealed that many meta-analyses in ecology and evolution miss, or perform poorly, several critical steps that are routinely implemented in the medical and social sciences [ 14 , 15 ] (but also see [ 16 , 17 ]).

The aim of this review is to provide ten appraisal questions that one should ask when reading a meta-analysis (cf., [ 18 , 19 ]), although these questions could also be used as simple and accessible guidelines for researchers conducting meta-analyses. In this review, we only deal with ‘narrow sense’ or ‘formal’ meta-analyses, where a statistical model is used to combine common effect sizes across studies, and the model takes into account sampling error, which is a function of sample size upon which each effect size is based (more details below; for discussions on the definitions of meta-analysis, see [ 15 , 20 , 21 ]). Further, our emphasis is on ‘biological’ meta-analyses, which deal with non-human species, including model organisms (nematodes, fruit flies, mice, and rats [ 22 ]) and non-model organisms, multiple species, or even entire ecosystems. For medical and social science meta-analyses concerning human subjects, large bodies of literature and excellent guidelines already exist, especially from overseeing organizations such as the Cochrane (Collaboration) and the Campbell Collaboration. We refer to the literature and the practices from these ‘experienced’ disciplines where appropriate. An overview and roadmap of this review is presented in Fig. 1 . Clearly, we cannot cover all details, but we cite key references in each section so that interested readers can follow up.

Mapping the process (on the left ) and main evaluation questions (on the right ) for meta-analysis. References to the relevant figures (Figs. 2 , 3 , 4 , 5 and 6 ) are included in the blue ovals

Q1: Is the search systematic and transparently documented?

When we read a biological meta-analysis, it used to be (and probably still is) common to see a statement like “a comprehensive search of the literature was conducted” without mention of the date and type of databases the authors searched. Documentation on keyword strings and inclusion criteria is often also very poor, making replication of search outcomes difficult or impossible. Superficial documentation also makes it hard to tell whether the search really was comprehensive, and, more importantly, systematic.

A comprehensive search attempts to identify (almost) all relevant studies/data for a given meta-analysis, and would thus not only include multiple major databases for finding published studies, but also make use of various lesser-known databases to locate reports and unpublished studies. Despite the common belief that search results should be similar among major databases, overlaps can sometimes be only moderate. For example, overlap in search results between Web of Science and Scopus (two of the most popular academic databases) is only 40–50% in many major fields [ 23 ]. As well as reading that a search is comprehensive, it is not uncommon to read that a search was systematic. A systematic search needs to follow a set of pre-determined protocols aimed at minimizing bias in the resulting data set. For example, a search of a single database, with pre-defined focal questions, search strings, and inclusion/exclusion criteria, can be considered systematic, negating some bias, though not necessarily being comprehensive. It is notable that a comprehensive search is preferable but not necessary (and often very difficult to do) whereas a systematic search is a must [ 24 ].

For most meta-analyses in medicine and social sciences, the search steps are systematic and well documented for reproducibility. This is because these studies follow a protocol named the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) statement [ 25 , 26 ]; note that a meta-analysis should usually be a part of a systematic review, although a systematic review may or may not include meta-analysis. The PRISMA statement facilitates transparency in reporting meta-analytic studies. Although it was developed for health sciences, we believe that the details of the four key elements of the PRISMA flow diagram (‘identification’, ‘screening’, ‘eligibility’, and ‘included’) should also be reported in a biological meta-analysis [ 8 ]. Figure 2 shows: A) the key ideas of the PRISMA statement, which the reader should compare with the content of a biological meta-analysis; and B) an example of a PRISMA diagram, which should be included as part of meta-analysis documentation. The bottom line is that one should assess whether search and screening procedures are reproducible and systematic (if not comprehensive; to minimize potential bias), given what is described in the meta-analytic paper [ 27 , 28 ].

Preferred Reporting Items for Systematic Reviews and Meta-Analyses. (PRISMA). a The main components of a systematic review or meta-analysis. The data search (identification) stage should, ideally, be preceded by the development of a detailed study protocol and its preregistration. Searching at least two literature databases, along with other sources of published and unpublished studies (using backward and forward citations, reviews, field experts, own data, grey and non-English literature) is recommended. It is also necessary to report search dates and exact keyword strings. The screening and eligibility stage should be based on a set of predefined study inclusion and exclusion criteria. Criteria might differ for the initial screening (title, abstract) compared with the full-text screening, but both need to be reported in detail. It is good practice to have at least two people involved in screening, with a plan in place for disagreement resolution and calculating disagreement rates. It is recommended that the list of studies excluded at the full-text screening stage, with reasons for their exclusion, is reported. It is also necessary to include a full list of studies included in the final dataset, with their basic characteristics. The extraction and coding (included) stage may also be performed by at least two people (as is recommended in medical meta-analysis). The authors should record the figures, tables, or text fragments within each paper from which the data were extracted, as well as report intermediate calculations, transformations, simplifications, and assumptions made during data extraction. These details make tracing mistakes easier and improve reproducibility. Documentation should include: a summary of the dataset, information on data and study details requested from authors, details of software used, and code for analyses (if applicable). b It is now becoming compulsory to present a PRISMA diagram, which records the flow of information starting from the data search and leading to the final data set. WoS Web of Science

Q2: What question and what effect size?

A meta-analysis should not just be descriptive. The best meta-analyses ask questions or test hypotheses, as is the case with original research. The meta-analytic questions and hypotheses addressed will generally determine the types of effect size statistics the authors use [ 29 , 30 , 31 , 32 ], as we explain below. Three broad groups of effect size statistics are based on are: 1) the difference between the means of two groups (for example, control versus treatment); 2) the relationship, or correlation, between two variables; and 3) the incidence of two outcomes (for example, dead or alive) in two groups (often represented in a 2 by 2 contingency table); see [ 3 , 7 ] for comprehensive lists of effect size statistics. Corresponding common effect size statistics are: 1) standardized mean difference (SMD; often referred to as d , Cohen’s d , Hedges’ d or Hedges’ g ) and the natural logarithm (log) of the response ratio (denoted as either ln R or ln RR [ 33 ]); 2) Fisher’s z -transformed correlation coefficient (often denoted as Zr ); and 3) the natural logarithm of the odds ratio (ln OR ) and relative risk (ln RR ; not to be confused with the response ratio).

We have also used and developed methods associated with less common effect size statistics such as log hazard ratio (ln HR ) for comparing survival curves [ 34 , 35 , 36 , 37 ], and also the log coefficient of variation ratio (ln CVR ) for comparing differences between the variances, rather than means, of two groups [ 38 , 39 , 40 ]. It is important to assess whether a study used an appropriate effect size statistic for the focal question. For example, when the authors are interested in the effect of a certain treatment, they should typically use SMD or response ratio, rather than Zr . Most biological meta-analyses will use one of the standardized effect sizes mentioned above. These effect sizes are referred to as standardized because they are unit-less (dimension-less), and thus are comparable across studies, even if those studies use different units for reporting (for example, size can be measured by weight [g] or length [cm]). However, unstandardized effect sizes (raw mean difference or regression coefficients) can be used, as happens in medical and social sciences, when all studies use common and directly comparable units (for example, blood pressure [mmHg]).

That being said, a biological meta-analysis will often bring together original studies of different types (such as combinations of experimental and observational studies). As a general rule, SMD is considered a better fit for experimental studies, whereas Zr is better for observational (correlational) studies. In some cases different effect sizes might be calculated for different studies in a meta-analysis and then be converted to a common type prior to analysis: for example, Zr and SMD (and also ln OR ) are inter-convertible. Thus, if we were, for example, interested in the effect of temperature on growth, we could combine results from experimental studies that compare mean growth at two temperatures (SMD) with results from observational studies that compare growth across a temperature gradient ( Zr ) in a single meta-analysis by transforming SMD from experimental studies to Zr [ 29 , 30 , 31 , 32 ].

Q3: Is non-independence taken into account?