Test faster, fix more

Most testing is ineffective

Normal “automated” software testing is surprisingly manual. Every scenario the computer runs, someone had to write by hand. Hypothesis can fix this.

Hypothesis is a new generation of tools for automating your testing process. It combines human understanding of your problem domain with machine intelligence to improve the quality of your testing process while spending less time writing tests.

Don’t believe us? Here’s what some of our users have to say:

At Lyst we've used it in a wide variety of situations, from testing APIs to machine learning algorithms and in all cases it's given us a great deal more confidence in that code. Alex Stapleton , Lead Backend Engineer at Lyst

When it comes to validating the correctness of your tools, nothing comes close to the thoroughness and power of Hypothesis. Cory Benfield , Open source Python developer

Hypothesis has been brilliant for expanding the coverage of our test cases, and also for making them much easier to read and understand, so we’re sure we’re testing the things we want in the way we want. Kristian Glass , Director of Technology at LaterPay

Hypothesis has located real defects in our code which went undetected by traditional test cases, simply because Hypothesis is more relentlessly devious about test case generation than us mere humans! Rob Smallshire , Sixty North

See more at our testimonials page .

What is Hypothesis?

Hypothesis is a modern implementation of property based testing , designed from the ground up for mainstream languages.

Hypothesis runs your tests against a much wider range of scenarios than a human tester could, finding edge cases in your code that you would otherwise have missed. It then reduces each failure to a simple, easy to understand example, which saves you time and money compared to fixing the same bug after it has slipped through the cracks and a user has run into it.

Hypothesis currently has a fully featured open source Python implementation and a proof of concept Java implementation that we are looking for customers to partner with to turn into a finished project. Plans for C and C++ support are also in the works.

How do I use it?

Hypothesis integrates into your normal testing workflow. Getting started is as simple as installing a library and writing some code using it - no new services to run, no new test runners to learn.

Right now only the Python version of Hypothesis is production ready. To get started with it, check out the documentation or read some of the introductory articles here on this site .

Once you’ve got started, or if you have a large number of people who want to get started all at once, you may wish to engage our training services .

If you still want to know more, sign up to our newsletter to get an email every 1-2 weeks about the latest and greatest Hypothesis developments and how to test your software better.

Why Create hypothesis-auto?


hypothesis-auto is an extension for the Hypothesis project that enables fully automatic tests for type annotated functions.

Key Features:

- Type Annotation Powered : Utilize your function's existing type annotations to build dozens of test cases automatically.

- Low Barrier : Start utilizing property-based testing in the lowest barrier way possible. Just run auto_test(FUNCTION) to run dozens of tests.

- pytest Compatible : Like Hypothesis itself, hypothesis-auto has built-in compatibility with the popular pytest testing framework. This means that you can turn your automatically generated tests into individual pytest test cases with one line.

- Scales Up : As you find yourself needing to customize your auto_test cases, you can easily utilize all the features of Hypothesis , including custom strategies per parameter.

Installation:

To get started, install hypothesis-auto into your project's virtual environment:

pip3 install hypothesis-auto

poetry add hypothesis-auto

pipenv install hypothesis-auto

Usage Examples:

In old usage examples you will see _ prefixed parameters like _auto_verify= . This was done to avoid conflicting with existing function parameters. Based on community feedback, the project switched to _ suffixes, such as auto_verify_= , to keep the likelihood of conflicting low while avoiding the connotation of private parameters.

Framework independent usage

The framework independent examples cover basic auto_test usage, adding an allowed exception, and using auto_test with a custom verification method.

Custom verification methods should take a single Scenario and raise an exception to signify errors.

For the full set of parameters you can pass into auto_test, see its API reference documentation .

pytest usage

The pytest examples cover using auto_pytest_magic to auto-generate dozens of pytest test cases, using auto_pytest to run dozens of test cases within a temporary directory, and using auto_pytest_magic with a custom verification method.

For the full reference of the pytest integration API see the API reference documentation .

I wanted a no/low resistance way to start incorporating property-based tests across my projects. Such a solution that also encouraged the use of type hints was a win/win for me.

I hope you too find hypothesis-auto useful!

~Timothy Crosley

Data Science for Psychologists

79 Notes on hypothesis testing

79.1 Hypothesis testing for a single proportion

79.1.1 Case study: organ donors

Organ donors may seek the assistance of a medical consultant to help them navigate the surgical process. The consultant’s goal is to minimize any potential complications during the procedure and recovery. Patients may choose a consultant based on their past clients’ complication rates.

A consultant marketed her services by highlighting her exceptional track record. She stated that while the average complication rate for liver donor surgeries in the US is 10%, only 3 out of 62 surgeries she facilitated resulted in complications. She believes this rate demonstrates the significant impact of her work in reducing complications, making her a top choice for potential patients.

79.1.1.1 Data

79.1.2 Parameter vs. statistic

A parameter for a hypothesis test is the “true” value of interest. We typically estimate the parameter using a sample statistic as a point estimate .

\(p~\) : true rate of complication

\(\hat{p}~\) : rate of complication in the sample = \(\frac{3}{62}\) = 0.048

79.1.3 Correlation vs. causation

Is it possible to assess the consultant’s claim using the data?

No. The claim is that there is a causal connection, but the data are observational. For example, maybe patients who can afford a medical consultant can afford better medical care, which can also lead to a lower complication rate. Although it is not possible to assess the causal claim, it is still possible to test for an association using these data. The question to consider is, is the low complication rate of 3 out of 62 surgeries ( \(\hat{p}\) = 0.048) simply due to chance?

79.1.4 Two claims

- Null hypothesis: “There is nothing going on”

Complication rate for this consultant is no different than the US average of 10%

- Alternative hypothesis: “There is something going on”

Complication rate for this consultant is lower than the US average of 10%

79.1.5 Hypothesis testing as a court trial

Null hypothesis , \(H_0\) : Defendant is innocent

Alternative hypothesis , \(H_A\) : Defendant is guilty

Present the evidence: Collect data

Judge the evidence: “Could these data plausibly have happened by chance if the null hypothesis were true?”

- Yes: Fail to reject \(H_0\)

- No: Reject \(H_0\)

79.1.6 Hypothesis testing framework

Start with a null hypothesis, \(H_0\) , that represents the status quo

Set an alternative hypothesis, \(H_A\) , that represents the research question, i.e. what we’re testing for

Conduct a hypothesis test under the assumption that the null hypothesis is true and calculate a p-value (probability of observed or more extreme outcome given that the null hypothesis is true)

- if the test results suggest that the data do not provide convincing evidence for the alternative hypothesis, stick with the null hypothesis

- if they do, then reject the null hypothesis in favor of the alternative

79.1.7 Setting the hypotheses

Which of the following is the correct set of hypotheses?

\(H_0: p = 0.10\) ; \(H_A: p \ne 0.10\)

\(H_0: p = 0.10\) ; \(H_A: p > 0.10\)

\(H_0: p = 0.10\) ; \(H_A: p < 0.10\)

\(H_0: \hat{p} = 0.10\) ; \(H_A: \hat{p} \ne 0.10\)

\(H_0: \hat{p} = 0.10\) ; \(H_A: \hat{p} > 0.10\)

\(H_0: \hat{p} = 0.10\) ; \(H_A: \hat{p} < 0.10\)

79.1.8 Simulating the null distribution

Since \(H_0: p = 0.10\) , we need to simulate a null distribution where the probability of success (complication) for each trial (patient) is 0.10.

Describe how you would simulate the null distribution for this study using a bag of chips. How many chips? What colors? What do the colors indicate? How many draws? With replacement or without replacement ?

79.1.9 What do we expect?

When sampling from the null distribution, what is the expected proportion of success (complications)?

79.1.10 Simulation

Here are some simulations….

This process is getting boring… We need a way to automate this process!
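One way to automate the repeated draws is a short simulation. The sketch below is an illustration in plain Python rather than the tidymodels code these notes use; the function name `simulate_null_proportions`, the fixed seed, and the 1,000 repetitions are assumptions chosen for the example.

```python
import random


def simulate_null_proportions(n_patients=62, p_null=0.10, n_sims=1000, seed=0):
    """Simulate sample proportions under H0: p = p_null."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    proportions = []
    for _ in range(n_sims):
        # Each patient independently has a complication with probability p_null.
        complications = sum(rng.random() < p_null for _ in range(n_patients))
        proportions.append(complications / n_patients)
    return proportions


null_dist = simulate_null_proportions()
observed = 3 / 62

# Lower-tail p-value: share of simulations at least as extreme as the observed 3/62.
p_value = sum(p <= observed for p in null_dist) / len(null_dist)
print(f"center of null distribution: {sum(null_dist) / len(null_dist):.3f}")
print(f"simulated p-value: {p_value:.3f}")
```

The list `null_dist` plays the role of the dot plot: its center should sit near 0.10, and the share of simulated proportions at or below 3/62 estimates the p-value.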

79.1.11 Using tidymodels to generate the null distribution

79.1.12 Visualizing the null distribution

What would you expect the center of the null distribution to be?

79.1.13 Calculating the p-value, visually

What is the p-value, i.e. in what % of the simulations was the simulated sample proportion at least as extreme as the observed sample proportion?

79.1.14 Calculating the p-value, directly

79.1.15 Significance level

We often use 5% as the cutoff for whether the p-value is low enough that the data are unlikely to have come from the null model. This cutoff value is called the significance level , \(\alpha\) .

If p-value < \(\alpha\) , reject \(H_0\) in favor of \(H_A\) : The data provide convincing evidence for the alternative hypothesis.

If p-value > \(\alpha\) , fail to reject \(H_0\) : The data do not provide convincing evidence for the alternative hypothesis.

79.1.16 Conclusion

What is the conclusion of the hypothesis test?

Since the p-value is greater than the significance level, we fail to reject the null hypothesis. These data do not provide convincing evidence that this consultant incurs a lower complication rate than 10% (overall US complication rate).
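As a cross-check on the simulated p-value, the same lower-tail probability can be computed exactly from the binomial distribution. This is a sketch in Python and not part of the original notes; `binomial_lower_tail` is a hypothetical helper, and the 5% significance level is the usual cutoff described above.

```python
from math import comb


def binomial_lower_tail(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


alpha = 0.05
# Probability of 3 or fewer complications in 62 surgeries if the true rate is 10%.
p_value = binomial_lower_tail(3, 62, 0.10)
decision = "reject H0" if p_value < alpha else "fail to reject H0"
print(f"exact p-value: {p_value:.3f} -> {decision}")
```

The exact tail area is larger than 0.05, which agrees with the conclusion that these data do not provide convincing evidence of a lower complication rate.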

79.1.17 Let’s get real

100 simulations is not sufficient

We usually simulate around 15,000 times to get an accurate distribution, but we’ll do 1,000 here for efficiency.

79.1.18 Run the test

79.1.19 Visualize and calculate

79.2 One vs. two sided hypothesis tests

79.2.1 Types of alternative hypotheses

One sided (one tailed) alternatives: The parameter is hypothesized to be less than or greater than the null value, < or >

Two sided (two tailed) alternatives: The parameter is hypothesized to be not equal to the null value, \(\ne\)

- The p-value is calculated as two times the tail area beyond the observed sample statistic

- More objective, and hence more widely preferred

Average systolic blood pressure of people with Stage 1 Hypertension is 150 mm Hg.

Suppose we want to use a hypothesis test to evaluate whether a new blood pressure medication has an effect on the average blood pressure of heart patients. What are the hypotheses?

79.3 Testing for independence

79.3.1 Is yawning contagious?

Do you think yawning is contagious?

An experiment conducted by the MythBusters tested if a person can be subconsciously influenced into yawning if another person near them yawns. ( Video )

79.3.2 Study description

This study involved 50 participants who were randomly divided into two groups. 34 participants were in the treatment group where they saw someone near them yawn, while 16 participants were in the control group where they did not witness a yawn.

The data are in the openintro package: yawn

79.3.3 Proportion of yawners

- Proportion of yawners in the treatment group: \(\frac{10}{34} = 0.2941\)

- Proportion of yawners in the control group: \(\frac{4}{16} = 0.25\)

- Difference: \(0.2941 - 0.25 = 0.0441\)

- Our results match the ones calculated on the MythBusters episode.

79.3.4 Independence?

Based on the proportions we calculated, do you think yawning is really contagious, i.e. are seeing someone yawn and yawning dependent?

79.3.5 Dependence, or another possible explanation?

The observed differences might suggest that yawning is contagious, i.e. seeing someone yawn and yawning are dependent.

But the differences are small enough that we might wonder if they might simply be due to chance .

Perhaps if we were to repeat the experiment, we would see slightly different results.

So we will do just that - well, somewhat - and see what happens.

Instead of actually conducting the experiment many times, we will simulate our results.

79.3.6 Two competing claims

“There is nothing going on.” Yawning and seeing someone yawn are independent , yawning is not contagious, observed difference in proportions is simply due to chance. \(\rightarrow\) Null hypothesis

“There is something going on.” Yawning and seeing someone yawn are dependent , yawning is contagious, observed difference in proportions is not due to chance. \(\rightarrow\) Alternative hypothesis

79.3.7 Simulation setup

A regular deck of cards is comprised of 52 cards: 4 aces, 4 of numbers 2-10, 4 jacks, 4 queens, and 4 kings.

Take out two aces from the deck of cards and set them aside.

The remaining 50 playing cards represent the participants in the study:

- 14 face cards (including the 2 aces) represent the people who yawn.

- 36 non-face cards represent the people who don’t yawn.

79.3.8 Running the simulation

Shuffle the 50 cards at least 7 times (see the footnote at the end of these notes) to ensure that the cards counted out are from a random process.

Count out the top 16 cards and set them aside. These cards represent the people in the control group.

Out of the remaining 34 cards (treatment group) count the face cards (the number of people who yawned in the treatment group).

Calculate the difference in proportions of yawners (treatment - control), and plot it on the board.

Mark the difference you find on the dot plot on the board.

79.3.9 Simulation by hand

Do the simulation results suggest that yawning is contagious, i.e. does seeing someone yawn and yawning appear to be dependent?

79.3.10 Simulation by computation

- Start with the data frame

- Since the response variable is categorical, specify the level which should be considered as “success”

- State the null hypothesis (yawning and whether or not you see someone yawn are independent)

- Generate simulated differences via permutation

- Since the explanatory variable is categorical, specify the order in which the subtraction should occur for the calculation of the sample statistic, \((\hat{p}_{treatment} - \hat{p}_{control})\) .
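The card-shuffling procedure and the steps listed above can be sketched as a small permutation test. This is an illustrative Python version, not the tidymodels pipeline the notes refer to; the helper names, the seed, and the 1,000 shuffles are assumptions made for the example.

```python
import random

N_CONTROL, N_TREATMENT = 16, 34
N_YAWNERS = 14  # 10 in the treatment group plus 4 in the control group


def diff_in_proportions(cards):
    """Treatment minus control proportion of yawners (1 = yawned)."""
    control, treatment = cards[:N_CONTROL], cards[N_CONTROL:]
    return sum(treatment) / len(treatment) - sum(control) / len(control)


def permutation_null(n_sims=1000, seed=0):
    rng = random.Random(seed)
    # 14 "face cards" for yawners, 36 other cards for non-yawners.
    cards = [1] * N_YAWNERS + [0] * (N_CONTROL + N_TREATMENT - N_YAWNERS)
    diffs = []
    for _ in range(n_sims):
        rng.shuffle(cards)  # group labels carry no information under H0
        diffs.append(diff_in_proportions(cards))
    return diffs


observed = 10 / 34 - 4 / 16
null_diffs = permutation_null()
# One-sided p-value: share of shuffles with a difference at least as large as observed.
p_value = sum(d >= observed for d in null_diffs) / len(null_diffs)
print(f"observed difference: {observed:.4f}, simulated p-value: {p_value:.3f}")
```

Shuffling the cards and dealing the first 16 to the control group is the computational counterpart of the by-hand simulation: under independence, which group a yawner lands in is purely a matter of chance.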

79.3.11 Recap

- Save the result

79.3.12 Visualizing the null distribution

79.3.13 Calculating the p-value, visually

What is the p-value, i.e. in what % of the simulations was the simulated difference in sample proportion at least as extreme as the observed difference in sample proportions?

79.3.14 Calculating the p-value, directly

79.3.15 Conclusion

Do you “buy” this conclusion?

Footnote 5: http://www.dartmouth.edu/~chance/course/topics/winning_number.html

Integrating hypothesis highlights with obsidian.md

25 Feb 2021

#annotations #augmentation #obsidian #hypothesis #exploratory-search

Tools for exploratory search and web annotation

There’s a whole lot to write about building a personal information system. The memex , described in 1945 by Vannevar Bush, inspired an ocean of thoughts on augmentation and human-computer interaction (perhaps most prominently championed by Doug Engelbart ). However, personal libraries, encyclopedias and knowledge work pre-date the days of the computer (see e.g. this ref on the history of the encyclopedia and knowledge systems , or the “Reading Strategies for Coping with Information Overload, ca.1550-1700” ).

The history of information management / information overload is fascinating, but that’s for another time. In this blog, I want to introduce two of my favourite tools to interact with new information on the web, and a simple way to link them.

Hypothesis - annotating the web

The first tool is Hypothesis . Hypothesis is an open source initiative to annotate the web. I’ve enabled it on this page, so the option to annotate something should pop up if you try to highlight any part of the text. This allows for a conversation to happen in the marginalia of any web-document.

What’s cool about this is that it invites a conversation with the document, and you can revisit that conversation in the future. Again, this is not conceptually new. Many people (e.g. academics) still print their documents to highlight and annotate them. A much older example of this type of conversation on a document is the Talmud, one of the central Jewish texts, printed with the primary layer of text (the Mishnah) in the center of each page and commentaries on that text layered around it (Abram, 1996; chap 7).

Image: Anatomy of a page of Talmud: (A) Mishnah, (B) Gemara, (C) Commentary of Rashi, Rabbi Shlomo Itzchaki, 1040-1105, (D) Tosfot, a series of commentators following Rashi, (E) various additional commentaries around the edge of the page.

From a simple browser perspective, it also removes friction from note taking - you don’t need to open a new note, copy important passages and add your thoughts to them - you can highlight and annotate directly.

Obsidian.md - the power of linked thought

Until very recently, organising personal notes on a computer was a matter of placing files in hierarchically organised folders. To cut the criticism short, it’s clunky and it’s not how the mind works. We connect things in many intricate ways. Just think of words and semantic networks: orange would be connected to a network of colours, and the meanings attached to that colour (e.g. an amber traffic light, sunset, etc), but also to the fruit, the tree, jam recipes etc. In the same way, a note that is relevant to a given topic may be related to another topic and may be useful to be retrieved across multiple contexts (not just a fixed folder).

The most vocal advocate of hypertext, hyperlinks etc (connecting related texts to a document via links) is to this day probably still Ted Nelson (he started his initial Xanadu project in 1960 and is still fighting for his initial vision of the internet today ). Among other battles, he has strongly argued that hyperlinks should go both ways. Bi-directional links create a network of notes that carries an implicit hierarchy (see e.g. the idea of network centrality ).

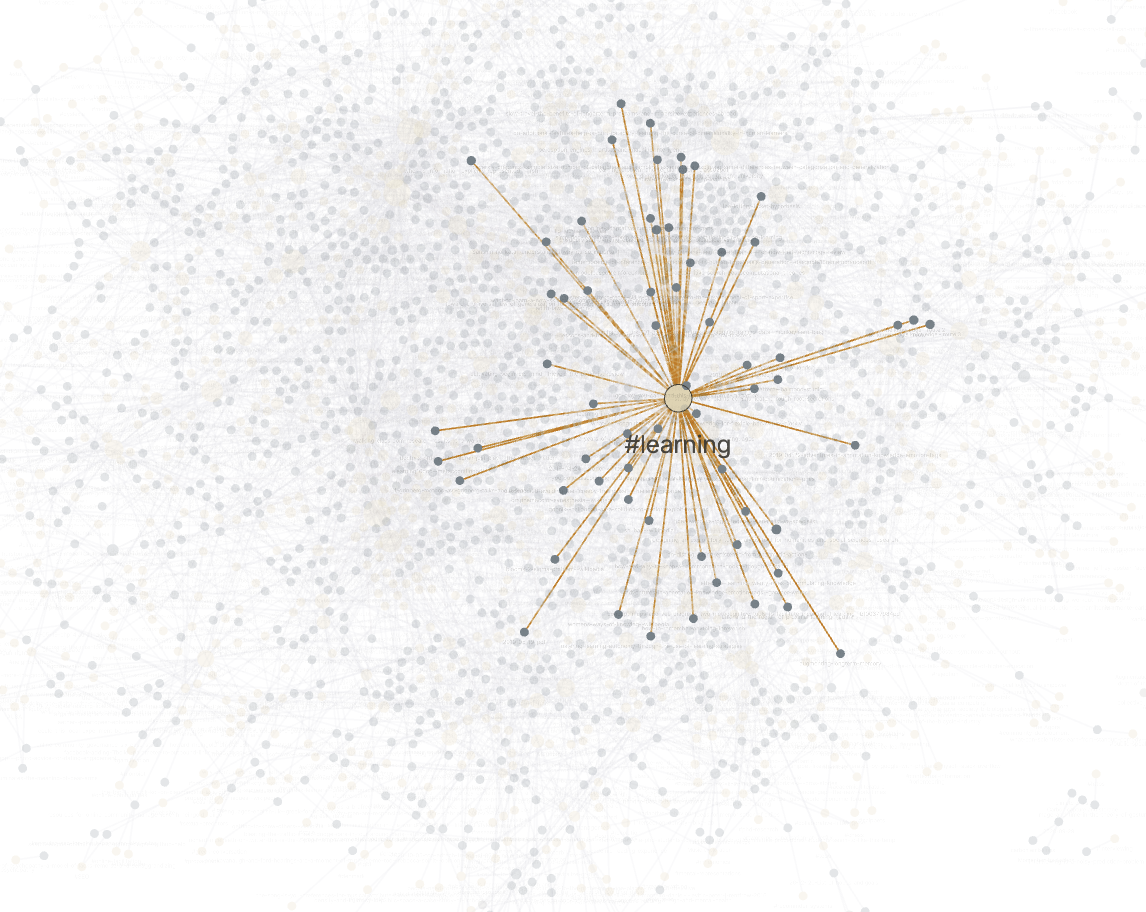

But I digress. A new wind has brought a revival of enthusiasm for tools that support linked thought. The one I’ve been interested in is called Obsidian.md (ht to esben sørig ). It can basically be thought of as a personal wiki, but with the ease of simply opening a new tab and writing away. On top of bidirectional links, it also has the #tag functionality, which creates a further network layer. One of the hoped-for behaviours that arises from the creation and use of these networks of notes is what some call “gardening” of notes: revisiting previous notes, which fosters memory consolidation and serendipitous connections. A further benefit is efficient search (especially ambiguous search, because there are multiple ways to go about it), and a better “big picture” overview of one’s notes/thoughts.

Linking the two - linked annotations

I got very excited about the idea of having highlights and annotations directly populating a network of notes, as it neatly connects two intuitive ways of engaging with knowledge. One of the issues I’ve found with bookmarks and notetaking (I use pinboard for this) is how difficult it can be to revisit them, or to navigate through previously explored topics (cf. gardening). Obsidian offers this seamlessly with tags, hyperlinks and the graph view.

I’ve put instructions and a Python script in this GitHub repository .

The process is as simple as exporting Hypothesis annotations and parsing the JSON file to extract separate markdown files, while grouping highlights and notes on a single document.
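To give a feel for that parsing step, here is a minimal sketch. It is not the script from the repository; the field names (`uri`, `text`, `tags`, `document.title`, and a `TextQuoteSelector` holding the highlighted passage in `exact`) are assumptions about the shape of the exported JSON, and the sample data is made up for illustration.

```python
import json
from collections import defaultdict

# Made-up sample standing in for an exported annotations file.
SAMPLE_EXPORT = """
[
  {"uri": "https://example.org/a", "text": "My note", "tags": ["memex"],
   "document": {"title": ["Article A"]},
   "target": [{"selector": [{"type": "TextQuoteSelector", "exact": "first highlight"}]}]},
  {"uri": "https://example.org/a", "text": "", "tags": [],
   "document": {"title": ["Article A"]},
   "target": [{"selector": [{"type": "TextQuoteSelector", "exact": "second highlight"}]}]},
  {"uri": "https://example.org/b", "text": "Another note", "tags": ["obsidian"],
   "document": {"title": ["Article B"]}, "target": []}
]
"""


def group_by_document(annotations):
    """Collect all annotations made on the same page under one key."""
    grouped = defaultdict(list)
    for annotation in annotations:
        grouped[annotation["uri"]].append(annotation)
    return grouped


def highlighted_text(annotation):
    """Return the quoted passage, or an empty string for page-level notes."""
    for target in annotation.get("target", []):
        for selector in target.get("selector", []):
            if selector.get("type") == "TextQuoteSelector":
                return selector.get("exact", "")
    return ""


def to_markdown(uri, annotations):
    """Render one markdown note per document: quotes, comments, then tags."""
    title = annotations[0].get("document", {}).get("title", [uri])[0]
    lines = [f"# {title}", "", f"Source: {uri}", ""]
    for annotation in annotations:
        quote = highlighted_text(annotation)
        if quote:
            lines += [f"> {quote}", ""]
        if annotation.get("text"):
            lines += [annotation["text"], ""]
        if annotation.get("tags"):
            lines += [" ".join(f"#{tag}" for tag in annotation["tags"]), ""]
    return "\n".join(lines)


grouped = group_by_document(json.loads(SAMPLE_EXPORT))
notes = {uri: to_markdown(uri, anns) for uri, anns in grouped.items()}
```

Each value in `notes` could then be written to its own markdown file in the Obsidian vault, where the `#tags` become part of the note network.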

I hope this will encourage more annotations ☀️ and more gardening 🌱

Details and advanced features

This is an account of slightly less common Hypothesis features that you don’t need to get started but will nevertheless make your life easier.

Additional test output

Normally the output of a failing test will look something like:

With the repr of each keyword argument being printed.

Sometimes this isn’t enough, either because you have a value with a __repr__() method that isn’t very descriptive or because you need to see the output of some intermediate steps of your test. That’s where the note function comes in:

Report this value for the minimal failing example.

The note is printed for the minimal failing example of the test in order to include any additional information you might need in your test.

Test statistics

If you are using pytest you can see a number of statistics about the executed tests by passing the command line argument --hypothesis-show-statistics . This will include some general statistics about the test:

For example if you ran the following with --hypothesis-show-statistics :

You would see:

The final “Stopped because” line is particularly important to note: It tells you the setting value that determined when the test should stop trying new examples. This can be useful for understanding the behaviour of your tests. Ideally you’d always want this to be max_examples .

In some cases (such as filtered and recursive strategies) you will see events mentioned which describe some aspect of the data generation:

You would see something like:

You can also mark custom events in a test using the event function:

Record an event that occurred during this test. Statistics on the number of test runs with each event will be reported at the end if you run Hypothesis in statistics reporting mode.

Event values should be strings or convertible to them. If an optional payload is given, it will be included in the string for Test statistics .

You will then see output like:

Arguments to event can be any hashable type, but two events will be considered the same if they are the same when converted to a string with str .
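A small sketch of a custom event, assuming a test over even integers (the test name and the event text are illustrative):

```python
from hypothesis import event, given, strategies as st


@given(st.integers().filter(lambda x: x % 2 == 0))
def test_even_integers(i):
    # Each distinct string is counted separately in the statistics report.
    event(f"i mod 3 = {i % 3}")
    assert i % 2 == 0
```

Running this under pytest with `--hypothesis-show-statistics` should list how often each of the `i mod 3 = ...` events occurred.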

Making assumptions

Sometimes Hypothesis doesn’t give you exactly the right sort of data you want - it’s mostly of the right shape, but some examples won’t work and you don’t want to care about them. You can just ignore these by aborting the test early, but this runs the risk of accidentally testing a lot less than you think you are. Also it would be nice to spend less time on bad examples - if you’re running 100 examples per test (the default) and it turns out 70 of those examples don’t match your needs, that’s a lot of wasted time.

Calling assume is like an assert that marks the example as bad, rather than failing the test.

This allows you to specify properties that you assume will be true, and let Hypothesis try to avoid similar examples in future.

For example suppose you had the following test:

Running this gives us:

This is annoying. We know about NaN and don’t really care about it, but as soon as Hypothesis finds a NaN example it will get distracted by that and tell us about it. Also the test will fail and we want it to pass.

So let’s block off this particular example:

And this passes without a problem.
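A sketch of what blocking off the NaN case might look like, assuming the property under test is that negating a float twice gives back the original value:

```python
import math

from hypothesis import assume, given, strategies as st


@given(st.floats())
def test_negation_is_self_inverse_for_non_nan(x):
    # Mark NaN inputs as uninteresting instead of letting them fail the test.
    assume(not math.isnan(x))
    assert x == -(-x)
```

Only NaN is excluded here, so the assumption rejects very few examples and the rest of the float range is still explored.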

In order to avoid the easy trap where you assume a lot more than you intended, Hypothesis will fail a test when it can’t find enough examples passing the assumption.

If we’d written:

Then on running we’d have got the exception:

How good is assume?

Hypothesis has an adaptive exploration strategy to try to avoid things which falsify assumptions, which should generally result in it still being able to find examples in hard to find situations.

Suppose we had the following:

Unsurprisingly this fails and gives the falsifying example [] .

Adding assume(xs) to this removes the trivial empty example and gives us [0] .

Add assume(all(x > 0 for x in xs)) and it passes: the sum of a list of positive integers is positive.

The reason that this should be surprising is not that it doesn’t find a counter-example, but that it finds enough examples at all.

In order to make sure something interesting is happening, suppose we wanted to try this for long lists, e.g. suppose we added an assume(len(xs) > 10) to it. This should basically never find an example: a naive strategy would find fewer than one in a thousand examples, because if each element of the list is negative with probability one-half, you’d have to have ten of these go the right way by chance. In the default configuration Hypothesis gives up long before it’s tried 1000 examples (by default it tries 100).

Here’s what happens if we try to run this:

In: test_sum_is_positive()

As you can see, Hypothesis doesn’t find many examples here, but it finds some - enough to keep it happy.

In general if you can shape your strategies better to your tests you should - for example integers(1, 1000) is a lot better than assume(1 <= x <= 1000) , but assume will take you a long way if you can’t.
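For comparison, the two assumptions used in the long-list example can be expressed directly in the strategy, so that no generated example is thrown away. A minimal sketch:

```python
from hypothesis import given, strategies as st


# min_value=1 replaces assume(all(x > 0 for x in xs));
# min_size=11 replaces assume(len(xs) > 10).
@given(st.lists(st.integers(min_value=1), min_size=11))
def test_sum_is_positive(xs):
    assert len(xs) > 10
    assert all(x > 0 for x in xs)
    assert sum(xs) > 0
```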

Defining strategies

The type of object that is used to explore the examples given to your test function is called a SearchStrategy . These are created using the functions exposed in the hypothesis.strategies module.

Many of these strategies expose a variety of arguments you can use to customize generation. For example for integers you can specify min and max values of integers you want. If you want to see exactly what a strategy produces you can ask for an example:

Many strategies are built out of other strategies. For example, if you want to define a tuple you need to say what goes in each element:

Further details are available in a separate document .

The gory details of given parameters

A decorator for turning a test function that accepts arguments into a randomized test.

This is the main entry point to Hypothesis.

The @given decorator may be used to specify which arguments of a function should be parametrized over. You can use either positional or keyword arguments, but not a mixture of both.

For example all of the following are valid uses:

The following are not:

The rules for determining what are valid uses of given are as follows:

You may pass any keyword argument to given .

Positional arguments to given are equivalent to the rightmost named arguments for the test function.

Positional arguments may not be used if the underlying test function has varargs, arbitrary keywords, or keyword-only arguments.

Functions tested with given may not have any defaults.

The reason for the “rightmost named arguments” behaviour is so that using @given with instance methods works: self will be passed to the function as normal and not be parametrized over.
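The rules above can be illustrated with a few small functions. The names are made up for this sketch, and the non-generated argument is supplied by the caller as a keyword.

```python
from hypothesis import given, strategies as st


# One positional strategy fills the rightmost argument (y);
# x is left for the caller to supply.
@given(st.integers())
def check_rightmost(x, y):
    assert x == "fixed"
    assert isinstance(y, int)


# A keyword strategy names the argument it fills.
@given(y=st.integers())
def check_keyword(x, y):
    assert x == "fixed"
    assert isinstance(y, int)


class Checks:
    # self is passed as normal; only n is parametrized.
    @given(st.integers())
    def check_method(self, n):
        assert isinstance(self, Checks)
        assert isinstance(n, int)


check_rightmost(x="fixed")
check_keyword(x="fixed")
Checks().check_method()
```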

The function returned by given has all the same arguments as the original test, minus those that are filled in by @given . Check the notes on framework compatibility to see how this affects other testing libraries you may be using.

Targeted example generation

Targeted property-based testing combines the advantages of both search-based and property-based testing. Instead of being completely random, T-PBT uses a search-based component to guide the input generation towards values that have a higher probability of falsifying a property. This explores the input space more effectively and requires fewer tests to find a bug or achieve a high confidence in the system being tested than random PBT. ( Löscher and Sagonas )

This is not always a good idea - for example calculating the search metric might take time better spent running more uniformly-random test cases, or your target metric might accidentally lead Hypothesis away from bugs - but if there is a natural metric like “floating-point error”, “load factor” or “queue length”, we encourage you to experiment with targeted testing.

Calling target() with an int or float observation gives Hypothesis feedback with which to guide its search for inputs that will cause an error, in addition to all the usual heuristics. Observations must always be finite.

Hypothesis will try to maximize the observed value over several examples; almost any metric will work so long as it makes sense to increase it. For example, -abs(error) is a metric that increases as error approaches zero.

Example metrics:

Number of elements in a collection, or tasks in a queue

Mean or maximum runtime of a task (or both, if you use label )

Compression ratio for data (perhaps per-algorithm or per-level)

Number of steps taken by a state machine

The optional label argument can be used to distinguish between and therefore separately optimise distinct observations, such as the mean and standard deviation of a dataset. It is an error to call target() with any label more than once per test case.
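A minimal sketch of a targeted test, using the first example metric (number of elements in a collection). The test name and label text are illustrative.

```python
from hypothesis import given, strategies as st, target


@given(st.lists(st.integers(min_value=0, max_value=100)))
def test_sorting_keeps_length(xs):
    # Nudge generation towards longer lists; the observation must be finite.
    target(float(len(xs)), label="list length")
    assert len(sorted(xs)) == len(xs)
```

The label is what lets several observations be optimised separately, as long as each label is used at most once per test case.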

The more examples you run, the better this technique works.

As a rule of thumb, the targeting effect is noticeable above max_examples=1000 , and immediately obvious by around ten thousand examples per label used by your test.

Test statistics include the best score seen for each label, which can help avoid the threshold problem when the minimal example shrinks right down to the threshold of failure ( issue #2180 ).

We recommend that users also skim the papers introducing targeted PBT; from ISSTA 2017 and ICST 2018 . For the curious, the initial implementation in Hypothesis uses hill-climbing search via a mutating fuzzer, with some tactics inspired by simulated annealing to avoid getting stuck and endlessly mutating a local maximum.

Custom function execution

Hypothesis provides you with a hook that lets you control how it runs examples.

This lets you do things like set up and tear down around each example, run examples in a subprocess, transform coroutine tests into normal tests, etc. For example, TransactionTestCase in the Django extra runs each example in a separate database transaction.

The way this works is by introducing the concept of an executor. An executor is essentially a function that takes a block of code and runs it. The default executor is:

You define executors by defining a method execute_example on a class. Any test methods on that class with @given used on them will use self.execute_example as an executor with which to run tests. For example, the following executor runs all its code twice:

Note: The functions you use in map, etc. will run inside the executor. i.e. they will not be called until you invoke the function passed to execute_example .

An executor must be able to handle being passed a function which returns None, otherwise it won’t be able to run normal test cases. So for example the following executor is invalid:

and should be rewritten as:
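Both variants can be sketched as plain functions rather than as `execute_example` methods, which makes the difference easy to see in isolation. The function names below are chosen for this illustration.

```python
def default_executor(function):
    # Run the block of code once and hand back whatever it returns.
    return function()


def invalid_executor(function):
    # Assumes the test returns something callable, so it breaks on None.
    return function()()


def tolerant_executor(function):
    # Only call the result when there is something to call.
    result = function()
    if callable(result):
        result = result()
    return result


def normal_test():
    return None  # ordinary tests return nothing


assert default_executor(normal_test) is None
assert tolerant_executor(normal_test) is None
assert tolerant_executor(lambda: (lambda: 42)) == 42

try:
    invalid_executor(normal_test)
except TypeError:
    print("invalid_executor cannot run a test that returns None")
```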

An alternative hook is provided for use by test runner extensions such as pytest-trio , which cannot use the execute_example method. This is not recommended for end-users - it is better to write a complete test function directly, perhaps by using a decorator to perform the same transformation before applying @given .

For authors of test runners however, assigning to the inner_test attribute of the hypothesis attribute of the test will replace the interior test.

The new inner_test must accept and pass through all the *args and **kwargs expected by the original test.

If the end user has also specified a custom executor using the execute_example method, it - and all other execution-time logic - will be applied to the new inner test assigned by the test runner.

Making random code deterministic

While Hypothesis’ example generation can be used for nondeterministic tests, debugging anything nondeterministic is usually a very frustrating exercise. To make things worse, our example shrinking relies on the same input causing the same failure each time - though we show the un-shrunk failure and a decent error message if it doesn’t.

By default, Hypothesis will handle the global random and numpy.random random number generators for you, and you can register others with register_random(r), which registers (a weakref to) the given Random-like instance for management by Hypothesis.

You can pass instances of structural subtypes of random.Random (i.e., objects with seed, getstate, and setstate methods) to register_random(r) to have their states seeded and restored in the same way as the global PRNGs from the random and numpy.random modules.

All global PRNGs, from e.g. simulation or scheduling frameworks, should be registered to prevent flaky tests. Hypothesis will ensure that the PRNG state is consistent for all test runs, always seeding them to zero and restoring the previous state after the test, or reproducibly varying the seed if you choose to use the random_module() strategy.
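The seed-then-restore behaviour can be sketched with the standard library alone. This is not Hypothesis's implementation: the context manager deterministic_prng is a hypothetical helper that works on the random module or on any object with seed, getstate, and setstate methods.

```python
import contextlib
import random


@contextlib.contextmanager
def deterministic_prng(prng=random, seed=0):
    # Remember the caller's state, seed to a fixed value for the
    # duration of the block, then put the original state back.
    saved_state = prng.getstate()
    prng.seed(seed)
    try:
        yield prng
    finally:
        prng.setstate(saved_state)


state_before = random.getstate()

with deterministic_prng():
    first_run = [random.random() for _ in range(3)]

with deterministic_prng():
    second_run = [random.random() for _ in range(3)]

state_after = random.getstate()
```

Both blocks see the same sequence of values, and the global generator is left exactly as it was found.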

register_random only makes weakrefs to r, thus r will only be managed by Hypothesis as long as it has active references elsewhere at runtime. The pattern register_random(MyRandom()) will raise a ReferenceError to help protect users from this issue. This check does not occur for the PyPy interpreter. For instance, a generator that is created and registered inside a function can be garbage collected once that function returns, whereas one bound to a module-level name stays registered.
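The weakref issue can be illustrated with a toy registry built on the standard library. Everything here is an assumption for illustration: toy_register_random is not hypothesis.register_random, and the hook names are hypothetical.

```python
import gc
import random
import weakref

# Toy stand-in for a registry that only keeps weak references.
_registered = []


def toy_register_random(r):
    # Hypothetical helper; NOT hypothesis.register_random.
    _registered.append(weakref.ref(r))


def live_generators():
    # Resolve each weakref and drop those whose target was collected.
    return [ref() for ref in _registered if ref() is not None]


def broken_hook():
    r = random.Random()
    toy_register_random(r)
    # r goes out of scope on return, so nothing keeps it alive.


rng = random.Random()  # module-level reference keeps this one alive


def working_hook():
    toy_register_random(rng)


broken_hook()
working_hook()
gc.collect()  # make collection explicit on non-refcounting interpreters

alive = live_generators()
```

Only the generator with a lasting reference survives, which is why registering a temporary object is a mistake.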

Inferred strategies

In some cases, Hypothesis can work out what to do when you omit arguments. This is based on introspection, not magic, and therefore has well-defined limits.

builds() will check the signature of the target (using signature()). If there are required arguments with type annotations and no strategy was passed to builds(), from_type() is used to fill them in. You can also pass the value ... (Ellipsis) as a keyword argument, to force this inference for arguments with a default value.
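The introspection step described above can be sketched with inspect and typing. This is a rough illustration of the selection rule only, not Hypothesis's code: arguments_to_infer and make_user are hypothetical names, and the real builds() goes on to call from_type() for each selected argument.

```python
import inspect
import typing


def arguments_to_infer(target, provided=()):
    """Names of required, annotated parameters with no strategy supplied."""
    hints = typing.get_type_hints(target)
    names = []
    for name, param in inspect.signature(target).parameters.items():
        if name in provided:
            continue  # the caller passed an explicit strategy
        if param.default is not inspect.Parameter.empty:
            continue  # has a default; only inferred when asked with ...
        if param.kind in (param.VAR_POSITIONAL, param.VAR_KEYWORD):
            continue  # *args and **kwargs are never required
        if name in hints:
            names.append(name)
    return names


def make_user(name: str, age: int, nickname: str = ""):
    # Hypothetical target with two required arguments and one default.
    return (name, age, nickname)


to_infer = arguments_to_infer(make_user, provided={"age"})
```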

@given does not perform any implicit inference for required arguments, as this would break compatibility with pytest fixtures. ... (Ellipsis) can be used as a keyword argument to explicitly fill in an argument from its type annotation. You can also use the hypothesis.infer alias if writing a literal ... seems too weird.

@given(...) can also be specified to fill all arguments from their type annotations.

Limitations

Hypothesis does not inspect PEP 484 type comments at runtime. While from_type() will work as usual, inference in builds() and @given will only work if you manually create the __annotations__ attribute (e.g. by using @annotations(...) and @returns(...) decorators).

The typing module changes between different Python releases, including at minor versions. These are all supported on a best-effort basis, but you may encounter problems. Please report them to us, and consider updating to a newer version of Python as a workaround.

Type annotations in Hypothesis

If you install Hypothesis and use mypy 0.590+, or another PEP 561-compatible tool, the type checker should automatically pick up our type hints.

Hypothesis’ type hints may make breaking changes between minor releases.

Upstream tools and conventions about type hints remain in flux - for example the typing module itself is provisional - and we plan to support the latest version of this ecosystem, as well as older versions where practical.

We may also find more precise ways to describe the type of various interfaces, or change their type and runtime behaviour together in a way which is otherwise backwards-compatible. We often omit type hints for deprecated features or arguments, as an additional form of warning.

There are known issues inferring the type of examples generated by deferred(), recursive(), one_of(), dictionaries(), and fixed_dictionaries(). We will fix these, and require correspondingly newer versions of Mypy for type hinting, as the ecosystem improves.

Writing downstream type hints

Projects that provide Hypothesis strategies and use type hints may wish to annotate their strategies too. This is a supported use-case, again on a best-effort provisional basis. For example, a function that builds a strategy for some class Foo can declare its return type as SearchStrategy[Foo].

SearchStrategy is the type of all strategy objects. It is a generic type, and covariant in the type of the examples it creates. For example:

integers() is of type SearchStrategy[int].

lists(integers()) is of type SearchStrategy[List[int]].

SearchStrategy[Dog] is a subtype of SearchStrategy[Animal] if Dog is a subtype of Animal (as seems likely).
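Covariance can be sketched with a stand-in generic class. StrategyLike below is hypothetical and exists only to show how a type checker treats a covariant type parameter; it is not SearchStrategy, which should never be subclassed or constructed directly.

```python
from typing import Generic, List, TypeVar

T_co = TypeVar("T_co", covariant=True)


class StrategyLike(Generic[T_co]):
    """Hypothetical stand-in used only to illustrate covariance."""

    def __init__(self, examples: List[T_co]) -> None:
        self._examples = list(examples)

    def example(self) -> T_co:
        return self._examples[0]


class Animal:
    pass


class Dog(Animal):
    pass


def describe(strategy: StrategyLike[Animal]) -> str:
    # Accepts any strategy whose examples are Animals.
    return type(strategy.example()).__name__


dogs: StrategyLike[Dog] = StrategyLike([Dog()])
# A type checker accepts this call because StrategyLike is covariant,
# so StrategyLike[Dog] counts as a StrategyLike[Animal].
described = describe(dogs)
```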

SearchStrategy should only be used in type hints. Please do not inherit from, compare to, or otherwise use it in any way outside of type hints. The only supported way to construct objects of this type is to use the functions provided by the hypothesis.strategies module!

The Hypothesis pytest plugin

Hypothesis includes a tiny plugin to improve integration with pytest, which is activated by default (but does not affect other test runners). It provides extra information and convenient access to config options.

pytest --hypothesis-show-statistics can be used to display test and data generation statistics.

pytest --hypothesis-profile=<profile name> can be used to load a settings profile.

pytest --hypothesis-verbosity=<level name> can be used to override the current verbosity level.

pytest --hypothesis-seed=<an int> can be used to reproduce a failure with a particular seed.

pytest --hypothesis-explain can be used to temporarily enable the explain phase.

Finally, all tests that are defined with Hypothesis automatically have @pytest.mark.hypothesis applied to them. See the pytest documentation for information on working with markers.

Pytest will load the plugin automatically if Hypothesis is installed. You don’t need to do anything at all to use it.

Use with external fuzzers

Sometimes, you might want to point a traditional fuzzer such as python-afl, pythonfuzz, or Google’s atheris (for Python and native extensions) at your code. Wouldn’t it be nice if you could use any of your @given tests as fuzz targets, instead of converting bytestrings into your objects by hand? The fuzz_one_input function, available on the hypothesis attribute of each @given test, does this by running the test function on one bytestring.

Depending on the input to fuzz_one_input , one of three things will happen:

If the bytestring was invalid, for example because it was too short or failed a filter or assume() too many times, fuzz_one_input returns None.

If the bytestring was valid and the test passed, fuzz_one_input returns a canonicalised and pruned buffer which will replay that test case. This is provided as an option to improve the performance of mutating fuzzers, but can safely be ignored.

If the test failed, i.e. raised an exception, fuzz_one_input will add the pruned buffer to the Hypothesis example database and then re-raise that exception. All you need to do to reproduce, minimize, and de-duplicate all the failures found via fuzzing is run your test suite!

Note that the interpretation of both input and output bytestrings is specific to the exact version of Hypothesis you are using and the strategies given to the test, just like the example database and @reproduce_failure decorator.
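The three outcomes can be modelled with a toy fuzz target. This is not Hypothesis's fuzz_one_input: the function toy_fuzz_one_input, the two-byte input format, the check_value property, and the dict standing in for the example database are all assumptions made for illustration.

```python
failure_db = {}  # stand-in for the example database


def check_value(value):
    # Hypothetical property under test: fails for one specific input.
    if value == 0xFFFF:
        raise ValueError("property violated")


def toy_fuzz_one_input(buffer):
    if len(buffer) < 2:
        return None  # invalid: too short to decode an example
    pruned = bytes(buffer[:2])  # drop bytes the test never consumed
    value = int.from_bytes(pruned, "big")
    try:
        check_value(value)
    except Exception:
        failure_db[check_value.__name__] = pruned  # remember for replay
        raise
    return pruned


too_short = toy_fuzz_one_input(b"\x01")
passed = toy_fuzz_one_input(b"\x00\x01\x02\x03")

try:
    toy_fuzz_one_input(b"\xff\xff\x00")
    failed = False
except ValueError:
    failed = True
```

A mutating fuzzer could feed the pruned buffer back in as a seed, and the stored failure is what a later ordinary test run would replay.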

Interaction with settings

fuzz_one_input uses just enough of Hypothesis’ internals to drive your test function with a fuzzer-provided bytestring, and most settings therefore have no effect in this mode. We recommend running your tests the usual way before fuzzing to get the benefits of healthchecks, as well as afterwards to replay, shrink, deduplicate, and report whatever errors were discovered.

The database setting is used by fuzzing mode - adding failures to the database to be replayed when you next run your tests is our preferred reporting mechanism and response to the ‘fuzzer taming’ problem.

The verbosity and stateful_step_count settings work as usual.

The deadline, derandomize, max_examples, phases, print_blob, report_multiple_bugs, and suppress_health_check settings do not affect fuzzing mode.

Thread-Safety Policy

As discussed in issue #2719, Hypothesis is not truly thread-safe and that’s unlikely to change in the future. This policy therefore describes what you can expect if you use Hypothesis with multiple threads.

Running tests in multiple processes, e.g. with pytest -n auto, is fully supported and we test this regularly in CI - thanks to process isolation, we only need to ensure that DirectoryBasedExampleDatabase can’t tread on its own toes too badly. If you find a bug here we will fix it ASAP.

Running separate tests in multiple threads is not something we design or test for, and is not formally supported. That said, anecdotally it does mostly work and we would like it to keep working - we accept reasonable patches and low-priority bug reports. The main risks here are global state, shared caches, and cached strategies.

Using multiple threads within a single test, or running a single test simultaneously in multiple threads, makes it pretty easy to trigger internal errors. We usually accept patches for such issues unless readability or single-thread performance suffer.

Hypothesis assumes that tests are single-threaded, or do a sufficiently-good job of pretending to be single-threaded. Tests that use helper threads internally should be OK, but the user must be careful to ensure that test outcomes are still deterministic. In particular it counts as nondeterministic if helper-thread timing changes the sequence of dynamic draws using e.g. the data() strategy.

Interacting with any Hypothesis APIs from helper threads might do weird/bad things, so avoid that too - we rely on thread-local variables in a few places, and haven’t explicitly tested/audited how they respond to cross-thread API calls. While data() and equivalents are the most obvious danger, other APIs might also be subtly affected.

Tools, Plug-ins and Integrations

These tools are created and maintained by the Hypothesis community. Some are code experiments, some are full implementations being adopted by users every day. If you have a project or know of one that should be listed here, please let us know!

Learning Management Systems (LMS)

Link: https://web.hypothes.is/education/lms/

Description: An official Hypothesis application that enables integration with any LMS supporting IMS Learning Tools Interoperability (LTI), such as Blackboard Learn, Desire2Learn Brightspace, Instructure Canvas, Moodle, Sakai, and Schoology, to provide capabilities including single sign-on and automatic private group provisioning for each class.

Content Management Systems (CMS)

Link: https://wordpress.org/plugins/hypothesis/

Screencast: http://jonudell.net/h/wordpress-via-pdf.mp4 (illustrates PDF activation)

Description: Built by Jake Hartnell and Benjamin Young. Adds the Hypothesis sidebar to your WordPress website. Converts links to PDFs in the Media folder to via.hypothes.is links.

WordPress Hypothesis aggregator

Link: https://github.com/kshaffer/hypothesis_aggregator

Description: A WordPress plugin that allows you to aggregate Hypothesis annotations on a single page or post on your WordPress blog.

OJS Hypothesis Plugin

Link: https://github.com/asmecher/hypothesis

Description: Adds Hypothesis integration to the public article view of Open Journal Systems, permitting annotation and commenting.

Omeka Annotator

Link: https://github.com/ebellempire/Annotator

Description: A Hypothesis text annotation plugin for Omeka.

DocDrop.org

Link: http://docdrop.org/

Description: A place to upload PDFs and other documents for annotation using Hypothesis, as well as a Google Drive plugin for PDFs.

Drupal, from Little Blue Labs

Link: https://www.drupal.org/project/hypothesis

Description: This simple module embeds Hypothesis into your Drupal site.

Archiving and downloading annotations

Use any of the following community-provided tools to export (download) your annotations.

For note-taking apps

Send Hypothesis annotations directly into your notes.

Roam: (via RoamJS) Hypothesis Extension

Import your Hypothesis notes and highlights into Roam.

Readwise has a built-in integration with Hypothesis. Visit your syncs to set it up.

Obsidian: Obsidian Hypothesis Plugin

Obsidian Hypothesis (Community Plugin) is an unofficial plugin to synchronize Hypothesis web article highlights/annotations into your Obsidian Vault.

Alternatives for technical users

- Gooseberry : A command line utility to generate a knowledge base from Hypothesis annotations

- Annotation tools : Python tools to link Hypothesis and Pinboard annotations to Obsidian

- Chris Aldrich’s gist : retrieve your annotations into Obsidian (for templater plugin)

Logseq: Logseq Hypothesis Plugin

Retrieve your online web annotations and insert them directly into Logseq. All you need to do is enter your API key, and then you can add all your annotations to your Logseq.

Org Mode: hypothesis.el

Import data from hypothes.is into Emacs.

Online tools from the community

Standalone tools found on the web.

View annotations by user, group, URL, or tag. Export results to HTML, CSV, text, or Markdown. Screencast: https://jonudell.net/h/facet.mp4

Hypothesis collector

Create Google Sheets with annotations based on search queries.

Chrome extensions

Grab Hypothesis annotations directly from your browser.

Hypothesis to Markdown bullet points

Browser extension for fetching and formatting Hypothes.is annotations into Markdown bullet points, ready for copying into Roam, Notion or similar apps.

For technical users

If you have some skills at the command line or in a text editor, these ones are for you!

Gooseberry CLI tool

A command line utility to generate a knowledge base from Hypothesis annotations. (Very slick, with Obsidian compatibility, too)

Hypothesis + Steampipe

Steampipe is an open source CLI to instantly query cloud APIs using SQL. List annotations from Hypothesis.

hypothesis-to-bullet

Script for macOS that fetches Hypothes.is annotations or Twitter threads and outputs Markdown suitable for Roam.

recon16-annotations

Place annotations on a Cloudant database for analysis.

Added functionality

Slack notifications

Link: https://github.com/judell/h_notify

Description: Receive Slack notifications for annotations made by specific users or groups, or on specific URLs or tags.

Hypothesis EPUB reader

Link: https://github.com/JakeHartnell/HypothesisReader

Description: A mashup of the open source Hypothesis and Epub.js projects.

Annotran: Annotate to translate

Link: https://github.com/birkbeckOLH/annotran

Description: Annotran is an extension to the Hypothesis annotation framework that allows users to translate web pages themselves and to view other users’ translations. Read about it here and here.

Annotation Powered Survey

Link: https://github.com/judell/AnnotationPoweredSurvey

Description: A toolkit for building online surveys that ask questions to which answers are annotations.

ClinGen Workflow

Link: https://github.com/judell/ClinGen

Description: An approach to annotation-powered biocuration.

CopyAnnotations

Link: https://github.com/judell/CopyAnnotations

Description: Copy top-level annotations from one URL (and/or group) to another.

Link: https://github.com/judell/TagRename

Description: Rename Hypothesis tags.

Programming tools

Hypothesis Ruby gem

Link: https://github.com/javierarce/hypothesis

Description: Unofficial Ruby gem for the Hypothesis API.

Link: https://github.com/embo-press/hypothepy/tree/master/hypothepy

Description: A lightweight Python API for Hypothes.is

Link: https://github.com/kshaffer/pypothesis

Description: Python scripts for interacting with the Hypothesis API.

Link: https://github.com/judell/Hypothesis

Description: Yet another Python wrapper for the Hypothesis API.

Hypothesisr

Link: https://github.com/mdlincoln/hypothesisr

Description: An R package that allows users to add, search for, and retrieve annotation data.

HypothesisPHP

Link: https://github.com/asmecher/HypothesisPHP

Description: A simple PHP wrapper for the Hypothesis API.

Link: https://github.com/tgbugs/hypush

Description: Monitor the Hypothesis websocket in order to push realtime notifications to other channels.

Integrations

Link: https://github.com/SciCrunch/scibot

Description: This tool finds RRIDs in articles, looks them up in the SciCrunch resolver and creates Hypothesis annotations that anchor to the RRIDs and display lookup results. More info here .

AAAS/Discover

Link: https://github.com/judell/StandaloneAnchoring

Description: The American Association for the Advancement of Science (AAAS) uses Hypothesis for graduate students to annotate articles using specific highlight colors and categories. More info here .

Extracting structured findings from scientific papers

Link: https://gist.github.com/judell/ca913dd64a29f852afd3

Description: David Kennedy reviews the literature in his field and extracts findings, which are structured interpretations of statements in scientific papers. He’s using Hypothesis to create reports based on structured annotation. More here .

Hypothesis/Europe PubMed Central

Screencast: http://jonudell.net/h/scibot-epmc-v1.mp4

Description: Bring annotations from two systems together in the context of a document.

Infinite Ulysses

Link: https://github.com/amandavisconti/infinite-ulysses-public

Description: Created by Amanda Visconti, Infinite Ulysses lets readers read James Joyce’s challenging novel Ulysses while annotating it with their questions and interpretations and perusing annotations from fellow readers.

Other ideas to try

Screen-reader-accessible annotation.

Screencast: http://jonudell.net/h/accessible_annotation_02.mp4

Link: https://blog.jonudell.net/2016/09/28/towards-accessible-annotation-a-prototype-and-some-questions/

Description: Use a hotkey to select an element, hear it spoken, and open the annotation editor.

Copy annotation to clipboard

Screencast: http://jonudell.net/h/copy_paste_annotation_v1.mp4

Description: Copy the text of the annotation to the clipboard by clicking an icon.

Highlight groups with annotations

Screencast: http://jonudell.net/h/highlight-groups-with-annotations-v1.mp4

Description: Highlights the group name in a different color in the dropdown menu when the group has annotations.

Group admin

Screencast: http://jonudell.net/h/group-admin-v1.mp4

Description: Enables a group of users who have given their H API keys to be administered by an “admin user”, who is the H user running the prototype extension. The admin user can edit or delete annotations by subject users.

Controlled tagging

Description: Restrict tags to a controlled list.

Screencast: http://jonudell.net/h/controlled_tagging_v1.mp4 (manage the list in a Google doc, activate it on a per-Hypothesis-group basis)

Screencast: http://jonudell.net/h/controlled-tagging-with-scigraph-ontology.mp4 (source the list from an external ontology service)

Screencast: http://jonudell.net/h/annotation-applet_03.mp4 (embed a single-page app in the annotation body to enable full control of tag interaction and context)

Search/faceted navigation

Description: An early prototype of faceted navigation

Screencast: http://jonudell.net/h/activity_pages_early_prototype.mp4
