Choosing an AWS database service

Taking the first step

Introduction

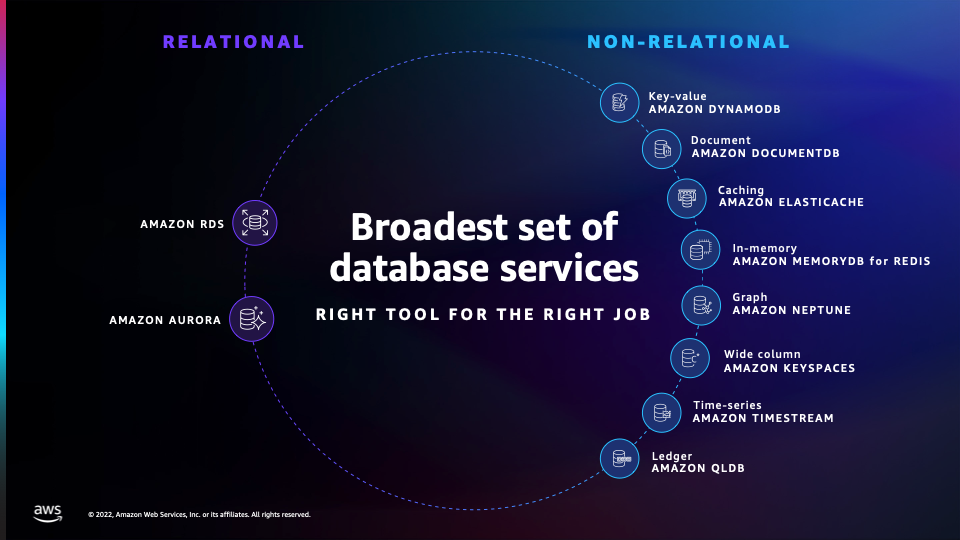

Amazon Web Services (AWS) offers a growing number of database options (currently more than 15) to support diverse data models. These include relational, key-value, document, in-memory, graph, time-series, wide column, and ledger databases.

Choosing the right database or multiple databases requires you to make a series of decisions based on your organizational needs. This decision guide will help you ask the right questions, provide a clear path for implementation, and help you migrate from your existing database.

This six-and-a-half-minute video explains the basics of choosing an AWS database.

Databases are important backend systems used to store data for any type of app, whether it's a small mobile app or an enterprise app with internet-scale and real-time requirements.

This decision guide is designed to help you understand the range of choices available to you, establish the criteria that make sense for your database choice, learn the unique properties of each database, and then dive deeper into the capabilities that each one offers.

What kinds of apps do people build using AWS databases?

Internet-scale apps: These apps can handle millions of requests per second over hundreds of terabytes of data. They automatically scale vertically and horizontally to accommodate your spiky workloads.

Real-time apps: Real-time apps such as caching, session stores, gaming leaderboards, ride-hailing, ad-targeting, and real-time analytics need microsecond latency and high throughput to support millions of requests per second.

Enterprise apps: Enterprise apps manage core business processes, such as sales, billing, customer service, human resources, and line-of-business processes, such as a reservation system at a hotel chain or a risk-management system at an insurance company. These apps need databases that are fast, scalable, secure, available, and reliable.

Generative AI apps: Your data is the key to moving from generic applications to generative AI applications that create differentiating value for your customers and their business. Often, this differentiating data is stored in operational databases powering your applications.

This guide focuses on databases suitable for Online Transaction Processing (OLTP) applications. If you need to store and analyze massive amounts of data quickly and efficiently (a need typically met by an online analytical processing (OLAP) application), AWS offers Amazon Redshift, a fully managed, cloud-based data warehousing service designed to handle large-scale analytics workloads.

There are two high-level categories of AWS OLTP databases—relational and non-relational.

The AWS relational database family includes eight popular engines across Amazon RDS and Amazon Aurora. The Aurora engines are Amazon Aurora with MySQL compatibility and Amazon Aurora with PostgreSQL compatibility. The other RDS engines are Db2, MySQL, MariaDB, PostgreSQL, Oracle, and SQL Server. AWS also offers deployment options such as Amazon RDS Custom and Amazon RDS on Outposts.

The non-relational database options are designed for specific data models including key-value, document, caching, in-memory, graph, time series, wide column, and ledger data models.
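As a rough illustration of matching data models to services, the following Python sketch encodes commonly cited pairings as a lookup table. `CANDIDATES` and `candidate_services` are hypothetical names, not part of any AWS API. The lists are neither exhaustive nor a recommendation, so confirm current service availability before relying on them.

```python
# Hypothetical lookup from data model to AWS services commonly used for it.
# Illustrative only: service lineups change, so verify against current AWS docs.
CANDIDATES: dict[str, list[str]] = {
    "relational": ["Amazon Aurora", "Amazon RDS"],
    "key-value": ["Amazon DynamoDB"],
    "document": ["Amazon DocumentDB", "Amazon DynamoDB"],
    "caching": ["Amazon ElastiCache"],
    "in-memory": ["Amazon MemoryDB", "Amazon ElastiCache"],
    "graph": ["Amazon Neptune"],
    "time-series": ["Amazon Timestream"],
    "wide-column": ["Amazon Keyspaces"],
    # AWS has announced end of support for Amazon QLDB; check its status first.
    "ledger": ["Amazon QLDB"],
}


def candidate_services(data_models: list[str]) -> dict[str, list[str]]:
    """Return candidate services for each requested data model.

    An unknown model raises KeyError, so gaps in the table surface
    immediately instead of being silently skipped.
    """
    return {model: CANDIDATES[model] for model in data_models}


if __name__ == "__main__":
    print(candidate_services(["document", "relational"]))
```

Asking for several models at once mirrors the idea, discussed later in this guide, of covering one application with a mix of best-fit databases.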

We explore all of these in detail in the Choose section of this guide.

Database migration

Before deciding which database service you want to use to work with your data, you should spend time thinking about your business objective, database selection, and how you are going to migrate your existing databases.

The best database migration strategy helps you take full advantage of the AWS Cloud. This might involve migrating your applications to use purpose-built cloud databases. You might simply want the benefits of a fully managed version of your existing database, such as RDS for PostgreSQL or RDS for MySQL. Alternatively, you might want to migrate from commercially licensed databases, such as Oracle or SQL Server, to Amazon Aurora. Consider modernizing your applications and choosing the databases that best suit your applications' workflow requirements.

For example, if you choose to first transition your applications and then transform them, you might decide to re-platform (which makes no changes to the application you use, but lets you take advantage of a fully managed service in the cloud). When you are fully in the AWS Cloud, you can start working to modernize your application. This strategy can help you exit your current on-premises environment quickly, and then focus on modernization.

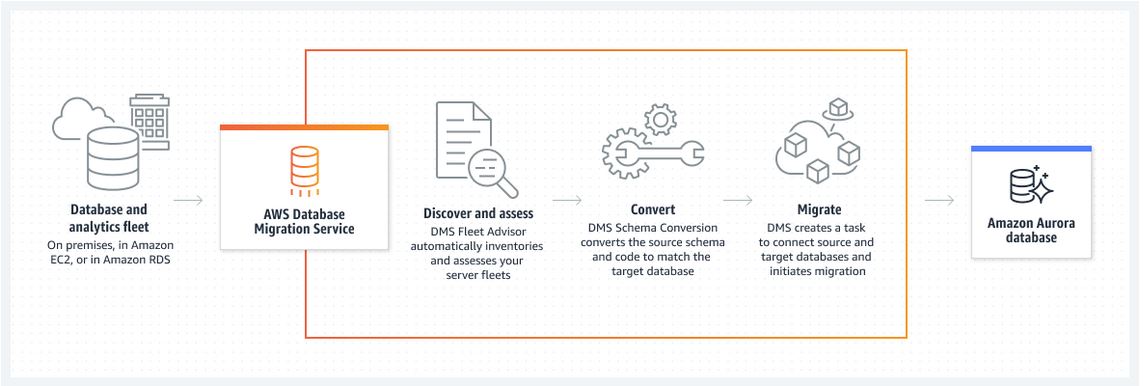

The preceding image shows how AWS Database Migration Service is used to move data to Amazon Aurora. For resources to help with your migration strategy, see the Explore section.

You're considering hosting a database on AWS. This might be to support a greenfield/pilot project as a first step in your cloud migration journey, or you might want to migrate an existing workload with as little disruption as possible. Or perhaps you would like to port your workload to managed AWS services or even refactor it to be fully cloud-native.

Whatever your goal, considering the right questions will make your database decision easier. Here's a summary of the key criteria to consider.

The first major consideration when choosing your database is your business objective. What is the strategic direction driving your organization to change? As suggested in the 7 Rs of AWS, consider whether you want to rehost an existing workload, or refactor to a new platform to shed commercial license commitments.

You can choose a rehosting strategy to deploy to the cloud faster, with fewer data migration headaches. Install your database engine software on Amazon EC2, migrate your data, and manage your database much as you do on-premises. While rehosting is a fast path to the cloud, you are still left with operational tasks such as upgrades, patches, backups, capacity planning and management, and maintaining performance and availability targets.

Alternatively, you can choose a re-platform strategy where you migrate your on-premises relational database to a fully managed Amazon RDS instance.

You may consider this an opportunity to refactor your workload to be cloud-native, making use of Amazon Aurora or purpose-built NoSQL databases such as Amazon DynamoDB, Amazon Neptune, or Amazon DocumentDB.

Finally, AWS offers serverless databases, which can scale to an application's demands with a pay-for-use pricing model and built-in high availability. Serverless databases increase your agility and optimize costs. In addition to not needing to provision, patch, or manage servers, many AWS serverless databases provide zero downtime maintenance.

AWS serverless offerings include Amazon Aurora Serverless, Amazon DynamoDB, Amazon ElastiCache, Amazon Keyspaces, Amazon Timestream, and Amazon Neptune Serverless for graph workloads.

The core of any database choice includes the characteristics of the data that you need to store, retrieve, analyze, and work with. This includes your data model (is it relational, structured or semi-structured, using a highly connected dataset, or time-series?), data access (how do you need to access your data?), the extent to which you need real-time data, and whether there is a particular data record size you have in mind.

Your primary operational considerations are all about where your data is going to live and how it will be managed. The two key choices you need to make are:

Whether it will be self-hosted or fully managed: The core question here is where your team will provide the most value to the business. If your database is self-hosted, you are responsible for its day-to-day maintenance, monitoring, and patching. Choosing a fully managed AWS database simplifies your work by removing undifferentiated database management tasks. This lets your team focus on work that delivers value, such as schema design, query construction, and query optimization, and on supporting the development of applications that align with your business objectives.

Whether you need a serverless or provisioned database: DynamoDB, Amazon Keyspaces, Timestream, ElastiCache, Neptune, and Aurora provide models for how to think about this choice. Amazon Aurora Serverless v2, for example, is suitable for demanding, highly variable workloads. One such workload is database usage that is heavy for a short period and then light, or idle, for long stretches.
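For Aurora Serverless v2, the serverless-versus-provisioned choice appears in two places. The first is a capacity range measured in Aurora capacity units (ACUs). The second is the `db.serverless` instance class. The sketch below only assembles request parameters for the boto3 RDS client and sends nothing to AWS. The identifiers, user name, engine choice, and capacity values are assumptions for illustration.

```python
# Builds keyword arguments for boto3's RDS client. Nothing here calls AWS,
# so the structure can be reviewed or unit-tested before provisioning.


def serverless_v2_cluster_kwargs(cluster_id: str, min_acu: float, max_acu: float) -> dict:
    """Arguments for rds.create_db_cluster with Serverless v2 scaling bounds (in ACUs)."""
    if not 0 <= min_acu <= max_acu:
        raise ValueError("expected 0 <= min_acu <= max_acu")
    return {
        "DBClusterIdentifier": cluster_id,
        "Engine": "aurora-postgresql",
        "MasterUsername": "app_admin",  # hypothetical user name
        "ManageMasterUserPassword": True,  # RDS keeps the password in Secrets Manager
        "ServerlessV2ScalingConfiguration": {"MinCapacity": min_acu, "MaxCapacity": max_acu},
    }


def serverless_v2_instance_kwargs(cluster_id: str, instance_id: str) -> dict:
    """Arguments for rds.create_db_instance; 'db.serverless' makes the instance Serverless v2."""
    return {
        "DBInstanceIdentifier": instance_id,
        "DBClusterIdentifier": cluster_id,
        "DBInstanceClass": "db.serverless",
        "Engine": "aurora-postgresql",
    }
```

To provision resources, you would pass these dictionaries to `boto3.client("rds").create_db_cluster(**...)` and then to `create_db_instance(**...)`. The supported ACU range depends on the engine version, so you may also need to pin an `EngineVersion`.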

Database resiliency is key for any business. Achieving it means paying attention to a number of key factors, including capabilities for backup and restore, replication, failover, and point-in-time recovery (PITR).
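Point-in-time recovery in Amazon RDS restores a database to a new DB instance at any moment inside the automated-backup retention window. The sketch below first checks that a requested time falls inside that window. It then assembles arguments for the boto3 `restore_db_instance_to_point_in_time` call. The instance names are hypothetical. In practice, `latest_restorable` would come from the `LatestRestorableTime` field that `describe_db_instances` returns.

```python
from datetime import datetime, timedelta, timezone


def pitr_restore_kwargs(
    source_id: str,
    target_id: str,
    restore_time: datetime,
    latest_restorable: datetime,
    retention_days: int,
) -> dict:
    """Arguments for rds.restore_db_instance_to_point_in_time.

    The earliest restorable time is approximated as the latest restorable
    time minus the backup retention period; the real bound comes from the
    retained automated backups.
    """
    if restore_time.tzinfo is None:
        raise ValueError("restore_time must be timezone-aware")
    earliest = latest_restorable - timedelta(days=retention_days)
    if not earliest <= restore_time <= latest_restorable:
        raise ValueError(f"restore_time must fall between {earliest} and {latest_restorable}")
    return {
        "SourceDBInstanceIdentifier": source_id,
        "TargetDBInstanceIdentifier": target_id,  # a PITR restore creates a new instance
        "RestoreTime": restore_time.astimezone(timezone.utc),
    }
```

Validating the window locally gives a clearer error than a rejected API call during an incident.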

Consider whether your database will need to support a high concurrency of transactions (10,000 or more) and whether it needs to be deployed in multiple geographic regions.

If your workload requires extremely high read performance with a response time measured in microseconds rather than single-digit milliseconds, you might want to consider using in-memory caching solutions such as Amazon ElastiCache alongside your database, or a database that supports in-memory data access, such as Amazon MemoryDB.
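A common way to pair a cache with a database is the cache-aside (lazy loading) pattern. The application reads from the cache first. On a miss, it reads from the database and populates the cache with a time-to-live (TTL). The Python sketch below uses a small in-process `TTLCache` class as a stand-in; with ElastiCache you would use a Redis OSS, Valkey, or Memcached client instead. `TTLCache` and `get_product` are hypothetical names.

```python
import time
from typing import Any, Callable, Optional


class TTLCache:
    """In-process stand-in for a cache cluster, with per-entry expiry."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict and report a miss
            return None
        return value

    def set(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def get_product(product_id: int, cache: TTLCache, load_from_db: Callable[[int], Any]) -> Any:
    """Cache-aside read: serve from cache, otherwise load from the database and populate."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(key, value)
    return value
```

The TTL bounds how stale a cached entry can become. Writes to the database would typically also delete or overwrite the matching cache key.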

Security is a shared responsibility between AWS and you. The AWS shared responsibility model describes this as security of the cloud managed by AWS, and security in the cloud managed by the customer. Specific security considerations include data protection at all levels of your data, authentication, compliance, data security, storage of sensitive data and support for auditing requirements.

Now that you know the criteria by which you are evaluating your database options, you are ready to choose which AWS database services might be a good fit for your organizational requirements.

This table highlights the type of data each database is optimized to handle. Use it to help determine the database that is the best fit for your use case.

Just as there is no single database that can satisfy every use case equally well, any particular database type discussed above may not meet all of your requirements perfectly.

Consider your needs and workload requirements carefully, and prioritize them based on the considerations covered above. Separate the requirements you must meet to the highest standard, the ones where you have some flexibility, and the ones you can do without. This forms a system of values that helps you make effective trade-offs and reach the best possible outcome for your unique circumstances.

Also consider that you will usually cover your application requirements with a mix of best-fit databases. Building a solution with multiple database types lets you lean on each one for the strengths it provides.

For example, in an e-commerce use case, you might use DocumentDB or DynamoDB for product catalogs and user profiles, leaning on the flexibility of semi-structured data. With DynamoDB, you also gain low, predictable latency while your users browse the product catalog. You might also use Aurora for inventory and order processing, where a relational data model and transaction support may be more valuable to you.

To help you learn more about each of the available AWS database services, we have provided a pathway to explore how each of the services works. The following section provides links to in-depth documentation, hands-on tutorials, and resources to get you started.


Real-world Case Studies: How Organizations Solved Data Integration Challenges with AWS ETL Tools

Introduction to the importance of data integration and the challenges organizations face in achieving it.

In today's data-driven world, organizations are constantly faced with the challenge of integrating vast amounts of data from various sources. Data integration plays a crucial role in enabling businesses to make informed decisions and gain valuable insights. However, achieving seamless data integration can be a complex and time-consuming process. That's where AWS ETL tools come into play. With their cost-effective, scalable, and streamlined solutions, these tools have proven to be game-changers for organizations facing data integration challenges. In this blog post, we will explore real-world case studies that demonstrate how organizations have successfully solved their data integration challenges using AWS ETL tools. So, let's dive in and discover the power of these tools in overcoming data integration hurdles.

Overview of AWS ETL Tools

Introduction to AWS ETL tools.

In today's data-driven world, organizations are faced with the challenge of integrating and analyzing vast amounts of data from various sources. This is where Extract, Transform, Load (ETL) tools come into play. ETL tools are essential for data integration as they enable the extraction of data from multiple sources, transform it into a consistent format, and load it into a target system or database.
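The three stages can be illustrated without any AWS service. In the following Python sketch, `extract` reads CSV text. `transform` coerces types and standardizes currency codes. `load` writes the result into an in-memory SQLite table that stands in for a target database. The data and table are invented for illustration.

```python
import csv
import io
import sqlite3

RAW_CSV = """order_id,amount,currency
1, 19.99 ,usd
2,5.00,EUR
"""


def extract(text: str) -> list[dict]:
    """Extract: parse raw CSV into dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim whitespace, cast types, upper-case currency codes."""
    return [
        (int(r["order_id"]), float(r["amount"].strip()), r["currency"].strip().upper())
        for r in rows
    ]


def load(records: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract(RAW_CSV)), conn)
    print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Run as a script, this prints `[(1, 19.99, 'USD'), (2, 5.0, 'EUR')]`. Managed ETL services apply the same three stages at much larger scale.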

Amazon Web Services (AWS) offers a range of powerful ETL tools that help organizations tackle their data integration challenges effectively. These tools provide scalable and cost-effective solutions for managing and processing large volumes of data. Let's take a closer look at some of the key AWS ETL tools.

AWS Glue: Simplifying Data Integration

AWS Glue is a fully managed extract, transform, and load (ETL) service that makes it easy to prepare and load data for analytics. It provides a serverless environment for running ETL jobs on large datasets stored in Amazon S3 or in databases. With AWS Glue, you can discover, catalog, and transform your data quickly.

One of the key features of AWS Glue is its ability to generate ETL code automatically from your source and target schemas. This reduces the need for hand-written code and can shorten development time significantly. AWS Glue also supports common data formats such as CSV, JSON, and Parquet, making it compatible with many types of data sources.
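In AWS Glue, schema discovery and cataloging are usually done with a crawler. The following sketch assembles arguments for the `create_crawler` call on the boto3 Glue client. The crawler name, IAM role, catalog database, and S3 path are placeholders, and no request is sent.

```python
def crawler_kwargs(name: str, role: str, database: str, s3_path: str) -> dict:
    """Arguments for glue.create_crawler that catalog one S3 prefix."""
    if not s3_path.startswith("s3://"):
        raise ValueError("s3_path must be an s3:// URI")
    return {
        "Name": name,
        "Role": role,  # IAM role name or ARN that the crawler assumes
        "DatabaseName": database,  # Data Catalog database that receives the tables
        "Targets": {"S3Targets": [{"Path": s3_path}]},
        # Update table definitions in place when the schema changes;
        # only log (rather than delete) tables whose data disappears.
        "SchemaChangePolicy": {
            "UpdateBehavior": "UPDATE_IN_DATABASE",
            "DeleteBehavior": "LOG",
        },
    }
```

With credentials configured, you would call `boto3.client("glue").create_crawler(**crawler_kwargs(...))` and then `start_crawler(Name=...)`. After a successful run, the inferred tables appear in the Data Catalog, where ETL jobs can reference them.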

AWS Data Pipeline: Orchestrating Data Workflows

AWS Data Pipeline is another powerful tool offered by AWS for orchestrating and automating the movement and transformation of data between different services. It allows you to define complex workflows using a visual interface or JSON templates.

With AWS Data Pipeline, you can schedule regular data transfers between AWS services such as Amazon S3, Amazon RDS, and Amazon Redshift without writing custom code. It also provides built-in fault tolerance and error handling, which improves the reliability of your data workflows.

AWS Database Migration Service: Seamlessly Migrating Data

Migrating data from legacy systems to the cloud can be a complex and time-consuming process. AWS Database Migration Service simplifies this task by providing a fully managed service for migrating databases to AWS quickly and securely.

Whether you are migrating from on-premises databases or other cloud platforms, AWS Database Migration Service supports a wide range of source and target databases, including Oracle, MySQL, PostgreSQL, and Amazon Aurora. It performs a full load of existing data followed by ongoing replication through change data capture (CDC), which minimizes downtime during the migration. For heterogeneous migrations, schema conversion is handled by the AWS Schema Conversion Tool or DMS Schema Conversion.
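In AWS DMS, a migration with a full load followed by ongoing replication is defined as a replication task. The task's table mappings select what to migrate. The sketch below builds arguments for `create_replication_task` on the boto3 DMS client. The endpoint and replication-instance ARNs and the schema name are placeholders. You would take the real values from resources you have already created.

```python
import json


def replication_task_kwargs(task_id: str, source_arn: str, target_arn: str,
                            instance_arn: str, schema: str) -> dict:
    """Arguments for dms.create_replication_task covering every table in one schema."""
    table_mappings = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",
                "rule-name": "include-schema",
                "object-locator": {"schema-name": schema, "table-name": "%"},  # % = all tables
                "rule-action": "include",
            }
        ]
    }
    return {
        "ReplicationTaskIdentifier": task_id,
        "SourceEndpointArn": source_arn,
        "TargetEndpointArn": target_arn,
        "ReplicationInstanceArn": instance_arn,
        # Copy existing rows, then keep applying changes until cutover.
        "MigrationType": "full-load-and-cdc",
        "TableMappings": json.dumps(table_mappings),  # DMS expects a JSON string
    }
```

The other accepted migration types are `full-load` and `cdc`. `full-load-and-cdc` is the option that keeps the target in sync while applications still write to the source.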

Tapdata: A Modern Data Integration Solution

While AWS ETL tools offer robust capabilities for data integration, there are also third-party solutions that complement these tools and provide additional features. One such solution is Tapdata.

Tapdata is a modern data integration platform that offers real-time data capture and synchronization capabilities. It allows organizations to capture data from various sources in real-time and keep it synchronized across different systems. This ensures that businesses have access to up-to-date information for their analytics and decision-making processes.

One of the key advantages of Tapdata is its flexible and adaptive schema. It can handle structured, semi-structured, and unstructured data efficiently, making it suitable for diverse use cases. Additionally, Tapdata offers a low code/no code pipeline development and transformation environment, enabling users to build complex data pipelines without extensive coding knowledge.

Tapdata is trusted by industry leaders across various sectors such as e-commerce, finance, healthcare, and more. It offers a free-forever tier for users to get started with basic data integration needs. By combining the power of Tapdata with AWS ETL tools like Glue or Data Pipeline, organizations can enhance their data integration capabilities significantly.

Common Data Integration Challenges

Overview of data integration challenges.

Data integration is a critical process for organizations that need to combine and unify data from various sources to gain valuable insights and make informed business decisions. However, this process comes with its own set of challenges that organizations must overcome to ensure successful data integration. In this section, we will discuss some common data integration challenges faced by organizations and explore potential solutions.

One of the most prevalent challenges in data integration is dealing with data silos. Data silos occur when different departments or systems within an organization store their data in separate repositories, making it difficult to access and integrate the information effectively. This can lead to fragmented insights and hinder the ability to make accurate decisions based on a holistic view of the data.

To overcome this challenge, organizations can implement a centralized data storage solution that consolidates all relevant data into a single repository. AWS offers various tools like AWS Glue and AWS Data Pipeline that enable seamless extraction, transformation, and loading (ETL) processes to bring together disparate datasets from different sources. By breaking down these silos and creating a unified view of the data, organizations can enhance collaboration across departments and improve decision-making capabilities.

Disparate Data Formats

Another common challenge in data integration is dealing with disparate data formats. Different systems often use different formats for storing and representing data, making it challenging to merge them seamlessly. For example, one system may use CSV files while another uses JSON or XML files.

AWS provides ETL tools such as AWS Glue that support multiple file formats and provide built-in connectors for popular data stores such as Amazon Redshift, Amazon RDS, and Amazon S3. These tools can automatically detect the schema of different datasets and transform them into a consistent format for easier integration. AWS Glue also supports custom transformations written in Python or Scala, so organizations can handle complex format conversions.
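To show what "transform them into a consistent format" means in practice, here is a plain-Python sketch (not the AWS Glue API). It maps a CSV export and a JSON Lines export with different field names onto one record shape. The field names `id`, `customerId`, and `contact.email` are invented for the example.

```python
import csv
import io
import json
from typing import Iterator


def from_csv(text: str) -> Iterator[dict]:
    """CSV source with columns id,email."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"customer_id": row["id"], "email": row["email"]}


def from_json_lines(text: str) -> Iterator[dict]:
    """JSON Lines source with a numeric customerId and a nested contact object."""
    for line in text.splitlines():
        if line.strip():
            obj = json.loads(line)
            yield {"customer_id": str(obj["customerId"]), "email": obj["contact"]["email"]}


def normalize(record: dict) -> dict:
    """Common format: trimmed string IDs and lower-cased emails."""
    return {
        "customer_id": record["customer_id"].strip(),
        "email": record["email"].strip().lower(),
    }
```

Each source gets its own small adapter, and every adapter feeds the same `normalize` step. Adding a third format then means writing one more adapter.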

Complex Data Transformation Requirements

Organizations often face complex data transformation requirements when integrating data from multiple sources. Data may need to be cleansed, standardized, or enriched before it can be effectively integrated and analyzed. This process can involve tasks such as deduplication, data validation, and data enrichment.

AWS Glue provides a visual interface for creating ETL jobs, which simplifies transforming and preparing data for integration. It offers a wide range of built-in transformations and functions that organizations can use to clean, validate, and enrich their data. Because AWS Glue runs serverless, organizations can scale their data integration processes with demand without managing infrastructure.
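The cleansing, validation, deduplication, and enrichment steps described above can be sketched in plain Python. This is not Glue's built-in transforms. The email pattern is deliberately loose, and the `REGION_BY_COUNTRY` lookup and field names are hypothetical. Rejected rows are kept with a reason so that they can be audited rather than silently dropped.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately loose format check
REGION_BY_COUNTRY = {"DE": "EMEA", "FR": "EMEA", "US": "AMER", "JP": "APAC"}  # hypothetical


def prepare(rows: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Cleanse, validate, deduplicate, and enrich customer rows.

    Returns (valid_rows, rejected_rows_with_reason).
    """
    valid: list[dict] = []
    rejected: list[tuple[dict, str]] = []
    seen: set[str] = set()
    for row in rows:
        email = (row.get("email") or "").strip().lower()  # cleanse
        country = (row.get("country") or "").strip().upper()
        if not EMAIL_RE.match(email):  # validate
            rejected.append((row, "invalid email"))
            continue
        if email in seen:  # deduplicate on the cleansed key
            rejected.append((row, "duplicate"))
            continue
        seen.add(email)
        valid.append({
            "email": email,
            "country": country,
            "region": REGION_BY_COUNTRY.get(country, "UNKNOWN"),  # enrich
        })
    return valid, rejected
```

Deduplicating after cleansing matters: `" A@x.com "` and `"a@x.com"` are only recognized as the same customer once both are trimmed and lower-cased.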

Data Security and Compliance

Data security and compliance are critical considerations in any data integration project. Organizations must ensure that sensitive information is protected throughout the integration process and comply with relevant regulations such as GDPR or HIPAA.

AWS provides robust security features and compliance certifications to address these concerns. AWS Glue supports encryption at rest and in transit to protect sensitive data during storage and transfer. Additionally, AWS services like AWS Identity and Access Management (IAM) enable organizations to manage user access control effectively.
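Access control for an ETL job is usually expressed as an IAM policy that grants only what the job needs. The sketch below builds a least-privilege policy document that lets a job read one S3 prefix and decrypt with one AWS KMS key. The bucket, prefix, and key ARN are placeholders. A real Glue job role needs further permissions that are not shown here, for example for logging and the Data Catalog.

```python
import json


def glue_job_read_policy(bucket: str, prefix: str, kms_key_arn: str) -> dict:
    """Least-privilege IAM policy: read one S3 prefix and decrypt with one KMS key."""
    prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadSourcePrefix",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}/*"],
            },
            {
                "Sid": "ListSourcePrefix",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
                # Listing is limited to keys under the same prefix.
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
            {
                "Sid": "DecryptWithDataKey",
                "Effect": "Allow",
                "Action": ["kms:Decrypt"],
                "Resource": [kms_key_arn],
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(glue_job_read_policy("example-bucket", "raw/sales",
                                          "arn:aws:kms:us-east-1:123456789012:key/example"), indent=2))
```

The JSON output is what you would pass as `PolicyDocument` to `boto3.client("iam").create_policy`. Because access is scoped to a single prefix and key, a compromised job cannot read the rest of the bucket.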

Case Study 1: How Company X Leveraged AWS Glue for Data Integration

Overview of Company X's data integration challenge.

Company X, a leading global organization in the retail industry, faced significant challenges when it came to data integration. With operations spread across multiple regions and numerous systems generating vast amounts of data, they struggled to consolidate and harmonize information from various sources. This resulted in data silos, inconsistencies, and poor data quality.

The primary goal for Company X was to streamline their data integration processes and improve the overall quality of their data. They needed a solution that could automate the transformation of raw data into a usable format while ensuring accuracy and reliability.

Solution: Leveraging AWS Glue

To address their data integration challenges, Company X turned to AWS Glue, a fully managed extract, transform, and load (ETL) service offered by Amazon Web Services (AWS). AWS Glue provided them with a scalable and cost-effective solution for automating their data transformation processes.

By leveraging AWS Glue's powerful capabilities, Company X was able to build an end-to-end ETL pipeline that extracted data from various sources, transformed it according to predefined business rules, and loaded it into a centralized data warehouse. The service offered pre-built connectors for popular databases and file formats, making it easy for Company X to integrate their diverse range of systems.

One key advantage of using AWS Glue was its ability to automatically discover the schema of the source data. This eliminated the need for manual intervention in defining the structure of each dataset. Additionally, AWS Glue provided visual tools for creating and managing ETL jobs, simplifying the development process for Company X's technical team.
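Glue crawlers infer schemas using built-in classifiers. As a much-simplified analogue (not the crawler's actual algorithm), the following sketch assigns each column the narrowest of three types that fits every sampled value. It assumes values arrive as strings, as they do from CSV. The type names mirror ones used in the AWS Glue Data Catalog, but the function names and sample rows are hypothetical.

```python
from typing import Iterable


def infer_type(values: Iterable) -> str:
    """Return 'bigint', 'double', or 'string': the narrowest type fitting all values."""
    present = [v for v in values if v not in ("", None)]
    if not present:
        return "string"  # nothing to go on; fall back to the most permissive type
    for type_name, cast in (("bigint", int), ("double", float)):
        try:
            for v in present:
                cast(v)
        except (ValueError, TypeError):
            continue  # at least one value does not fit; try a wider type
        return type_name
    return "string"


def infer_schema(rows: list[dict]) -> dict[str, str]:
    """Infer a type for every column seen in any row, in first-seen order."""
    columns = list(dict.fromkeys(key for row in rows for key in row))
    return {col: infer_type(row.get(col) for row in rows) for col in columns}
```

Trying types from narrowest to widest means a single non-numeric value is enough to widen a column to `string`. Real crawlers handle that same ambiguity with sampling and classifier rules.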

Another significant benefit of using AWS Glue was its serverless architecture. Company X did not have to provision or manage infrastructure. The service scaled up or down with demand, maintaining performance without additional operational effort.
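In AWS Glue, demand-based scaling can be switched on for each job. The sketch below assembles arguments for `create_job` on the boto3 Glue client. It enables Glue Auto Scaling through the `--enable-auto-scaling` job parameter; with auto scaling on, `NumberOfWorkers` acts as an upper bound rather than a fixed size. The job name, role, script location, Glue version, and worker settings are assumptions for illustration.

```python
def glue_job_kwargs(name: str, role: str, script_s3_path: str, max_workers: int) -> dict:
    """Arguments for glue.create_job with Glue Auto Scaling enabled."""
    if max_workers < 1:
        raise ValueError("max_workers must be positive")
    return {
        "Name": name,
        "Role": role,
        "Command": {"Name": "glueetl", "ScriptLocation": script_s3_path, "PythonVersion": "3"},
        "GlueVersion": "4.0",  # auto scaling requires Glue 3.0 or later
        "WorkerType": "G.1X",
        # With auto scaling on, this is the maximum number of workers.
        "NumberOfWorkers": max_workers,
        "DefaultArguments": {"--enable-auto-scaling": "true"},
    }
```

You would pass the result to `boto3.client("glue").create_job(**...)` and start runs with `start_job_run(JobName=...)`. Glue then adds or removes workers within the cap as the job's stages demand.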

Results and Benefits

The implementation of AWS Glue brought about several positive outcomes and benefits for Company X. Firstly, the automation of data transformation processes significantly improved efficiency. What used to take days or even weeks to complete manually now happened in a matter of hours. This allowed Company X to make faster and more informed business decisions based on up-to-date data.

Furthermore, by eliminating manual intervention, AWS Glue reduced the risk of human errors and ensured data accuracy. The predefined business rules applied during the transformation process standardized the data across different sources, resulting in improved data quality.

In terms of cost savings, AWS Glue proved to be highly cost-effective for Company X. The pay-as-you-go pricing model meant that they only paid for the resources they consumed during ETL jobs. Compared to building and maintaining a custom ETL solution or using traditional ETL tools, AWS Glue offered significant cost advantages.
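The pay-as-you-go arithmetic is simple to sketch. AWS Glue ETL jobs are billed in data processing unit hours (DPU-hours), and a G.1X worker corresponds to 1 DPU. For Glue 2.0 and later, billing is per second with a one-minute minimum. The rate is a parameter because it varies by Region. The $0.44 figure below is a placeholder to replace with current published pricing, not a quote.

```python
def glue_job_cost(dpus: float, runtime_seconds: float, rate_per_dpu_hour: float,
                  minimum_seconds: float = 60) -> float:
    """Estimated cost of one job run: DPUs x billed hours x hourly rate."""
    billed_seconds = max(runtime_seconds, minimum_seconds)  # one-minute minimum
    return dpus * billed_seconds / 3600 * rate_per_dpu_hour


if __name__ == "__main__":
    # 10 DPUs for 15 minutes at a placeholder $0.44 per DPU-hour -> about $1.10
    print(round(glue_job_cost(10, 15 * 60, 0.44), 2))
```

A short job still pays for a full minute, so many very small runs can cost more than their runtime suggests.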

Additionally, AWS Glue's scalability allowed Company X to handle increasing volumes of data without any performance degradation. As their business grew and new systems were added, AWS Glue seamlessly accommodated the additional workload without requiring any manual intervention or infrastructure upgrades.

In summary, by leveraging AWS Glue for their data integration needs, Company X successfully overcame their challenges related to consolidating and improving the quality of their data. The automation provided by AWS Glue not only enhanced efficiency but also ensured accuracy and reliability. With significant cost savings and scalability benefits, AWS Glue emerged as an ideal solution for Company X's data integration requirements.

Overall, this case study demonstrates how organizations can leverage AWS ETL tools like AWS Glue to solve complex data integration challenges effectively. By adopting such solutions, businesses can streamline their processes, improve data quality, and drive better decision-making capabilities while optimizing costs and ensuring scalability.

Case Study 2: Overcoming Data Silos with AWS Data Pipeline

Overview of data silos challenge.

Data silos are a common challenge faced by organizations when it comes to data integration. These silos occur when data is stored in separate systems or databases that are not easily accessible or compatible with each other. This can lead to inefficiencies, duplication of efforts, and limited visibility into the organization's data.

For example, Company Y was struggling with data silos as their customer information was scattered across multiple systems and databases. The marketing team had their own CRM system, while the sales team used a different database to store customer details. This fragmentation made it difficult for the organization to have a holistic view of their customers and hindered effective decision-making.

To overcome this challenge, Company Y needed a solution that could seamlessly move and transform data across different systems and databases, breaking down the barriers created by data silos.

Solution: Utilizing AWS Data Pipeline

Company Y turned to AWS Data Pipeline to address their data integration challenges. AWS Data Pipeline is a web service that allows organizations to orchestrate the movement and transformation of data between different AWS services and on-premises data sources.

By leveraging AWS Data Pipeline, Company Y was able to create workflows that automated the movement of customer data from various sources into a centralized repository. This allowed them to consolidate their customer information and eliminate the need for manual intervention in transferring data between systems.

One key advantage of using AWS Data Pipeline was its scalability and flexibility. As Company Y's business requirements evolved, they were able to easily modify their workflows within AWS Data Pipeline without disrupting existing processes. This adaptability ensured that they could keep up with changing demands and continue improving their data integration capabilities.

The implementation of AWS Data Pipeline brought about several positive outcomes for Company Y:

Improved Data Accessibility: With all customer information consolidated in one central repository, employees across different departments had easy access to accurate and up-to-date customer data. This enhanced data accessibility enabled better collaboration and decision-making within the organization.

Reduced Data Duplication: Prior to implementing AWS Data Pipeline, Company Y had multiple instances of customer data stored in different systems. This duplication not only wasted storage space but also increased the risk of inconsistencies and errors. By centralizing their data using AWS Data Pipeline, Company Y was able to eliminate data duplication and ensure a single source of truth for customer information.

Enhanced Data Integration Across Systems: AWS Data Pipeline facilitated seamless integration between various systems and databases within Company Y's infrastructure. This allowed them to break down the barriers created by data silos and establish a unified view of their customers. As a result, they were able to gain valuable insights into customer behavior, preferences, and trends, enabling more targeted marketing campaigns and personalized customer experiences.
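The consolidation that gave Company Y a single source of truth can be sketched as a merge keyed on a normalized email address. In this hypothetical Python example, each record carries an ISO 8601 `updated_at` string. When systems disagree, the most recently updated non-empty value wins, field by field. Keying on email and this tie-breaking rule are assumptions, not details from the case study.

```python
def merge_customers(*sources: list[dict]) -> dict[str, dict]:
    """Merge customer records from several systems into one record per email.

    Each record needs 'email' and an ISO 8601 'updated_at' string in one
    consistent format (so string comparison orders them chronologically).
    For every other field, the newest non-empty value wins.
    """
    merged: dict[str, dict] = {}
    field_times: dict[str, dict[str, str]] = {}
    for source in sources:
        for rec in source:
            key = rec["email"].strip().lower()
            current = merged.setdefault(key, {"email": key})
            times = field_times.setdefault(key, {})
            for field_name, value in rec.items():
                if field_name in ("email", "updated_at") or value in (None, ""):
                    continue  # key fields are handled above; blanks never overwrite data
                if field_name not in times or rec["updated_at"] > times[field_name]:
                    current[field_name] = value
                    times[field_name] = rec["updated_at"]
    return merged
```

Because the rule depends only on timestamps, the result is the same no matter which system is read first. That property keeps the consolidated view stable as new sources are added.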

Case Study 3: Migrating Legacy Data with AWS Database Migration Service

Overview of legacy data migration challenge.

Migrating legacy data from on-premises databases to the cloud can be a complex and challenging task for organizations. The organization in this case study needed to migrate large volumes of legacy data to the AWS Cloud. The organization had accumulated a vast amount of data over the years, stored in various on-premises databases. However, with the increasing need for scalability, improved accessibility, and reduced maintenance costs, they decided to migrate their legacy data to AWS.

The main challenge they encountered was the sheer volume of data that needed to be migrated. It was crucial for them to ensure minimal downtime during the migration process and maintain data integrity throughout. They needed a reliable solution that could handle the migration efficiently while minimizing any potential disruptions to their operations.
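AWS DMS includes a data validation option for replication tasks. The underlying idea, comparing source and target after a load, is easy to see in a small sketch. The following Python compares row counts and a SHA-256 digest of key-ordered rows. Two in-memory SQLite databases stand in for the legacy source and the AWS target, and the table and column names are hypothetical. Comparing different database engines this way would also need type normalization, because the same value can come back as different Python types.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Row count plus a SHA-256 digest of all rows, read in key order."""
    # Identifiers are interpolated into SQL, so pass only trusted table/column names.
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY {key}").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()


def tables_match(source: sqlite3.Connection, target: sqlite3.Connection,
                 table: str, key: str) -> bool:
    """True when both sides have the same rows in the same key order."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)


if __name__ == "__main__":
    source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
    for conn in (source, target):
        conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
        conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ana"), (2, "Bo")])
    print(tables_match(source, target, "customers", "id"))  # True
    target.execute("UPDATE customers SET name = 'Bob' WHERE id = 2")
    print(tables_match(source, target, "customers", "id"))  # False
```

A count check alone would miss the changed name in this example, which is why the sketch adds a content digest.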

Solution: Leveraging AWS Database Migration Service

To address this challenge, the organization turned to AWS Database Migration Service (DMS). AWS DMS is a fully managed service that enables seamless and secure migration of databases to AWS with minimal downtime. It supports both homogeneous and heterogeneous migrations, making it an ideal choice for organizations with diverse database environments.

The organization used AWS DMS to migrate their legacy data from on-premises databases to the AWS Cloud. They took advantage of schema conversion, for moving between different database engines, and continuous replication, to keep the target in sync until cutover. This allowed them to migrate their data efficiently while ensuring compatibility between different database engines.

One key advantage of using AWS DMS was its seamless integration with existing AWS infrastructure. The organization already had an established AWS environment, including Amazon S3 for storage and Amazon Redshift for analytics. With AWS DMS, they were able to easily integrate their migrated data into these existing services without any major disruptions or additional configuration.

The implementation of AWS DMS brought about several positive outcomes and benefits for the organization. Firstly, it significantly improved data accessibility. By migrating their legacy data to the AWS Cloud, the organization was able to centralize and consolidate their data in a scalable and easily accessible environment. This allowed their teams to access and analyze the data more efficiently, leading to better decision-making processes.

Additionally, the migration to the AWS Cloud reduced maintenance costs for the organization. With on-premises databases, they had to invest significant resources in hardware maintenance, software updates, and security measures. By migrating to AWS, they were able to offload these responsibilities to AWS managed services, reducing their overall maintenance costs.

Furthermore, the scalability of the AWS environment they migrated to allowed the organization to handle future growth. As their data continued to expand, they could scale up storage capacity and computing power without disruptions or additional investments in on-premises infrastructure.

Best Practices for Data Integration with AWS ETL Tools

Data governance and quality assurance.

Data governance and quality assurance are crucial aspects of data integration with AWS ETL tools. Implementing effective data governance policies and ensuring data quality assurance can significantly enhance the success of data integration projects. Here are some practical tips and best practices for organizations looking to leverage AWS ETL tools for data integration:

Establish clear data governance policies: Define clear guidelines and processes for managing data across the organization. This includes defining roles and responsibilities, establishing data ownership, and implementing data access controls.

Ensure data accuracy and consistency: Perform regular checks to ensure the accuracy and consistency of the integrated data. This can be achieved by implementing automated validation processes, conducting periodic audits, and resolving any identified issues promptly.

Implement metadata management: Metadata provides valuable information about the integrated datasets, such as their source, structure, and transformations applied. Implementing a robust metadata management system helps in understanding the lineage of the integrated data and facilitates easier troubleshooting.

Maintain data lineage: Establish mechanisms to track the origin of each piece of integrated data throughout its lifecycle. This helps in maintaining transparency, ensuring compliance with regulations, and facilitating traceability during troubleshooting or auditing processes.

Enforce security measures: Implement appropriate security measures to protect sensitive or confidential information during the integration process. This includes encrypting data at rest and in transit, implementing access controls based on user roles, and regularly monitoring access logs for any suspicious activities.

Perform regular backups: Regularly back up integrated datasets to prevent loss of critical information due to hardware failures or accidental deletions. Implement automated backup processes that store backups in secure locations with proper version control.
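Automated accuracy and consistency checks can start small. The following sketch shows two checks that might run after each load: per-row validation (required fields present, no duplicate keys) and a row-count reconciliation between source and target. The column names and the in-memory list of dicts are hypothetical stand-ins; in practice the rows would come from your ETL job or a query against the target store.

```python
from dataclasses import dataclass, field


@dataclass
class ValidationReport:
    """Collects the problems found in one batch of integrated rows."""
    row_count: int = 0
    errors: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.errors


def validate_rows(rows: list[dict], required: list[str], unique_key: str) -> ValidationReport:
    """Flag rows with missing required values or duplicate unique keys."""
    report = ValidationReport(row_count=len(rows))
    seen_keys = set()
    for index, row in enumerate(rows):
        for column in required:
            if row.get(column) in (None, ""):
                report.errors.append(f"row {index}: missing '{column}'")
        key = row.get(unique_key)
        if key in seen_keys:
            report.errors.append(f"row {index}: duplicate {unique_key}={key!r}")
        seen_keys.add(key)
    return report


def counts_reconcile(source_count: int, target_count: int) -> bool:
    """A load is complete only if the target received every source row."""
    return source_count == target_count


# Hypothetical batch: row 1 is missing an amount, row 2 repeats order_id 1.
batch = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 1, "amount": 5.0},
]
report = validate_rows(batch, required=["order_id", "amount"], unique_key="order_id")
print(report.passed, report.errors)
```

A failing report could be used to stop a load from being promoted to downstream consumers until the issues are resolved.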

Scalability Considerations

Scalability is a key consideration when designing data integration processes with AWS ETL tools. By leveraging serverless architectures and auto-scaling capabilities offered by AWS services, organizations can ensure that their integration workflows can handle increasing data volumes and processing demands. Here are some important scalability considerations for data integration with AWS ETL tools:

Utilize serverless architectures: AWS offers serverless services like AWS Lambda, which allow organizations to run code without provisioning or managing servers. Because serverless services scale automatically with the incoming data volume, organizations can match their integration workflows to demand and use resources efficiently.

Leverage auto-scaling capabilities: AWS provides automatic scaling for various services, such as Amazon EC2 Auto Scaling and Amazon Redshift concurrency scaling. These capabilities adjust resource capacity automatically as workloads fluctuate. By configuring scaling policies, organizations can ensure that their integration processes handle peak loads without manual intervention.

Optimize data transfer: When integrating large volumes of data, optimize the transfer process to minimize latency and maximize throughput. Services like Amazon S3 Transfer Acceleration or AWS Direct Connect can improve data transfer speeds and reduce network latency.

Design fault-tolerant workflows: Plan for potential failures by designing workflows that handle errors gracefully and resume processing from the point of failure. Services such as AWS Step Functions (with its built-in retry and error handling) or Amazon Simple Queue Service (SQS) can help you build resilient workflows that recover from failures automatically.

Monitor performance and resource utilization: Regularly monitor the performance of your integration workflows and track resource utilization metrics using Amazon CloudWatch or third-party monitoring tools. This helps you identify bottlenecks, optimize resource allocation, and scale based on actual usage patterns.

Consider multi-Region deployments: For high availability and disaster recovery, consider deploying your integration workflows across multiple AWS Regions, so that if one Region experiences an outage, the integration processes can continue in another.
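As one illustration of a fault-tolerant workflow, the sketch below builds an AWS Step Functions state machine definition (Amazon States Language) in Python. It runs a Glue job, retries failures with exponential backoff, and routes to an explicit failure state once retries are exhausted. The job name `nightly-orders-etl` and the retry settings are hypothetical choices rather than recommendations; the script only builds and sanity-checks the JSON and does not call AWS.

```python
import json


def build_etl_state_machine(glue_job_name: str) -> dict:
    """Amazon States Language definition: run a Glue job with retries."""
    return {
        "Comment": "Run a Glue ETL job with retries and an explicit failure state",
        "StartAt": "RunGlueJob",
        "States": {
            "RunGlueJob": {
                "Type": "Task",
                # Step Functions' Glue integration; ".sync" waits for the job run to finish.
                "Resource": "arn:aws:states:::glue:startJobRun.sync",
                "Parameters": {"JobName": glue_job_name},
                # Waits 30 s, 60 s, then 120 s before the three retry attempts.
                "Retry": [
                    {
                        "ErrorEquals": ["States.ALL"],
                        "IntervalSeconds": 30,
                        "MaxAttempts": 3,
                        "BackoffRate": 2.0,
                    }
                ],
                # Once retries are exhausted, hand the error to the failure state.
                "Catch": [
                    {
                        "ErrorEquals": ["States.ALL"],
                        "ResultPath": "$.error",
                        "Next": "EtlFailed",
                    }
                ],
                "End": True,
            },
            "EtlFailed": {
                "Type": "Fail",
                "Error": "EtlJobFailed",
                "Cause": "Glue job failed after all retries",
            },
        },
    }


def transition_targets(definition: dict) -> list[str]:
    """List every state name referenced by Next fields, including Catch handlers."""
    targets = []
    for state in definition["States"].values():
        if "Next" in state:
            targets.append(state["Next"])
        targets.extend(catcher["Next"] for catcher in state.get("Catch", []))
    return targets


definition = build_etl_state_machine("nightly-orders-etl")  # hypothetical job name
assert all(t in definition["States"] for t in transition_targets(definition))
print(json.dumps(definition, indent=2))
```

Retries restart the whole job; resuming from the exact point of failure inside the job still depends on the job itself (for Glue, job bookmarks track already-processed data for supported sources).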

By following these best practices for data governance, quality assurance, and scalability considerations when using AWS ETL tools, organizations can ensure successful and efficient data integration processes. These practices not only enhance the reliability and accuracy of integrated data but also enable organizations to scale their integration workflows as their data volumes and processing demands grow.

Cost Optimization Strategies

Resource Allocation and Optimization

When it comes to using AWS ETL tools, cost optimization is a crucial aspect that organizations need to consider. By implementing effective strategies, businesses can ensure that they are making the most out of their resources while minimizing unnecessary expenses. In this section, we will discuss some cost optimization strategies when using AWS ETL tools, including resource allocation and leveraging cost-effective storage options.

Optimizing Resource Allocation

One of the key factors in cost optimization is optimizing resource allocation. AWS provides various ETL tools that allow organizations to scale their resources based on their specific needs. By carefully analyzing the data integration requirements and workload patterns, businesses can allocate resources efficiently, avoiding overprovisioning or underutilization.

To optimize resource allocation, it is essential to monitor the performance of ETL jobs regularly. AWS offers monitoring and logging services such as Amazon CloudWatch, which provides metrics and logs on resource utilization and job execution times, and AWS CloudTrail, which records API activity for auditing. By analyzing this data, organizations can identify bottlenecks or areas for improvement in their data integration processes.

Another approach to optimizing resource allocation is by utilizing serverless architectures offered by AWS ETL tools like AWS Glue. With serverless computing, businesses only pay for the actual compute time used during job execution, eliminating the need for provisioning and managing dedicated servers. This not only reduces costs but also improves scalability and agility.
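To make the pay-for-what-you-use argument concrete, here is a back-of-the-envelope comparison between a dedicated server that runs around the clock and a serverless job billed only while it runs (AWS Glue bills by DPU-hour). All rates and run times below are hypothetical placeholders, not AWS prices; substitute current figures from the AWS pricing pages before drawing conclusions.

```python
HOURS_PER_MONTH = 730  # common approximation: 24 h x 365 d / 12


def always_on_monthly_cost(hourly_rate: float) -> float:
    """A dedicated server is billed for every hour of the month."""
    return hourly_rate * HOURS_PER_MONTH


def serverless_monthly_cost(dpus: int, rate_per_dpu_hour: float,
                            minutes_per_run: float, runs_per_month: int) -> float:
    """A serverless job is billed only for capacity x time actually used."""
    billed_hours = minutes_per_run / 60 * runs_per_month
    return dpus * rate_per_dpu_hour * billed_hours


# Hypothetical workload: one 30-minute job per day on 10 DPUs.
server = always_on_monthly_cost(hourly_rate=1.00)
serverless = serverless_monthly_cost(dpus=10, rate_per_dpu_hour=0.50,
                                     minutes_per_run=30, runs_per_month=30)
print(f"always-on: ${server:.2f}/month, serverless: ${serverless:.2f}/month")
```

The comparison can flip for jobs that run nearly continuously, so the fraction of the month a job actually runs is the number to watch.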

Leveraging Cost-Effective Storage Options

In addition to optimizing resource allocation, leveraging cost-effective storage options can significantly impact overall costs when using AWS ETL tools. AWS provides various storage services with different pricing models that cater to different data integration requirements.

For example, Amazon S3 (Simple Storage Service) offers highly scalable object storage at a low cost per gigabyte. It allows organizations to store the large volumes of data generated during ETL processes without worrying about capacity limitations or high storage costs. S3 also provides lifecycle policies, which transition objects to lower-cost storage classes based on their age, and S3 Intelligent-Tiering, which automatically moves objects between access tiers based on access patterns.
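A lifecycle policy is a small piece of configuration. The sketch below builds one as a Python dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts: objects under a hypothetical `etl-staging/` prefix move to S3 Standard-IA after 30 days and to S3 Glacier Flexible Retrieval (`GLACIER`) after 90, then are deleted after a year. The prefix and day counts are illustrative assumptions, and the helper only runs local sanity checks; it does not contact S3.

```python
def staging_lifecycle(prefix: str) -> dict:
    """Lifecycle configuration that tiers, then expires, ETL staging data."""
    return {
        "Rules": [
            {
                "ID": "tier-then-expire-etl-staging",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 365},
            }
        ]
    }


def lifecycle_problems(config: dict) -> list[str]:
    """Basic local sanity checks before applying the configuration."""
    problems = []
    for rule in config["Rules"]:
        transitions = rule.get("Transitions", [])
        days = [t["Days"] for t in transitions]
        if days != sorted(days):
            problems.append(f"{rule['ID']}: transitions are not in increasing order")
        # S3 requires objects to be at least 30 days old before moving to Standard-IA.
        for t in transitions:
            if t["StorageClass"] == "STANDARD_IA" and t["Days"] < 30:
                problems.append(f"{rule['ID']}: STANDARD_IA transition before day 30")
        # Expiring on or before the last transition would make that transition pointless.
        expiration = rule.get("Expiration", {}).get("Days")
        if expiration is not None and days and expiration <= max(days):
            problems.append(f"{rule['ID']}: expiration precedes the last transition")
    return problems


config = staging_lifecycle("etl-staging/")  # hypothetical prefix
print(lifecycle_problems(config))  # []
```

With a boto3 S3 client, the same dictionary would be passed as `LifecycleConfiguration=config` together with `Bucket=...`.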

Another cost-effective storage option is Amazon Redshift. It is a fully managed data warehousing service that provides high-performance analytics at a lower cost compared to traditional on-premises solutions. By leveraging Redshift for storing and analyzing integrated data, organizations can achieve significant cost savings while benefiting from its scalability and performance capabilities.

Best Practices for Cost Optimization

To further optimize costs when using AWS ETL tools, it is essential to follow some best practices:

Right-sizing resources: Analyze the workload requirements and choose the appropriate instance types and sizes to avoid overprovisioning or underutilization.

Implementing data compression: Compressing data before storing it in AWS services like S3 or Redshift can significantly reduce storage costs.

Data lifecycle management: Define proper data retention policies and use features like lifecycle policies in S3 to automatically move infrequently accessed data to cheaper storage classes.

Monitoring and optimization: Continuously monitor resource utilization, job execution times, and overall system performance to identify areas for optimization.
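The storage effect of compression is easy to measure before committing to it. This sketch uses only the Python standard library to write a hypothetical batch of 10,000 CSV rows and gzip it; repetitive, log-like data such as this compresses well, but real ratios depend on your data. Amazon Redshift (through the `GZIP` option of `COPY`) and Amazon Athena can both read gzip-compressed files directly.

```python
import csv
import gzip
import io


def to_csv_bytes(rows: list[dict], fieldnames: list[str]) -> bytes:
    """Serialize rows to UTF-8 CSV bytes, header included."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue().encode("utf-8")


# Hypothetical, highly repetitive ETL output.
rows = [{"region": "us-east-1", "status": "COMPLETED", "amount": i % 100} for i in range(10_000)]
raw = to_csv_bytes(rows, ["region", "status", "amount"])
compressed = gzip.compress(raw)

print(f"raw: {len(raw):,} bytes, gzip: {len(compressed):,} bytes, "
      f"ratio: {len(raw) / len(compressed):.1f}x")
```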

By following these best practices, organizations can ensure that they are effectively managing their costs while maintaining optimal performance in their data integration processes.

Future Trends and Innovations in Data Integration with AWS

Emerging Trends in Data Integration

As technology continues to advance at a rapid pace, the field of data integration is also evolving. AWS is at the forefront of these innovations, offering cutting-edge solutions that enable organizations to seamlessly integrate and analyze their data. In this section, we will explore some of the emerging trends and innovations in data integration with AWS.

Integration of Machine Learning Capabilities

One of the most exciting trends in data integration is the integration of machine learning capabilities. Machine learning algorithms have the ability to analyze large volumes of data and identify patterns and insights that humans may not be able to detect. With AWS, organizations can leverage machine learning tools such as Amazon SageMaker to build, train, and deploy machine learning models for data integration purposes.

By incorporating machine learning into their data integration processes, organizations can automate repetitive tasks, improve accuracy, and gain valuable insights from their data. For example, machine learning algorithms can be used to automatically categorize and tag incoming data, making it easier to organize and analyze.

Real-Time Data Streaming

Another trend in data integration is the increasing demand for real-time data streaming. Traditional batch processing methods are no longer sufficient for organizations that require up-to-the-minute insights from their data. AWS offers services such as Amazon Kinesis Data Streams and Amazon Managed Streaming for Apache Kafka (MSK) that enable real-time streaming of large volumes of data.

Real-time streaming allows organizations to process and analyze incoming data as it arrives, enabling them to make timely decisions based on the most current information available. This is particularly valuable in industries such as finance, e-commerce, and IoT where real-time insights can drive business growth and competitive advantage.
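Processing data as it arrives usually means aggregating over time windows instead of waiting for a nightly batch. The sketch below is a generic, in-memory tumbling-window counter, not the Kinesis or MSK client API: it assumes events arrive in timestamp order as `(epoch_seconds, key)` pairs and emits each window's counts as soon as an event from a later window shows up. A production consumer would also need to handle late or out-of-order records, checkpointing, and multiple shards or partitions.

```python
from collections import defaultdict


class TumblingWindowCounter:
    """Counts events per key in fixed, non-overlapping time windows.

    Assumes events arrive in non-decreasing timestamp order.
    """

    def __init__(self, window_seconds: int):
        self.window_seconds = window_seconds
        self.window_start = None
        self.counts = defaultdict(int)

    def add(self, timestamp: int, key: str):
        """Record one event; return (window_start, counts) if a window just closed."""
        start = timestamp - timestamp % self.window_seconds
        closed = None
        if self.window_start is not None and start != self.window_start:
            closed = (self.window_start, dict(self.counts))
            self.counts = defaultdict(int)
        self.window_start = start
        self.counts[key] += 1
        return closed

    def flush(self):
        """Return the still-open window, e.g. when the stream ends."""
        if self.window_start is None:
            return None
        return (self.window_start, dict(self.counts))


counter = TumblingWindowCounter(window_seconds=60)
events = [(0, "click"), (5, "view"), (59, "click"), (60, "click"), (125, "view")]
closed_windows = []
for ts, key in events:
    closed = counter.add(ts, key)
    if closed is not None:
        closed_windows.append(closed)
closed_windows.append(counter.flush())
print(closed_windows)
# [(0, {'click': 2, 'view': 1}), (60, {'click': 1}), (120, {'view': 1})]
```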

Use of Data Lakes

Data lakes have emerged as a popular approach for storing and analyzing large volumes of structured and unstructured data. A data lake is a centralized repository that allows organizations to store all types of raw or processed data in its native format. AWS provides a comprehensive suite of services for building and managing data lakes, including Amazon S3, AWS Glue, and Amazon Athena.
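One common way to organize an S3 data lake so that Athena and AWS Glue can skip irrelevant data is Hive-style `key=value` partitioning in object keys. The sketch below builds such keys from an event timestamp and parses the partition values back out. The `raw/orders` dataset prefix, the year/month/day scheme, and the file name are hypothetical choices; the right partition columns depend on how the data is queried.

```python
from datetime import datetime, timezone


def partitioned_key(dataset: str, event_time: datetime, filename: str) -> str:
    """Build a Hive-style S3 key partitioned by UTC year/month/day.

    Expects a timezone-aware datetime.
    """
    t = event_time.astimezone(timezone.utc)
    return f"{dataset}/year={t.year:04d}/month={t.month:02d}/day={t.day:02d}/{filename}"


def partition_values(key: str) -> dict[str, str]:
    """Extract the key=value partition segments from an object key."""
    return dict(segment.split("=", 1) for segment in key.split("/") if "=" in segment)


key = partitioned_key("raw/orders", datetime(2024, 3, 7, 15, 30, tzinfo=timezone.utc), "part-0001.json.gz")
print(key)  # raw/orders/year=2024/month=03/day=07/part-0001.json.gz
print(partition_values(key))  # {'year': '2024', 'month': '03', 'day': '07'}
```

Once the partitions are registered in the AWS Glue Data Catalog (for example by a crawler or Athena's `MSCK REPAIR TABLE`), queries that filter on `year`, `month`, or `day` can skip whole prefixes.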

By leveraging data lakes, organizations can break down data silos and enable cross-functional teams to access and analyze data from various sources. Data lakes also support advanced analytics techniques such as machine learning and artificial intelligence, allowing organizations to derive valuable insights from their data.

In conclusion, the real-world case studies highlighted in this blog post demonstrate the effectiveness of AWS ETL tools in solving data integration challenges. These tools offer a cost-effective and scalable solution for organizations looking to streamline their data integration processes.

One key takeaway from these case studies is the cost-effectiveness of AWS ETL tools. By leveraging cloud-based resources, organizations can avoid the high upfront costs associated with traditional on-premises solutions. This allows them to allocate their budget more efficiently and invest in other areas of their business.

Additionally, the scalability of AWS ETL tools is a significant advantage. As organizations grow and their data integration needs increase, these tools can easily accommodate the expanding workload. With AWS's elastic infrastructure, organizations can scale up or down as needed, ensuring optimal performance and efficiency.

Furthermore, AWS ETL tools provide a streamlined approach to data integration. The intuitive user interface and pre-built connectors simplify the process, reducing the time and effort required to integrate disparate data sources. This allows organizations to quickly gain insights from their data and make informed decisions.

In light of these benefits, we encourage readers to explore AWS ETL tools for their own data integration needs. By leveraging these tools, organizations can achieve cost savings, scalability, and a streamlined data integration process. Take advantage of AWS's comprehensive suite of ETL tools and unlock the full potential of your data today.


© Copyright 2024 Tapdata - All Rights Reserved


AWS Case Studies: Services and Benefits in 2024


With its extensive range of cloud services, Amazon Web Services (AWS) has changed the way businesses run. Through many case studies, organizations demonstrate how AWS has transformed their operations by enabling scalability, cost efficiency, and innovation. Businesses of all sizes, from startups to multinational corporations, have benefited from AWS's computing, storage, database management, and artificial intelligence technologies, gaining improved security, agility, worldwide reach, and lower infrastructure costs. AWS helps businesses in various industries grow, improve their workflows, and stay competitive in today's ever-changing digital landscape. So, let's discuss some AWS case studies, including cloud migrations, to understand the topic in detail.

What are AWS Case Studies, and Why are They Important?

AWS case studies explain in detail how companies and organizations have used Amazon Web Services (AWS) to solve problems, boost productivity, and accomplish objectives. They provide real-life scenarios of AWS in operation, showcasing the wide range of sectors and use cases in which AWS can be implemented successfully. They offer valuable lessons and inspiration for anyone considering or already using AWS by providing insight into the tactics, solutions, and best practices businesses use in the AWS Cloud. Case studies such as those on Amazon EC2 are important because they show AWS's capabilities in practice, helping prospective customers understand the concrete advantages and showcasing AWS's reliability, scalability, and affordability in fostering corporate innovation and expansion.

What are the Services Provided by AWS, and What are its Use Cases?

The main AWS services and their use cases are described below:

Elastic Compute Cloud (EC2) Use Cases

Amazon Elastic Compute Cloud (EC2) lets you quickly spin up virtual servers with no upfront cost and no significant hardware investment. Use the AWS Management Console or automation scripts to provision new servers for testing and production environments promptly, and shut them down when they are not in use.

AWS EC2 use cases include:

- With options for load balancing and auto-scaling, create a fault-tolerant architecture.

- Select EC2 accelerated computing instances if you require a lot of processing power and GPU capability for deep learning and machine learning.

Relational Database Service (RDS) Use Cases

Because Amazon Relational Database Service (Amazon RDS) is a managed database service, it relieves you of much of the work of maintaining and administering databases and other database-related responsibilities.

Common Amazon RDS use cases include:

- Deploying a new database server in minutes, without additional overhead or staff costs, while significantly improving reliability and uptime. RDS is a good fit for demanding day-to-day OLTP (transactional) database requirements.

- Using RDS alongside NoSQL and search services such as Amazon DynamoDB (for low-latency, high-traffic use cases) and Amazon OpenSearch Service (for text and unstructured data).

Amazon WorkSpaces

AWS offers Amazon WorkSpaces, a fully managed, persistent desktop virtualization service, to support remote workers and give businesses access to virtual desktops in the cloud. With it, users can access the data, apps, and resources they need from any supported device, anywhere, at any time.

Amazon WorkSpaces use cases include:

- IT can set up and manage access quickly. With the web filter, you can allow outgoing traffic from a WorkSpace to reach your chosen internal sites.

- Some companies work without physical offices and rely solely on SaaS apps, so they have no on-premises infrastructure. In these situations, they use cloud-based desktops through Amazon WorkSpaces and other services.

AWS Case Studies

Now we'll discuss several AWS case studies, listed below:

Case Study - 1: Modern Web Application Platform with AWS

American Public Media, the programming division of Minnesota Public Radio (MPR), is one of the world's biggest producers and distributors of public radio programming. MPR worked to develop a proof of concept for hosting its podcast, streaming music, and news websites on AWS.

After reviewing an outdated active-passive disaster recovery plan, MPR decided to move to a cloud infrastructure to modernize its apps and methodology. This infrastructure needed to be adaptable to changes in the technology powering its apps, scalable to accommodate audience growth, and resilient enough to support its disaster recovery strategy.

MPR and AWS determined that the MPR News and public podcast websites should be hosted on the new infrastructure to prove that AWS was a viable choice. In addition, several administrative apps had to be hosted on AWS to demonstrate its private cloud capabilities: an image manager, a schedule editor, and a configuration manager.

To do this, AWS helped MPR set up an Amazon EKS (Kubernetes) cluster, which let the apps scale automatically with workload and traffic. AWS and MPR also set up Elasticsearch on Elastic.co and a MySQL instance on Amazon RDS to hold application data.

Business Benefits

The upgraded infrastructure made considerable cost savings possible. Because hardware requirements decreased, fewer servers had to be acquired for these vital applications. Additionally, moving to AWS made it simple to switch from the Akamai CDN to Amazon CloudFront, which reduced MPR's yearly expenses by thousands of dollars.

Case Study - 2: Platform Modernisation to Deploy to AWS

After receiving a $6 million investment in 2017, Foodsby was able to pursue its expansion goals, but it still needed to modernize its mobile and web applications. To launch on AWS faster, it improved and enhanced its web, iOS, and Android applications.

The sunsetting of existing technology put this project on an accelerated timeline. Selecting the mobile application platform required serious analysis and expert advice to build consensus across internal stakeholders.

The front-end and back-end web applications were rebuilt and separated into microservices for AWS hosting, maximizing scalability. For mobile, the recommendation was fully native iOS and Android apps, and that solution was built and deployed quickly.

Case Study - 3: Cloud Platform with Kubernetes

SPS Commerce hired AWS to assist them with developing a more secure cloud platform, expanding their cloud deployment choices through Kubernetes, and educating their engineers on these advanced technologies.

SPS serves over 90,000 retail, distribution, grocery, and e-commerce businesses. To sustain its growth, however, SPS needed to remove obstacles to deploying new applications on AWS and, in the future, on other cloud providers. Although SPS knew Kubernetes would help it achieve this, it wanted a partner to teach its internal development team DevOps principles and introduce them to Kubernetes best practices.

To speed up new project cycle times, shorten ramp-up times, and improve the team's Kubernetes proficiency, the engagement helped SPS develop a multi-team, Kubernetes-based platform with a uniform development method, define standards for development and deployment, and establish the deployment pipeline.

Thanks to the streamlined deployment interface, most teams can now plug in, play, and get code up and running quickly. SPS Commerce benefits from Kubernetes' flexibility and can avoid vendor lock-in, should it need to switch cloud providers.

Case Study - 4: Using Unified Payment Solutions to Simplify Government Services

The customer, which had a portfolio of firms under its authority, needed to improve the payment experience by combining many payment methods into a single, unified solution.

Because of the customer's varied acquisitions, its payment system landscape had become fragmented, making it difficult for clients to make payments across a range of platforms and technologies. This lack of coherence and standardization stood in the way of a streamlined payment experience.

The project team began developing a single, cloud-based payment system that complies with the customer's microservices-based reference design. CRUD services were created after the user interface for client administration was established at the start of the project.

With this system, the customer can streamline operations and increase efficiency by providing a smooth payment experience.

The new system was a tremendous improvement over the old one, demonstrating the ability to handle thousands of transactions per second.

Aligning with the reference architecture made it easier to maintain system consistency and to support scalability and maintenance.

Case Study - 5: Accelerated Data Migration to AWS

Zipnosis selected a partner to build a cloud platform and safely transfer its data from a managed service provider to AWS during the early phases of a worldwide pandemic.

Early in 2020, as COVID-19 spread, telemedicine services were used to lessen the strain on hospital infrastructure. Telehealth web queries increased dramatically overnight, from 5,000 to 40,000 per minute. Working with its partner, Zipnosis was able to change direction and cut its AWS migration timeline from six months to three. The architecture had to meet HIPAA, SOC 2, and HITRUST certification requirements. Zipnosis also wanted to move its legacy database smoothly across several web-facing applications while adhering to service level agreements (SLAs) that limited downtime.

Built with Terraform and Amazon Elastic Kubernetes Service (EKS), the new AWS platform provides a modern, infrastructure-as-code, HIPAA-compliant, and HITRUST-certified environment. With the help of serverless components, tools were developed to roll out an Application Envelope, enabling a HIPAA-compliant environment to be created and activated quickly.

Zipnosis now manages the platform internally. With more flexibility, scaling up and down is more affordable and accessible. Its services are more marketable to potential clients because of its scalable, secure, and efficient infrastructure, and its use of modern technologies such as Kubernetes on Amazon EKS makes it easier to hire top talent. Zipnosis is in an excellent position to move forward.

Case Study - 6: Transforming Healthcare Staffing

The customer's outdated application presented difficulties. It was built on the outdated DBROCKET platform and lacked an intuitive user interface, testing tools, and extensibility. The goal of modernizing the application was to give the customer an improved, scalable, and maintainable solution.

Although the customer's old application was crucial for predicting hospital staffing needs, maintaining and improving it was challenging because of its reliance on the obscure DBROCKET platform. Hospitals lost money on inefficient staff scheduling because the application was unresponsive and lacked a mobile-friendly interface.

Spring Boot and Groovy were chosen for back-end development to offer better maintainability and extensibility during the migration of the application from DBROCKET to a new technology stack. Unit tests were added to increase the reliability and quality of the code.

Efficiency at Catalis increased dramatically when the advanced document redaction technology was put in place. Catalis was able to process documents at a significantly higher rate because the automated procedure cut down the time and effort needed for manual redaction.

Catalis cut infrastructure costs by utilizing serverless architecture and cloud-based services. They saved a significant amount of money because they were no longer required to upgrade and maintain on-premises servers.

KnowledgeHut offers Cloud Computing courses that meet different needs and skill levels. Through a comprehensive curriculum, hands-on exercises, and expert-led instruction, attendees can learn about and gain practical experience with cloud platforms, including AWS, Azure, Google Cloud, and more. Professionals who complete these courses will be equipped to succeed in the rapidly developing field of cloud computing.

Finally, AWS case studies highlight a range of features and advantages. They show how firms in various industries use AWS for innovation and growth, from scalability to cost efficiency. AWS offers a robust infrastructure and a range of technologies to meet changing business needs, whether that means improving customer experiences with cloud-based solutions or streamlining processes with AI and machine learning. These case studies provide substantial evidence of AWS's influence on digital transformation and organizational success.

Frequently Asked Questions (FAQs)

From AWS case studies, companies can learn how other businesses use AWS services to solve real-world problems, increase productivity, cut expenses, and innovate. For those looking to optimize their cloud strategy and operations, these case studies provide insightful information, best practices, and direction.

You can obtain case studies on AWS through the AWS website, which has a special section with a large selection of case studies from different industries. In addition, AWS releases updated case studies regularly via various marketing platforms and on its blog.

The case study of Amazon web services, which offers specific instances of how AWS services have been successfully applied in various settings, can significantly assist in the decision-making process for IT initiatives. Project planning and strategy can be informed by the insights, best practices, and possible solutions these case studies provide.

Kingson Jebaraj

Kingson Jebaraj is a highly respected technology professional, recognized as both a Microsoft Most Valuable Professional (MVP) and an Alibaba Most Valuable Professional. With a wealth of experience in cloud computing, Kingson has collaborated with renowned companies like Microsoft, Reliance Telco, Novartis, Pacific Controls UAE, Alibaba Cloud, and G42 UAE. He specializes in architecting innovative solutions using emerging technologies, including cloud and edge computing, digital transformation, IoT, and NLP, and in programming languages such as C, C++, and Python.


Real Stories of Cost Optimization on AWS

In this post, we will share real-life customer stories of businesses that harnessed the power of AWS to supercharge their savings.

I am a Senior AWS Technical Account Manager, and in my role I provide consultative architectural and operational guidance delivered in the context of customers' applications and use-cases to help them achieve the greatest value from AWS. My role is not just about providing technical support but also helping our valued customers optimize their cloud resources to achieve cost-efficiency without sacrificing performance. In this blog post, we'll explore various strategies, tactics, and real-world examples of how I've assisted our large enterprise customers in their cost optimization endeavors. I'll share real-life use cases, metrics, and strategies that have proven effective. Stay tuned for a Bonus strategy at the end.

The Cost Optimization Framework

In the ever-evolving landscape of cloud computing, enterprises are continually seeking ways to maximize the benefits of AWS while keeping costs in check. Before diving into specific customer cases, let's outline the fundamental framework for AWS cost optimization. It involves several key pillars:

Implementing Cloud Financial Management

Implementing Cloud Financial Management starts with the customer identifying key stakeholders from Finance and Technology to form a cost optimization function that is responsible for establishing and maintaining cost awareness internally. A suite of AWS services can help the customer achieve this, such as AWS Budgets to track and forecast AWS spend and AWS Cost Anomaly Detection to flag unexpected changes in spend. Tools like AWS Cost Explorer and the AWS Billing console can be used to derive timely insights into AWS spend, while AWS Cost and Usage Reports serve as an excellent source of fine-grained usage data. Additionally, customers can follow the AWS blogs to stay current on roadmap items of interest.

Expenditure and Usage Awareness

Expenditure and usage awareness among internal teams can be enabled through a unified account structure with AWS Organizations, which lets customers allocate costs and usage to the various business units within the company. Customers can set up and activate cost allocation tags as part of their chargeback strategy to hold internal product teams accountable for their AWS costs and usage.
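Once cost allocation tags are activated, a chargeback report is essentially a group-by over cost line items. The sketch below shows the idea on a simplified, hypothetical line-item shape (a cost plus a dict of tags), not the actual Cost and Usage Report schema, and reports untagged spend separately, since a large untagged share usually means the tagging policy is not being followed. The `CostCenter` tag key and the amounts are made up for illustration.

```python
from collections import defaultdict


def chargeback_by_tag(line_items: list[dict], tag_key: str,
                      untagged_label: str = "untagged") -> dict[str, float]:
    """Sum cost per value of `tag_key`; items without the tag go to `untagged_label`."""
    totals = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key) or untagged_label
        totals[owner] += item["cost"]
    return dict(totals)


def untagged_share(totals: dict[str, float], untagged_label: str = "untagged") -> float:
    """Fraction of total spend that could not be attributed to any owner."""
    grand_total = sum(totals.values())
    return totals.get(untagged_label, 0.0) / grand_total if grand_total else 0.0


# Hypothetical monthly line items.
items = [
    {"cost": 120.0, "tags": {"CostCenter": "ads"}},
    {"cost": 80.0, "tags": {"CostCenter": "search"}},
    {"cost": 30.0, "tags": {}},
    {"cost": 20.0, "tags": {"CostCenter": "ads"}},
]
totals = chargeback_by_tag(items, "CostCenter")
print(totals)  # {'ads': 140.0, 'search': 80.0, 'untagged': 30.0}
print(f"untagged: {untagged_share(totals):.0%}")  # untagged: 12%
```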

Cost Effective Resources

Using purpose-built resource types for specific workloads is key to cost savings. Other opportunities include resizing instances for both cost and performance, and using managed services such as Amazon Aurora, Amazon ElastiCache, and Amazon DynamoDB to minimize the overhead of managing and maintaining servers. Customers can also take advantage of AWS's cost-effective pricing options, such as Savings Plans, Reserved Instances, or Spot Instances, saving a significant amount compared to On-Demand pricing.
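The trade-off behind commitment-based pricing can be worked through with simple arithmetic. The sketch below models a Savings Plan as an hourly dollar commitment that buys On-Demand-equivalent usage at a discount: usage beyond what the commitment covers is billed at On-Demand rates, and unused commitment is still paid. It assumes a single uniform discount, whereas real Savings Plans rates vary by instance family, Region, and term; the usage profile, commitment, and 50% discount are hypothetical.

```python
def hourly_cost(on_demand_usage: float, commitment: float, discount: float) -> float:
    """Cost for one hour under a simplified Savings Plan model.

    on_demand_usage: what the hour's usage would cost at On-Demand rates ($)
    commitment:      hourly Savings Plan commitment ($), billed even if unused
    discount:        plan discount versus On-Demand, as a fraction (0.5 = 50%)
    """
    covered_usage = commitment / (1 - discount)  # On-Demand value the commitment buys
    overflow = max(0.0, on_demand_usage - covered_usage)
    return commitment + overflow


def savings_vs_on_demand(hourly_usage: list[float], commitment: float, discount: float) -> float:
    """Total On-Demand cost minus total cost with the plan, over a usage profile."""
    on_demand_total = sum(hourly_usage)
    plan_total = sum(hourly_cost(u, commitment, discount) for u in hourly_usage)
    return on_demand_total - plan_total


# Hypothetical three-hour profile: steady, quiet, and peak hours.
profile = [10.0, 4.0, 12.0]
for usage in profile:
    print(usage, hourly_cost(usage, commitment=5.0, discount=0.5))
print("savings:", savings_vs_on_demand(profile, commitment=5.0, discount=0.5))
```

The quiet hour shows the risk: $5 is paid for $4 worth of usage, which is why commitments suit the steady-state baseline while spiky demand is often left to On-Demand or Spot capacity.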

Manage Demand and Supply

Keeping operating costs low is paramount. To facilitate that, customers can adopt auto scaling across key AWS services such as Amazon EC2, Amazon DynamoDB, and Amazon ElastiCache. This ensures that customers pay only for the computing resources they need rather than overpaying for idle capacity.
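The idea behind demand-based scaling can be illustrated with a simplified proportional rule in the spirit of target tracking policies: scale capacity by the ratio of the observed metric to its target, then clamp to the group's minimum and maximum. This sketch is not AWS's actual algorithm, which also accounts for cooldowns, instance warm-up, and metric aggregation; the CPU figures and capacity limits are hypothetical.

```python
import math


def desired_capacity(current_capacity: int, current_metric: float, target_metric: float,
                     min_capacity: int, max_capacity: int) -> int:
    """Proportional scaling: keep the per-instance metric near `target_metric`."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Total load stays roughly constant, so capacity scales with metric / target.
    proposed = math.ceil(current_capacity * current_metric / target_metric)
    return max(min_capacity, min(max_capacity, proposed))


# Hypothetical group of 4 instances with a 50% CPU target and 2..10 instances allowed.
print(desired_capacity(4, 90.0, 50.0, 2, 10))  # 8  (ceil(7.2): scale out)
print(desired_capacity(4, 20.0, 50.0, 2, 10))  # 2  (ceil(1.6): scale in)
print(desired_capacity(4, 99.0, 20.0, 2, 10))  # 10 (capped at the maximum)
```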

Optimizing over Time

In AWS, you optimize over time by reviewing new services and implementing them in your workload. As AWS releases new services and features, it is a best practice to review your existing architectural decisions to ensure that they remain cost effective. As your requirements change, be aggressive in decommissioning resources, components, and workloads that you no longer require.

Real-world Cost Optimization Stories

Case Study 1: Leveraging various AWS pricing options

A digital ad exchange realized over 60% in cost savings by adopting Amazon EC2 Spot Instances for its real-time bidding application while maintaining the same throughput. The customer updated its application to use a diverse set of instance types (~20), and over 50% of those instance types were used in the Spot Fleets that replaced the original Auto Scaling groups, which made the application significantly more resilient to fluctuations in the Spot market. The customer also implemented a log recovery pipeline to avoid losing log data when Spot Instances were terminated.

The customer had unpredictable traffic patterns but needed cost predictability. So, in addition to Spot, we helped them implement a mixed commitment strategy, combining EC2 Instance Savings Plans for steady-state workloads and Compute Savings Plans for flexibility. While the EC2 Instance Savings Plans provided discounts of over 65%, the Compute Savings Plans unlocked over 60% in potential cost savings. This led to annual cost savings of over $1 million.

Furthermore, advertising technology (ad tech) companies use Amazon DynamoDB to store various kinds of marketing data, such as user profiles, user events, clicks, and visited links. Use cases include real-time bidding (RTB), ad targeting, and attribution, which require a high request rate (millions of requests per second), low and predictable latency, and reliability. As a fully managed service, DynamoDB lets ad tech companies meet all of these requirements without investing resources in database operations. As its DynamoDB costs grew month over month, the customer adopted DynamoDB reserved capacity to receive a significant discount over provisioned capacity (read and write), which saved an estimated $1M annually. Lastly, the customer saved ~$300k with ElastiCache Reserved Instances (RIs) and $150k with OpenSearch RIs.

Case Study 2: Utilizing native AWS tools to optimize costs

One of our largest social media customers experienced a sudden but sustained spike in AWS costs for a specific business unit. We implemented a comprehensive tagging strategy that let the customer allocate costs precisely, which improved cost accountability and budget management. We also set up AWS Cost and Usage Reports to provide granular insight into cost and usage, and this helped us identify the key driver behind the cost increase. This proactive approach allowed the company to detect and address cost anomalies as they emerged, which saved it thousands of dollars and gave it visibility into escalating compute and database costs.

AWS Trusted Advisor inspects your AWS environment and provides recommendations for optimizing your resources. For this customer, Trusted Advisor identified underutilized and idle resources across Amazon EC2, Amazon EBS, Amazon RDS, and load balancers that could be terminated, saving $200k per month. The tool also provided recommendations around… Lastly, AWS Compute Optimizer's EC2 rightsizing recommendations uncovered a further $60k in monthly savings.
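At its core, tag-based cost allocation groups spend by a tag value while keeping untagged spend visible. The Python sketch below assumes a simplified, hypothetical line-item format with a `cost` field and a `tags` dictionary. The actual Cost and Usage Report schema is much wider and names its columns differently. `cost_by_tag`, `top_driver`, and the `BusinessUnit` tag key are illustrative names only.

```python
from collections import defaultdict
from typing import Iterable, Mapping

UNTAGGED = "(untagged)"


def cost_by_tag(line_items: Iterable[Mapping], tag_key: str) -> dict[str, float]:
    """Sum cost per value of one cost-allocation tag.

    Each item is a simplified, hypothetical record: {"cost": float, "tags": {...}}.
    Items missing the tag are grouped under UNTAGGED so tagging gaps stay visible.
    """
    totals: dict[str, float] = defaultdict(float)
    for item in line_items:
        value = item.get("tags", {}).get(tag_key) or UNTAGGED
        totals[value] += float(item["cost"])
    return dict(totals)


def top_driver(totals: Mapping[str, float]) -> tuple[str, float]:
    """Return the (tag value, total cost) pair with the highest total."""
    return max(totals.items(), key=lambda kv: kv[1])


if __name__ == "__main__":
    # Hypothetical line items for illustration only.
    items = [
        {"service": "AmazonEC2", "cost": 120.0, "tags": {"BusinessUnit": "video"}},
        {"service": "AmazonRDS", "cost": 340.0, "tags": {"BusinessUnit": "video"}},
        {"service": "AmazonEC2", "cost": 80.0, "tags": {"BusinessUnit": "ads"}},
        {"service": "AmazonS3", "cost": 25.0, "tags": {}},
    ]
    totals = cost_by_tag(items, "BusinessUnit")
    print(totals)              # per-business-unit totals, including untagged spend
    print(top_driver(totals))  # the business unit driving the most cost
```

Putting untagged spend in its own bucket is deliberate. A growing untagged total is itself a sign that the tagging strategy has gaps. To get the same grouping without exporting raw line items, the Cost Explorer `GetCostAndUsage` API accepts a `GroupBy` entry of type `TAG`.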

As promised, here's the Bonus

AWS Graviton is a game-changer for cost optimization and performance in cloud computing. Graviton instances, powered by AWS-designed Arm-based processors, offer a compelling value proposition. They typically come at a lower price point than comparable x86 instances, making them a cost-effective choice. They also consume less power and have a smaller carbon footprint, which reduces operational costs and contributes to an organization's sustainability goals. For businesses with demanding workloads, this can translate into significant savings over time. Beyond the cost benefits, Graviton instances are designed for performance and efficiency, which makes them an attractive choice for many types of workloads, especially highly parallelizable ones such as web servers and microservices. A major streaming company whose key workloads run on AWS identified $1M in net monthly savings (20%) by adopting Graviton across Amazon EC2, Amazon RDS, Amazon ElastiCache, and Amazon OpenSearch Service. This allowed the customer to actively reinvest the realized savings in other growth areas, such as generative AI, that helped accelerate its business outcomes.

As an AWS Technical Account Manager, I'm committed to staying at the forefront of these developments so that our customers have access to the latest cost optimization strategies and technologies. Cost optimization on AWS isn't a one-time task but a continuous journey. By leveraging rightsizing, Reserved Instances, Spot Instances, lifecycle policies, and effective cost allocation, we've helped numerous enterprise customers unlock substantial savings while maintaining or even improving the performance of their cloud workloads. The key takeaway is that cost optimization on AWS is achievable with the right strategies, tools, and a commitment to ongoing monitoring and improvement. As your partner in the cloud, I'm here to guide you on this journey and help keep your AWS infrastructure cost-efficient, agile, and aligned with your business objectives. In the world of AWS, the path to cost optimization is paved with data-driven insights, smart resource management, and a collaborative partnership between AWS and our valued customers. Together, we'll continue to optimize and thrive in the cloud.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.

Database Case Studies with Cloud Volumes ONTAP

October 20, 2020

Topics: Cloud Volumes ONTAP, AWS, Database, Customer Case Study, Advanced. 7-minute read.

Databases are home to companies’ most prized possession: their data. Migrating that data to a cloud-based database, such as an AWS database, can be incredibly worthwhile, helping to consolidate data sets into a single shareable and scalable resource. However, migration itself can be challenging, and once replicated in the cloud, databases must be highly available, secure, manageable, recoverable, and performant.

In this blog post we demonstrate how Cloud Volumes ONTAP supports the migration and operation of databases in the cloud through several customer success stories.

Optimizing Cloud Databases with Cloud Volumes ONTAP

Concerto Cloud: SQL Cost Reductions on AWS

Concerto Cloud Services is a fully managed cloud provider that offers its clients a customizable hybrid cloud platform. It specializes in seamlessly deploying enterprise applications across on-premises, public cloud, and third-party environments.

The company manages both large on-premises data centers and resources in the cloud. With a large cloud footprint, Concerto Cloud was looking for a way to reduce its cloud database storage costs and increase manageability to help its customers navigate multicloud environments.

The cloud provider used Cloud Volumes ONTAP to migrate 800 SQL databases to AWS, gaining considerable benefits, including:

- Reduced database costs and optimized storage structure through data tiering.

- 96% data reduction with Cloud Volumes ONTAP’s storage efficiencies.

- Enabled its customers to switch between private and public clouds on demand.

- Gained confidence in meeting its customers’ existing and future requirements.
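Data tiering keeps recently used data on fast block storage and moves data that has gone cold to lower-cost object storage. The Python sketch below is a simplified model under stated assumptions: a block counts as cold once it has gone unread for a cooling period, and 31 days is an assumed default here. `Block` and `plan_tiering` are hypothetical names. The sketch illustrates the idea only and does not reproduce how Cloud Volumes ONTAP actually tracks data temperature.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Block:
    """A unit of stored data with its size and last read date (hypothetical model)."""
    size_gib: float
    last_access: date


def plan_tiering(blocks: list[Block], today: date, cooling_days: int = 31) -> tuple[float, float]:
    """Split capacity into (hot GiB kept on SSD, cold GiB moved to object storage).

    Assumed rule: a block is cold once it has not been read for at least
    `cooling_days` days; otherwise it stays on the performance tier.
    """
    hot = cold = 0.0
    for b in blocks:
        if (today - b.last_access).days >= cooling_days:
            cold += b.size_gib
        else:
            hot += b.size_gib
    return hot, cold


if __name__ == "__main__":
    today = date(2024, 1, 31)
    blocks = [
        Block(size_gib=10.0, last_access=date(2024, 1, 30)),  # read yesterday: stays hot
        Block(size_gib=30.0, last_access=date(2023, 12, 1)),  # unread for 61 days: tiers
    ]
    hot, cold = plan_tiering(blocks, today)
    print(f"hot: {hot} GiB, cold: {cold} GiB ({cold / (hot + cold):.0%} tiered)")
```

The cooling period is the main trade-off. A shorter period moves more capacity to cheaper storage but increases the chance that tiered data must be read back from object storage.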

For more information, take a look at the full database case study here.

Learn more about SQL in AWS with Cloud Volumes ONTAP Managed Storage here.

Mellanox: MongoDB Data Consolidation and Cost Reduction on Azure

Mellanox is a global supplier of high-performance computer networking products that use InfiniBand and Ethernet technology. Its product portfolio provides low latency and fast data throughput to accelerate data centers and applications. Its solutions include cables, switches, processors, silicon, and network adaptors.

Mellanox manages data systems on-premises in factories all over the world. These data centers weren’t adequately built to scale and did not support the performance and reliability metrics needed to meet the company’s desired rate of product innovation. To tackle this problem, Mellanox searched for a solution that could consolidate its siloed on-premises production data sets.

The company used Cloud Volumes ONTAP on Azure to unify the data in its cloud-based MongoDB database, which contains production logs and other production data. As a result, Mellanox gained the following benefits:

- Data from its factories around the world was unified into one scalable, shareable, reliable, and cost-effective platform.

- Infrequently-used data was offloaded to Azure Blob storage with data tiering, so only fresh, high-demand data is kept on their SSDs.

- 90% of backup and disaster recovery cold data was tiered to lower-cost object storage.

- 69% reduction in production data footprint and costs using Cloud Volumes ONTAP’s data deduplication technology.
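Deduplication stores each distinct block of data once and replaces repeats with references to it. The Python sketch below shows fixed-size, hash-based block deduplication. The 4 KiB block size, the SHA-256 fingerprints, and the `dedup`, `rebuild`, and `reduction` names are assumptions for illustration, not a description of Cloud Volumes ONTAP's internals. Production systems also guard against hash collisions, for example by comparing block contents before sharing a block. This sketch omits that step.

```python
import hashlib


def dedup(data: bytes, block_size: int = 4096) -> tuple[dict[str, bytes], list[str]]:
    """Split data into fixed-size blocks and store each distinct block once.

    Returns (store, recipe): store maps a SHA-256 digest to the block's bytes,
    and recipe lists the digests in order so the original data can be rebuilt.
    """
    store: dict[str, bytes] = {}
    recipe: list[str] = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # keep only the first copy of each block
        recipe.append(digest)
    return store, recipe


def rebuild(store: dict[str, bytes], recipe: list[str]) -> bytes:
    """Reassemble the logical data from the stored blocks and the recipe."""
    return b"".join(store[d] for d in recipe)


def reduction(data: bytes, store: dict[str, bytes]) -> float:
    """Fraction of logical bytes saved by deduplication (0.0 means no saving)."""
    if not data:
        return 0.0
    physical = sum(len(b) for b in store.values())
    return 1 - physical / len(data)


if __name__ == "__main__":
    # Three identical 4 KiB blocks followed by one different block.
    data = b"A" * 4096 * 3 + b"B" * 4096
    store, recipe = dedup(data)
    assert rebuild(store, recipe) == data
    print(f"{len(recipe)} logical blocks, {len(store)} stored, {reduction(data, store):.0%} saved")
```

The achievable reduction depends entirely on how much duplicate content the data contains. Production logs and backups, which repeat a lot of content, tend to benefit more than already-unique data.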

For more information, take a look at the full case study here.

Learn more about NoSQL database deployment with Cloud Volumes ONTAP.

AdvancedMD: SQL “Lift and Shift” Migration to AWS

AdvancedMD, a Utah-based company, provides a suite of integrated, cloud-based SaaS tools for medical practices. It serves 26,000 practitioners across 8,600 practices, and its portfolio includes telemedicine, patient relationship management, practice management, business analytics and benchmarking, and electronic health records.

AdvancedMD needed to break away from its parent company’s data center and establish its own data center in the cloud within a very short time. To solve this problem, it began using Cloud Volumes ONTAP to enhance its SQL database management on AWS, and gained:

- Easy lift and shift of its sensitive data into AWS to form its own data center.

- Better SQL database performance and database storage management.

- Quick access to, and recovery of, customer data and its application stack.

- An easy file management system, set up without changing existing processes.

For more information, take a look at the database case study here.

Business and Financial Software Company: Hadoop Data Lakes and Oracle on AWS

This US-based business and financial software company provides small businesses with financial, accounting, and tax preparation software. Its flagship solutions process 25% of US GDP and 40% of US taxes.

The company decided to adopt a cloud-only strategy and migrate completely to AWS by the end of 2020. To achieve this goal, it needed a way to migrate its large Hadoop environment to AWS. Using the NetApp Cloud Sync service, the company migrated petabytes of Hadoop data lakes from on-premises NFS to Amazon S3, and it can now also replicate its Oracle database from on premises to Amazon RDS and between AWS Regions.

Applying Cloud Volumes ONTAP to its production workloads led to additional benefits:

- Centralized management with NetApp Cloud Manager.

- High availability across Availability Zones with RPO = 0 and RTO < 60 seconds.