How to Get ChatGPT to Write an Essay: Prompts, Outlines, & More

Last Updated: June 2, 2024 Fact Checked

Getting ChatGPT to Write the Essay

Using AI to Help You Write

Expert Interview

This article was written by Bryce Warwick, JD, and by wikiHow staff writer Nicole Levine, MFA. Bryce Warwick is currently the President of Warwick Strategies, an organization based in the San Francisco Bay Area offering premium, personalized private tutoring for the GMAT, LSAT and GRE. Bryce has a JD from the George Washington University Law School. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 49,174 times.

Are you curious about using ChatGPT to write an essay? While most instructors have tools that make it easy to detect AI-written essays, there are ways you can use OpenAI's ChatGPT to write papers without worrying about plagiarism or getting caught. In addition to writing essays for you, ChatGPT can also help you come up with topics, write outlines, find sources, check your grammar, and even format your citations. This wikiHow article will teach you the best ways to use ChatGPT to write essays, including helpful example prompts that will generate impressive papers.

Things You Should Know

- To have ChatGPT write an essay, tell it your topic, word count, type of essay, and facts or viewpoints to include.

- ChatGPT is also useful for generating essay topics, writing outlines, and checking grammar.

- Because ChatGPT can make mistakes and trigger AI-detection alarms, it's better to use AI to assist with writing than have it do the writing.

- Before using OpenAI's ChatGPT to write your essay, make sure you understand your instructor's policies on AI tools. Using ChatGPT may be against the rules, and it's easy for instructors to detect AI-written essays.

- While you can use ChatGPT to write a polished-looking essay, there are drawbacks. Most importantly, ChatGPT cannot verify facts or provide references. This means that essays created by ChatGPT may contain made-up facts and biased content. [1] It's best to use ChatGPT for inspiration and examples instead of having it write the essay for you.

- The topic you want to write about.

- Essay length, such as word or page count. Whether you're writing an essay for a class, a college application, or even a cover letter, you'll want to tell ChatGPT how much to write.

- Other assignment details, such as the type of essay (e.g., personal, book report) and points to mention.

- If you're writing an argumentative or persuasive essay, know the stance you want to take so ChatGPT can argue your point.

- If you have notes on the topic that you want to include, you can also provide those to ChatGPT.

- When you plan an essay, decide on a thesis, a topic sentence for each body paragraph, and the examples you expect to present in each paragraph.

- Your plan can be a rough outline rather than a detailed sentence-by-sentence structure, as long as it gives a clear overview of how the points relate.

- "Write a 2,000-word college essay that covers different approaches to gun violence prevention in the United States. Include facts about gun laws and give ideas on how to improve them."

- "Write a 4-page college application essay about an obstacle I have overcome. I am applying to the Geography program and want to be a cartographer. The obstacle is that I have dyslexia. Explain that I have always loved maps, and that having dyslexia makes me better at making them."

- This prompt not only tells ChatGPT the topic, length, and grade level, but also that the essay is personal. ChatGPT will write the essay in the first-person point of view.
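The prompt ingredients listed above are the topic, length, essay type, stance, and notes. The Python sketch below gathers them into one prompt you could paste into ChatGPT. It is a hypothetical illustration, not a ChatGPT feature. The names `EssayRequest` and `build_essay_prompt` are invented here, and the labeled-line layout is one reasonable choice among many.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class EssayRequest:
    """Hypothetical container for the details the article suggests giving ChatGPT."""
    topic: str
    length: str      # e.g. "750 words" or "4 pages"
    essay_type: str  # e.g. "argumentative" or "personal college entrance"
    stance: Optional[str] = None  # only needed for argumentative or persuasive essays
    points: List[str] = field(default_factory=list)  # facts, notes, or viewpoints to include


def build_essay_prompt(req: EssayRequest) -> str:
    """Join the request details into one plain-text prompt, one detail per line."""
    lines = [
        f"Write an essay about {req.topic}.",
        f"Type of essay: {req.essay_type}",
        f"Length: {req.length}",
    ]
    if req.stance:
        # Tell ChatGPT which side to argue.
        lines.append(f"Argue that {req.stance}.")
    if req.points:
        # List any notes or required points as bullets.
        lines.append("Include these points:")
        lines.extend(f"- {point}" for point in req.points)
    return "\n".join(lines)


if __name__ == "__main__":
    example = EssayRequest(
        topic="the impact of COVID-19 on the United States economy",
        length="1,000 words",
        essay_type="argumentative",
        stance="the government should take more action to support businesses affected by the pandemic",
    )
    print(build_essay_prompt(example))
```

Each detail is a separate field. You can change one detail, such as the stance or the length, and regenerate the prompt without retyping the rest.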

Tyrone Showers

Be specific when using ChatGPT. Clear and concise prompts outlining your exact needs help ChatGPT tailor its response. Specify the desired outcome (e.g., creative writing, informative summary, functional resume), any length constraints (word or character count), and the preferred emotional tone (formal, humorous, etc.).

- In our essay about gun control, ChatGPT did not mention school shootings. If we want to discuss this topic in the essay, we can use the prompt, "Discuss school shootings in the essay."

- Let's say we review our college entrance essay and realize that we forgot to mention that we grew up without parents. Add to the essay by saying, "Mention that my parents died when I was young."

- In the Israel-Palestine essay, ChatGPT explored two options for peace: a two-state solution and a binational (one-state) solution. If you'd rather the essay focus on a single option, ask ChatGPT to remove one. For example, "Change my essay so that it focuses on a binational solution."

Pay close attention to the content ChatGPT generates. If you use ChatGPT often, you'll start noticing its patterns, like its tendency to begin articles with phrases like "in today's digital world." Once you spot patterns, you can refine your prompts to steer ChatGPT in a better direction and avoid repetitive content.

- "Give me ideas for an essay about the Israel-Palestine conflict."

- "Ideas for a persuasive essay about a current event."

- "Give me a list of argumentative essay topics about COVID-19 for a Political Science 101 class."

- "Create an outline for an argumentative essay called 'The Impact of COVID-19 on the Economy.'"

- "Write an outline for an essay about positive uses of AI chatbots in schools."

- "Create an outline for a short 2-page essay on disinformation in the 2016 election."

- "Find peer-reviewed sources for advances in using mRNA vaccines for cancer."

- "Give me a list of sources from academic journals about Black feminism in the movie Black Panther."

- "Give me sources for an essay on current efforts to ban children's books in US libraries."

- "Write a 4-page college paper about how global warming is changing the automotive industry in the United States."

- "Write a 750-word personal college entrance essay about how my experience with homelessness as a child has made me more resilient."

- You can even refer to the outline you created with ChatGPT, as the AI bot can reference up to 3,000 words from the current conversation. For example: "Write a 1,000-word argumentative essay called 'The Impact of COVID-19 on the United States Economy' using the outline you provided. Argue that the government should take more action to support businesses affected by the pandemic."

- One way to do this is to paste a list of the sources you've used, including URLs, book titles, authors, pages, publishers, and other details, into ChatGPT along with the instruction "Create an MLA Works Cited page for these sources."

- You can also ask ChatGPT to provide a list of sources, and then build a Works Cited or References page that includes those sources. You can then replace sources you didn't use with the sources you did use.

Expert Q&A

- Because it's easy for teachers, hiring managers, and college admissions offices to spot AI-written essays, it's best to use your ChatGPT-written essay as a guide to write your own essay. Using the structure and ideas from ChatGPT, write an essay in the same format, but using your own words.

- Always double-check the facts in your essay, and make sure facts are backed up with legitimate sources.

- If you see an error that says ChatGPT is at capacity, wait a few moments and try again.

- Using ChatGPT to write or assist with your essay may be against your instructor's rules. Make sure you understand the consequences of using ChatGPT to write or assist with your essay.

- ChatGPT-written essays may include factual inaccuracies, outdated information, and inadequate detail. [3]

[1] https://help.openai.com/en/articles/6783457-what-is-chatgpt

[2] https://platform.openai.com/examples/default-essay-outline

[3] https://www.ipl.org/div/chatgpt/

ChatGPT: Everything you need to know about the AI-powered chatbot

ChatGPT, OpenAI’s text-generating AI chatbot, has taken the world by storm since its launch in November 2022. What started as a tool to hyper-charge productivity through writing essays and code with short text prompts has evolved into a behemoth used by more than 92% of Fortune 500 companies.

That growth has propelled OpenAI itself into becoming one of the most-hyped companies in recent memory. And its latest partnership with Apple for its upcoming generative AI offering, Apple Intelligence, has given the company another significant bump in the AI race.

2024 also saw the release of GPT-4o, OpenAI’s new flagship omni model for ChatGPT. GPT-4o is now the default free model, complete with voice and vision capabilities. Shortly after demoing GPT-4o, however, OpenAI paused one of its voices, Sky, following allegations that it was mimicking Scarlett Johansson’s voice in “Her.”

OpenAI is facing internal drama, including the high-profile exit of co-founder and longtime chief scientist Ilya Sutskever as the company dissolved its Superalignment team. OpenAI is also facing a lawsuit from Alden Global Capital-owned newspapers, including the New York Daily News and the Chicago Tribune, for alleged copyright infringement, following a similar suit filed by The New York Times last year.

Here’s a timeline of ChatGPT product updates and releases, starting with the latest, which we’ve been updating throughout the year. And if you have any other questions, check out our ChatGPT FAQ.

Timeline of the most recent ChatGPT updates

OpenAI delays ChatGPT’s new Voice Mode

OpenAI planned to start rolling out its Advanced Voice Mode feature to a small group of ChatGPT Plus users in late June, but it says lingering issues forced it to postpone the launch to July. OpenAI says Advanced Voice Mode might not launch for all ChatGPT Plus customers until the fall, depending on whether it meets certain internal safety and reliability checks.

ChatGPT releases app for Mac

ChatGPT for macOS is now available for all users. With the app, users can quickly call up ChatGPT by using the keyboard combination of Option + Space. The app allows users to upload files and photos, as well as speak to ChatGPT from their desktop and search through their past conversations.

The ChatGPT desktop app for macOS is now available for all users. Get faster access to ChatGPT to chat about email, screenshots, and anything on your screen with the Option + Space shortcut: https://t.co/2rEx3PmMqg pic.twitter.com/x9sT8AnjDm — OpenAI (@OpenAI) June 25, 2024

Apple brings ChatGPT to its apps, including Siri

Apple announced at WWDC 2024 that it is bringing ChatGPT to Siri and other first-party apps and capabilities across its operating systems. The ChatGPT integrations, powered by GPT-4o, will arrive on iOS 18, iPadOS 18 and macOS Sequoia later this year, and will be free without the need to create a ChatGPT or OpenAI account. Features exclusive to paying ChatGPT users will also be available through Apple devices.

Apple is bringing ChatGPT to Siri and other first-party apps and capabilities across its operating systems #WWDC24 Read more: https://t.co/0NJipSNJoS pic.twitter.com/EjQdPBuyy4 — TechCrunch (@TechCrunch) June 10, 2024

House Oversight subcommittee invites Scarlett Johansson to testify about ‘Sky’ controversy

Scarlett Johansson has been invited to testify about the controversy surrounding OpenAI’s Sky voice at a hearing for the House Oversight Subcommittee on Cybersecurity, Information Technology, and Government Innovation. In a letter, Rep. Nancy Mace said Johansson’s testimony could “provide a platform” for concerns around deepfakes.

ChatGPT experiences two outages in a single day

ChatGPT was down twice in one day: one multi-hour outage in the early hours of Tuesday morning and another outage later in the day that is still ongoing. Anthropic’s Claude and Perplexity also experienced some issues.

You're not alone, ChatGPT is down once again. pic.twitter.com/Ydk2vNOOK6 — TechCrunch (@TechCrunch) June 4, 2024

The Atlantic and Vox Media ink content deals with OpenAI

The Atlantic and Vox Media have announced licensing and product partnerships with OpenAI. Both agreements allow OpenAI to use the publishers’ current content to generate responses in ChatGPT, which will feature citations to relevant articles. Vox Media says it will use OpenAI’s technology to build “audience-facing and internal applications,” while The Atlantic will build a new experimental product called Atlantic Labs.

I am delighted that @theatlantic now has a strategic content & product partnership with @openai . Our stories will be discoverable in their new products and we'll be working with them to figure out new ways that AI can help serious, independent media : https://t.co/nfSVXW9KpB — nxthompson (@nxthompson) May 29, 2024

OpenAI signs 100K PwC workers to ChatGPT’s enterprise tier

OpenAI announced a new deal with management consulting giant PwC. PwC will become OpenAI’s biggest customer to date, covering 100,000 users, and will become OpenAI’s first partner for selling its enterprise offerings to other businesses.

OpenAI says it is training its GPT-4 successor

OpenAI announced in a blog post that it has recently begun training its next flagship model to succeed GPT-4. The news came in an announcement of its new safety and security committee, which is responsible for informing safety and security decisions across OpenAI’s products.

Former OpenAI director claims the board found out about ChatGPT on Twitter

On The TED AI Show podcast, former OpenAI board member Helen Toner revealed that the board did not know about ChatGPT until its launch in November 2022. Toner also said that Sam Altman gave the board inaccurate information about the safety processes the company had in place and that he didn’t disclose his involvement in the OpenAI Startup Fund.

Sharing this, recorded a few weeks ago. Most of the episode is about AI policy more broadly, but this was my first longform interview since the OpenAI investigation closed, so we also talked a bit about November. Thanks to @bilawalsidhu for a fun conversation! https://t.co/h0PtK06T0K — Helen Toner (@hlntnr) May 28, 2024

ChatGPT’s mobile app revenue saw biggest spike yet following GPT-4o launch

The launch of GPT-4o has driven the company’s biggest-ever spike in revenue on mobile, despite the model being freely available on the web. Mobile users are being pushed to upgrade to its $19.99 monthly subscription, ChatGPT Plus, if they want to experiment with OpenAI’s most recent launch.

OpenAI to remove ChatGPT’s Scarlett Johansson-like voice

After demoing its new GPT-4o model last week, OpenAI announced it is pausing one of its voices, Sky, after users found that it sounded similar to Scarlett Johansson in “Her.”

OpenAI explained in a blog post that Sky’s voice is “not an imitation” of the actress and that AI voices should not intentionally mimic the voice of a celebrity. The blog post went on to explain how the company chose its voices: Breeze, Cove, Ember, Juniper and Sky.

We’ve heard questions about how we chose the voices in ChatGPT, especially Sky. We are working to pause the use of Sky while we address them. Read more about how we chose these voices: https://t.co/R8wwZjU36L — OpenAI (@OpenAI) May 20, 2024

ChatGPT lets you add files from Google Drive and Microsoft OneDrive

OpenAI announced new updates for easier data analysis within ChatGPT. Users can now upload files directly from Google Drive and Microsoft OneDrive, interact with tables and charts, and export customized charts for presentations. The company says these improvements will be added to GPT-4o in the coming weeks.

We're rolling out interactive tables and charts along with the ability to add files directly from Google Drive and Microsoft OneDrive into ChatGPT. Available to ChatGPT Plus, Team, and Enterprise users over the coming weeks. https://t.co/Fu2bgMChXt pic.twitter.com/M9AHLx5BKr — OpenAI (@OpenAI) May 16, 2024

OpenAI inks deal to train AI on Reddit data

OpenAI announced a partnership with Reddit that will give the company access to “real-time, structured and unique content” from the social network. Content from Reddit will be incorporated into ChatGPT, and the companies will work together to bring new AI-powered features to Reddit users and moderators.

We’re partnering with Reddit to bring its content to ChatGPT and new products: https://t.co/xHgBZ8ptOE — OpenAI (@OpenAI) May 16, 2024

OpenAI debuts GPT-4o “omni” model now powering ChatGPT

OpenAI’s spring update event saw the reveal of its new omni model, GPT-4o, which has a black hole-like interface, as well as voice and vision capabilities that feel eerily like something out of “Her.” GPT-4o is set to roll out “iteratively” across its developer and consumer-facing products over the next few weeks.

OpenAI demos real-time language translation with its latest GPT-4o model. pic.twitter.com/pXtHQ9mKGc — TechCrunch (@TechCrunch) May 13, 2024

OpenAI to build a tool that lets content creators opt out of AI training

The company announced it’s building a tool, Media Manager, that will allow creators to better control how their content is being used to train generative AI models — and give them an option to opt out. The goal is to have the new tool in place and ready to use by 2025.

OpenAI explores allowing AI porn

In a new peek behind the curtain of its AI’s secret instructions, OpenAI also released a new NSFW policy. Though it’s intended to start a conversation about how it might allow explicit images and text in its AI products, it raises questions about whether OpenAI, or any generative AI vendor, can be trusted to handle sensitive content ethically.

OpenAI and Stack Overflow announce partnership

In a new partnership, OpenAI will get access to developer platform Stack Overflow’s API and will get feedback from developers to improve the performance of its AI models. In return, OpenAI will include attributions to Stack Overflow in ChatGPT. However, the deal was unpopular with some Stack Overflow users, some of whom sabotaged their answers in protest.

U.S. newspapers file copyright lawsuit against OpenAI and Microsoft

Alden Global Capital-owned newspapers, including the New York Daily News, the Chicago Tribune, and the Denver Post, are suing OpenAI and Microsoft for copyright infringement. The lawsuit alleges that the companies stole millions of copyrighted articles “without permission and without payment” to bolster ChatGPT and Copilot.

OpenAI inks content licensing deal with Financial Times

OpenAI has partnered with another European news publisher, London’s Financial Times, which the company will pay for content access. “Through the partnership, ChatGPT users will be able to see select attributed summaries, quotes and rich links to FT journalism in response to relevant queries,” the FT wrote in a press release.

OpenAI opens Tokyo hub, adds GPT-4 model optimized for Japanese

OpenAI is opening a new office in Tokyo and has plans for a GPT-4 model optimized specifically for the Japanese language. The move underscores how OpenAI will likely need to localize its technology to different languages as it expands.

Sam Altman pitches ChatGPT Enterprise to Fortune 500 companies

According to Reuters, OpenAI’s Sam Altman hosted hundreds of executives from Fortune 500 companies across several cities in April, pitching versions of its AI services intended for corporate use.

OpenAI releases “more direct, less verbose” version of GPT-4 Turbo

Premium ChatGPT users (customers paying for ChatGPT Plus, Team or Enterprise) can now use an updated and enhanced version of GPT-4 Turbo. The new model brings with it improvements in writing, math, logical reasoning and coding, OpenAI claims, as well as a more up-to-date knowledge base.

Our new GPT-4 Turbo is now available to paid ChatGPT users. We’ve improved capabilities in writing, math, logical reasoning, and coding. Source: https://t.co/fjoXDCOnPr pic.twitter.com/I4fg4aDq1T — OpenAI (@OpenAI) April 12, 2024

ChatGPT no longer requires an account — but there’s a catch

You can now use ChatGPT without signing up for an account, but it won’t be quite the same experience. You won’t be able to save or share chats, use custom instructions, or access other features associated with a persistent account. This version of ChatGPT will have “slightly more restrictive content policies,” according to OpenAI. When TechCrunch asked for more details, however, the response was unclear:

“The signed out experience will benefit from the existing safety mitigations that are already built into the model, such as refusing to generate harmful content. In addition to these existing mitigations, we are also implementing additional safeguards specifically designed to address other forms of content that may be inappropriate for a signed out experience,” a spokesperson said.

OpenAI’s chatbot store is filling up with spam

TechCrunch found that OpenAI’s GPT Store is flooded with bizarre, potentially copyright-infringing GPTs. A cursory search pulls up GPTs that claim to generate art in the style of Disney and Marvel properties but serve as little more than funnels to third-party paid services, as well as GPTs that advertise themselves as being able to bypass AI content detection tools.

The New York Times responds to OpenAI’s claims that it “hacked” ChatGPT for its copyright lawsuit

In a court filing opposing OpenAI’s motion to dismiss The New York Times’ lawsuit alleging copyright infringement, the newspaper asserted that “OpenAI’s attention-grabbing claim that The Times ‘hacked’ its products is as irrelevant as it is false.” The New York Times also claimed that some users of ChatGPT used the tool to bypass its paywalls.

OpenAI VP doesn’t say whether artists should be paid for training data

At a SXSW 2024 panel, Peter Deng, OpenAI’s VP of consumer product, dodged a question on whether artists whose work was used to train generative AI models should be compensated. While OpenAI lets artists “opt out” of and remove their work from the datasets that the company uses to train its image-generating models, some artists have described the tool as onerous.

A new report estimates that ChatGPT uses more than half a million kilowatt-hours of electricity per day

ChatGPT’s environmental impact appears to be massive. According to a report from The New Yorker, ChatGPT uses an estimated 17,000 times as much electricity as the average U.S. household to respond to roughly 200 million requests each day.
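The headline and body figures can be related with simple arithmetic. This worked example assumes that "more than half a million" means 500,000 kWh per day. Because the headline says "more than," the results below are rough lower bounds, not figures from the report itself.

$$\frac{500{,}000\ \text{kWh/day}}{200{,}000{,}000\ \text{requests/day}} = 0.0025\ \text{kWh} = 2.5\ \text{Wh per request}$$

$$\frac{500{,}000\ \text{kWh/day}}{17{,}000} \approx 29.4\ \text{kWh/day for the average U.S. household}$$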

ChatGPT can now read its answers aloud

OpenAI released a new Read Aloud feature for the web version of ChatGPT as well as the iOS and Android apps. The feature allows ChatGPT to read its responses to queries in one of five voice options and can speak 37 languages, according to the company. Read Aloud is available on both GPT-4 and GPT-3.5 models.

ChatGPT can now read responses to you. On iOS or Android, tap and hold the message and then tap “Read Aloud”. We’ve also started rolling on web – click the "Read Aloud" button below the message. pic.twitter.com/KevIkgAFbG — OpenAI (@OpenAI) March 4, 2024

OpenAI partners with Dublin City Council to use GPT-4 for tourism

As part of a new partnership with OpenAI, the Dublin City Council will use GPT-4 to craft personalized itineraries for travelers, including recommendations of unique and cultural destinations, in an effort to support tourism across Europe.

A law firm used ChatGPT to justify a six-figure bill for legal services

New York-based law firm Cuddy Law was criticized by a judge for using ChatGPT to calculate its hourly billing rate. The firm submitted a $113,500 bill to the court, which was then halved by District Judge Paul Engelmayer, who called the figure “well above” reasonable demands.

ChatGPT experienced a bizarre bug for several hours

ChatGPT users found that ChatGPT was giving nonsensical answers for several hours, prompting OpenAI to investigate the issue. Incidents varied from repetitive phrases to confusing and incorrect answers to queries. The issue was resolved by OpenAI the following morning.

Match Group announced deal with OpenAI with a press release co-written by ChatGPT

The dating app giant home to Tinder, Match and OkCupid announced an enterprise agreement with OpenAI in an enthusiastic press release written with the help of ChatGPT. The AI tech will be used to help employees with work-related tasks and comes as part of Match’s $20 million-plus bet on AI in 2024.

ChatGPT will now remember — and forget — things you tell it to

As part of a test, OpenAI began rolling out new “memory” controls for a small portion of ChatGPT free and paid users, with a broader rollout to follow. The controls let you tell ChatGPT explicitly to remember something, see what it remembers or turn off its memory altogether. Note that deleting a chat from chat history won’t erase ChatGPT’s or a custom GPT’s memories — you must delete the memory itself.

We’re testing ChatGPT's ability to remember things you discuss to make future chats more helpful. This feature is being rolled out to a small portion of Free and Plus users, and it's easy to turn on or off. https://t.co/1Tv355oa7V pic.twitter.com/BsFinBSTbs — OpenAI (@OpenAI) February 13, 2024

OpenAI begins rolling out “Temporary Chat” feature

Initially limited to a small subset of free and subscription users, Temporary Chat lets you have a dialogue with a blank slate. With Temporary Chat, ChatGPT won’t be aware of previous conversations or access memories but will follow custom instructions if they’re enabled.

However, OpenAI says it may keep a copy of Temporary Chat conversations for up to 30 days for “safety reasons.”

Use temporary chat for conversations in which you don’t want to use memory or appear in history. pic.twitter.com/H1U82zoXyC — OpenAI (@OpenAI) February 13, 2024

ChatGPT users can now invoke GPTs directly in chats

Paid users of ChatGPT can now bring GPTs into a conversation by typing “@” and selecting a GPT from the list. The chosen GPT will have an understanding of the full conversation, and different GPTs can be “tagged in” for different use cases and needs.

You can now bring GPTs into any conversation in ChatGPT – simply type @ and select the GPT. This allows you to add relevant GPTs with the full context of the conversation. pic.twitter.com/Pjn5uIy9NF — OpenAI (@OpenAI) January 30, 2024

ChatGPT is reportedly leaking usernames and passwords from users’ private conversations

Screenshots provided to Ars Technica suggested that ChatGPT may be leaking unpublished research papers, login credentials and private information from its users. An OpenAI representative told Ars Technica that the company was investigating the report.

ChatGPT is violating Europe’s privacy laws, Italian DPA tells OpenAI

OpenAI has been told it’s suspected of violating European Union privacy law, following a multi-month investigation of ChatGPT by Italy’s data protection authority. Details of the draft findings haven’t been disclosed, but in a response, OpenAI said: “We want our AI to learn about the world, not about private individuals.”

OpenAI partners with Common Sense Media to collaborate on AI guidelines

In an effort to win the trust of parents and policymakers, OpenAI announced it’s partnering with Common Sense Media to collaborate on AI guidelines and education materials for parents, educators and young adults. The organization works to identify and minimize tech harms to young people and previously flagged ChatGPT as lacking in transparency and privacy.

OpenAI responds to Congressional Black Caucus about lack of diversity on its board

After a letter from the Congressional Black Caucus questioned the lack of diversity in OpenAI’s board, the company responded. The response, signed by CEO Sam Altman and Chairman of the Board Bret Taylor, said building a complete and diverse board was one of the company’s top priorities and that it was working with an executive search firm to assist it in finding talent.

OpenAI drops prices and fixes ‘lazy’ GPT-4 that refused to work

In a blog post, OpenAI announced price drops for the GPT-3.5 Turbo API. Input prices fell by 50% and output prices by 25%, to $0.0005 per thousand input tokens and $0.0015 per thousand output tokens. GPT-4 Turbo also got a new preview model for API use, which includes an interesting fix that aims to reduce “laziness” that users have experienced.

Expanding the platform for @OpenAIDevs : new generation of embedding models, updated GPT-4 Turbo, and lower pricing on GPT-3.5 Turbo. https://t.co/7wzCLwB1ax — OpenAI (@OpenAI) January 25, 2024
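The per-token pricing in this entry can be turned into a quick cost estimate. The Python sketch below is a back-of-the-envelope calculator, not an OpenAI tool. It assumes the two GPT-3.5 Turbo prices quoted here, which may have changed since. The token counts in the example are hypothetical. The sketch also works out the pre-cut prices implied by the 50% and 25% reductions.

```python
# GPT-3.5 Turbo API prices as quoted in this entry (USD per 1,000 tokens).
# Assumed for illustration only; current prices may differ.
INPUT_PRICE_PER_1K = 0.0005
OUTPUT_PRICE_PER_1K = 0.0015


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request: (tokens / 1,000) x price, summed over input and output."""
    return (input_tokens / 1000) * INPUT_PRICE_PER_1K + (output_tokens / 1000) * OUTPUT_PRICE_PER_1K


def price_before_cut(new_price: float, cut: float) -> float:
    """Recover the old price from the new price and a fractional cut (0.5 means 50% off)."""
    return new_price / (1 - cut)


if __name__ == "__main__":
    # Hypothetical request: 2,000 input tokens and 500 output tokens.
    print(f"Cost: ${request_cost(2000, 500):.5f}")  # 0.001 + 0.00075 = 0.00175
    print(f"Old input price:  ${price_before_cut(INPUT_PRICE_PER_1K, 0.50):.4f} per 1K tokens")
    print(f"Old output price: ${price_before_cut(OUTPUT_PRICE_PER_1K, 0.25):.4f} per 1K tokens")
```

At these rates the hypothetical request costs about $0.00175. The implied pre-cut prices are $0.001 per 1,000 input tokens and $0.002 per 1,000 output tokens.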

OpenAI bans developer of a bot impersonating a presidential candidate

OpenAI has suspended AI startup Delphi, which developed a bot impersonating Rep. Dean Phillips (D-Minn.) to help bolster his presidential campaign. The ban comes just weeks after OpenAI published a plan to combat election misinformation, which listed “chatbots impersonating candidates” as against its policy.

OpenAI announces partnership with Arizona State University

Beginning in February, Arizona State University will have full access to ChatGPT’s Enterprise tier, which the university plans to use to build a personalized AI tutor, develop AI avatars, bolster its prompt engineering course and more. It marks OpenAI’s first partnership with a higher education institution.

Winner of a literary prize reveals around 5% of her novel was written by ChatGPT

After receiving the prestigious Akutagawa Prize for her novel The Tokyo Tower of Sympathy, author Rie Kudan admitted that around 5% of the book quoted ChatGPT-generated sentences “verbatim.” Interestingly enough, the novel revolves around a futuristic world with a pervasive presence of AI.

Sam Altman teases video capabilities for ChatGPT and the release of GPT-5

In a conversation with Bill Gates on the Unconfuse Me podcast, Sam Altman confirmed an upcoming release of GPT-5 that will be “fully multimodal with speech, image, code, and video support.” Altman said users can expect to see GPT-5 drop sometime in 2024.

OpenAI announces team to build ‘crowdsourced’ governance ideas into its models

OpenAI is forming a Collective Alignment team of researchers and engineers to create a system for collecting and “encoding” public input on its models’ behaviors into OpenAI products and services. This comes as a part of OpenAI’s public program to award grants to fund experiments in setting up a “democratic process” for determining the rules AI systems follow.

OpenAI unveils plan to combat election misinformation

In a blog post, OpenAI announced users will not be allowed to build applications for political campaigning and lobbying until the company works out how effective their tools are for “personalized persuasion.”

Users will also be banned from creating chatbots that impersonate candidates or government institutions, and from using OpenAI tools to misrepresent the voting process or otherwise discourage voting.

The company is also testing out a tool that detects DALL-E generated images and will incorporate access to real-time news, with attribution, in ChatGPT.

Snapshot of how we’re preparing for 2024’s worldwide elections: • Working to prevent abuse, including misleading deepfakes • Providing transparency on AI-generated content • Improving access to authoritative voting information https://t.co/qsysYy5l0L — OpenAI (@OpenAI) January 15, 2024

OpenAI changes policy to allow military applications

In an unannounced update to its usage policy, OpenAI removed language previously prohibiting the use of its products for the purposes of "military and warfare." In an additional statement, OpenAI confirmed that the language was changed to accommodate military customers and projects that do not violate its ban on efforts to use its tools to "harm people, develop weapons, for communications surveillance, or to injure others or destroy property."

ChatGPT subscription aimed at small teams debuts

Aptly called ChatGPT Team, the new plan provides a dedicated workspace for teams of up to 149 people using ChatGPT, as well as admin tools for team management. In addition to access to GPT-4, GPT-4 with Vision and DALL-E 3, ChatGPT Team lets teams build and share GPTs for their business needs.

OpenAI’s GPT store officially launches

After some back and forth over the last few months, OpenAI's GPT Store is finally here. The feature lives in a new tab in the ChatGPT web client and includes a range of GPTs developed both by OpenAI's partners and the wider dev community.

To access the GPT Store, users must be subscribed to one of OpenAI’s premium ChatGPT plans — ChatGPT Plus, ChatGPT Enterprise or the newly launched ChatGPT Team.

the GPT store is live! https://t.co/AKg1mjlvo2 fun speculation last night about which GPTs will be doing the best by the end of today. — Sam Altman (@sama) January 10, 2024

Developing AI models would be “impossible” without copyrighted materials, OpenAI claims

Following a proposed ban on using news publications and books to train AI chatbots in the U.K., OpenAI submitted a plea to the House of Lords communications and digital committee. OpenAI argued that it would be “impossible” to train AI models without using copyrighted materials, and that they believe copyright law “does not forbid training.”

OpenAI claims The New York Times’ copyright lawsuit is without merit

OpenAI published a public response to The New York Times’s lawsuit against them and Microsoft for allegedly violating copyright law, claiming that the case is without merit.

In the response, OpenAI reiterates its view that training AI models using publicly available data from the web is fair use. It also makes the case that regurgitation is less likely to occur with training data from a single source and places the onus on users to "act responsibly."

We build AI to empower people, including journalists. Our position on the @nytimes lawsuit: • Training is fair use, but we provide an opt-out • "Regurgitation" is a rare bug we're driving to zero • The New York Times is not telling the full story https://t.co/S6fSaDsfKb — OpenAI (@OpenAI) January 8, 2024

OpenAI’s app store for GPTs planned to launch next week

After being delayed in December, OpenAI plans to launch its GPT Store sometime in the coming week, according to an email viewed by TechCrunch. OpenAI says developers building GPTs will have to review the company's updated usage policies and GPT brand guidelines to ensure their GPTs are compliant before they're eligible for listing in the GPT Store. OpenAI's update notably didn't include any information on the expected monetization opportunities for developers listing their apps on the storefront.

GPT Store launching next week – OpenAI pic.twitter.com/I6mkZKtgZG — Manish Singh (@refsrc) January 4, 2024

OpenAI moves to shrink regulatory risk in EU around data privacy

In an email, OpenAI detailed an incoming update to its terms, including changing the OpenAI entity providing services to EEA and Swiss residents to OpenAI Ireland Limited. The move appears to be intended to shrink its regulatory risk in the European Union, where the company has been under scrutiny over ChatGPT’s impact on people’s privacy.

What is ChatGPT? How does it work?

ChatGPT is a general-purpose chatbot, developed by tech startup OpenAI, that uses artificial intelligence to generate text after a user enters a prompt. The chatbot uses GPT-4, a large language model that uses deep learning to produce human-like text.

When did ChatGPT get released?

ChatGPT was released for public use on November 30, 2022.

What is the latest version of ChatGPT?

Both the free version of ChatGPT and the paid ChatGPT Plus are regularly updated with new GPT models. The most recent model is GPT-4o.

Can I use ChatGPT for free?

Yes. In addition to the paid version, ChatGPT Plus, there is a free version of ChatGPT that only requires a sign-in.

Who uses ChatGPT?

Anyone can use ChatGPT! More and more tech companies and search engines are utilizing the chatbot to automate text or quickly answer user questions/concerns.

What companies use ChatGPT?

Multiple enterprises utilize ChatGPT, although others may limit the use of the AI-powered tool.

Most recently, Microsoft announced at its 2023 Build conference that it is integrating its ChatGPT-based Bing experience into Windows 11. Brooklyn-based 3D display startup Looking Glass uses ChatGPT to produce holograms you can talk to. And the nonprofit organization Solana officially integrated the chatbot into its network with a ChatGPT plug-in geared toward end users to help them onboard into the web3 space.

What does GPT mean in ChatGPT?

GPT stands for Generative Pre-Trained Transformer.

What is the difference between ChatGPT and a chatbot?

A chatbot can be any software or system that holds a dialogue with a person, but it doesn't necessarily have to be AI-powered. For example, some chatbots are rules-based, in the sense that they give canned responses to questions.

ChatGPT is AI-powered and utilizes LLM technology to generate text after a prompt.
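To make the contrast concrete, here is a minimal sketch of a rules-based chatbot. The keywords and replies are hypothetical. Every answer comes from a fixed lookup table, and anything outside the table falls through to a default reply. An LLM-based system like ChatGPT instead generates new text for each prompt.

```python
# Hypothetical keyword -> canned-response table for a rules-based bot.
CANNED_RESPONSES = {
    "hours": "We're open 9 a.m. to 5 p.m., Monday through Friday.",
    "refund": "Refunds are processed within five business days.",
}
FALLBACK = "Sorry, I didn't understand. Try asking about hours or refunds."


def reply(message: str) -> str:
    """Return the first canned response whose keyword appears in the message."""
    text = message.lower()
    for keyword, response in CANNED_RESPONSES.items():
        if keyword in text:
            return response
    # No rule matched, so the bot has nothing new to say.
    return FALLBACK


print(reply("What are your hours?"))
print(reply("Write me a poem about autumn"))
```

The second call shows the limitation. A request the rules never anticipated gets the fallback message rather than a generated answer.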

Can ChatGPT write essays?

Can ChatGPT commit libel?

Due to the nature of how these models work, they don't know or care whether something is true, only that it looks true. That's a problem when you're using it to do your homework, sure, but when it accuses you of a crime you didn't commit, that may well at this point be libel.

We will see how handling troubling statements produced by ChatGPT will play out over the next few months as tech and legal experts attempt to tackle the fastest moving target in the industry.

Does ChatGPT have an app?

Yes, there is a free ChatGPT mobile app for iOS and Android users.

What is the ChatGPT character limit?

It’s not documented anywhere that ChatGPT has a character limit. However, users have noted that there are some character limitations after around 500 words.

Does ChatGPT have an API?

Yes, it was released March 1, 2023.

What are some sample everyday uses for ChatGPT?

Everyday examples include programming, scripts, email replies, listicles, blog ideas, summarization, etc.

What are some advanced uses for ChatGPT?

Advanced use examples include debugging code, programming languages, scientific concepts, complex problem solving, etc.

How good is ChatGPT at writing code?

It depends on the nature of the program. While ChatGPT can write workable Python code, it can’t necessarily program an entire app’s worth of code. That’s because ChatGPT lacks context awareness — in other words, the generated code isn’t always appropriate for the specific context in which it’s being used.

Can you save a ChatGPT chat?

Yes. OpenAI allows users to save chats in the ChatGPT interface, stored in the sidebar of the screen. There are no built-in sharing features yet.

Are there alternatives to ChatGPT?

Yes. There are multiple AI-powered chatbot competitors, such as Together, Google's Gemini and Anthropic's Claude, and developers are creating open source alternatives.

How does ChatGPT handle data privacy?

OpenAI has said that individuals in "certain jurisdictions" (such as the EU) can object to the processing of their personal information by its AI models by filling out this form. This includes the ability to request deletion of AI-generated references about you, although OpenAI notes it may not grant every request, since it must balance privacy requests against freedom of expression "in accordance with applicable laws."

The web form for requesting deletion of data about you is titled "OpenAI Personal Data Removal Request."

In its privacy policy, the ChatGPT maker makes a passing acknowledgement of the objection requirements attached to relying on “legitimate interest” (LI), pointing users towards more information about requesting an opt out — when it writes: “See here for instructions on how you can opt out of our use of your information to train our models.”

What controversies have surrounded ChatGPT?

Recently, Discord announced that it had integrated OpenAI's technology into its bot, Clyde. Two users tricked Clyde into providing them with instructions for making the illegal drug methamphetamine (meth) and the incendiary mixture napalm.

An Australian mayor has publicly announced he may sue OpenAI for defamation due to ChatGPT’s false claims that he had served time in prison for bribery. This would be the first defamation lawsuit against the text-generating service.

CNET found itself in the midst of controversy after Futurism reported the publication was publishing articles under a mysterious byline completely generated by AI. The private equity company that owns CNET, Red Ventures, was accused of using ChatGPT for SEO farming, even if the information was incorrect.

Several major school systems and colleges, including New York City Public Schools, have banned ChatGPT from their networks and devices. They claim that the AI impedes the learning process by promoting plagiarism and misinformation, a claim that not every educator agrees with.

There have also been cases of ChatGPT falsely accusing individuals of crimes.

Where can I find examples of ChatGPT prompts?

Several marketplaces host and provide ChatGPT prompts, either for free or for a nominal fee. One is PromptBase. Another is ChatX. More launch every day.

Can ChatGPT be detected?

Poorly. Several tools claim to detect ChatGPT-generated text, but in our tests, they're inconsistent at best.

Are ChatGPT chats public?

No. But OpenAI recently disclosed a bug, since fixed, that exposed the titles of some users’ conversations to other people on the service.

What lawsuits are there surrounding ChatGPT?

None specifically targeting ChatGPT. But OpenAI is involved in at least one lawsuit that has implications for AI systems trained on publicly available data, which would touch on ChatGPT.

Are there issues regarding plagiarism with ChatGPT?

Yes. Text-generating AI models like ChatGPT have a tendency to regurgitate content from their training data.



Anatomy of an AI Essay

How might you distinguish one from a human-composed counterpart? After analyzing dozens, Elizabeth Steere lists some key predictable features.

By Elizabeth Steere


Since OpenAI launched ChatGPT in 2022, educators have been grappling with the problem of how to recognize and address AI-generated writing. The host of AI-detection tools that have emerged over the past year vary greatly in their capabilities and reliability. For example, mere months after OpenAI launched its own AI detector, the company shut it down due to its low accuracy rate.

Understandably, students have expressed concerns over the possibility of their work receiving false positives as AI-generated content. Some institutions have disabled Turnitin's AI-detection feature due to concerns over potential false allegations of AI plagiarism that may disproportionately affect English-language learners. At the same time, tools that rephrase AI writing, such as text spinners, text inflators or text "humanizers," can effectively disguise AI-generated text from detection. There are even tools that mimic human typing to conceal AI use in a document's metadata.

While the capabilities of large language models such as ChatGPT are impressive, they are also limited, as they strongly adhere to specific formulas and phrasing. Turnitin's website explains that its AI-detection tool relies on the fact that "GPT-3 and ChatGPT tend to generate the next word in a sequence of words in a consistent and highly probable fashion." I am not a computer programmer or statistician, but I have noticed certain attributes in text that point to the probable involvement of AI, and in February, I collected and quantified some of those characteristics in hopes of better recognizing AI essays and sharing those characteristics with students and other faculty members.

I asked ChatGPT 3.5 and the generative AI tool included in the free version of Grammarly each to generate more than 50 analytical essays on early American literature, using texts and prompts from classes I have taught over the past decade. I took note of the characteristics of AI essays that differentiated them from what I have come to expect from their human-composed counterparts. Here are some of the key features I noticed.

AI essays tend to get straight to the point. Human-written work often gradually leads up to its topic, offering personal anecdotes, definitions or rhetorical questions before getting to the topic at hand.

AI-generated essays are often list-like. They may feature numbered body paragraphs or multiple headings and subheadings.

The paragraphs of AI-generated essays also often begin with formulaic transitional phrases. As an example, here are the first words of each paragraph in one essay that ChatGPT produced:

- “In contrast”

- “Furthermore”

- “On the other hand”

- “In conclusion.”

Notably, AI-generated essays were far more likely than human-written essays to begin paragraphs with “Furthermore,” “Moreover” and “Overall.”
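Tallying paragraph openers like these is mechanical enough to script. The sketch below counts how many paragraphs begin with one of the transitions listed above. It assumes paragraphs are separated by blank lines. As the author stresses later, this is one weak signal, not a detector.

```python
import re
from collections import Counter

# Formulaic openers the article reports in AI-generated essays.
TRANSITIONS = ("in contrast", "furthermore", "on the other hand",
               "in conclusion", "moreover", "overall")


def split_paragraphs(essay: str) -> list[str]:
    """Split on blank lines (an assumption about how paragraphs are marked)."""
    return [p.strip() for p in re.split(r"\n\s*\n", essay) if p.strip()]


def opener_counts(essay: str) -> Counter:
    """Count paragraphs whose first words are one of the listed transitions."""
    counts = Counter()
    for para in split_paragraphs(essay):
        lowered = para.lower()
        for phrase in TRANSITIONS:
            # \b stops "overall" from matching a word like "overalls".
            if re.match(rf"{re.escape(phrase)}\b", lowered):
                counts[phrase] += 1
                break
    return counts


def opener_ratio(essay: str) -> float:
    """Fraction of paragraphs that open with a listed transition."""
    paragraphs = split_paragraphs(essay)
    if not paragraphs:
        return 0.0
    return sum(opener_counts(essay).values()) / len(paragraphs)


sample = ("Poe builds dread slowly.\n\n"
          "Furthermore, the house itself decays.\n\n"
          "Moreover, the narrator is unreliable.\n\n"
          "In conclusion, the tale endures.")
print(opener_counts(sample))
print(opener_ratio(sample))
```

In the sample, three of the four paragraphs open with a listed transition, so the ratio is 0.75.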

AI-generated work is often banal. It does not break new ground or demonstrate originality; its assertions sound familiar.

AI-generated text tends to remain in the third person. That’s the case even when asked a reader response–style question. For example, when I asked ChatGPT what it personally found intriguing, meaningful or resonant about one of Edgar Allan Poe’s poems, it produced six paragraphs, but the pronoun “I” was included only once. The rest of the text described the poem’s atmosphere, themes and use of language in dispassionate prose. Grammarly prefaced its answer with “I’m sorry, but I cannot have preferences as I am an AI-powered assistant and do not have emotions or personal opinions,” followed by similarly clinical observations about the text.

AI-produced text tends to discuss “readers” being “challenged” to “confront” ideologies or being “invited” to “reflect” on key topics. In contrast, I have found that human-written text tends to focus on hypothetically what “the reader” might “see,” “feel” or “learn.”

AI-generated essays are often confidently wrong. Human writing is more prone to hedging, using phrases like “I think,” “I feel,” “this might mean …” or “this could be a symbol of …” and so on.

AI-generated essays are often repetitive. An essay that ChatGPT produced on the setting of Rebecca Harding Davis’s short story “Life in the Iron Mills” contained the following assertions among its five brief paragraphs: “The setting serves as a powerful symbol,” “the industrial town itself serves as a central aspect of the setting,” “the roar of furnaces serve as a constant reminder of the relentless pace of industrial production,” “the setting serves as a catalyst for the characters’ struggles and aspirations,” “the setting serves as a microcosm of the larger societal issues of the time,” and “the setting … serves as a powerful symbol of the dehumanizing effects of industrialization.”
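The repetition in that example ("serves as," over and over) can be surfaced by counting adjacent word pairs (bigrams). The following is a minimal sketch with a hypothetical input. Note that it lowercases the text and lets pairs run across sentence boundaries, which is a simplification.

```python
import re
from collections import Counter


def repeated_bigrams(text: str, min_count: int = 2) -> list[tuple[str, int]]:
    """Return word pairs that occur at least min_count times, most frequent first."""
    words = re.findall(r"[a-z']+", text.lower())
    # zip(words, words[1:]) yields each adjacent pair of words.
    bigrams = Counter(zip(words, words[1:]))
    return [(" ".join(pair), n) for pair, n in bigrams.most_common() if n >= min_count]


text = ("The setting serves as a symbol. The town serves as a backdrop. "
        "The roar serves as a reminder.")
print(repeated_bigrams(text))
```

A high-ranking pair like "serves as" marks exactly the kind of repeated construction the author observed. Ordinary function-word pairs such as "as a" will also rank highly, so the output still needs a human reader.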


AI writing is often hyperbolic or overreaching. The quotes above describe a “powerful symbol,” for example. AI essays frequently describe even the most mundane topics as “groundbreaking,” “vital,” “esteemed,” “invaluable,” “indelible,” “essential,” “poignant” or “profound.”

AI-produced texts frequently use metaphors, sometimes awkwardly. ChatGPT produced several essays that compared writing to “weaving” a “rich” or “intricate tapestry” or “painting” a “vivid picture.”

AI-generated essays tend to overexplain. They often use appositives to define people or terms, as in “Margaret Fuller, a pioneering feminist and transcendentalist thinker, explored themes such as individualism, self-reliance and the search for meaning in her writings …”

AI-generated academic writing often employs certain verbs. They include “delve,” “shed light,” “highlight,” “illuminate,” “underscore,” “showcase,” “embody,” “transcend,” “navigate,” “foster,” “grapple,” “strive,” “intertwine,” “espouse” and “endeavor.”
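A word list like this one can be turned into a rough frequency check. The sketch below counts the listed verbs in a text. Its inflection handling is a naive assumption, not a real lemmatizer: it covers regular forms only, so irregular forms like "strove" are missed. It matches whole words, so "transcendentalist" is not counted as "transcend."

```python
import re
from collections import Counter

# Single-word verbs from the article's list; "shed light" is counted separately.
AI_VERBS = ("delve", "highlight", "illuminate", "underscore", "showcase",
            "embody", "transcend", "navigate", "foster", "grapple",
            "strive", "intertwine", "espouse", "endeavor")


def inflections(base: str) -> set[str]:
    """Naive regular inflections (an assumption; irregular forms are missed)."""
    forms = {base, base + "s", base + "ed", base + "ing"}
    if base.endswith("e"):
        forms |= {base + "d", base[:-1] + "ing"}      # delve -> delved, delving
    if base.endswith("y"):
        forms |= {base[:-1] + "ies", base[:-1] + "ied"}  # embody -> embodies, embodied
    return forms


FORM_TO_VERB = {form: verb for verb in AI_VERBS for form in inflections(verb)}


def ai_verb_counts(text: str) -> Counter:
    """Count whole-word occurrences of the listed verbs in any regular form."""
    lowered = text.lower()
    counts = Counter()
    for word in re.findall(r"[a-z]+", lowered):
        verb = FORM_TO_VERB.get(word)
        if verb:
            counts[verb] += 1
    shed = len(re.findall(r"\bshed(?:s|ding)? light\b", lowered))
    if shed:
        counts["shed light"] = shed
    return counts


print(ai_verb_counts("This essay delves into Poe and highlights how the tale "
                     "embodies dread, shedding light on fear. "
                     "Transcendentalist thinkers strive."))
```

A high count is at most a reason to look closer. The article itself notes that humans use these verbs too.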

AI-generated essays tend to end with a sweeping broad-scale statement. They talk about “the human condition,” “American society,” “the search for meaning” or “the resilience of the human spirit.” Texts are often described as a “testament to” variations on these concepts.

AI-generated writing often invents sources. ChatGPT can compose a "research paper" using MLA-style in-text parenthetical citations and Works Cited entries that look correct and convincing, but the supposed sources are often nonexistent. In my experiment, ChatGPT referenced a purported article titled "Poe, 'The Fall of the House of Usher,' and the Gothic's Creation of the Unconscious," which it claimed was published in PMLA, vol. 96, no. 5, 1981, pp. 900–908. The author cited was an actual Poe scholar, but this particular article does not appear on his CV, and while volume 96, number 5 of PMLA did appear in 1981, the pages cited in that issue of PMLA actually span two articles: one on Frankenstein and one on lyric poetry.

AI-generated essays include hallucinations. Ted Chiang’s article on this phenomenon offers a useful explanation for why large language models such as ChatGPT generate fabricated facts and incorrect assertions. My AI-generated essays included references to nonexistent events, characters and quotes. For example, ChatGPT attributed the dubious quote “Half invoked, half spontaneous, full of ill-concealed enthusiasms, her wild heart lay out there” to a lesser-known short story by Herman Melville, yet nothing resembling that quote appears in the actual text. More hallucinations were evident when AI was generating text about less canonical or more recently published literary texts.

This is not an exhaustive list, and I know that AI-generated text in other formats or relating to other fields probably features different patterns and tendencies. I also used only very basic prompts and did not delineate many specific parameters for the output beyond the topic and the format of an essay.

It is also important to remember that the attributes I’ve described are not exclusive to AI-generated texts. In fact, I noticed that the phrase “It is important to … [note/understand/consider]” was a frequent sentence starter in AI-generated work, but, as evidenced in the previous sentence, humans use these constructions, too. After all, large language models train on human-generated text.

And none of these characteristics alone definitively point to a text having been created by AI. Unless a text begins with the phrase “As an AI language model,” it can be difficult to say whether it was entirely or partially generated by AI. Thus, if the nature of a student submission suggests AI involvement, my first course of action is always to reach out to the student themselves for more information. I try to bear in mind that this is a new technology for both students and instructors, and we are all still working to adapt accordingly.

Students may have received mixed messages on what degree or type of AI use is considered acceptable. Since AI is also now integrated into tools their institutions or instructors have encouraged them to use, such as Grammarly, Microsoft Word or Google Docs, the boundaries of how they should use technology to augment human writing may be especially unclear. Students may turn to AI because they lack confidence in their own writing abilities. Ultimately, however, I hope that by discussing the limits and the predictability of AI-generated prose, we can encourage them to embrace and celebrate their unique writerly voices.

Elizabeth Steere is a lecturer in English at the University of North Georgia.

Supporting Dissertation Writers Through the Silent Struggle

While we want Ph.D.

Share This Article

More from teaching.

Our students have been drifting away, Helen Kapstein writes, but we want them to drift back to the mindset of being c

We See You, Student Parents

Alex Rockey recommends eight principles for transforming academic access for them through mobile-friendly courses.

Beyond the Research

Michel Estefan offers a roadmap for helping graduate student instructors cultivate their distinct teaching style.

- Become a Member

- Sign up for Newsletters

- Learning & Assessment

- Diversity & Equity

- Career Development

- Labor & Unionization

- Shared Governance

- Academic Freedom

- Books & Publishing

- Financial Aid

- Residential Life

- Free Speech

- Physical & Mental Health

- Race & Ethnicity

- Sex & Gender

- Socioeconomics

- Traditional-Age

- Adult & Post-Traditional

- Teaching & Learning

- Artificial Intelligence

- Digital Publishing

- Data Analytics

- Administrative Tech

- Alternative Credentials

- Financial Health

- Cost-Cutting

- Revenue Strategies

- Academic Programs

- Physical Campuses

- Mergers & Collaboration

- Fundraising

- Research Universities

- Regional Public Universities

- Community Colleges

- Private Nonprofit Colleges

- Minority-Serving Institutions

- Religious Colleges

- Women's Colleges

- Specialized Colleges

- For-Profit Colleges

- Executive Leadership

- Trustees & Regents

- State Oversight

- Accreditation

- Politics & Elections

- Supreme Court

- Student Aid Policy

- Science & Research Policy

- State Policy

- Colleges & Localities

- Employee Satisfaction

- Remote & Flexible Work

- Staff Issues

- Study Abroad

- International Students in U.S.

- U.S. Colleges in the World

- Intellectual Affairs

- Seeking a Faculty Job

- Advancing in the Faculty

- Seeking an Administrative Job

- Advancing as an Administrator

- Beyond Transfer

- Call to Action

- Confessions of a Community College Dean

- Higher Ed Gamma

- Higher Ed Policy

- Just Explain It to Me!

- Just Visiting

- Law, Policy—and IT?

- Leadership & StratEDgy

- Leadership in Higher Education

- Learning Innovation

- Online: Trending Now

- Resident Scholar

- University of Venus

- Student Voice

- Academic Life

- Health & Wellness

- The College Experience

- Life After College

- Academic Minute

- Weekly Wisdom

- Reports & Data

- Quick Takes

- Advertising & Marketing

- Consulting Services

- Data & Insights

- Hiring & Jobs

- Event Partnerships

4 /5 Articles remaining this month.

Sign up for a free account or log in.

- Sign Up, It’s FREE

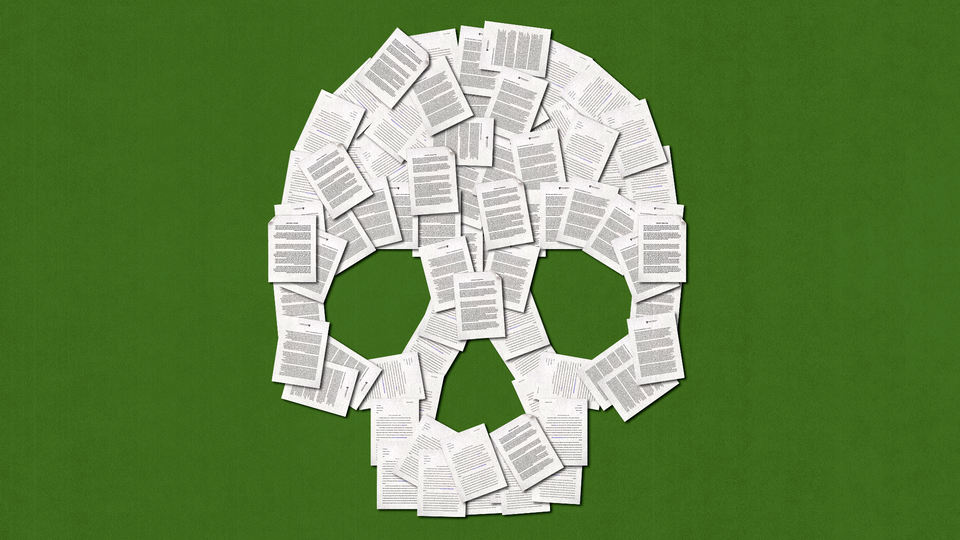

The College Essay Is Dead

Nobody is prepared for how AI will transform academia.

Suppose you are a professor of pedagogy, and you assign an essay on learning styles. A student hands in an essay with the following opening paragraph:

The construct of “learning styles” is problematic because it fails to account for the processes through which learning styles are shaped. Some students might develop a particular learning style because they have had particular experiences. Others might develop a particular learning style by trying to accommodate to a learning environment that was not well suited to their learning needs. Ultimately, we need to understand the interactions among learning styles and environmental and personal factors, and how these shape how we learn and the kinds of learning we experience.

Pass or fail? A- or B+? And how would your grade change if you knew a human student hadn't written it at all? Because Mike Sharples, a professor in the U.K., used GPT-3, a large language model from OpenAI that automatically generates text from a prompt, to write it. (The whole essay, which Sharples considered graduate-level, is available online, complete with references.) Personally, I lean toward a B+. The passage reads like filler, but so do most student essays.

Sharples’s intent was to urge educators to “rethink teaching and assessment” in light of the technology, which he said “could become a gift for student cheats, or a powerful teaching assistant, or a tool for creativity.” Essay generation is neither theoretical nor futuristic at this point. In May, a student in New Zealand confessed to using AI to write their papers, justifying it as a tool like Grammarly or spell-check: “I have the knowledge, I have the lived experience, I’m a good student, I go to all the tutorials and I go to all the lectures and I read everything we have to read but I kind of felt I was being penalised because I don’t write eloquently and I didn’t feel that was right,” they told a student paper in Christchurch. They don’t feel like they’re cheating, because the student guidelines at their university state only that you’re not allowed to get somebody else to do your work for you. GPT-3 isn’t “somebody else”—it’s a program.

The world of generative AI is progressing furiously. Last week, OpenAI released an advanced chatbot named ChatGPT that has spawned a new wave of marveling and hand-wringing, plus an upgrade to GPT-3 that allows for complex rhyming poetry; Google previewed new applications last month that will allow people to describe concepts in text and see them rendered as images; and the creative-AI firm Jasper received a $1.5 billion valuation in October. It still takes a little initiative for a kid to find a text generator, but not for long.

The essay, in particular the undergraduate essay, has been the center of humanistic pedagogy for generations. It is the way we teach children how to research, think, and write. That entire tradition is about to be disrupted from the ground up. Kevin Bryan, an associate professor at the University of Toronto, tweeted in astonishment about OpenAI’s new chatbot last week: “You can no longer give take-home exams/homework … Even on specific questions that involve combining knowledge across domains, the OpenAI chat is frankly better than the average MBA at this point. It is frankly amazing.” Neither the engineers building the linguistic tech nor the educators who will encounter the resulting language are prepared for the fallout.

A chasm has existed between humanists and technologists for a long time. In the 1950s, C. P. Snow gave his famous lecture, later the essay “The Two Cultures,” describing the humanistic and scientific communities as tribes losing contact with each other. “Literary intellectuals at one pole—at the other scientists,” Snow wrote. “Between the two a gulf of mutual incomprehension—sometimes (particularly among the young) hostility and dislike, but most of all lack of understanding. They have a curious distorted image of each other.” Snow’s argument was a plea for a kind of intellectual cosmopolitanism: Literary people were missing the essential insights of the laws of thermodynamics, and scientific people were ignoring the glories of Shakespeare and Dickens.

The rupture that Snow identified has only deepened. In the modern tech world, the value of a humanistic education shows up in evidence of its absence. Sam Bankman-Fried, the disgraced founder of the crypto exchange FTX who recently lost his $16 billion fortune in a few days, is a famously proud illiterate. "I would never read a book," he once told an interviewer. "I don't want to say no book is ever worth reading, but I actually do believe something pretty close to that." Elon Musk and Twitter are another excellent case in point. It's painful and extraordinary to watch the ham-fisted way a brilliant engineering mind like Musk deals with even relatively simple literary concepts such as parody and satire. He obviously has never thought about them before. He probably didn't imagine there was much to think about.

The extraordinary ignorance on questions of society and history displayed by the men and women reshaping society and history has been the defining feature of the social-media era. Apparently, Mark Zuckerberg has read a great deal about Caesar Augustus, but I wish he'd read about the regulation of the pamphlet press in 17th-century Europe. It might have spared America the annihilation of social trust.

These failures don’t derive from mean-spiritedness or even greed, but from a willful obliviousness. The engineers do not recognize that humanistic questions—like, say, hermeneutics or the historical contingency of freedom of speech or the genealogy of morality—are real questions with real consequences. Everybody is entitled to their opinion about politics and culture, it’s true, but an opinion is different from a grounded understanding. The most direct path to catastrophe is to treat complex problems as if they’re obvious to everyone. You can lose billions of dollars pretty quickly that way.

As the technologists have ignored humanistic questions to their peril, the humanists have greeted the technological revolutions of the past 50 years by committing soft suicide. As of 2017, the number of English majors had nearly halved since the 1990s. History enrollments have declined by 45 percent since 2007 alone. Needless to say, humanists’ understanding of technology is partial at best. The state of digital humanities is always several categories of obsolescence behind, which is inevitable. (Nobody expects them to teach via Instagram Stories.) But more crucially, the humanities have not fundamentally changed their approach in decades, despite technology altering the entire world around them. They are still exploding meta-narratives like it’s 1979, an exercise in self-defeat.


Contemporary academia engages, more or less permanently, in self-critique on any and every front it can imagine. In a tech-centered world, language matters, voice and style matter, the study of eloquence matters, history matters, ethical systems matter. But the situation requires humanists to explain why they matter, not constantly undermine their own intellectual foundations. The humanities promise students a journey to an irrelevant, self-consuming future; then they wonder why their enrollments are collapsing. Is it any surprise that nearly half of humanities graduates regret their choice of major?

The case for the value of humanities in a technologically determined world has been made before. Steve Jobs always credited a significant part of Apple's success to his time as a dropout hanger-on at Reed College, where he fooled around with Shakespeare and modern dance, along with the famous calligraphy class that provided the aesthetic basis for the Mac's design. "A lot of people in our industry haven't had very diverse experiences. So they don't have enough dots to connect, and they end up with very linear solutions without a broad perspective on the problem," Jobs said. "The broader one's understanding of the human experience, the better design we will have." Apple is a humanistic tech company. It's also the largest company in the world.

Despite the clear value of a humanistic education, its decline continues. Over the past 10 years, STEM has triumphed, and the humanities have collapsed. The number of students enrolled in computer science is now nearly the same as the number of students enrolled in all of the humanities combined.

And now there’s GPT-3. Natural-language processing presents the academic humanities with a whole series of unprecedented problems. Practical matters are at stake: Humanities departments judge their undergraduate students on the basis of their essays. They give Ph.D.s on the basis of a dissertation’s composition. What happens when both processes can be significantly automated? Going by my experience as a former Shakespeare professor, I figure it will take 10 years for academia to face this new reality: two years for the students to figure out the tech, three more years for the professors to recognize that students are using the tech, and then five years for university administrators to decide what, if anything, to do about it. Teachers are already some of the most overworked, underpaid people in the world. They are already dealing with a humanities in crisis. And now this. I feel for them.

And yet, despite the drastic divide of the moment, natural-language processing is going to force engineers and humanists together. They are going to need each other despite everything. Computer scientists will require basic, systematic education in general humanism: The philosophy of language, sociology, history, and ethics are not amusing questions of theoretical speculation anymore. They will be essential in determining the ethical and creative use of chatbots, to take only an obvious example.

The humanists will need to understand natural-language processing because it's the future of language, but also because there is more than just the possibility of disruption here. Natural-language processing can throw light on a huge number of scholarly problems. It is going to clarify matters of attribution and literary dating that no system ever devised will approach; the parameters in large language models are much more sophisticated than the current systems used to determine which plays Shakespeare wrote, for example. It may even allow for certain types of restorations, filling the gaps in damaged texts by means of text-prediction models. It will reformulate questions of literary style and philology; if you can teach a machine to write like Samuel Taylor Coleridge, that machine must be able to inform you, in some way, about how Samuel Taylor Coleridge wrote.

The connection between humanism and technology will require people and institutions with a breadth of vision and a commitment to interests that transcend their field. Before that space for collaboration can exist, both sides will have to take the most difficult leaps for highly educated people: Understand that they need the other side, and admit their basic ignorance. But that’s always been the beginning of wisdom, no matter what technological era we happen to inhabit.


Published: 30 October 2023

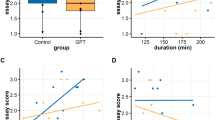

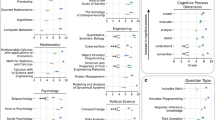

A large-scale comparison of human-written versus ChatGPT-generated essays

Steffen Herbold¹, Annette Hautli-Janisz¹, Ute Heuer¹, Zlata Kikteva¹ & Alexander Trautsch¹

Scientific Reports volume 13, Article number: 18617 (2023)


- Computer science

- Information technology