Research Methods Resources

Methods at a glance.

This section provides information and examples of methodological issues to be aware of when working with different study designs. Virtually all studies face methodological issues regarding the selection of the primary outcome(s), sample size estimation, missing outcomes, and multiple comparisons. Randomized studies face additional challenges related to the method for randomization. Other studies face specific challenges associated with their study design such as those that arise in effectiveness-implementation research; multiphase optimization strategy (MOST) studies; sequential, multiple assignment, randomized trials (SMART); crossover designs; non-inferiority trials; regression discontinuity designs; and paired availability designs. Some studies face issues involving exact tests, adherence to behavioral interventions, noncompliance in encouragement designs, evaluation of risk prediction models, or evaluation of surrogate endpoints.

Experiments, including clinical trials, differ considerably in the methods used to assign participants to study conditions (or study arms) and to deliver interventions to those participants.

This section provides information related to the design and analysis of experiments in which

- participants are assigned in groups (or clusters) and individual observations are analyzed to evaluate the effect of the intervention,

- participants are assigned individually but receive at least some of their intervention with other participants or through an intervention agent shared with other participants,

- participants are assigned in groups (or clusters) but groups cross over to the intervention condition at pre-determined time points in sequential, staggered fashion until all groups receive the intervention, and

- participants are assigned in groups, groups receive the intervention based on a cutoff value of an assignment score, and individual observations are used to evaluate the effect of the intervention.

This material is relevant for both human and animal studies as well as basic and applied research. And while it is important for investigators to become familiar with the issues presented on this website, it is even more important that they collaborate with a methodologist who is familiar with these issues.

In a parallel group-randomized trial (GRT), groups or clusters are randomized to study conditions, and observations are taken on the members of those groups with no crossover to a different condition during the trial.

In an individually randomized group-treatment (IRGT) trial, individuals are randomized to study conditions but receive at least some of their intervention with other participants or through an intervention agent shared with other participants.

In a stepped wedge group- or cluster-randomized trial (SWGRT), groups or clusters are randomized to sequences that cross over to the intervention condition at predetermined time points in a staggered fashion until all groups receive the intervention.
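As a rough illustration of the staggered crossover described above, the following Python sketch builds an allocation schedule. The function name `stepped_wedge_schedule`, the cluster labels, and the choice of one all-control baseline period followed by one crossover step per sequence are assumptions made for illustration; they are not taken from the NIH material.

```python
import random


def stepped_wedge_schedule(clusters, n_sequences, seed=0):
    """Randomize clusters to sequences.

    Returns {cluster: [0 or 1 per period]}, where 1 means the cluster is
    under the intervention condition in that period.
    """
    rng = random.Random(seed)
    shuffled = list(clusters)
    rng.shuffle(shuffled)                 # random order decides the sequence
    n_periods = n_sequences + 1           # assumed: one baseline period first
    schedule = {}
    for i, cluster in enumerate(shuffled):
        sequence = i % n_sequences        # sequence index 0..n_sequences-1
        crossover = sequence + 1          # first period under intervention
        schedule[cluster] = [1 if p >= crossover else 0 for p in range(n_periods)]
    return schedule


if __name__ == "__main__":
    for name, periods in sorted(stepped_wedge_schedule(list("ABCDEF"), 3).items()):
        print(name, periods)
```

Every cluster starts in the control condition and ends in the intervention condition, and no cluster crosses back, which is the defining pattern of the design.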

In a group or cluster regression discontinuity design (GRDD), groups or clusters are assigned to study conditions according to whether a group-level summary of an assignment score crosses a predefined cutoff. Observations are taken on members of the groups.
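The cutoff rule can be sketched in a few lines of Python. The function name `assign_by_cutoff`, the clinic names and scores, and the direction of the rule (groups at or above the cutoff receive the intervention) are all hypothetical; a real design would specify the direction in advance.

```python
def assign_by_cutoff(group_scores, cutoff):
    """Assign each group to a condition from its group-level score.

    Assumption for illustration: scores at or above the cutoff receive
    the intervention; all other groups serve as controls.
    """
    return {
        group: ("intervention" if score >= cutoff else "control")
        for group, score in group_scores.items()
    }


if __name__ == "__main__":
    scores = {"clinic_a": 42.0, "clinic_b": 57.5, "clinic_c": 50.0}  # made-up values
    print(assign_by_cutoff(scores, cutoff=50.0))
```

Unlike randomization, the assignment here is fully determined by the score, which is why the analysis has to model the relationship between the score and the outcome.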

NIH Clinical Trial Requirements

The NIH launched a series of initiatives to enhance the accountability and transparency of clinical research. These initiatives target key points along the entire clinical trial lifecycle, from concept to reporting the results.

On Oct. 1, 2024, the FDA began implementing a reorganization impacting many parts of the agency. We are in the process of updating FDA.gov content to reflect these changes.

U.S. Food and Drug Administration

What Are the Different Types of Clinical Research?

Different types of clinical research are used depending on what the researchers are studying. Below are descriptions of some different kinds of clinical research.

Treatment Research generally involves an intervention such as medication, psychotherapy, new devices, or new approaches to surgery or radiation therapy.

Prevention Research looks for better ways to prevent disorders from developing or returning. Different kinds of prevention research may study medicines, vitamins, vaccines, minerals, or lifestyle changes.

Diagnostic Research refers to the practice of looking for better ways to identify a particular disorder or condition.

Screening Research aims to find the best ways to detect certain disorders or health conditions.

Quality of Life Research explores ways to improve comfort and the quality of life for individuals with a chronic illness.

Genetic studies aim to improve the prediction of disorders by identifying and understanding how genes and illnesses may be related. Research in this area may explore ways in which a person’s genes make him or her more or less likely to develop a disorder. This may lead to development of tailor-made treatments based on a patient’s genetic make-up.

Epidemiological studies seek to identify the patterns, causes, and control of disorders in groups of people.

An important note: some clinical research is “outpatient,” meaning that participants do not stay overnight at the hospital. Some is “inpatient,” meaning that participants will need to stay for at least one night in the hospital or research center. Be sure to ask the researchers what their study requires.

Phases of clinical trials: when clinical research is used to evaluate medications and devices

Clinical trials are a kind of clinical research designed to evaluate and test new interventions such as psychotherapy or medications. Clinical trials are often conducted in four phases. The trials at each phase have a different purpose and help scientists answer different questions.

Phase I trials: Researchers test an experimental drug or treatment in a small group of people for the first time. The researchers evaluate the treatment’s safety, determine a safe dosage range, and identify side effects.

Phase II trials: The experimental drug or treatment is given to a larger group of people to see if it is effective and to further evaluate its safety.

Phase III trials: The experimental study drug or treatment is given to large groups of people. Researchers confirm its effectiveness, monitor side effects, compare it to commonly used treatments, and collect information that will allow the experimental drug or treatment to be used safely.

Phase IV trials: Post-marketing studies, which are conducted after a treatment is approved for use by the FDA, provide additional information including the treatment or drug’s risks, benefits, and best use.

Examples of other kinds of clinical research

Many people believe that all clinical research involves testing of new medications or devices. This is not true, however. Some studies do not involve testing medications, and a person’s regular medications may not need to be changed. Healthy volunteers are also needed so that researchers can compare their results to results of people with the illness being studied. Some examples of other kinds of research include the following:

- A long-term study that involves psychological tests or brain scans

- A genetic study that involves blood tests but no changes in medication

- A study of family history that involves talking to family members to learn about people’s medical needs and history

Clinical research study designs: The essentials

Ambika G Chidambaram, Maureen Josephson

Correspondence: Maureen Josephson, Children's Hospital of Philadelphia, PA 19104, USA. Email: [email protected]

Received 2019 Nov 16; Accepted 2019 Dec 3; Collection date 2019 Dec.

This is an open access article under the terms of the http://creativecommons.org/licenses/by-nc-nd/4.0/ License, which permits use and distribution in any medium, provided the original work is properly cited, the use is non‐commercial and no modifications or adaptations are made.

In clinical research, our aim is to design a study that can derive valid and meaningful scientific conclusions using appropriate statistical methods. The conclusions derived from a research study can either improve health care or result in inadvertent harm to patients. Hence, this requires a well‐designed clinical research study that rests on a strong foundation of detailed methodology and is governed by ethical clinical principles. The purpose of this review is to provide readers with an overview of the basic study designs and their applicability in clinical research.

Keywords: Clinical research study design, Clinical trials, Experimental study designs, Observational study designs, Randomization

Introduction

In clinical research, our aim is to design a study that can derive valid and meaningful scientific conclusions, using appropriate statistical methods, that can be translated to the “real world” setting. 1 Before choosing a study design, one must establish the aims and objectives of the study and choose an appropriate study population that is most representative of the population of interest. The conclusions derived from a research study can either improve health care or result in inadvertent harm to patients. Hence, this requires a well‐designed clinical research study that rests on a strong foundation of detailed methodology and is governed by ethical principles. 2

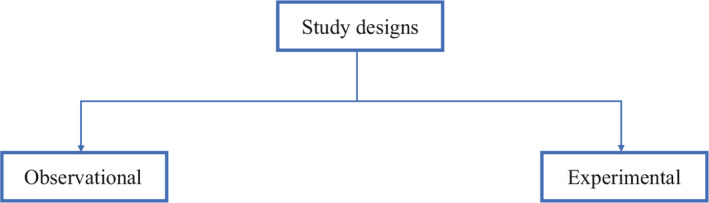

From an epidemiological standpoint, there are two major types of clinical study designs: observational and experimental. 3 Observational studies are hypothesis‐generating studies, and they can be further divided into descriptive and analytic. Descriptive observational studies provide a description of the exposure and/or the outcome, and analytic observational studies provide a measurement of the association between the exposure and the outcome. Experimental studies, on the other hand, are hypothesis‐testing studies. They involve an intervention that tests the association between the exposure and the outcome. Each study design is different, so it is important to choose the design that most appropriately answers the question in mind and provides the most valuable information. We will review each study design in detail (Figure 1).

Overview of clinical research study designs

Observational study designs

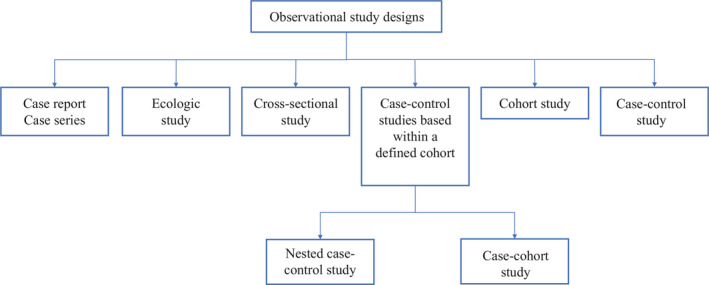

Observational studies ask the following questions: what, who, where and when. Many study designs fall under the umbrella of observational study designs, including case reports, case series, ecologic studies, cross‐sectional studies, cohort studies and case‐control studies (Figure 2).

Classification of observational study designs

Case reports and case series

Every now and then during clinical practice, we come across a case with an atypical or ‘out of the norm’ clinical presentation. Such a presentation is usually described in a case report, which provides a detailed and comprehensive description of the case. 4 It is one of the earliest forms of research and provides an opportunity for the investigator to describe the observations that make a case unique. No inferences are obtained, and therefore the findings cannot be generalized to the population, which is a limitation. More often than not, a series of case reports makes up a case series, which describes an atypical presentation found in a group of patients. This in turn raises the question of a new disease entity and prompts the investigator to explore mechanistic investigative opportunities. However, in a case series, the cases are not compared to subjects without the manifestations, and therefore it cannot determine which factors in the description are unique to the new disease entity.

Ecologic study

Ecological studies are observational studies that provide a description of population group characteristics. That is, they attribute characteristics to all individuals within a group. For example, Prentice et al 5 measured the incidence of breast cancer and the per capita intake of dietary fat, and found that higher per capita intake of dietary fat was associated with an increased incidence of breast cancer. But the study does not establish specifically which subjects with breast cancer had a higher dietary intake of fat. Thus, one of the limitations of ecologic study designs is that the characteristics are attributed to the whole group, so individual characteristics are unknown.
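To make the group-level nature of such an analysis concrete, here is a minimal Python sketch that correlates two population summaries. The numbers are invented placeholders, not data from Prentice et al, and `pearson_r` is a helper written for this illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of group-level values."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


if __name__ == "__main__":
    # One value per population (hypothetical): fat intake in g/day per capita
    # and disease incidence per 100,000 people.
    fat_intake = [60, 85, 110, 130, 150]
    incidence = [22, 35, 48, 61, 70]
    print(round(pearson_r(fat_intake, incidence), 3))
```

Each data point is a whole population, so even a strong correlation says nothing about whether the individuals who developed the disease were the ones with the higher intake.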

Cross‐sectional study

Cross‐sectional studies are study designs used to evaluate an association between an exposure and an outcome at the same point in time. They can be classified as either descriptive or analytic, depending on the question being answered by the investigator. Since cross‐sectional studies are designed to collect information at the same point in time, they provide an opportunity to measure the prevalence of the exposure or the outcome. For example, a cross‐sectional study design was adopted to estimate the global need for palliative care for children based on a representative sample of countries from all regions of the world and all World Bank income groups. 6 The limitation of the cross‐sectional study design is that a temporal association cannot be established, as the information is collected at the same point in time. If a study involves a questionnaire, however, the investigator can ask about the onset of symptoms or risk factors in relation to the onset of disease. This would help in obtaining a temporal sequence between the exposure and outcome. 7
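Prevalence in a cross-sectional sample is simply the proportion of surveyed subjects who have the characteristic at the time of the survey. The sketch below assumes a hypothetical record layout (one dict per subject with boolean fields `exposed` and `diseased`); the records are invented.

```python
def prevalence(records, key):
    """Proportion of surveyed subjects for whom `key` is true at survey time."""
    if not records:
        raise ValueError("at least one record is required")
    return sum(1 for record in records if record[key]) / len(records)


if __name__ == "__main__":
    survey = [  # hypothetical survey responses collected at one time point
        {"exposed": True, "diseased": True},
        {"exposed": True, "diseased": False},
        {"exposed": False, "diseased": False},
        {"exposed": False, "diseased": False},
    ]
    print("exposure prevalence:", prevalence(survey, "exposed"))
    print("disease prevalence:", prevalence(survey, "diseased"))
```

Because exposure and outcome are recorded at the same moment, the calculation cannot show which came first.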

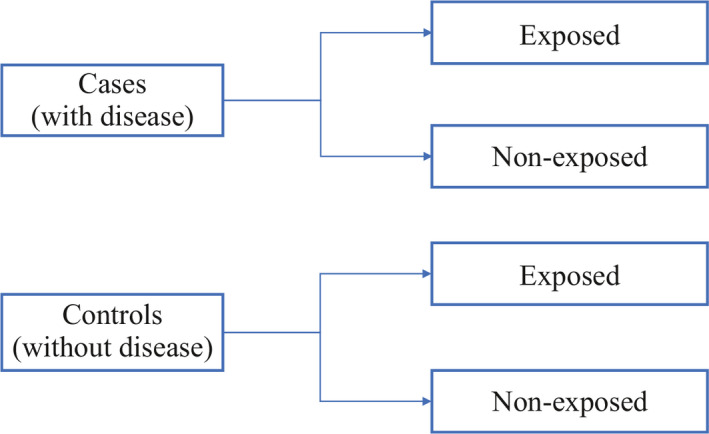

Case‐control study

Case‐control studies are study designs that compare two groups, such as subjects with disease (cases) and subjects without disease (controls), to look for differences in risk factors. 8 This design is used to study risk factors or etiologies for a disease, especially if the disease is rare. Thus, case‐control studies can also be hypothesis‐testing studies; they can suggest a causal relationship but cannot prove it. They are less expensive and less time‐consuming than cohort studies (described in section “Cohort study”). An example is a case‐control study performed in Pakistan evaluating the risk factors for neonatal tetanus. The investigators retrospectively reviewed a defined cohort for cases with and without neonatal tetanus. 9 They found a strong association between the application of ghee (clarified butter) and neonatal tetanus. Although this suggests a causal relationship, cause cannot be proven by this methodology (Figure 3).

Case‐control study design

One of the limitations of case‐control studies is that they cannot estimate the prevalence of a disease accurately, because only a selected proportion of cases and controls is studied at a time. Case‐control studies are also prone to biases such as recall bias, as the subjects provide information based on their memory. Hence, subjects with disease are more likely to remember the presence of risk factors than subjects without disease.

One aspect that is often overlooked is the selection of cases and controls. It is important to select the cases and controls appropriately to obtain a meaningful and scientifically sound conclusion, and this can be achieved by implementing matching. Matching is defined by Gordis et al as ‘the process of selecting the controls so that they are similar to the cases in certain characteristics such as age, race, sex, socioeconomic status and occupation’. 7 This helps identify risk factors or probable etiologies that are not due to differences between the cases and controls.
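One simple way to picture matching is a greedy one-to-one pairing on a couple of characteristics. The sketch below is only an illustration under stated assumptions: it matches on sex exactly and on age within a tolerance, uses each control at most once, and the names `match_controls` and `age_tolerance` are hypothetical. Real studies often use more elaborate matching schemes.

```python
def match_controls(cases, controls, age_tolerance=2):
    """Greedy 1:1 matching of controls to cases on sex and approximate age.

    Returns a list of (case_id, control_id) pairs; cases with no eligible
    control are left unmatched.
    """
    available = list(controls)            # controls not yet used
    pairs = []
    for case in cases:
        for candidate in available:
            same_sex = candidate["sex"] == case["sex"]
            close_age = abs(candidate["age"] - case["age"]) <= age_tolerance
            if same_sex and close_age:
                pairs.append((case["id"], candidate["id"]))
                available.remove(candidate)   # each control is used only once
                break
    return pairs


if __name__ == "__main__":
    cases = [{"id": "case1", "age": 40, "sex": "F"}]
    controls = [{"id": "ctl1", "age": 62, "sex": "M"}, {"id": "ctl2", "age": 41, "sex": "F"}]
    print(match_controls(cases, controls))
```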

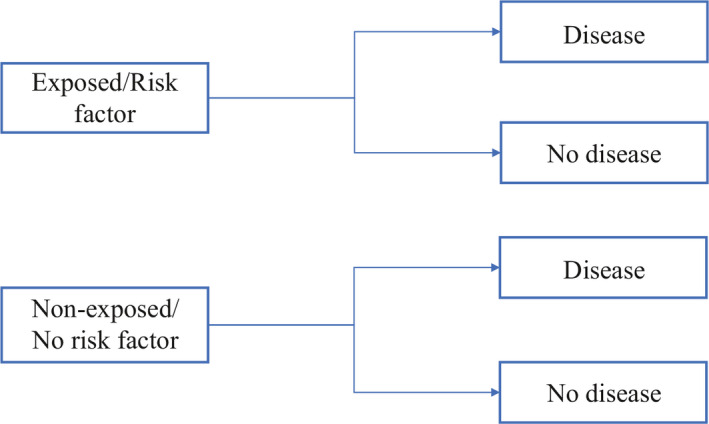

Cohort study

Cohort studies are study designs that compare two groups, such as subjects with an exposure/risk factor and subjects without it, for differences in the incidence of an outcome/disease. Most often, cohort study designs are used to study outcome(s) from a single exposure/risk factor. Thus, cohort studies can also be hypothesis‐testing studies and can suggest a causal relationship between an exposure and a proposed outcome, but cannot establish it (Figure 4).

Cohort study design

Cohort studies can be classified as prospective or retrospective. 7 Prospective cohort studies follow subjects from the presence of risk factors/exposure to the development of disease/outcome. It can take years before the disease/outcome develops, so this design is time consuming and expensive. On the other hand, retrospective cohort studies identify a population with and without the risk factor/exposure based on past records and then assess whether they had developed the disease/outcome at the time of study. Thus, the study designs for prospective and retrospective cohort studies are similar, as both compare populations with and without the exposure/risk factor with respect to development of the outcome/disease.
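The comparison of incidence between exposed and unexposed groups is often summarized as a risk ratio. The article does not name a specific measure, so the risk ratio here is an assumption chosen for illustration, as are the function names and the counts.

```python
def incidence(events, total):
    """Cumulative incidence: new cases divided by the number of subjects followed."""
    if total <= 0:
        raise ValueError("total must be positive")
    return events / total


def risk_ratio(exposed_events, exposed_total, unexposed_events, unexposed_total):
    """Incidence in the exposed group divided by incidence in the unexposed group."""
    unexposed_incidence = incidence(unexposed_events, unexposed_total)
    if unexposed_incidence == 0:
        raise ValueError("risk ratio is undefined when the unexposed incidence is zero")
    return incidence(exposed_events, exposed_total) / unexposed_incidence


if __name__ == "__main__":
    # Hypothetical cohort: 30 of 100 exposed and 10 of 100 unexposed develop the outcome.
    print(round(risk_ratio(30, 100, 10, 100), 2))
```

The same arithmetic applies to prospective and retrospective cohorts; what differs is whether the counts come from follow-up or from past records.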

Cohort studies are typically chosen as a study design when the suspected exposure is known and rare, and the incidence of disease/outcome in the exposure group is suspected to be high. The choice between prospective and retrospective cohort study design would depend on the accuracy and reliability of the past records regarding the exposure/risk factor.

Some of the biases observed with cohort studies include selection bias and information bias. Some individuals who have the exposure may refuse to participate in the study or would be lost to follow‐up, and in those instances, it becomes difficult to interpret the association between an exposure and outcome. Also, if the information is inaccurate when past records are used to evaluate for exposure status, then again, the association between the exposure and outcome becomes difficult to interpret.

Case‐control studies based within a defined cohort

Case‐control studies based within a defined cohort are a form of study design that combines some of the features of a cohort study design and a case‐control study design. When a case‐control study is embedded in a defined cohort, all baseline information, such as interviews, surveys, and blood or urine specimens, is collected before the onset of disease, and the cohort is then followed until the onset of disease. One of the advantages of this design is that it eliminates recall bias, as the information regarding risk factors is collected before the onset of disease. Case‐control studies based within a defined cohort can be further classified into two types: nested case‐control study and case‐cohort study.

Nested case‐control study

A nested case‐control study consists of defining a cohort with suspected risk factors and assigning a control within the cohort to each subject who develops the disease. 10 Over a period of time, cases and controls are identified and followed as per the investigator's protocol. Hence, the case and control are matched on calendar time and length of follow‐up. When this study design is implemented, it is possible for a control that was selected early in the study to develop the disease and become a case in the later part of the study.
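The selection of controls at the time each case occurs can be sketched as follows. This is a simplified illustration, not the procedure from the cited reference: it assumes every subject is followed for the whole study (no loss to follow-up), picks one control per case, and uses hypothetical names such as `sample_risk_sets`.

```python
import random


def sample_risk_sets(onset_times, seed=0):
    """Pick one control per case from subjects still disease-free at the case's onset.

    onset_times maps subject id -> time of disease onset, or None if the
    subject never develops the disease during the study.
    Returns a list of (case_id, control_id, onset_time) tuples.
    """
    rng = random.Random(seed)
    cases = sorted((t, s) for s, t in onset_times.items() if t is not None)
    matched = []
    for onset, case in cases:
        # Eligible controls: everyone else who has not yet developed the disease.
        at_risk = [
            s for s, t in onset_times.items()
            if s != case and (t is None or t > onset)
        ]
        if at_risk:
            matched.append((case, rng.choice(at_risk), onset))
    return matched


if __name__ == "__main__":
    onset = {"s1": 2, "s2": None, "s3": 5, "s4": None, "s5": 9}  # hypothetical
    for row in sample_risk_sets(onset):
        print(row)
```

Because eligibility is judged at the moment each case occurs, a subject with a later onset time can be drawn as a control for an earlier case and then appear as a case in their own right.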

Case‐cohort Study

A case‐cohort study is similar to a nested case‐control study except that there is a defined sub‐cohort which forms the group of individuals without the disease (controls), and the cases are not matched on calendar time or length of follow‐up with the controls. 11 With these modifications, it is possible to compare different disease groups with the same sub‐cohort group of controls, and matching between the case and control is eliminated. However, these differences will need to be accounted for during the analysis of results.

Experimental study design

The basic concept of experimental study design is to study the effect of an intervention. In this study design, the risk factor/exposure of interest/treatment is controlled by the investigator. Therefore, these are hypothesis testing studies and can provide the most convincing demonstration of evidence for causality. As a result, the design of the study requires meticulous planning and resources to provide an accurate result.

The experimental study design can be classified into 2 groups, that is, controlled (with comparison) and uncontrolled (without comparison). 1 In the group without controls, the outcome is directly attributed to the treatment received in one group. This fails to show whether the outcome was truly due to the intervention implemented or due to chance. This can be avoided by choosing a controlled study design, which includes a group that does not receive the intervention (control group) and a group that receives the intervention (intervention/experiment group), and therefore provides a more accurate and valid conclusion.

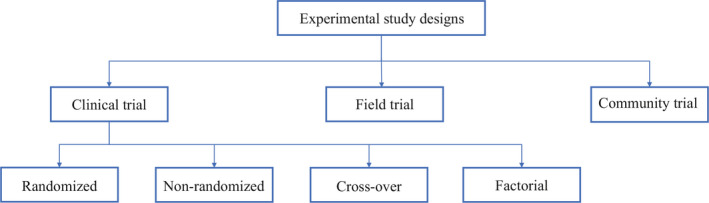

Experimental study designs can be divided into 3 broad categories: clinical trial, community trial and field trial. The specifics of each study design are explained below (Figure 5).

Experimental study designs

Clinical trial

Clinical trials, also known as therapeutic trials, involve subjects with disease who are placed in different treatment groups. They are considered a gold standard approach for epidemiological research. One of the earliest clinical trials was performed by James Lind in 1747 on sailors with scurvy. 12 Lind divided twelve scorbutic sailors into six groups of two. Each group received the same diet, in addition to a quart of cider (group 1), twenty‐five drops of elixir of vitriol, which is sulfuric acid (group 2), two spoonfuls of vinegar (group 3), half a pint of seawater (group 4), two oranges and one lemon (group 5), and a spicy paste plus a drink of barley water (group 6). The group who ate two oranges and one lemon showed the most sudden and visible clinical effects and were taken back at the end of 6 days as being fit for duty. During Lind's time, this finding was not accepted, but it was shown to have similar results when repeated 47 years later in an entire fleet of ships. Based on these results, in 1795 lemon juice was made a required part of the diet of sailors. Thus, clinical trials can be used to evaluate new therapies, such as a new drug or new indication, new drug combination, new surgical procedure or device, new dosing schedule or mode of administration, or a new prevention therapy.

While designing a clinical trial, it is important to select a sample that is representative of the general population, so that the results obtained from the study can be generalized to the population from which the sample was selected. It is equally important to select appropriate endpoints while designing a trial. Endpoints need to be well‐defined, reproducible, clinically relevant and achievable. The types of endpoints include continuous, ordinal, rates and time‐to‐event, and they are typically classified as primary, secondary or tertiary. 2 An ideal endpoint is a purely clinical outcome, for example, cure/survival, but trials that rely on such endpoints can become very long and expensive. Therefore, surrogate endpoints that are biologically related to the ideal endpoint are used. Surrogate endpoints need to be reproducible, easily measured, related to the clinical outcome, affected by treatment and occurring earlier than the clinical outcome. 2

Clinical trials are further divided into randomized clinical trial, non‐randomized clinical trial, cross‐over clinical trial and factorial clinical trial.

Randomized clinical trial

A randomized clinical trial is also known as a parallel group randomized trial or randomized controlled trial. Randomized clinical trials involve randomizing subjects with similar characteristics to two (or more) groups: the group that receives the intervention/experimental therapy and the group that receives the placebo (or standard of care). 13 Randomization is typically performed using computer software, manually or by other methods. Hence, we can measure the outcomes and efficacy of the intervention/experimental therapy being studied without bias, as subjects have been randomized to their respective groups with similar baseline characteristics. This type of study design is considered the gold standard for epidemiological research. However, this study design is generally not applicable to rare and serious disease processes, as it would be unethical to treat that group with a placebo. Please see section “Randomization” for a detailed explanation regarding randomization and placebo.

Non‐randomized clinical trial

A non‐randomized clinical trial involves an approach to selecting controls without randomization. With this type of study design, a pattern is usually adopted, such as selection of subjects and controls on certain days of the week. Depending on the approach adopted, the selection of subjects becomes predictable, which introduces selection bias and calls into question the validity of the results obtained.

Historically controlled studies can be considered a subtype of non‐randomized clinical trial. In this study design subtype, the controls are usually drawn from the past, such as from medical records and published literature. 1 The advantages of this study design include being cost‐effective, time saving and easily accessible. However, since this design depends on already collected data from different sources, the information obtained may be inaccurate, unreliable, non‐uniform and/or incomplete. Though historically controlled studies may be easier to conduct, these disadvantages need to be taken into account while designing a study.

Cross‐over clinical trial

In a cross‐over clinical trial study design, two groups undergo the same intervention/experiment at different time periods of the study. That is, each group serves as a control while the other group is undergoing the intervention/experiment. 14 Depending on the intervention/experiment, a ‘washout’ period is recommended. This helps eliminate residual effects of the intervention/experiment when the experiment group transitions to being the control group. Hence, the outcomes of the intervention/experiment need to be reversible; this type of study design would not be possible if, for example, the subject is undergoing a surgical procedure.
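A two-period cross-over plan with a washout between periods might be laid out as in the sketch below. The random split into two equal-sized sequences and the names `crossover_sequences` and `"washout"` are assumptions for illustration; the article does not prescribe how the groups are formed.

```python
import random


def crossover_sequences(subjects, seed=0):
    """Split subjects into two sequences that receive the same two conditions in opposite order.

    Returns {subject: [first_period, "washout", second_period]}.
    """
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    plan = {}
    for i, subject in enumerate(shuffled):
        if i < half:
            first, second = "intervention", "control"
        else:
            first, second = "control", "intervention"
        plan[subject] = [first, "washout", second]   # washout separates the periods
    return plan


if __name__ == "__main__":
    for subject, periods in sorted(crossover_sequences(["p1", "p2", "p3", "p4"]).items()):
        print(subject, periods)
```

Every subject experiences both conditions, so each group acts as the control while the other is receiving the intervention.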

Factorial trial

A factorial trial study design is adopted when the researcher wishes to test two different drugs with independent effects on the same population. Typically, the population is divided into 4 groups: the first receives drug A, the second drug B, the third both drug A and drug B, and the fourth neither drug. The effect of drug A is assessed by comparing those who received drug A (alone or with drug B) with those who did not (drug B alone or neither drug). 15 The advantage of this study design is that it saves time and allows two different drugs to be studied in the same study population at the same time. However, this study design is not applicable if the drugs or interventions overlap in their modes of action or effects, as the results obtained could not be attributed to a particular drug or intervention.
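The comparison across the four groups can be illustrated with a small calculation. The sketch assumes equal group sizes and a numeric outcome summarized as a mean per group; the outcome values and the function name `main_effect` are hypothetical.

```python
def main_effect(mean_by_arm, drug):
    """Difference in mean outcome between arms that include `drug` and arms that do not.

    mean_by_arm maps a tuple of drugs given in that arm, e.g. ("A", "B"),
    to the mean outcome in that arm. Assumes all arms are the same size.
    """
    with_drug = [m for arm, m in mean_by_arm.items() if drug in arm]
    without_drug = [m for arm, m in mean_by_arm.items() if drug not in arm]
    return sum(with_drug) / len(with_drug) - sum(without_drug) / len(without_drug)


if __name__ == "__main__":
    means = {       # hypothetical mean outcomes in the four arms
        (): 10.0,           # neither drug
        ("A",): 14.0,       # drug A only
        ("B",): 11.0,       # drug B only
        ("A", "B"): 15.0,   # both drugs
    }
    print("effect of A:", main_effect(means, "A"))
    print("effect of B:", main_effect(means, "B"))
```

Each subject contributes to the estimate for both drugs, which is where the efficiency of the design comes from; the calculation is only meaningful when the two drugs act independently.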

Community trial

Community trials, also known as cluster‐randomized trials, involve groups of individuals with and without disease who are assigned to different intervention/experiment groups. Hence, groups of individuals from a certain area, such as a town or city, or a certain group, such as a school or college, will undergo the same intervention/experiment. 16 The results are therefore obtained at a larger scale; however, they cannot account for inter‐individual and intra‐individual variability.

Field trial

Field trials are also known as preventive or prophylactic trials, and subjects without the disease are placed in different preventive intervention groups. 16 A hypothetical example of a field trial would be to randomly assign a healthy population to groups, provide an intervention such as a vitamin to one group, and follow the groups to measure certain outcomes. Hence, the subjects are monitored over a period of time for the occurrence of a particular disease process.

Overview of methodologies used within a study design

Randomization

Randomization is a well‐established methodology adopted in research to prevent bias due to subject selection, which may impact the result of the intervention/experiment being studied. It is one of the fundamental principles of experimental study design and ensures scientific validity. It makes it impossible to predict which subjects are assigned to a certain group and therefore prevents bias in the final results due to subject selection. This also supports comparability between groups, as most baseline characteristics are similar across the randomized groups, and therefore helps to interpret the results regarding the intervention/experiment group without bias.

There are various ways to randomize, ranging from something as simple as a ‘flip of a coin’ to the use of computer software and statistical methods. There are three types of randomization: simple randomization, block randomization and stratified randomization.

Simple randomization

In simple randomization, the subjects are randomly allocated to experiment/intervention groups based on a constant probability. That is, if there are two groups A and B, the subject has a 0.5 probability of being allocated to either group. This can be performed in multiple ways, ranging from a ‘flip of a coin’ to random number tables. 17 The advantage of this methodology is that it eliminates selection bias. However, the disadvantage is that it can produce an imbalance in the number of subjects allocated to each group, as well as in the prognostic factors between groups. Hence, it is more challenging in studies with a small sample size.
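A computerized ‘flip of a coin’ might look like the following sketch, in which each subject independently has a 0.5 probability of landing in either group. The function name `simple_randomize` and the subject labels are hypothetical.

```python
import random
from collections import Counter


def simple_randomize(subjects, seed=None):
    """Allocate each subject to group A or B with probability 0.5 each."""
    rng = random.Random(seed)
    return {subject: rng.choice(["A", "B"]) for subject in subjects}


if __name__ == "__main__":
    allocation = simple_randomize([f"s{i}" for i in range(10)])
    # With so few subjects the two groups will often be unequal in size.
    print(Counter(allocation.values()))
```

Running the example several times shows the imbalance discussed above: with ten subjects, splits such as 7 to 3 are not unusual.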

Block randomization

In block randomization, subjects of similar characteristics are classified into blocks. The aim of block randomization is to balance the number of subjects allocated to each experiment/intervention group. For example, assume that there are four subjects in each block and that two of the four subjects in each block are randomly allotted to each group. Each block then contributes two subjects to one group and two subjects to the other. 17 The disadvantage of this methodology is that there is still a component of predictability in the allocation of subjects, and prognostic factors are not balanced by the randomization. However, it helps to control the balance between the experiment/intervention groups.
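The four-subject example above can be written as a short permuted-block routine. This is a sketch under assumptions: blocks are formed from consecutive subjects in the order given, there are two groups labeled A and B, and the name `block_randomize` is hypothetical.

```python
import random


def block_randomize(subjects, block_size=4, seed=None):
    """Allocate subjects to A or B so that every full block is split evenly."""
    if block_size % 2 != 0:
        raise ValueError("block_size must be even for two equal groups")
    rng = random.Random(seed)
    allocation = {}
    for start in range(0, len(subjects), block_size):
        # Each block holds an equal number of A and B slots in random order.
        slots = ["A"] * (block_size // 2) + ["B"] * (block_size // 2)
        rng.shuffle(slots)
        for subject, arm in zip(subjects[start:start + block_size], slots):
            allocation[subject] = arm
    return allocation


if __name__ == "__main__":
    print(block_randomize([f"s{i}" for i in range(8)], block_size=4))
```

The predictability mentioned above is visible here: once three allocations in a block of four are known, the fourth can be deduced.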

Stratified randomization

In stratified randomization, the subjects are first divided into strata defined by covariates. 18 For example, a prognostic factor such as age can be used as a covariate, and subjects are then randomized to an experiment/intervention group separately within each age group. The advantage of this methodology is that it improves comparability between experiment/intervention groups and thus makes the analysis of results more efficient. However, the covariates need to be measured and determined before the randomization process. The sample size helps determine how many strata can be used in a study.
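One way to picture randomization within age groups is to run a separate balanced block sequence for each stratum. The Python sketch below does this for two age bands. The cut-off of 65 years, the subject ages and the names `stratified_randomize` and `age_band` are all assumptions for illustration, and the use of shuffled blocks within strata is one common choice rather than something the text above prescribes.

```python
import random
from collections import defaultdict

def stratified_randomize(subject_ids, stratum_of, block_size=4,
                         groups=("A", "B"), seed=None):
    """Randomize within each stratum using shuffled, balanced blocks.

    Hypothetical helper for illustration only.
    """
    if block_size % len(groups) != 0:
        raise ValueError("block_size must be a multiple of the number of groups")
    rng = random.Random(seed)
    per_group = block_size // len(groups)
    pending = defaultdict(list)  # stratum -> assignments left in its current block
    allocation = {}
    for sid in subject_ids:
        stratum = stratum_of(sid)
        if not pending[stratum]:          # start a new block for this stratum
            block = list(groups) * per_group
            rng.shuffle(block)
            pending[stratum] = block
        allocation[sid] = pending[stratum].pop()
    return allocation

# Hypothetical ages, measured before randomization
ages = {1: 34, 2: 71, 3: 58, 4: 66, 5: 45, 6: 80, 7: 29, 8: 69}

def age_band(sid):
    return "under 65" if ages[sid] < 65 else "65 and over"

allocation = stratified_randomize(sorted(ages), age_band, seed=18)
print(allocation)
```

Here each age band contains four subjects, so each band contributes two subjects to group A and two to group B.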

Blinding is a methodology adopted in a study design to intentionally withhold information about group allocation from the subject participants, investigators and/or data analysts. 19 The purpose of blinding is to decrease the influence that knowledge of being in a particular group has on the study result. There are three forms of blinding: single‐blinded, double‐blinded and triple‐blinded. 1 In single‐blinded studies, the subject participants are not told which group they have been allocated to. However, the investigator and data analyst are aware of the allocation of the groups. In double‐blinded studies, both the study participants and the investigator are unaware of the group allocation. Double‐blinded studies are typically used in clinical trials to test the safety and efficacy of drugs. In triple‐blinded studies, the subject participants, investigators and data analysts are all unaware of the group allocation. Thus, triple‐blinded studies are more difficult and expensive to design but the results obtained will exclude confounding effects from knowledge of group allocation.

Blinding is especially important in studies where subjective responses are considered as outcomes. This is because certain responses can be modified by knowledge of the group that a participant is in. For example, participants allocated to the non‐intervention group may not feel better because they know they are not getting the treatment, or an investigator may pay more attention to the group receiving treatment, thereby potentially affecting the final results. However, blinding is not always possible, for example with surgeries or with interventions such as quitting smoking.

Placebo is defined in the Merriam‐Webster dictionary as ‘an inert or innocuous substance used especially in controlled experiments testing the efficacy of another substance (such as drug)’. 20 A placebo is typically used in a clinical research study to evaluate the safety and efficacy of a drug/intervention. This is especially useful if the outcome measured is subjective. In clinical drug trials, a placebo is typically a preparation that resembles the drug to be tested in certain characteristics such as color, size, shape and taste, but without the active substance. This helps to measure the effects of just taking a drug, such as pain relief, compared with the effects of the drug containing the active substance. If the effect is positive, for example, improvement in mood/pain, it is called the placebo effect. If the effect is negative, for example, worsening of mood/pain, it is called the nocebo effect. 21

The ethics of placebo‐controlled studies is complex and remains a debate in the medical research community. According to the Declaration of Helsinki on the use of placebo released in October 2013, “The benefits, risks, burdens and effectiveness of a new intervention must be tested against those of the best proven intervention(s), except in the following circumstances:

Where no proven intervention exists, the use of placebo, or no intervention, is acceptable; or

Where for compelling and scientifically sound methodological reasons the use of any intervention less effective than the best proven one, the use of placebo, or no intervention is necessary to determine the efficacy or safety of an intervention and the patients who receive any intervention less effective than the best proven one, placebo, or no intervention will not be subject to additional risks of serious or irreversible harm as a result of not receiving the best proven intervention.

Extreme care must be taken to avoid abuse of this option”. 22

Hence, while designing a research study, both the scientific validity and ethical aspects of the study will need to be thoroughly evaluated.

Bias has been defined as “any systematic error in the design, conduct or analysis of a study that results in a mistaken estimate of an exposure's effect on the risk of disease”. 23 There are multiple types of bias; in this review we focus on selection bias, information bias and observer bias. Selection bias occurs when a systematic error is committed while selecting subjects for the study. Selection bias will affect the external validity of the study if the study subjects are not representative of the population being studied, and the results of the study will therefore not be generalizable. Selection bias will affect the internal validity of the study if the selection of study subjects into each group is influenced by certain factors, such as the treatment that the group is assigned. Ways to decrease selection bias include selecting a study population that is representative of the population being studied, and randomizing (discussed in section “Randomization”).

Information bias occurs when a systematic error is committed while obtaining data from the study subjects. This can take the form of recall bias, when a subject is required to remember certain events from the past. Typically, subjects with the disease tend to remember certain events better than subjects without the disease. Observer bias is a systematic error that occurs when the study investigator is influenced by certain characteristics of the group; for example, an investigator may pay closer attention to the group receiving the treatment than to the group not receiving the treatment. This may influence the results of the study. One of the ways to decrease observer bias is to use blinding (discussed in section “Blinding”).

Thus, while designing a study it is important to take measures to limit bias as much as possible, so that the scientific validity of the study results is preserved.

Overview of drug development in the United States of America

Having reviewed the various clinical study designs, we now turn to drug development, in which clinical trials form a major part. In the United States, the Food and Drug Administration (FDA) plays an important role in getting a drug approved for clinical use. Drug development follows a robust process with four phases, the first three of which take place before a drug can be made available to the public. Phase I is conducted to determine a safe dose. The study subjects consist of normal volunteers and/or subjects with the disease of interest, and the sample size is typically small, not more than 30 subjects. The primary endpoint consists of toxicity and adverse events. Phase II is conducted to evaluate the safety of the dose selected in Phase I, to collect preliminary information on efficacy and to determine factors needed to plan a randomized controlled trial. The study subjects consist of subjects with the disease of interest, and the sample size is also small but larger than in Phase I (40–100 subjects). The primary endpoint is the measure of response. Phase III is conducted as a definitive trial to prove efficacy and establish safety of a drug. Phase III studies are randomized controlled trials and, depending on the drug being studied, can be placebo‐controlled, equivalence, superiority or non‐inferiority trials. The study subjects consist of subjects with the disease of interest, and the sample size is typically large, ranging from 300 to 3000. Phase IV is performed after a drug is approved by the FDA and is also called the post‐marketing clinical trial. This phase is conducted to evaluate new indications, to determine safety and efficacy in long‐term follow‐up, and to evaluate new dosing regimens. It helps to detect rare adverse events that would not be picked up during Phase III studies, and it reduces the delay in releasing the drug to the market. This phase depends heavily on voluntary reporting of side effects and/or adverse events by physicians, non‐physicians or drug companies. 2

We have discussed various clinical research study designs in this comprehensive review. Though there are various designs available, one must consider various ethical aspects of the study. Hence, each study will require thorough review of the protocol by the institutional review board before approval and implementation.

CONFLICT OF INTEREST

Chidambaram AG, Josephson M. Clinical research study designs: The essentials. Pediatr Invest. 2019;3:245‐252. 10.1002/ped4.12166

- 1. Lim HJ, Hoffmann RG. Study design: The basics. Methods Mol Biol. 2007;404:1‐17.

- 2. Umscheid CA, Margolis DJ, Grossman CE. Key concepts of clinical trials: A narrative review. Postgrad Med. 2011;123:194‐204.

- 3. Grimes DA, Schulz KF. An overview of clinical research: The lay of the land. Lancet. 2002;359:57‐61.

- 4. Wright SM, Kouroukis C. Capturing zebras: What to do with a reportable case. CMAJ. 2000;163:429‐431.

- 5. Prentice RL, Kakar F, Hursting S, Sheppard L, Klein R, Kushi LH. Aspects of the rationale for the women's health trial. J Natl Cancer Inst. 1988;80:802‐814.

- 6. Connor SR, Downing J, Marston J. Estimating the global need for palliative care for children: A cross‐sectional analysis. J Pain Symptom Manage. 2017;53:171‐177.

- 7. Celentano DD, Szklo M. Gordis epidemiology. 6th ed. Elsevier, Inc.; 2019.

- 8. Schulz KF, Altman DG, Moher D, CONSORT Group. CONSORT 2010 statement: Updated guidelines for reporting parallel group randomised trials. Int J Surg. 2011;9:672‐677.

- 9. Traverso HP, Bennett JV, Kahn AJ, Agha SB, Rahim H, Kamil S, et al. Ghee applications to the umbilical cord: A risk factor for neonatal tetanus. Lancet. 1989;1:486‐488.

- 10. Ernster VL. Nested case‐control studies. Prev Med. 1994;23:587‐590.

- 11. Barlow WE, Ichikawa L, Rosner D, Izumi S. Analysis of case‐cohort designs. J Clin Epidemiol. 1999;52:1165‐1172.

- 12. Lind J. Nutrition classics. A treatise of the scurvy by james lind, MDCCLIII. Nutr Rev. 1983;41:155‐157.

- 13. Dennison DK. Components of a randomized clinical trial. J Periodontal Res. 1997;32:430‐438.

- 14. Sibbald B, Roberts C. Understanding controlled trials. Crossover trials. BMJ. 1998;316:1719.

- 15. Cipriani A, Barbui C. What is a factorial trial? Epidemiol Psychiatr Sci. 2013;22:213‐215.

- 16. Margetts BM, Nelson M. Design concepts in nutritional epidemiology. 2nd ed. Oxford University Press; 1997:415‐417.

- 17. Altman DG, Bland JM. How to randomise. BMJ. 1999;319:703‐704.

- 18. Suresh K. An overview of randomization techniques: An unbiased assessment of outcome in clinical research. J Hum Reprod Sci. 2011;4:8‐11. [Retracted]

- 19. Karanicolas PJ, Farrokhyar F, Bhandari M. Practical tips for surgical research: Blinding: Who, what, when, why, how? Can J Surg. 2010;53:345‐348.

- 20. Placebo. Merriam‐Webster Dictionary. Accessed 10/28/2019.

- 21. Pozgain I, Pozgain Z, Degmecic D. Placebo and nocebo effect: A mini‐review. Psychiatr Danub. 2014;26:100‐107.

- 22. World Medical Association. World medical association declaration of helsinki: Ethical principles for medical research involving human subjects. JAMA. 2013;310:2191‐2194.

- 23. Schlesselman JJ. Case‐control studies: Design, conduct, and analysis. United States of America: New York: Oxford University Press; 1982:124‐143.


- Open access

- Published: 07 September 2020

A tutorial on methodological studies: the what, when, how and why

Lawrence Mbuagbaw (ORCID: orcid.org/0000-0001-5855-5461), Daeria O. Lawson, Livia Puljak, David B. Allison & Lehana Thabane

BMC Medical Research Methodology, volume 20, Article number: 226 (2020)


Methodological studies – studies that evaluate the design, analysis or reporting of other research-related reports – play an important role in health research. They help to highlight issues in the conduct of research with the aim of improving health research methodology, and ultimately reducing research waste.

We provide an overview of some of the key aspects of methodological studies such as what they are, and when, how and why they are done. We adopt a “frequently asked questions” format to facilitate reading this paper and provide multiple examples to help guide researchers interested in conducting methodological studies. Some of the topics addressed include: is it necessary to publish a study protocol? How to select relevant research reports and databases for a methodological study? What approaches to data extraction and statistical analysis should be considered when conducting a methodological study? What are potential threats to validity and is there a way to appraise the quality of methodological studies?

Appropriate reflection and application of basic principles of epidemiology and biostatistics are required in the design and analysis of methodological studies. This paper provides an introduction for further discussion about the conduct of methodological studies.


The field of meta-research (or research-on-research) has proliferated in recent years in response to issues with research quality and conduct [ 1 , 2 , 3 ]. As the name suggests, this field targets issues with research design, conduct, analysis and reporting. Various types of research reports are often examined as the unit of analysis in these studies (e.g. abstracts, full manuscripts, trial registry entries). Like many other novel fields of research, meta-research has seen a proliferation of use before the development of reporting guidance. For example, this was the case with randomized trials for which risk of bias tools and reporting guidelines were only developed much later – after many trials had been published and noted to have limitations [ 4 , 5 ]; and for systematic reviews as well [ 6 , 7 , 8 ]. However, in the absence of formal guidance, studies that report on research differ substantially in how they are named, conducted and reported [ 9 , 10 ]. This creates challenges in identifying, summarizing and comparing them. In this tutorial paper, we will use the term methodological study to refer to any study that reports on the design, conduct, analysis or reporting of primary or secondary research-related reports (such as trial registry entries and conference abstracts).

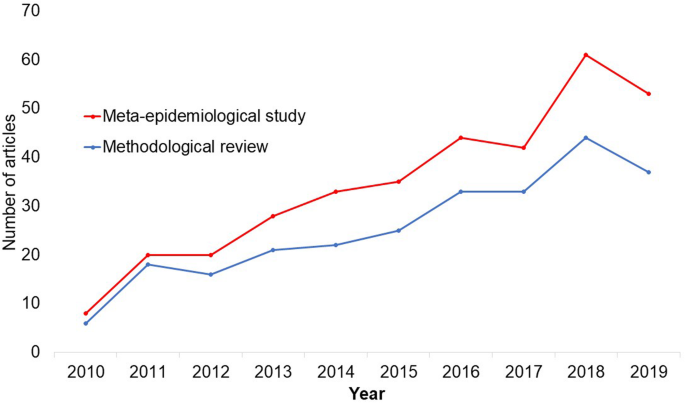

In the past 10 years, there has been an increase in the use of terms related to methodological studies (based on records retrieved with a keyword search [in the title and abstract] for “methodological review” and “meta-epidemiological study” in PubMed up to December 2019), suggesting that these studies may be appearing more frequently in the literature. See Fig. 1 .

Fig. 1. Trends in the number of studies that mention “methodological review” or “meta-epidemiological study” in PubMed.

The methods used in many methodological studies have been borrowed from systematic and scoping reviews. This practice has influenced the direction of the field, with many methodological studies including searches of electronic databases, screening of records, duplicate data extraction and assessments of risk of bias in the included studies. However, the research questions posed in methodological studies do not always require the approaches listed above, and guidance is needed on when and how to apply these methods to a methodological study. Even though methodological studies can be conducted on qualitative or mixed methods research, this paper focuses on and draws examples exclusively from quantitative research.

The objectives of this paper are to provide some insights on how to conduct methodological studies so that there is greater consistency between the research questions posed, and the design, analysis and reporting of findings. We provide multiple examples to illustrate concepts and a proposed framework for categorizing methodological studies in quantitative research.

What is a methodological study?

Any study that describes or analyzes methods (design, conduct, analysis or reporting) in published (or unpublished) literature is a methodological study. Consequently, the scope of methodological studies is quite extensive and includes, but is not limited to, topics as diverse as: research question formulation [ 11 ]; adherence to reporting guidelines [ 12 , 13 , 14 ] and consistency in reporting [ 15 ]; approaches to study analysis [ 16 ]; investigating the credibility of analyses [ 17 ]; and studies that synthesize these methodological studies [ 18 ]. While the nomenclature of methodological studies is not uniform, the intents and purposes of these studies remain fairly consistent – to describe or analyze methods in primary or secondary studies. As such, methodological studies may also be classified as a subtype of observational studies.

Parallel to this are experimental studies that compare different methods. Even though they play an important role in informing optimal research methods, experimental methodological studies are beyond the scope of this paper. Examples of such studies include the randomized trials by Buscemi et al., comparing single data extraction to double data extraction [ 19 ], and Carrasco-Labra et al., comparing approaches to presenting findings in Grading of Recommendations, Assessment, Development and Evaluations (GRADE) summary of findings tables [ 20 ]. In these studies, the unit of analysis is the person or groups of individuals applying the methods. We also direct readers to the Studies Within a Trial (SWAT) and Studies Within a Review (SWAR) programme operated through the Hub for Trials Methodology Research, for further reading as a potential useful resource for these types of experimental studies [ 21 ]. Lastly, this paper is not meant to inform the conduct of research using computational simulation and mathematical modeling for which some guidance already exists [ 22 ], or studies on the development of methods using consensus-based approaches.

When should we conduct a methodological study?

Methodological studies occupy a unique niche in health research that allows them to inform methodological advances. Methodological studies should also be conducted as precursors to reporting guideline development, as they provide an opportunity to understand current practices, and help to identify the need for guidance and gaps in methodological or reporting quality. For example, the development of the popular Preferred Reporting Items of Systematic reviews and Meta-Analyses (PRISMA) guidelines was preceded by methodological studies identifying poor reporting practices [ 23 , 24 ]. In these instances, after the reporting guidelines are published, methodological studies can also be used to monitor uptake of the guidelines.

These studies can also be conducted to inform the state of the art for design, analysis and reporting practices across different types of health research fields, with the aim of improving research practices, and preventing or reducing research waste. For example, Samaan et al. conducted a scoping review of adherence to different reporting guidelines in health care literature [ 18 ]. Methodological studies can also be used to determine the factors associated with reporting practices. For example, Abbade et al. investigated journal characteristics associated with the use of the Participants, Intervention, Comparison, Outcome, Timeframe (PICOT) format in framing research questions in trials of venous ulcer disease [ 11 ].

How often are methodological studies conducted?

There is no clear answer to this question. Based on a search of PubMed, the use of related terms (“methodological review” and “meta-epidemiological study”) – and therefore, the number of methodological studies – is on the rise. However, many other terms are used to describe methodological studies. There are also many studies that explore design, conduct, analysis or reporting of research reports, but that do not use any specific terms to describe or label their study design in terms of “methodology”. This diversity in nomenclature makes a census of methodological studies elusive. Appropriate terminology and key words for methodological studies are needed to facilitate improved accessibility for end-users.

Why do we conduct methodological studies?

Methodological studies provide information on the design, conduct, analysis or reporting of primary and secondary research and can be used to appraise quality, quantity, completeness, accuracy and consistency of health research. These issues can be explored in specific fields, journals, databases, geographical regions and time periods. For example, Areia et al. explored the quality of reporting of endoscopic diagnostic studies in gastroenterology [ 25 ]; Knol et al. investigated the reporting of p-values in baseline tables in randomized trials published in high impact journals [ 26 ]; Chen et al. describe adherence to the Consolidated Standards of Reporting Trials (CONSORT) statement in Chinese Journals [ 27 ]; and Hopewell et al. describe the effect of editors’ implementation of CONSORT guidelines on reporting of abstracts over time [ 28 ]. Methodological studies provide useful information to researchers, clinicians, editors, publishers and users of health literature. As a result, these studies have been a cornerstone of important methodological developments in the past two decades and have informed the development of many health research guidelines including the highly cited CONSORT statement [ 5 ].

Where can we find methodological studies?

Methodological studies can be found in most common biomedical bibliographic databases (e.g. Embase, MEDLINE, PubMed, Web of Science). However, the biggest caveat is that methodological studies are hard to identify in the literature due to the wide variety of names used and the lack of comprehensive databases dedicated to them. A handful can be found in the Cochrane Library as “Cochrane Methodology Reviews”, but these studies only cover methodological issues related to systematic reviews. Previous attempts to catalogue all empirical studies of methods used in reviews were abandoned 10 years ago [ 29 ]. In other databases, a variety of search terms may be applied with different levels of sensitivity and specificity.

Some frequently asked questions about methodological studies

In this section, we have outlined responses to questions that might help inform the conduct of methodological studies.

Q: How should I select research reports for my methodological study?

A: Selection of research reports for a methodological study depends on the research question and eligibility criteria. Once a clear research question is set and the nature of literature one desires to review is known, one can then begin the selection process. Selection may begin with a broad search, especially if the eligibility criteria are not apparent. For example, a methodological study of Cochrane Reviews of HIV would not require a complex search as all eligible studies can easily be retrieved from the Cochrane Library after checking a few boxes [ 30 ]. On the other hand, a methodological study of subgroup analyses in trials of gastrointestinal oncology would require a search to find such trials, and further screening to identify trials that conducted a subgroup analysis [ 31 ].

The strategies used for identifying participants in observational studies can apply here. One may use a systematic search to identify all eligible studies. If the number of eligible studies is unmanageable, a random sample of articles can be expected to provide comparable results if it is sufficiently large [ 32 ]. For example, Wilson et al. used a random sample of trials from the Cochrane Stroke Group’s Trial Register to investigate completeness of reporting [ 33 ]. It is possible that a simple random sample would lead to underrepresentation of units (i.e. research reports) that are smaller in number. This is relevant if the investigators wish to compare multiple groups but have too few units in one group. In this case a stratified sample would help to create equal groups. For example, in a methodological study comparing Cochrane and non-Cochrane reviews, Kahale et al. drew random samples from both groups [ 34 ]. Alternatively, systematic or purposeful sampling strategies can be used and we encourage researchers to justify their selected approaches based on the study objective.
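The difference between a simple random sample and a stratified sample of research reports can be sketched in Python as follows. The sampling frame below (90 non-Cochrane and 10 Cochrane reviews), the sample sizes and the function names are hypothetical and chosen only to show how a smaller group can be under-represented in a simple random sample.

```python
import random

def simple_random_sample(frame, n, seed=None):
    """Draw n research reports from the sampling frame without replacement."""
    rng = random.Random(seed)
    return rng.sample(frame, n)

def stratified_sample(frame, group_of, n_per_group, seed=None):
    """Draw up to n_per_group reports from each group in the sampling frame."""
    rng = random.Random(seed)
    by_group = {}
    for report in frame:
        by_group.setdefault(group_of(report), []).append(report)
    return {
        group: rng.sample(members, min(n_per_group, len(members)))
        for group, members in by_group.items()
    }

# Hypothetical sampling frame: (review type, identifier)
frame = [("non-Cochrane", i) for i in range(90)] + [("Cochrane", i) for i in range(10)]

srs = simple_random_sample(frame, 10, seed=1)
strat = stratified_sample(frame, lambda report: report[0], 5, seed=1)

print(sum(1 for kind, _ in srs if kind == "Cochrane"))   # varies with the draw
print({group: len(sample) for group, sample in strat.items()})
```

The stratified draw returns five reports from each group regardless of how rare the group is in the frame, whereas the simple random sample may contain few or no Cochrane reviews.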

Q: How many databases should I search?

A: The number of databases one should search would depend on the approach to sampling, which can include targeting the entire “population” of interest or a sample of that population. If you are interested in including the entire target population for your research question, or drawing a random or systematic sample from it, then a comprehensive and exhaustive search for relevant articles is required. In this case, we recommend using systematic approaches for searching electronic databases (i.e. at least 2 databases with a replicable and time stamped search strategy). The results of your search will constitute a sampling frame from which eligible studies can be drawn.

Alternatively, if your approach to sampling is purposeful, then we recommend targeting the database(s) or data sources (e.g. journals, registries) that include the information you need. For example, if you are conducting a methodological study of high impact journals in plastic surgery and they are all indexed in PubMed, you likely do not need to search any other databases. You may also have a comprehensive list of all journals of interest and can approach your search using the journal names in your database search (or by accessing the journal archives directly from the journal’s website). Even though one could also search journals’ web pages directly, using a database such as PubMed has multiple advantages, such as the use of filters, so the search can be narrowed down to a certain period, or study types of interest. Furthermore, individual journals’ web sites may have different search functionalities, which do not necessarily yield a consistent output.

Q: Should I publish a protocol for my methodological study?

A: A protocol is a description of intended research methods. Currently, only protocols for clinical trials require registration [ 35 ]. Protocols for systematic reviews are encouraged but no formal recommendation exists. The scientific community welcomes the publication of protocols because they help protect against selective outcome reporting and the use of post hoc methodologies to embellish results, and help avoid duplication of efforts [ 36 ]. While the latter two risks exist in methodological research, the negative consequences may be substantially less than for clinical outcomes. In a sample of 31 methodological studies, 7 (22.6%) referenced a published protocol [ 9 ]. In the Cochrane Library, there are 15 protocols for methodological reviews (21 July 2020). This suggests that publishing protocols for methodological studies is not uncommon.

Authors can consider publishing their study protocol in a scholarly journal as a manuscript. Advantages of such publication include obtaining peer-review feedback about the planned study, and easy retrieval by searching databases such as PubMed. The disadvantages of trying to publish protocols include delays associated with manuscript handling and peer review, as well as costs, as few journals publish study protocols, and those journals mostly charge article-processing fees [ 37 ]. Authors who would like to make their protocol publicly available without publishing it in a scholarly journal could deposit it in a publicly available repository, such as the Open Science Framework ( https://osf.io/ ).

Q: How to appraise the quality of a methodological study?

A: To date, there is no published tool for appraising the risk of bias in a methodological study, but in principle, a methodological study could be considered as a type of observational study. Therefore, during conduct or appraisal, care should be taken to avoid the biases common in observational studies [ 38 ]. These biases include selection bias, comparability of groups, and ascertainment of exposure or outcome. In other words, to generate a representative sample, a comprehensive reproducible search may be necessary to build a sampling frame. Additionally, random sampling may be necessary to ensure that all the included research reports have the same probability of being selected, and the screening and selection processes should be transparent and reproducible. To ensure that the groups compared are similar in all characteristics, matching, random sampling or stratified sampling can be used. Statistical adjustments for between-group differences can also be applied at the analysis stage. Finally, duplicate data extraction can reduce errors in assessment of exposures or outcomes.

Q: Should I justify a sample size?

A: In all instances where one is not using the target population (i.e. the group to which inferences from the research report are directed) [ 39 ], a sample size justification is good practice. The sample size justification may take the form of a description of what is expected to be achieved with the number of articles selected, or a formal sample size estimation that outlines the number of articles required to answer the research question with a certain precision and power. Sample size justifications in methodological studies are reasonable in the following instances:

- Comparing two groups

- Determining a proportion, mean or another quantifier

- Determining factors associated with an outcome using regression-based analyses

For example, El Dib et al. computed a sample size requirement for a methodological study of diagnostic strategies in randomized trials, based on a confidence interval approach [ 40 ].
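To make the idea of a precision-based justification concrete, the sketch below uses the standard normal-approximation formula for estimating a proportion, n = z² p(1 − p) / d², where p is the expected proportion and d is the desired half-width of the confidence interval. This is a generic textbook formula shown as an assumption for illustration; it is not necessarily the calculation used in the cited study, and the input values are hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_for_proportion(expected_p, half_width, confidence=0.95):
    """Number of reports needed to estimate a proportion to within +/- half_width.

    Uses the normal approximation n = z^2 * p * (1 - p) / d^2, rounded up.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z ** 2 * expected_p * (1 - expected_p) / half_width ** 2)

# Hypothetical inputs: expected proportion 50%, 95% CI of +/- 5 percentage points
n_reports = sample_size_for_proportion(0.5, 0.05)
print(n_reports)  # 385
```

An expected proportion of 0.5 gives the largest requirement for a given half-width, so it is a conservative choice when little is known in advance.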

Q: What should I call my study?

A: Other terms which have been used to describe/label methodological studies include “methodological review”, “methodological survey”, “meta-epidemiological study”, “systematic review”, “systematic survey”, “meta-research”, “research-on-research” and many others. We recommend that the study nomenclature be clear, unambiguous, informative and allow for appropriate indexing. Methodological study nomenclature that should be avoided includes “systematic review” – as this will likely be confused with a systematic review of a clinical question. “Systematic survey” may also lead to confusion about whether the survey was systematic (i.e. using a preplanned methodology) or a survey using “systematic” sampling (i.e. a sampling approach using specific intervals to determine who is selected) [ 32 ]. Any of the above meanings of the word “systematic” may be true for methodological studies and could be potentially misleading. “Meta-epidemiological study” is ideal for indexing, but not very informative as it describes an entire field. The term “review” may point towards an appraisal or “review” of the design, conduct, analysis or reporting (or methodological components) of the targeted research reports, yet it has also been used to describe narrative reviews [ 41 , 42 ]. The term “survey” is also in line with the approaches used in many methodological studies [ 9 ], and would be indicative of the sampling procedures of this study design. However, in the absence of guidelines on nomenclature, the term “methodological study” is broad enough to capture most of the scenarios of such studies.

Q: Should I account for clustering in my methodological study?

A: Data from methodological studies are often clustered. For example, articles coming from a specific source may have different reporting standards (e.g. the Cochrane Library). Articles within the same journal may be similar due to editorial practices and policies, reporting requirements and endorsement of guidelines. There is emerging evidence that these are real concerns that should be accounted for in analyses [ 43 ]. Some cluster variables are described in the section: “ What variables are relevant to methodological studies?”

A variety of modelling approaches can be used to account for correlated data, including the use of marginal, fixed or mixed effects regression models with appropriate computation of standard errors [ 44 ]. For example, Kosa et al. used generalized estimating equations to account for correlation of articles within journals [ 15 ]. Not accounting for clustering could lead to incorrect p-values, unduly narrow confidence intervals, and biased estimates [ 45 ].
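To see why ignoring clustering produces unduly narrow confidence intervals, the sketch below computes the familiar design effect, 1 + (m − 1) × ICC, where m is the average number of articles per journal and ICC is the intraclass correlation. This is a simplified illustration rather than a substitute for the regression models described above; the function names and the numbers are assumptions made for the example.

```python
import math


def design_effect(mean_cluster_size, icc):
    """Variance inflation caused by clustering: 1 + (m - 1) * ICC."""
    if mean_cluster_size < 1:
        raise ValueError("mean_cluster_size must be at least 1")
    if not 0 <= icc <= 1:
        raise ValueError("icc must be between 0 and 1")
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n_articles, mean_cluster_size, icc):
    """Number of independent articles that carry the same information."""
    return n_articles / design_effect(mean_cluster_size, icc)


def inflated_standard_error(naive_se, mean_cluster_size, icc):
    """Standard error after accounting for within-journal correlation."""
    return naive_se * math.sqrt(design_effect(mean_cluster_size, icc))


# Hypothetical study: 400 articles, about 20 per journal, ICC of 0.05.
deff = design_effect(20, 0.05)
print(round(deff, 2))                                    # variance inflation
print(round(effective_sample_size(400, 20, 0.05), 1))    # effective n
print(round(inflated_standard_error(0.025, 20, 0.05), 4))
```

Even a modest intraclass correlation roughly halves the effective sample size in this hypothetical scenario, which is why a naive analysis would overstate precision.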

Q: Should I extract data in duplicate?

A: Yes. Duplicate data extraction takes more time but results in fewer errors [ 19 ]. Data extraction errors in turn affect the effect estimate [ 46 ], and therefore should be mitigated. Duplicate data extraction should be considered in the absence of other approaches to minimize extraction errors. Much like systematic reviews, this area will likely see rapid advances in machine learning and natural language processing technologies that support researchers with screening and data extraction [ 47 , 48 ]. Nevertheless, experience plays an important role in the quality of extracted data, and inexperienced extractors should be paired with experienced extractors [ 46 , 49 ].
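One simple way to operationalize duplicate extraction is to compare the two extractors' records field by field and list every disagreement for resolution. The sketch below is a minimal illustration; the data layout (a dictionary keyed by article identifier) and all identifiers and field names are hypothetical.

```python
def find_discrepancies(extractor_a, extractor_b):
    """Return (article_id, field, value_a, value_b) for each disagreement.

    Both arguments map an article identifier to a dict of extracted
    fields. A field or article missing from one extractor is reported
    with None as that extractor's value.
    """
    discrepancies = []
    for article_id in sorted(set(extractor_a) | set(extractor_b)):
        record_a = extractor_a.get(article_id, {})
        record_b = extractor_b.get(article_id, {})
        for field in sorted(set(record_a) | set(record_b)):
            value_a = record_a.get(field)
            value_b = record_b.get(field)
            if value_a != value_b:
                discrepancies.append((article_id, field, value_a, value_b))
    return discrepancies


# Hypothetical extractions of two articles by two reviewers.
reviewer_1 = {
    "article-01": {"sample_size": 120, "blinding_reported": True},
    "article-02": {"sample_size": 85, "blinding_reported": False},
}
reviewer_2 = {
    "article-01": {"sample_size": 120, "blinding_reported": True},
    "article-02": {"sample_size": 58, "blinding_reported": False},
}

for item in find_discrepancies(reviewer_1, reviewer_2):
    print(item)
```

Disagreements flagged this way would then be resolved by discussion or by a third extractor, in keeping with the pairing of inexperienced and experienced extractors recommended above.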

Q: Should I assess the risk of bias of research reports included in my methodological study?

A: Risk of bias is most useful in determining the certainty that can be placed in the effect measure from a study. In methodological studies, risk of bias may not serve the purpose of determining the trustworthiness of results, as effect measures are often not the primary goal of methodological studies. Determining risk of bias in methodological studies is likely a practice borrowed from systematic review methodology, but its intrinsic value is not obvious in methodological studies. When it is part of the research question, investigators often focus on one aspect of risk of bias. For example, Speich investigated how blinding was reported in surgical trials [ 50 ], and Abraha et al. investigated the application of intention-to-treat analyses in systematic reviews and trials [ 51 ].

Q: What variables are relevant to methodological studies?

A: There is empirical evidence that certain variables may inform the findings in a methodological study. We outline some of these and provide a brief overview below:

Country: Countries and regions differ in their research cultures, and the resources available to conduct research. Therefore, it is reasonable to believe that there may be differences in methodological features across countries. Methodological studies have reported loco-regional differences in reporting quality [ 52 , 53 ]. This may also be related to challenges non-English speakers face in publishing papers in English.

Authors’ expertise: The inclusion of authors with expertise in research methodology, biostatistics, and scientific writing is likely to influence the end-product. Oltean et al. found that among randomized trials in orthopaedic surgery, the use of analyses that accounted for clustering was more likely when specialists (e.g. statistician, epidemiologist or clinical trials methodologist) were included on the study team [ 54 ]. Fleming et al. found that including methodologists in the review team was associated with appropriate use of reporting guidelines [ 55 ].

Source of funding and conflicts of interest: Some studies have found that funded studies report better [ 56 , 57 ], while others do not [ 53 , 58 ]. The presence of funding would indicate the availability of resources deployed to ensure optimal design, conduct, analysis and reporting. However, the source of funding may introduce conflicts of interest and warrant assessment. For example, Kaiser et al. investigated the effect of industry funding on obesity or nutrition randomized trials and found that reporting quality was similar [ 59 ]. Thomas et al. looked at reporting quality of long-term weight loss trials and found that industry funded studies were better [ 60 ]. Kan et al. examined the association between industry funding and “positive trials” (trials reporting a significant intervention effect) and found that industry funding was highly predictive of a positive trial [ 61 ]. This finding is similar to that of a recent Cochrane Methodology Review by Hansen et al. [ 62 ].

Journal characteristics: Certain journals’ characteristics may influence the study design, analysis or reporting. Characteristics such as journal endorsement of guidelines [ 63 , 64 ], and Journal Impact Factor (JIF) have been shown to be associated with reporting [ 63 , 65 , 66 , 67 ].

Study size (sample size/number of sites): Some studies have shown that reporting is better in larger studies [ 53 , 56 , 58 ].

Year of publication: It is reasonable to assume that design, conduct, analysis and reporting of research will change over time. Many studies have demonstrated improvements in reporting over time or after the publication of reporting guidelines [ 68 , 69 ].

Type of intervention: In a methodological study of reporting quality of weight loss intervention studies, Thabane et al. found that trials of pharmacologic interventions were reported better than trials of non-pharmacologic interventions [ 70 ].

Interactions between variables: Complex interactions between the previously listed variables are possible. High income countries with more resources may be more likely to conduct larger studies and incorporate a variety of experts. Authors in certain countries may prefer certain journals, and journal endorsement of guidelines and editorial policies may change over time.

Q: Should I focus only on high impact journals?

A: Investigators may choose to investigate only high impact journals because they are more likely to influence practice and policy, or because they assume that methodological standards would be higher. However, restricting the sample by JIF may severely limit the scope of articles included and may skew the sample towards articles with positive findings. The generalizability and applicability of findings from a handful of journals must be examined carefully, especially since the JIF varies over time. Even among journals that are all “high impact”, variations exist in methodological standards.

Q: Can I conduct a methodological study of qualitative research?

A: Yes. Even though a lot of methodological research has been conducted in the quantitative research field, methodological studies of qualitative studies are feasible. Certain databases that catalogue qualitative research including the Cumulative Index to Nursing & Allied Health Literature (CINAHL) have defined subject headings that are specific to methodological research (e.g. “research methodology”). Alternatively, one could also conduct a qualitative methodological review; that is, use qualitative approaches to synthesize methodological issues in qualitative studies.

Q: What reporting guidelines should I use for my methodological study?

A: There is no guideline that covers the entire scope of methodological studies. One adaptation of the PRISMA guidelines has been published, which works well for studies that aim to use the entire target population of research reports [ 71 ]. However, it is not widely used (40 citations in 2 years as of 09 December 2019), and methodological studies that are designed as cross-sectional or before-after studies require a more fit-for-purpose guideline. A more encompassing reporting guideline for a broad range of methodological studies is currently under development [ 72 ]. However, in the absence of formal guidance, the requirements for scientific reporting should be respected, and authors of methodological studies should focus on transparency and reproducibility.

Q: What are the potential threats to validity and how can I avoid them?

A: Methodological studies may be compromised by a lack of internal or external validity. The main threats to internal validity in methodological studies are selection and confounding bias. Investigators must ensure that the methods used to select articles do not make them differ systematically from the set of articles to which they would like to make inferences. For example, attempting to make extrapolations to all journals after analyzing high-impact journals would be misleading.

Many factors (confounders) may distort the association between the exposure and outcome if the included research reports differ with respect to these factors [ 73 ]. For example, when examining the association between source of funding and completeness of reporting, it may be necessary to account for journals that endorse the guidelines. Confounding bias can be addressed by restriction, matching and statistical adjustment [ 73 ]. Restriction appears to be the method of choice for many investigators who choose to include only high impact journals or articles in a specific field. For example, Knol et al. examined the reporting of p-values in baseline tables of high impact journals [ 26 ]. Matching is also sometimes used. In the methodological study of non-randomized interventional studies of elective ventral hernia repair, Parker et al. matched prospective studies with retrospective studies and compared reporting standards [ 74 ]. Some other methodological studies use statistical adjustments. For example, Zhang et al. used regression techniques to determine the factors associated with missing participant data in trials [ 16 ].
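The funding example can be made concrete with a stratified analysis. The sketch below compares a crude odds ratio with a Mantel-Haenszel odds ratio stratified by journal endorsement of guidelines. This is one simple form of statistical adjustment, not the regression approach used by Zhang et al., and all counts are invented solely to show how a confounder can create an apparent association.

```python
def odds_ratio(a, b, c, d):
    """Odds ratio for one 2x2 table: (a * d) / (b * c)."""
    return (a * d) / (b * c)


def mantel_haenszel_odds_ratio(strata):
    """Pooled odds ratio across strata.

    Each stratum is a tuple (a, b, c, d):
      a = funded, complete reporting      b = funded, incomplete reporting
      c = unfunded, complete reporting    d = unfunded, incomplete reporting
    """
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in strata)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in strata)
    return numerator / denominator


# Hypothetical counts, stratified by whether the journal endorses guidelines.
endorsing = (40, 10, 20, 5)
non_endorsing = (10, 40, 15, 60)

# Collapsing the strata ignores the confounder.
crude_table = tuple(x + y for x, y in zip(endorsing, non_endorsing))
crude = odds_ratio(*crude_table)
adjusted = mantel_haenszel_odds_ratio([endorsing, non_endorsing])

print(round(crude, 2))     # apparent association when endorsement is ignored
print(round(adjusted, 2))  # association within levels of endorsement
```

In these invented data, funded trials appear to report more completely only because they are published more often in endorsing journals; within each stratum the odds ratio is 1.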

With regard to external validity, researchers interested in conducting methodological studies must consider how generalizable or applicable their findings are. This should tie in closely with the research question and should be explicit. For example, findings from methodological studies on trials published in high impact cardiology journals cannot be assumed to be applicable to trials in other fields. However, investigators must ensure that their sample truly represents the target sample either by a) conducting a comprehensive and exhaustive search, or b) using an appropriate and justified, randomly selected sample of research reports.
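For option b), a random sample is easier to justify when the selection can be reproduced. The sketch below draws a simple random sample of research reports from the full list of search results using a recorded seed; the function name, the identifiers and the seed value are assumptions for illustration.

```python
import random


def draw_random_sample(report_ids, sample_size, seed):
    """Draw a reproducible simple random sample of research reports.

    The identifiers are sorted first so that the result depends only on
    the set of reports and the seed, not on the order of the search output.
    """
    unique_ids = sorted(set(report_ids))
    if sample_size > len(unique_ids):
        raise ValueError("sample_size exceeds the number of eligible reports")
    rng = random.Random(seed)
    return sorted(rng.sample(unique_ids, sample_size))


# Hypothetical sampling frame of 500 eligible reports.
eligible = [f"report-{i:03d}" for i in range(1, 501)]
selected = draw_random_sample(eligible, sample_size=50, seed=2024)
print(len(selected))
```

Reporting the sampling frame, the sample size and the seed alongside the search strategy allows others to reconstruct exactly which reports were selected.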

Even applicability to high impact journals may vary based on the investigators’ definition, and over time. For example, for high impact journals in the field of general medicine, Bouwmeester et al. included the Annals of Internal Medicine (AIM), BMJ, the Journal of the American Medical Association (JAMA), Lancet, the New England Journal of Medicine (NEJM), and PLoS Medicine ( n = 6) [ 75 ]. In contrast, the high impact journals selected in the methodological study by Schiller et al. were BMJ, JAMA, Lancet, and NEJM ( n = 4) [ 76 ]. Another methodological study by Kosa et al. included AIM, BMJ, JAMA, Lancet and NEJM ( n = 5). In the methodological study by Thabut et al., journals with a JIF greater than 5 were considered to be high impact. Riado Minguez et al. used first quartile journals in the Journal Citation Reports (JCR) for a specific year to determine “high impact” [ 77 ]. Ultimately, the definition of high impact will be based on the number of journals the investigators are willing to include, the year of impact and the JIF cut-off [ 78 ]. We acknowledge that the term “generalizability” may apply differently for methodological studies, especially when in many instances it is possible to include the entire target population in the sample studied.

Finally, methodological studies are not exempt from information bias which may stem from discrepancies in the included research reports [ 79 ], errors in data extraction, or inappropriate interpretation of the information extracted. Likewise, publication bias may also be a concern in methodological studies, but such concepts have not yet been explored.

A proposed framework

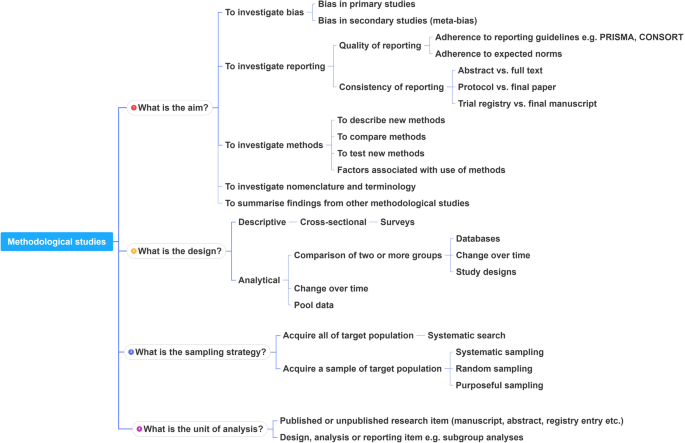

To inform discussions about methodological studies and the development of guidance for what should be reported, we have outlined some key features of methodological studies that can be used to classify them. For each of the categories outlined below, we provide an example. In our experience, the choice of approach to completing a methodological study can be informed by asking the following four questions:

What is the aim?

Methodological studies that investigate bias