hypothesis 6.105.1

pip install hypothesis

Released: Jul 7, 2024

A library for property-based testing

License: Mozilla Public License 2.0 (MPL-2.0)

Author: David R. MacIver and Zac Hatfield-Dodds

Tags: python, testing, fuzzing, property-based-testing

Requires: Python >=3.8

Provides-Extra: all, cli, codemods, crosshair, dateutil, django, dpcontracts, ghostwriter, lark, numpy, pandas, pytest, pytz, redis, zoneinfo

Classifiers

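- Development Status :: 5 - Production/Stable

- License :: OSI Approved :: Mozilla Public License 2.0 (MPL 2.0)

- Operating System :: Microsoft :: Windows

- Programming Language :: Python :: 3

- Programming Language :: Python :: 3 :: Only

- Programming Language :: Python :: 3.8

- Programming Language :: Python :: 3.9

- Programming Language :: Python :: 3.10

- Programming Language :: Python :: 3.11

- Programming Language :: Python :: 3.12

- Programming Language :: Python :: Implementation :: CPython

- Programming Language :: Python :: Implementation :: PyPy

- Topic :: Education :: Testing

- Topic :: Software Development :: Testing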

Project description

Hypothesis is an advanced testing library for Python. It lets you write tests which are parametrized by a source of examples, and then generates simple and comprehensible examples that make your tests fail. This lets you find more bugs in your code with less work.

Hypothesis is extremely practical and advances the state of the art of unit testing by some way. It’s easy to use, stable, and powerful. If you’re not using Hypothesis to test your project then you’re missing out.

Quick Start/Installation

If you just want to get started:

pip install hypothesis

Links of interest

The main Hypothesis site is at hypothesis.works, and contains a lot of good introductory and explanatory material.

Extensive documentation and examples of usage are available at readthedocs.

If you want to talk to people about using Hypothesis, we have both an IRC channel and a mailing list.

If you want to receive occasional updates about Hypothesis, including useful tips and tricks, there’s a TinyLetter mailing list to sign up for them.

If you want to contribute to Hypothesis, instructions are here.

If you want to hear from people who are already using Hypothesis, some of them have written about it.

If you want to create a downstream package of Hypothesis, please read these guidelines for packagers.

Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source distribution: hypothesis-6.105.1.tar.gz (uploaded Jul 7, 2024)

Built distribution: hypothesis-6.105.1-py3-none-any.whl (uploaded Jul 7, 2024, Python 3)

Hypothesis is a powerful, flexible, and easy to use library for property-based testing.

HypothesisWorks/hypothesis

Hypothesis is a family of testing libraries which let you write tests parametrized by a source of examples. A Hypothesis implementation then generates simple and comprehensible examples that make your tests fail. This simplifies writing your tests and makes them more powerful at the same time, by letting software automate the boring bits and do them to a higher standard than a human would, freeing you to focus on the higher level test logic.
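To make the idea concrete, here is a hand-rolled sketch of the loop that such a library automates. It does not use Hypothesis itself: `find_counterexample`, `random_int_list`, and `buggy_dedupe` are hypothetical names invented for this illustration, and picking the shortest failure found is only a crude stand-in for real shrinking.

```python
import random


def find_counterexample(prop, gen, tries=1000, seed=0):
    """Return the shortest generated input that breaks `prop`, or None."""
    rng = random.Random(seed)
    failures = [x for x in (gen(rng) for _ in range(tries)) if not prop(x)]
    return min(failures, key=len, default=None)


def random_int_list(rng):
    """Source of examples: short lists of small integers."""
    return [rng.randint(-5, 5) for _ in range(rng.randint(0, 6))]


def buggy_dedupe(xs):
    # Deliberate bug: only adjacent duplicates are dropped.
    out = []
    for x in xs:
        if not out or out[-1] != x:
            out.append(x)
    return out


def no_duplicates(xs):
    """The property under test: the result contains no repeated value."""
    result = buggy_dedupe(xs)
    return len(result) == len(set(result))


counterexample = find_counterexample(no_duplicates, random_int_list)
print(counterexample)
```

The test author only states the property and the kind of input; generating the inputs and reporting a small failing one is the part that gets automated.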

This sort of testing is often called "property-based testing", and the most widely known implementation of the concept is the Haskell library QuickCheck, but Hypothesis differs significantly from QuickCheck and is designed to fit idiomatically and easily into existing styles of testing that you are used to, with absolutely no familiarity with Haskell or functional programming needed.

Hypothesis for Python is the original implementation, and the only one that is currently fully production ready and actively maintained.

Hypothesis for Other Languages

The core ideas of Hypothesis are language agnostic and in principle it is suitable for any language. We are interested in developing and supporting implementations for a wide variety of languages, but currently lack the resources to do so, so our porting efforts are mostly prototypes.

The two prototype implementations of Hypothesis for other languages are:

- Hypothesis for Ruby is a reasonable start on a port of Hypothesis to Ruby.

- Hypothesis for Java is a prototype written some time ago. It's far from feature complete and is not under active development, but was intended to prove the viability of the concept.

Additionally there is a port of the core engine of Hypothesis, Conjecture, to Rust. It is not feature complete but in the long run we are hoping to move much of the existing functionality to Rust and rebuild Hypothesis for Python on top of it, greatly lowering the porting effort to other languages.

Any or all of these could be turned into full-fledged implementations with relatively little effort (no more than a few months of full-time work), but beyond the initial work this would require someone prepared to provide or fund ongoing maintenance for them in order to be viable.

Your Data Guide

How to Perform Hypothesis Testing Using Python

Step into the intriguing world of hypothesis testing, where your natural curiosity meets the power of data to reveal truths!

This article is your key to unlocking how those everyday hunches—like guessing a group’s average income or figuring out who owns their home—can be thoroughly checked and proven with data.

I am going to take you by the hand and show you, in simple steps, how to use Python to explore a hypothesis about the average yearly income.

By the time we’re done, you’ll not only get the hang of creating and testing hypotheses but also how to use statistical tests on actual data.

Perfect for up-and-coming data scientists, anyone with a knack for analysis, or just if you’re keen on data, get ready to gain the skills to make informed decisions and turn insights into real-world actions.

Join me as we dive deep into the data, one hypothesis at a time!

What is a hypothesis, and how do you test it?

A hypothesis is like a guess or prediction about something specific, such as the average income or the percentage of homeowners in a group of people.

It’s based on theories, past observations, or questions that spark our curiosity.

For instance, you might predict that the average yearly income of potential customers is over $50,000 or that 60% of them own their homes.

To see if your guess is right, you gather data from a smaller group within the larger population and check whether the numbers from this sample (the average income, the percentage of homeowners, and so on) match your initial prediction.

You also set a rule for how sure you need to be before trusting your findings. A common standard is a significance level of 0.05: you accept a 5% chance of rejecting your baseline assumption when it is actually true.

There are two main types of hypotheses: the null hypothesis, which is your baseline saying there’s no change or difference, and the alternative hypothesis, which suggests there is a change or difference.

For example,

If you start with the idea that the average yearly income of potential customers is $50,000,

The alternative could be that it’s not $50,000—it could be less or more, depending on what you’re trying to find out.

To test your hypothesis, you calculate a test statistic, a number that shows how far your sample data deviates from what the null hypothesis predicts.

How you calculate this depends on what you’re studying and the kind of data you have. For example, to check an average, you might use a formula that considers your sample’s average, the predicted average, the variation in your sample data, and how big your sample is.

This test statistic follows a known distribution (like the t-distribution or z-distribution), which helps you figure out the p-value.

The p-value is the probability of seeing a test statistic at least as extreme as yours if the null hypothesis were true.

A small p-value means your data strongly disagrees with the null hypothesis.

Finally, you decide on your hypothesis by comparing the p-value to your significance level.

If the p-value is less than or equal to the significance level, you reject the null hypothesis, meaning your data shows a difference that’s unlikely to be due to chance alone.

If the p-value is larger, you fail to reject the null hypothesis: your data doesn’t provide enough evidence of a difference, and any change you see might just be chance.
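One way to build intuition for the p-value is to simulate it. The sketch below is an illustration rather than a standard recipe: it assumes a one-sided "greater than" alternative, a normally distributed population, and a hypothetical observed statistic of 2.0 from a sample of 30. It counts how often a sample drawn under the null hypothesis produces a statistic at least that large.

```python
import math
import random
import statistics


def t_statistic(sample, mu0):
    """One-sample t statistic: (mean - mu0) / (s / sqrt(n))."""
    n = len(sample)
    standard_error = statistics.stdev(sample) / math.sqrt(n)
    return (statistics.fmean(sample) - mu0) / standard_error


def simulated_p_value(observed_t, n, runs=5000, seed=0):
    """Share of null-hypothesis samples whose statistic is at least as large."""
    rng = random.Random(seed)
    as_extreme = 0
    for _ in range(runs):
        # Draw a sample from a world where the null hypothesis is true.
        null_sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if t_statistic(null_sample, 0.0) >= observed_t:
            as_extreme += 1
    return as_extreme / runs


alpha = 0.05
p_value = simulated_p_value(observed_t=2.0, n=30)
decision = "reject H0" if p_value <= alpha else "fail to reject H0"
print(p_value, decision)
```

In practice the p-value is read off the t-distribution directly; the simulation only shows what that number means.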

We’ll go through an example that tests if the average annual income of prospective customers exceeds $50,000.

This process involves stating hypotheses, specifying a significance level, collecting and analyzing data, and drawing conclusions based on statistical tests.

Example: Testing a Hypothesis About Average Annual Income

Step 1: State the Hypotheses

Null Hypothesis (H0): The average annual income of prospective customers is $50,000.

Alternative Hypothesis (H1): The average annual income of prospective customers is more than $50,000.

Step 2: Specify the Significance Level

Significance Level: 0.05, meaning we accept a 5% chance of rejecting the null hypothesis when it is actually true.

Step 3: Collect Sample Data

We’ll use the ProspectiveBuyer table, assuming it's a random sample from the population.

This table has 2,059 entries, representing prospective customers' annual incomes.

Step 4: Calculate the Sample Statistic

In Python, we can use libraries like pandas and NumPy to calculate the sample mean and standard deviation.

SampleMean: 56,992.43

SampleSD: 32,079.16

SampleSize: 2,059
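As a rough sketch of this step, the three figures can be computed with Python's standard `statistics` module. The `incomes` list is a hypothetical stand-in, since the ProspectiveBuyer table itself is not reproduced here. With pandas, `Series.mean()` and `Series.std()` give the same quantities; note that NumPy's `np.std` computes the population standard deviation unless `ddof=1` is passed.

```python
import math
import statistics

# Hypothetical stand-in for the income column of the table.
incomes = [42_000, 58_000, 61_000, 39_000, 75_000, 52_000]

sample_mean = statistics.fmean(incomes)
sample_sd = statistics.stdev(incomes)        # sample SD: divides by n - 1
population_sd = statistics.pstdev(incomes)   # population SD: divides by n
sample_size = len(incomes)

print(f"SampleMean: {sample_mean:,.2f}")
print(f"SampleSD: {sample_sd:,.2f}")
print(f"SampleSize: {sample_size:,}")
```

The t-test in the next step needs the sample standard deviation (the `n - 1` version), which is why the distinction matters.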

Step 5: Calculate the Test Statistic

We use the t-test formula to calculate how significantly our sample mean deviates from the hypothesized mean.

Python’s SciPy library can handle this calculation:

T-Statistic: 4.62
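The one-sample formula behind this step is t = (sample mean - hypothesized mean) / (sample SD / sqrt(n)). The sketch below applies it to the rounded summary figures from Step 4; `one_sample_t` is a hypothetical helper name. Those rounded inputs give a value of about 9.89 rather than 4.62, so the reported statistic cannot be reproduced from the summary figures alone, and the code should be read as an illustration of the formula only.

```python
import math


def one_sample_t(sample_mean, hypothesized_mean, sample_sd, n):
    """t = (sample mean - hypothesized mean) / (sample SD / sqrt(n))."""
    standard_error = sample_sd / math.sqrt(n)
    return (sample_mean - hypothesized_mean) / standard_error


# Rounded summary figures from Step 4, compared against H0: mean = 50,000.
t_from_summary = one_sample_t(56_992.43, 50_000, 32_079.16, 2_059)
print(round(t_from_summary, 2))
```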

Step 6: Calculate the P-Value

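SciPy's ttest_1samp function returns both the test statistic and the p-value, so the p-value is already available from the previous step. By default that p-value is two-sided; because H1 here is one-sided (more than $50,000), pass alternative="greater" (available in SciPy 1.6 and later) or halve the two-sided value when the statistic is positive.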

P-Value = 0.0000021

Step 7: State the Statistical Conclusion

We compare the p-value with our significance level to decide on our hypothesis:

Since the p-value is less than 0.05, we reject the null hypothesis in favor of the alternative.
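The comparison can be sketched without SciPy by approximating the t-distribution with the standard normal, which is a reasonable assumption at 2,058 degrees of freedom. `one_sided_p_value` is a hypothetical helper; it takes the reported statistic of 4.62 and returns a p-value on the order of 2e-6, close to the reported 0.0000021.

```python
from statistics import NormalDist


def one_sided_p_value(test_statistic):
    """P(Z >= statistic) under the standard normal, for an H1 of 'greater than'."""
    return 1.0 - NormalDist().cdf(test_statistic)


alpha = 0.05
p_value = one_sided_p_value(4.62)
reject_null = p_value <= alpha
print(p_value, "reject H0" if reject_null else "fail to reject H0")
```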

Conclusion:

There’s strong evidence to suggest that the average annual income of prospective customers is indeed more than $50,000.

This example illustrates how Python can be a powerful tool for hypothesis testing, enabling us to derive insights from data through statistical analysis.

How to Choose the Right Test Statistic

Choosing the right test statistic is crucial and depends on what you’re trying to find out, the kind of data you have, and how that data is spread out.

Here are some common types of test statistics and when to use them:

T-test statistic:

This one’s great for checking out the average of a group when your data follows a normal distribution or when you’re comparing the averages of two such groups.

The t-test follows a special curve called the t-distribution. This curve looks a lot like the normal bell curve but with thicker ends, which means more chances for extreme values.

The t-distribution’s shape changes based on something called degrees of freedom, which is a fancy way of talking about your sample size and how many groups you’re comparing.

Z-test statistic:

Use this when you’re looking at the average of a normally distributed group or the difference between two group averages, and you already know the population standard deviation.

The z-test follows the standard normal distribution, which is your classic bell curve centered at zero and spreading out evenly on both sides.

Chi-square test statistic:

This is your go-to for checking whether the variance of a normally distributed group matches a hypothesized value, or whether two categorical variables are related.

The chi-square statistic follows its own distribution, which leans to the right and gets its shape from the degrees of freedom, which depend on the sample size or on how many categories you’re comparing.
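As a small worked example of the "are two categories related" case, the sketch below computes the chi-square statistic for a hypothetical 2x2 table of counts. The table values and the function name `chi_square_independence` are made up for illustration. The statistic sums (observed - expected)^2 / expected over every cell, and the degrees of freedom are (rows - 1) x (columns - 1).

```python
def chi_square_independence(table):
    """Return (statistic, degrees of freedom) for a table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical counts: rows are two customer groups, columns are owner / renter.
observed = [[30, 20], [20, 30]]
statistic, dof = chi_square_independence(observed)
print(statistic, dof)
```

With one degree of freedom the 5% critical value is 3.841, so a statistic above that would lead to rejecting independence at the 0.05 level.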

F-test statistic:

This one helps you compare the variability between two groups or see if the averages of more than two groups are all the same, assuming all groups are normally distributed.

The F-test follows the F-distribution, which is also right-skewed and has two types of degrees of freedom that depend on how many groups you have and the size of each group.

In simple terms, the test you pick hinges on what you’re curious about, whether your data fits the normal curve, and if you know certain specifics, like the population’s standard deviation.

Each test has its own special curve and rules based on your sample’s details and what you’re comparing.

Hypothesis Testing with Python

Learn how to plan, implement, and interpret different kinds of hypothesis tests in Python.

About this course

In this course, you’ll learn to plan, implement, and interpret a hypothesis test in Python. Hypothesis testing is used to address questions about a population based on a subset from that population. For example, A/B testing is a framework for learning about consumer behavior based on a small sample of consumers.

This course assumes some preexisting knowledge of Python, including the NumPy and pandas libraries.

Introduction to Hypothesis Testing

Find out what you’ll learn in this course and why it’s important.

Hypothesis Testing: Testing a Sample Statistic

Learn about hypothesis testing and implement binomial and one-sample t-tests in Python.

Hypothesis Testing: Testing an Association

Learn about hypothesis tests that can be used to evaluate whether there is an association between two variables.

Experimental Design

Learn to design an experiment to make a decision using a hypothesis test.

Hypothesis Testing Projects

Practice your hypothesis testing skills with some additional projects!

Projects in this course

- Heart Disease Research Part I

- Heart Disease Research Part II

- A/B Testing at Nosh Mish Mosh

Frequently asked questions about Hypothesis Testing with Python

What is hypothesis testing?

After drawing conclusions from data, you have to make sure they’re correct. Hypothesis testing uses statistical methods to validate those results.

Test faster, fix more

How Hypothesis Works

Hypothesis has a very different underlying implementation to any other property-based testing system. As far as I know, it’s an entirely novel design that I invented.

Central to this design is the following feature set which every Hypothesis strategy supports automatically (the only way to break this is by having the data generated depend somehow on external global state):

- All generated examples can be safely mutated.

- All generated examples can be saved to disk (this is important because Hypothesis remembers and replays previous failures).

- All generated examples can be shrunk.

- All invariants that hold in generation must hold during shrinking (though the probability distribution can of course change, so things which are only supported with high probability may not be).

(Essentially no other property-based systems manage one of these claims, let alone all.)

The initial mechanisms for supporting this were fairly complicated, but after passing through a number of iterations I hit on a very powerful underlying design that unifies all of these features.

It’s still fairly complicated in implementation, but most of that is optimisations and things needed to make the core idea work. More importantly, the complexity is quite contained: A fairly small kernel handles all of the complexity, and there is little to no additional complexity (at least, compared to how it normally looks) in defining new strategies, etc.

This article will give a high level overview of that model and how it works.

Hypothesis consists of essentially three parts, each built on top of the previous:

- A low level interactive byte stream fuzzer called Conjecture

- A strategy library for turning Conjecture’s byte streams into high level structured data.

- A testing interface for driving tests with data from Hypothesis’s strategy library.

I’ll focus purely on the first two here, as the latter is complex but mostly a matter of plumbing.

The basic object exposed by Conjecture is a class called TestData, which essentially looks like an open file handle you can read bytes from:
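A minimal sketch of such an object follows. Only the names `TestData` and `draw_bytes` come from the text; the `StopTest` exception name, the `max_length` cap, the recorded `buffer`, and the use of `os.urandom` as the byte source are assumptions made for illustration.

```python
import os


class StopTest(Exception):
    """Raised to abort the current test run (hypothetical name)."""


class TestData:
    """File-handle-like source of bytes that records everything it hands out."""

    def __init__(self, max_length=8 * 1024):
        self.max_length = max_length  # assumed cap on bytes per example
        self.buffer = bytearray()     # every byte drawn so far, in order

    def draw_bytes(self, n):
        # Refuse to let a single example grow without bound.
        if len(self.buffer) + n > self.max_length:
            raise StopTest()
        chunk = os.urandom(n)
        self.buffer.extend(chunk)
        return chunk


data = TestData()
first = data.draw_bytes(4)
print(first, bytes(data.buffer) == first)
```

Because the object records what it returned, the bytes behind any generated example are available afterwards for saving or replaying.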

(note: The Python code in this article isn’t an exact copy of what’s found in Hypothesis, but has been simplified for pedagogical reasons).

A strategy is then just an object which implements a single abstract method from the strategy class:
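In sketch form, assuming the class is called `SearchStrategy` and the method `do_draw` (neither name is given at this point in the text), the interface could look like this. `ByteStrategy` and `ReplayData` are hypothetical helpers added so the example runs on its own.

```python
from abc import ABC, abstractmethod


class SearchStrategy(ABC):
    @abstractmethod
    def do_draw(self, data):
        """Return one generated value, built only from bytes read off `data`."""


class ByteStrategy(SearchStrategy):
    """Smallest possible concrete strategy: one byte becomes an int in 0..255."""

    def do_draw(self, data):
        return data.draw_bytes(1)[0]


class ReplayData:
    """Stand-in byte source that replays a fixed buffer."""

    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0

    def draw_bytes(self, n):
        chunk = self.buffer[self.index:self.index + n]
        self.index += n
        return chunk


value = ByteStrategy().do_draw(ReplayData(b"\x07"))
print(value)
```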

The testing interface then turns test functions plus the strategies they need into something that takes a TestData object and returns True if the test fails and False if it passes.
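That transformation can be sketched as a small wrapper. Everything named here (`to_data_test`, `ReplayData`, `ByteStrategy`, `check_small`) is hypothetical, and treating only `AssertionError` as a failure is a simplifying assumption.

```python
class ReplayData:
    """Stand-in byte source that replays a fixed buffer."""

    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0

    def draw_bytes(self, n):
        chunk = self.buffer[self.index:self.index + n]
        self.index += n
        return chunk


class ByteStrategy:
    def do_draw(self, data):
        return data.draw_bytes(1)[0]


def to_data_test(strategy, test_function):
    """Wrap a value-level test as: data -> True if it fails, False if it passes."""
    def run(data):
        value = strategy.do_draw(data)
        try:
            test_function(value)
        except AssertionError:
            return True
        return False
    return run


def check_small(x):
    assert x < 100


fails = to_data_test(ByteStrategy(), check_small)
print(fails(ReplayData(b"\x05")), fails(ReplayData(b"\xc8")))
```

Once a test has this shape, everything else in the engine can work purely on byte buffers without knowing what values they decode to.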

For a simple example, we can implement a strategy for unsigned 64-bit integers as follows:
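A sketch of that strategy, using the standard `int.from_bytes` call. The class name `Int64Strategy` and the `ReplayData` helper are assumptions for illustration; the big-endian byte order follows the text's later remark about shrinking.

```python
class ReplayData:
    """Stand-in byte source that replays a fixed buffer (hypothetical helper)."""

    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0

    def draw_bytes(self, n):
        chunk = self.buffer[self.index:self.index + n]
        self.index += n
        return chunk


class Int64Strategy:
    def do_draw(self, data):
        # Eight bytes, read big-endian, give an integer in 0 .. 2**64 - 1.
        return int.from_bytes(data.draw_bytes(8), byteorder="big", signed=False)


value = Int64Strategy().do_draw(ReplayData(bytes(7) + b"\x2a"))
print(value)
```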

As well as returning bytes, draw_bytes can raise an exception that stops the test. This is useful as a way to stop examples from getting too big (and will also be necessary for shrinking, as we’ll see in a moment).
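The pieces described so far can be sketched together as follows. This is a minimal illustration rather than the article's original listing: `BufferTestData`, `StopTest`, and `UInt64Strategy` are hypothetical names, and the buffer-backed data object is an assumption made so the example can run on its own.

```python
class StopTest(Exception):
    """Raised when a test tries to read past the end of the available bytes."""


class BufferTestData:
    """Hypothetical stand-in for TestData that reads from a fixed buffer."""

    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0

    def draw_bytes(self, n):
        # Refuse to read beyond the buffer; this stops the test.
        if self.index + n > len(self.buffer):
            raise StopTest()
        result = self.buffer[self.index:self.index + n]
        self.index += n
        return result


class SearchStrategy:
    """A strategy implements a single method that turns bytes into a value."""

    def do_draw(self, data):
        raise NotImplementedError


class UInt64Strategy(SearchStrategy):
    def do_draw(self, data):
        # Big-endian: lexicographically smaller bytes give a smaller integer.
        return int.from_bytes(data.draw_bytes(8), byteorder="big", signed=False)


value = UInt64Strategy().do_draw(BufferTestData(bytes(7) + b"\x2a"))
print(value)  # 42
```

Because the value is a pure function of the bytes read, replaying the same buffer always reproduces the same integer.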

From this it should be fairly clear how we support saving and mutation: Saving every example is possible because we can just write the bytes that produced it to disk, and mutation is possible because strategies are just returning values that we don’t in any way hang on to.

But how does shrinking work?

Well the key idea is the one I mentioned in my last article about shrinking - shrinking inputs suffices to shrink outputs. In this case the input is the byte stream.

Once Hypothesis has found a failure it begins shrinking the byte stream using a TestData object that looks like the following:

Shrinking now reduces to shrinking the byte array that gets passed in as data, subject to the condition that our transformed test function still returns True.

Shrinking of the byte array is designed to try to minimize it according to the following rules:

- Shorter is always simpler.

- Given two byte arrays of the same length, the one which is lexicographically earlier (considering bytes as unsigned 8 bit integers) is simpler.

You can imagine that some variant of Delta Debugging is used for the purpose of shrinking the byte array, repeatedly deleting data and lowering bytes until no byte may be deleted or lowered. It’s a lot more complicated than that, but I’m mostly going to gloss over that part for now.
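To make the ordering and the delete-then-lower loop concrete, here is a deliberately naive sketch. It is not Hypothesis's actual shrinker (which, as noted, is a lot more complicated); `shortlex_key`, `shrink`, and the toy failure predicate are hypothetical names chosen for illustration.

```python
def shortlex_key(buf):
    """Shorter is simpler; ties are broken lexicographically."""
    return (len(buf), buf)


def shrink(buf, still_fails):
    """Greedily delete and lower bytes while the test keeps failing."""
    assert still_fails(buf)
    improved = True
    while improved:
        improved = False
        # Pass 1: try deleting each single byte.
        i = 0
        while i < len(buf):
            candidate = buf[:i] + buf[i + 1:]
            if still_fails(candidate):
                buf = candidate
                improved = True
            else:
                i += 1
        # Pass 2: try replacing each byte with the smallest value that works.
        for i in range(len(buf)):
            for smaller in range(buf[i]):
                candidate = buf[:i] + bytes([smaller]) + buf[i + 1:]
                if still_fails(candidate):
                    buf = candidate
                    improved = True
                    break
    return buf


def toy_failure(buf):
    # Toy condition: "fails" when there are at least two bytes and the first is >= 3.
    return len(buf) >= 2 and buf[0] >= 3


start = b"\x09\x07\x05\x01"
smallest = shrink(start, toy_failure)
print(smallest)  # b'\x03\x00'
```

Every accepted candidate is strictly smaller under `shortlex_key`, which is why the loop is guaranteed to terminate.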

As long as the strategy is well written (and to some extent even when it’s not - it requires a certain amount of active sabotage to create strategies that produce more complex data given fewer bytes) this results in shrinks to the byte array giving good shrinks to the generated data. e.g. our 64-bit unsigned integers are chosen to be big endian so that shrinking the byte data lexicographically shrinks the integer towards zero.

In order to get really good deleting behaviour in our strategies we need to be a little careful about how we arrange things, so that deleting in the underlying bytestream corresponds to deleting in generated data.

For example, suppose we tried to implement lists as follows:

The problem with this is that deleting data doesn’t actually result in deleting elements - all that will happen is that drawing will run off the end of the buffer. You can potentially shrink n_elements, but that only lets you delete things from the end of the list and will leave a bunch of leftover data at the end if you do - if this is the last data drawn that’s not a problem, and it might be OK anyway if the data usefully runs into the next strategy, but it works fairly unreliably.

I am in fact working on an improvement to how shrinking works for strategies that are defined like this - they’re quite common in user code, so they’re worth supporting - but it’s better to just have deletion of elements correspond to deletion of data in the underlying bytestream. We can do this as follows:

We now draw lists as a series True, element, True, element, …, False, etc. So if you delete the interval in the byte stream that starts with a True and finishes at the end of an element, that just deletes that element from the list and shifts everything afterwards left one space.
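A small sketch of this encoding, assuming one byte per boolean and one byte per element. The class names and the buffer-backed `BufferTestData` are hypothetical stand-ins, not the article's original code.

```python
class StopTest(Exception):
    """Raised when a draw runs off the end of the buffer."""


class BufferTestData:
    """Hypothetical stand-in for TestData, backed by a fixed byte buffer."""

    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0

    def draw_bytes(self, n):
        if self.index + n > len(self.buffer):
            raise StopTest()
        result = self.buffer[self.index:self.index + n]
        self.index += n
        return result


class ByteStrategy:
    def do_draw(self, data):
        return data.draw_bytes(1)[0]


class ListStrategy:
    def __init__(self, elements):
        self.elements = elements

    def do_draw(self, data):
        result = []
        # The low bit of one byte decides whether another element follows.
        while data.draw_bytes(1)[0] & 1:
            result.append(self.elements.do_draw(data))
        return result


lists_of_bytes = ListStrategy(ByteStrategy())
original = bytes([1, 5, 1, 7, 0])  # True, 5, True, 7, False
shorter = original[2:]             # delete the first "True, element" pair
before = lists_of_bytes.do_draw(BufferTestData(original))
after = lists_of_bytes.do_draw(BufferTestData(shorter))
print(before, after)  # [5, 7] [7]
```

Deleting the two-byte interval removes exactly one element and leaves the rest of the list intact.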

Given some careful strategy design this ends up working pretty well. It does however run into problems in two minor cases:

- It doesn’t generate very good data

- It doesn’t shrink very well

Fortunately both of these are fixable.

The reason for the lack of good data is that Conjecture doesn’t know enough to produce a good distribution of bytes for the specific special values for your strategy. e.g. in our unsigned 64 bit integer examples above it can probably guess that 0 is a special value, but it’s not necessarily obvious that e.g. focusing on small values is quite useful.

This gets worse as you move further away from things that look like unsigned integers. e.g. if you’re turning bytes into floats, how is Conjecture supposed to know that Infinity is an interesting value?

The simple solution is to allow the user to provide a distribution hint:

Where a distribution function takes a Random object and a number of bytes.

This lets users specify the distribution of bytes. It won’t necessarily be respected - e.g. it certainly isn’t in shrinking, but the fuzzer can and does mutate the values during generation too - but it provides a good starting point which allows you to highlight special values, etc.

So for example we could redefine our integer strategy as:

Now we have a biased integer distribution which will produce integers between 0 and 100 half the time.

We then use the strategies to generate our initial buffers. For example we could pass in a TestData implementation that looked like this:

This draws data from the provided distribution and records it, so at the end we have a record of all the bytes we’ve drawn so that we can replay the test afterwards.
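The distribution hint and the recording data object might look roughly like this. It is a sketch under assumptions: `uniform`, `biased_uint64`, and `GeneratingTestData` are hypothetical names, and the exact signature of the real API may differ.

```python
import random


def uniform(rnd, n):
    """Default distribution: n independent uniformly random bytes."""
    return bytes(rnd.getrandbits(8) for _ in range(n))


def biased_uint64(rnd, n):
    # Half the time, emit the big-endian encoding of an integer in [0, 100].
    if rnd.random() < 0.5:
        return rnd.randint(0, 100).to_bytes(n, "big")
    return uniform(rnd, n)


class GeneratingTestData:
    """Draws bytes from the hinted distribution and records them for replay."""

    def __init__(self, rnd):
        self.random = rnd
        self.record = bytearray()

    def draw_bytes(self, n, distribution=uniform):
        result = distribution(self.random, n)
        self.record.extend(result)
        return result


data = GeneratingTestData(random.Random(0))
values = [
    int.from_bytes(data.draw_bytes(8, biased_uint64), "big") for _ in range(200)
]
# Replaying the recorded bytes reproduces exactly the same integers.
replayed = [
    int.from_bytes(data.record[i:i + 8], "big")
    for i in range(0, len(data.record), 8)
]
small = sum(v <= 100 for v in values)
```

The recorded buffer is all that needs to be saved: the shrinker can work on it directly without consulting the distribution again.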

This turns out to be mostly enough. I’ve got some pending research to replace this API with something a bit more structured (the ideal would be that instead of opaque distribution objects you draw from an explicit mixture of grammars), but for the moment research on big changes like that is sidelined because nobody is funding Hypothesis development, so I’ve not got very far with it.

Initial designs tried to avoid this approach by using data from the byte stream to define the distribution, but this ended up producing quite opaque structures in the byte stream that didn’t shrink very well, and this turned out to be simpler.

The second problem of it not shrinking well is also fairly easily resolved: The problem is not that we can’t shrink it well, but that shrinking ends up being slow because we can’t tell what we need to do: In our lists example above, the only way we currently have to delete elements is to delete the corresponding intervals, and the only way we have to find the right intervals is to try all of them. This potentially requires O(n^2) deletions to get the right one.

The solution is just to do a bit more book keeping as we generate data to mark useful intervals. TestData now looks like this:

We then pass everything through data.draw instead of strategy.do_draw to maintain this bookkeeping.

These mark useful boundaries in the bytestream that we can try deleting: Intervals which don’t cross a value boundary are much more likely to be useful to delete.
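The interval bookkeeping can be sketched as below. The names `IntervalTestData`, `intervals`, and the small strategies are hypothetical; the only idea taken from the text is that every draw goes through `data.draw`, which records where each value starts and stops.

```python
class StopTest(Exception):
    """Raised when a draw runs off the end of the buffer."""


class IntervalTestData:
    """Buffer-backed data object that records the byte span of each draw."""

    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0
        self.intervals = []
        self._starts = []

    def draw_bytes(self, n):
        if self.index + n > len(self.buffer):
            raise StopTest()
        result = self.buffer[self.index:self.index + n]
        self.index += n
        return result

    def draw(self, strategy):
        # Remember where this value starts, then record (start, end) when done.
        self._starts.append(self.index)
        result = strategy.do_draw(self)
        self.intervals.append((self._starts.pop(), self.index))
        return result


class ByteStrategy:
    def do_draw(self, data):
        return data.draw_bytes(1)[0]


class BoolStrategy:
    def do_draw(self, data):
        return bool(data.draw_bytes(1)[0] & 1)


class ListStrategy:
    def __init__(self, elements):
        self.elements = elements

    def do_draw(self, data):
        result = []
        more = BoolStrategy()
        while data.draw(more):
            result.append(data.draw(self.elements))
        return result


data = IntervalTestData(bytes([1, 5, 1, 7, 0]))
drawn = data.draw(ListStrategy(ByteStrategy()))
print(drawn)           # [5, 7]
print(data.intervals)  # [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (0, 5)]
```

A shrinker can then try deleting spans built from these boundaries instead of guessing arbitrary byte ranges.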

There are a large number of other details that are required to make Hypothesis work: The shrinker and the strategy library are both carefully developed to work together, and this requires a fairly large number of heuristics and special cases to make things work, as well as a bunch of book keeping beyond the intervals that I’ve glossed over.

It’s not a perfect system, but it works and works well: This has been the underlying implementation of Hypothesis since the 3.0 release in early 2016, and the switch over was nearly transparent to end users: the previous implementation was much closer to a classic QuickCheck model (with a great deal of extra complexity to support the full Hypothesis feature set).

In a lot of cases it even works better than heavily customized solutions: For example, a benefit of the byte based approach is that all parts of the data are fully comprehensible to it. Often more structured shrinkers get stuck in local minima because shrinking one part of the data requires simultaneously shrinking another part of the data, whereas Hypothesis can just spot patterns in the data and speculatively shrink them together to see if it works.

The support for chaining data generation together is another thing that benefits here. In Hypothesis you can chain strategies together like this:

The idea is that flatmap lets you chain strategy definitions together by drawing data that is dependent on a value from other strategies.

This works fairly well in modern Hypothesis, but has historically (e.g. in test.check or pre 3.0 Hypothesis) been a problem for integrated testing and generation.

The reason this is normally a problem is that if you shrink the first value you’ve drawn then you essentially have to invalidate the value drawn from bind(value): There’s no real way to retain it because it came from a completely different generator. This potentially results in throwing away a lot of previous work if a shrink elsewhere suddenly makes it possible to shrink the initial value.

With the Hypothesis byte stream approach this is mostly a non-issue: As long as the new strategy has roughly the same shape as the old strategy it will just pick up where the old shrinks left off because they operate on the same underlying byte stream.
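A toy model of why this works, written against a buffer-backed data object. `FlatMapStrategy`, `FixedListStrategy`, and `BufferTestData` are hypothetical names for this sketch and are not Hypothesis's real classes.

```python
class StopTest(Exception):
    """Raised when a draw runs off the end of the buffer."""


class BufferTestData:
    def __init__(self, buffer):
        self.buffer = bytes(buffer)
        self.index = 0

    def draw_bytes(self, n):
        if self.index + n > len(self.buffer):
            raise StopTest()
        result = self.buffer[self.index:self.index + n]
        self.index += n
        return result


class ByteStrategy:
    def do_draw(self, data):
        return data.draw_bytes(1)[0]


class FixedListStrategy:
    """Draws exactly `size` elements."""

    def __init__(self, elements, size):
        self.elements = elements
        self.size = size

    def do_draw(self, data):
        return [self.elements.do_draw(data) for _ in range(self.size)]


class FlatMapStrategy:
    """Draw a value, then draw from the strategy that bind(value) returns."""

    def __init__(self, base, bind):
        self.base = base
        self.bind = bind

    def do_draw(self, data):
        value = self.base.do_draw(data)
        return self.bind(value).do_draw(data)


sized_lists = FlatMapStrategy(
    ByteStrategy(), lambda n: FixedListStrategy(ByteStrategy(), n)
)
full = sized_lists.do_draw(BufferTestData(bytes([2, 9, 8])))
# Lowering the first byte changes the bound strategy, but the remaining
# bytes are reused, so earlier shrinking work on them is not thrown away.
lowered = sized_lists.do_draw(BufferTestData(bytes([1, 9, 8])))
print(full, lowered)  # [9, 8] [9]
```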

This sort of structure does cause problems for Hypothesis if shrinking the first value would change the structure of the bound strategy too much, but in practice it usually seems to work out pretty well because there’s enough flexibility in how the shrinks happen that the shrinker can usually work past it.

This model has proven pretty powerful even in its current form, but there’s also a lot of scope to expand it.

But hopefully not by too much. One of the advantages of the model in its current form though is its simplicity. The Hypothesis for Java prototype was written in an afternoon and is pretty powerful. The whole of the Conjecture implementation in Python is a bit under a thousand significant lines of fairly portable code. Although the strategy library and testing interface are still a fair bit of work, I’m still hopeful that the Hypothesis/Conjecture approach is the tool needed to bring an end to the dark era of property based testing libraries that don’t implement shrinking at all.

How to Perform Hypothesis Testing in Python (With Examples)

A hypothesis test is a formal statistical test we use to reject or fail to reject some statistical hypothesis.

This tutorial explains how to perform the following hypothesis tests in Python:

- One sample t-test

- Two sample t-test

- Paired samples t-test

Let’s jump in!

Example 1: One Sample t-test in Python

A one sample t-test is used to test whether or not the mean of a population is equal to some value.

For example, suppose we want to know whether or not the mean weight of a certain species of turtle is equal to 310 pounds.

To test this, we go out and collect a simple random sample of turtles with the following weights:

Weights : 300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303

The following code shows how to use the ttest_1samp() function from the scipy.stats library to perform a one sample t-test:

The t test statistic is -1.5848 and the corresponding two-sided p-value is 0.1389 .
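As a cross-check on the reported statistic, the one sample t statistic can be computed by hand as t = (sample mean - hypothesized mean) / (s / sqrt(n)). The sketch below uses only the Python standard library; it does not compute the p-value, which needs the t distribution (for example via `scipy.stats.ttest_1samp(weights, popmean=310)`, which should report the same statistic).

```python
import math
import statistics

weights = [300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303]
hypothesized_mean = 310

n = len(weights)
sample_mean = statistics.mean(weights)
# statistics.stdev uses the n - 1 denominator, as the t-test requires.
standard_error = statistics.stdev(weights) / math.sqrt(n)
t_stat = (sample_mean - hypothesized_mean) / standard_error

print(round(t_stat, 4))
```

The degrees of freedom for this test are n - 1 = 12.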

The two hypotheses for this particular one sample t-test are as follows:

- H 0 : µ = 310 (the mean weight for this species of turtle is 310 pounds)

- H A : µ ≠ 310 (the mean weight is not 310 pounds)

Because the p-value of our test (0.1389) is greater than alpha = 0.05, we fail to reject the null hypothesis of the test.

We do not have sufficient evidence to say that the mean weight for this particular species of turtle is different from 310 pounds.

Example 2: Two Sample t-test in Python

A two sample t-test is used to test whether or not the means of two populations are equal.

For example, suppose we want to know whether or not the mean weight between two different species of turtles is equal.

To test this, we collect a simple random sample of turtles from each species with the following weights:

Sample 1 : 300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303

Sample 2 : 335, 329, 322, 321, 324, 319, 304, 308, 305, 311, 307, 300, 305

The following code shows how to use the ttest_ind() function from the scipy.stats library to perform this two sample t-test:

The t test statistic is -2.1009 and the corresponding two-sided p-value is 0.0463 .
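The two sample statistic can be checked by hand in the same way. This sketch assumes equal population variances (the pooled-variance form, which is also the default behaviour of `scipy.stats.ttest_ind`) and uses only the standard library, so it stops at the statistic and does not compute the p-value.

```python
import math
import statistics

sample1 = [300, 315, 320, 311, 314, 309, 300, 308, 305, 303, 305, 301, 303]
sample2 = [335, 329, 322, 321, 324, 319, 304, 308, 305, 311, 307, 300, 305]

n1, n2 = len(sample1), len(sample2)
# Pooled variance: weighted average of the two sample variances.
pooled_variance = (
    (n1 - 1) * statistics.variance(sample1)
    + (n2 - 1) * statistics.variance(sample2)
) / (n1 + n2 - 2)
standard_error = math.sqrt(pooled_variance * (1 / n1 + 1 / n2))
t_stat = (statistics.mean(sample1) - statistics.mean(sample2)) / standard_error

print(round(t_stat, 4))
```

The degrees of freedom here are n1 + n2 - 2 = 24.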

The two hypotheses for this particular two sample t-test are as follows:

- H 0 : µ 1 = µ 2 (the mean weight between the two species is equal)

- H A : µ 1 ≠ µ 2 (the mean weight between the two species is not equal)

Since the p-value of the test (0.0463) is less than .05, we reject the null hypothesis.

This means we have sufficient evidence to say that the mean weight between the two species is not equal.

Example 3: Paired Samples t-test in Python

A paired samples t-test is used to compare the means of two samples when each observation in one sample can be paired with an observation in the other sample.

For example, suppose we want to know whether or not a certain training program is able to increase the max vertical jump (in inches) of basketball players.

To test this, we may recruit a simple random sample of 12 college basketball players and measure each of their max vertical jumps. Then, we may have each player use the training program for one month and then measure their max vertical jump again at the end of the month.

The following data shows the max jump height (in inches) before and after using the training program for each player:

Before : 22, 24, 20, 19, 19, 20, 22, 25, 24, 23, 22, 21

After : 23, 25, 20, 24, 18, 22, 23, 28, 24, 25, 24, 20

The following code shows how to use the ttest_rel() function from the scipy.stats library to perform this paired samples t-test:

The t test statistic is -2.5289 and the corresponding two-sided p-value is 0.0280 .
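A paired samples t-test is a one sample t-test on the per-player differences, tested against a mean difference of zero. The standard-library sketch below reproduces the statistic on that basis; it does not compute the p-value.

```python
import math
import statistics

before = [22, 24, 20, 19, 19, 20, 22, 25, 24, 23, 22, 21]
after = [23, 25, 20, 24, 18, 22, 23, 28, 24, 25, 24, 20]

# One difference per player, in the same order as the call ttest_rel(before, after).
differences = [b - a for b, a in zip(before, after)]
n = len(differences)
standard_error = statistics.stdev(differences) / math.sqrt(n)
t_stat = statistics.mean(differences) / standard_error

print(round(t_stat, 4))
```

The negative sign reflects that jump heights were, on average, higher after the program than before.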

The two hypotheses for this particular paired samples t-test are as follows:

- H 0 : µ 1 = µ 2 (the mean jump height before and after using the program is equal)

- H A : µ 1 ≠ µ 2 (the mean jump height before and after using the program is not equal)

Since the p-value of the test (0.0280) is less than .05, we reject the null hypothesis.

This means we have sufficient evidence to say that the mean jump height before and after using the training program is not equal.

Additional Resources

You can use the following online calculators to automatically perform various t-tests:

- One Sample t-test Calculator

- Two Sample t-test Calculator

- Paired Samples t-test Calculator


Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

One Reply to “How to Perform Hypothesis Testing in Python (With Examples)”

Nice post. Could you please clear my one doubt regarding alpha value . i can see in your example, it is a two tail test. As i understand in that case our alpha value should be alpha/2 i.e 0.025 . Here you are taking it as 0.05. ?


What Is Hypothesis Testing? Types and Python Code Example

Curiosity has always been a part of human nature. Since the beginning of time, this has been one of the most important tools for birthing civilizations. Still, our curiosity grows — it tests and expands our limits. Humanity has explored the plains of land, water, and air. We've built underwater habitats where we could live for weeks. Our civilization has explored various planets. We've explored land to an unlimited degree.

These things were possible because humans asked questions and searched until they found answers. However, for us to get these answers, a proven method must be used and followed through to validate our results. Historically, philosophers assumed the earth was flat and you would fall off when you reached the edge. While philosophers like Aristotle argued that the earth was spherical based on the formation of the stars, they could not prove it at the time.

This is because they didn't have adequate resources to explore space or mathematically prove Earth's shape. It was a Greek mathematician named Eratosthenes who calculated the earth's circumference with incredible precision. He used scientific methods to show that the Earth was not flat. Since then, other methods have been used to prove the Earth's spherical shape.

When there are questions or statements that are yet to be tested and confirmed based on some scientific method, they are called hypotheses. Basically, we have two types of hypotheses: null and alternate.

A null hypothesis is one's default belief or argument about a subject matter. In the case of the earth's shape, the null hypothesis was that the earth was flat.

An alternate hypothesis is a belief or argument a person might try to establish. Aristotle and Eratosthenes argued that the earth was spherical.

Other examples of a random alternate hypothesis include:

- The weather may have an impact on a person's mood.

- More people wear suits on Mondays compared to other days of the week.

- Children are more likely to be brilliant if both parents are in academia, and so on.

What is Hypothesis Testing?

Hypothesis testing is the act of testing whether a hypothesis or inference is true. When an alternate hypothesis is introduced, we test it against the null hypothesis to know which is correct. Let's use a plant experiment by a 12-year-old student to see how this works.

The hypothesis is that a plant will grow taller when given a certain type of fertilizer. The student takes two samples of the same plant, fertilizes one, and leaves the other unfertilized. He measures the plants' height every few days and records the results in a table.

After a week or two, he compares the final height of both plants to see which grew taller. If the plant given fertilizer grew taller, the hypothesis is supported. If not, the hypothesis is not supported. This simple experiment shows how to form a hypothesis, test it experimentally, and analyze the results.

In hypothesis testing, there are two types of error: Type I and Type II.

When we reject the null hypothesis in a case where it is correct, we've committed a Type I error. Type II errors occur when we fail to reject the null hypothesis when it is incorrect.

In our plant experiment above, if the student finds out that both plants' heights are the same at the end of the test period yet opines that fertilizer helps with plant growth, he has committed a Type I error.

However, if the fertilized plant comes out taller and the student records that both plants are the same or that the one without fertilizer grew taller, he has committed a Type II error because he has failed to reject the null hypothesis.

What are the Steps in Hypothesis Testing?

The following steps explain how we can test a hypothesis:

Step #1 - Define the Null and Alternative Hypotheses

Before making any test, we must first define what we are testing and what the default assumption is about the subject. In this article, we'll be testing if the average weight of 10-year-old children is more than 32kg.

Our null hypothesis is that 10-year-old children weigh 32 kg on average. Our alternate hypothesis is that the average weight is more than 32 kg. H0 denotes a null hypothesis, while H1 denotes an alternate hypothesis.

Step #2 - Choose a Significance Level

The significance level (often written as alpha) is the threshold we use to decide whether a result counts as statistically significant. It gives credibility to our hypothesis test by ensuring we are not just relying on luck but have enough evidence to support our claims. We set the significance level before conducting the test. The quantity we compare against it is the p-value, which the test computes from the data.

A lower p-value means that there is stronger evidence against the null hypothesis, and therefore a greater degree of significance. A significance level of 0.05 is widely accepted in most fields of science. A p-value is not the probability that the null hypothesis is true; it is the probability of seeing a result at least as extreme as ours if the null hypothesis were true. For our test, the significance level will be 0.05.

Step #3 - Collect Data and Calculate a Test Statistic

You can obtain your data from online data stores or conduct your research directly. Data can be scraped or researched online. The methodology might depend on the research you are trying to conduct.

We can calculate our test using any of the appropriate hypothesis tests. This can be a T-test, Z-test, Chi-squared, and so on. There are several hypothesis tests, each suiting different purposes and research questions. In this article, we'll use the T-test to run our hypothesis, but I'll explain the Z-test, and chi-squared too.

T-test is used for comparison of two sets of data when we don't know the population standard deviation. It's a parametric test, meaning it makes assumptions about the distribution of the data. These assumptions include that the data is normally distributed and that the variances of the two groups are equal. In a more simple and practical sense, imagine that we have test scores in a class for males and females, but we don't know how different or similar these scores are. We can use a t-test to see if there's a real difference.

The Z-test is used for comparison between two sets of data when the population standard deviation is known. It is also a parametric test, but it makes fewer assumptions about the distribution of data. The z-test assumes that the data is normally distributed, but it does not assume that the variances of the two groups are equal. Returning to our class test example: if we already know how spread out the scores are in both groups, we can use the z-test instead of the t-test to see if there's a difference in the average scores.

The Chi-squared test is used to compare two or more categorical variables. The chi-squared test is a non-parametric test, meaning it does not make any assumptions about the distribution of data. It can be used to test a variety of hypotheses, including whether two or more groups have equal proportions.

Step #4 - Decide on the Null Hypothesis Based on the Test Statistic and Significance Level

After conducting our test and calculating the test statistic, we obtain its p-value and compare that to the predetermined significance level. If the p-value is below the significance level, we reject the null hypothesis, indicating that there is sufficient evidence to support our alternative hypothesis.

Conversely, if the p-value is not below the significance level, we fail to reject the null hypothesis, signifying that we do not have enough statistical evidence to conclude in favor of the alternative hypothesis.
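In code, this decision is usually expressed by comparing the p-value with the significance level. A minimal sketch, where `decide` is a hypothetical helper name and the two p-values are illustrative inputs:

```python
def decide(p_value, alpha=0.05):
    """Return the decision for a test with the given p-value and significance level."""
    if p_value < alpha:
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"


print(decide(0.0463))  # reject the null hypothesis
print(decide(0.1389))  # fail to reject the null hypothesis
```

Note that "fail to reject" is not the same as accepting the null hypothesis: it only means the data did not provide enough evidence against it.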

Step #5 - Interpret the Results

Depending on the decision made in the previous step, we can interpret the result in the context of our study and the practical implications. For our case study, we can interpret whether we have significant evidence to support our claim that the average weight of 10 year old children is more than 32kg or not.

For our test, we are generating random dummy data for the weight of the children. We'll use a t-test to evaluate whether our hypothesis is correct or not.

For a better understanding, let's look at what each block of code does.

The first block is the import statement, where we import numpy and scipy.stats . Numpy is a Python library used for scientific computing. It has a large library of functions for working with arrays. Scipy is a library for mathematical functions. It has a stats module for performing statistical functions, and that's what we'll be using for our t-test.

The weights of the children were generated at random since we aren't working with an actual dataset. The random module within the Numpy library provides a function for generating random numbers, which is randint .

The randint function takes three arguments. The first (20) is the lower bound of the random numbers to be generated. The second (40) is the upper bound, which NumPy treats as exclusive, and the third (100) specifies the number of random integers to generate. That is, we are generating random weight values for 100 children. In real circumstances, these weight samples would have been obtained by taking the weight of the required number of children needed for the test.

Using the code above, we declared our null and alternate hypotheses stating the average weight of a 10-year-old in both cases.

t_stat and p_value are the variables in which we'll store the results of our functions. stats.ttest_1samp is the function that calculates our test. It takes in two variables, the first is the data variable that stores the array of weights for children, and the second (32) is the value against which we'll test the mean of our array of weights or dataset in cases where we are using a real-world dataset.

The code above prints both values for t_stat and p_value .

Lastly, we evaluated our p_value against our significance value, which is 0.05. If our p_value is less than 0.05, we reject the null hypothesis. Otherwise, we fail to reject the null hypothesis. Below is the output of this program. Our null hypothesis was rejected.

In this article, we discussed the importance of hypothesis testing. We highlighted how science has advanced human knowledge and civilization through formulating and testing hypotheses.

We discussed Type I and Type II errors in hypothesis testing and how they underscore the importance of careful consideration and analysis in scientific inquiry. It reinforces the idea that conclusions should be drawn based on thorough statistical analysis rather than assumptions or biases.

We also generated a sample dataset using the relevant Python libraries and used the needed functions to calculate and test our alternate hypothesis.

Thank you for reading! Please follow me on LinkedIn where I also post more data related content.


anaconda / packages / hypothesis 6.100.1 0

A library for property based testing

- License: MPL-2.0

- Home: https://hypothesis.works/

- Development: https://github.com/HypothesisWorks/hypothesis-python

- Documentation: https://hypothesis.readthedocs.io/

- 47886 total downloads

- Last upload: 2 months and 22 days ago

- linux-64 v6.100.1

- linux-aarch64 v6.100.1

- linux-s390x v6.100.1

- osx-64 v6.100.1

- osx-arm64 v6.100.1

- win-64 v6.100.1

- noarch v6.29.3

- linux-32 v4.32.2

- linux-ppc64le v6.82.0

- win-32 v4.54.2

conda install

Description.

Hypothesis is an advanced testing library for Python. It lets you write tests which are parametrized by a source of examples, and then generates simple and comprehensible examples that make your tests fail. This lets you find more bugs in your code with less work.



Some more examples

This is a collection of examples of how to use Hypothesis in interesting ways. It’s small for now but will grow over time.

All of these examples are designed to be run under pytest , and nose should work too.

How not to sort by a partial order

The following is an example that’s been extracted and simplified from a real bug that occurred in an earlier version of Hypothesis. The real bug was a lot harder to find.

Suppose we’ve got the following type:

Each node is a label and a sequence of some data, and we have the relationship sorts_before meaning the data of the left is an initial segment of the right. So e.g. a node with value [1, 2] will sort before a node with value [1, 2, 3] , but neither of [1, 2] nor [1, 3] will sort before the other.

We have a list of nodes, and we want to topologically sort them with respect to this ordering. That is, we want to arrange the list so that if x.sorts_before(y) then x appears earlier in the list than y. We naively think that the easiest way to do this is to extend the partial order defined here to a total order by breaking ties arbitrarily and then using a normal sorting algorithm. So we define the following code:

This takes the order defined by sorts_before and extends it by breaking ties by comparing the node labels.

But now we want to test that it works.

First we write a function to verify that our desired outcome holds:

This will return false if it ever finds a pair in the wrong order and return true otherwise.

Given this function, what we want to do with Hypothesis is assert that for all sequences of nodes, the result of calling sort_nodes on it is sorted.

First we need to define a strategy for Node:

We want to generate short lists of values so that there’s a decent chance of one being a prefix of the other (this is also why the choice of bool as the elements). We then define a strategy which builds a node out of an integer and one of those short lists of booleans.

We can now write a test:

This immediately fails with the following example:

The reason for this is that, because False is not a prefix of (True, True) nor vice versa, the first two nodes compare as equal when sorting because they have equal labels. This makes the whole order non-transitive and produces basically nonsense results.

But this is pretty unsatisfying. It only works because they have the same label. Perhaps we actually wanted our labels to be unique. Let’s change the test to do that.

We define a function to deduplicate nodes by labels, and can now map that over a strategy for lists of nodes to give us a strategy for lists of nodes with unique labels:

Hypothesis quickly gives us an example of this still being wrong:

Now this is a more interesting example. None of the nodes will sort equal. What is happening here is that the first node is strictly less than the last node because (False,) is a prefix of (False, False). This is in turn strictly less than the middle node because neither is a prefix of the other and -2 < -1. The middle node is then less than the first node because -1 < 0.
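The cycle described above can be checked directly. The sketch below reconstructs `Node` and the tie-breaking comparison from the prose, so the names are assumptions rather than the original listing; the middle node's value `(True,)` is also an assumption, chosen only so that it is not prefix-related to the other two.

```python
class Node:
    def __init__(self, label, value):
        self.label = label
        self.value = tuple(value)

    def sorts_before(self, other):
        # True when self.value is a strict initial segment of other.value.
        if len(self.value) >= len(other.value):
            return False
        return other.value[:len(self.value)] == self.value


def less_than(x, y):
    """Prefix order first, then ties broken by comparing labels."""
    if x.sorts_before(y):
        return True
    if y.sorts_before(x):
        return False
    return x.label < y.label


first = Node(0, (False,))
middle = Node(-1, (True,))          # assumed value, unrelated to the others
last = Node(-2, (False, False))

# first < last < middle < first: the extended "order" contains a cycle.
cycle = (
    less_than(first, last),
    less_than(last, middle),
    less_than(middle, first),
)
print(cycle)  # (True, True, True)
```

Since all three comparisons hold at once, no ordering of these nodes can satisfy the comparison, and a general-purpose sort is free to return any arrangement.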

So, convinced that our implementation is broken, we write a better one:

This is just insertion sort slightly modified - we swap a node backwards until swapping it further would violate the order constraints. The reason this works is because our order is a partial order already (this wouldn’t produce a valid result for a general topological sorting - you need the transitivity).
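A sketch of that modified insertion sort, together with the checker described earlier. As before, the class and function bodies are reconstructed from the prose and should be read as an illustration, not as the original code.

```python
class Node:
    def __init__(self, label, value):
        self.label = label
        self.value = tuple(value)

    def sorts_before(self, other):
        # True when self.value is a strict initial segment of other.value.
        if len(self.value) >= len(other.value):
            return False
        return other.value[:len(self.value)] == self.value


def sort_nodes(xs):
    """Insertion sort that only stops at a node which must stay in front."""
    for i in range(1, len(xs)):
        j = i - 1
        while j >= 0:
            if xs[j].sorts_before(xs[j + 1]):
                break
            xs[j], xs[j + 1] = xs[j + 1], xs[j]
            j -= 1


def is_prefix_sorted(xs):
    """False if any later node should have sorted before an earlier one."""
    for i in range(len(xs)):
        for j in range(i + 1, len(xs)):
            if xs[j].sorts_before(xs[i]):
                return False
    return True


nodes = [Node(0, (False,)), Node(-1, (True,)), Node(-2, (False, False))]
sort_nodes(nodes)
values = [node.value for node in nodes]
print(values)  # [(True,), (False,), (False, False)]
```

Labels play no part in this version, which is why the cycle from the previous attempt cannot arise.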

We now run our test again and it passes, telling us that this time we’ve successfully managed to sort some nodes without getting it completely wrong. Go us.

Time zone arithmetic

This is an example of some tests for pytz which check that various timezone conversions behave as you would expect them to. These tests should all pass, and are mostly a demonstration of some useful sorts of thing to test with Hypothesis, and how the datetimes() strategy works.

Condorcet’s paradox

A classic paradox in voting theory, called Condorcet’s paradox, is that majority preferences are not transitive. That is, there is a population and a set of three candidates A, B and C such that the majority of the population prefer A to B, B to C and C to A.

Wouldn’t it be neat if we could use Hypothesis to provide an example of this?

Well as you can probably guess from the presence of this section, we can! The main trick is to decide how we want to represent the result of an election - for this example, we’ll use a list of “votes”, where each vote is a list of candidates in the voter’s preferred order. Without further ado, here is the code:

The example Hypothesis gives me on my first run (your mileage may of course vary) is:

Which does indeed do the job: The majority (votes 0 and 1) prefer B to C, the majority (votes 0 and 2) prefer A to B and the majority (votes 1 and 2) prefer C to A. This is in fact basically the canonical example of the voting paradox.
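The check behind this example can be sketched without Hypothesis. The function name `find_majority_cycle` is hypothetical; in a Hypothesis test one would generate elections from a strategy, assert that no such cycle exists, and let Hypothesis report a counterexample. The election below is the one described in the paragraph above.

```python
from collections import Counter
from itertools import permutations


def find_majority_cycle(election):
    """Return (a, b, c) where a majority prefers a to b, b to c and c to a,
    or None if the majority preferences contain no such cycle.

    `election` is a list of votes; each vote lists every candidate once,
    most preferred first.
    """
    prefers = Counter()
    for vote in election:
        for i, winner in enumerate(vote):
            for loser in vote[i + 1:]:
                prefers[winner, loser] += 1

    def majority(a, b):
        return prefers[a, b] > len(election) / 2

    candidates = sorted({c for vote in election for c in vote})
    for a, b, c in permutations(candidates, 3):
        if majority(a, b) and majority(b, c) and majority(c, a):
            return (a, b, c)
    return None


# Vote 0 ranks A > B > C, vote 1 ranks B > C > A, vote 2 ranks C > A > B.
election = [["A", "B", "C"], ["B", "C", "A"], ["C", "A", "B"]]
cycle = find_majority_cycle(election)
unanimous = find_majority_cycle([["A", "B", "C"]] * 3)
print(cycle, unanimous)
```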

Fuzzing an HTTP API

Hypothesis’s support for testing HTTP services is somewhat nascent. There are plans for some fully featured things around this, but right now they’re probably quite far down the line.

But you can do a lot yourself without any explicit support! Here’s a script I wrote to throw arbitrary data against the API for an entirely fictitious service called Waspfinder (this is only lightly obfuscated and you can easily figure out who I’m actually talking about, but I don’t want you to run this code and hammer their API without their permission).

All this does is use Hypothesis to generate arbitrary JSON data matching the format their API asks for and check for 500 errors. More advanced tests which then use the result and go on to do other things are definitely also possible. The schemathesis package provides an excellent example of this!


How To Use Python To Test SEO Theories (And Why You Should)

Learn how to test your SEO theories using Python. Discover the steps required to pre-test search engine rank factors and validate implementation sitewide.

When working on sites with traffic, there is as much to lose as there is to gain from implementing SEO recommendations.

The downside risk of an SEO implementation gone wrong can be mitigated using machine learning models to pre-test search engine rank factors.

Pre-testing aside, split testing is the most reliable way to validate SEO theories before making the call to roll out the implementation sitewide or not.

We will go through the steps required to test your SEO theories with Python.

Choose Rank Positions

One of the challenges of testing SEO theories is the large sample sizes required to make the test conclusions statistically valid.

Split tests – popularized by Will Critchlow of SearchPilot – favor traffic-based metrics such as clicks, which is fine if your company is enterprise-level or has copious traffic.

If your site doesn’t have that enviable luxury, then traffic as an outcome metric is likely to be a relatively rare event, which means your experiments will take too long to run and test.

Instead, consider rank positions. Small- to mid-size companies looking to grow often have pages that rank for target keywords, just not high enough to get traffic.

Over the timeframe of your test, each unit of time (for example, a day, week or month) is likely to yield multiple rank position data points across multiple keywords. A traffic metric is likely to yield far less data per page per date, so using rank position shortens the time needed to reach a minimum sample size.

Thus, rank position suits non-enterprise-sized clients looking to conduct SEO split tests, who can then attain insights much faster.

Google Search Console Is Your Friend

Deciding to use rank positions in Google makes the choice of data source straightforward, and conveniently low-cost: Google Search Console (GSC), assuming it’s set up.

GSC is a good fit here because it has an API that allows you to extract thousands of data points over time and filter for URL strings.

While the data may not be the gospel truth, it will at least be consistent, which is good enough.

Filling In Missing Data

GSC only reports rows for the dates on which a URL generated impressions, so you’ll need to create rows for the missing dates and fill in the missing data.

The Python approach combines merge() (think of the VLOOKUP function in Excel), which adds the missing data rows per URL, with filling in the values you want imputed for the missing dates on those URLs.

For traffic metrics, that’ll be zero, whereas for rank positions, that’ll be either the median (if you’re going to assume the URL was ranking when no impressions were generated) or 100 (to assume it wasn’t ranking).
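A minimal sketch of this step with pandas, assuming a hypothetical GSC export with `date`, `url`, `clicks` and `position` columns (the column names and sample values are illustrative, not taken from the original code). It builds every date and URL combination, left-merges the observed rows onto it, then imputes the gaps using both options described above.

```python
import pandas as pd

# Hypothetical GSC export: rows exist only for dates with impressions.
gsc = pd.DataFrame(
    {
        "date": pd.to_datetime(["2024-01-01", "2024-01-03", "2024-01-02"]),
        "url": ["/a", "/a", "/b"],
        "clicks": [3, 1, 2],
        "position": [8.0, 12.0, 25.0],
    }
)

# Build every (date, url) combination for the test window.
dates = pd.date_range(gsc["date"].min(), gsc["date"].max(), freq="D")
grid = pd.MultiIndex.from_product(
    [dates, gsc["url"].unique()], names=["date", "url"]
).to_frame(index=False)

# Left-merge the observed rows onto the grid; unobserved rows become NaN.
filled = grid.merge(gsc, on=["date", "url"], how="left")

# Traffic metrics: no row means no clicks.
filled["clicks"] = filled["clicks"].fillna(0)

# Rank position, option 1: assume the URL was ranking, use its median.
url_median = filled.groupby("url")["position"].transform("median")
filled["position_if_ranking"] = filled["position"].fillna(url_median)

# Rank position, option 2: assume the URL was not ranking.
filled["position_if_not_ranking"] = filled["position"].fillna(100)

print(filled)
```

Which imputation to use depends on the assumption you are willing to make about days without impressions; the two columns are kept side by side here only for comparison.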

The code is given here.

Check The Distribution And Select Model

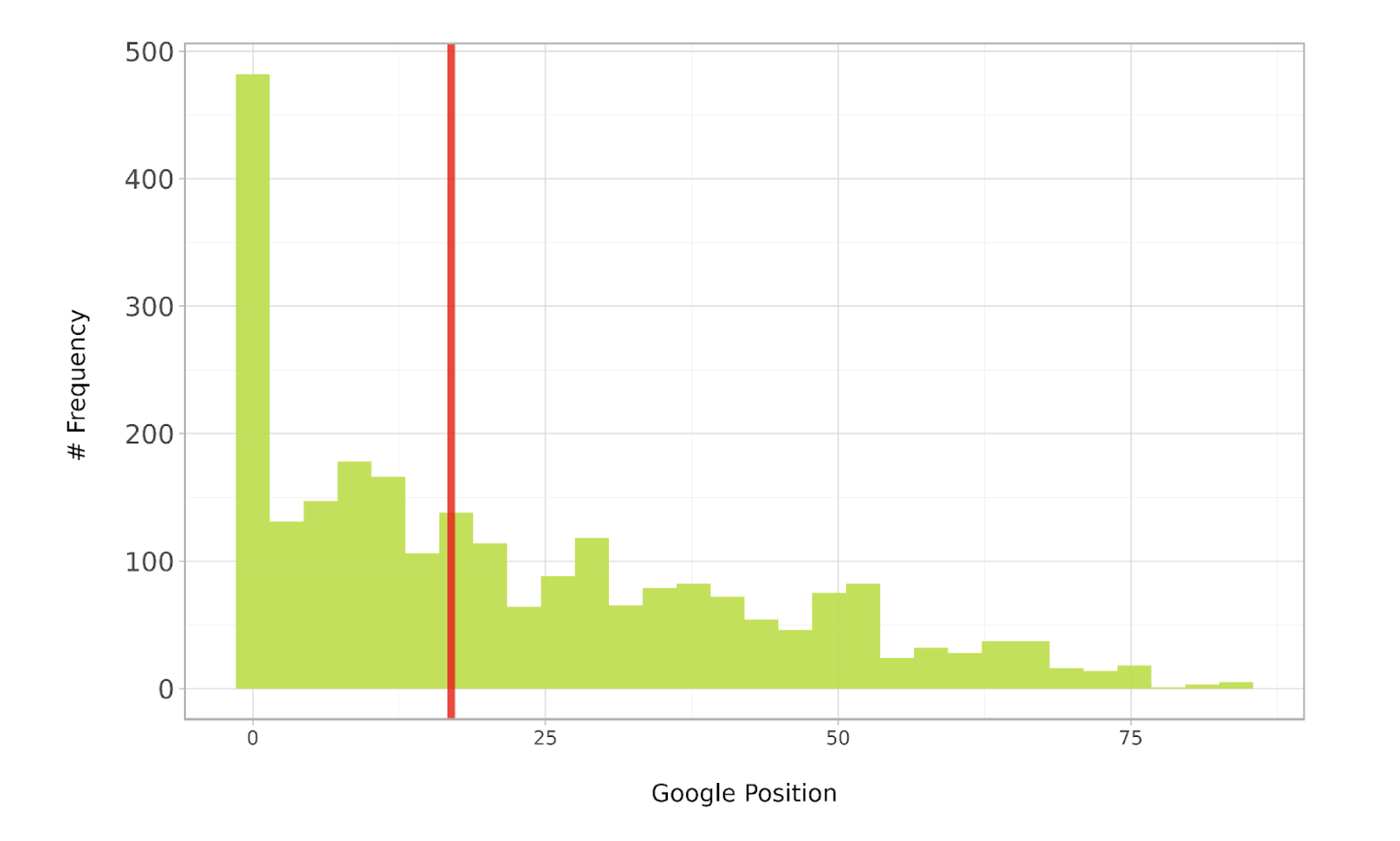

The distribution of any data describes its nature: where the most common value (mode) of a given metric, in our case rank position, lies for a given sample population.

The distribution will also tell us how close the rest of the data points are to the middle (mean or median), i.e., how spread out (or distributed) the rank positions are in the dataset.

This is critical as it will affect the choice of model when evaluating your SEO theory test.

Using Python, this can be done both visually and analytically; visually by executing this code:

The chart above shows that the distribution is positively skewed (think skewer pointing right), meaning most of the keywords rank in the higher-ranked positions (shown towards the left of the red median line). To run this code, make sure to install the required libraries with the command pip install pandas plotnine.

Now, we know which test statistic to use to discern whether the SEO theory is worth pursuing. In this case, there is a selection of models appropriate for this type of distribution.
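The analytical side of this check can be sketched with pandas alone. The rank positions below are made-up illustrative values, not data from the article; the sketch compares mode, median and mean and computes the sample skewness.

```python
import pandas as pd

# Hypothetical rank positions for a set of keywords.
positions = pd.Series([1, 2, 2, 3, 3, 3, 4, 5, 6, 8, 12, 20, 35, 60, 95])

summary = {
    "mode": positions.mode().iloc[0],
    "median": positions.median(),
    "mean": positions.mean(),
    "skewness": positions.skew(),
}

# A long right tail pulls the mean above the median and gives a
# positive skewness coefficient.
positively_skewed = summary["skewness"] > 0 and summary["mean"] > summary["median"]
print(summary, positively_skewed)
```

A clearly skewed result like this one is a reason to prefer a test that does not assume normally distributed data.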

Minimum Sample Size

The selected model can also be used to determine the minimum sample size required.

The required minimum sample size ensures that any observed differences between groups (if any) are real and not random luck.

That is, the difference as a result of your SEO experiment or hypothesis is statistically significant, and the probability of the test correctly reporting the difference is high (known as power).

This would be achieved by simulating a number of random distributions fitting the above pattern for both test and control and taking tests.
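One way to sketch such a simulation uses NumPy and SciPy. The log-normal shape, its parameters, the `shift` effect size and the function name `simulate_power` are all assumptions chosen for illustration; the original code may model the distribution and report its results differently.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def simulate_power(sample_size, shift, runs=200, alpha=0.05, seed=0):
    """Share of simulated experiments in which the test reaches significance."""
    rng = np.random.default_rng(seed)
    significant = 0
    for _ in range(runs):
        # Assumed shape: right-skewed rank positions clipped to 1..100.
        control = np.clip(rng.lognormal(2.5, 0.8, sample_size), 1, 100)
        # The test group improves (moves up) by `shift` positions.
        test = np.clip(rng.lognormal(2.5, 0.8, sample_size) - shift, 1, 100)
        _, p_value = mannwhitneyu(test, control, alternative="two-sided")
        significant += int(p_value < alpha)
    return significant / runs


power_with_effect = simulate_power(sample_size=500, shift=3.0)
power_without_effect = simulate_power(sample_size=500, shift=0.0)

# Scan candidate sample sizes to see where the share stabilizes.
for n in (50, 200, 500):
    print(n, simulate_power(n, shift=1.0))
```

With no real effect (`shift=0.0`) the share of significant runs should stay near `alpha`, which is a useful sanity check on the simulation itself.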

When running the code, we see the following:

To break it down, the numbers represent the following using the example below:

39.333 (first value in the tuple): proportion of simulation runs or experiments in which significance will be reached, i.e., consistency of reaching significance and robustness.

1.0 (second value in the tuple): statistical power, the probability the test correctly rejects the null hypothesis, i.e., the experiment is designed in such a way that a difference will be correctly detected at this sample size level.

60000: sample size

The above is interesting and potentially confusing to non-statisticians. On the one hand, it suggests that we’ll need 230,000 data points (made of rank data points during a time period) to have a 92% chance of observing SEO experiments that reach statistical significance. Yet, on the other hand with 10,000 data points, we’ll reach statistical significance – so, what should we do?

Experience has taught me that you can reach significance prematurely, so you’ll want to aim for a sample size that’s likely to hold at least 90% of the time – 220,000 data points are what we’ll need.

This is a really important point: of the enterprise SEO teams I have trained, all complained of running conclusive tests that didn’t produce the desired results once the winning test changes were rolled out.

Hence, the above process will avoid all that heartache, wasted time, resources and injured credibility from not knowing the minimum sample size and stopping tests too early.

Assign And Implement

With that in mind, we can now start assigning URLs between test and control to test our SEO theory.

In Python, we’d use the np.where() function (think advanced IF function in Excel), where we have several options to partition our subjects: by URL string pattern, content type, keywords in the title, or another attribute, depending on the SEO theory you’re looking to validate.
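A short sketch of the assignment step, assuming a hypothetical list of URLs and a hypothetical rule that pages under `/blog/` form the test group:

```python
import numpy as np
import pandas as pd

# Hypothetical URLs; in practice these would come from the GSC data.
urls = pd.DataFrame(
    {"url": ["/blog/a", "/blog/b", "/shop/x", "/shop/y", "/about"]}
)

# Assign each URL to a group based on a string pattern in the URL.
is_test = urls["url"].str.contains("/blog/", regex=False)
urls["group"] = np.where(is_test, "test", "control")

print(urls)
```

The same pattern works for any boolean condition, for example one built from content type or from keywords in the title.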

Use the Python code given here.

Strictly speaking, you would run this to collect data going forward as part of a new experiment. But you could test your theory retrospectively, assuming that there were no other changes that could interact with the hypothesis and change the validity of the test.

Something to keep in mind, as that’s a bit of an assumption!

Once the data has been collected, or you’re confident you have the historical data, then you’re ready to run the test.

In our rank position case, we will likely use a model like the Mann-Whitney test, because rank positions are skewed rather than normally distributed.

However, if you’re using another metric, such as clicks, which is Poisson-distributed, for example, then you’ll need another statistical model entirely.

The code to run the test is given here.
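For the rank position case, a Mann-Whitney test can be sketched with SciPy as follows. The two samples are made-up values for illustration, and the one-sided alternative assumes the theory predicts better (lower) positions for the test group.

```python
from scipy.stats import mannwhitneyu

# Hypothetical rank positions collected over the test window.
control = [14, 22, 9, 31, 18, 27, 45, 12, 60, 25]
test = [8, 11, 5, 19, 7, 15, 23, 6, 30, 10]

# One-sided alternative: test-group positions tend to be lower (better).
result = mannwhitneyu(test, control, alternative="less")
is_significant = result.pvalue < 0.05

print(result.statistic, result.pvalue, is_significant)
```

Use `alternative="two-sided"` instead if the theory makes no prediction about the direction of the change.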