
13.7 Cosmos & Culture

What makes science science.

Tania Lombrozo

In a post published last week, Adam Frank argued for the importance of public facts, and of science as a method for ascertaining them.

He emphasized the role of agreement in establishing public facts, and verifiable evidence as the crucial ingredient that makes agreement possible.

Today, I want to consider two additional aspects of science as a method for ascertaining public facts — that is, the facts that we should all accept together. The first is that scientific conclusions can change. And the second is that scientific methods can change.

Far from undercutting the value of public facts, understanding how and why these changes occur reveals why science is our best bet for getting the facts right.

First, the body of scientific knowledge is continually evolving. Scientists don't simply add more facts to our scientific repository; they question new evidence as it comes in, and they repeatedly reexamine prior conclusions. That means that the body of scientific knowledge isn't just growing, it's also changing.

At first glance, this change can be unsettling. How can we trust science if scientific conclusions are continually subject to change?

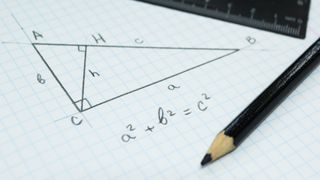

The key is that scientific conclusions don't change on a whim. They change in response to new evidence, new analyses and new arguments: the sorts of things we can publicly agree (or disagree) about, that we can evaluate together. And scientific conclusions are almost always based on induction, not deduction. That is, science involves drawing inferences from premises to conclusions, where the premises can affect the probability of a conclusion but don't establish it with certainty.

When you put these pieces together, the alternative to an evolving body of scientific knowledge is a non-starter. To embrace a static body of scientific knowledge is to reject the potential relevance of new information. It's a commitment to the idea that a conclusion based on all the evidence available is no better than a conclusion based on the subset of evidence we happened to obtain first. If a changing body of scientific knowledge is unsettling, this alternative is untenable.

A second feature of science is that scientific methods are continually evolving. Many of us learned "the" scientific method in grade school, a step-by-step procedure for doing science. But this recipe-book approach to science is oversimplified and misleading. Scientists employ a variety of methods, and these methods are refined as we learn more. New technologies, like telescopes or brain imaging devices, allow us to ask new questions in new ways. Equally important, strategies for analyzing data and drawing conclusions change as well. Statistical methods improve, as do experimental designs. The randomized controlled trial is a scientific innovation: a way to draw better conclusions about cause and effect. A double-blind experiment is a scientific innovation: a way to prevent subtle psychological processes from influencing the results.

What drives this methodological innovation? And what makes the outcome a set of methods we should trust?

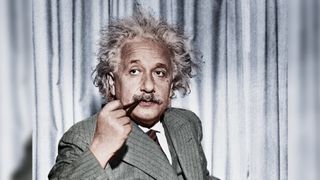

In an undergraduate course that I'm teaching this semester, we introduce students to an unconventional definition of science. The course, Letters & Sciences 22: Sense and Sensibility and Science, comes from an interdisciplinary collaboration between a philosopher (John Campbell), a social psychologist (Robert MacCoun), a Nobel Prize-winning physicist (Saul Perlmutter), and a cognitive scientist (me).

On the first day of class, Prof. Perlmutter defines science as a collection of heuristic tricks that are constantly being invented to side-step our mental weaknesses and play to our strengths. On this view, science isn't a recipe, it's a warning. The warning is this: We are fallible.

But by recognizing our fallibility, we can do better. Once we learn that placebo effects can occur, we design drug trials to compare drugs against placebos. Once we learn that repeated statistical significance testing can inflate the probability of a false positive, we build in corrective measures. And we shouldn't wait for these lessons to fortuitously come along; we should vigorously seek them out. A common theme in the course, concludes Perlmutter, is that science is about actively hunting for where we are wrong, for where we are fooling ourselves.
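
To make the false-positive point concrete, here is a minimal Python sketch. It rests on a simplifying assumption: "repeated testing" is modeled as m independent tests, each of a null hypothesis that is actually true, and each run at significance level alpha. Peeking repeatedly at accumulating data is a related case that needs different math. Under that assumption, the chance of at least one false positive is 1 - (1 - alpha)^m. The Bonferroni correction is one common corrective measure among several: it tests each hypothesis at alpha / m. The function names are illustrative.

```python
def family_wise_error_rate(alpha: float, m: int) -> float:
    """Probability of at least one false positive across m independent
    tests of true null hypotheses, each run at significance level alpha."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-test significance level that keeps the family-wise error
    rate at or below alpha (the Bonferroni correction)."""
    return alpha / m


if __name__ == "__main__":
    alpha = 0.05
    for m in (1, 5, 20):
        uncorrected = family_wise_error_rate(alpha, m)
        corrected = family_wise_error_rate(bonferroni_threshold(alpha, m), m)
        print(f"m={m:2d}  uncorrected={uncorrected:.3f}  bonferroni={corrected:.3f}")
```

With 20 tests, the uncorrected chance of at least one false positive is about 64 percent. The Bonferroni-corrected version stays just under 5 percent.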

Scientific methods thus evolve alongside scientific conclusions, and the engine that drives this change is remarkably simple. In an essay published earlier this month at Edge.org, I argue that science is powerful because it involves the systematic evaluation of alternatives. To determine which evidence is worth pursuing, we consider which alternatives are plausible, and we seek out evidence that will discriminate between them. As we encounter new evidence or new arguments, we evaluate the possibility that alternative conclusions are now better supported, and alternative methods better guides to the truth.
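
One standard way to formalize the evaluation of alternatives is Bayesian updating. It is offered here as an illustration, not as the argument the Edge.org essay makes. In the Python sketch below, each alternative starts with a probability. Each piece of evidence is described by how likely it would be if that alternative were true. The two hypotheses and all the numbers are hypothetical.

The sketch shows two things. Evidence that is equally likely under every alternative leaves the probabilities unchanged; only discriminating evidence shifts support. And no finite run of evidence pushes any alternative to certainty.

```python
from typing import Dict


def update(prior: Dict[str, float], likelihood: Dict[str, float]) -> Dict[str, float]:
    """Return the posterior over alternatives after one piece of evidence.

    prior: probability of each alternative before the evidence.
    likelihood: probability of observing the evidence if that alternative is true.
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


if __name__ == "__main__":
    # Hypothetical alternatives, starting with equal credence.
    beliefs = {"drug works": 0.5, "placebo effect only": 0.5}

    # Non-discriminating evidence: equally likely under both alternatives.
    beliefs = update(beliefs, {"drug works": 0.8, "placebo effect only": 0.8})
    print(beliefs)  # still 0.5 each

    # Discriminating evidence, e.g. the drug beating a placebo in a blinded trial.
    for _ in range(3):
        beliefs = update(beliefs, {"drug works": 0.7, "placebo effect only": 0.2})
        print(beliefs)
```

After three discriminating observations, "drug works" rises to roughly 0.98 but never reaches 1. That fits the point that inductive conclusions remain open to revision.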

Scientific thinking isn't just a tool for working scientists; it's an approach to getting the facts right by entertaining all the ways we might get the facts wrong. Only when viable alternatives have been eliminated can we be pretty confident we've got something right.

So let me end with a plea. The plea isn't for people to accept any particular scientific consensus, or any particular public fact. It's a plea for people to embrace the value of considering alternative possibilities, and evaluating those possibilities against the best evidence and arguments at our disposal. And it's a plea for us to do so together, with the kinds of evidence we can verify and share, and the kinds of arguments we can subject to public scrutiny. And if you're not convinced, please consider the alternatives.

Tania Lombrozo is a psychology professor at the University of California, Berkeley. She writes about psychology, cognitive science and philosophy, with occasional forays into parenting and veganism. You can keep up with more of what she is thinking on Twitter: @TaniaLombrozo


All About the Ocean

The ocean covers 70 percent of Earth's surface.




The ocean covers 70 percent of Earth’s surface. It contains about 1.35 billion cubic kilometers (324 million cubic miles) of water, which is about 97 percent of all the water on Earth. The ocean makes all life on Earth possible, and it makes the planet appear blue when viewed from space. Earth is the only planet in our solar system that is definitely known to contain liquid water. Although the ocean is one continuous body of water, oceanographers have divided it into five principal areas: the Pacific, Atlantic, Indian, Arctic, and Southern Oceans. The Atlantic, Indian, and Pacific Oceans merge into the icy waters around Antarctica.

Climate

The ocean plays a vital role in climate and weather. The sun’s heat causes water to evaporate, adding moisture to the air, and the ocean provides most of this evaporated water. The water vapor condenses to form clouds, which release their moisture as rain or other kinds of precipitation. All life on Earth depends on this process, called the water cycle.

The atmosphere receives much of its heat from the ocean. As the sun warms the water, the ocean transfers heat to the atmosphere, which in turn distributes it around the globe. Because water absorbs and loses heat more slowly than land does, the ocean helps balance global temperatures by absorbing heat in the summer and releasing it in the winter. Without the ocean to help regulate global temperatures, Earth’s climate would be bitterly cold.

Ocean Formation

After Earth began to form about 4.6 billion years ago, it gradually separated into layers of lighter and heavier rock. The lighter rock rose and formed Earth’s crust. The heavier rock sank and formed Earth’s core and mantle. The ocean’s water came from rocks inside the newly forming Earth: as the molten rocks cooled, they released water vapor and other gases. Eventually, the water vapor condensed and covered the crust with a primitive ocean.
Today, hot gases from Earth’s interior continue to produce new water at the bottom of the ocean.

Ocean Floor

Scientists began mapping the ocean floor in the 1920s. They used instruments called echo sounders, which measure water depth using sound waves. Echo sounders use sonar technology; sonar is an acronym for SOund Navigation And Ranging. Sonar surveys showed that the ocean floor has dramatic physical features, including huge mountains, deep canyons, steep cliffs, and wide plains. The ocean’s crust is a thin layer of volcanic rock called basalt.

The ocean floor is divided into several different areas. The first is the continental shelf, the nearly flat, underwater extension of a continent. Continental shelves vary in width: they are usually wide along low-lying land and narrow along mountainous coasts. A shelf is covered in sediment from the nearby continent. Some of the sediment is deposited by rivers and trapped by features such as natural dams. Most sediment comes from the last glacial period, or Ice Age, when the oceans receded and exposed the continental shelf. This sediment is called relict sediment.

At the outer edge of the continental shelf, the land drops off sharply in what is called the continental slope. The slope descends almost to the bottom of the ocean, then tapers off into a gentler slope known as the continental rise. The continental rise descends to the deep ocean floor, which is called the abyssal plain. Abyssal plains are broad, flat areas that lie at depths of about 4,000 to 6,000 meters (13,123 to 19,685 feet). They cover 30 percent of the ocean floor and are the flattest features on Earth. They are covered by fine-grained sediment such as clay and silt. Pelagic sediments, the remains of small ocean organisms, also drift down from upper layers of the ocean. Scattered across abyssal plains are abyssal hills and underwater volcanic peaks called seamounts.
Rising from the abyssal plains in each major ocean is a huge chain of mostly undersea mountains. Called the mid-ocean ridge, the chain circles Earth, stretching more than 64,000 kilometers (40,000 miles). Much of the mid-ocean ridge is split by a deep central rift, or crack. Mid-ocean ridges mark the boundaries between tectonic plates. Molten rock from Earth’s interior wells up from the rift, building new seafloor in a process called seafloor spreading. A major portion of the ridge runs down the middle of the Atlantic Ocean and is known as the Mid-Atlantic Ridge. It was not directly seen or explored until 1973.

Some areas of the ocean floor have deep, narrow depressions called ocean trenches. They are the deepest parts of the ocean. The deepest spot of all is the Challenger Deep, which lies in the Mariana Trench in the Pacific Ocean near the island of Guam. Its true depth is not known, but the most accurate measurements put it about 11,033 meters (36,198 feet) below the ocean’s surface. That depth exceeds the height of Mount Everest, Earth’s highest point, by more than 2,000 meters (6,500 feet). The pressure in the Challenger Deep is about eight tons per square inch.
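
Two ideas in this section can be checked with short calculations. An echo sounder times a sound pulse's round trip to the seafloor, so depth is roughly the speed of sound times the travel time, divided by two. Water pressure at depth is roughly density times gravitational acceleration times depth (P = ρgh). The Python sketch below makes two assumptions: a constant sound speed of 1,500 meters per second and a constant seawater density of 1,025 kilograms per cubic meter. Real values of both vary with temperature, salinity, and depth, so the results are approximations. The sketch uses the 36,198-foot figure for the Challenger Deep; the function names are illustrative.

```python
# Assumed constants: real values vary with temperature, salinity, and depth.
SOUND_SPEED_M_S = 1500.0   # typical speed of sound in seawater
SEAWATER_DENSITY = 1025.0  # typical seawater density, kg per cubic meter
G = 9.80665                # standard gravity, m/s^2

METERS_PER_FOOT = 0.3048   # exact, by definition of the international foot
PA_PER_PSI = 6894.757      # pascals per pound-force per square inch
LB_PER_SHORT_TON = 2000


def depth_from_echo(round_trip_s: float, sound_speed: float = SOUND_SPEED_M_S) -> float:
    """Depth in meters from an echo sounder's round-trip time.

    The pulse travels down and back, so the one-way distance is half.
    """
    return sound_speed * round_trip_s / 2


def water_pressure_pa(depth_m: float, density: float = SEAWATER_DENSITY) -> float:
    """Pressure from the water column alone, P = rho * g * h, in pascals
    (ignores the roughly one atmosphere of air pressure on top)."""
    return density * G * depth_m


if __name__ == "__main__":
    # A round trip of 8 seconds puts the seafloor at 6,000 m,
    # the deep edge of the abyssal plains described above.
    print(f"echo depth: {depth_from_echo(8.0):,.0f} m")

    challenger_m = 36_198 * METERS_PER_FOOT
    psi = water_pressure_pa(challenger_m) / PA_PER_PSI
    print(f"Challenger Deep: {challenger_m:,.0f} m, {psi:,.0f} psi, "
          f"{psi / LB_PER_SHORT_TON:.1f} short tons per square inch")
```

Under these assumptions, the Challenger Deep works out to roughly 16,000 pounds per square inch, or about 8 short tons per square inch. That matches the figure in the text.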

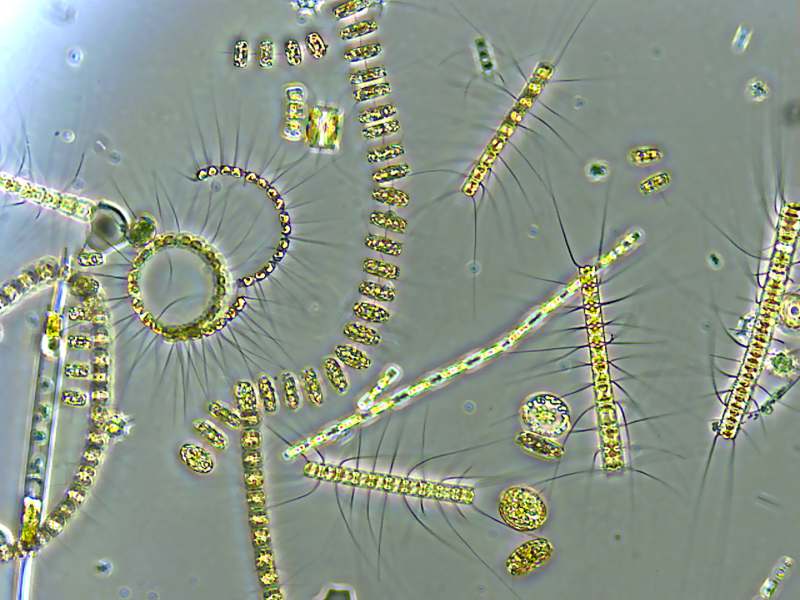

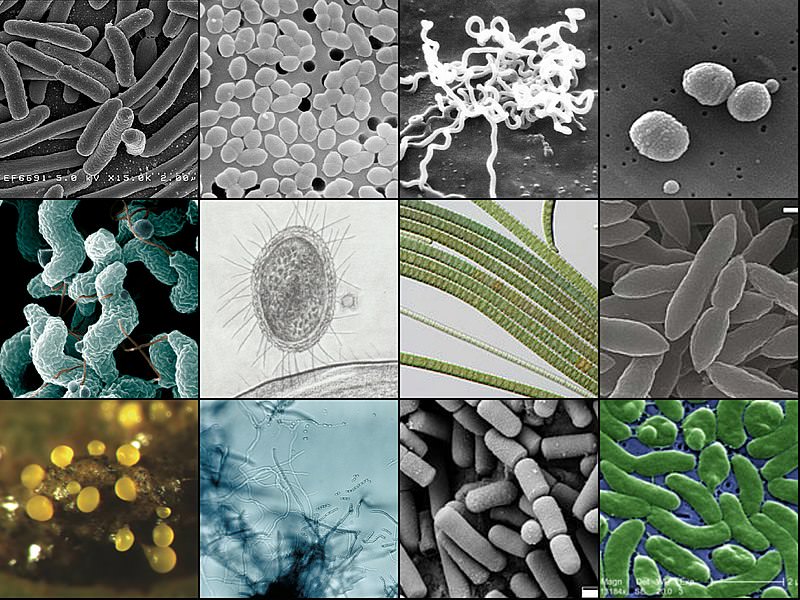

Ocean Life Zones

From the shoreline to the deepest seafloor, the ocean teems with life. The hundreds of thousands of marine species range from microscopic algae to the largest creature ever to have lived on Earth, the blue whale. The ocean has five major life zones, each with organisms uniquely adapted to their specific marine ecosystem.

The epipelagic zone (1) is the sunlit upper layer of the ocean. It reaches from the surface to about 200 meters (660 feet) deep. The epipelagic zone is also known as the photic or euphotic zone, and lakes have one as well. The sunlight in this zone allows photosynthesis to occur. Photosynthesis is the process by which some organisms use the energy of sunlight to convert carbon dioxide and water into sugars and oxygen. In the ocean, photosynthesis takes place in plants and algae. Plants such as seagrass are similar to land plants: they have roots, stems, and leaves. Algae are aquatic organisms that can also photosynthesize. Large algae such as kelp are called seaweed.

Phytoplankton also live in the epipelagic zone. Phytoplankton are microscopic photosynthetic organisms, mainly algae and bacteria. They become visible only when billions of them form algal blooms, which appear as green or blue splotches in the ocean. Phytoplankton are the base of the ocean food web. Through photosynthesis, they are responsible for almost half the oxygen released into Earth’s atmosphere. Animals such as krill (small, shrimp-like crustaceans), fish, and microscopic organisms called zooplankton all eat phytoplankton. In turn, these animals are eaten by whales, bigger fish, ocean birds, and human beings.

The next zone down, stretching to about 1,000 meters (3,300 feet) deep, is the mesopelagic zone (2). This zone is also known as the twilight zone because the light there is very dim. The lack of sunlight means there are no plants in the mesopelagic zone, but large fish and whales dive there to hunt prey. Fish that live in this zone are small and luminous.
One of the most common is the lanternfish, which has light-producing organs along its sides.

Sometimes, animals from the mesopelagic zone, such as sperm whales (Physeter macrocephalus) and squid, dive into the bathypelagic zone (3), which reaches to about 4,000 meters (13,100 feet) deep. The bathypelagic zone is also known as the midnight zone because no light reaches it. Animals that live in the bathypelagic zone are small, but they often have huge mouths, sharp teeth, and expandable stomachs that let them eat any food that comes along. Most of this food comes from the remains of plants and animals drifting down from the upper pelagic zones. Many bathypelagic animals do not have eyes, which are of little use in the dark. Because the pressure is so great and nutrients are so hard to find, fish in the bathypelagic zone move slowly and have strong gills to extract oxygen from the water.

The water at the bottom of the ocean, the abyssopelagic zone (4), is very salty and cold (2 degrees Celsius, or 35 degrees Fahrenheit). At depths of up to 6,000 meters (19,700 feet), the pressure is enormous, nearly 9,000 pounds per square inch. Most animals cannot survive there, and those that do have bizarre adaptations to cope with their ecosystem. Many fish have jaws that look unhinged, which allow them to drag their open mouths along the seafloor to find food such as mussels, shrimp, and microscopic organisms. Many of the animals in this zone, including squid and fish, are bioluminescent: they produce light through chemical reactions in their bodies. A type of angler fish, for example, has a glowing growth extending in front of its huge, toothy mouth. When smaller fish are attracted to the light, the angler fish simply snaps its jaws shut on its prey.

The deepest ocean zone, found in trenches and canyons, is called the hadalpelagic zone (5). Few organisms live here.
They include tiny isopods, a type of crustacean related to crabs and shrimp. Invertebrates such as sponges and sea cucumbers thrive in the abyssopelagic and hadalpelagic zones. Like many sea stars and jellyfish, these animals depend almost entirely on falling bits of dead or decaying plants and animals, called marine detritus.

Not all bottom dwellers, however, depend on marine detritus. In 1977, oceanographers discovered a community of creatures on the ocean floor that feed on bacteria around openings called hydrothermal vents. These vents discharge superheated water enriched with minerals from Earth’s interior. The minerals nourish unique bacteria, which in turn nourish creatures such as crabs, clams, and tube worms.

Ocean Currents

Currents are streams of water running through a larger body of water. Oceans, rivers, and streams all have currents. The ocean’s salinity and temperature and the coast’s geographic features determine an ocean current’s behavior. Earth’s rotation and wind also influence ocean currents.

Currents flowing near the surface transport heat from the tropics toward the poles and move cooler water back toward the Equator. This keeps the ocean from becoming extremely hot or cold. Deep, cold currents transport oxygen to organisms throughout the ocean. They also carry rich supplies of nutrients that all living things need. The nutrients come from plankton and the remains of other organisms that drift down and decay on the ocean floor.

Along some coasts, winds and currents produce a phenomenon called upwelling. As winds push surface water away from shore, deep currents of cold water rise to take its place. This upwelling of deep water brings up nutrients that nourish new growth of plankton, providing food for fish. Ocean food chains constantly recycle food and energy this way.
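
The five life zones described earlier in this article are defined mainly by depth. The small Python lookup below maps a depth to a zone using the approximate boundaries given in the text: about 200, 1,000, 4,000, and 6,000 meters. The function name is hypothetical. Treating everything deeper than 6,000 meters as hadalpelagic simplifies the text's description of that zone as "found in trenches and canyons."

```python
from bisect import bisect_left

# Approximate maximum depth (meters) of each of the first four zones, from the text.
ZONE_LIMITS_M = [200, 1000, 4000, 6000]
ZONE_NAMES = [
    "epipelagic (sunlit zone)",
    "mesopelagic (twilight zone)",
    "bathypelagic (midnight zone)",
    "abyssopelagic",
    "hadalpelagic",  # simplification: anything deeper than 6,000 m
]


def life_zone(depth_m: float) -> str:
    """Return the ocean life zone for a depth in meters below the surface.

    A depth exactly on a limit is assigned to the shallower zone,
    e.g. 200 m counts as epipelagic.
    """
    if depth_m < 0:
        raise ValueError("depth must be zero or positive")
    return ZONE_NAMES[bisect_left(ZONE_LIMITS_M, depth_m)]


if __name__ == "__main__":
    for depth in (50, 800, 3000, 5000, 10_900):
        print(f"{depth:>6,} m: {life_zone(depth)}")
```

Because the sketch uses `bisect_left`, a depth that falls exactly on a boundary goes to the shallower zone. That matches the text's wording that each zone reaches "to about" a given depth.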

Some ocean currents are enormous and extremely powerful. One of the most powerful is the Gulf Stream, a warm surface current that originates in the tropical Caribbean Sea and flows northeast along the eastern coast of the United States. The Gulf Stream measures up to 80 kilometers (50 miles) wide and is more than a kilometer (3,281 feet) deep. Like other ocean currents, the Gulf Stream plays a major role in climate. As the current travels north, it transfers moisture from its warm tropical waters to the air above. Prevailing westerly winds carry the warm, moist air to the British Isles and Scandinavia, giving them milder winters than they would otherwise experience at their northern latitudes. Northern parts of Norway are near the Arctic Circle but remain ice-free for most of the year because of the Gulf Stream.

The weather pattern known as El Niño includes a change to the Humboldt Current (also called the Peru Current) off the western coast of South America. In El Niño conditions, a current of warm surface water travels east along the Equator and prevents the normal upwelling of the cold, nutrient-rich Humboldt Current. El Niño, which can devastate the fisheries of Peru and Ecuador, occurs every two to seven years, usually in December.

Earth’s rotation partly determines the paths of ocean currents through what is known as the Coriolis effect. It causes large systems, such as winds and ocean currents, that would otherwise move in a straight line to veer to the right in the Northern Hemisphere and to the left in the Southern Hemisphere.

People and the Ocean

For thousands of years, people have depended on the ocean as a source of food and as a route for trade and exploration. Today, people continue to travel on the ocean and rely on the resources it contains. Nations continue to negotiate how far their territory extends beyond the coast.
The United Nations’ Law of the Sea treaty established exclusive economic zones (EEZs), extending 200 nautical miles (230 miles) beyond a nation’s coastline. Even though some countries, including the U.S., have not ratified the treaty, it is widely regarded as the standard. Russia has sought rights to the seafloor beyond its 200-nautical-mile zone, arguing that two undersea ridges, the Lomonosov and Mendeleev Ridges, are extensions of its continental shelf. This territory includes the North Pole. In 2007, Russian explorers in a submersible vehicle planted a metal Russian flag on the seafloor of the disputed territory.

Through the centuries, people have sailed the ocean on trade routes. Today, ships still carry most of the world’s freight, particularly bulky goods such as machinery, grain, and oil. Ocean ports are centers of commerce and culture. Water and land transportation meet there, and so do people of different professions: businesspeople who import and export goods and services, dockworkers who load and unload cargo, and ships’ crews. Ports also have a high concentration of migrants and immigrants with a wide variety of ethnicities, nationalities, languages, and religions. Important ports in the U.S. include New York/New Jersey and New Orleans. The busiest ports in the world include the Port of Shanghai in China and the Port of Rotterdam in the Netherlands.

Ocean ports are also important for a nation’s armed forces. Some ports are used exclusively for military purposes, although most share space with commercial businesses. “The sun never sets on the British Empire” is a phrase used to describe the reach of Great Britain’s empire, mostly in the 19th century. Although Great Britain is a small European island nation, its military sea power extended its empire from Africa to the Americas, Asia, and Australia.

Scientists and other experts hope the ocean will be used more widely as a source of renewable energy.
Some countries have already harnessed the energy of ocean waves, temperature differences, currents, or tides to power turbines and generate electricity. One source of renewable energy is generators powered by tidal streams or ocean currents, which convert the movement of the water into electricity. Ocean current generators have not been developed on a large scale, but they are working in some places in Ireland and Norway. Some conservationists criticize the impact these large structures have on the marine environment.

Another source of renewable energy is ocean thermal energy conversion (OTEC). It uses the difference in temperature between warm surface water and cold deep water to run an engine. OTEC facilities exist in places where warm surface water lies above much colder deep water, such as Japan, India, and the U.S. state of Hawai'i.

An emerging source of renewable energy is salinity gradient power, also known as osmotic power, which draws energy from fresh water mixing into salt water. This technology is still being developed, but it has potential in delta areas where fresh river water constantly meets the ocean.

Fishing

Fishers catch more than 90 million tons of seafood each year, including more than 100 species of fish and shellfish. Millions of people, from professional fishers to business owners such as restaurateurs and boat builders, depend on fisheries for their livelihood. Fishing can be classified into two main types. In subsistence fishing, fishers use their catch to help meet the nutritional needs of their families or communities. In commercial fishing, fishers sell their catch for money, goods, or services. Popular catches for both are tuna, cod, and shrimp.

Ocean fishing is also a popular recreational sport. Sport fishing can be competitive or noncompetitive. In sport fishing tournaments, individuals or teams compete for prizes based on the size of a particular species caught in a specific time period.
Both competitive and noncompetitive sport fishers need licenses to fish, and they may or may not keep the fish they catch. Increasingly, sport fishers practice catch-and-release fishing, in which a fish is caught, measured, weighed, and often recorded on film before being released back into the ocean. Popular game fish (fish caught for sport) include tuna and marlin.

Whaling is the hunting of whales and dolphins. It has declined in popularity since the 19th century but is still a way of life for many cultures, such as those in Scandinavia, Japan, Canada, and the Caribbean.

The ocean offers a wealth of fishing and whaling resources, but these resources are threatened. People have harvested so many fish and other marine animals for food and other products that some species have disappeared. During the 1800s and early 1900s, whalers killed thousands of whales for whale oil (rendered by boiling blubber) and ivory (whales’ teeth). Some species, including the blue whale (Balaenoptera musculus) and the right whale, were hunted nearly to extinction, and many species are still endangered today.

In the 1960s and 1970s, catches of important food fish, such as herring in the North Sea and anchovies in the Pacific, began to drop off dramatically. Governments took notice of overfishing: harvesting more fish than the ecosystem can replenish. Fishers were forced to go farther out to sea to find fish, putting themselves at risk. (Deep-sea fishing is one of the most dangerous jobs in the world.) Now they use advanced equipment, such as electronic fish finders and large gill nets or trawling nets, to catch more fish, which leaves far fewer fish to reproduce and replenish the supply. In 1992, the collapse, or disappearance, of cod on Canada’s Newfoundland Grand Banks put 40,000 fishers out of work. A ban was placed on cod fishing, and to this day, neither the cod nor the fisheries have recovered. To catch the dwindling numbers of fish, most fishers use trawl nets.
They drag the nets along the seabed and across acres of ocean. These nets accidentally catch many small, young fish and marine mammals. Animals caught in fishing nets meant for other species are called bycatch. The fishing industry and fisheries management agencies argue about how to address the problems of bycatch and overfishing. Those in the fishing industry do not want to lose their jobs, while conservationists want to maintain healthy levels of fish in the ocean. Some consumers are choosing to buy sustainable seafood, which is harvested from sources (either wild or farmed) that do not deplete the natural ecosystem.

Mining and Drilling

Many minerals come from the ocean. Sea salt is a mineral that has been used as a flavoring and preservative since ancient times. Sea salt contains other minerals, such as calcium, that ordinary table salt lacks. Hydrothermal vents often form seafloor massive sulfide (SMS) deposits, which contain precious metals. These SMS deposits sit on the ocean floor, sometimes in the deep ocean and sometimes closer to the surface. New techniques are being developed to mine the seafloor for valuable metals such as copper, lead, nickel, gold, and silver. Mining companies employ thousands of people and provide goods and services for millions more.

Critics of undersea mining maintain that it disrupts the local ecology. Seabed organisms such as corals, shrimp, and mussels have their habitat disturbed, upsetting the food chain. In addition, habitat destruction threatens the survival of species that have a narrow niche. Maui’s dolphin (Cephalorhynchus hectori maui), for instance, is a critically endangered species native to the waters of New Zealand’s North Island. Its numbers are already reduced because of bycatch, and seabed mining threatens its habitat, putting it at further risk of extinction.

Oil is one of the most valuable resources taken from the ocean today.
Offshore oil rigs pump petroleum from wells drilled into the continental shelf. About one-quarter of the world’s oil and natural gas supplies now comes from offshore deposits. Offshore drilling requires complex engineering. An oil platform can be constructed directly on the ocean floor, or it can “float” above an anchor. Depending on how far out on the continental shelf a platform is located, workers may have to be flown in. Underwater, or subsea, facilities are complicated groups of drilling equipment connected to each other and to a single oil rig. Subsea production often requires remotely operated underwater vehicles (ROVs).

Some countries invest in offshore drilling for profit and to reduce their reliance on oil from other regions. The Gulf of Mexico near the U.S. states of Texas and Louisiana is heavily drilled. Several European countries, including the United Kingdom, Denmark, and the Netherlands, drill in the North Sea. Offshore drilling is a complicated and expensive undertaking, however. Only a limited number of companies have the knowledge and resources to work with local governments to set up offshore oil rigs. Most of these companies are based in Europe and North America, although they do business all over the world.

Some governments have banned offshore oil drilling, citing safety and environmental concerns. In several accidents, the platform itself has exploded, at the cost of many lives. Offshore drilling also threatens the ocean ecosystem. Spills and leaks from oil rigs and from the tankers that transport oil seriously harm marine mammals and birds. Oil coats feathers, impairing birds’ ability to maintain their body temperature and remain buoyant in the water. The fur of otters and seals is also coated, and oil that enters animals’ digestive tracts may damage their organs. Offshore oil rigs also release metal cuttings, minute amounts of oil, and drilling fluid into the ocean every day.
Drilling fluid is the liquid used with drilling machinery to bore holes deep into the planet. This liquid can contain pollutants such as toxic chemicals and heavy metals.

Pollution

Most oil pollution does not come from oil spills, however. It comes from the runoff of pollutants into streams and rivers that flow into the ocean, and most of this runoff comes from individual consumers. Cars, buses, motorcycles, and even lawn mowers spill oil and grease on roads, streets, and highways. (Runoff is what makes busy roads shiny and sometimes slippery.) Storm drains or creeks wash the runoff into local waterways, which eventually flow into the ocean. One of the largest U.S. oil spills took place in Alaska in 1989, when the tanker Exxon Valdez spilled at least 10 million gallons of oil into Prince William Sound. In comparison, American and Canadian consumers spill about 16 million gallons of oil runoff into the Atlantic and Pacific Oceans every year.

For centuries, people have used the ocean as a dumping ground for sewage and other wastes. In the 21st century, the wastes include not only oil but also chemical runoff from factories and agriculture. These chemicals include nitrates and phosphates, which are often used as fertilizers and which encourage algae blooms. An algae bloom is a rapid increase in algae and bacteria that threatens plants and other marine life. Algae blooms limit the amount of oxygen in a marine environment, leading to what are known as dead zones, where little life exists beneath the ocean’s surface. Algae blooms can spread across hundreds or even thousands of miles.

Another source of pollution is plastics. Most ocean debris, or garbage, is plastic thrown out by consumers. Plastics such as water bottles, bags, six-pack rings, and packing material put marine life at risk. Sea animals are harmed by plastic either by getting tangled in it or by eating it.
An example of marine pollution consisting mainly of plastics is the Great Pacific Garbage Patch, a floating dump in the North Pacific. It is about twice the size of Texas and probably contains about 100 million tons of debris. Most of this debris comes from the western coast of North America (the U.S. and Canada) and the eastern coast of Asia (Japan, China, Russia, North Korea, and South Korea). Because of ocean currents and weather patterns, the patch is a relatively stable formation that contains both new and disintegrating debris. Smaller pieces of plastic are eaten by jellyfish or other organisms, which are then consumed by larger predators in the food web. Plastic and its chemicals may then enter people’s diets through fish or shellfish.

Another source of pollution is carbon dioxide. The ocean absorbs a large share of the carbon dioxide released into the atmosphere. Carbon dioxide, which is necessary for life, is a greenhouse gas: it traps heat in Earth’s atmosphere. When carbon dioxide dissolves in seawater, it forms carbonic acid. Ocean ecosystems have adapted to certain levels of carbonic acid, but the increase in carbon dioxide has made the ocean more acidic. This ocean acidification erodes the shells of animals such as clams, crabs, and corals.

Global Warming

Global warming contributes to rising ocean temperatures and sea levels. Warmer oceans radically alter the ecosystem. Global warming causes cold-water habitats to shrink, leaving less room for animals such as penguins, seals, and whales. Plankton, the base of the ocean food chain, thrives in cold water, so warming water means less plankton for marine life to eat. Melting glaciers and ice sheets contribute to sea level rise, which threatens coastal ecosystems and property. River deltas and estuaries are put at risk of flooding. Coasts are more likely to suffer erosion.
Seawater more often contaminates sources of fresh water. All these consequences—flooding, erosion, water contamination—put low-lying island nations, such as the Maldives in the Indian Ocean, at high risk for disaster. To find ways to protect the ocean from pollution and the effects of climate change, scientists from all over the world are cooperating in studies of ocean waters and marine life. They are also working together to control pollution and limit global warming. Many countries are working to reach agreements on how to manage and harvest ocean resources. Although the ocean is vast, it is more easily polluted and damaged than people once thought. It requires care and protection as well as expert management. Only then can it continue to provide the many resources that living things—including people—need.

The Most Coast . . . Canada has 202,080 kilometers (125,567 miles) of coastline. Short But Sweet . . . Monaco has four kilometers (2.5 miles) of coastline.

No, the Toilet Doesn't Flush Backward in Australia The Coriolis effect, which can be seen in large-scale phenomena like trade winds and ocean currents, cannot be duplicated in small basins like sinks.

Extraterrestrial Oceans Mars probably had oceans billions of years ago, but ice and dry seabeds are all that remain today. Europa, one of Jupiter's moons, is probably covered by an ocean of water more than 96 kilometers (60 miles) deep, but it is trapped beneath a layer of ice, which the warmer water below frequently cracks. One of Saturn's moons, Enceladus, has cryovolcanism, or ice volcanoes. Instead of erupting with lava, ice volcanoes erupt with water, ammonia, or methane. Ice volcanoes may indicate oceanic activity.

International Oil Spill The largest oil spill in history, the Gulf War oil spill, released at least 40 million gallons of oil into the Persian Gulf. Valves at the Sea Island oil terminal in Kuwait were opened on purpose in 1991, after Iraq had invaded Kuwait. The oil was intended to stop a landing by U.S. Marines, but it drifted south to the shores of Saudi Arabia. A study of the Gulf War oil spill (conducted by the United Nations, several countries in the Middle East, and the United States) found that most of the spilled oil evaporated and caused little damage to the environment.

Ocean Seas The floors of the Caspian Sea and the Black Sea are more like the ocean floor than those of other seas: they do not rest on a continent, but directly on the ocean's basalt crust.

Early Ocean Explorers Polynesian people navigated a region of the Pacific Ocean now known as the Polynesian Triangle by 700 C.E. The corners of the Polynesian Triangle are islands: the American state of Hawai'i, the country of New Zealand, and the Chilean territory of Easter Island (also known as Rapa Nui). The distance between Easter Island and New Zealand, the longest length of the Polynesian Triangle, is one-quarter of Earth's circumference, more than 10,000 kilometers (6,200 miles). Polynesians successfully traveled these distances in canoes. It would be hundreds of years before another culture explored the ocean to this extent.


Last updated March 5, 2024


Origins of the universe, explained

The most popular theory of our universe's origin centers on a cosmic cataclysm unmatched in all of history—the big bang.

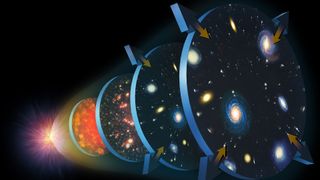

The best-supported theory of our universe's origin centers on an event known as the big bang. This theory was born of the observation that other galaxies are moving away from our own at great speed in all directions, as if they had all been propelled by an ancient explosive force.

A Belgian priest named Georges Lemaître first suggested the big bang theory in the 1920s, when he theorized that the universe began from a single primordial atom. The idea received major boosts from Edwin Hubble's observations that galaxies are speeding away from us in all directions, and from the 1960s discovery of the cosmic microwave background radiation, interpreted as an echo of the big bang, by Arno Penzias and Robert Wilson.

Further work has helped clarify the big bang's tempo. Here’s the theory: In the first 10^-43 seconds of its existence, the universe was very compact, less than a million billion billionth the size of a single atom. It's thought that at such an incomprehensibly dense, energetic state, the four fundamental forces—gravity, electromagnetism, and the strong and weak nuclear forces—were forged into a single force, but our current theories haven't yet figured out how a single, unified force would work. To pull this off, we'd need to know how gravity works on the subatomic scale, but we currently don't.

It's also thought that the extremely close quarters allowed the universe's very first particles to mix, mingle, and settle into roughly the same temperature. Then, in an unimaginably small fraction of a second, all that matter and energy expanded outward more or less evenly, with tiny variations provided by fluctuations on the quantum scale. That model of breakneck expansion, called inflation, may explain why the universe has such an even temperature and distribution of matter.

After inflation, the universe continued to expand but at a much slower rate. It's still unclear what exactly powered inflation.

Aftermath of cosmic inflation

As time passed and matter cooled, more diverse kinds of particles began to form, and they eventually condensed into the stars and galaxies of our present universe.


By the time the universe was a billionth of a second old, it had cooled down enough for the four fundamental forces to separate from one another. The universe's fundamental particles also formed. It was still so hot, though, that these particles hadn't yet assembled into many of the subatomic particles we have today, such as the proton. As the universe kept expanding, this piping-hot primordial soup, called the quark-gluon plasma, continued to cool. Some particle colliders, such as CERN's Large Hadron Collider, are powerful enough to re-create the quark-gluon plasma.

Radiation in the early universe was so intense that colliding photons could form pairs of particles made of matter and antimatter, which is like regular matter in every way except with the opposite electrical charge. It's thought that the early universe contained equal amounts of matter and antimatter. But as the universe cooled, photons no longer packed enough punch to make matter-antimatter pairs. So like an extreme game of musical chairs, many particles of matter and antimatter paired off and annihilated one another.


Somehow, some excess matter survived, and it's now the stuff that people, planets, and galaxies are made of. Our existence is a clear sign that the laws of nature treat matter and antimatter slightly differently. Researchers have experimentally observed this rule imbalance, called CP violation, in action. Physicists are still trying to figure out exactly how matter won out in the early universe.

Building atoms

Within the universe's first second, it was cool enough for the remaining matter to coalesce into protons and neutrons, the familiar particles that make up atoms' nuclei. And after the first three minutes, the protons and neutrons had assembled into hydrogen and helium nuclei. By mass, hydrogen was 75 percent of the early universe's matter, and helium was 25 percent. The abundance of helium is a key prediction of big bang theory, and it's been confirmed by scientific observations.

Despite having atomic nuclei, the young universe was still too hot for electrons to settle in around them to form stable atoms. The universe's matter remained an electrically charged fog that was so dense, light had a hard time bouncing its way through. It would take another 380,000 years or so for the universe to cool down enough for neutral atoms to form, a pivotal moment called recombination. Cooling made the universe transparent for the first time, which let the photons rattling around within it finally zip through unimpeded.

We still see this primordial afterglow today as cosmic microwave background radiation , which is found throughout the universe. The radiation is similar to that used to transmit TV signals via antennae. But it is the oldest radiation known and may hold many secrets about the universe's earliest moments.

From the first stars to today

There wasn't a single star in the universe until about 180 million years after the big bang. It took that long for gravity to gather clouds of hydrogen and forge them into stars. Many physicists think that vast clouds of dark matter , a still-unknown material that outweighs visible matter by more than five to one, provided a gravitational scaffold for the first galaxies and stars.

Once the universe's first stars ignited, the light they unleashed packed enough punch to once again strip electrons from neutral atoms, a key chapter of the universe called reionization. In February 2018, a team working with a radio antenna in Western Australia announced that it may have detected signs of this “cosmic dawn.” By 400 million years after the big bang, the first galaxies were born. In the billions of years since, stars, galaxies, and clusters of galaxies have formed and re-formed, eventually yielding our home galaxy, the Milky Way, and our cosmic home, the solar system.

Even now the universe is expanding, and to astronomers' surprise, the pace of expansion is accelerating. It's thought that this acceleration is driven by a mysterious influence that counteracts gravity, called dark energy. We still don't know what dark energy is, but it’s thought to make up 68 percent of the universe's total matter and energy. Dark matter makes up another 27 percent. In essence, all the matter you've ever seen, from your first love to the stars overhead, makes up less than five percent of the universe.


Copyright © 1996-2015 National Geographic Society Copyright © 2015-2024 National Geographic Partners, LLC. All rights reserved


The scientific method and experimental design

Introduction

- Make an observation.

- Ask a question.

- Form a hypothesis , or testable explanation.

- Make a prediction based on the hypothesis.

- Test the prediction.

- Iterate: use the results to make new hypotheses or predictions.

Scientific method example: Failure to toast

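1. Make an observation: the toaster won't toast.
2. Ask a question: why won't the toaster toast?
3. Propose a hypothesis: maybe the outlet is broken.
4. Make predictions: if the toaster is plugged into a different outlet, it will toast the bread.
5. Test the predictions: plug the toaster into a different outlet and try again.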

- If the toaster does toast, then the hypothesis is supported—likely correct.

- If the toaster doesn't toast, then the hypothesis is not supported—likely wrong.

6. Iterate.

- If the hypothesis was supported, we might do additional tests to confirm it, or revise it to be more specific. For instance, we might investigate why the outlet is broken.

- If the hypothesis was not supported, we would come up with a new hypothesis. For instance, the next hypothesis might be that there's a broken wire in the toaster.
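The observe, hypothesize, predict, test, and iterate cycle in the toaster example can be sketched as a loop. Each hypothesis is tested in turn and kept if its prediction comes true; otherwise the next hypothesis is tried. This is a toy simulation under stated assumptions, not a tool from the lesson. The `Hypothesis` class, the `toaster_toasts` function, and the "working outlet, broken wire" ground truth are all hypothetical, chosen only to mirror the example.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical ground truth for this toy simulation: the outlet works,
# but a wire inside the toaster is broken.
OUTLET_WORKS = True
TOASTER_WIRE_BROKEN = True


def toaster_toasts(wire_broken: bool, outlet_works: bool) -> bool:
    """The toaster toasts only if it has power and its wiring is intact."""
    return outlet_works and not wire_broken


@dataclass
class Hypothesis:
    statement: str
    prediction: str
    test: Callable[[], bool]  # runs the test; True means the prediction came true


def investigate(hypotheses: List[Hypothesis]) -> Optional[Hypothesis]:
    """Test hypotheses in turn; return the first supported one, or None."""
    for h in hypotheses:
        supported = h.test()
        print(f"Hypothesis: {h.statement} Prediction: {h.prediction} "
              f"Result: {'supported' if supported else 'not supported'}")
        if supported:
            # Supported means "likely correct", not proven; further tests can refine it.
            return h
    # Every candidate was rejected: make new observations and new hypotheses.
    return None


candidates = [
    Hypothesis(
        statement="The outlet is broken.",
        prediction="Plugged into a different, working outlet, the toaster will toast.",
        test=lambda: toaster_toasts(TOASTER_WIRE_BROKEN, outlet_works=True),
    ),
    Hypothesis(
        statement="There is a broken wire in the toaster.",
        prediction="After the wire is replaced, the toaster will toast.",
        test=lambda: toaster_toasts(wire_broken=False, outlet_works=OUTLET_WORKS),
    ),
]

result = investigate(candidates)
print("Working hypothesis:", result.statement if result else "none yet")
```

The loop stops at the first supported hypothesis only to keep the sketch short. In practice, as the bullets above note, a supported hypothesis would be tested further or made more specific rather than accepted outright.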


Essay Writing: Expository: Scientific Facts - SS2 English Lesson Note

Exposition is a type of writing that explains or clarifies a topic. It is often used in scientific writing to present and discuss scientific facts.

Scientific facts are statements that have been repeatedly confirmed through observation and experimentation. They are accepted as true by the scientific community.

Features of exposition on scientific facts include:

- Clarity: The exposition should be clear and easy to understand.

- Accuracy: The exposition should be accurate and based on sound scientific evidence.

- Objectivity: The exposition should be objective and unbiased.

- Organization: The exposition should be well-organized and easy to follow.

- Evidence: The exposition should be supported by evidence from scientific research.

Model expository essay on scientific facts

The following is a model expository essay on scientific facts:

Title: The Scientific Fact of Evolution

Introduction:

Evolution is the process by which living things change over time. It is a scientific fact that has been repeatedly confirmed by observation and experimentation.

Scientists debate some details of how evolution works, but they agree on its basic principles. A major mechanism of evolution is natural selection, the process by which organisms that are better adapted to their environment are more likely to survive and reproduce. Over time, this leads to changes in the population of organisms.

There is a great deal of evidence to support the theory of evolution. For example, we can see evidence of evolution in the fossil record. The fossil record shows that organisms have changed over time. We can also see evidence of evolution in the DNA of living things. The DNA of different species is similar, which suggests that they share a common ancestor.

Conclusion:

Evolution is a well-supported scientific fact, and the theory of evolution by natural selection is a powerful explanation for the diversity of life on Earth.

Well-composed expository essay:

A well-composed expository essay on scientific facts will be clear, accurate, objective, organized, and supported by evidence. It will also be well-written and engaging.

Here are some tips for writing a well-composed expository essay on scientific facts:

- Do your research. Make sure you understand the topic you are writing about.

- Use clear and concise language. Avoid jargon and technical terms that your audience may not understand.

- Be objective. Present the facts without bias or opinion.

- Organize your essay logically. Make sure your points flow smoothly from one to the next.

- Support your claims with evidence. Cite your sources so that your readers can verify your information.

- Proofread your essay carefully before submitting it.


What this handout is about

Nearly every element of style that is accepted and encouraged in general academic writing is also considered good practice in scientific writing. The major difference between science writing and writing in other academic fields is the relative importance placed on certain stylistic elements. This handout details the most critical aspects of scientific writing and provides some strategies for evaluating and improving your scientific prose. Readers of this handout may also find our handout on scientific reports useful.

What is scientific writing?

There are several different kinds of writing that fall under the umbrella of scientific writing. Scientific writing can include:

- Peer-reviewed journal articles (presenting primary research)

- Grant proposals (you can’t do science without funding)

- Literature review articles (summarizing and synthesizing research that has already been carried out)

As a student in the sciences, you are likely to spend some time writing lab reports, which often follow the format of peer-reviewed articles and literature reviews. Regardless of the genre, though, all scientific writing has the same goal: to present data and/or ideas with a level of detail that allows a reader to evaluate the validity of the results and conclusions based only on the facts presented. The reader should be able to easily follow both the methods used to generate the data (if it’s a primary research paper) and the chain of logic used to draw conclusions from the data. Several key elements allow scientific writers to achieve these goals:

- Precision: ambiguities in writing cause confusion and may prevent a reader from grasping crucial aspects of the methodology and synthesis

- Clarity: concepts and methods in the sciences can often be complex; writing that is difficult to follow greatly amplifies any confusion on the part of the reader

- Objectivity: any claims that you make need to be based on facts, not intuition or emotion

How can I make my writing more precise?

Theories in the sciences are based upon precise mathematical models, specific empirical (primary) data sets, or some combination of the two. Therefore, scientists must use precise, concrete language to evaluate and explain such theories, whether mathematical or conceptual. There are a few strategies for avoiding ambiguous, imprecise writing.

Word and phrasing choice

Often several words may convey similar meaning, but usually only one word is most appropriate in a given context. Here’s an example:

- Word choice 1: “population density is positively correlated with disease transmission rate”

- Word choice 2: “population density is positively related to disease transmission rate”

In some contexts, “correlated” and “related” have similar meanings. But in scientific writing, “correlated” conveys a precise statistical relationship between two variables. In scientific writing, it is typically not enough to simply point out that two variables are related: the reader will expect you to explain the precise nature of the relationship (note: when using “correlation,” you must explain somewhere in the paper how the correlation was estimated). If you mean “correlated,” then use the word “correlated”; avoid substituting a less precise term when a more precise term is available.
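Explaining how a correlation was estimated means naming the estimator and the sample. Below is a minimal sketch of one common estimator, Pearson's correlation coefficient r, written with only the Python standard library. The choice of Pearson's r is an assumption: it measures linear association, and a paper might instead report a rank-based measure such as Spearman's rho. The density and transmission values are invented for illustration and are not from any study.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient: covariance scaled by both standard deviations."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least two points")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations (the 1/n factors cancel out).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Invented example data: population density (people per km²) and
# disease transmission rate (new cases per 1,000 people per week).
density = [120, 340, 560, 910, 1500]
transmission = [0.8, 1.1, 1.5, 1.9, 2.6]

r = pearson_r(density, transmission)
print(f"Pearson r = {r:.3f} (n = {len(density)})")
```

Reporting the estimator together with the sample size (for example, "Pearson's r, n = 5") gives the reader the detail needed to judge what "positively correlated" means in that paper.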

This same idea also applies to choice of phrasing. For example, the phrase “writing of an investigative nature” could refer to writing in the sciences, but might also refer to a police report. When presented with a choice, a more specific and less ambiguous phraseology is always preferable. This applies even when you must be repetitive to maintain precision: repetition is preferable to ambiguity. Although repetition of words or phrases often happens out of necessity, it can actually be beneficial by placing special emphasis on key concepts.

Figurative language

Figurative language can make for interesting and engaging casual reading but is by definition imprecise. Writing “experimental subjects were assaulted with a wall of sound” does not convey the precise meaning of “experimental subjects were presented with 20 second pulses of conspecific mating calls.” It’s difficult for a reader to objectively evaluate your research if details are left to the imagination, so exclude similes and metaphors from your scientific writing.

Level of detail

Include as much detail as is necessary, but exclude extraneous information. The reader should be able to easily follow your methodology, results, and logic without being distracted by irrelevant facts and descriptions. Ask yourself the following questions when you evaluate the level of detail in a paper:

- Is the rationale for performing the experiment clear (i.e., have you shown that the question you are addressing is important and interesting)?

- Are the materials and procedures used to generate the results described at a level of detail that would allow the experiment to be repeated?

- Is the rationale behind the choice of experimental methods clear? Will the reader understand why those particular methods are appropriate for answering the question your research is addressing?

- Will the reader be able to follow the chain of logic used to draw conclusions from the data?

Any information that enhances the reader’s understanding of the rationale, methodology, and logic should be included, but information in excess of this (or information that is redundant) will only confuse and distract the reader.

Whenever possible, use quantitative rather than qualitative descriptions. A phrase that uses definite quantities such as “development rate in the 30°C temperature treatment was ten percent faster than development rate in the 20°C temperature treatment” is much more precise than the more qualitative phrase “development rate was fastest in the higher temperature treatment.”
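A quantitative phrase such as "ten percent faster" rests on a small calculation, and that calculation depends on which treatment is the baseline. Here is a minimal sketch. The development rates are invented for illustration, and `percent_faster` is a hypothetical helper, not taken from the handout.

```python
def percent_faster(treatment_rate: float, baseline_rate: float) -> float:
    """Percent by which treatment_rate exceeds baseline_rate, relative to the baseline."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return (treatment_rate - baseline_rate) / baseline_rate * 100


# Invented development rates (developmental stages completed per day).
rate_20c = 0.50
rate_30c = 0.55

diff = percent_faster(rate_30c, rate_20c)
print(f"Development rate at 30°C was {diff:.0f} percent faster than at 20°C.")
```

Here the denominator is the 20°C rate. Measured against the 30°C rate instead, the same numbers give a difference of about 9.1 percent, so a precise comparison names its baseline.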

How can I make my writing clearer?

When you’re writing about complex ideas and concepts, it’s easy to get sucked into complex writing. Distilling complicated ideas into simple explanations is challenging, but you’ll need to acquire this valuable skill to be an effective communicator in the sciences. Complexities in language use and sentence structure are perhaps the most common issues specific to writing in the sciences.

Language use

When given a choice between a familiar and a technical or obscure term, the more familiar term is preferable if it doesn’t reduce precision. Here are just a few examples of complex words and their simpler alternatives:

In these examples, the term on the right conveys the same meaning as the word on the left but is more familiar and straightforward, and is often shorter as well.

There are some situations where the use of a technical or obscure term is justified. For example, in a paper comparing two different viral strains, the author might repeatedly use the word “enveloped” rather than the phrase “surrounded by a membrane.” The key word here is “repeatedly”: only choose the less familiar term if you’ll be using it more than once. If you choose to go with the technical term, however, make sure you clearly define it, as early in the paper as possible. You can use this same strategy to determine whether or not to use abbreviations, but again you must be careful to define the abbreviation early on.

Sentence structure

Science writing must be precise, and precision often requires a fine level of detail. Careful description of objects, forces, organisms, methodology, etc., can easily lead to complex sentences that express too many ideas without a break point. Here’s an example:

The osmoregulatory organ, which is located at the base of the third dorsal spine on the outer margin of the terminal papillae and functions by expelling excess sodium ions, activates only under hypertonic conditions.

Several things make this sentence complex. First, the action of the sentence (activates) is far removed from the subject (the osmoregulatory organ) so that the reader has to wait a long time to get the main idea of the sentence. Second, the verbs “functions,” “activates,” and “expelling” are somewhat redundant. Consider this revision:

Located on the outer margin of the terminal papillae at the base of the third dorsal spine, the osmoregulatory organ expels excess sodium ions under hypertonic conditions.

This sentence is slightly shorter, conveys the same information, and is much easier to follow. The subject and the action are now close together, and the redundant verbs have been eliminated. You may have noticed that even the simpler version of this sentence contains two prepositional phrases strung together (“on the outer margin of…” and “at the base of…”). Prepositional phrases themselves are not a problem; in fact, they are usually required to achieve an adequate level of detail in science writing. However, long strings of prepositional phrases can cause sentences to wander. Here’s an example of what not to do from Alley (1996):

“…to confirm the nature of electrical breakdown of nitrogen in uniform fields at relatively high pressures and interelectrode gaps that approach those obtained in engineering practice, prior to the determination of the processes that set the criterion for breakdown in the above-mentioned gases and mixtures in uniform and non-uniform fields of engineering significance.”

The use of eleven (yes, eleven!) prepositional phrases in this sentence is excessive, and renders the sentence nearly unintelligible. Judging when a string of prepositional phrases is too long is somewhat subjective, but as a general rule of thumb, a single prepositional phrase is always preferable, and anything more than two strung together can be problematic.

Nearly every form of scientific communication is space-limited. Grant proposals, journal articles, and abstracts all have word or page limits, so there’s a premium on concise writing. Furthermore, adding unnecessary words or phrases distracts rather than engages the reader. Avoid generic phrases that contribute no novel information. Common phrases such as “the fact that,” “it should be noted that,” and “it is interesting that” are cumbersome and unnecessary. Your reader will decide whether or not your paper is interesting based on the content. In any case, if information is not interesting or noteworthy it should probably be excluded.

How can I make my writing more objective?

The objective tone used in conventional scientific writing reflects the philosophy of the scientific method: if results are not repeatable, then they are not valid. In other words, your results will only be considered valid if any researcher performing the same experimental tests and analyses that you describe would be able to produce the same results. Thus, scientific writers try to adopt a tone that removes the focus from the researcher and puts it only on the research itself. Here are several stylistic conventions that enhance objectivity:

Passive voice

You may have been told at some point in your academic career that the use of the passive voice is almost always bad, except in the sciences. The passive voice is a sentence structure where the subject who performs the action is ambiguous (e.g., “you may have been told,” as seen in the first sentence of this paragraph; see our handout on passive voice and this 2-minute video on passive voice for a more complete discussion).

The rationale behind using the passive voice in scientific writing is that it enhances objectivity, taking the actor (i.e., the researcher) out of the action (i.e., the research). Unfortunately, the passive voice can also lead to awkward and confusing sentence structures and is generally considered less engaging (i.e., more boring) than the active voice. This is why most general style guides recommend only sparing use of the passive voice.

Currently, the active voice is preferred in most scientific fields, even when it necessitates the use of “I” or “we.” It’s perfectly reasonable (and simpler) to say “We performed a two-tailed t-test” rather than to say “a two-tailed t-test was performed,” or “in this paper we present results” rather than “results are presented in this paper.” Nearly every current scientific style guide recommends the active voice, but different instructors (or journal editors) may have different opinions on this topic. If you are unsure, check with the instructor or editor who will review your paper to see whether or not to use the passive voice. If you choose to use the active voice with “I” or “we,” there are a few guidelines to follow:

- Avoid starting sentences with “I” or “we”: this pulls focus away from the scientific topic at hand.

- Avoid using “I” or “we” when you’re making a conjecture, whether it’s substantiated or not. Everything you say should follow from logic, not from personal bias or subjectivity. Never use any emotive words in conjunction with “I” or “we” (e.g., “I believe,” “we feel,” etc.).

- Never use “we” in a way that includes the reader (e.g., “here we see trait evolution in action”); the use of “we” in this context sets a condescending tone.

Acknowledging your limitations

Your conclusions should be directly supported by the data that you present. Avoid making sweeping conclusions that rest on assumptions that have not been substantiated by your or others’ research. For example, if you discover a correlation between fur thickness and basal metabolic rate in rats and mice you would not necessarily conclude that fur thickness and basal metabolic rate are correlated in all mammals. You might draw this conclusion, however, if you cited evidence that correlations between fur thickness and basal metabolic rate are also found in twenty other mammalian species. Assess the generality of the available data before you commit to an overly general conclusion.

Works consulted

We consulted these works while writing this handout. This is not a comprehensive list of resources on the handout’s topic, and we encourage you to do your own research to find additional publications. Please do not use this list as a model for the format of your own reference list, as it may not match the citation style you are using. For guidance on formatting citations, please see the UNC Libraries citation tutorial. We revise these tips periodically and welcome feedback.

Alley, Michael. 1996. The Craft of Scientific Writing, 3rd ed. New York: Springer.

Council of Science Editors. 2014. Scientific Style and Format: The CSE Manual for Authors, Editors, and Publishers, 8th ed. Chicago & London: University of Chicago Press.

Day, Robert A. 1994. How to Write and Publish a Scientific Paper, 4th ed. Phoenix: Oryx Press.

Day, Robert, and Nancy Sakaduski. 2011. Scientific English: A Guide for Scientists and Other Professionals, 3rd ed. Santa Barbara: Greenwood.

Gartland, John J. 1993. Medical Writing and Communicating. Frederick, MD: University Publishing Group.

Williams, Joseph M., and Joseph Bizup. 2016. Style: Ten Lessons in Clarity and Grace, 12th ed. New York: Pearson.

You may reproduce this handout for non-commercial use if you use the entire handout and attribute the source: The Writing Center, University of North Carolina at Chapel Hill

This page has been archived and is no longer updated

Effective Writing

To construct sentences that reflect your ideas, focus these sentences appropriately. Express one idea per sentence. Use your current topic (that is, what you are writing about) as the grammatical subject of your sentence (see Verbs: Choosing between active and passive voice). When writing a complex sentence (a sentence that includes several clauses), place the main idea in the main clause rather than a subordinate clause. In particular, focus on the phenomenon at hand, not on the fact that you observed it.

Constructing your sentences logically is a good start, but it may not be enough. To ensure they are readable, make sure your sentences do not tax readers' short-term memory by obliging these readers to remember long pieces of text before knowing what to do with them. In other words, keep together what goes together. Then, work on conciseness: See whether you can replace long phrases with shorter ones or eliminate words without loss of clarity or accuracy.

The following screens cover the drafting process in more detail. Specifically, they discuss how to use verbs effectively and how to take care of your text's mechanics.

Much of the strength of a clause comes from its verb. Therefore, to express your ideas accurately, choose an appropriate verb and use it well. In particular, use it in the right tense, choose carefully between active and passive voice, and avoid dangling verb forms.

Verbs are for describing actions, states, or occurrences. To give a clause its full strength and keep it short, do not bury the action, state, or occurrence in a noun (typically combined with a weak verb), as in "The catalyst produced a significant increase in conversion rate." Instead write, "The catalyst increased the conversion rate significantly." The examples below show how an action, state, or occurrence can be moved from a noun back to a verb.

Using the right tense

In your scientific paper, use verb tenses (past, present, and future) exactly as you would in ordinary writing. Use the past tense to report what happened in the past: what you did, what someone reported, what happened in an experiment, and so on. Use the present tense to express general truths, such as conclusions (drawn by you or by others) and atemporal facts (including information about what the paper does or covers). Reserve the future tense for perspectives: what you will do in the coming months or years. Typically, most of your sentences will be in the past tense, some will be in the present tense, and very few, if any, will be in the future tense.

Past tense

- Work done
  - We collected blood samples from . . .
  - Groves et al. determined the growth rate of . . .
  - Consequently, astronomers decided to rename . . .

- Work reported
  - Jankowsky reported a similar growth rate . . .
  - In 2009, Chu published an alternative method to . . .
  - Irarrázaval observed the opposite behavior in . . .

- Observations
  - The mice in Group A developed, on average, twice as much . . .
  - The number of defects increased sharply . . .
  - The conversion rate was close to 95% . . .

Present tense

- General truths
  - Microbes in the human gut have a profound influence on . . .
  - The Reynolds number provides a measure of . . .
  - Smoking increases the risk of coronary heart disease . . .

- Atemporal facts
  - This paper presents the results of . . .
  - Section 3.1 explains the difference between . . .
  - Behbood's 1969 paper provides a framework for . . .

Future tense

- Perspectives
  - In a follow-up experiment, we will study the role of . . .
  - The influence of temperature will be the object of future research . . .

Note the difference in scope between a statement in the past tense and the same statement in the present tense: "The temperature increased linearly over time" refers to a specific experiment, whereas "The temperature increases linearly over time" generalizes the experimental observation, suggesting that the temperature always increases linearly over time in such circumstances.

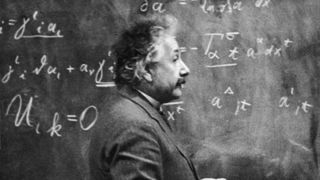

In complex sentences, you may have to combine two different tenses, as in "In 1905, Albert Einstein postulated that the speed of light is constant . . . ." In this sentence, the verb postulated refers to something that happened in the past (in 1905) and is therefore in the past tense, whereas the verb is expresses a general truth and is in the present tense.

Choosing between active and passive voice

In English, verbs can express an action in one of two voices. The active voice focuses on the agent: "John measured the temperature." (Here, the agent — John — is the grammatical subject of the sentence.) In contrast, the passive voice focuses on the object that is acted upon: "The temperature was measured by John." (Here, the temperature, not John, is the grammatical subject of the sentence.)

To choose between active and passive voice, consider above all what you are discussing (your topic) and place it in the subject position. For example, should you write "The preprocessor sorts the two arrays" or "The two arrays are sorted by the preprocessor"? If you are discussing the preprocessor, the first sentence is the better option. In contrast, if you are discussing the arrays, the second sentence is better. If you are unsure what you are discussing, consider the surrounding sentences: Are they about the preprocessor or the two arrays?

The desire to be objective in scientific writing has led to an overuse of the passive voice, often accompanied by the exclusion of agents: "The temperature was measured" (with the verb at the end of the sentence). Admittedly, the agent is often irrelevant: No matter who measured the temperature, we would expect its value to be the same. However, a systematic preference for the passive voice is by no means optimal, for at least two reasons.

For one, sentences written in the passive voice are often less interesting or more difficult to read than those written in the active voice. A verb in the active voice does not require a person as the agent; an inanimate object is often appropriate. For example, the rather uninteresting sentence "The temperature was measured . . ." may be replaced by the more interesting "The measured temperature of 253°C suggests a secondary reaction in . . . ." In the second sentence, the subject is still temperature (so the focus remains the same), but the verb suggests is in the active voice. Similarly, the hard-to-read sentence "In this section, a discussion of the influence of the recirculating-water temperature on the conversion rate of . . . is presented" (long subject, verb at the end) can be turned into "This section discusses the influence of . . . ." The subject is now section, which is what this sentence is really about, yet the focus on the discussion has been maintained through the active-voice verb discusses.

As a second argument against a systematic preference for the passive voice, readers sometimes need people to be mentioned. A sentence such as "The temperature is believed to be the cause of . . ." is ambiguous. Readers will want to know who believes this: the authors of the paper, or the scientific community as a whole? To clarify the sentence, use the active voice and set the appropriate people as the subject, in either the third or the first person, as in the examples below.

- Biologists believe the temperature to be . . .

- Keustermans et al. (1997) believe the temperature to be . . .

- The authors believe the temperature to be . . .

- We believe the temperature to be . . .

Avoiding dangling verb forms

A verb form needs a subject, either expressed or implied. When the verb is in a non-finite form, such as an infinitive (to do) or a participle (doing), its subject is implied to be the subject of the clause, or sometimes the closest noun phrase. In such cases, construct your sentences carefully to avoid suggesting nonsense. Consider the following two examples.

- To dissect its brain, the affected fly was mounted on a . . .

- After aging for 72 hours at 50°C, we observed a shift in . . .

Here, the first sentence implies that the affected fly dissected its own brain, and the second implies that the authors of the paper needed to age for 72 hours at 50°C in order to observe the shift. To restore the intended meaning while keeping the infinitive to dissect or the participle aging, change the subject of each sentence as appropriate:

- To dissect its brain, we mounted the affected fly on a . . .

- After aging for 72 hours at 50°C, the samples exhibited a shift in . . .

Alternatively, you can change or remove the infinitive or participle to restore the intended meaning:

- To have its brain dissected, the affected fly was mounted on a . . .

- After the samples aged for 72 hours at 50°C, we observed a shift in . . .

In communication, every detail counts. Although your focus should be on conveying your message through an appropriate structure at all levels, you should also save some time to attend to the more mechanical aspects of writing in English, such as using abbreviations, writing numbers, capitalizing words, using hyphens when needed, and punctuating your text correctly.

Using abbreviations

Beware of overusing abbreviations, especially acronyms such as GNP for gold nanoparticles. Abbreviations help keep a text concise, but they can also render it cryptic. Many acronyms also have several possible extensions (GNP also stands for gross national product).

Write acronyms (and only acronyms) in all uppercase (GNP, not gnp).

Introduce acronyms systematically the first time they are used in a document. First write the full expression, then provide the acronym in parentheses. In the full expression, and unless the journal to which you submit your paper uses a different convention, capitalize the letters that form the acronym: "we prepared Gold NanoParticles (GNP) by . . . " These capitals help readers quickly recognize what the acronym designates.

- Do not use capitals in the full expression when you are not introducing an acronym: "we prepared gold nanoparticles by… "

- As a more general rule, use first what readers know or can understand best, then put in parentheses what may be new to them. If the acronym is better known than the full expression, as may be the case for techniques such as SEM or projects such as FALCON, consider placing the acronym first: "The FALCON (Fission-Activated Laser Concept) program at…"

- In the rare case that an acronym is commonly known, you might not need to introduce it. One example is DNA in the life sciences. When in doubt, however, introduce the acronym.

In papers, consider the abstract as a stand-alone document. Therefore, if you use an acronym in both the abstract and the corresponding full paper, introduce that acronym twice: the first time you use it in the abstract and the first time you use it in the full paper. However, if you find that you use an acronym only once or twice after introducing it in your abstract, the benefit of it is limited — consider avoiding the acronym and using the full expression each time (unless you think some readers know the acronym better than the full expression).

Writing numbers

In general, write single-digit numbers (zero to nine) in words, as in three hours, and multidigit numbers (10 and above) in numerals, as in 24 hours. This rule has many exceptions, but most of them are reasonably intuitive, as described below.

Use numerals for numbers from zero to nine

- when using them with abbreviated units (3 mV);

- in dates and times (3 October, 3 pm);

- to identify figures and other items (Figure 3);

- for consistency when these numbers are mixed with larger numbers (series of 3, 7, and 24 experiments).

Use words for numbers 10 and above if these numbers come at the beginning of a sentence or heading ("Two thousand eight was a challenging year for . . ."). As an alternative, rephrase the sentence to avoid this issue altogether ("The year 2008 was challenging for . . .").

Capitalizing words

Capitals are often overused. In English, use initial capitals

- at beginnings: the start of a sentence, of a heading, etc.;

- for proper nouns, including nouns describing groups (compare physics and the Physics Department);

- for items identified by their number (compare in the next figure and in Figure 2), unless the journal to which you submit your paper uses a different convention;

- for specific words: names of days (Monday) and months (April), adjectives of nationality (Algerian), etc.

In contrast, do not use initial capitals for common nouns: Resist the temptation to glorify a concept, technique, or compound with capitals. For example, write finite-element method (not Finite-Element Method), mass spectrometry (not Mass Spectrometry), carbon dioxide (not Carbon Dioxide), and so on, unless you are introducing an acronym (see Mechanics: Using abbreviations).

Using hyphens

Punctuating text

Punctuation has many rules in English; here are three that are often a challenge for non-native speakers.

As a rule, insert a comma between the subject of the main clause and whatever comes in front of it, no matter how short, as in "Surprisingly, the temperature did not increase." This comma is not always required, but it often helps and never hurts the meaning of a sentence, so it is good practice.

In series of three or more items, separate items with commas (red, white, and blue; yesterday, today, or tomorrow). Do not use a comma for a series of two items (black and white).