data science Recently Published Documents


Assessing the effects of fuel energy consumption, foreign direct investment and GDP on CO2 emission: New data science evidence from Europe & Central Asia

Documentation matters: human-centered AI system to assist data science code documentation in computational notebooks

Computational notebooks allow data scientists to express their ideas through a combination of code and documentation. However, data scientists often pay attention only to the code, and neglect creating or updating their documentation during quick iterations. Inspired by human documentation practices learned from 80 highly-voted Kaggle notebooks, we design and implement Themisto, an automated documentation generation system to explore how human-centered AI systems can support human data scientists in the machine learning code documentation scenario. Themisto facilitates the creation of documentation via three approaches: a deep-learning-based approach to generate documentation for source code, a query-based approach to retrieve online API documentation for source code, and a user prompt approach to nudge users to write documentation. We evaluated Themisto in a within-subjects experiment with 24 data science practitioners, and found that automated documentation generation techniques reduced the time for writing documentation, reminded participants to document code they would have ignored, and improved participants’ satisfaction with their computational notebook.

Data science in the business environment: Insight management for an Executive MBA

Adventures in financial data science

GeCoAgent: a conversational agent for empowering genomic data extraction and analysis

With the availability of reliable and low-cost DNA sequencing, human genomics is relevant to a growing number of end-users, including biologists and clinicians. Typical interactions require applying comparative data analysis to huge repositories of genomic information for building new knowledge, taking advantage of the latest findings in applied genomics for healthcare. Powerful technology for data extraction and analysis is available, but broad use of the technology is hampered by the complexity of accessing such methods and tools. This work presents GeCoAgent, a big-data service for clinicians and biologists. GeCoAgent uses a dialogic interface, animated by a chatbot, for supporting the end-users’ interaction with computational tools accompanied by multi-modal support. While the dialogue progresses, the user is accompanied in extracting the relevant data from repositories and then performing data analysis, which often requires the use of statistical methods or machine learning. Results are returned using simple representations (spreadsheets and graphics), while at the end of a session the dialogue is summarized in textual format. The innovation presented in this article is concerned with not only the delivery of a new tool but also our novel approach to conversational technologies, potentially extensible to other healthcare domains or to general data science.

Differentially Private Medical Texts Generation Using Generative Neural Networks

Technological advancements in data science have offered us affordable storage and efficient algorithms to query a large volume of data. Our health records are a significant part of this data, which is pivotal for healthcare providers and can be utilized in our well-being. The clinical note in electronic health records is one such category that collects a patient’s complete medical information during different timesteps of patient care available in the form of free-texts. Thus, these unstructured textual notes contain events from a patient’s admission to discharge, which can prove to be significant for future medical decisions. However, since these texts also contain sensitive information about the patient and the attending medical professionals, such notes cannot be shared publicly. This privacy issue has thwarted timely discoveries on this plethora of untapped information. Therefore, in this work, we intend to generate synthetic medical texts from a private or sanitized (de-identified) clinical text corpus and analyze their utility rigorously in different metrics and levels. Experimental results promote the applicability of our generated data as it achieves more than 80% accuracy in different pragmatic classification problems and matches (or outperforms) the original text data.

Impact on Stock Market across Covid-19 Outbreak

Abstract: This paper analyses the impact of the pandemic on global stock exchanges. Stock listing values are determined by a variety of factors, including seasonal changes, catastrophic calamities, pandemics, fiscal year changes, and many more. This paper analyses the variation in listing prices over the worldwide outbreak of the novel coronavirus. The key reason for focusing on this outbreak was to provide a notion of the underlying regulation of stock exchanges. Daily closing prices of the stock indices from January 2017 to January 2022 have been used for the analysis. The predominant aim of the research is to analyse whether a downturn in the global economy affects the financial stock exchange. Keywords: Stock Exchange, Matplotlib, Streamlit, Data Science, Web scraping.

Information Resilience: the nexus of responsible and agile approaches to information use

Abstract: The appetite for effective use of information assets has been steadily rising in both public and private sector organisations. However, whether the information is used for social good or commercial gain, there is a growing recognition of the complex socio-technical challenges associated with balancing the diverse demands of regulatory compliance and data privacy, social expectations and ethical use, business process agility and value creation, and scarcity of data science talent. In this vision paper, we present a series of case studies that highlight these interconnected challenges, across a range of application areas. We use the insights from the case studies to introduce Information Resilience, as a scaffold within which the competing requirements of responsible and agile approaches to information use can be positioned. The aim of this paper is to develop and present a manifesto for Information Resilience that can serve as a reference for future research and development in relevant areas of responsible data management.

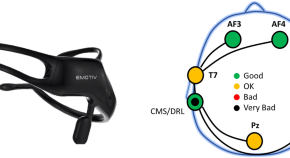

qEEG Analysis in the Diagnosis of Alzheimer’s Disease: A Comparison of Functional Connectivity and Spectral Analysis

Alzheimer’s disease (AD) is a brain disorder that is mainly characterized by a progressive degeneration of neurons in the brain, causing a decline in cognitive abilities and difficulties in engaging in day-to-day activities. This study compares an FFT-based spectral analysis against a functional connectivity analysis based on phase synchronization, for finding known differences between AD patients and Healthy Control (HC) subjects. Both of these quantitative analysis methods were applied on a dataset comprising bipolar EEG montage values from 20 diagnosed AD patients and 20 age-matched HC subjects. Additionally, an attempt was made to localize the identified AD-induced brain activity effects in AD patients. The obtained results showed the advantage of the functional connectivity analysis method compared to a simple spectral analysis. Specifically, while spectral analysis could not find any significant differences between the AD and HC groups, the functional connectivity analysis showed statistically higher synchronization levels in the AD group in the lower frequency bands (delta and theta), suggesting that the AD patients’ brains are in a phase-locked state. Further comparison of functional connectivity between the homotopic regions confirmed that the traits of AD were localized in the centro-parietal and centro-temporal areas in the theta frequency band (4-8 Hz). The contribution of this study is that it applies a neural metric for Alzheimer’s detection from a data science perspective rather than from a neuroscience one. The study shows that the combination of bipolar derivations with phase synchronization yields similar results to comparable studies employing alternative analysis methods.

Big Data Analytics for Long-Term Meteorological Observations at Hanford Site

A growing number of physical objects with embedded sensors with typically high volume and frequently updated data sets has accentuated the need to develop methodologies to extract useful information from big data for supporting decision making. This study applies a suite of data analytics and core principles of data science to characterize near real-time meteorological data with a focus on extreme weather events. To highlight the applicability of this work and make it more accessible from a risk management perspective, a foundation for a software platform with an intuitive Graphical User Interface (GUI) was developed to access and analyze data from a decommissioned nuclear production complex operated by the U.S. Department of Energy (DOE, Richland, USA). Exploratory data analysis (EDA), involving classical non-parametric statistics, and machine learning (ML) techniques, were used to develop statistical summaries and learn characteristic features of key weather patterns and signatures. The new approach and GUI provide key insights into using big data and ML to assist site operation related to safety management strategies for extreme weather events. Specifically, this work offers a practical guide to analyzing long-term meteorological data and highlights the integration of ML and classical statistics to applied risk and decision science.

Export Citation Format

Share document.

Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

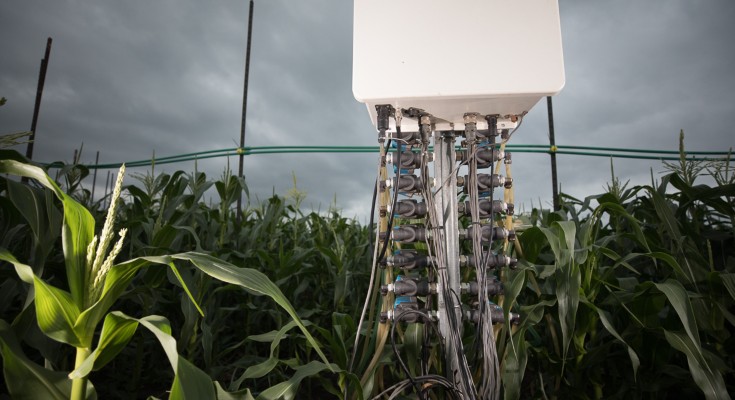

Two decades of fumigation data from the Soybean Free Air Concentration Enrichment facility

- Elise Kole Aspray

- Timothy A. Mies

- Elizabeth A. Ainsworth

Announcements

Collection open for submissions

Scientific Data is open to submissions for this special collection: Meteorology and hydroclimate observations and models

Scientific Data is open to submissions for this special collection: Genomics data for plant ecology, conservation and agriculture

Scientific Data is open to submissions for this special collection: Medical imaging data for digital diagnostics


Latest Research articles

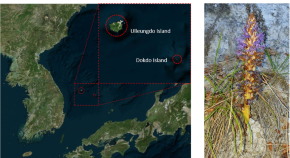

Chromosome-level genome assembly of Korean holoparasitic plants, Orobanche coerulescens

- Bongsang Kim

- So Yun Jhang

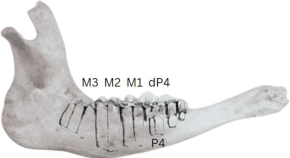

An international, open-access dataset of dental wear patterns and associated broad age classes in archaeological cattle mandibles

- Umberto Albarella

Chromosome-level genome assembly of Helwingia omeiensis: the first genome in the family Helwingiaceae

SignEEG v1.0: Multimodal Dataset with Electroencephalography and Hand-written Signature for Biometric Systems

- Ashish Ranjan Mishra

- Rakesh Kumar

- Rajkumar Saini

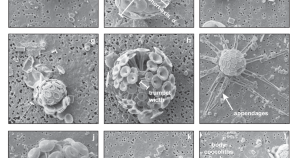

Cellular morphological trait dataset for extant coccolithophores from the Atlantic Ocean

- Rosie M. Sheward

- Alex J. Poulton

- Jens O. Herrle

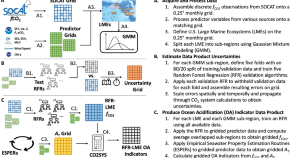

A mapped dataset of surface ocean acidification indicators in large marine ecosystems of the United States

- Jonathan D. Sharp

- Li-Qing Jiang

- Scott L. Cross

News & Comment

Motion-BIDS: an extension to the brain imaging data structure to organize motion data for reproducible research

We present an extension to the Brain Imaging Data Structure (BIDS) for motion data. Motion data is frequently recorded alongside human brain imaging and electrophysiological data. The goal of Motion-BIDS is to make motion data interoperable across different laboratories and with other data modalities in human brain and behavioral research. To this end, Motion-BIDS standardizes the data format and metadata structure. It describes how to document experimental details, considering the diversity of hardware and software systems for motion data. This promotes findable, accessible, interoperable, and reusable data sharing and Open Science in human motion research.

- Helena Cockx

- Julius Welzel

Strategizing Earth Science Data Development

Developing Earth science data products that meet the needs of diverse users is a challenging task for both data producers and service providers, as user requirements can vary significantly and evolve over time. In this comment, we discuss several strategies to improve Earth science data products that everyone can use.

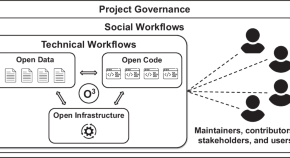

The O3 guidelines: open data, open code, and open infrastructure for sustainable curated scientific resources

Curated resources that support scientific research often go out of date or become inaccessible. This can happen for several reasons including lack of continuing funding, the departure of key personnel, or changes in institutional priorities. We introduce the Open Data, Open Code, Open Infrastructure (O3) Guidelines as an actionable road map to creating and maintaining resources that are less susceptible to such external factors and can continue to be used and maintained by the community that they serve.

- Charles Tapley Hoyt

- Benjamin M. Gyori

Beyond NGS data sharing for plant ecological resilience and improvement of agronomic traits

- Jayabalan Shilpha

- Seon-In Yeom

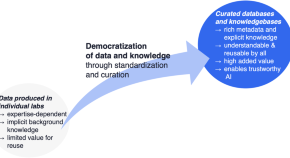

AI and the democratization of knowledge

The solution of the longstanding “protein folding problem” in 2021 showcased the transformative capabilities of AI in advancing the biomedical sciences. AI was characterized as successfully learning from protein structure data, which then spurred a more general call for AI-ready datasets to drive forward medical research. Here, we argue that it is the broad availability of knowledge, not just data, that is required to fuel further advances in AI in the scientific domain. This represents a quantum leap in a trend toward knowledge democratization that had already been developing in the biomedical sciences: knowledge is no longer primarily applied by specialists in a sub-field of biomedicine, but rather multidisciplinary teams, diverse biomedical research programs, and now machine learning. The development and application of explicit knowledge representations underpinning democratization is becoming a core scientific activity, and more investment in this activity is required if we are to achieve the promise of AI.

- Christophe Dessimoz

- Paul D. Thomas

A Framework for the Interoperability of Cloud Platforms: Towards FAIR Data in SAFE Environments

As the number of cloud platforms supporting scientific research grows, there is an increasing need to support interoperability between two or more cloud platforms. A well accepted core concept is to make data in cloud platforms Findable, Accessible, Interoperable and Reusable (FAIR). We introduce a companion concept that applies to cloud-based computing environments that we call a Secure and Authorized FAIR Environment (SAFE). SAFE environments require data and platform governance structures and are designed to support the interoperability of sensitive or controlled access data, such as biomedical data. A SAFE environment is a cloud platform that has been approved through a defined data and platform governance process as authorized to hold data from another cloud platform and exposes appropriate APIs for the two platforms to interoperate.

- Robert L. Grossman

- Rebecca R. Boyles

Trending - Altmetric

A global multiproxy database for temperature reconstructions of the Common Era

Transcriptome sequencing of seven deep marine invertebrates

CODC-v1: a quality-controlled and bias-corrected ocean temperature profile database from 1940–2023

Electric vehicle charging stations in the workplace with high-resolution data from casual and habitual users

This journal is a member of and subscribes to the principles of the Committee on Publication Ethics.


Journal of Big Data

Featured Collections on Computationally Intensive Problems in General Math and Engineering

This two-part special issue covers computationally intensive problems in engineering and focuses on mathematical mechanisms of interest for emerging problems such as Partial Difference Equations, Tensor Calculus, Mathematical Logic, and Algorithmic Enhancements based on Artificial Intelligence. Applications of the research highlighted in the collection include, but are not limited to: Earthquake Engineering, Spatial Data Analysis, Geo Computation, Geophysics, Genomics and Simulations for Nature Based Construction, and Aerospace Engineering. Featured lead articles are co-authored by three esteemed Nobel laureates: Jean-Marie Lehn, Konstantin Novoselov, and Dan Shechtman.

Open Special Issues

Advancements on Automated Data Platform Management, Orchestration, and Optimization Submission Deadline: 30 September 2024

Emergent architectures and technologies for big data management and analysis Submission Deadline: 1 October 2024

Most recent articles

New custom rating for improving recommendation system performance

Authors: Tora Fahrudin and Dedy Rahman Wijaya

Optimization-based convolutional neural model for the classification of white blood cells

Authors: Tulasi Gayatri Devi and Nagamma Patil

Advanced RIME architecture for global optimization and feature selection

Authors: Ruba Abu Khurma, Malik Braik, Abdullah Alzaqebah, Krishna Gopal Dhal, Robertas Damaševičius and Bilal Abu-Salih

Feature reduction for hepatocellular carcinoma prediction using machine learning algorithms

Authors: Ghada Mostafa, Hamdi Mahmoud, Tarek Abd El-Hafeez and Mohamed E. ElAraby

Data oversampling and imbalanced datasets: an investigation of performance for machine learning and feature engineering

Authors: Muhammad Mujahid, EROL Kına, Furqan Rustam, Monica Gracia Villar, Eduardo Silva Alvarado, Isabel De La Torre Diez and Imran Ashraf

Most accessed articles

A survey on Image Data Augmentation for Deep Learning

Authors: Connor Shorten and Taghi M. Khoshgoftaar

Big data in healthcare: management, analysis and future prospects

Authors: Sabyasachi Dash, Sushil Kumar Shakyawar, Mohit Sharma and Sandeep Kaushik

Review of deep learning: concepts, CNN architectures, challenges, applications, future directions

Authors: Laith Alzubaidi, Jinglan Zhang, Amjad J. Humaidi, Ayad Al-Dujaili, Ye Duan, Omran Al-Shamma, J. Santamaría, Mohammed A. Fadhel, Muthana Al-Amidie and Laith Farhan

Deep learning applications and challenges in big data analytics

Authors: Maryam M Najafabadi, Flavio Villanustre, Taghi M Khoshgoftaar, Naeem Seliya, Randall Wald and Edin Muharemagic

Short-term stock market price trend prediction using a comprehensive deep learning system

Authors: Jingyi Shen and M. Omair Shafiq


Annual Journal Metrics

2022 Citation Impact

- 8.1: 2-year Impact Factor

- 5.095: SNIP (Source Normalized Impact per Paper)

- 2.714: SJR (SCImago Journal Rank)

2023 Speed

- 56 days: submission to first editorial decision for all manuscripts (median)

- 205 days: submission to accept (median)

2023 Usage

- 2,559,548 downloads

- 280 Altmetric mentions


- ISSN: 2196-1115 (electronic)


Open Access

Eleven quick tips for finding research data

Contributed equally to this work with: Kathleen Gregory, Siri Jodha Khalsa, William K. Michener, Fotis E. Psomopoulos, Anita de Waard, Mingfang Wu

Affiliation Data Archiving and Networked Services, Royal Netherlands Academy of Arts and Sciences, The Hague, Netherlands

Affiliation National Snow and Ice Data Center, Cooperative Institute for Research in Environmental Sciences, University of Colorado, Boulder, Colorado, United States of America

Affiliation College of University Libraries & Learning Sciences, The University of New Mexico, Albuquerque, New Mexico, United States of America

Affiliation Institute of Applied Biosciences, Centre for Research and Technology Hellas, Thessaloniki, Greece

Affiliation Research Data Management Solutions, Elsevier, Jericho, Vermont, United States of America

* E-mail: [email protected]

Affiliation Australian National Data Service, Melbourne, Australia


Published: April 12, 2018

- https://doi.org/10.1371/journal.pcbi.1006038


Citation: Gregory K, Khalsa SJ, Michener WK, Psomopoulos FE, de Waard A, Wu M (2018) Eleven quick tips for finding research data. PLoS Comput Biol 14(4): e1006038. https://doi.org/10.1371/journal.pcbi.1006038

Editor: Francis Ouellette, Genome Quebec, CANADA

Copyright: © 2018 Gregory et al. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: William K. Michener was supported by NSF (#IIA-1301346 and #ACI-1430508). The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

This is a PLOS Computational Biology Education paper.

Introduction

Over the past decades, science has experienced rapid growth in the volume of data available for research, from a relative paucity of data in many areas to what has been recently described as a data deluge [1]. Data volumes have increased exponentially across all fields of science and human endeavour, including data from sky, earth, and ocean observatories; social media such as Facebook and Twitter; wearable health-monitoring devices; gene sequences and protein structures; and climate simulations [2]. This brings opportunities to enable more research, especially cross-disciplinary research that could not be done before. However, it also introduces challenges in managing, describing, and making data findable, accessible, interoperable, and reusable by researchers [3].

When this vast amount and variety of data is made available, finding relevant data to meet a research need is increasingly a challenge. In the past, when data were relatively sparse, researchers discovered existing data by searching literature, attending conferences, and asking colleagues. In today’s data-rich environment, with accompanying advances in computational and networking technologies, researchers increasingly conduct web searches to find research data. The success of such searches varies greatly and depends to a large degree on the expertise of the person looking for data, the tools used, and, partially, on luck. This article offers the following 11 quick tips that researchers can follow to more effectively and precisely discover data that meet their specific needs.

- Tip 1: Think about the data you need and why you need them.

- Tip 2: Select the most appropriate resource.

- Tip 3: Construct your query strategically.

- Tip 4: Make the repository work for you.

- Tip 5: Refine your search.

- Tip 6: Assess data relevance and fitness for use.

- Tip 7: Save your search and data-source details.

- Tip 8: Look for data services, not just data.

- Tip 9: Monitor the latest data.

- Tip 10: Treat sensitive data responsibly.

- Tip 11: Give back (cite and share data).

Tip 1: Think about the data you need and why you need them

Before embarking on a search for data, consider how you will use the desired data in the context of your overall research question. Are you seeking data for comparison or validation, as the basis for a new study, or for another reason? List the characteristics that the data must have in order to fulfil your identified purpose(s), including requirements such as data format, spatial or temporal coverage, availability, and author or research group. In many cases, your initial data requirements and the identified constraints will change as you progress with the search. Pausing to first analyse what you need and why you need it can lead to a more analytic search, saving searching time and facilitating the actions described in Tips 2–6.

Tip 2: Select the most appropriate resource

Directories of research-data repositories, such as re3data.org ( http://www.re3data.org ) and FAIRsharing ( https://fairsharing.org ), web search engines, and colleagues can be consulted to discover domain-specific portals in your discipline. Subject domain is but one criterion to consider when selecting an appropriate data repository. Various certification processes have also been implemented to help develop trustworthiness in repositories and to make their data-governing policies more transparent. For example, repositories earning the CoreTrustSeal ( https://www.coretrustseal.org/about ) Trustworthy Data Repository certification must meet 16 requirements measuring the accessibility, usability, reliability, and long-term stability of their data. Knowing what standards and criteria a repository applies to data and metadata provides more confidence in understanding and reusing the data from that repository.

Domain-specific portals provide ways to quickly narrow your search, offering interfaces and filters tailored to match the data and needs of specific disciplinary domains. Map interfaces for data collected from specific locations (see the National Water Information System, https://maps.waterdata.usgs.gov/mapper/index.html ) and specific search fields and tools (see the National Center for Biotechnology Information’s complement of databases, https://www.ncbi.nlm.nih.gov/guide/all/ ) facilitate discovering disciplinary data. Other domain-focused repositories, such as the National Snow and Ice Data Center (NSIDC, http://nsidc.org/data/search/ ), collect and apply knowledge about user requirements and incorporate domain semantics into their search engines to help data seekers quickly find appropriate data. Data aggregators, including DataONE ( https://www.dataone.org ) for environmental and earth observation data, VertNet ( http://vertnet.org ) and Global Biodiversity Information Facility (GBIF, https://www.gbif.org ) for museum specimen and biodiversity data, or DataMed ( https://datamed.org ) for biomedical datasets, enable searching multiple data repositories or collections through a single search interface. Some portals may not provide data-search functionality but instead provide a catalogue of data resources. A notable example is the AgBioData ( https://www.agbiodata.org/databases ) portal, which lists links to 12 agricultural biological databases dedicated to specific species (e.g., cotton, grain, or hardwood), where you can directly search for data.

The accessibility of data resources is another important consideration. University librarians can provide advice about particular subscription-based resources available at your institution. Research papers in your field can also point to available data repositories. In domains such as astronomy and genomics, for example, citations of datasets within journal articles are commonplace. These references usually include dataset access information that can be used to locate datasets of interest or to point toward data repositories favoured within a discipline.

Tip 3: Construct your query strategically

Describing your desired data effectively is key to communicating with the search system. Your description will determine if relevant data are retrieved and may inform the order of the hits in the results list. Help pages provide tips on how to construct basic and advanced searches within particular repositories (see, for example, Research Data Australia, https://researchdata.ands.org.au ; click on Advanced Search → Help). Note that not all repositories operate in the same manner. Some portals, such as DataONE ( https://www.dataone.org ), use semantic technologies to automatically expand the keywords entered in the search box to include synonyms. If a portal does not use automatic expansion, you may need to manually add various synonyms to your search query (e.g., in addition to ‘demography’ as a search term, one might also add ‘population density’, ‘population growth’, ‘census’, or ‘anthropology’).

- sea level (site:.edu)
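Manual synonym expansion and a site restriction of the kind shown above can be sketched in a few lines of Python. This is only an illustration: the helper name `build_query` is hypothetical, and it assumes the target search system accepts `OR`, quoted phrases, and a `site:` operator, as many general web search engines do. Repository portals differ, so check the portal's help page first.

```python
def build_query(term, synonyms=(), site=None):
    """Combine a term with its synonyms using OR and optionally restrict to a site.

    Assumes the search system understands OR, quoted phrases, and `site:`.
    """
    phrases = [term, *synonyms]
    # Quote multi-word phrases so they are matched as exact phrases.
    quoted = [f'"{p}"' if " " in p else p for p in phrases]
    query = " OR ".join(quoted)
    if len(quoted) > 1:
        # Group the alternatives so a trailing operator applies to all of them.
        query = f"({query})"
    if site:
        query = f"{query} site:{site}"
    return query


print(build_query("demography", ["population density", "census"]))
print(build_query("sea level", site=".edu"))
```

Keeping the synonym list in code makes it easy to reuse the same query across several portals and to record exactly what was searched.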

Tip 4: Make the repository work for you

Repository developers invest significant time and energy organizing data in ways to make them more discoverable; use their work to your advantage. Familiarize yourself with the controlled vocabularies, subject categories, and search fields used in particular repositories. Searching for and successfully locating data is dependent on the information about the data, termed metadata, that are contained in these fields; this is particularly true for numeric or nontextual data. Browsing subject categories can also help to gauge the appropriateness of a resource, home in on an area of interest, or find related data that have been classified in the same category.

Researchers can also register or create profiles with many data repositories. By registering, you may be able to indicate your general research data interests, which can then be used in subsequent searches, or to receive alerts about datasets that you have previously downloaded (see also Tip 7).

Tip 5: Refine your search

In many cases, your initial search may not retrieve relevant data or all of the data that you need. Based on the retrieved results, you may need to broaden or narrow your approach. Apart from rephrasing your search query and using search operators, as discussed in Tip 3, facets or filters specific to individual repositories can be used to narrow the scope of your results. Refinements such as data format, types of analysis, and data availability allow users to quickly find usable data.

Examining results that look interesting (for example, by clicking on links for ‘more information’) can be a signal of the type of information that you find relevant. These results can then be linked to related ones (e.g., from the data provider, from different time series), and in subsequent searches, other results algorithmically determined to be related will be brought to the top of the results list.
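When a result list has been exported or retrieved as structured records, the same faceted narrowing can be reproduced locally. The sketch below is a minimal illustration, not any repository's actual interface: the field names (`format`, `access`) and the sample records are made up for the example.

```python
def apply_facets(records, **facets):
    """Keep only the records whose fields equal every requested facet value."""
    return [
        record
        for record in records
        if all(record.get(field) == value for field, value in facets.items())
    ]


# Hypothetical search results; real portals use their own field names.
results = [
    {"title": "Tide gauge records", "format": "CSV", "access": "open"},
    {"title": "Sea level model output", "format": "NetCDF", "access": "open"},
    {"title": "Coastal survey", "format": "CSV", "access": "restricted"},
]

narrowed = apply_facets(results, format="CSV", access="open")
print([record["title"] for record in narrowed])
```

Calling `apply_facets` with fewer facets broadens the result set again, which mirrors loosening filters in a portal.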

Tip 6: Assess data relevance and fitness for use

Conduct a preliminary assessment of the retrieved data prior to investing time in subsequent data download, integration, and analytic and visualization efforts. A quick perusal of the metadata (text and/or images) can often enable you to verify that the data satisfy the initial requirements and constraints set forth in Tip 1 (e.g., spatial, temporal, and thematic coverage and data-sharing restrictions). Ideally, the metadata will also contain documentation sufficient to comprehensively assess the relevance and fitness for use of the data, including information about how the data were collected and quality assured, how the data have been previously used, etc. Some data repositories such as the National Science Foundation’s Arctic Data Center ( https://arcticdata.io ) enable the data seeker to generate and download a metadata quality report that assesses how well the metadata adhere to community best practices for discovery and reusability. Clearly, if none of your criteria for data are met, you may not wish to download and use the associated data.

Attention should also be paid to quality parameters or flags within the data files. Make use of a visualization tool or statistics analysis tool, if provided, to examine quality or fitness of data for intended use before downloading data, especially if the data volume is large and the dataset includes many files.
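As a rough illustration of checking quality flags before committing to a full download, the sketch below counts flag values in a small sample of a data file. The column names (`quality_flag`, `temperature_c`) and the flag vocabulary (`good`, `suspect`, `bad`) are assumptions made for this example; real data products define their own flag schemes in their documentation.

```python
import csv
import io
from collections import Counter

# Hypothetical sample of a data file; column names and flag values are
# assumptions for illustration, not taken from any particular repository.
SAMPLE = """timestamp,temperature_c,quality_flag
2020-01-01T00:00,4.1,good
2020-01-01T01:00,4.3,good
2020-01-01T02:00,-999,bad
2020-01-01T03:00,4.0,suspect
"""


def summarize_flags(csv_text, flag_column="quality_flag"):
    """Count how many rows carry each quality flag."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[flag_column] for row in reader)


def usable_fraction(flag_counts, accepted=("good",)):
    """Return the share of rows whose flag is in the accepted set."""
    total = sum(flag_counts.values())
    if total == 0:
        return 0.0
    return sum(flag_counts[flag] for flag in accepted) / total


flag_counts = summarize_flags(SAMPLE)
print(dict(flag_counts))
print(usable_fraction(flag_counts))
```

Running such a summary on a small subset first can indicate whether enough of the records meet your quality threshold to justify downloading the full dataset.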

Tip 7: Save your search and data-source details

Record the data source and data version if you access or download a data product. This may be accomplished by noting the persistent identifier, such as a digital object identifier (DOI) or another globally unique identifier (GUID) assigned to the data. Such information may be needed when you publish a paper that builds on the data you accessed. Recording the URL from which you obtained the data can be a quick way of returning to it, but a URL should not be trusted to provide access in the long term, as URLs can change. It is also good practice to save a copy of any original data products that you downloaded [ 5 ]. You may, for example, need to go back to the original data sources and check whether there have been any changes or corrections to the data. Registering with the data portal (as described in Tip 3) or registering as a user of a specific data product allows the repository to contact you when necessary. For example, if errors are found in the original data, the data service can contact you so that you can check whether they affect any research conclusions that you have drawn from the data.
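One possible way to keep these details together is a small provenance record stored next to the saved copy of the data. The sketch below is only an illustration: the field names, the placeholder identifier, and the example URL are assumptions, and the SHA-256 checksum is one common way (not the only one) to detect later whether a file differs from the copy you originally downloaded.

```python
import hashlib
import json
from datetime import datetime, timezone


def provenance_record(data_bytes, identifier, version, source_url):
    """Build a small record describing a downloaded data product."""
    return {
        "identifier": identifier,      # persistent identifier, e.g. a DOI
        "version": version,            # data version as stated by the provider
        "source_url": source_url,      # convenient, but may change over time
        "retrieved_utc": datetime.now(timezone.utc).isoformat(),
        "sha256": hashlib.sha256(data_bytes).hexdigest(),
        "size_bytes": len(data_bytes),
    }


# Placeholder content and identifiers, used only for illustration.
data = b"site,year,value\nA,2020,1.5\n"
record = provenance_record(
    data,
    identifier="doi:10.xxxx/placeholder",
    version="1.0",
    source_url="https://example.org/dataset.csv",
)
print(json.dumps(record, indent=2))
```

Comparing the stored checksum with the checksum of a fresh download is a quick way to see whether the provider has changed or corrected the file.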

If you have registered with a portal, it may also be possible to save your searches, allowing you to resume your data search at a later time with all previously defined search criteria. Some portals use RESTful search interfaces, which means you can bookmark a results set or dataset and return to it later simply by going to the bookmark.
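To illustrate why such search results can be bookmarked, the sketch below encodes search criteria into a URL query string and reads them back. The base URL and the parameter names (`q`, `format`, `start`) are hypothetical; each portal documents its own parameters.

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def build_search_url(base_url, **criteria):
    """Encode search criteria into a query string (sorted for stable URLs)."""
    query = urlencode(sorted(criteria.items()))
    return f"{base_url}?{query}"


def read_criteria(url):
    """Recover the search criteria from a saved URL."""
    return {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}


# Hypothetical portal and parameters, for illustration only.
url = build_search_url(
    "https://example.org/search",
    q="sea ice extent",
    format="netcdf",
    start="2000-01-01",
)
print(url)
print(read_criteria(url))
```

Because the whole search is captured in the URL, saving the URL alongside your notes records the criteria you used as well as the location of the results.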

Tip 8: Look for data services, not just data

The data you seek may be available only via an application programming interface (API) or as linked data [ 6 ]. That is, instead of a file residing on a server, the data that best suits your purposes is provided as a service through an API. Examples of such services include the climate change projection data available through the NSW Climate Data Portal ( http://climatechange.environment.nsw.gov.au/Climate-projections-for-NSW/Download-datasets ), in which data are dynamically generated from a simulation model; Google Earth Engine ( https://earthengine.google.com ); or Amazon Web Services (AWS) public datasets ( https://aws.amazon.com/public-datasets/ ). Data made available from these services may not be searchable from general web search engines, but data services may be registered to data catalogues or federations such as Research Data Australia, DataONE, and other resources listed in re3data.org and FAIRsharing. Many repositories that host extremely large volumes of data such as sequencing, environmental observatory, and remotely sensed data provide access to tools, workflows, and computing resources that allow one to access, visualize, process, and download manageable subsets of the data. Often, the processing workflows that one might use to process and download a dataset can also be downloaded, saved, and used again in subsequent searches.

Tip 9: Monitor the latest data

One of the most effective ways to identify new data submissions is to monitor the latest literature, as many journals such as Nature , PLOS , Science , and others require that the data underlying a publication also be published in a public (e.g., Dataverse https://dataverse.org , Dryad http://datadryad.org , or Zenodo https://zenodo.org ) or discipline-based repository (e.g., EASY from Data Archiving and Networked Services [DANS] https://easy.dans.knaw.nl/ , GenBank https://www.ncbi.nlm.nih.gov/genbank/ , or PubChem https://pubchem.ncbi.nlm.nih.gov ).

In addition, many domain-based repositories, such as environmental observatories and sequencing databases, are constantly accepting similar types of data submissions. Publishers and some digital repositories also offer alerting services when new publications or data products are submitted. Depending on the resource, it may be possible to set up a recurring search API or a Rich Site Summary (RSS) feed to automatically monitor specific resources. For example, the NSIDC offers a subscription service where new data meeting a list of user-generated specifications are automatically pushed to a location specified by the user.
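As a minimal sketch of monitoring an RSS feed, the example below parses a feed document and returns items that have not been seen before and whose titles contain a keyword. The feed content is invented for illustration, and the sketch assumes a plain RSS 2.0 layout (`item` elements with `title` and `link` children); in practice you would first fetch the feed from the repository and persist the set of seen links between runs.

```python
import xml.etree.ElementTree as ET

# Invented feed content, used only to show the structure being parsed.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Example repository: new datasets</title>
    <item>
      <title>Sea ice thickness, 2021</title>
      <link>https://example.org/datasets/1</link>
    </item>
    <item>
      <title>Soil moisture survey</title>
      <link>https://example.org/datasets/2</link>
    </item>
  </channel>
</rss>
"""


def new_items(feed_xml, seen_links, keyword=None):
    """Return (title, link) pairs that are unseen and match the keyword."""
    root = ET.fromstring(feed_xml)
    results = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        link = item.findtext("link", default="")
        if link in seen_links:
            continue  # already reported in an earlier run
        if keyword and keyword.lower() not in title.lower():
            continue  # title does not mention the topic of interest
        results.append((title, link))
    return results


seen = {"https://example.org/datasets/2"}
matches = new_items(SAMPLE_FEED, seen, keyword="ice")
print(matches)
```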

Tip 10: Treat sensitive data responsibly

In most cases, after you have located relevant data, you can download them straight away. However, there are cases, such as for medical and health data, endangered and threatened species, and sacred objects and archaeological finds, where you can only see a data description (the metadata) and are not able to download the data directly due to access restrictions imposed to protect the privacy of individuals represented in the data or to safeguard locations and species from harm or unwanted attention. Guidance with respect to sensitive data is available through the 2003 Fort Lauderdale Agreement ( https://www.genome.gov/pages/research/wellcomereport0303.pdf ), the 2009 Toronto Agreement ( https://www.nature.com/articles/461168a ) [ 7 ], the Australian National Data Service ( http://www.ands.org.au/working-with-data/sensitive-data ), and individual institutional and society research ethics committees.

Sensitive data are often discoverable and accessible if identity and location information are anonymized. In other cases, an established data-access agreement specifies the technical requirements as well as the ethical and scientific obligations that accessing and using the data entail. Technical requirements may include aspects such as auditing data access at the local system, defining read-only access rights, and/or ensuring constraints for nonprivileged network access. You can still contact the data owner to explain your intended use and to discuss the conditions and legal restrictions associated with using sensitive data. Such contact may even lead to collaborative research between you and the data owner. Should you be granted access to the data, it is important to use the data ethically and responsibly [ 8 ] to ensure that no harm is done to individuals, species, or cultural heritage.

Tip 11: Give back (cite and share data)

There are three ways to give back to the community once you have sought, discovered, and used an existing data product. First, it is essential that you give proper attribution to the data creators (in some cases, the data owners) if you use others’ data for research, education, decision making, or other purposes [ 9 ]. Proper attribution benefits both data creators/providers and data seekers/users. Data creators/providers receive credit for their work, and their practice of sharing data is thus further encouraged. Data seekers/users make their own work more transparent and, potentially, reproducible by uniquely identifying and citing data used in their research.

Many data creators and institutions adopt standard licenses from organizations, such as Creative Commons, that govern how their data products may be shared and used. Creative Commons recommends that a proper attribution should include title, author, source, and license [ 10 ].
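The four recommended elements can be assembled into an attribution line with a small helper. The sketch below is one possible layout, not a format prescribed by Creative Commons, and the dataset details in the usage example are invented.

```python
def format_attribution(title, author, source, license_name):
    """Build an attribution line from title, author, source, and license."""
    fields = {
        "title": title,
        "author": author,
        "source": source,
        "license": license_name,
    }
    missing = [name for name, value in fields.items() if not value]
    if missing:
        raise ValueError("missing attribution fields: " + ", ".join(missing))
    return f'"{title}" by {author}, {source}, licensed under {license_name}.'


# Invented dataset details, for illustration only.
attribution = format_attribution(
    title="Example Stream Temperature Dataset",
    author="A. Researcher",
    source="https://example.org/datasets/42",
    license_name="CC BY 4.0",
)
print(attribution)
```

Raising an error when a field is missing is a deliberate choice here: an incomplete attribution is easy to overlook once it is embedded in a manuscript.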

Second, provide feedback to the data creators or the data repository about any issues associated with data accessibility, data quality, or metadata completeness and interpretability. Data creators and repositories benefit from knowing that their data products are understandable and usable by others, as well as knowing how the data were used. Future users of the data will also benefit from your feedback.

Third, virtually all data seekers and data users also generate data. The ultimate ‘give-back’ is to also share your data with the broader community.

This paper highlights 11 quick tips that, if followed, should make it easier for a data seeker to discover data that meet a particular need. Regardless of whether you are acting as a data seeker or a data creator, remember that ‘data discovery and reuse are most easily accomplished when: (1) data are logically and clearly organized; (2) data quality is assured; (3) data are preserved and discoverable via an open data repository; (4) data are accompanied by comprehensive metadata; (5) algorithms and code used to create data products are readily available; (6) data products can be uniquely identified and associated with specific data originator(s); and (7) the data originator(s) or data repository have provided recommendations for citation of the data product(s)’ [ 11 ].

Acknowledgments

This work was developed as part of the Research Data Alliance (RDA) ‘WG/IG’ entitled ‘Data Discovery Paradigms’, and we acknowledge the support provided by the RDA community and structures. We would like to thank members of the group for their support, especially Andrea Perego, Mustapha Mokrane, Susanna-Assunta Sansone, Peter McQuilton, and Michel Dumontier who read this paper and provided constructive suggestions.

- 1. Gray J. Jim Gray on eScience: A transformed scientific method. In: Hey T, Tansley S, Tolle K, editors. The Fourth Paradigm: Data-Intensive Scientific Discovery. Richmond, WA: Microsoft Research; 2009. p.xvii–xxxi. Available from: https://www.microsoft.com/en-us/research/publication/fourth-paradigm-data-intensive-scientific-discovery/ .

- 2. Fox G, Hey T, Trefethen A. Where does all the data come from? In: Kleese van Dam K, editor. Data-Intensive Science. Chapman and Hall/CRC; Boca Raton: Taylor and Francis, May 2013. p. 15–51.


- 4. Warner R. Google Advanced Search: A Comprehensive List of Google Search Operators [Internet]. 2015 [cited 2017 Oct 26]. Available from: https://bynd.com/news-ideas/google-advanced-search-comprehensive-list-google-search-operators/ .

- 6. Heath T, Bizer C. Linked Data: Evolving the Web into a global data space. In: Hendler J, van Harmelen F, editors. Synthesis Lectures on the Semantic Web: Theory and Technology. Morgan & Claypool; 2011. p. 1–136.

- 8. Clark K, et al. Guidelines for the Ethical Use of Digital Data in Human Research. www.carltonconnect.com.au: The University of Melbourne; 2015. Available from: https://www.carltonconnect.com.au/wp-content/uploads/2015/06/Ethical-Use-of-Digital-Data.pdf . [cited 2018 Feb. 1].

- 9. Martone M, editor. Data Citation Synthesis Group: Joint Declaration of Data Citation Principles. FORCE11. San Diego, CA; 2014. [cited 2018 Feb 1]. Available from: https://www.force11.org/group/joint-declaration-data-citation-principles-final .

- 10. Creative Commons. Best practices for attribution [Internet]. 2014 [cited 2017 Sep 10]. Available from: https://wiki.creativecommons.org/wiki/Best_practices_for_attribution .

- 11. Michener WK. Data discovery. In: Recknagel F, Michener WK, editors. Ecological informatics: Data management and knowledge discovery. Springer International Publishing, Cham, Switzerland; 2017.


Research Data Services


Data Papers & Data Journals


The rise of the "data paper"

Datasets are increasingly being recognized as scholarly products in their own right, and as such, are now being submitted for standalone publication. In many cases, the greatest value of a dataset lies in sharing it, not necessarily in providing interpretation or analysis. For example, this paper presents a global database of the abundance, biomass, and nitrogen fixation rates of marine diazotrophs. This benchmark dataset, which will continue to evolve over time, is a valuable standalone research product that has intrinsic value. Under traditional publication models, this dataset would not be considered "publishable" because it doesn't present novel research or interpretation of results. Data papers facilitate the sharing of data in a standardized framework that provides value, impact, and recognition for authors. Data papers also provide much more thorough context and description than datasets that are simply deposited to a repository (which may have very minimal metadata requirements).

What is a data paper?

Data papers thoroughly describe datasets and do not usually include any interpretation or discussion (an exception may be a discussion of the different methods used to collect the data). Some data papers are published in a distinct “Data Papers” section of a well-established journal (see this article in Ecology, for example). It is becoming more common, however, to see journals that focus exclusively on the publication of datasets. The purpose of a data journal is to provide quick access to high-quality datasets that are of broad interest to the scientific community. Data journals are intended to facilitate reuse of the dataset, which increases its original value and impact, and speeds the pace of research by avoiding unintentional duplication of effort.

Are data papers peer-reviewed?

Data papers typically go through a peer review process in the same manner as articles, but because data papers are new to scientific practice, the quality and scope of the process vary across publishers. A good example of a peer-reviewed data journal is Earth System Science Data ( ESSD ). Its review guidelines are well described and are not all that different from the manuscript review guidelines that we are all already familiar with.

You might wonder: What is the difference between a 'data paper' and a 'regular article + dataset published in a public repository'? The answer isn’t always clear. Some data papers necessitate just as much preparation as, and are of equal quality to, ‘typical’ journal articles. Some data papers are brief, and only present enough metadata and descriptive content to make the dataset understandable and reusable. In most cases, however, the datasets or databases presented in data papers include much more description than datasets deposited to a repository, even if those datasets were deposited to support a manuscript. Common practices and standards are evolving in the realm of data papers and data journals, but for now, they are the Wild West of data sharing.

Where do the data from data papers live?

Data preservation is a corollary of data papers, not their main purpose. Most data journals do not archive data in-house. Instead, they generally require that authors submit the dataset to a repository. These repositories archive the data, provide persistent access, and assign the dataset a unique identifier (DOI). Repositories do not always require that the dataset(s) be linked with a publication (data paper or ‘typical’ paper; Dryad does require one), but if you’re going to the trouble of submitting a dataset to a repository, consider exploring the option of publishing a data paper to support it.

How can I find data journals?

The article by Walters (2020) has a list of data journals in its appendix, and differentiates between "pure" data journals and journals that publish data reports but are devoted mainly to other types of contributions. It also updates previous lists of data journals ( Candela et al., 2015 ).

Walters, William H. 2020. “Data Journals: Incentivizing Data Access and Documentation Within the Scholarly Communication System”. Insights 33 (1): 18. DOI: http://doi.org/10.1629/uksg.510

Candela, L., Castelli, D., Manghi, P., & Tani, A. (2015). Data journals: A survey. Journal of the Association for Information Science and Technology , 66 (9), 1747–1762. https://doi.org/10.1002/asi.23358

This blog post by Katherine Akers , from 2014, also has a long list of existing data journals.


- Last Updated: Aug 30, 2023 9:25 AM

- URL: https://guides.library.oregonstate.edu/research-data-services


Sources of Data For Research: Types & Examples

Introduction

In the age of information, data has become the driving force behind decision-making and innovation. Whether in business, science, healthcare, or government, data serves as the foundation for insights and progress.

As a researcher, you need to understand the various sources of data as they are essential for conducting comprehensive and impactful studies. In this blog post, we will explore the primary data sources, their definitions, and examples to help you gather and analyze data effectively.

Primary Data Sources

Primary data sources refer to original data collected firsthand by researchers specifically for their research purposes. These sources provide fresh and relevant information tailored to the study’s objectives. Examples of primary data sources include surveys and questionnaires, direct observations, experiments, interviews, and focus groups.

Researchers use primary data to obtain accurate and specific insights into their research questions and to ensure that the data is directly relevant to their study. Collecting primary data also allows you to control the data collection process and to monitor the quality and reliability of the data behind your analyses and conclusions.

Examples of Primary Data Sources

- Surveys and questionnaires: Surveys and questionnaires are widely used data collection methods that allow you to gather information directly from respondents. Whether distributed online, through mail, or in person, surveys enable you to reach a large audience and collect quantitative data efficiently. However, it is crucial to design clear and unbiased questions to ensure the accuracy and reliability of responses.

- Observations: Direct observations involve systematically watching and recording events or behaviors as they occur. This method provides you with real-time data, offering unique insights into participants’ natural behavior and responses. It is particularly valuable in fields such as psychology, anthropology, and ecology, where understanding human or animal behavior is critical.

- Experiments: In an experiment, you deliberately manipulate variables to study cause-and-effect relationships. Because you control the variables, experiments can provide rigorous data and are often used in scientific research. They are well-suited for hypothesis testing and determining causal relationships.

- Interviews and focus groups : Qualitative data collected through interviews and focus groups give you an in-depth exploration of participants’ opinions, beliefs, and experiences. These methods help you to understand complex issues and gain rich insights that quantitative data alone may not capture or provide for your study.

Read More: What is Primary Data? + [Examples & Collection Methods]

Secondary Data Sources

As a researcher, you should also be familiar with secondary data sources. Secondary data sources involve data collected by someone else for purposes other than your specific research. Secondary data complements primary data and can provide valuable context and insights for your research.

Examples of Secondary Data Sources

- Published literature: Published literature refers to academic papers, books, and reports published by researchers and scholars in various fields. These publications are a rich source of secondary data: they contain valuable findings and analyses from previous studies, offering a foundation for new research and the ability to build upon existing knowledge. Reviewing published literature is essential for you to understand the current state of research in your area of study and identify gaps for further investigation.

- Government sources: Government agencies collect and maintain vast amounts of data on a wide range of topics. These datasets are often made available for public use and can be a valuable resource for researchers. For example, census data provides demographic information, economic indicators offer insights into the economy, and health records contribute to public health research. Government sources offer standardized and reliable data that can be used for various research purposes.

- Online databases: The internet has opened up access to a wealth of data through online databases, data repositories, and open data initiatives. These platforms host datasets on diverse subjects, making them easily accessible to you and other researchers worldwide. Online databases are particularly beneficial for conducting cross-disciplinary research or exploring topics beyond your immediate field of expertise.

- Market research reports: Market research companies conduct surveys and gather data to analyze market trends, consumer behavior, and industry insights. These reports provide valuable data for businesses and researchers seeking information on market dynamics and consumer preferences. Market research reports offer you a comprehensive view of industries and can inform strategic decisions.

Read More: What is Secondary Data? + [Examples, Sources & Analysis]

Tertiary Data Sources

In addition to primary and secondary data, you should be aware of tertiary data sources, which play a critical role in aggregating and organizing existing data from various origins. Tertiary data sources focus on collecting, curating, and preserving data for easy access and analysis.

Examples of Tertiary Data Sources

- Data aggregators: Data aggregators are companies or organizations that specialize in collecting and compiling data from multiple sources into centralized databases. These sources can include government agencies, research institutions, businesses, and other data providers. These aggregators offer a convenient way for you, a researcher, to access a vast amount of data on specific topics or industries. As they consolidate data from diverse sources, they provide you and other researchers with a comprehensive view of trends, patterns, and insights.

- Data brokers: Data brokers are entities that buy and sell data, often without the direct consent or knowledge of the individuals whose data is being traded. While data brokers can offer access to large datasets, their practices raise privacy and ethical concerns. As a researcher, you should be cautious when using data obtained through data brokers to ensure compliance with ethical guidelines and data protection laws.

- Data archives: Data archives serve as repositories for historical data and research findings. These archives are essential for preserving valuable information for future reference and analysis. They often contain datasets, reports, academic papers, and other research materials. Data archives ensure that data remains accessible for replication studies, verification of previous research, and the development of longitudinal analyses.

Emerging Data Sources

As you delve into the world of data collection, it’s important to know the emerging sources that have gained prominence in recent years. These newer data sources provide valuable insights and opportunities for research across various domains. Below are some of these emerging data sources:

- Internet of Things (IoT): The Internet of Things (IoT) has changed data collection in the 21st century through the everyday connection of devices and objects to the Internet. Smart devices like sensors, wearables, and home appliances generate vast amounts of data in real-time. For example, IoT devices in healthcare can monitor patients’ health metrics, while in agriculture, they can optimize irrigation and crop management. As a researcher, you can leverage IoT data to analyze patterns, predict trends, and make data-driven decisions.

- Social media and web data: Social media platforms and websites host a wealth of information generated by users worldwide. Analyzing social media posts and online reviews, and scraping the web, can give you valuable insights into public opinions, consumer behavior, and trends. You can run sentiment analysis, track customer preferences, and identify emerging topics using social media data. Web scraping allows for the extraction of data from websites, enabling researchers to gather large datasets for analysis.

- Sensor data: Sensor data is becoming increasingly relevant in various fields, including environmental monitoring, urban planning, and healthcare. Sensors are capable of measuring and collecting data on environmental parameters, traffic patterns, air quality, and more. This data helps you understand environmental changes, optimize urban infrastructure, and improve public health initiatives. Sensor networks offer a continuous stream of data that provides you with real-time and accurate information.

In conclusion, we have explored the diverse sources of data for research, such as primary data sources, secondary data sources, and tertiary data sources, which all play a crucial role in getting the accurate information needed for research. It is important that you understand the strengths and limitations of each data source.

As you embark on your research journey, explore and utilize these diverse data sources. If you leverage a combination of primary, secondary, and tertiary data, you can make informed decisions, drive progress in your field, and uncover novel insights that a single source might not reveal.


Research Methods | Definitions, Types, Examples

Research methods are specific procedures for collecting and analyzing data. Developing your research methods is an integral part of your research design . When planning your methods, there are two key decisions you will make.

First, decide how you will collect data . Your methods depend on what type of data you need to answer your research question :

- Qualitative vs. quantitative : Will your data take the form of words or numbers?

- Primary vs. secondary : Will you collect original data yourself, or will you use data that has already been collected by someone else?

- Descriptive vs. experimental : Will you take measurements of something as it is, or will you perform an experiment?

Second, decide how you will analyze the data .

- For quantitative data, you can use statistical analysis methods to test relationships between variables.

- For qualitative data, you can use methods such as thematic analysis to interpret patterns and meanings in the data.

Table of contents

- Methods for collecting data

- Examples of data collection methods

- Methods for analyzing data

- Examples of data analysis methods

- Other interesting articles

- Frequently asked questions about research methods

Data is the information that you collect for the purposes of answering your research question . The type of data you need depends on the aims of your research.

Qualitative vs. quantitative data

Your choice of qualitative or quantitative data collection depends on the type of knowledge you want to develop.

For questions about ideas, experiences and meanings, or to study something that can’t be described numerically, collect qualitative data .

If you want to develop a more mechanistic understanding of a topic, or your research involves hypothesis testing , collect quantitative data .


You can also take a mixed methods approach , where you use both qualitative and quantitative research methods.

Primary vs. secondary research

Primary research involves collecting original data yourself for the purposes of answering your research question (e.g. through surveys , observations and experiments ). Secondary research uses data that has already been collected by other researchers (e.g. in a government census or previous scientific studies).

If you are exploring a novel research question, you’ll probably need to collect primary data . But if you want to synthesize existing knowledge, analyze historical trends, or identify patterns on a large scale, secondary data might be a better choice.


Descriptive vs. experimental data

In descriptive research , you collect data about your study subject without intervening. The validity of your research will depend on your sampling method .

In experimental research , you systematically intervene in a process and measure the outcome. The validity of your research will depend on your experimental design .

To conduct an experiment, you need to be able to vary your independent variable , precisely measure your dependent variable, and control for confounding variables . If it’s practically and ethically possible, this method is the best choice for answering questions about cause and effect.



| Research method | Primary or secondary? | Qualitative or quantitative? | When to use |
|---|---|---|---|
| Experiment | Primary | Quantitative | To test cause-and-effect relationships. |
| Survey | Primary | Quantitative | To understand general characteristics of a population. |
| Interview/focus group | Primary | Qualitative | To gain more in-depth understanding of a topic. |
| Observation | Primary | Either | To understand how something occurs in its natural setting. |
| Literature review | Secondary | Either | To situate your research in an existing body of work, or to evaluate trends within a research topic. |
| Case study | Either | Either | To gain an in-depth understanding of a specific group or context, or when you don’t have the resources for a large study. |

Your data analysis methods will depend on the type of data you collect and how you prepare it for analysis.

Data can often be analyzed both quantitatively and qualitatively. For example, survey responses could be analyzed qualitatively by studying the meanings of responses or quantitatively by studying the frequencies of responses.
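The quantitative side of that survey example, studying the frequencies of responses, can be sketched in a few lines of Python. The answers below are invented for illustration, and `response_frequencies` is a hypothetical helper name, not part of any survey tool.

```python
from collections import Counter

# Hypothetical answers to one closed survey question (illustrative data only).
responses = ["agree", "neutral", "agree", "disagree", "agree", "neutral"]


def response_frequencies(answers):
    """Map each distinct answer to (count, share of all answers)."""
    counts = Counter(answers)
    total = len(answers)
    return {answer: (n, n / total) for answer, n in counts.items()}


frequencies = response_frequencies(responses)
for answer, (n, share) in sorted(frequencies.items()):
    print(f"{answer}: {n} ({share:.0%})")
```

A qualitative reading of the same survey would instead look at what respondents meant, for example by coding the themes in their free-text comments, which a frequency count cannot capture.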

Qualitative analysis methods

Qualitative analysis is used to understand words, ideas, and experiences. You can use it to interpret data that was collected:

- From open-ended surveys and interviews, literature reviews, case studies, ethnographies, and other sources that use text rather than numbers.

- Using non-probability sampling methods.

Qualitative analysis tends to be quite flexible and relies on the researcher’s judgement, so you have to reflect carefully on your choices and assumptions and be careful to avoid research bias.

Quantitative analysis methods

Quantitative analysis uses numbers and statistics to understand frequencies, averages and correlations (in descriptive studies) or cause-and-effect relationships (in experiments).
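As a minimal sketch of two of the descriptive statistics named above, the following Python computes an average and a Pearson correlation coefficient for a pair of variables. The data values are invented for illustration, and `pearson_r` is a hand-written helper for this example rather than a library function.

```python
import math
from statistics import mean

# Hypothetical paired measurements for five participants (illustrative only).
hours_studied = [1, 2, 3, 4, 5]
exam_scores = [52, 55, 61, 64, 68]


def pearson_r(xs, ys):
    """Pearson correlation: covariance scaled by both standard deviations."""
    mean_x, mean_y = mean(xs), mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / math.sqrt(spread_x * spread_y)


average_score = mean(exam_scores)
correlation = pearson_r(hours_studied, exam_scores)

print(f"average score: {average_score}")
print(f"correlation:   {correlation:.3f}")
```

A coefficient close to 1 or -1 indicates a strong linear relationship, but in a descriptive study it does not by itself show that one variable causes the other.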

You can use quantitative analysis to interpret data that was collected either:

- During an experiment.

- Using probability sampling methods.

Because the data is collected and analyzed in a statistically valid way, the results of quantitative analysis can be easily standardized and shared among researchers.

| Research method | Qualitative or quantitative? | When to use |
|---|---|---|
| Statistical analysis | Quantitative | To analyze data collected in a statistically valid manner (e.g. from experiments, surveys, and observations). |
| Meta-analysis | Quantitative | To statistically analyze the results of a large collection of studies. Can only be applied to studies that collected data in a statistically valid manner. |
| Thematic analysis | Qualitative | To analyze data collected from interviews, focus groups, or textual sources. To understand general themes in the data and how they are communicated. |
| Content analysis | Either | To analyze large volumes of textual or visual data collected from surveys, literature reviews, or other sources. Can be quantitative (i.e. frequencies of words) or qualitative (i.e. meanings of words). |


Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research. For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

In statistics, sampling allows you to test a hypothesis about the characteristics of a population.
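The student survey example can be sketched as a simple random sample, one of several probability sampling methods. The population size of 5,000, the ID format, and the function name `draw_simple_random_sample` are assumptions made for this illustration.

```python
import random

# Hypothetical sampling frame: one ID per enrolled student (illustrative only).
population = [f"student-{i:04d}" for i in range(1, 5001)]


def draw_simple_random_sample(frame, size, seed=None):
    """Select `size` distinct members of the frame, each equally likely."""
    rng = random.Random(seed)      # a seed makes the draw reproducible
    return rng.sample(frame, size)  # sampling without replacement


sample = draw_simple_random_sample(population, size=100, seed=42)
print(len(sample), "students selected out of", len(population))
```

Because every student has the same chance of selection, results from the sample can be generalized to the population with a quantifiable margin of error, which is not true of a convenience sample.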

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts and meanings, use qualitative methods.

- If you want to analyze a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how it is generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Methodology refers to the overarching strategy and rationale of your research project. It involves studying the methods used in your field and the theories or principles behind them, in order to develop an approach that matches your objectives.

Methods are the specific tools and procedures you use to collect and analyze data (for example, experiments, surveys, and statistical tests).

In shorter scientific papers, where the aim is to report the findings of a specific study, you might simply describe what you did in a methods section.

In a longer or more complex research project, such as a thesis or dissertation, you will probably include a methodology section, where you explain your approach to answering the research questions and cite relevant sources to support your choice of methods.



Research Data – Types Methods and Examples


Research Data

Research data refers to any information or evidence gathered through systematic investigation or experimentation to support or refute a hypothesis or answer a research question.

It includes both primary and secondary data, and can be in various formats such as numerical, textual, audiovisual, or visual. Research data plays a critical role in scientific inquiry and is often subject to rigorous analysis, interpretation, and dissemination to advance knowledge and inform decision-making.

Types of Research Data

There are generally four types of research data:

Quantitative Data

Quantitative data consists of numerical values. It is often gathered through surveys, experiments, or other structured data collection methods, and can be analyzed using statistical techniques to identify patterns or relationships.

Qualitative Data