Logic Models

CDC Approach to Evaluation

A logic model is a graphic depiction (road map) that presents the shared relationships among the resources, activities, outputs, outcomes, and impact for your program. It depicts the relationship between your program’s activities and its intended effects. Learn more about logic models and the key steps to developing a useful logic model on the CDC Program Evaluation Framework Checklist for Step 2 page.

For additional logic model information:

Division for Heart Disease and Stroke Prevention: Developing and Using a Logic Model


An official website of the United States government, Department of Justice.


Center for Research Partnerships and Program Evaluation (CRPPE)

Logic Models

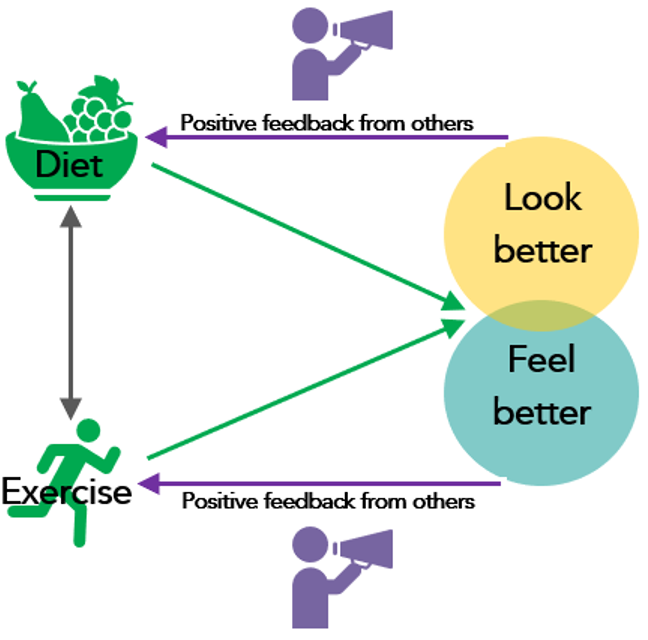

While there are many forms, logic models specify relationships among program goals, objectives, activities, outputs, and outcomes. Logic models are often developed using graphics or schematics and allow the program manager or evaluator to clearly indicate the theoretical connections among program components: that is, how program activities will lead to the accomplishment of objectives, and how accomplishing objectives will lead to the fulfillment of goals. In addition, logic models used for evaluation include the measures that will be used to determine if activities were carried out as planned (output measures) and if the program's objectives have been met (outcome measures).

Why Use a Logic Model?

Logic models are a useful tool for program development and evaluation planning for several reasons:

- They serve as a format for clarifying what the program hopes to achieve.

- They are an effective way to monitor program activities.

- They can be used for either performance measurement or evaluation.

- They help programs stay on track as well as plan for the future.

- They are an excellent way to document what a program intends to do and what it is actually doing.

Learn More about What a Logic Model Is and Why to Use It

Logic Model for Program Planning and Evaluation (University of Idaho-Extension)

How to Develop a Logic Model

Developing a logic model requires a program planner to think systematically about what they want their program to accomplish and how it will be done. The logic model should illustrate the linkages among the elements of the program including the goal, objectives, resources, activities, process measures, outcomes, outcome measures, and external factors.

Logic Model Schematic

The following logic model format and discussion were developed by the Juvenile Justice Evaluation Center (JJEC) and were maintained online by the Justice Research and Statistics Association (www.jrsa.org) from 1998 to 2007.

At the top of the logic model example is a goal: a broad, measurable statement describing the desired long-term impact of the program. Knowing the long-term achievements a program is expected to make helps determine what the overall program goal should be. Goals are not always achieved while a program is operating. Even so, evaluators or program planners should continually revisit the program's goals during program planning.

An objective is a more specific, measurable concept focused on the immediate or direct outcomes of the program that support accomplishment of your goal. Unlike goals, objectives should be achieved during the program. A clear objective will provide information concerning the direction, target, and timeframe for the program. Knowing what difference your program will make, who will be affected, and when will help you develop focused objectives for your program.

Resources or inputs can include staff, facilities, materials, funds, or anything else invested in the program to accomplish the work that must be done. The resources needed to conduct a program should be articulated during the early stages of program development to ensure that a program is realistically implemented and capable of meeting its stated goal(s).

Activities represent efforts conducted to achieve the program objectives. After considering the resources a program will need, the specific activities that will be used to bring about the intended changes or results must be determined.

Process Measures are data used to demonstrate the implementation of activities. These include products of activities and indicators of services provided. Process measures provide documentation of whether a program is being implemented as originally intended. For example, process measures for a mental health court program might include the number of treatment contacts or the type of treatment received.

Outcome measures represent the actual change(s) or lack thereof in the target (e.g., clients or system) of the program that are directly related to the goal(s) and objectives. Outcomes may include intended or unintended consequences. Three levels of outcomes to consider include:

External factors, located at the bottom of the logic model example, are factors within the system that may affect program operation. External factors vary according to program setting and may include influences such as development of or revisions to state/federal laws, unexpected changes in data sharing procedures, or other similar simultaneously running programs. It is important to think about external factors that might change how your program operates or affect program outcomes. External factors should be included during the development of the logic model so that they can be taken into account when assessing program operations or when interpreting the absence or presence of program changes.
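To make the schematic concrete, the elements described above can be written down as a small data structure. The following is a minimal sketch, not the JJEC format itself: the class names, field names, and the goal, objective, activity, and outcome measure shown are hypothetical illustrations. Only the process measures come from the mental health court example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Objective:
    """One objective together with the elements tied to it."""
    statement: str
    activities: List[str] = field(default_factory=list)
    process_measures: List[str] = field(default_factory=list)
    outcome_measures: List[str] = field(default_factory=list)


@dataclass
class LogicModel:
    """Goal at the top, external factors at the bottom, as in the schematic."""
    goal: str
    resources: List[str] = field(default_factory=list)
    objectives: List[Objective] = field(default_factory=list)
    external_factors: List[str] = field(default_factory=list)

    def outline(self) -> str:
        """Return a plain-text outline of the model, one element per line."""
        lines = [f"Goal: {self.goal}", "Resources: " + ", ".join(self.resources)]
        for number, objective in enumerate(self.objectives, start=1):
            lines.append(f"Objective {number}: {objective.statement}")
            lines.append("  Activities: " + ", ".join(objective.activities))
            lines.append("  Process measures: " + ", ".join(objective.process_measures))
            lines.append("  Outcome measures: " + ", ".join(objective.outcome_measures))
        lines.append("External factors: " + ", ".join(self.external_factors))
        return "\n".join(lines)


model = LogicModel(
    goal="Reduce re-arrest among court participants",  # hypothetical goal
    resources=["staff", "facilities", "funds"],
    objectives=[
        Objective(
            statement="Increase treatment engagement within 12 months",  # hypothetical
            activities=["Refer participants to treatment"],  # hypothetical
            process_measures=["number of treatment contacts", "type of treatment received"],
            outcome_measures=["share of participants completing treatment"],  # hypothetical
        )
    ],
    external_factors=["revisions to state/federal laws"],
)
print(model.outline())
```

Nesting activities and measures under each objective keeps the links between elements explicit, which is the property the schematic is meant to show.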

If-Then Logic Model

Another way to develop a logic model is by using an "if-then" sequence that indicates how each component relates to each other. Conceptually, the if-then logic model works like this: IF [program activity] THEN [program objective] and IF [program objective] THEN [program goal].

In practice, the if-then logic model looks like this: IF a truancy reduction program is offered to youth who have been truant from school THEN their school attendance will increase and IF their school attendance increases THEN their graduation rates will increase.

Another way to conceptualize the "if-then" format:

- If the required resources are invested, then those resources can be used to conduct the program activities.

- If the activities are completed, then the desired outputs for the target population will be produced.

- If the outputs are produced, then the outcomes will indicate that the objectives of the program have been accomplished.

Developing program logic using an "if-then" sequence can help a program manager or evaluator maintain focus and direction for the project and help specify what will be measured through the evaluation.
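The if-then sequence is simply a chain in which each statement becomes the condition for the next. As a rough illustration, the helper below (a hypothetical function, not part of any published tool) links neighbouring statements into IF ... THEN sentences, using a present-tense paraphrase of the truancy example.

```python
from typing import List


def if_then_chain(steps: List[str]) -> List[str]:
    """Link each statement to the one that follows it as an IF ... THEN sentence."""
    return [f"IF {cause} THEN {effect}" for cause, effect in zip(steps, steps[1:])]


# Conceptual form: activity -> objective -> goal.
print(if_then_chain(["[program activity]", "[program objective]", "[program goal]"]))

# Paraphrase of the truancy example, reworded so each step reads well in both positions.
truancy_steps = [
    "a truancy reduction program is offered to truant youth",
    "school attendance increases",
    "graduation rates increase",
]
for sentence in if_then_chain(truancy_steps):
    print(sentence)
```

A chain with three statements yields two sentences. If any step cannot be phrased as the consequence of the one before it, that is a sign the program logic has a gap.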

Common Problems When Developing Logic Models

Links among elements (e.g., objectives, activities, outcome measures) of the logic model are unclear or missing. It should be obvious which objective is tied to which activity, process measure, etc. Oftentimes logic models contain lists of each of the elements of a logic model without specifying which item on one list is related to which item on another list. This can easily lead to confusion regarding the relationship among elements or result in accidental omission of an item on a list of elements.
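Missing links of this kind can be spotted mechanically once each activity records the objective it supports. The sketch below is one possible check, with hypothetical names and data: it reports activities that point to no known objective and objectives that no activity supports.

```python
from typing import Dict, List, Optional, Set


def find_link_gaps(
    objectives: Set[str], activity_links: Dict[str, Optional[str]]
) -> Dict[str, List[str]]:
    """Report activities without a valid objective and objectives without any activity."""
    orphan_activities = sorted(
        activity for activity, objective in activity_links.items()
        if objective not in objectives
    )
    covered = set(activity_links.values())
    unsupported = sorted(o for o in objectives if o not in covered)
    return {
        "activities_without_objective": orphan_activities,
        "objectives_without_activity": unsupported,
    }


# Hypothetical lists of the kind that often appear side by side with no links drawn.
objectives = {"Improve response time for incoming phone calls", "Increase caller satisfaction"}
activity_links = {
    "Hire 10 staff members": "Improve response time for incoming phone calls",
    "Print brochures": None,  # no objective recorded for this activity
}
print(find_link_gaps(objectives, activity_links))
```

Here "Print brochures" is flagged as tied to no objective, and "Increase caller satisfaction" is flagged as having no supporting activity.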

Too much (or too little) information is provided on the logic model. The logic model should include only the primary elements related to program/project design and operation. As a general rule, it should provide the "big picture" of the program/project and avoid providing very specific details related to how, for example, interventions will occur, or a list of all the agencies that will serve to improve collaboration efforts. If you feel that a model with all those details is necessary, consider developing two models: one with the fundamental elements and one with the details.

Objectives are confused with activities. Make sure that items listed as objectives are in fact objectives rather than activities. Anything related to program implementation or a task that is being carried out in order to accomplish something is an activity rather than an objective. For example, 'hire 10 staff members' is an activity that is being carried out in order to accomplish an objective such as 'improve response time for incoming phone calls.'

Objectives are not measurable. Unlike goals, which are not considered measurable because they are broad, mission-like statements, objectives should be measurable and directly related to the accomplishment of the goal. An objective is measurable when it specifically identifies the target (who or what will be affected), is time-oriented (when it will be accomplished), and indicates direction of desired change. In many cases, measurable objectives also include the amount of change desired.
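The measurability criteria above (target, timeframe, direction, and optionally amount of change) lend themselves to a simple checklist. The following sketch assumes a hypothetical `ObjectiveSpec` record and only checks that each required part has been filled in. It cannot judge whether what was written is sensible.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ObjectiveSpec:
    """The parts of a measurable objective named in the text above."""
    target: Optional[str] = None     # who or what will be affected
    timeframe: Optional[str] = None  # when it will be accomplished
    direction: Optional[str] = None  # direction of desired change
    amount: Optional[str] = None     # amount of change (often included, not required)


def missing_parts(spec: ObjectiveSpec) -> List[str]:
    """List the required parts that are still empty."""
    required = ("target", "timeframe", "direction")
    return [name for name in required if not getattr(spec, name)]


# Hypothetical draft objective that has no timeframe yet.
draft = ObjectiveSpec(target="incoming phone calls", direction="decrease response time")
print(missing_parts(draft))
```

An empty result means the objective names all three required parts. The `amount` field is left optional because the text says only that it is included in many cases.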

Other Logic Model Examples

- Phoenix Gang Logic Model

- OJJDP Generic Logic Model

- Project Safe Neighborhoods Example

Logic Model: A Comprehensive Guide to Program Planning, Implementation, and Evaluation

Learn how to use a logic model to guide your program planning, implementation, and evaluation. This comprehensive guide covers everything you need to know to get started.

Table of Contents

- What are Logic Models and Why are They Important in Evaluation?

- The Key Components of a Logic Model: Inputs, Activities, Outputs, Outcomes, and Impacts

- Creating a Logic Model: Step-by-Step Guide and Best Practices

- Using Logic Models to Guide Evaluation Planning, Implementation, and Reporting

- Common Challenges and Solutions in Developing and Using Logic Models in Evaluation

- Enhancing the Usefulness and Credibility of Logic Models: Tips for Effective Communication and Stakeholder Engagement

- Advanced Topics in Logic Modeling: Theory of Change, Program Theory, and Impact Pathways

- Resources and Tools for Developing and Using Logic Models in Evaluation

1. What are Logic Models and Why are They Important in Evaluation?

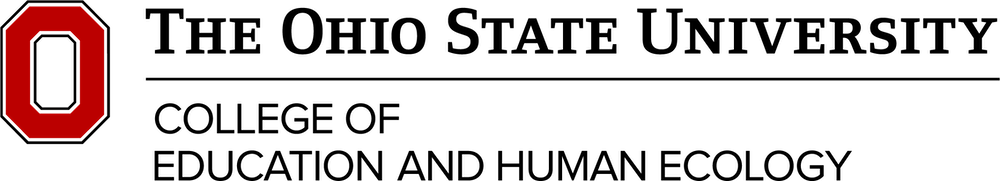

Logic models are visual representations or diagrams that illustrate how a program or intervention is intended to work. They map out the relationships between program inputs, activities, outputs, and outcomes, and can be used to communicate program goals and objectives, as well as guide program design, implementation, and evaluation.

Logic models are important in evaluation because they provide a clear and systematic way to identify and measure program inputs, activities, outputs, and outcomes. By mapping out the underlying assumptions and theories of change that drive a program, logic models help evaluators identify potential gaps, inconsistencies, and areas of improvement in program design and implementation. They also help evaluators develop evaluation plans and strategies, identify appropriate indicators and measures, and track progress toward program goals and objectives.

Logic models provide a structured and systematic approach to program evaluation that helps ensure that programs are designed, implemented, and evaluated in a rigorous and effective manner.

2. The Key Components of a Logic Model: Inputs, Activities, Outputs, Outcomes, and Impacts

The key components of a logic model are typically organized into five main categories: inputs, activities, outputs, outcomes, and impacts. Here is a brief description of each component:

- Inputs: These are the resources, both human and material, that are invested in the program. Inputs can include things like funding, staff time, equipment, and materials.

- Activities: These are the specific actions or interventions that the program undertakes in order to achieve its objectives. Activities can include things like training, outreach, or counseling.

- Outputs: These are the immediate products or services that result from the program’s activities. Outputs can include things like the number of people trained, the number of workshops conducted, or the number of brochures distributed.

- Outcomes: These are the changes that occur as a result of the program’s outputs. Outcomes can be short-term, intermediate, or long-term and can include changes in knowledge, behavior, or attitudes.

- Impacts: These are the broader changes that occur as a result of the program’s outcomes. Impacts can include changes in social, economic, or environmental conditions and are often difficult to measure.

By clearly identifying and mapping out each of these components, a logic model provides a clear and systematic way to understand how a program is designed to work, what resources are needed to implement it, and what outcomes and impacts it is expected to achieve.

3. Creating a Logic Model: Step-by-Step Guide and Best Practices

Creating a logic model is an iterative process that involves collaboration among stakeholders to develop a shared understanding of the program’s goals, objectives, and expected outcomes. Here is a step-by-step guide to creating a logic model, along with some best practices:

Step 1: Identify the Program Goal

The first step in creating a logic model is to identify the program’s overall goal. This should be a broad statement that reflects the program’s purpose and the desired change it seeks to achieve.

- Best Practice: The goal should be specific, measurable, achievable, relevant, and time-bound (SMART).

Step 2: Identify the Program Inputs

The next step is to identify the resources required to implement the program. Inputs can include staff, volunteers, funding, equipment, and other resources necessary to implement the program.

- Best Practice: Inputs should be clearly defined and quantified to help with budgeting and resource allocation.

Step 3: Identify the Program Activities

Once the inputs have been identified, the next step is to identify the specific activities that will be undertaken to achieve the program’s goal. These activities should be based on evidence-based best practices and should be feasible given the available resources.

- Best Practice: Activities should be designed to address the root causes of the problem the program is addressing.

Step 4: Identify the Program Outputs

Outputs are the immediate products or services that result from the program’s activities. These should be measurable and directly linked to the program’s activities.

- Best Practice: Outputs should be defined in terms of quantity, quality, and timeliness to ensure that they are meaningful and relevant.

Step 5: Identify the Program Outcomes

Outcomes are the changes that occur as a result of the program’s outputs. These should be specific, measurable, and relevant to the program’s goal and should reflect changes in knowledge, skills, behaviors, or attitudes.

- Best Practice: Outcomes should be defined in terms of short-term, intermediate, and long-term changes to provide a comprehensive picture of program impact.

Step 6: Identify the Program Impacts

Impacts are the broader changes that occur as a result of the program’s outcomes. These may be difficult to measure and may require longer-term evaluation efforts.

- Best Practice: Impacts should be defined in terms of their relevance and importance to stakeholders and should be used to guide ongoing program improvement efforts.

Step 7: Create the Logic Model Diagram

Once all of the components have been identified and defined, it is time to create the logic model diagram. This should be a visual representation of the program’s inputs, activities, outputs, outcomes, and impacts that illustrates how they are linked to one another.

- Best Practice: The logic model diagram should be clear and easy to understand, with each component labeled and defined.

Step 8: Use the Logic Model for Program Planning, Implementation, and Evaluation

Finally, the logic model should be used to guide program planning, implementation, and evaluation efforts. It should be shared with all stakeholders to ensure that everyone has a clear understanding of the program’s goals and objectives and how they will be achieved.

- Best Practice: The logic model should be reviewed and updated regularly to ensure that it remains relevant and useful over time.
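For Step 7, a diagram does not have to start in a drawing tool. As a minimal sketch, the hypothetical function below renders the five components as a single left-to-right text chain and refuses to draw a model that is missing a component. The entries are taken from the examples given earlier in this guide.

```python
from typing import Dict, List

STAGES = ("Inputs", "Activities", "Outputs", "Outcomes", "Impacts")


def render_diagram(model: Dict[str, List[str]]) -> str:
    """Render the five components in order as a one-line text diagram."""
    missing = [stage for stage in STAGES if not model.get(stage)]
    if missing:
        raise ValueError("Logic model is missing: " + ", ".join(missing))
    boxes = [f"[{stage}: {'; '.join(model[stage])}]" for stage in STAGES]
    return " -> ".join(boxes)


example = {
    "Inputs": ["funding", "staff time"],
    "Activities": ["training"],
    "Outputs": ["number of people trained"],
    "Outcomes": ["changes in knowledge"],
    "Impacts": ["changes in social conditions"],
}
print(render_diagram(example))
```

Keeping the stage order in one place (`STAGES`) means every diagram is labeled and ordered the same way, which supports the best practice of a clear, consistently labeled diagram.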

4. Using Logic Models to Guide Evaluation Planning, Implementation, and Reporting

A logic model is a visual representation of the relationships among the inputs, activities, outputs, and outcomes of a program or intervention. It can be used to guide evaluation planning, implementation, and reporting by providing a framework for understanding the logic behind the program and how it is expected to produce results.

Here are some ways in which logic models can be used to guide evaluation:

- Planning: Logic models can be used during the planning phase to identify the program’s goals and objectives, the activities needed to achieve those goals, and the resources required. They can also help identify potential barriers and facilitators to implementation.

- Implementation: Logic models can help ensure that program activities are being implemented as intended. By tracking inputs and outputs, staff can determine whether the program is being implemented as planned and whether it is on track to achieve its goals.

- Evaluation: Logic models can guide the evaluation process by helping to identify the program’s intended outcomes and how they will be measured. They can also help identify potential confounding variables that may influence the outcomes.

- Reporting: Logic models can be used to report on the program’s progress and impact. By comparing the program’s outputs and outcomes to the original logic model, evaluators can determine whether the program achieved its goals.

Logic models provide a useful tool for program planning, implementation, and evaluation. By using logic models to guide these processes, it is possible to ensure that programs are being implemented effectively and efficiently and that they are producing the desired outcomes.
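Tracking outputs against the logic model, as described under Implementation and Reporting above, can be as simple as dividing what was delivered by what was planned. The sketch below uses hypothetical targets and counts. It illustrates the comparison and is not a prescribed monitoring method.

```python
from typing import Dict


def output_progress(planned: Dict[str, int], actual: Dict[str, int]) -> Dict[str, float]:
    """Share of each planned output target reached so far (1.0 means the target is met)."""
    return {
        name: actual.get(name, 0) / target
        for name, target in planned.items()
        if target > 0  # skip outputs with no numeric target
    }


# Hypothetical targets from the logic model and counts from program records.
planned = {"people trained": 200, "workshops conducted": 10}
actual = {"people trained": 150, "workshops conducted": 10}
print(output_progress(planned, actual))
```

With these figures, training stands at 0.75 of its target and workshops at 1.0. Outputs that appear in the plan but not in the records are reported as 0.0 rather than silently dropped.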

5. Common Challenges and Solutions in Developing and Using Logic Models in Evaluation

Developing and using logic models in evaluation can be a challenging process. Here are some common challenges and solutions to consider:

- Challenge: Lack of stakeholder buy-in. If stakeholders are not involved in the development of the logic model or do not understand its purpose, they may not support its use in the evaluation process. Solution: Involve stakeholders in the development process and explain the purpose and benefits of using a logic model for evaluation.

- Challenge: Overcomplicated or unrealistic models. Logic models that are too complex or unrealistic can be difficult to implement and evaluate effectively. Solution: Keep the logic model simple and focused on the most important program components. Ensure that it is based on realistic assumptions and achievable outcomes.

- Challenge: Insufficient data. Lack of data can make it difficult to develop a logic model or to evaluate program outcomes. Solution: Collect baseline data before program implementation and ongoing data during implementation. Use multiple sources of data to validate the model and outcomes.

- Challenge: Difficulty in identifying outcomes. Outcomes can be challenging to identify and measure, especially in complex programs. Solution: Involve stakeholders in identifying outcomes and ensure that they are realistic, measurable, and aligned with the program goals.

- Challenge: Lack of flexibility. Logic models may need to be revised or updated as the program progresses or in response to changes in the environment. Solution: Build in flexibility to the logic model and be willing to modify it as needed to reflect changes in the program or environment.

- Challenge: Misuse of the logic model. If the logic model is not used consistently throughout the evaluation process, it may not be effective in guiding the evaluation or communicating results. Solution: Ensure that all stakeholders understand the purpose of the logic model and how it will be used throughout the evaluation process. Train staff on its use and encourage consistent use across the organization.

By addressing these common challenges, organizations can develop and use logic models effectively in program evaluation, leading to better-informed decision-making and improved program outcomes.

6. Enhancing the Usefulness and Credibility of Logic Models: Tips for Effective Communication and Stakeholder Engagement

To enhance the usefulness and credibility of logic models, effective communication and stakeholder engagement are essential. Here are some tips to help organizations communicate their logic models effectively and engage stakeholders:

- Use plain language: Avoid using technical jargon or acronyms that stakeholders may not understand. Use plain language to explain the logic model and its purpose.

- Provide context: Provide stakeholders with context about the program, its goals, and its intended outcomes. This will help stakeholders better understand the logic model and its relevance to the program.

- Use visuals: Visual aids such as diagrams, flowcharts, and infographics can help stakeholders better understand the logic model and how it relates to the program.

- Solicit feedback: Solicit feedback from stakeholders on the logic model, including its assumptions, activities, outputs, and outcomes. This will help ensure that the logic model is accurate and reflects stakeholders’ perspectives.

- Involve stakeholders: Involve stakeholders in the development and implementation of the logic model. This will help ensure that the logic model is relevant and useful to stakeholders and will increase stakeholder buy-in.

- Communicate results: Communicate the results of the evaluation using the logic model. This will help stakeholders understand how the program has progressed and how it has achieved its intended outcomes.

- Provide training: Provide training on the use of the logic model to stakeholders. This will help ensure that all stakeholders understand how to use the logic model and can communicate its importance to others.

By following these tips, organizations can effectively communicate their logic models and engage stakeholders in the evaluation process, leading to better-informed decision making and improved program outcomes.

7. Advanced Topics in Logic Modeling: Theory of Change, Program Theory, and Impact Pathways

Logic models are a useful tool for program evaluation, but there are some advanced topics that can enhance their effectiveness. Here are some advanced topics in logic modeling to consider:

- Theory of Change: A theory of change is a framework that outlines how a program will create change or achieve its intended outcomes. It provides a roadmap for how activities and outputs will lead to outcomes and impact. A theory of change can help identify assumptions and gaps in the logic model, and can be used to guide program planning and evaluation.

- Program Theory: Program theory is a conceptual framework that explains how a program is intended to work. It provides a detailed explanation of the underlying assumptions, logic, and mechanisms of the program. Program theory can be used to guide the development of a logic model and to help stakeholders better understand the program.

- Impact Pathways: Impact pathways are a visual representation of how a program’s activities and outputs lead to outcomes and impact. They can be used to help stakeholders understand the sequence of events that lead to impact and to identify the key points in the program where outcomes and impact can be measured.

These advanced topics can help organizations develop more effective logic models and better understand their programs. By incorporating a theory of change, program theory, and impact pathways into the logic model, organizations can identify the underlying assumptions, mechanisms, and causal pathways of their programs. This can help guide program planning, implementation, and evaluation, leading to better-informed decision making and improved program outcomes.

8. Resources and Tools for Developing and Using Logic Models in Evaluation

Developing and using logic models in evaluation can be a complex process. Fortunately, there are many resources and tools available to help organizations create effective logic models. Here are some resources and tools to consider:

- The Kellogg Foundation Logic Model Development Guide: This guide provides a comprehensive overview of logic models, including their purpose, components, and development process. It also includes case studies and examples.

- The W.K. Kellogg Foundation Evaluation Handbook: This handbook provides guidance on all aspects of program evaluation, including logic model development. It includes information on how to develop a logic model, how to use it in evaluation, and how to communicate the results.

- The CDC Framework for Program Evaluation: This framework provides a step-by-step process for conducting program evaluation, including developing a logic model. It also includes guidance on selecting evaluation methods and analyzing data.

- The University of Wisconsin-Extension Logic Model Resources: This website provides a variety of resources for developing and using logic models, including templates, examples, and guides.

- The Aspen Institute Program Planning and Evaluation Toolkit: This toolkit provides guidance on program planning and evaluation, including logic model development. It includes templates and worksheets to help organizations develop and use logic models.

- The Evaluation Toolbox: This online resource provides guidance on all aspects of program evaluation, including logic model development. It includes examples, templates, and guides.

By using these resources and tools, organizations can develop effective logic models for program evaluation. These tools can help organizations identify the key components of their program, define their intended outcomes, and develop a roadmap for program planning, implementation, and evaluation.


The Implementation Research Logic Model: a method for planning, executing, reporting, and synthesizing implementation projects

Affiliations

- 1 Department of Population Health Sciences, University of Utah School of Medicine, Salt Lake City, Utah, USA.

- 2 Center for Prevention Implementation Methodology for Drug Abuse and HIV, Department of Psychiatry and Behavioral Sciences, Department of Preventive Medicine, Department of Medical Social Sciences, and Department of Pediatrics, Northwestern University Feinberg School of Medicine, Chicago, Illinois, USA.

- 3 Center for Prevention Implementation Methodology for Drug Abuse and HIV, Department of Psychiatry and Behavioral Sciences, Feinberg School of Medicine; Institute for Sexual and Gender Minority Health and Wellbeing, Northwestern University Chicago, Chicago, Illinois, USA.

- 4 Shirley Ryan AbilityLab and Center for Prevention Implementation Methodology for Drug Abuse and HIV, Department of Psychiatry and Behavioral Sciences and Department of Physical Medicine and Rehabilitation, Northwestern University Feinberg School of Medicine, Chicago, Illinois, USA.

- PMID: 32988389

- PMCID: PMC7523057

- DOI: 10.1186/s13012-020-01041-8

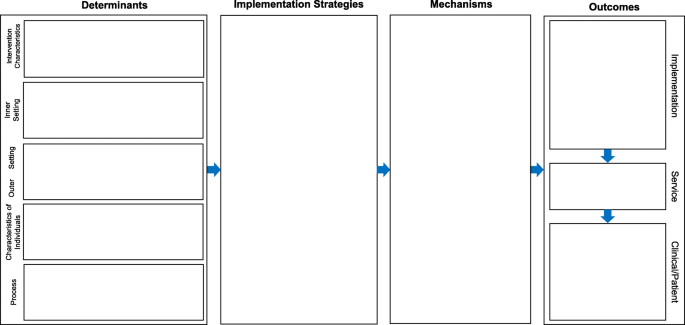

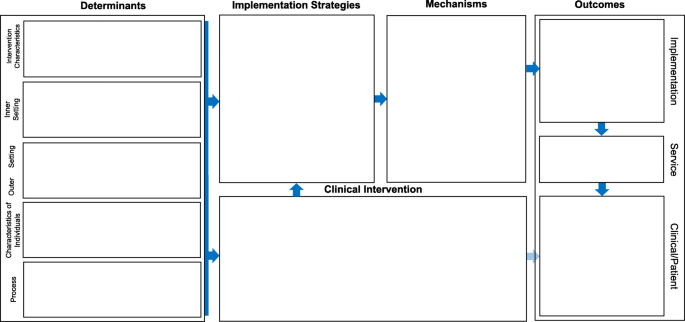

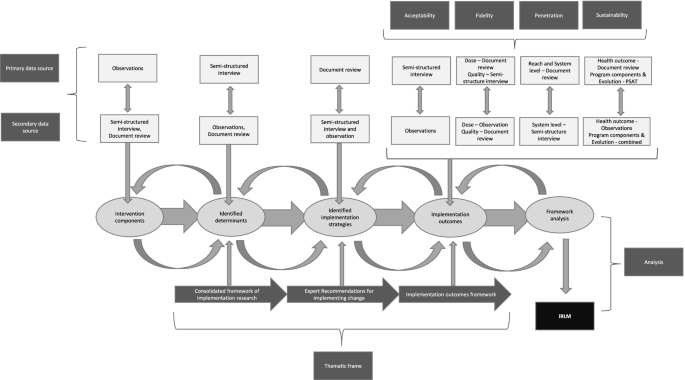

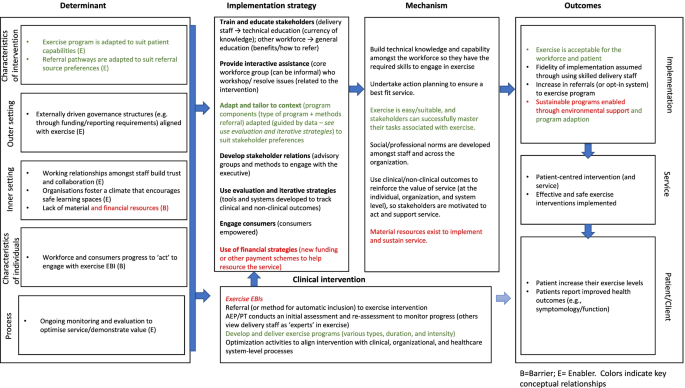

Background: Numerous models, frameworks, and theories exist for specific aspects of implementation research, including for determinants, strategies, and outcomes. However, implementation research projects often fail to provide a coherent rationale or justification for how these aspects are selected and tested in relation to one another. Despite this need to better specify the conceptual linkages between the core elements involved in projects, few tools or methods have been developed to aid in this task. The Implementation Research Logic Model (IRLM) was created for this purpose and to enhance the rigor and transparency of describing the often-complex processes of improving the adoption of evidence-based interventions in healthcare delivery systems.

Methods: The IRLM structure and guiding principles were developed through a series of preliminary activities with multiple investigators representing diverse implementation research projects in terms of contexts, research designs, and implementation strategies being evaluated. The utility of the IRLM was evaluated in the course of a 2-day training to over 130 implementation researchers and healthcare delivery system partners.

Results: Preliminary work with the IRLM produced a core structure and multiple variations for common implementation research designs and situations, as well as guiding principles and suggestions for use. Results of the survey indicated a high utility of the IRLM for multiple purposes, such as improving rigor and reproducibility of projects; serving as a "roadmap" for how the project is to be carried out; clearly reporting and specifying how the project is to be conducted; and understanding the connections between determinants, strategies, mechanisms, and outcomes for their project.

Conclusions: The IRLM is a semi-structured, principle-guided tool designed to improve the specification, rigor, reproducibility, and testable causal pathways involved in implementation research projects. The IRLM can also aid implementation researchers and implementation partners in the planning and execution of practice change initiatives. Adaptation and refinement of the IRLM are ongoing, as is the development of resources for use and applications to diverse projects, to address the challenges of this complex scientific field.

Keywords: Integration; Logic models; Program theory; Study specification.

Conflict of interest statement

None declared.

Implementation Research Logic Model (IRLM)…

Implementation Research Logic Model (IRLM) Standard Form. Notes. Domain names in the determinants…

Implementation Research Logic Model (IRLM) Standard Form with Intervention. Notes. Domain names in…

- Sales AE, Barnaby DP, Rentes VC. Letter to the editor on "The Implementation Research Logic Model: a method for planning, executing, reporting, and synthesizing implementation projects" (Smith JD, Li DH, Rafferty MR. Implement Sci. 2020;15(1):84. doi: 10.1186/s13012-020-01041-8). Implement Sci. 2021 Nov 17;16(1):97. doi: 10.1186/s13012-021-01169-1. PMID: 34789294.


Grants and funding

- R25 MH080916/MH/NIMH NIH HHS/United States

- UL1 TR001422/TR/NCATS NIH HHS/United States

- R01 MH118213/MH/NIMH NIH HHS/United States

- P30 DA027828/DA/NIDA NIH HHS/United States

- P30 AI117943/AI/NIAID NIH HHS/United States

- UM1 CA233035/CA/NCI NIH HHS/United States

- F32 HS025077/HS/AHRQ HHS/United States

- U18 DP006255/DP/NCCDPHP CDC HHS/United States

- R56 HL148192/HL/NHLBI NIH HHS/United States



Section 1. Developing a Logic Model or Theory of Change


Learn how to create and use a logic model, a visual representation of your initiative's activities, outputs, and expected outcomes.

What is a logic model?

When can a logic model be used? How do you create a logic model? What makes a logic model effective? What are the benefits and limitations of logic modeling?

A logic model presents a picture of how your effort or initiative is supposed to work. It explains why your strategy is a good solution to the problem at hand. Effective logic models make an explicit, often visual, statement of the activities that will bring about change and the results you expect to see for the community and its people. A logic model keeps participants in the effort moving in the same direction by providing a common language and point of reference.

More than an observer's tool, logic models become part of the work itself. They energize and rally support for an initiative by declaring precisely what you're trying to accomplish and how.

In this section, the term logic model is used as a generic label for the many ways of displaying how change unfolds.

Some other names include:

- road map, conceptual map, or pathways map

- mental model

- blueprint for change

- framework for action or program framework

- program theory or program hypothesis

- theoretical underpinning or rationale

- causal chain or chain of causation

- theory of change or model of change

Each mapping or modeling technique uses a slightly different approach, but they all rest on a foundation of logic - specifically, the logic of how change happens. By whatever name you call it, a logic model supports the work of health promotion and community development by charting the course of community transformation as it evolves.

A word about logic

The word "logic" has many definitions. As a branch of philosophy, scholars devote entire careers to its practice. As a structured method of reasoning, mathematicians depend on it for proofs. In the world of machines, the only language a computer understands is the logic of its programmer.

There is, however, another meaning that lies closer to the heart of community change: the logic of how things work. Consider, for example, the logic to the motion of rush-hour traffic. No one plans it. No one controls it. Yet, through experience and awareness of recurrent patterns, we comprehend it, and, in many cases, can successfully avoid its problems (by carpooling, taking alternative routes, etc.).

Logic in this sense refers to "the relationship between elements and between an element and the whole." All of us have a great capacity to see patterns in complex phenomena. We see systems at work and find within them an inner logic, a set of rules or relationships that govern behavior. Working alone, we can usually discern the logic of a simple system. And by working in teams, persistently over time if necessary, there is hardly any system past or present whose logic we can't decipher.

On the flip side, we can also project logic into the future. With an understanding of context and knowledge about cause and effect, we can construct logical theories of change, hypotheses about how things will unfold either on their own or under the influence of planned interventions. Like all predictions, these hypotheses are only as good as their underlying logic. Magical assumptions, poor reasoning, and fuzzy thinking increase the chances that despite our efforts, the future will turn out differently than we expect or hope. On the other hand, some events that seem unexpected to the uninitiated will not be a surprise to long-time residents and careful observers.

The challenge for a logic modeler is to find and accurately represent the wisdom of those who know best how community change happens.

The logic in logic modeling

Like a road map, a logic model shows the route traveled (or steps taken) to reach a certain destination. A detailed model indicates precisely how each activity will lead to desired changes. Alternatively, a broader plan sketches out the chosen routes and how far you will go. This road map aspect of a logic model reveals what causes what, and in what order. At various points on the map, you may need to stop and review your progress and make any necessary adjustments.

A logic model also expresses the thinking behind an initiative's plan. It explains why the program ought to work, why it can succeed where other attempts have failed. This is the "program theory" or "rationale" aspect of a logic model. By defining the problem or opportunity and showing how intervention activities will respond to it, a logic model makes the program planners' assumptions explicit.

The form that a logic model takes is flexible and does not have to be linear (unless your program's logic is itself linear). Flow charts, maps, or tables are the most common formats. It is also possible to use a network, concept map, or web to describe the relationships among more complex program components. Models can even be built around cultural symbols that describe transformation, such as the Native American medicine wheel, if the stakeholders feel it is appropriate. See the "Generic Model for Disease/Injury Control and Prevention" in the Examples section for an illustration of how the same information can be presented in a linear or nonlinear format.

Whatever form you choose, a logic model ought to provide direction and clarity by presenting the big picture of change along with certain important details. Let's illustrate the typical components of a logic model, using as an example a mentoring program in a community where the high-school dropout rate is very high. We'll call this program "On Track."

- Purpose, or mission. What motivates the need for change? This can also be expressed as the problems or opportunities that the program is addressing. (For On Track, the community focused its advocates on the mission of enhancing healthy youth development to reduce the high-school dropout rate.)

- Context, or conditions. What is the climate in which change will take place? (How will new policies and programs for On Track be aligned with existing ones? What trends compete with the effort to engage youth in positive activities? What is the political and economic climate for investing in youth development?)

- Inputs, or resources or infrastructure. What raw materials will be used to conduct the effort or initiative? (In On Track, these materials are a coordinator and volunteers in the mentoring program, agreements with participating school districts, and the endorsement of parent groups and community agencies.) Inputs can also include constraints on the program, such as regulations or funding gaps, which are barriers to your objectives.

- Activities, or interventions. What will the initiative do with its resources to direct the course of change? (In our example, the program will train volunteer mentors and refer young people who might benefit from a mentor.) Your intervention, and thus your logic model, should be guided by a clear analysis of risk and protective factors.

- Outputs. What evidence is there that the activities were performed as planned? (Indicators might include the number of mentors trained and youth referred, and the frequency, type, duration, and intensity of mentoring contacts.)

- Effects, or results, consequences, outcomes, or impacts. What kinds of changes came about as a direct or indirect effect of the activities? (Two examples are bonding between adult mentors and youth and increased self-esteem among youth.)
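For teams that keep program documentation alongside code, the components listed above can be sketched as a simple data structure. This is only an illustration under stated assumptions: the class name `LogicModel`, its field names, and the `missing_components` helper are hypothetical, and the values paraphrase the On Track example.

```python
from dataclasses import dataclass, field


@dataclass
class LogicModel:
    """Hypothetical container for the six components listed above."""
    purpose: str
    context: list[str] = field(default_factory=list)
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    effects: list[str] = field(default_factory=list)

    def missing_components(self) -> list[str]:
        """Return the names of components that are still empty."""
        return [name for name, value in vars(self).items() if not value]


# Values paraphrase the On Track mentoring example; context is left blank on purpose.
on_track = LogicModel(
    purpose="Enhance healthy youth development to reduce the high-school dropout rate",
    inputs=[
        "coordinator and volunteers",
        "agreements with participating school districts",
        "endorsement of parent groups and community agencies",
    ],
    activities=["train volunteer mentors", "refer young people to mentors"],
    outputs=["number of mentors trained", "number of youth referred"],
    effects=["bonding between mentors and youth", "increased self-esteem among youth"],
)

print(on_track.missing_components())  # lists the components not yet filled in
```

A check like `missing_components` is one way to notice, during drafting, that a component such as context has not yet been discussed with stakeholders.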

Putting these elements together graphically gives the following basic structure for a logic model. The arrows between the boxes indicate that review and adjustment are an ongoing process - both in enacting the initiative and developing the model.

Using this generic model as a template, let's fill in the details with another example of a logic model, one that describes a community health effort to prevent tuberculosis.

Remember, although this example uses boxes and arrows, you and your partners in change can use any format or imagery that communicates more effectively with your stakeholders. As mentioned earlier, the generic model for Disease/Injury Control and Prevention in Examples depicts the same relationship of activities and effects in a linear and a nonlinear format. The two formats helped communicate with different groups of stakeholders and made different points. The linear model better guided discussions of cause and effect and how far down the chain of effects a particular program was successful. The circular model more effectively depicted the interdependence of the components to produce the intended effects.

When exploring the results of an intervention, remember that there can be long delays between actions and their effects. Also, certain system changes can trigger feedback loops, which further complicate and delay our ability to see all the effects. (A definition from the System Dynamics Society might help here: "Feedback refers to the situation of X affecting Y and Y in turn affecting X perhaps through a chain of causes and effects. One cannot study the link between X and Y and, independently, the link between Y and X and predict how the system will behave. Only the study of the whole system as a feedback system will lead to correct results.")
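If the links in a logic model are written down as a cause-and-effect graph, feedback loops of the kind described above can be located mechanically. The sketch below uses a plain depth-first search; the function name `find_feedback_loop` and the example links are assumptions made for illustration and do not come from the On Track or TB models.

```python
from typing import Optional


def find_feedback_loop(links: dict[str, list[str]]) -> Optional[list[str]]:
    """Return one loop (as a path of nodes) in a cause-and-effect graph, or None."""
    path: list[str] = []    # nodes on the chain currently being followed
    cleared: set[str] = set()  # nodes already explored without finding a loop

    def visit(node: str) -> Optional[list[str]]:
        if node in path:
            # The chain has returned to a node it already passed through.
            return path[path.index(node):] + [node]
        if node in cleared:
            return None
        path.append(node)
        for effect in links.get(node, []):
            loop = visit(effect)
            if loop:
                return loop
        path.pop()
        cleared.add(node)
        return None

    for start in links:
        loop = visit(start)
        if loop:
            return loop
    return None


# Hypothetical links: attendance and self-confidence reinforce each other.
links = {
    "mentoring": ["self-confidence"],
    "self-confidence": ["school attendance"],
    "school attendance": ["self-confidence", "graduation"],
}
print(find_feedback_loop(links))
```

Finding a loop does not say how the system will behave; it only flags the places where, as the definition notes, the links cannot be studied independently.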

For these reasons, logic models indicate when to expect certain changes. Many planners like to use the following three categories of effects (illustrated in the models above), although you may choose to have more or fewer depending on your situation.

- Short-term or immediate effects. (In the On Track example, this would be that young people who participate in mentoring improve their self-confidence and understand the importance of staying in school.)

- Mid-term or intermediate effects. (Mentored students improve their grades and remain in school.)

- Longer-term or ultimate effects. (High school graduation rates rise, thus giving graduates more employment opportunities, greater financial stability, and improved health status.)

Here are two important notes about constructing and refining logic models.

Outcome or Impact? Clarify your language. In a collaborative project, it is wise to anticipate confusion over language. If you understand the basic elements of a logic model, any labels can be meaningful provided stakeholders agree to them. In the generic and TB models above, we called the effects short-, mid-, and long-term. It is also common to hear people talk about effects that are "upstream" or "proximal" (near to the activities) versus "downstream" or "distal" (distant from the activities). Because disciplines have their own jargon, stakeholders from two different fields might define the same word in different ways. Some people are trained to call the earliest effects "outcomes" and the later ones "impacts." Other people are taught the reverse: "impacts" come first, followed by "outcomes." The idea of sequence is the same regardless of which terms you and your partners use. The main point is to clearly show connections between activities and effects over time, thus making explicit your initiative's assumptions about what kinds of change to expect and when. Try to define essential concepts at the design stage and then be consistent in your use of terms. The process of developing a logic model supports this important dialogue and will bring potential misunderstandings into the open.

For good or for ill? Understand effects. While the starting point for logic modeling is to identify the effects that correspond to stated goals, your intended effects are not the only effects to watch for. Any intervention capable of changing problem behaviors or altering conditions in communities can also generate unintended effects. These are changes that no one plans and that might somehow make the problem worse. Many times our efforts to solve a problem lead to surprising, counterintuitive results. There is always a risk that our "cure" could be worse than the "disease" if we're not careful.

Part of the added value of logic modeling is that the process creates a forum for scrutinizing big leaps of faith, a way to search for unintended effects. (See the discussion of simulation in "What makes a logic model effective" for some thoughts on how to do this in a disciplined manner.) One of the greatest rewards for the extra effort is the ability to spot potential problems and redesign an initiative (and its logic model) before the unintended effects get out of hand, so that the model truly depicts activities that will plausibly produce the intended effects.

Choosing the right level of detail: the importance of utility and simplicity

It may help at this point to consider what a logic model is not. Although it captures the big picture, it is not an exact representation of everything that's going on. All models simplify reality; if they didn't, they wouldn't be of much use.

Even though it leaves out information, a good model represents those aspects of an initiative that, in the view of your stakeholders, are most important for understanding how the effort works. In most cases, the developers will go through several drafts before arriving at a version that the stakeholders agree accurately reflects their story.

Should the information become overly complex, it is possible to create a family of related models, or nested models, each capturing a different level of detail. One model could sketch out the broad pathways of change, whereas others could elaborate on separate components, revealing detailed information about how the program operates on a deeper level. Individually, each model conveys only essential information, and together they provide a more complete overview of how the program or initiative functions. (See "How do you create a logic model?" for further details.)

Imagine "zooming-in" on the inner workings of a specific component and creating another, more detailed model just for that part. For a complex initiative, you may choose to develop an entire family of such related models that display how each part of the effort works, as well as how all the parts fit together. In the end, you may have some or all of the following family of models, each one differing in scope:

- View from Outer Space. This overall road map shows the major pathways of change and the full spectrum of effects. This view answers questions such as: Do the activities follow a single pathway, or are there separate pathways that converge down the line? How far does the chain of effects go? How do our program activities align with those of other organizations? What other forces might influence the effects that we hope to see? Where can we anticipate feedback loops and in what direction will they travel? Are there significant time delays between any of the connections?

- View from the Mountaintop. This closer view focuses on a specific component or set of components, yet it is still broad enough to describe the infrastructure, activities, and full sequence of effects. This view answers the same questions as the view from outer space, but with respect to just the selected component(s).

- You Are Here. This view expands on a particular part of the sequence, such as the roles of different stakeholders, staff, or agencies in a coalition, and functions like a flow chart for someone's work plan. It is a specific model that outlines routine processes and anticipated effects. This is the view that you might need to understand quality control within the initiative.

Families, Nesting, and Zooming-In

In the Examples section, the idea of nested models is illustrated in the Tobacco Control family of models. It includes a global model that encompasses three intermediate outcomes in tobacco control - environments without tobacco smoke, reduced smoking initiation among youth, and increased cessation among youth and adults. Then a zoom-in model is elaborated for each one of these intermediate outcomes. The Comprehensive Cancer model illustrates a generic logic model accompanied by a zoom-in on the activities to give program staff the specific details they need. Notably, the intended effects on the zoom-in are identical to those on the global model and all major categories of activities are also apparent. But the zoom-in unpacks these activities into their detailed components and, more important, indicates that the activities achieve their effects by influencing intermediaries who then move gatekeepers to take action. This level of detail is necessary for program staff, but it may be too much for discussions with funders and stakeholders.

The Diabetes Control model is another good example of a family of models. In this case, the zoom-in models are very similar to the global model in level of detail. They add value by translating the global model into a plan for specific actors (in this case a state diabetes control program) or for specific objectives (e.g., increasing timely foot exams).
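One way to picture a family of nested models is as a small tree, with the global model at the root and each zoom-in model as a child. The sketch below uses the three intermediate outcomes named for the Tobacco Control family; the class name `ModelView` and the `outline` helper are hypothetical, and the actual Tobacco Control models contain far more detail than a name.

```python
from dataclasses import dataclass, field


@dataclass
class ModelView:
    """Hypothetical node in a family of nested logic models."""
    name: str
    zoom_ins: list["ModelView"] = field(default_factory=list)


def outline(view: ModelView, depth: int = 0) -> list[str]:
    """List a model and its zoom-ins, indenting each level of detail."""
    lines = ["  " * depth + view.name]
    for child in view.zoom_ins:
        lines.extend(outline(child, depth + 1))
    return lines


tobacco = ModelView(
    "Tobacco control (global model)",
    zoom_ins=[
        ModelView("Environments without tobacco smoke"),
        ModelView("Reduced smoking initiation among youth"),
        ModelView("Increased cessation among youth and adults"),
    ],
)

print("\n".join(outline(tobacco)))
```

Because each zoom-in is itself a `ModelView`, a component can be unpacked further without changing the global view shared with funders and stakeholders.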

When can a logic model be used?

Logic models are useful for both new and existing programs and initiatives. If your effort is being planned, a logic model can help get it off to a good start. Alternatively, if your program is already under way, a model can help you describe, modify or enhance it.

Planners, program managers, trainers, evaluators, advocates and other stakeholders can use a logic model in several ways throughout an initiative. One model may serve more than one purpose, or it may be necessary to create different versions tailored for different aims. Here are examples of the various times that a logic model could be used.

During planning to:

- clarify program strategy

- identify appropriate outcome targets (and avoid over-promising)

- align your efforts with those of other organizations

- write a grant proposal or a request for proposals

- assess the potential effectiveness of an approach

- set priorities for allocating resources

- estimate timelines

- identify necessary partnerships

- negotiate roles and responsibilities

- focus discussions and make planning time more efficient

During implementation to:

- provide an inventory of what you have and what you need to operate the program or initiative

- develop a management plan

- incorporate findings from research and demonstration projects

- make mid-course adjustments

- reduce or avoid unintended effects

During staff and stakeholder orientation to:

- explain how the overall program works

- show how different people can work together

- define what each person is expected to do

- indicate how one would know if the program is working

During evaluation to:

- document accomplishments

- organize evidence about the program

- identify differences between the ideal program and its real operation

- determine which concepts will (and will not) be measured

- frame questions about attribution (of cause and effect) and contribution (of initiative components to the outcomes)

- specify the nature of questions being asked

- prepare reports and other media

- tell the story of the program or initiative

During advocacy to:

- justify why the program will work

- explain how resource investments will be used

How do you create a logic model?

There is no single way to create a logic model. Think of it as something to be used, its form and content governed by the users' needs.

Who creates the model? This depends on your situation. The same people who will use the model - planners, program managers, trainers, evaluators, advocates and other stakeholders - can help create it. For practical reasons, though, you will probably start with a core group, and then take the working draft to others for continued refinement.

Remember that your logic model is a living document, one that tells the story of your efforts in the community. As your strategy changes, so should the model. On the other hand, while developing the model you might see new pathways that are worth exploring in real life.

Two main development strategies are usually combined when constructing a logic model.

- Moving forward from the activities (also known as forward logic). This approach explores the rationale for activities that are proposed or currently under way. It is driven by "But why?" questions or if-then thinking: But why should we focus on briefing Senate staffers? But why do we need them to better understand the issues affecting kids? But why would they create policies and programs to support mentoring? But why would new policies make a difference?... and so on. That same line of reasoning could also be uncovered using if-then statements: If we focus on briefing legislators, then they will better understand the issues affecting kids. If legislators understand, then they will enact new policies...

- Moving backward from the effects (also known as reverse logic). This approach begins with the end in mind. It starts with a clearly identified value, a change that you and your colleagues would definitely like to see occur, and asks a series of "But how?" questions: But how do we overcome fear and stigma? But how can we ensure our services are culturally competent? But how can we admit that we don't already know what we're doing?
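The two strategies above read the same chain of steps in opposite directions, which a short sketch can make concrete. The function names and the exact wording of the generated sentences are assumptions made for illustration; the chain itself paraphrases the legislator briefing example.

```python
def if_then_statements(chain: list[str]) -> list[str]:
    """Forward logic: pair each step with the step it is expected to produce."""
    return [f"If {cause}, then {effect}." for cause, effect in zip(chain, chain[1:])]


def but_how_questions(chain: list[str]) -> list[str]:
    """Reverse logic: start from the final effect and ask how each step comes about."""
    return [f"But how will it come about that {step}?" for step in reversed(chain[1:])]


# Steps paraphrased from the briefing example, ordered from activity to effect.
chain = [
    "legislators are briefed",
    "legislators better understand the issues affecting kids",
    "legislators enact new policies",
]

for statement in if_then_statements(chain):
    print(statement)
for question in but_how_questions(chain):
    print(question)
```

Writing the chain once and reading it both ways is a quick check that the forward rationale and the backward plan describe the same pathway.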

At first, you may not agree with the answers that certain stakeholders give for these questions. Their logic may not seem convincing or even logical. But therein lies the power of logic modeling. By making each stakeholder's thinking visible on paper, you can decide as a group whether the logic driving your initiative seems reasonable. You can talk about it, clarify misinterpretations, ask for other opinions, check the assumptions, compare them with research findings, and in the end develop a solid system of program logic. This product then becomes a powerful tool for planning, implementation, orientation, evaluation, and advocacy, as described above.

By now you have probably guessed that there is not a rigid step-by-step process for developing a logic model. Like the rest of community work, logic modeling is an ongoing process. Nevertheless, there are a few tasks you should be sure to accomplish.

To illustrate these in action, we'll use another example for an initiative called "HOME: Home Ownership Mobilization Effort." HOME aims to increase home ownership in order to give neighborhood control to the people who live there, rather than to outside landlords with no stake in the community. It does this through a combination of educating community residents, organizing the neighborhood, and building relationships with partners such as businesses.

Steps for drafting a logic model

- Available written materials often contain more than enough information to get started. Collect narrative descriptions, justifications, grant applications, or overview documents that explain the basic idea behind the intervention effort. If your venture involves a coalition of several organizations, be sure to get descriptions from each agency's point of view. For the HOME campaign, we collected documents from planners who proposed the idea, as well as mortgage companies, homeowner associations, and other neighborhood organizations.

- Your job as a logic modeler is to decode these documents. Keep a piece of paper by your side and sketch out the logical links as you find them. (This work can be done in a group to save time and engage more people if you prefer.)

- Read each document with an eye for the logical structure of the program. Sometimes that logic will be clearly spelled out (e.g., The information, counseling, and support services we provide to community residents will help them improve their credit rating, qualify for home loans, and purchase homes in the community; over time, this program will change the proportion of owner-occupied housing in the neighborhood).

- Other times the logic will be buried in vague language, with big leaps from actions to downstream effects (e.g., Ours is a comprehensive community-based program that will transform neighborhoods, making them controlled by people who live there and not outsiders with no stake in the community).

- As you read each document, ask yourself the "But why?" and "But how?" questions. See if the writing provides an answer. Pay close attention to parts of speech. Verbs such as teach, inform, support, or refer are often connected to descriptions of program activities. Adjectives like reduced, improved, higher, or better are often used when describing expected effects.

- The HOME initiative, for instance, created different models to address the unique needs of their financial partners, program managers, and community educators. Mortgage companies, grant makers, and other decision makers who determined whether to allocate resources for the effort found the global "view from space" most helpful for setting context. Program managers wanted the closer, yet still broad, "view from the mountaintop." And community educators benefited most from the "you are here" version. The important thing to remember is that these are not three different programs, but different ways of understanding how the same program works.

- Logic models convey the story of community change. Working with the stakeholders, it's your responsibility to ensure that the story you've told in your draft makes sense (i.e., is logical) and is complete (has no loose ends). As you iteratively refine the model, ask yourself and others if it captures the full story.

- Short-term - Potential home owners attain greater understanding of how credit ratings are calculated and more accurate information about the steps to improve a credit rating; mortgage companies create new policies and procedures allowing renters to buy their own homes; local businesses start incentive programs; and anti-discrimination lawsuits are filed against illegal lending practices.

- Mid-term - The community's average credit rating improves; applications rise for home loans along with the approval rate; support services are established for first-time home buyers; neighborhood organizing gets stronger, and alliances expand to include businesses, health agencies, and elected officials.

- Longer-term - The proportion of owner-occupied housing rises; economic revitalization takes off as businesses invest in the community; residents work together to create walking trails, crime patrols, and fire safety screenings; rates of obesity, crime, and injury fall dramatically.

- An advantage of the graphic model is that it can display both the sequence and the interactions of effects. For example, in the HOME model, credit counseling leads to better understanding of credit ratings, while loan assistance leads to more loan submissions, but the two together (plus other activities such as more new buyer programs) are needed for increased home ownership.

- Drama (activities, interventions). How will obstacles be overcome? Who is doing what? What kinds of conflict and cooperation are evident? What's being done to re-arrange the forces of change? What new services or conditions are being introduced? Your activities, based on a clear analysis of risk and protective factors, are the answers to these kinds of questions. Your interventions reveal the drama in your story of directed social change.

Dramatic actions in the HOME initiative include offering educational sessions and forming business alliances, homeowner support groups, and a neighborhood organizing council. At evaluation time, each of these actions is closely connected to output indicators that document whether the program is on track and how fast it is moving. These outputs could be the number of educational sessions held, their average attendance, the size of the business alliance, etc. (These outputs are not depicted in the global model, but that could be done if valuable for users.)
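The output indicators mentioned above (sessions held, average attendance, alliance size) are simple tallies, so they can be computed from basic records. The following is a minimal sketch under stated assumptions: the attendance figures and alliance member names are invented for illustration, and `output_indicators` is a hypothetical helper, not part of the HOME initiative.

```python
from statistics import mean

# Hypothetical attendance records: one entry per educational session held.
session_attendance = [12, 18, 9, 21]
# Hypothetical membership list for the business alliance.
business_alliance = ["Lender A", "Hardware store B", "Grocer C"]


def output_indicators(attendance, alliance):
    """Summarize the output measures named in the text."""
    return {
        "sessions_held": len(attendance),
        # Guard against an empty record so mean() is never called on no data.
        "average_attendance": mean(attendance) if attendance else 0,
        "alliance_size": len(alliance),
    }


print(output_indicators(session_attendance, business_alliance))
```

Recomputing these figures at regular intervals gives a rough sense of whether the program is on track and how fast it is moving.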

- Raw Materials (inputs, resources, or infrastructure). The energy to create change can't come from nothing. Real resources must come into the system. Those resources may be financial, but they may also include people, space, information, technology, equipment, and other assets. The HOME campaign runs because of the input from volunteer educators, support from schools and faith institutions in the neighborhood, discounts provided by lenders and local businesses, revenue from neighborhood revitalization, and increasing social capital among community residents.

- Stakeholders working on the HOME campaign understood that they were challenging a history of racial discrimination and economic injustice. They saw gentrification occurring in nearby neighborhoods. They were aware of backlash from outside property owners who benefit from the status quo. None of these facts are included in the model per se, but a shaded box labeled History and Context was added to serve as a visual reminder that these things are in the background.

- Draft the logic model using both sides of your brain and all the talents of your stakeholders. Use your artistic and your analytic abilities.

- Arrange activities and intended effects in the expected time sequence. And don't forget to include important feedback loops - after all, most actions provoke a reaction.

- Link components by drawing arrows or using other visual methods that communicate the order of activities and effects. (Remember - the model does not have to be linear or read from left to right, top to bottom. A circle may better express a repeating cycle.)

- Allow yourself plenty of space to develop the model. Freely revise the picture to show the relationships better or to add components.

- Neatness counts, so avoid overlapping lines and unnecessary clutter.

- Color code regions of the model to help convey the main storyline.

- Try to keep everything on one page. When the model gets too crowded, either adjust its scope or build nested models.

- Make sure it passes the "laugh test." That is, be sure that the image you create isn't so complex that it provokes an immediate laugh from stakeholders. Of course, different stakeholders will have different laugh thresholds.

- Use PowerPoint or other computer software to animate the model, building it step-by-step so that when you present it to people in an audience, they can follow the logic behind every connection.

- Don't let your model become a tedious exercise that you did just to satisfy someone else. Don't let it sit in a drawer. Once you've gone through the effort of creating a model, the rewards are in its use. Revisit it often and be prepared to make changes. All programs evolve and change through time, if only to keep up with changing conditions in the community. Like a roadmap, a good model will help you to recognize new or reinterpret old territory.

- Also, when things are changing rapidly, it's easy for collaborators to lose sight of their common goals. Having a well-developed logic model can keep stakeholders focused on achieving outcomes while remaining open to finding the best means for accomplishing the work. If you need to take a detour or make a longer stop, the model serves as a framework for incorporating the change.

- Clarify the path of activities to effects and outcomes

- Elaborate links

- Expand activities to reach your goals

- Establish or revise mile markers

- Redefine the boundary of your initiative or program

- Reframe goals or desired outcomes

You will know a model's effectiveness mainly by its usefulness to intended users. A good logic model usually:

- Logically links activities and effects

- Is visually engaging (simple, parsimonious) yet contains the appropriate degree of detail for the purpose (not too simple or too confusing)

- Provokes thought, triggers questions

- Includes forces known to influence the desired outcomes
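The first criterion, that activities and effects are logically linked, can be checked mechanically once the model is written down as data. The sketch below is an assumption-laden illustration: it uses a small, hypothetical fragment of a HOME-style model, the "newsletter" activity and the unlinked effect are deliberately added to show what a mismatch looks like, and `find_mismatches` is a made-up helper name.

```python
# Hypothetical fragment of a logic model: each activity maps to its intended effects.
activity_effects = {
    "credit counseling": ["better understanding of credit ratings"],
    "loan assistance": ["more loan submissions"],
    "newsletter": [],  # hypothetical activity, deliberately left unlinked
}
# Effects the model claims; the last one is deliberately unsupported in this fragment.
intended_effects = [
    "better understanding of credit ratings",
    "more loan submissions",
    "local businesses start incentive programs",
]


def find_mismatches(activity_effects, intended_effects):
    """Return (activities with no intended effect, effects with no supporting activity)."""
    linked = {e for effects in activity_effects.values() for e in effects}
    orphan_activities = sorted(a for a, effects in activity_effects.items() if not effects)
    unsupported_effects = sorted(e for e in intended_effects if e not in linked)
    return orphan_activities, unsupported_effects


orphans, unsupported = find_mismatches(activity_effects, intended_effects)
print("Activities with no intended effect:", orphans)
print("Effects with no supporting activity:", unsupported)
```

Either list being non-empty is a prompt for discussion with stakeholders, not proof of a flaw; the link may simply not have been drawn yet.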

The more complete your model, the better your chances of reaching "the promised land" of the story. In order to tell a complete story or present a complete picture in your model, make sure to consider all forces of change (root causes, trends, and system dynamics). Does your model reveal assumptions and hypotheses about the root causes and feedback loops that contribute to problems and their solutions?

In the HOME model, for instance, low home ownership persists when there is a vicious cycle of discrimination, bad credit, and hopelessness preventing neighborhood-wide organizing and social change. Three pathways of change were proposed to break that cycle: education; business reform; and neighborhood organizing. Building a model on one pathway to address only one force would limit the program's effectiveness.

You can discover forces of change in your situation using multiple assessment strategies, including forward logic and reverse logic as described above. When exploring forces of change, be sure to search for personal factors (knowledge, belief, skills) as well as environmental factors (barriers, opportunities, support, incentives) that keep the situation the same as well as ones that push for it to change.

Take time to simulate

After you've mapped out the structure of a program strategy, there is still another crucial step to take before taking action: some kind of simulation. As logical as the story you are telling seems to you, as a plan for intervention it runs the risk of failure if you haven't explored how things might turn out in the real world of feedback and resistance. Simulation is one of the most practical ways to find out if a seemingly sensible plan will actually play out as you hope.

Simulation is not the same as testing a model with stakeholders to see if it makes logical sense. The point of a simulation is to see how things will change - how the system will behave - through time and under different conditions.

Though simulation is a powerful tool, it can be conducted in ways ranging from the simple to the sophisticated. Simulation can be as straightforward as an unstructured role-playing game, in which you talk the model through to its logical conclusions. In a more structured simulation, you could develop a tabletop exercise in which you proceed step by step through a given scenario with pre-defined roles and responsibilities for the participants. Ultimately, you could create a computer-based mathematical simulation by using any number of available software tools.

The key point to remember is that creating logic models and simulating how those models will behave involve two different sets of skills, both of which are essential for discovering which change strategies will be effective in your community.
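At the computer-based end of that range, even a few lines of code can show how a system might behave through time under different conditions. The toy model below is a sketch, not a validated model of any real neighborhood: the starting share, the yearly conversion rate, and the "resistance" rate are all invented numbers, and `simulate_ownership` is a hypothetical function name.

```python
def simulate_ownership(years, start_share=0.30, conversion_rate=0.05, resistance=0.02):
    """Step a toy model of the owner-occupied share forward one year at a time.

    conversion_rate: assumed fraction of renter households that buy each year
    resistance: assumed fraction of owner households that revert to renting each year
    """
    share = start_share
    history = [share]
    for _ in range(years):
        gained = conversion_rate * (1 - share)  # renters who become owners
        lost = resistance * share               # owners lost to countervailing forces
        share = share + gained - lost
        history.append(share)
    return history


# Compare the same strategy under two assumed levels of resistance.
mild = simulate_ownership(years=10, resistance=0.02)
strong = simulate_ownership(years=10, resistance=0.10)
print(f"Share after 10 years, mild resistance:   {mild[-1]:.2f}")
print(f"Share after 10 years, strong resistance: {strong[-1]:.2f}")
```

Running the same plan under several assumed conditions is the point of the exercise: the comparison, rather than any single number, shows how sensitive the outcome is to feedback and resistance.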

You can probably envision a variety of ways in which you might use the logic model you've developed, or in which logic modeling would benefit your work.

Here are a few advantages that experienced modelers have discovered.

- Logic models integrate planning, implementation, and evaluation. As a detailed description of your initiative, from resources to results, the logic model is equally important for planning, implementing, and evaluating the project. If you are a planner, the modeling process challenges you to think more like an evaluator. If your purpose is evaluation, the modeling prompts discussion of planning. And for those who implement, the modeling answers practical questions about how the work will be organized and managed.

- Logic models prevent mismatches between activities and effects. Planners often summarize an effort by listing its vision, mission, objectives, strategies, and action plans. Even with this information, it can be hard to tell how all the pieces fit together. By connecting activities and effects, a logic model helps avoid proposing activities with no intended effect, or anticipating effects with no supporting activities. The ability to spot such mismatches easily is perhaps the main reason why so many logic models use a flow chart format.