Missing Data | Types, Explanation, & Imputation

Published on December 8, 2021 by Pritha Bhandari. Revised on June 21, 2023.

Missing data, or missing values, occur when you don’t have data stored for certain variables or participants. Data can go missing due to incomplete data entry, equipment malfunctions, lost files, and many other reasons.

Types of missing data

Missing data are errors because your data don’t represent the true values of what you set out to measure.

The reason for the missing data is important to consider, because it helps you determine the type of missing data and what you need to do about it.

There are three main types of missing data.

| Type | Definition |
|---|---|
| Missing completely at random (MCAR) | Missing data are randomly distributed across the variable and unrelated to other variables. |
| Missing at random (MAR) | Missing data are not randomly distributed but they are accounted for by other observed variables. |
| Missing not at random (MNAR) | Missing data systematically differ from the observed values. |

Missing completely at random

When data are missing completely at random (MCAR), the probability of any particular value being missing from your dataset is unrelated to anything else.

The missing values are randomly distributed, so they can come from anywhere in the whole distribution of your values. These MCAR data are also unrelated to other unobserved variables.

However, you note that you have data points from a wide distribution, ranging from low to high values.

Data are often considered MCAR if they seem unrelated to specific values or other variables. In practice, it’s hard to meet this assumption because “true randomness” is rare.

When data are missing due to equipment malfunctions or lost samples, they are considered MCAR.

Missing at random

Data missing at random (MAR) are not actually missing at random; this term is a bit of a misnomer.

This type of missing data systematically differs from the data you’ve collected, but it can be fully accounted for by other observed variables.

The likelihood of a data point being missing is related to another observed variable but not to the specific value of that data point itself.

But looking at the observed data for adults aged 18–25, you notice that the values are widely spread. It’s unlikely that the missing data are missing because of the specific values themselves.

Missing not at random

Data missing not at random (MNAR) are missing for reasons related to the values themselves.

This type of missing data is important to look for because you may lack data from key subgroups within your sample. Your sample may not end up being representative of your population.

Attrition bias

In longitudinal studies, attrition bias can be a form of MNAR data. Attrition bias means that some participants are more likely to drop out than others.

For example, in long-term medical studies, some participants may drop out because they become more and more unwell as the study continues. Their data are MNAR because their health outcomes are worse, so your final dataset may only include healthy individuals, and you miss out on important data.
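To see how the three types behave, here is a minimal simulation sketch. The variable names, the 30%, 60%, and 10% missingness rates, and the normal distributions are all assumptions made for illustration. It compares the mean of the values you would actually observe with the true mean. Note that under MAR the plain observed mean can still shift; the difference from MNAR is that the shift is explained by the observed variable `x`, so it can be corrected.

```python
import random
import statistics

def simulate(n=20000, seed=0):
    """Return the true mean of y and the observed mean under each mechanism."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]       # fully observed variable
    y = [xi + rng.gauss(0, 1) for xi in x]        # variable that will have gaps

    # MCAR: every value has the same 30% chance of being missing.
    mcar = [yi for yi in y if rng.random() > 0.3]
    # MAR: the chance of being missing depends only on the observed x.
    mar = [yi for xi, yi in zip(x, y)
           if rng.random() > (0.6 if xi > 0 else 0.1)]
    # MNAR: the chance of being missing depends on the value of y itself.
    mnar = [yi for yi in y if rng.random() > (0.6 if yi > 0 else 0.1)]

    return {
        "true": statistics.fmean(y),
        "mcar": statistics.fmean(mcar),
        "mar": statistics.fmean(mar),
        "mnar": statistics.fmean(mnar),
    }

means = simulate()
for name, value in means.items():
    print(f"{name:>5}: {value:+.3f}")
```

In this setup, the MCAR mean should land close to the true mean, while the MAR and MNAR means are pulled downward because high values go missing more often.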

Are missing data problematic?

Missing data are problematic because, depending on the type, they can sometimes cause sampling bias. This means your results may not be generalizable outside of your study because your data come from an unrepresentative sample.

In practice, you can often consider two types of missing data ignorable because the missing data don’t systematically differ from your observed values:

- MCAR data

- MAR data

For these two data types, the likelihood of a data point being missing has nothing to do with the value itself. So it’s unlikely that your missing values are significantly different from your observed values.

On the flip side, you have a biased dataset if the missing data systematically differ from your observed data. Data that are MNAR are called non-ignorable for this reason.

How to prevent missing data

Missing data often come from attrition bias, nonresponse, or poorly designed research protocols. When designing your study, it’s good practice to make it easy for your participants to provide data.

Here are some tips to help you minimize missing data:

- Limit the number of follow-ups

- Minimize the amount of data collected

- Make data collection forms user friendly

- Use data validation techniques

- Offer incentives

After you’ve collected data, it’s important to store them carefully, with multiple backups.

How to deal with missing values

To tidy up your data, your options usually include accepting, removing, or recreating the missing data.

You should consider how to deal with each case of missing data based on your assessment of why the data are missing.

- Are these data missing for random or non-random reasons?

- Are the data missing because they represent zero or null values?

- Was the question or measure poorly designed?

Your data can be accepted, or left as is, if they’re MCAR or MAR. However, MNAR data may need more complex treatment.

The most conservative option involves accepting your missing data: you simply leave these cells blank.

It’s best to do this when you believe you’re dealing with MCAR or MAR values. When you have a small sample, you’ll want to conserve as much data as possible because any data removal can affect your statistical power.

You might also recode all missing values with labels of “N/A” (short for “not applicable”) to make them consistent throughout your dataset.

These actions help you retain data from as many research subjects as possible with few or no changes.

You can remove missing data from statistical analyses using listwise or pairwise deletion.

Listwise deletion

Listwise deletion means deleting data from all cases (participants) who have data missing for any variable in your dataset. You’ll have a dataset that’s complete for all participants included in it.

A downside of this technique is that you may end up with a much smaller and/or a biased sample to work with. If significant amounts of data are missing from some variables or measures in particular, the participants who provide those data might significantly differ from those who don’t.

Your sample could be biased because it doesn’t adequately represent the population.

Pairwise deletion

Pairwise deletion lets you keep more of your data by only removing the data points that are missing from any analyses. It conserves more of your data because all available data from cases are included.

It also means that you have an uneven sample size for each of your variables. But it’s helpful when you have a small sample or a large proportion of missing values for some variables.

When you perform analyses with multiple variables, such as a correlation, only cases (participants) with complete data for each variable are included.

- 12 people didn’t answer a question about their gender, reducing the sample size from 114 to 102 participants for the variable “gender.”

- 3 people didn’t answer a question about their age, reducing the sample size from 114 to 111 participants for the variable “age.”
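The difference between the two deletion methods can be sketched in a few lines. This example reuses the counts above (114 participants, 12 without a gender answer, 3 without an age answer) and assumes, purely for illustration, that no participant skipped both questions. The record layout and function names are hypothetical.

```python
# Hypothetical survey records: None marks a missing answer.
records = (
    [{"gender": "f", "age": 30}] * 99        # complete cases
    + [{"gender": None, "age": 41}] * 12     # gender missing
    + [{"gender": "m", "age": None}] * 3     # age missing
)

def pairwise_n(rows, variable):
    """Pairwise deletion: count the rows that have a value for this one variable."""
    return sum(row[variable] is not None for row in rows)

def listwise(rows):
    """Listwise deletion: drop any row with at least one missing value."""
    return [row for row in rows if all(v is not None for v in row.values())]

n_total = len(records)
n_gender = pairwise_n(records, "gender")
n_age = pairwise_n(records, "age")
n_complete = len(listwise(records))
print(n_total, n_gender, n_age, n_complete)
```

Pairwise deletion keeps a different sample size for each variable, while listwise deletion leaves one smaller sample that is complete on every variable.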

Imputation means replacing a missing value with another value based on a reasonable estimate. You use other data to recreate the missing value for a more complete dataset.

You can choose from several imputation methods.

The easiest method of imputation involves replacing missing values with the mean or median value for that variable.
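As a minimal sketch of this method, the helper below (a hypothetical `impute` function with made-up ages) fills each missing entry with the mean or median of the observed entries.

```python
import statistics

def impute(values, strategy="mean"):
    """Replace None entries with the mean or median of the observed entries."""
    observed = [v for v in values if v is not None]
    if strategy == "mean":
        fill = statistics.fmean(observed)
    else:
        fill = statistics.median(observed)
    return [fill if v is None else v for v in values]

ages = [22, 25, None, 31, 70, None]   # hypothetical data with two gaps
mean_filled = impute(ages, "mean")
median_filled = impute(ages, "median")
print(mean_filled)
print(median_filled)
```

The median is less sensitive to the one unusually high value here, which is one reason you might prefer it for skewed variables. Either way, every gap gets the same value, so the filled-in variable looks less spread out than the real one would.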

Hot-deck imputation

In hot-deck imputation, you replace each missing value with an existing value from a similar case or participant within your dataset. For each case with missing values, the missing value is replaced by a value from a so-called “donor” that’s similar to that case based on data for other variables.

You sort the data based on other variables and search for participants who responded similarly to other questions compared to your participants with missing values.
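One simple way to pick a donor is a nearest-neighbor search, sketched below. The similarity rule (sum of absolute differences), the `hot_deck` function, and the survey fields are assumptions for illustration; real hot-deck procedures vary in how they define "similar."

```python
def hot_deck(rows, target, match_on):
    """Fill missing `target` values from the most similar complete row (the donor)."""
    donors = [r for r in rows if r[target] is not None]
    filled = []
    for row in rows:
        if row[target] is None:
            # Similarity is the sum of absolute differences on the matching variables.
            donor = min(
                donors,
                key=lambda d: sum(abs(d[k] - row[k]) for k in match_on),
            )
            row = {**row, target: donor[target]}   # copy, so the input stays unchanged
        filled.append(row)
    return filled

survey = [
    {"age": 23, "hours_online": 6, "income": 21000},
    {"age": 47, "hours_online": 2, "income": 58000},
    {"age": 25, "hours_online": 5, "income": None},   # most similar to the first row
]
completed = hot_deck(survey, target="income", match_on=["age", "hours_online"])
print(completed[2])
```

Here the third participant receives the income of the first participant, who is closest in age and hours online.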

Cold-deck imputation

Alternatively, in cold-deck imputation, you replace missing values with existing values from similar cases from other datasets. The new values come from an unrelated sample.

You search for participants who responded similarly to other questions compared to your participants with missing values.

Use imputation carefully

Imputation is a complicated task because you have to weigh the pros and cons.

Although you retain all of your data, this method can create research bias and lead to inaccurate results. You can never know for sure whether the replaced value accurately reflects what would have been observed or answered. That’s why it’s best to apply imputation with caution.

In any dataset, there’s usually some missing data. In quantitative research, missing values appear as blank cells in your spreadsheet.

Missing data are important because, depending on the type, they can sometimes bias your results. This means your results may not be generalizable outside of your study because your data come from an unrepresentative sample.

To tidy up your missing data, your options usually include accepting, removing, or recreating the missing data.

- Acceptance: You leave your data as is

- Listwise or pairwise deletion: You exclude cases (participants) with missing data from all analyses (listwise) or only from the analyses that need the missing values (pairwise)

- Imputation: You use other data to fill in the missing data

Bhandari, P. (2023, June 21). Missing Data | Types, Explanation, & Imputation. Scribbr. Retrieved July 10, 2024, from https://www.scribbr.com/statistics/missing-data/

Statistics By Jim

Making statistics intuitive

Missing Data Overview: Types, Implications & Handling

By Jim Frost

Missing data refers to the absence of data entries in a dataset where values are expected but not recorded. They’re the blank cells in your data sheet. Missing values for specific variables or participants can occur for many reasons, including incomplete data entry, equipment failures, or lost files. When data are missing, it’s a problem. However, the issues go beyond merely reducing the sample size. In some cases, they can skew your results.

Read on to learn more about the types of missing data, how they affect your results, and when and how to address them.

Types of Missing Data Explained

Missing data are not all created equal. There are varying types that have distinct impacts on your dataset and the conclusions you draw from your analysis. Furthermore, the extent to which absent values affect the study results largely depends on the type. These types require different strategies to maintain the integrity of your findings.

Missing data can be a form of Selection Bias.

Let’s delve into three types of missing data with examples to illustrate how they might appear in real-world datasets and affect your analysis. We’ll go from the best to worst kind.

Missing Completely at Random (MCAR)

When data are missing completely at random (MCAR), the likelihood of missing values is the same across all observations. In other words, the causes for the missing data are entirely unrelated to the data itself and affect all observations equally. Consequently, you can disregard the potential for the bias that occurs with other kinds of absent values.

For a bone density study I worked on, we measured the subjects’ activity levels for 12 hours with accelerometers and load monitors. Invariably, those monitors would fail randomly, and we’d lose some data. Those data are MCAR because all observations had an equal probability of containing missing values.

Fortunately, when your data gaps are MCAR, you can usually ignore them.

When data are Missing Completely at Random (MCAR), their absence is independent of any measured or unmeasured variables in the study. This randomness means that the missing data are less likely to introduce bias related to the data’s distribution, and you can often ignore them without distorting the analysis. MCAR data reduces sample size and the precision of the sample estimates but tends not to introduce bias. Standard hypothesis tests account for these random losses automatically because the standard errors and degrees of freedom reflect the reduced sample size, thus preserving the study’s integrity.
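To put a number on the loss of precision, here’s a quick sketch using the standard error of the mean, which is the standard deviation divided by the square root of the sample size. The standard deviation of 10 and the sample sizes are made-up values, and the sketch assumes the MCAR losses leave the standard deviation unchanged.

```python
import math

def standard_error(sd, n):
    """Standard error of the mean for a sample of size n."""
    return sd / math.sqrt(n)

se_full = standard_error(sd=10.0, n=100)     # no missing values
se_reduced = standard_error(sd=10.0, n=64)   # 36 values lost completely at random

# Half-width of an approximate 95% confidence interval in each case.
half_width_full = 1.96 * se_full
half_width_reduced = 1.96 * se_reduced
print(se_full, se_reduced)
```

The estimate is still centered in the same place, but the confidence interval is wider. That’s the cost of MCAR data: less precision, not bias.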

Missing at Random (MAR)

Despite its name, MAR occurs when the absence of data is not random. The probability of missing data is not equal for all measurements. They’re more likely for some observations than others. However, measurements of observed variables predict the unequal probability of missing values occurring. Crucially, those probabilities don’t relate to the missing information itself. Hence, statisticians say that the data gaps correlate with observed values and not the unobserved (missing) values.

For instance, consider a medical study tracking the effects of a new medication. If patients from a particular region are less likely to complete follow-up visits — perhaps due to longer travel distances — their follow-up data would be missing. If the dataset includes the patients’ geographical information, this missing data would be classified as MAR. The missingness depends on the observed geographic location but not directly on the unobserved follow-up outcomes themselves. By acknowledging the role of geography in the availability of follow-up data, researchers can adjust their analyses to better estimate the medication’s effects across all regions.

Analysis of MAR missing data can produce biased results when analysts don’t correctly handle them. This bias occurs because missing values systematically differ from observed values, changing the properties of your sample. It is no longer a representative sample!

However, despite being non-random, MAR is a middle ground where your results can be unbiased when you use the correct methods. If your model includes the observed variables that predict which values are absent, you can treat the missing data as effectively MCAR after conditioning on those variables. Modern techniques for handling absent values often begin with the assumption of MAR, as it allows for more nuanced analyses that can account for observed patterns in the dataset.
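Here’s a small sketch of that idea using the regional follow-up example. The outcome values and region labels are invented, and the adjustment shown (averaging within each region and then weighting by region size) is just one simple way to use the observed variable. It assumes the missed follow-ups in the far region resemble the completed ones from that region, which is what MAR implies.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical follow-up outcomes; None marks a missed follow-up visit.
patients = (
    [("near", v) for v in (10, 12, 14, 10, 12, 14)]
    + [("far", v) for v in (20, 22, 24)]
    + [("far", None)] * 3
)

# Naive estimate: average whatever was observed, ignoring region.
naive_mean = fmean(v for _, v in patients if v is not None)

# Adjusted estimate: average within each region, then weight by region size.
observed_by_region = defaultdict(list)
region_size = defaultdict(int)
for region, value in patients:
    region_size[region] += 1          # region is known for every patient
    if value is not None:
        observed_by_region[region].append(value)

adjusted_mean = sum(
    region_size[r] * fmean(vals) for r, vals in observed_by_region.items()
) / len(patients)
print(naive_mean, adjusted_mean)
```

The naive mean leans toward the near region because that region is overrepresented among the completed visits. The adjusted mean gives each region its proper share.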

Missing Not at Random (MNAR)

This type occurs when the probability of missing data relates to the absent values themselves, indicating a deeper issue within the dataset. Hence, it’s a problem because you can’t fully understand or model the missingness from the data you observed.

In health surveys, individuals with more severe symptoms might be less likely to report their health status. This pattern creates a dataset where sicker individuals are underrepresented.

Missing Not at Random (MNAR) is the most challenging type of missing data because it occurs when the absence of data directly relates to the missing values themselves. This situation can introduce significant biases because the absent values are systematically different from the ones you record. For instance, if lower-income people are less likely to report their earnings, analysis of these data will likely overestimate the average income.

You might be unable to analyze MNAR data without producing biased results. And, unlike MAR, you can’t correct the bias using your observed variables. In this case, you should critically evaluate your results and compare them to other studies to assess the potential for bias and its degree.

Handling Missing Data

When dealing with missing data, researchers must decide on the best strategy to ensure their analysis remains robust and meaningful.

You typically have three options: accept, remove, or recreate them through estimation. Removal comes in two forms, listwise and pairwise deletion.

- Accepting: Leave the blank cells in your dataset and analyze.

- Listwise deletion: This technique removes an entire record when any value is missing. It’s straightforward and ensures that only complete cases are analyzed.

- Pairwise deletion: Unlike listwise deletion, pairwise deletion uses all available data by analyzing pairs of variables without missing values. This method includes more data points in specific statistical analyses but likely has unequal sample sizes for different pairs.

- Imputation: Fills in missing data with estimated values. The simplest form replaces absent values with the variable’s mean, median, or mode. More sophisticated methods, like regression imputation, predict missing values based on related information in the dataset.

Analyzing Missing Data Discussion

Accepting missing data is best for MCAR because they are unlikely to bias your results.

The deletion methods simplify the data handling process but reduce the sample size. Critically, deletion can introduce bias when the absent values are not MCAR.

Imputation helps maintain statistical power by estimating missing values and addressing reduced sample sizes. However, it risks introducing bias if the calculated values do not accurately reflect the correct values. Choosing the proper imputation method is crucial, as incorrect assumptions can result in misleading analysis outcomes.

For MAR data, advanced techniques like regression or multiple imputation can produce unbiased estimates. Consequently, they offer a significant advantage over the deletion methods.

Note: Using a measure of central tendency to replace missing values will still yield biased results for MAR data.
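A tiny sketch shows why. In the made-up data below, `y` equals exactly twice `x`, and `y` is missing only for the largest `x` values, so the missingness depends on an observed variable (MAR). The comparison between mean imputation and a simple least-squares regression imputation is an illustration under those assumptions, not a recommended workflow.

```python
from statistics import fmean

x = [1, 2, 3, 4, 5, 6]
y = [2, 4, 6, 8, None, None]   # hypothetical: true missing values are 10 and 12

# Fit y = intercept + slope * x on the complete cases (ordinary least squares).
pairs = [(xi, yi) for xi, yi in zip(x, y) if yi is not None]
x_bar = fmean(xi for xi, _ in pairs)
y_bar = fmean(yi for _, yi in pairs)
sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in pairs)
sxx = sum((xi - x_bar) ** 2 for xi, _ in pairs)
slope = sxy / sxx
intercept = y_bar - slope * x_bar

# Mean imputation ignores x; regression imputation uses it.
mean_imputed = [y_bar if yi is None else yi for yi in y]
reg_imputed = [intercept + slope * xi if yi is None else yi
               for xi, yi in zip(x, y)]

print(fmean(mean_imputed), fmean(reg_imputed))
```

Mean imputation drags the average of `y` down to the average of the observed cases, while regression imputation recovers the mean of the full data in this idealized case. A single regression imputation still understates uncertainty, which is part of the appeal of multiple imputation.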

Navigating missing information is an essential skill in statistical analysis. By understanding the types of missing data and implementing strategies to manage them, researchers can ensure more accurate and reliable outcomes. Effective handling of absent values enriches the quality of your analysis and bolsters the credibility of your findings in the broader research community.

Remember, the goal is not only to handle missing data but also to anticipate and mitigate its occurrence and to ensure your dataset is representative and comprehensive.

- Open access

- Published: 03 April 2022

Evaluation of multiple imputation approaches for handling missing covariate information in a case-cohort study with a binary outcome

Melissa Middleton, Cattram Nguyen, Margarita Moreno-Betancur, John B. Carlin & Katherine J. Lee

BMC Medical Research Methodology volume 22, Article number: 87 (2022)

Background

In case-cohort studies a random subcohort is selected from the inception cohort and acts as the sample of controls for several outcome investigations. Analysis is conducted using only the cases and the subcohort, with inverse probability weighting (IPW) used to account for the unequal sampling probabilities resulting from the study design. Like all epidemiological studies, case-cohort studies are susceptible to missing data. Multiple imputation (MI) has become increasingly popular for addressing missing data in epidemiological studies. It is currently unclear how best to incorporate the weights from a case-cohort analysis in MI procedures used to address missing covariate data.

Methods

A simulation study was conducted with missingness in two covariates, motivated by a case study within the Barwon Infant Study. MI methods considered were: using the outcome, a proxy for weights in the simple case-cohort design considered, as a predictor in the imputation model, with and without exposure and covariate interactions; imputing separately within each weight category; and using a weighted imputation model. These methods were compared to a complete case analysis (CCA) within the context of a standard IPW analysis model estimating either the risk or odds ratio. The strength of associations, missing data mechanism, proportion of observations with incomplete covariate data, and subcohort selection probability varied across the simulation scenarios. Methods were also applied to the case study.

Results

There was similar performance in terms of relative bias and precision with all MI methods across the scenarios considered, with expected improvements compared with the CCA. Slight underestimation of the standard error was seen throughout but the nominal level of coverage (95%) was generally achieved. All MI methods showed a similar increase in precision as the subcohort selection probability increased, irrespective of the scenario. A similar pattern of results was seen in the case study.

Conclusions

How weights were incorporated into the imputation model had minimal effect on the performance of MI; this may be due to case-cohort studies only having two weight categories. In this context, inclusion of the outcome in the imputation model was sufficient to account for the unequal sampling probabilities in the analysis model.

Epidemiological studies often collect large amounts of data on many individuals. Some of this information may be costly to analyse, for example biological samples. Furthermore, if there are a limited number of cases, data on all non-cases may provide little additional information to that provided by a subset [ 1 ]. In this context, investigators may opt to use a case-cohort study design, in which background covariate data and outcomes are collected on all participants and more costly exposures (e.g. metabolite levels) are collected on a smaller subset. An example of a cohort study adopting the case-cohort design is the Barwon Infant Study (BIS). This is a population-derived cohort study with a focus on non-communicable diseases and the biological processes driving them. Given that a number of investigations within BIS involve exposures collected through costly biomarker and metabolite analysis, for example serum vitamin D levels, the case-cohort design was implemented to minimise cost [ 2 ].

In the case-cohort design, a subset of the full cohort, hereafter termed the subcohort, is randomly selected from the inception cohort. This subcohort is used as the sample of controls for all subsequent investigations, with exposure data collected from the subcohort and all cases [ 3 ]. In such a study, the analysis is conducted on the subcohort and cases only, resulting in an unequal probability of selection into the analysis, with cases having probability of selection equal to 1 and non-case subcohort members having a probability of selection less than 1. This unequal sampling should be accounted for in the analysis so as to avoid bias induced due to the oversampling of cases [ 4 ].

One way to view the case-cohort design, and to address the unequal sampling, is to treat it as a missing data problem, where the exposure data is ‘missing by design’. Standard practice in the analysis of case-cohort studies is to handle this missing exposure data using inverse probability weighting (IPW) based on the probability of selection into the analysis [ 1 ]. Additionally, case-cohort studies may be subject to unintended missing data in the covariates, and multiple imputation (MI) may be applied to address this missing data.

MI is a two-stage procedure in which missing values are first imputed by drawing plausible sets of values from the posterior distribution of the missing data given the observed data, to form multiple, say m > 1, complete datasets. In the second stage, the analysis is conducted on each of these m datasets as though they were fully observed, producing m estimates of the target estimands. An overall estimate for the parameter of interest is produced, along with its variance, using Rubin’s rules [ 5 ]. If the imputation model is appropriate, and the assumptions on the missing data mechanism hold, then the resulting estimates are unbiased with standard errors (SE) that reflect not only the variation of the data but also the uncertainty in the missing values [ 6 ].
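The pooling step can be sketched as follows. Under Rubin’s rules the pooled estimate is the mean of the m estimates, and the total variance is the average within-imputation variance plus (1 + 1/m) times the between-imputation variance. The function name and the numerical inputs below are hypothetical and are not taken from the study.

```python
import math
from statistics import fmean, variance

def pool_rubin(estimates, variances):
    """Pool m point estimates and their squared standard errors using Rubin's rules."""
    m = len(estimates)
    q_bar = fmean(estimates)         # pooled point estimate
    within = fmean(variances)        # average within-imputation variance
    between = variance(estimates)    # between-imputation variance (m - 1 denominator)
    total = within + (1 + 1 / m) * between
    return q_bar, math.sqrt(total)

# Hypothetical log risk ratios and squared standard errors from m = 5 imputed datasets.
log_rr = [0.40, 0.50, 0.45, 0.55, 0.60]
se_sq = [0.04, 0.05, 0.04, 0.05, 0.04]
pooled, pooled_se = pool_rubin(log_rr, se_sq)
print(pooled, pooled_se)
```

The between-imputation term is what allows the pooled SE to reflect the uncertainty in the missing values rather than only the sampling variation.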

When conducting MI, there are two general approaches that can be used to generate the imputed datasets when there is multivariate missingness: joint modelling, most commonly multivariate normal imputation (MVNI), and fully conditional specification (FCS). Under MVNI the missing covariates are assumed to jointly follow a multivariate normal distribution [ 7 ]. In contrast, FCS uses a series of univariate imputation models, one for each incomplete covariate, and imputes missing values for each variable by iterating through these models sequentially [ 8 ]. To obtain valid inferences, careful consideration must be made when constructing the imputation model such that it incorporates all features of the analysis model, in order to ensure compatibility between the imputation and analysis model [ 9 , 10 ].

In simple terms, to achieve compatibility, the imputation model must at least include all the variables in the analysis model, and in the same form. It may also include additional variables, termed auxiliary variables, which can be used to improve the precision of the inference if the auxiliary variables are associated with the variables that have missing data. Auxiliary variables may additionally decrease bias if they are strong predictors of missingness [ 11 ]. In the context of a case-cohort study where the target estimand is the coefficient for the risk ratio (RR) estimated from a log-binomial model with IPW to address unequal probability of selection, two key features should be reflected in the imputation model; 1) the assumed distribution of the outcome, given the exposure and covariates (i.e. log-binomial), and 2) the weights. It is currently unclear how best to incorporate weights into MI in the context of a case-cohort analysis.

It has been previously shown that ignoring weights during MI can introduce bias into the point estimates and estimated variance produced through Rubin’s rules in the context of an IPW analysis model [ 12 , 13 ]. Various approaches to incorporate weights into MI have been proposed in the literature. Previous work from Marti and Chavance [ 14 ] in a survival analysis of case-cohort data suggests that simply including the outcome, as a proxy for the weights, in the imputation model may be sufficient for incorporating the weights. In the case-cohort setting we are considering, there are only two distinct weights, representing the unequal selection for cases and controls, so the weighting variable is completely collinear with the outcome. An additional approach is to include weights and an interaction between the weights and all of the variables in the analysis model in the imputation model. Carpenter and Kenward [ 12 ] illustrated that this corrected for the bias seen when the weights are ignored in the imputation model. One difficulty with this approach is that it may be infeasible if there are several incomplete variables. Another drawback is the increased number of parameters to be estimated during imputation. Another potential approach is to use stratum-specific imputation, in which missing values for cases and non-case subcohort members are imputed separately. While many studies have compared MI approaches in the case-cohort setting [ 14 , 15 , 16 , 17 ], these are in the context of a time-to-event endpoint and predominantly considered MI only to address the missing exposure due to the design. Keogh [ 16 ] considered additional missingness in the covariates, but this was in the context of a survival analysis where weights were dependent on time. While there are many approaches available, it is unclear how these perform in a simple case-cohort context with missing covariate data.

The aim of this study was to compare the performance of a range of possible methods for implementing MI to handle missing covariate data in the context of a case-cohort study where the target analysis uses IPW to estimate the i) RR and ii) odds ratio (OR). Whilst the common estimand in case-cohort studies is the RR due to the ability to directly estimate this quantity without the rare-disease assumption [ 18 ], we have chosen to additionally consider the target estimand being the coefficient for the OR as this is still a commonly used estimand. The performance of the MI approaches was explored under a range of scenarios through the use of a simulation study closely based on a case study within BIS, and application of these methods to the BIS data. The ultimate goal was to provide guidance on the use of MI for handling covariate missingness in the analysis of case-cohort studies.

The paper is structured as follows. We first introduce the motivating example, a case-cohort investigation within BIS, and the target analysis models used for this study. This is followed by a description of the MI approaches to be assessed and details of a simulation study designed to evaluate these approaches based on the BIS case study. We then apply these approaches to the BIS case study. Finally, we conclude with a discussion.

Motivating example

The motivating example for this study comes from a case-cohort investigation within BIS [ 19 ]. A full description of BIS can be found elsewhere [ 2 ]. Briefly, it is a population-derived longitudinal birth cohort study of infants recruited during pregnancy (n = 1074). The research question focused on the effect of vitamin D insufficiency (VDI) at birth on the risk of food allergy at one-year. Cord blood was collected and stored after birth, and the children were followed up at one-year. During this review, the infant’s allergy status to five common food allergens (cow’s milk, peanuts, egg, sesame and cashew) was determined through a combination of a skin prick test and a food challenge. Of those who completed the one-year review (n = 894, 83%), a random subcohort was selected (n = 274), with a probability of approximately 0.31. The exposure, VDI, was defined as 25(OH)D3 serum metabolite levels below 50 nM and was measured from those with a confirmed food allergy at one-year and those who were selected into the subcohort.

The planned primary analysis of the case study was to estimate the RR for the target association using IPW in a binomial regression model adjusted for the confounding variables: family history of allergy (any of asthma, hay fever, eczema, or food allergy in an infant’s parent or sibling), Caucasian ethnicity of the infant, number of siblings, domestic pet ownership, and formula feeding at 6 and 12 months. The target analysis of this study adjusted for a slightly different set of confounders to the BIS example. A description of these variables and the amount of missing data in each is shown in Table 1 .

Target analysis

In this study, we focus on two estimands from two different analysis models. Each model targets the association between VDI and food allergy at one-year, adjusting for confounders. The first model estimates the adjusted RR using a Poisson regression model with a log-link and a robust error variance [20] to avoid the known convergence issues of the log-binomial model:
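Written generically, with the confounders collected in a vector \(\mathbf{C}_i\), a log-link model of this kind takes the form sketched below. The vector notation and the labels \(\theta_0\) and \(\boldsymbol{\theta}_C\) are assumptions made here for illustration and may not match the authors' exact parameterisation; only \(\theta_1\) is named in the text.

```latex
\[
\log \Pr(\text{food allergy}_i = 1 \mid \text{VDI}_i, \mathbf{C}_i)
  = \theta_0 + \theta_1 \,\text{VDI}_i + \boldsymbol{\theta}_C^{\top} \mathbf{C}_i
  \tag{1}
\]
```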

The RR of interest is \(\exp(\theta_1)\). The second target estimand is the adjusted OR for the exposure-outcome association, estimated via a logistic regression model:
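In the same generic notation (again, \(\mathbf{C}_i\), \(\beta_0\) and \(\boldsymbol{\beta}_C\) are illustrative assumptions; only \(\beta_1\) is named in the text), a logistic model of this kind can be sketched as:

```latex
\[
\operatorname{logit} \Pr(\text{food allergy}_i = 1 \mid \text{VDI}_i, \mathbf{C}_i)
  = \beta_0 + \beta_1 \,\text{VDI}_i + \boldsymbol{\beta}_C^{\top} \mathbf{C}_i
  \tag{2}
\]
```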

The OR of interest is \(\exp(\beta_1)\). Estimation for each model uses IPW, where the weights are estimated using the method outlined by Borgan [21] for stratified sampling of the cohort, noting that the oversampling of the cases is a special case of stratified sampling where stratification depends on the outcome. The weight for the \(i\)th individual can be defined as \(w_i = 1\) for cases and \(w_i = n_0 / m_0\) for non-cases, where \(n_0\) is the number of non-cases in the full cohort and \(m_0\) is the number of non-cases within the subcohort.
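The weight definition can be illustrated with a minimal Python sketch. The function name and the list-based inputs are hypothetical choices for illustration; records that are neither cases nor subcohort members fall outside the case-cohort sample and are given no weight here.

```python
def case_cohort_weights(is_case, in_subcohort):
    """Return one weight per cohort member, or None if outside the case-cohort sample.

    is_case and in_subcohort are equal-length lists of booleans
    (hypothetical input format chosen for this sketch).
    """
    # n0: non-cases in the full cohort; m0: non-cases within the subcohort
    n0 = sum(1 for case in is_case if not case)
    m0 = sum(1 for case, sub in zip(is_case, in_subcohort) if not case and sub)
    if m0 == 0:
        raise ValueError("the subcohort contains no non-cases")

    weights = []
    for case, sub in zip(is_case, in_subcohort):
        if case:
            weights.append(1.0)       # all cases are sampled with certainty
        elif sub:
            weights.append(n0 / m0)   # non-case subcohort members
        else:
            weights.append(None)      # not part of the case-cohort sample
    return weights


# Toy cohort: two cases, four non-cases, two of the non-cases in the subcohort
example_cases = [True, False, False, False, False, True]
example_subcohort = [False, True, False, True, False, True]
example_weights = case_cohort_weights(example_cases, example_subcohort)
```

In this toy cohort the two sampled non-cases each stand in for two non-cases in the full cohort, so their weight is 4 / 2 = 2.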

Below we outline the four approaches we considered in the BIS case study and simulation study for incorporating the weights into the imputation model. All MI approaches include the outcome, exposure, covariates, and auxiliary variables in the imputation model except where specified. Where imputation has been applied under FCS, binary variables have been imputed from a logistic model. For MVNI, all variables are imputed from a multivariate normal distribution, conditional on all other variables, with imputed covariates included in the analysis as is (i.e. without rounding).

Weight proxy as a main effect

Under this approach, only the analysis and auxiliary variables listed above were included in the imputation model, with the outcome acting as a proxy for the weights due to the collinearity between the outcome and weights. This approach is implemented under both the FCS and MVNI frameworks.

Weight proxy interactions

The second approach includes two-way interactions between the outcome (as a proxy for the weights) and all other analysis variables in the imputation model. Within FCS, the interactions were included as predictors, with these derived within each iteration of the imputation [22]. Within MVNI, interactions were considered as ‘just another variable’ in the imputation model [22].

Stratum-specific imputation

In the case-cohort setting, where there are only two weight strata, another option is to impute separately within each weight/outcome stratum. Here, the outcome is not included in the imputation model, but rather the incomplete covariates are imputed using a model including the exposure, other covariates and auxiliary variables, for cases and non-cases separately.

Weighted imputation model

A final option is to impute the missing values using a weighted imputation model, where the weights are set to those used during analysis. This can only be conducted within the FCS framework.

The approaches for handling the missing covariate data are summarised in Table 2.

Simulation study

A simulation study was conducted to assess the performance of each MI approach for accommodating the case-cohort weights into the imputation model, across a range of scenarios. Simulations were conducted using Stata 15.1 [23]. Cohorts of size 1000 were generated using models outlined above with parameter values based on the observed relationships in BIS (except where noted).

Complete data generation

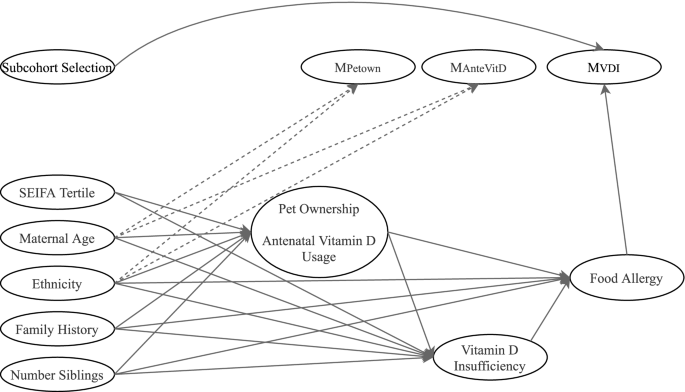

Complete data, comprising the exposure, five confounders and two auxiliary variables, were generated sequentially using the models listed below. Models for data generation were constructed based on the plausible causal structure specified in Fig. 1 . A table showing the parameter values can be found in Additional file 1 .

Caucasian ethnicity

Missingness directed acyclic graph (m-DAG) depicting the assumed causal structure between simulated variables and missingness indicators under the dependent missing mechanisms. For the independent missing mechanism, the dashed lines are absent. For simplicity, associations between baseline covariates have not been shown

Maternal age at birth, in years, (auxiliary variable)

where \(\varepsilon \sim N(0, \sigma^2)\)

Socioeconomic Index for Areas (SEIFA) tertile, (auxiliary variable)

Family history of allergy

Number of siblings

Pet ownership and antenatal vitamin D supplement usage

The exposure, VDI

Finally, the outcome, food allergy at one-year, was generated from a Bernoulli distribution with a probability determined by either model (1) or model (2) so the target analysis was correctly specified. In these models we set \(\theta_1 = \log(RR_{\text{adj}}) = \log(1.16)\) and \(\beta_1 = \log(OR_{\text{adj}}) = \log(1.18)\) as estimated from BIS. Given the weak exposure-outcome association in BIS, we also generated food allergy with an enhanced association where we set \(\theta_1 = \beta_1 = \log(2.0)\).
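As a rough illustration of this step, the Python sketch below draws a Bernoulli outcome from either a log-link or a logit-link model. The intercept value, the `confounder_term` placeholder and the function names are hypothetical; the actual parameter values are those reported in Additional file 1 and are not reproduced here.

```python
import math
import random


def outcome_probability(vdi, theta0, theta1, link, confounder_term=0.0):
    """Probability of the outcome under a log-link or logit-link model.

    confounder_term stands in for the summed confounder contributions
    (hypothetical placeholder for this sketch).
    """
    eta = theta0 + theta1 * vdi + confounder_term
    if link == "log":
        p = math.exp(eta)
        if p > 1.0:
            raise ValueError("log-link linear predictor gives a probability above 1")
        return p
    if link == "logit":
        return 1.0 / (1.0 + math.exp(-eta))
    raise ValueError("link must be 'log' or 'logit'")


def draw_outcome(p, rng):
    """Single Bernoulli draw with success probability p."""
    return 1 if rng.random() < p else 0


rng = random.Random(2022)       # arbitrary seed for reproducibility
theta0 = math.log(0.10)         # hypothetical baseline risk of 10%
theta1 = math.log(2.0)          # enhanced association described in the text
p_exposed = outcome_probability(1, theta0, theta1, "log")
p_unexposed = outcome_probability(0, theta0, theta1, "log")
sample = [draw_outcome(p_exposed, rng) for _ in range(10)]
```

Under the log link the ratio of the two probabilities equals \(\exp(\theta_1)\), which is why generating from model (1) makes the RR analysis correctly specified; the same holds for the odds under the logit link.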

An additional extreme data generation scenario was considered as a means to stress-test the MI approaches under more extreme conditions. In this scenario, the associations between the continuous auxiliary variable of maternal age and the exposure, missing covariates, and missing indicator variables were strengthened.

Inducing missingness

Missingness was introduced into two covariates, antenatal vitamin D usage and pet ownership. Missingness was generated such that \(p\%\) of overall observations had incomplete covariate information, with \(\frac{p}{2}\%\) having missing data in just one covariate and \(\frac{p}{2}\%\) having missing data in both, where \(p\) was chosen as either 15 or 30. Three missing data mechanisms were considered: an independent missing data mechanism and two dependent missing data mechanisms as depicted in the missingness directed acyclic graph (m-DAG) in Fig. 1.

Under the independent missing data mechanism, missingness in each covariate was randomly assigned to align with the desired proportions. Under the dependent missingness mechanisms, an indicator for missingness in pet ownership, \(M_{\text{petown}}\), was initially generated from a logistic model (13), followed by an indicator for missingness in antenatal vitamin D usage, \(M_{\text{antevd}}\) (model (14)).

The parameters \(\nu_0\), \(\tau_0\), and \(\tau_4\) were iteratively chosen until the desired proportions of missing information were obtained. Missingness indicators were generated dependent on the outcome (a setting where the complete-case analysis would be expected to be biased) and an auxiliary variable (a setting where we expect a benefit of MI over the complete-case analysis), as depicted in the causal diagram in Fig. 1. The dependency between the missing indicator variables was used to simultaneously control both the overall proportion of incomplete records and the proportion with missingness in both variables.
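One way to choose an intercept so that a logistic missingness model yields a target proportion is a simple bisection search, sketched below in Python. This is an illustrative stand-in, not the authors' procedure: the text says only that the parameters were chosen iteratively, and the predictor values, slopes and function names here are hypothetical.

```python
import math


def expit(x):
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-x))


def mean_missing_prob(intercept, slopes, rows):
    """Average probability of missingness over the rows of predictor values."""
    total = 0.0
    for row in rows:
        eta = intercept + sum(b * x for b, x in zip(slopes, row))
        total += expit(eta)
    return total / len(rows)


def calibrate_intercept(target, slopes, rows, lo=-20.0, hi=20.0, tol=1e-6):
    """Bisection on the intercept; the mean probability increases with it."""
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if mean_missing_prob(mid, slopes, rows) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


# Hypothetical predictors: (outcome, standardised auxiliary variable)
rows = [(y, a) for y in (0, 1) for a in (-1.0, 0.0, 1.0)]
slopes = (0.8, 0.5)   # hypothetical log odds ratios
intercept = calibrate_intercept(0.30, slopes, rows)
achieved = mean_missing_prob(intercept, slopes, rows)
```

The same idea extends to a second indicator that depends on the first, which is how a dependency between the indicators can be used to control the proportion missing in both variables.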

The two dependent missing mechanisms differed in the strength of association between predictor variables and the missing indicators. The first mechanism used parameter values set to those estimated in BIS (termed Dependent Missingness – Observed, or DMO). The second used an enhanced mechanism where the parameter values were doubled (termed Dependent Missingness – Enhanced, or DME). The parameter values used to induce missingness under the dependent missingness mechanisms can be found in Additional file 1.

To mimic the case-cohort design, a subcohort was then randomly selected using one of three probabilities of selection (0.20, 0.30, 0.40). The exposure, VDI, was set to missing for participants without the outcome and who had not been selected into the subcohort.
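The design step can be sketched in Python as follows. The record format and function name are hypothetical, and selecting each participant independently with the stated probability is an assumption of this sketch; the text does not say whether a fixed-size sample was drawn instead.

```python
import random


def apply_case_cohort_design(records, selection_prob, rng):
    """Flag subcohort membership and blank the exposure where it is missing by design.

    records is a list of dicts with keys 'outcome' (0/1) and 'vdi'
    (hypothetical format). The input list is left unchanged.
    """
    result = []
    for rec in records:
        new = dict(rec)
        # Assumption: independent selection with probability selection_prob
        new["in_subcohort"] = rng.random() < selection_prob
        # Exposure is observed only for cases and subcohort members
        if new["outcome"] == 0 and not new["in_subcohort"]:
            new["vdi"] = None
        result.append(new)
    return result


rng = random.Random(1)  # arbitrary seed
cohort = [{"outcome": 1 if i % 5 == 0 else 0, "vdi": i % 2} for i in range(200)]
sampled = apply_case_cohort_design(cohort, 0.30, rng)
case_cohort_sample = [r for r in sampled if r["outcome"] == 1 or r["in_subcohort"]]
```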

Overall, we considered 78 scenarios (2 data generation processes, 2 exposure-outcome associations, 3 missing data mechanisms, 2 incomplete covariate proportions, and 3 subcohort selection probabilities, plus another 6 scenarios under extreme conditions).
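The scenario count can be checked by enumerating the factorial design, as in the short Python sketch below. The labels are illustrative, and reading the 6 extreme scenarios as 2 estimands by 3 selection probabilities is an interpretation based on how the results are later presented.

```python
from itertools import product

# Factor levels as described in the text (labels are illustrative)
estimands = ["RR", "OR"]                       # 2 data generation processes
associations = ["observed", "enhanced"]        # 2 exposure-outcome associations
mechanisms = ["independent", "DMO", "DME"]     # 3 missing data mechanisms
proportions = [15, 30]                         # 2 incomplete covariate proportions (%)
selection_probs = [0.20, 0.30, 0.40]           # 3 subcohort selection probabilities

main_scenarios = list(
    product(estimands, associations, mechanisms, proportions, selection_probs)
)

# Assumed layout of the extreme scenarios: each estimand at each selection probability
extreme_scenarios = list(product(estimands, selection_probs))

total_scenarios = len(main_scenarios) + len(extreme_scenarios)
```

Here the full factorial gives 2 × 2 × 3 × 2 × 3 = 72 scenarios, and adding the 6 extreme scenarios gives 78.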

For the 6 extreme scenarios presented in the results section, the mean percentage of cases in the full cohort across the 2000 simulated datasets was 20.4% (standard deviation: 1.3%) for scenarios targeting RR estimation, and 18.4% (1.2%) for OR estimation. The average case-cohort sample size ranged from 348 to 522, increasing with the probability of subcohort selection. The percentage of incomplete observations within the case-cohort sample ranged from 30.5 to 32.6%, with the percentage of incomplete cases increasing as the subcohort size decreased due to the dependency between the probability of being incomplete and the outcome. Additional file 1 contains a table showing summaries for the 2000 simulated datasets for the 6 extreme scenarios.

Evaluation of MI approaches

For each scenario, the MI approaches outlined above were applied and 30 imputed datasets generated, to match the maximum proportion of missing observations. The imputed datasets were analysed using IPW with the corresponding target analysis model. A complete-data analysis (with no missing data in the subcohort) and a complete-case analysis (CCA) were also conducted for comparison. Performance was measured in terms of percentage bias relative to the true value (relative bias), the empirical and model-based SE, and coverage probability of the 95% confidence interval for the target estimand, the effect of VDI on food allergy (\(\theta_1\) in model (1) and \(\beta_1\) in model (2)). In calculating the performance measures, the true value was taken to be the value used during data generation, with measures calculated using the simsum package in Stata (see [24] for details). We also report the Monte Carlo standard error (MCSE) for each performance measure. For each scenario we present results for 2000 simulations. With 2000 simulations, the MCSE for a true coverage of 95% would be 0.49%, and we can expect the estimated coverage probability to fall between 94.0 and 96.0% [25]. Since convergence issues were expected across the methods, we generated 2200 datasets in each scenario and retained the first 2000 datasets on which all methods converged. These 2000 datasets were used to calculate all performance measures except the convergence rate, which was calculated across the 2200 simulations.
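The quoted MCSE and coverage range follow from the binomial standard error of an estimated proportion. The Python sketch below reproduces the arithmetic; the function name is hypothetical, and the 1.96 multiplier for the expected range is an assumption consistent with the error bars used in the figures.

```python
import math


def coverage_mcse(coverage, n_sim):
    """Monte Carlo standard error of an estimated coverage probability."""
    return math.sqrt(coverage * (1.0 - coverage) / n_sim)


mcse = coverage_mcse(0.95, 2000)   # approximately 0.0049, i.e. 0.49%
lower = 0.95 - 1.96 * mcse         # approximately 0.940
upper = 0.95 + 1.96 * mcse         # approximately 0.960
```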

Bias in RR estimation

Incompatibility between the imputation and analysis model may arise due to the imputation of missing values from a linear or logistic model when the analysis targets the RR [26]. To explore the bias introduced into the point estimate in this context, MI was conducted on the full cohort with completely observed exposure (i.e. before data were set to missing by design) and analysed without weighting. This was conducted to understand the baseline level of bias, prior to the introduction of weighting. Results for this analysis are presented in Additional file 2.

Case study

Each of the MI methods was also applied to the target analyses using BIS data. For consistency with the simulation study, the analysis was limited to observations with complete outcome and exposure data (n = 246). In the case study there were also missing values in the covariates, Caucasian ethnicity (1%) and family history of allergy (1%), and the auxiliary variable SEIFA tertiles (2%), which were imputed alongside pet ownership (1%) and antenatal vitamin D usage (23%). For the FCS approaches, all variables were imputed using a logistic model, except for SEIFA tertile, which was imputed using an ordinal logistic model. Imputed datasets were analysed under each target analysis model with weights of 1 for cases and \((0.31)^{-1}\) for non-case subcohort members. A CCA was also conducted.

Results

Given that the pattern of results was similar across the range of scenarios, we describe the results for the 6 scenarios under extreme conditions (enhanced exposure-outcome association, 30% missing covariates under DME, and enhanced auxiliary associations). The results for the remaining scenarios are provided in Additional file 3.

Across the 2200 simulations, only FCS-WX and FCS-SS had convergence issues, where convergence was defined as successfully completing the analysis without non-convergence of the imputation procedure or numerical issues in the estimation. The rate of non-convergence was greatest for FCS-WX with the smallest subcohort size (probability of selection = 0.2), with 4.0% of simulations under RR estimation and 3.2% under OR estimation failing to converge. Less than 0.2% of simulations failed to converge for FCS-SS under any combination of estimand and subcohort probability of selection.

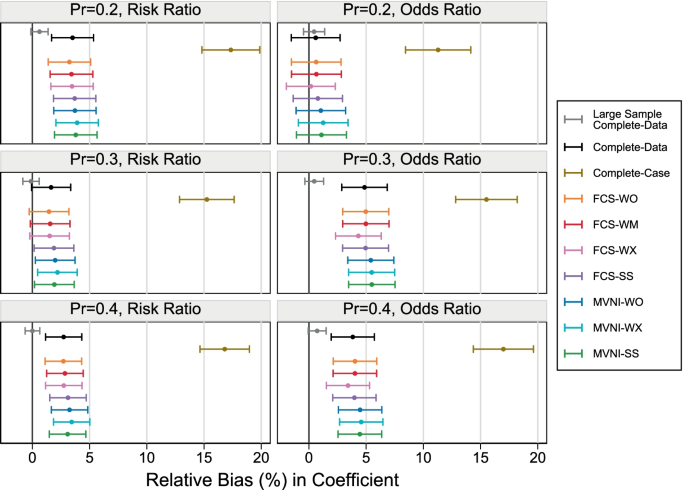

Figure 2 shows the relative bias in the estimate of the association (RR or OR) between VDI and food allergy at one-year for each scenario considered under extreme conditions. The largest bias for the large sample complete-data analysis occurred for the coefficient of the OR and the largest subcohort size at 1.5%, with the MCSE range covering a relative bias of 0%. In all scenarios shown, the CCA resulted in a large relative bias, ranging between 10 and 20%. All MI approaches reduced this bias drastically, irrespective of estimand and subcohort size. When the target estimand was the coefficient for the OR and the smallest subcohort probability was used, all MI approaches were approximately unbiased, with MVNI-WX showing the largest relative bias at 1.4% and FCS-WX showing the least at 0.4%. For the remaining scenarios across both estimands, the complete-data analysis and MI approaches showed a positive bias, with the largest occurring with the log(OR) estimand and a probability of selection of 0.3, where the relative bias was centred around 5%. Overall, minimal differences can be seen between the MI approaches when the estimation targeted the RR. When the target estimand was the log(OR), there was a slight decrease in relative bias for FCS-WX, when compared to other MI approaches, and a slight increase in the relative bias for MVNI approaches, when compared to FCS approaches.

Fig. 2 Relative bias in the coefficient under the extreme scenarios with 30% missing covariate information. Error bars represent 1.96 × Monte Carlo standard errors

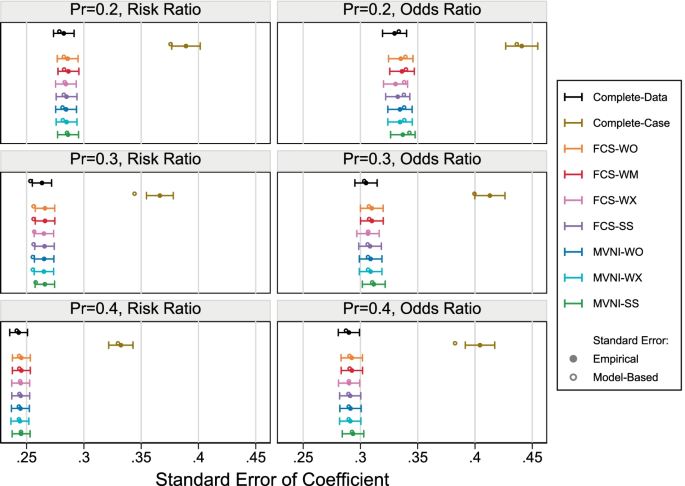

The empirical SE and model-based SE are shown in Fig. 3. For most scenarios, we can see the SE has been underestimated in the CCA. There was a slight underestimation of the SE when the subcohort selection probability was 0.3 and the target estimand was the log(RR); however, the model-based SE appears to fall within the MCSE intervals for the empirical SE. There appears to be no systematic deviation between the empirical SE and the model-based SE for any scenario. An increase in the precision can be seen as the subcohort size increases, and there is an increase in precision for all MI methods compared to the CCA for any given scenario, as expected.

Fig. 3 Empirical standard error and model-based standard error under the extreme scenarios with 30% missing covariate information. Error bars represent 1.96 × Monte Carlo standard errors

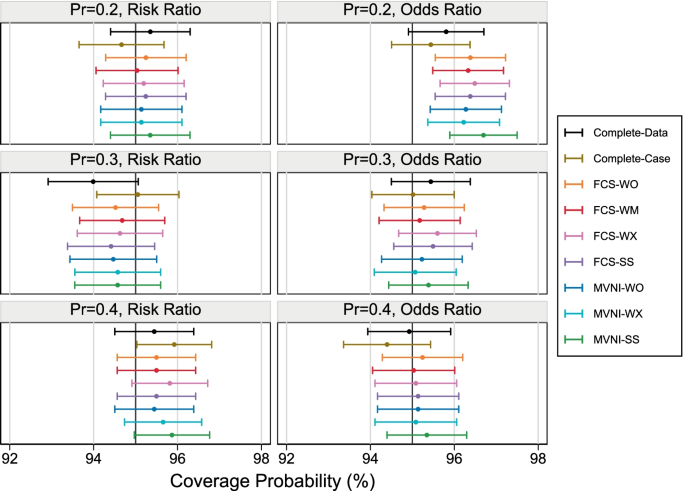

The estimated coverage probabilities are shown in Fig. 4. For a nominal coverage of 95%, all MI approaches have a satisfactory coverage with 95% falling within the MCSE range for all scenarios, with the exception of the smallest subcohort size with OR estimation. Under this scenario, all MI approaches produce over-coverage, as a result of the point estimate being unbiased and the SE overestimated (but with the average model-based SE falling within the MCSE range). There is no apparent pattern in the coverage probability across the MI methods, with all methods performing similarly. Results from the CCA showed acceptable levels of coverage.

Fig. 4 Coverage probability across 2000 simulations under the extreme scenarios with 30% missing covariate information. Error bars represent 1.96 × Monte Carlo standard errors

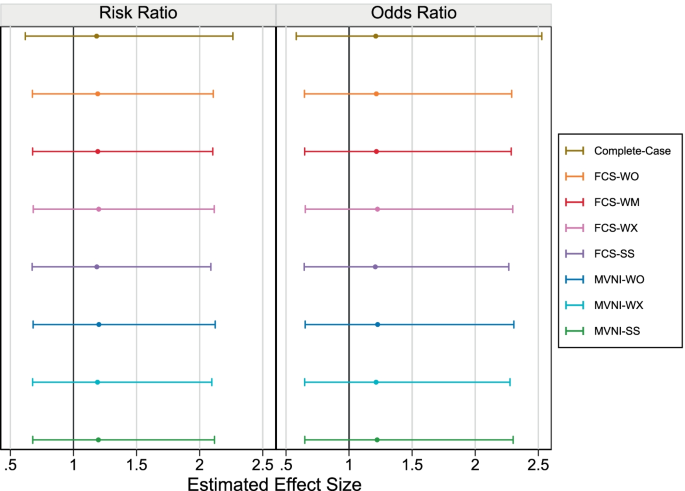

The results from the case study are shown in Fig. 5. Results are consistent with the simulation study in that there is little variation in the estimated association across the MI methods. Unlike the simulation study under extreme conditions, the estimated coefficient is similar in the CCA and the MI approaches. There is an expected recovery of information leading to an increase in precision for MI approaches compared to the CCA.

Fig. 5 Estimated parameter value with 95% confidence interval in case study dataset

Discussion

In this study we compared a number of different approaches for accommodating unequal sampling probabilities into MI in the context of a case-cohort study. We found that how the weights were included in the imputation model had minimal effect on the estimated association or performance of MI which, as expected, outperformed CCA. Results were consistent across different levels of missing covariate information, target estimand and subcohort selection probability.

While bias was seen in some scenarios, this was minimal (approximately 5%) and consistent across all MI approaches. Given the bias observed in the complete-data analysis, we conducted a large sample analysis to confirm the data generation process was correct; this analysis showed minimal bias. We have therefore attributed the positive bias seen in the simulation study to a finite sampling bias, which was observed for large effect sizes in similar studies [16]. The minimal difference across MI methods seen in the current study may be due to the case-cohort setting having only two weight strata that are collinear with the outcome and all MI approaches including the outcome either directly or indirectly (in the case of stratum-specific imputation). The results of this study complement the work by Marti and Chavance [14] who showed that inclusion of the outcome in the imputation model was sufficient to account for the unequal sampling probabilities in the context of a case-cohort survival analysis. In the case study, the MI approaches performed similarly to the CCA and we believe this is due to the observed weak associations in BIS.

Our simulation study was complicated by potential bias due to the incompatibility between the imputation and analysis model when the target analysis estimated an RR through a Poisson regression model. The same would be true if the RR was estimated using a binomial model, as in the case study. We assessed this explicitly through imputation of the full cohort prior to subcohort selection, with results shown in Additional file 2. Minimal bias was seen due to this incompatibility. This may be because we only considered missing values in the covariates, which were generated from a logistic model. Studies that have shown bias due to this incompatibility had considered missing values in both the outcome and exposure [26].

One strength of the current study was that it was based on a real case study, with data generated under a causal structure depicted by m-DAGs informed by subject matter knowledge. This simulation study also examined a range of scenarios; however, it is important to note that not all possible scenarios can be considered, and these results may not extend to scenarios with missingness dependent on unobserved data or with unintended missingness in the exposure or outcome. This study also has a number of limitations. The simulations were conducted under controlled conditions such that the analysis model was correctly specified, and the missing data mechanism was known. Under the specified missing data mechanisms, the estimand was known to be recoverable and therefore MI was expected to perform well [27]. The missing data mechanism is generally unknown in a real data setting and results may not be generalisable.

Another limitation of this study is that only covariates have been considered incomplete. Often there can be missingness in the outcome (e.g. subcohort members drop out prior to one-year follow-up and outcome collection) and/or unintended missingness in the exposure (e.g. cord blood not stored for infants selected into the subcohort or with food allergy). This study has also only considered a combination of MI and IPW. There are other analytic approaches that could have been used, for example using weighting to account for the missing covariates as well as the missing data by design, or imputing the exposure in those not in the subcohort and conducting a full cohort analysis. These approaches have been explored in a time-to-event setting [14, 16, 17] but little is known about their appropriateness for the case-cohort setting with a binary outcome. Furthermore, no study to date has considered the scenario of additional exposure missing by chance within the subcohort. The limitations mentioned here offer an avenue for future work.

Conclusions

When performing MI in the context of case-cohort studies, how unequal sampling probabilities were accounted for in the imputation model made minimal difference in the analysis. In this setting, inclusion of the outcome in the imputation model, which is already standard practice, was a sufficient approach to account for the unequal sampling probabilities incorporated in the analysis model.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

BIS: Barwon Infant Study

CCA: Complete-Case Analysis

DME: Dependent Missing – Enhanced

DMO: Dependent Missing – Observed

FCS: Fully Conditional Specification

IPW: Inverse Probability Weighting

m-DAG: Missingness Directed Acyclic Graph

MCSE: Monte Carlo Standard Error

MI: Multiple Imputation

MVNI: Multivariate Normal Imputation

SE: Standard Error

SEIFA: Socioeconomic Index for Areas

VDI: Vitamin D Insufficiency

References

1. Prentice RL. A case-cohort design for epidemiologic cohort studies and disease prevention trials. Biometrika. 1986;73(1):1–11.

2. Vuillermin P, Saffery R, Allen KJ, Carlin JB, Tang ML, Ranganathan S, et al. Cohort profile: the Barwon infant study. Int J Epidemiol. 2015;44(4):1148–60.

3. Lumley T. Complex surveys: a guide to analysis using R. Hoboken, NJ: Wiley; 2010.

4. Cologne J, Preston DL, Imai K, Misumi M, Yoshida K, Hayashi T, et al. Conventional case-cohort design and analysis for studies of interaction. Int J Epidemiol. 2012;41(4).

5. Rubin D. Inference and missing data. Biometrika. 1976;63(3):581–92.

6. Rubin DB. Multiple imputation for nonresponse in surveys. John Wiley & Sons; 1987.

7. Schafer JL. Analysis of incomplete multivariate data. Chapman and Hall/CRC; 1997.

8. van Buuren S. Multiple imputation of discrete and continuous data by fully conditional specification. Stat Methods Med Res. 2007;16(3):219–42.

9. Bartlett J, Seaman SR, White IR, Carpenter JR. Multiple imputation of covariates by fully conditional specification: accommodating the substantive model. Stat Methods Med Res. 2015;24(4):462–87.

10. Meng X-L. Multiple-imputation inferences with uncongenial sources of input. Stat Sci. 1994;9(4):538–58.

11. Lee K, Simpson J. Introduction to multiple imputation for dealing with missing data. Respirology. 2014;19(2):162–7.

12. Carpenter J, Kenward M. Multiple imputation and its application. John Wiley & Sons; 2012.

13. Kim JK, Brick JM, Fuller WA, Kalton G. On the bias of the multiple-imputation variance estimator in survey sampling. J Royal Statistical Society Series B (Statistical Methodology). 2006;68(3):509–21.

14. Marti H, Chavance M. Multiple imputation analysis of case-cohort studies. Stat Med. 2011;30:1595–607.

15. Breslow N, Lumley T, Ballantyne CM, Chambless LE, Kulich M. Using the whole cohort in the analysis of case-cohort data. Am J Epidemiol. 2009;169(11):1398–405.

16. Keogh RH, Seaman SR, Bartlett J, Wood AM. Multiple imputation of missing data in nested case-control and case-cohort studies. Biometrics. 2018;74:1438–49.

17. Keogh RH, White IR. Using full-cohort data in nested case-control and case-cohort studies by multiple imputation. Stat Med. 2013;32:4021–43.

18. Sato T. Risk ratio estimation in case-cohort studies. Environ Health Perspect. 1994;102(Suppl 8):53–6.

19. Molloy J, Koplin JJ, Allen KJ, Tang MLK, Collier F, Carlin JB, et al. Vitamin D insufficiency in the first 6 months of infancy and challenge-proven IgE-mediated food allergy at 1 year of age: a case-cohort study. Allergy. 2017;72:1222–31.

20. Zou G. A modified Poisson regression approach to prospective studies with binary data. Am J Epidemiol. 2004;159(7):702–6.

21. Borgan O, Langholz B, Samuelsen SO, Goldstein L, Pogoda J. Exposure stratified case-cohort designs. Lifetime Data Anal. 2000;6:39–58.

22. von Hippel PT. How to impute interactions, squares, and other transformed variables. Sociological Methodology. 2009;39:1.

23. StataCorp. Stata Statistical Software: Release 15. College Station, TX: StataCorp LLC; 2017.

24. White IR. simsum: Analyses of simulation studies including Monte Carlo error. The Stata Journal. 2010;10(3).

25. Morris TP, White IR, Crowther MJ. Using simulation studies to evaluate statistical methods. Stat Med. 2019:1–29.

26. Sullivan TR, Lee KJ, Ryan P, Salter AB. Multiple imputation for handling missing outcome data when estimating the relative risk. BMC Medical Research Methodology. 2017;17:134.

27. Moreno-Betancur M, Lee KJ, Leacy FB, White IR, Simpson JA, Carlin J. Canonical causal diagrams to guide the treatment of missing data in epidemiologic studies. Am J Epidemiology. 2018;187:12.

Acknowledgements

The authors would like to thank the Melbourne Missing Data group and members of the Victorian Centre for Biostatistics for providing feedback in designing and interpreting the simulation study. We would also like to thank the BIS investigator group for providing access to the case-study data for illustrative purposes in this work.

Funding

This work was supported by the Australian National Health and Medical Research Council (Postgraduate Scholarship 1190921 to MM, career development fellowship 1127984 to KJL, and project grant 1166023). MMB is the recipient of an Australian Research Council Discovery Early Career Researcher Award (project number DE190101326) funded by the Australian Government. MM is funded by an Australian Government Research Training Program Scholarship. Research at the Murdoch Children’s Research Institute is supported by the Victorian Government’s Operational Infrastructure Support Program. The funding bodies do not have any role in the collection, analysis, interpretation or writing of the study.

Author information

Authors and affiliations.

Clinical Epidemiology & Biostatistics Unit, Murdoch Children’s Research Institute, Royal Children’s Hospital, 50 Flemington Rd, Parkville, Melbourne, VIC, 3052, Australia

Melissa Middleton, Cattram Nguyen, Margarita Moreno-Betancur, John B. Carlin & Katherine J. Lee

Department of Paediatrics, The University of Melbourne, Parkville, Australia

Contributions

MM, CN, MMB, JBC and KJL conceived the project and designed the study. MM designed the simulation study and conducted the analysis, with input from co-authors, and drafted the manuscript. KJL, CN, MMB and JBC provided critical input to the manuscript. All of the co-authors read and approved the final version of this paper.

Corresponding author

Correspondence to Melissa Middleton.

Ethics declarations

Ethics approval and consent to participate.

The case study used data from the Barwon Infant Study, which has ethics approval from the Barwon Health Human Research and Ethics Committee (HREC 10/24). Participating parents provided informed consent and research methods followed national and international guidelines.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Additional file 2.

Additional file 3.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ . The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article.

Middleton, M., Nguyen, C., Moreno-Betancur, M. et al. Evaluation of multiple imputation approaches for handling missing covariate information in a case-cohort study with a binary outcome. BMC Med Res Methodol 22, 87 (2022). https://doi.org/10.1186/s12874-021-01495-4

Received: 20 September 2021

Accepted: 15 December 2021

Published: 03 April 2022

DOI : https://doi.org/10.1186/s12874-021-01495-4

- Multiple imputation

- Case-cohort study

- Missing data

- Unequal sampling probability

- Inverse probability weighting

BMC Medical Research Methodology

ISSN: 1471-2288

Challenges associated with missing data in electronic health records: A case study of a risk prediction model for diabetes using data from Slovenian primary care

Affiliations.

- 1 University of Maribor, Slovenia.

- 2 The University of Edinburgh, UK.

- 3 Brigham and Women's Hospital/Harvard Medical School, USA.

- PMID: 29027512

- DOI: 10.1177/1460458217733288

The increasing availability of data stored in electronic health records brings substantial opportunities for advancing patient care and population health. This is, however, fundamentally dependent on the completeness and quality of data in these electronic health records. We sought to use electronic health record data to populate a risk prediction model for identifying patients with undiagnosed type 2 diabetes mellitus. We, however, found substantial (up to 90%) amounts of missing data in some healthcare centres. Attempts at imputing these missing data, or at using a reduced dataset by removing incomplete records, resulted in a major deterioration in the performance of the prediction model. This case study illustrates the substantial wasted opportunities resulting from incomplete records by simulation of missing and incomplete records in the predictive modelling process. Government and professional bodies need to prioritise efforts to address these data shortcomings in order to ensure that electronic health record data are maximally exploited for patient and population benefit.

Keywords: databases and data mining; electronic health records; missing data; primary care; quality control; type 2 diabetes.
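The two strategies named in the abstract, removing incomplete records and imputing missing values, can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the records are synthetic, the field names `age` and `glucose` are hypothetical, values are knocked out completely at random, and simple mean imputation stands in for whatever imputation method the study actually used.

```python
import random
import statistics


def make_records(n, seed=0):
    """Generate synthetic (age, glucose) records; NOT real EHR data."""
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        age = rng.uniform(30, 80)
        glucose = 4.0 + 0.03 * age + rng.gauss(0, 0.5)
        records.append({"age": age, "glucose": glucose})
    return records


def knock_out(records, field, fraction, seed=1):
    """Set `field` to None in a random fraction of records (simulated MCAR)."""
    rng = random.Random(seed)
    out = [dict(r) for r in records]
    n_missing = round(fraction * len(out))
    for i in rng.sample(range(len(out)), n_missing):
        out[i][field] = None
    return out


def complete_cases(records):
    """Listwise deletion: keep only records with no missing fields."""
    return [r for r in records if all(v is not None for v in r.values())]


def mean_impute(records, field):
    """Replace missing values of `field` with the mean of the observed values."""
    observed = [r[field] for r in records if r[field] is not None]
    fill = statistics.fmean(observed)
    return [{**r, field: fill if r[field] is None else r[field]} for r in records]


full = make_records(1000)
holed = knock_out(full, "glucose", 0.9)   # 90% missing, as in the worst centres
reduced = complete_cases(holed)           # far fewer records survive
imputed = mean_impute(holed, "glucose")   # same size, but flattened variation

var_observed = statistics.pvariance([r["glucose"] for r in reduced])
var_imputed = statistics.pvariance([r["glucose"] for r in imputed])
print(len(reduced), len(imputed))
print(var_observed, var_imputed)
```

With 90% of values missing, listwise deletion leaves a tenth of the records, while mean imputation keeps every record but shrinks the variance of the imputed variable to a tenth of the observed variance. Either effect can plausibly degrade a downstream prediction model, which is consistent with what the abstract reports.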




Statistical Methods for Handling Incomplete Data


Amit K Chowdhry, Statistical Methods for Handling Incomplete Data, Journal of the Royal Statistical Society Series A: Statistics in Society , Volume 186, Issue 1, January 2023, Page 166, https://doi.org/10.1093/jrsssa/qnad005


Most references on missing data are applied in nature and are intended to guide researchers in various disciplines on how to appropriately analyse missing data. It is hard to find good comprehensive references which go into sufficient statistical theory for research into missing data. This book stands apart as it covers missing data theory in detail. Topics in this book range from the basics of likelihood-based inference, the basics of imputation, multiple imputation, propensity score methods, and non-ignorable missing data to various advanced methods, as well as applications in longitudinal, clustered, and survey data. The book keeps its text brief and is heavy on lemmas and theorems. Overall, this book reads like a graduate-level mathematical statistics textbook.

In contrast to the classic book on the topic, Statistical Analysis with Missing Data by Little and Rubin, now in its 3rd Edition, this book is more valuable for the mathematical statistician, whereas Little and Rubin is better as a book to read cover-to-cover to better understand how the theory is applied in practice.

The text says that this book is most appropriate for second year PhD students, and I agree that this is most appropriate for doctoral students in mathematics and statistics at the second year and above. While this book may have material of interest for applied statisticians, mathematicians interested in learning about the problem of missing data and approaches to handling missing data may also find this book interesting and useful. Overall, this book is a great addition to the library of any PhD-level statistician doing research in missing data methodology, as it is an authoritative reference on the mathematics of missing data. This book deserves the highest endorsement as it fills a unique niche in the available literature on the topic, and is of very high quality.



Flow field reconstruction from sparse sensor measurements with physics-informed neural networks

- Hosseini, Mohammad Yasin

- Shiri, Yousef

In the realm of experimental fluid mechanics, accurately reconstructing high-resolution flow fields is notably challenging due to often sparse and incomplete data across time and space domains. This is exacerbated by the limitations of current experimental tools and methods, which leave critical areas without measurable data. This research suggests a feasible solution to this problem by employing an inverse physics-informed neural network (PINN) to merge available sparse data with physical laws. The method's efficacy is demonstrated using flow around a cylinder as a case study, with three distinct training sets. One was the sparse velocity data from the domain, and the other two datasets were limited velocity data obtained from the domain boundaries and from sensors around the cylinder wall. The coefficient of determination (R²) and root mean squared error (RMSE) metrics, indicative of model performance, have been determined for the velocity components of all models. For the 28 sensors model, the R² value stands at 0.996 with an associated RMSE of 0.0251 for the u component, while for the v component, the R² value registers at 0.969, accompanied by an RMSE of 0.0169. The outcomes indicate that the method can successfully recreate the actual velocity field with considerable precision with more than 28 sensors around the cylinder, highlighting PINN's potential as an effective data assimilation technique for experimental fluid mechanics.
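The two metrics reported in the abstract can be computed as in the sketch below. It uses the standard textbook definitions of R² and RMSE. The function names and the sample velocity values are made up for illustration and are not taken from the paper.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between reference and reconstructed values."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical u-velocity samples: reference field vs. a reconstruction.
u_true = [1.0, 2.0, 3.0, 4.0]
u_pred = [1.1, 1.9, 3.2, 3.8]

print(f"RMSE = {rmse(u_true, u_pred):.4f}")
print(f"R^2  = {r_squared(u_true, u_pred):.4f}")
```

An R² close to 1 together with a small RMSE, as reported for the 28 sensors model, means the reconstructed component tracks the reference field closely. Note that RMSE carries the units of the velocity data, whereas R² is dimensionless.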

- Laminar Flows

10 Major Cyberattacks And Data Breaches In 2024 (So Far)

Data extortion and ransomware attacks have had a massive impact on businesses during the first half of 2024.

Biggest Cyberattacks And Breaches

If the pace of major cyberattacks during the first half of 2024 has seemed to be nonstop, that’s probably because it has been: The first six months of the year have seen organizations fall prey to a series of ransomware attacks as well as data breaches focused on data theft and extortion.

And while recent years had been seeing intensifying cyberattacks, by and large they spared the general public from significant disruption — something that has proven to not be the case during 2024 so far.


For instance, the February ransomware attack against UnitedHealth-owned prescription processor Change Healthcare caused massive disruption in the U.S. health care system for weeks — preventing many pharmacies and hospitals from processing claims and receiving payments. Then in May, the Ascension health system was struck by a ransomware attack that forced it to divert emergency care from some of its hospitals.

Most recently, software maker CDK Global fell victim to a crippling ransomware attack that has disrupted thousands of car dealerships that rely on the company’s platform. As of this writing, the disruptions were continuing, nearly two weeks after the initial attack.

The attacks have raised questions about whether threat actors are intentionally targeting companies whose patients and customers would be severely affected by the disruptions, in order to put increased pressure on the organizations to pay a ransom. If so, the tactic would seem to have been working, since UnitedHealth paid a $22 million ransom to a Russian-speaking cybercrime group that perpetrated the Change Healthcare attack, and CDK Global reportedly was planning to pay the attackers’ ransom demands as well.

It’s not certain that this has been the attackers’ strategy, however, said Mark Lance, vice president for DFIR and threat intelligence at GuidePoint Security, No. 39 on CRN’s Solution Provider 500 for 2024.

“Do I think that it was indirect or there was intent to have an impact all these kinds of downstream providers? You never know,” Lance said. When it comes to ransomware groups, “a lot of times, they might not even recognize the level of impact indirectly [an attack] is going to have on downstream providers or services.”

Still, he said, it can’t be entirely ruled out that attackers “might be using that as an opportunity to leverage [the disruption] and make sure they get paid.” And if there continue to be mass-disruption attacks such as these that point toward a “distinct trend,” that would represent a notable shift in attacker tactics, given that threat actors have usually steered clear of attacks that would put a government and law enforcement spotlight on them, Lance noted.