Perspective

Correction of scientific literature: Too little, too late!

Affiliation Faculty of Information and Technology, Monash University, Clayton, Victoria, Australia

Affiliation Harbers Bik LLC, San Francisco, California, United States of America

Affiliation Cipher Skin, Denver, Colorado, United States of America

Affiliation School of Health and Society, University of Wollongong, Wollongong, New South Wales, Australia

- Lonni Besançon

- Elisabeth Bik

- James Heathers

- Gideon Meyerowitz-Katz

Published: March 3, 2022

- https://doi.org/10.1371/journal.pbio.3001572

The Coronavirus Disease 2019 (COVID-19) pandemic has highlighted the limitations of the current scientific publication system, in which serious post-publication concerns are often addressed too slowly to be effective. In this Perspective, we offer suggestions to improve academia’s willingness and ability to correct errors in an appropriate time frame.

Citation: Besançon L, Bik E, Heathers J, Meyerowitz-Katz G (2022) Correction of scientific literature: Too little, too late! PLoS Biol 20(3): e3001572. https://doi.org/10.1371/journal.pbio.3001572

Copyright: © 2022 Besançon et al. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: The author(s) received no specific funding for this work.

Competing interests: EB has received consulting fees from publishers and research institutions, and gets donations through Patreon.com. All authors have been involved with error-checking research and have been the target of reprisals in a number of ways.

Traditionally, scientific progress has relied on trust and the relatively slow cycle of peer review, publication, and citation of research data. The current Coronavirus Disease 2019 (COVID-19) pandemic not only accelerated the speed of research but also brought to light some severe shortcomings of the scientific publication process, such as failures to quickly address errors or to catch and prevent scientific misconduct.

Within months of the identification of Severe Acute Respiratory Syndrome Coronavirus 2 (SARS-CoV-2), disease progression to COVID-19, viral transmission routes, and treatment options were being carefully studied, and development of effective vaccines had begun. This was one of the most impressive scientific achievements of the modern era [ 1 ]. While the effort to mitigate the disease has rightly been praised for its comparative speed, organization, and safety, the mechanics of the publication system that sustained it have been far from ideal. We believe the response to COVID-19 has succeeded in spite of, rather than because of, the present publication system.

During the COVID-19 pandemic, many basic quality control and transparency principles have been violated on a regular basis [ 2 ]. This is perhaps most apparent in the Surgisphere debacle [ 3 ], in which global policy on COVID-19 treatment was changed overnight on the basis of a database that later turned out not to exist. Although the Surgisphere retraction happened quickly, it was still far slower than the change in medical practice, which was immediate. Moreover, the episode represents a best-case scenario, in which a high-profile paper was immediately interrogated and investigated. The stories of hydroxychloroquine and ivermectin, both widely promoted on the basis of poor-quality or even fraudulent studies [ 4 ], are further concerning accounts of how the scientific publishing process has failed to exercise basic quality control.

In the usual course of scientific investigation, these stories would be something of a footnote: fraud, malfeasance, and mendacity within typical research studies interest meta-scientists, methodologists, and theoreticians, but rarely have an impact outside the scientific community. By contrast, untrustworthy high-profile studies with global implications receive a great deal of collective attention and scrutiny, which normally limits their influence. During the present pandemic, however, poor research has sometimes been applied to health policy immediately after being published, or merely publicized, and incorporated into treatment regimens shortly afterward. Scientific papers now have an immediacy of impact that was rarely present before the pandemic.

Traditional responses to such research issues (e.g., post-review letters, notes of concern, and even retractions) are woefully inadequate to address these problems. Nowadays, preprints and peer-reviewed research papers are shared on online platforms among millions of readers within days of being published. A paper can affect worldwide health and well-being within a few weeks online; that it may be retracted months later does not undo any harm caused in the meantime. Even if a paper is removed entirely from the publication record, it will never be removed from the digital space and is still likely to be cited as evidence by researchers and laypeople alike. Often, its removal even adds to its mystique. For example, a retracted and demonstrably false 2020 study on vitamin D [ 5 ], which was pulled from the preprint server that hosted it, was still being cited uncritically as recently as November 2021.

All of these issues are compounded by the glacial pace at which scientific correction is likely to occur [ 6 ]. Identifying flaws in a paper may only take hours, but even the most basic formal correction can take months of mutual correspondence between scientific journal editors, authors, and critics. Even when authors try to correct their own published manuscripts, they can face strenuous challenges that prompt many to give up. Worse still, while editors and authors might gain financial and career benefits from ignoring errors, scientific critics are explicitly discouraged by the academic community from performing this work.

The authors of this Perspective have all been involved in error detection in this manner. For our voluntary work, we have received both legal and physical threats and been defamed by senior academics and internet trolls. While many scientists personally support error-checking work [ 7 ], academia as a whole seems to view error-checking as a dirty footnote to the achievement of publication. Even when papers are retracted, individuals who have spent endless hours explicating the problems therein are left with no formal career benefits whatsoever—if anything, they face substantial retaliation for correcting errors.

This system is unsustainable, unfair, and dangerous, and the pandemic has magnified its unsuitability and numerous limitations. Rather than being treated as a disappointing footnote, error-checking should be supported and funded by government agencies and research institutions. Public, open, and moderated review on PubPeer [ 8 ] and similar websites that expose serious concerns should be rewarded with praise rather than scorn, personal attacks, or threats (whether legal or against the reviewers’ lives). Importantly, retraction should not always be seen as a failure. While some papers are retracted for serious research misconduct, others are retracted because of unintentional errors [ 9 ]. Scientists must acknowledge that any process comes with an error rate, and that correcting mistakes should not limit careers but instead enhance them [ 10 ]. Consequently, we propose some solutions that could improve the current error-checking and correction system in scientific publishing (Box 1).

Box 1. Approaches to destigmatize and speed up the scientific correction process

- Editors should issue an Expression of Concern within days of serious and verifiable concerns being raised, either privately or on a public forum. If the concerns are raised publicly, the Expression of Concern should link to them; otherwise, it should summarize the key points raised privately.

- Committee on Publication Ethics (COPE) guidelines for editors and journals should provide a timeline for responding to concerns about published papers, with, for instance, a maximum of 90 days to publicly highlight concerns, contact the authors, get a response from the authors, and publish it.

- Public, open, and post-publication peer review should be considered and rewarded by hiring and promotion committees as well as by funding bodies. Applications for funding or positions should consider such correction efforts made by scientists just as much as they consider the publication of new research results.

- To further establish an error-checking culture, scientists should be trained to recognize mistakes (including their own). Institutes and funding agencies should allocate time for error-checking, and institutions and journals should promote corrections and retractions as much as they promote new research findings.

- Notices of retraction or correction could be linked to the researchers who initially raised the concerns and be assigned a DOI that links to their careful rebuttal of the original paper (even if the paper is their own).

- Journal webpages should directly link to discussions on PubPeer rather than rely on the use of an external plug-in.

- DOI versioning already allows concurrent versions of documents to coexist and should be adopted by publishing venues to allow easy and fast correction of papers when needed, along with metadata on the changes between versions.

- Critics who raise professional, nondefamatory concerns about a preprint or published paper should have explicit, paid legal protection (e.g., provided by their institutions or professional societies) against threats issued by the critiqued authors or their institutions.

Understandably, there are legal concerns when discussing the retraction and/or correction of scientific papers. We do not wish to minimize these issues, as they may be serious for both publishers and academics alike. However, many such problems stem from an environment in which retraction is seen as a career-ending calamity, and, thus, our proposed improvements (Box 1) may alleviate many of the legal concerns faced by the academic community.

Viewing the retraction and correction of scientific papers as a failure is a self-fulfilling prophecy: because the status of a paper rarely changes after publication, such a change is seen as exceptional or immoderate instead of as a normal part of the scientific process. The alternative to addressing this problem is to continue maintaining a scientific commons that cannot cope with the rapid dissemination and correction of research needed in the digital age.

- 8. Barbour B, Stell BM. PubPeer: Scientific assessment without metrics. In: Biagioli M, Lippman A, editors. Gaming the Metrics: Misconduct and Manipulation in Academic Research. The MIT Press; 2020. p. 149–155. https://doi.org/10.7551/mitpress/11087.001.0001

Published: 15 June 2023

A meta-analysis of correction effects in science-relevant misinformation

- Man-pui Sally Chan (ORCID: orcid.org/0000-0003-2984-0487)

- Dolores Albarracín (ORCID: orcid.org/0000-0002-9878-942X)

Nature Human Behaviour, volume 7, pages 1514–1525 (2023)

4002 Accesses

14 Citations

614 Altmetric

Metrics details

- Communication

- Human behaviour

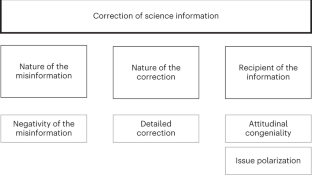

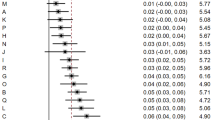

Scientifically relevant misinformation, defined as false claims concerning a scientific measurement procedure or scientific evidence, regardless of the author’s intent, is illustrated by the fiction that the coronavirus disease 2019 vaccine contained microchips to track citizens. Updating science-relevant misinformation after a correction can be challenging, and little is known about which theoretical factors influence the correction. This meta-analysis examined 205 effect sizes (k = 205, obtained from 74 reports; N = 60,861) and showed that attempts to debunk science-relevant misinformation were, on average, not successful (d = 0.19, P = 0.131, 95% confidence interval −0.06 to 0.43). However, corrections were more successful when the initial science-relevant belief concerned negative topics and domains other than health. Corrections also fared better when they were detailed, when recipients were likely familiar with both sides of the issue before the study, and when the issue was not politically polarized.
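The pooled correction effect is described as not successful because its 95% confidence interval spans zero. The short Python sketch below illustrates that arithmetic under an assumed symmetric, normal-approximation interval: it backs out an approximate standard error from the reported bounds and recomputes a two-sided p-value. The helper names `se_from_ci` and `two_sided_p` are hypothetical, and the authors' actual estimation procedure may differ (for example, a t-based or robust-variance method), so the recovered values are approximations rather than a reproduction of their analysis.

```python
import math

# Hypothetical helpers for illustration; not the authors' analysis code.
# Assumption: the reported 95% CI is symmetric and based on a normal approximation.
Z_CRIT_95 = 1.959964  # two-sided 95% critical value of the standard normal


def se_from_ci(lower: float, upper: float, z_crit: float = Z_CRIT_95) -> float:
    """Approximate the standard error from the bounds of a symmetric CI."""
    return (upper - lower) / (2 * z_crit)


def two_sided_p(estimate: float, se: float) -> float:
    """Two-sided p-value for H0: effect = 0, using the standard normal."""
    z = abs(estimate) / se
    # P(|Z| >= z) = erfc(z / sqrt(2)) for a standard normal Z
    return math.erfc(z / math.sqrt(2))


# Values reported in the abstract: pooled d and its 95% CI
d, lower, upper = 0.19, -0.06, 0.43
se = se_from_ci(lower, upper)
p = two_sided_p(d, se)
print(f"approx. SE = {se:.3f}, approx. p = {p:.3f}")
print("CI includes zero:", lower < 0 < upper)
```

Under this approximation the standard error comes out near 0.125 and the p-value near 0.13, close to the reported P = 0.131; a small gap is expected from rounding of the published figures and from any difference in the underlying method.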

This is a preview of subscription content, access via your institution

Access options

Access Nature and 54 other Nature Portfolio journals

Get Nature+, our best-value online-access subscription

24,99 € / 30 days

cancel any time

Subscribe to this journal

Receive 12 digital issues and online access to articles

111,21 € per year

only 9,27 € per issue

Buy this article

- Purchase on Springer Link

- Instant access to full article PDF

Prices may be subject to local taxes which are calculated during checkout

Similar content being viewed by others

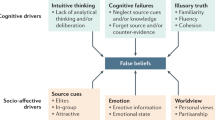

The psychological drivers of misinformation belief and its resistance to correction

Understanding and combatting misinformation across 16 countries on six continents

Accuracy prompts are a replicable and generalizable approach for reducing the spread of misinformation

Data availability

The data that support the findings of this study are openly available in OSF at https://osf.io/vkygw/ .

Code availability

All code for data analyses associated with the current submission is available at https://osf.io/vkygw/ . Any updates will also be published in OSF.

Ahmed, W., Downing, J., Tuters, M. & Knight, P. Four experts investigate how the 5G coronavirus conspiracy theory began. The Conversation https://theconversation.com/four-experts-investigate-how-the-5g-coronavirus-conspiracy-theory-began-139137 (2020).

Heilweil, R. The conspiracy theory about 5G causing coronavirus, explained. Vox (2020); https://www.vox.com/recode/2020/4/24/21231085/coronavirus-5g-conspiracy-theory-covid-facebook-youtube

Pigliucci, M. & Boudry, M. The dangers of pseudoscience. The New York Times (2013); https://opinionator.blogs.nytimes.com/2013/10/10/the-dangers-of-pseudoscience/

Gordin, M. D. The problem with pseudoscience: pseudoscience is not the antithesis of professional science but thrives in science’s shadow. EMBO Rep. 18 , 1482 (2017).

Article CAS PubMed PubMed Central Google Scholar

Townson, S. Why people fall for pseudoscience (and how academics can fight back). The Guardian (2016); https://www.theguardian.com/higher-education-network/2016/jan/26/why-people-fall-for-pseudoscience-and-how-academics-can-fight-back

Caulfield, T. Pseudoscience and COVID-19—we’ve had enough already. Nature https://doi.org/10.1038/d41586-020-01266-z (2020).

Article PubMed PubMed Central Google Scholar

Pennycook, G. & Rand, D. G. Lazy, not biased: susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition 188 , 39–50 (2019).

Article PubMed Google Scholar

Vraga, E. K. & Bode, L. Defining misinformation and understanding its bounded nature: using expertise and evidence for describing misinformation. Polit. Commun. https://doi.org/10.1080/10584609.2020.1716500 (2020).

Lewandowsky, S. et al. The Debunking Handbook 2020. Databrary https://doi.org/10.17910/b7.1182 (2020).

Pennycook, G. et al. Shifting attention to accuracy can reduce misinformation online. Nature 592 , 590–595 (2021).

Article CAS PubMed Google Scholar

Garrett, R. K., Weeks, B. E. & Neo, R. L. Driving a wedge between evidence and beliefs: how online ideological news exposure promotes political misperceptions. J. Comput.-Mediat. Commun. 21 , 331–348 (2016).

Article Google Scholar

Lazer, D. M. J. et al. The science of fake news: addressing fake news requires a multidisciplinary effort. Science 359 , 1094–1096 (2018).

Wyer, R. S. & Unverzagt, W. H. Effects of instructions to disregard information on its subsequent recall and use in making judgments. J. Pers. Soc. Psychol. 48 , 533–549 (1985).

Greitemeyer, T. Article retracted, but the message lives on. Psychon. Bull. Rev. 21 , 557–561 (2014).

McDiarmid, A. D. et al. Psychologists update their beliefs about effect sizes after replication studies. Nat. Hum. Behav. https://doi.org/10.1038/s41562-021-01220-7 (2021).

Yousuf, H. et al. A media intervention applying debunking versus non-debunking content to combat vaccine misinformation in elderly in the Netherlands: a digital randomised trial. EClinicalMedicine 35 , 100881 (2021).

Kuru, O. et al. The effects of scientific messages and narratives about vaccination. PLoS ONE 16 , e0248328 (2021).

Anderson, C. A. Inoculation and counterexplanation: debiasing techniques in the perseverance of social theories. Soc. Cogn. 1 , 126–139 (1982).

Jacobson, N. G. What Does Climate Change Look Like to You? The Role of Internal and External Representations in Facilitating Conceptual Change about the Weather and Climate Distinction (Univ. Southern California, 2022).

Pluviano, S., Watt, C. & Sala, S. D. Misinformation lingers in memory: failure of three pro-vaccination strategies. PLoS ONE 12 , 15 (2017).

Maertens, R., Anseel, F. & van der Linden, S. Combatting climate change misinformation: evidence for longevity of inoculation and consensus messaging effects. J. Environ. Psychol. 70 , 101455 (2020).

Chan, M. S., Jones, C. R., Jamieson, K. H. & Albarracin, D. Debunking: a meta-analysis of the psychological efficacy of messages countering misinformation. Psychol. Sci. 28 , 1531–1546 (2017).

Janmohamed, K. et al. Interventions to mitigate vaping misinformation: a meta-analysis. J. Health Commun. 27 , 84–92 (2022).

Walter, N. & Tukachinsky, R. A meta-analytic examination of the continued influence of misinformation in the face of correction: how powerful is it, why does it happen, and how to stop it? Commun. Res. 47 , 155–177 (2020).

Walter, N., Cohen, J., Holbert, R. L. & Morag, Y. Fact-checking: a meta-analysis of what works and for whom. Polit. Commun. 37 , 350–375 (2020).

Walter, N. & Murphy, S. T. How to unring the bell: a meta-analytic approach to correction of misinformation. Commun. Monogr. 85 , 423–441 (2018).

Walter, N., Brooks, J. J., Saucier, C. J. & Suresh, S. Evaluating the impact of attempts to correct health misinformation on social media: a meta-analysis. Health Commun. 36 , 1776–1784 (2021).

Chan, M. S., Jamieson, K. H. & Albarracín, D. Prospective associations of regional social media messages with attitudes and actual vaccination: A big data and survey study of the influenza vaccine in the United States. Vaccine 38 , 6236–6247 (2020).

Lawson, V. Z. & Strange, D. News as (hazardous) entertainment: exaggerated reporting leads to more memory distortion for news stories. Psychol. Pop. Media Cult. 4 , 188–198 (2015).

Nature Microbiology. Exaggerated headline shock. Nat. Microbiol. 4 , 377–377 (2019).

Pinker, S. The media exaggerates negative news. This distortion has consequences. The Guardian (2018); https://www.theguardian.com/commentisfree/2018/feb/17/steven-pinker-media-negative-news

CDC. HPV vaccine safety. U.S. Department of Health & Human Services https://www.cdc.gov/hpv/parents/vaccinesafety.html (2021).

Jaber, N. Parent concerns about HPV vaccine safety increasing. National Cancer Institute https://www.cancer.gov/news-events/cancer-currents-blog/2021/hpv-vaccine-parents-safety-concerns (2021).

Brody, J. E. Why more kids aren’t getting the HPV vaccine. The New York Times https://www.nytimes.com/2021/12/13/well/live/hpv-vaccine-children.html (2021).

Walker, K. K., Owens, H. & Zimet, G. ‘We fear the unknown’: emergence, route and transfer of hesitancy and misinformation among HPV vaccine accepting mothers. Prev. Med. Rep. 20 , 101240 (2020).

Normile, D. Japan reboots HPV vaccination drive after 9-year gap. Science 376 , 14 (2022).

Larson, H. J. Japan’s HPV vaccine crisis: act now to avert cervical cancer cases and deaths. Lancet Public Health 5 , e184–e185 (2020).

Soroka, S., Fournier, P. & Nir, L. Cross-national evidence of a negativity bias in psychophysiological reactions to news. Proc. Natl Acad. Sci. USA 116 , 18888–18892 (2019).

Baumeister, R. F., Bratslavsky, E., Finkenauer, C. & Vohs, K. D. Bad is stronger than good. Rev. Gen. Psychol. 5 , 323–370 (2001).

Kunda, Z. The case for motivated reasoning. Psychol. Bull. 108 , 480–498 (1990).

Kopko, K. C., Bryner, S. M. K., Budziak, J., Devine, C. J. & Nawara, S. P. In the eye of the beholder? Motivated reasoning in disputed elections. Polit. Behav. 33 , 271–290 (2011).

Leeper, T. J. & Mullinix, K. J. Motivated reasoning. Oxford Bibliographies https://doi.org/10.1093/OBO/9780199756223-0237 (2018).

Johnson, H. M. & Seifert, C. M. Sources of the continued influence effect: when misinformation in memory affects later inferences. J. Exp. Psychol. Learn. Mem. Cogn. 20 , 1420–1436 (1994).

Wilkes, A. L. & Leatherbarrow, M. Editing episodic memory following the identification of error. Q. J. Exp. Psychol. Sect. A 40 , 361–387 (1988).

Ecker, U. K. H., Lewandowsky, S. & Apai, J. Terrorists brought down the plane!—No, actually it was a technical fault: processing corrections of emotive information. Q. J. Exp. Psychol. 64 , 283–310 (2011).

Lewandowsky, S., Ecker, U. K. H., Seifert, C. M., Schwarz, N. & Cook, J. Misinformation and its correction: continued influence and successful debiasing. Psychol. Sci. Public Interest 13 , 106–131 (2012).

Nyhan, B. & Reifler, J. Does correcting myths about the flu vaccine work? An experimental evaluation of the effects of corrective information. Vaccine 33 , 459–464 (2015).

Nyhan, B., Reifler, J., Richey, S. & Freed, G. L. Effective messages in vaccine promotion: a randomized trial. Pediatrics 133 , e835–e842 (2014).

Nyhan, B. & Reifler, J. When corrections fail: the persistence of political misperceptions. Polit. Behav. 32 , 303–330 (2010).

Rathje, S., Roozenbeek, J., Traberg, C. S., van Bavel, J. J. & van der Linden, S. Meta-analysis reveals that accuracy nudges have little to no effect for U.S. conservatives: regarding Pennycook et al. (2020). Psychol. Sci. https://doi.org/10.25384/SAGE.12594110.v2 (2021).

Greene, C. M., Nash, R. A. & Murphy, G. Misremembering Brexit: partisan bias and individual predictors of false memories for fake news stories among Brexit voters. Memory 29 , 587–604 (2021).

Gawronski, B. Partisan bias in the identification of fake news. Trends Cogn. Sci. 25 , 723–724 (2021).

Pennycook, G. & Rand, D. G. Lack of partisan bias in the identification of fake (versus real) news. Trends Cogn. Sci. 25 , 725–726 (2021).

Borukhson, D., Lorenz-Spreen, P. & Ragni, M. When does an individual accept misinformation? An extended investigation through cognitive modeling. Comput. Brain Behav. 5 , 244–260 (2022).

Roozenbeek, J. et al. Susceptibility to misinformation is consistent across question framings and response modes and better explained by myside bias and partisanship than analytical thinking susceptibility to misinformation. Judgm. Decis. Mak. 17 , 547–573 (2022).

Bolsen, T., Druckman, J. N. & Cook, F. L. The influence of partisan motivated reasoning on public opinion. Polit. Behav. 36 , 235–262 (2014).

Hameleers, M. & van der Meer, T. G. L. A. Misinformation and polarization in a high-choice media environment: how effective are political fact-checkers? Commun. Res. 47 , 227–250 (2020).

Guay, B., Berinsky, A., Pennycook, G. & Rand, D. How to think about whether misinformation interventions work. Preprint at PsyArXiv https://doi.org/10.31234/OSF.IO/GV8QX (2022).

Hove, M. J. & Risen, J. L. It’s all in the timing: interpersonal synchrony increases affiliation. Soc. Cogn. 27 , 949–960 (2009).

Tesch, F. E. Debriefing research participants: though this be method there is madness to it. J. Pers. Soc. Psychol. 35 , 217–224 (1977).

Tanner-Smith, E. E. & Tipton, E. Robust variance estimation with dependent effect sizes: practical considerations including a software tutorial in Stata and SPSS. Res Synth. Methods 5 , 13–30 (2014).

Tanner-Smith, E. E., Tipton, E. & Polanin, J. R. Handling complex meta-analytic data structures using robust variance estimates: a tutorial in R. J. Dev. Life Course Criminol. 2 , 85–112 (2016).

Viechtbauer, W. Conducting meta-analyses in R with the metafor package. J. Stat. Softw. , https://doi.org/10.18637/jss.v036.i03 (2010).

van Aert, R. C. M. CRAN—package puniform. R Project https://cran.r-project.org/web/packages/puniform/index.html (2022).

Coburn, K. M. & Vevea, J. L. weightr: estimating weight-function models for publication bias. (2021); https://cran.r-project.org/web/packages/weights/index.html

Fisher, Z. & Tipton, E. robumeta: an R-package for robust variance estimation in meta-analysis. ArXiv . https://doi.org/10.48550/arXiv.1503.02220 (2015).

Sidik, K. & Jonkman, J. N. Robust variance estimation for random effects meta-analysis. Comput. Stat. Data Anal. 50 , 3681–3701 (2006).

Hedges, L. V., Tipton, E. & Johnson, M. C. Robust variance estimation in meta-regression with dependent effect size estimates. Res. Synth. Methods 1 , 39–65 (2010).

JASP Team. JASP (2022); https://jasp-stats.org/

Higgins, J. P. T., Thompson, S. G., Deeks, J. J. & Altman, D. G. Measuring inconsistency in meta-analyses. Br. Med. J. 327 , 557–560 (2003).

Higgins, J. P. T. & Thompson, S. G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 21 , 1539–1558 (2002).

Tay, L. Q., Hurlstone, M. J., Kurz, T. & Ecker, U. K. H. A comparison of prebunking and debunking interventions for implied versus explicit misinformation. Br. J. Psychol. 113 , 591–607 (2022).

Tappin, B. M., Berinsky, A. J. & Rand, D. G. Partisans’ receptivity to persuasive messaging is undiminished by countervailing party leader cues. Nat. Hum. Behav. , https://doi.org/10.1038/s41562-023-01551-7 (2023).

Traberg, C. S. & van der Linden, S. Birds of a feather are persuaded together: perceived source credibility mediates the effect of political bias on misinformation susceptibility. Pers. Individ. Dif. 185 , 111269 (2022).

van Bavel, J. J. & Pereira, A. The partisan brain: an identity-based model of political belief. Trends Cogn. Sci. 22 , 213–224 (2018).

Kahan, D. M. Misconceptions, misinformation, and the logic of identity-protective cognition. SSRN Electron. J. https://doi.org/10.2139/SSRN.2973067 (2017).

Levendusky, M. Our Common Bonds: Using What Americans Share to Help Bridge the Partisan Divide (Univ. Chicago Press, 2023).

Voelkel, J. G. et al. Interventions reducing affective polarization do not improve anti-democratic attitudes. Nature Human Behaviour , 7 , 55–64 (2023); https://doi.org/10.31219/OSF.IO/7EVMP

Ecker, U. K. H., Hogan, J. L. & Lewandowsky, S. Reminders and repetition of misinformation: helping or hindering its retraction? J. Appl. Res. Mem. Cogn. 6 , 185–192 (2017).

Schwarz, N., Sanna, L. J., Skurnik, I. & Yoon, C. Metacognitive experiences and the intricacies of setting people straight: implications for debiasing and public information campaigns. in. Adv. Exp. Soc. Psychol. 39 , 127–161 (2007).

Ecker, U. K. H., Lewandowsky, S. & Chadwick, M. Can corrections spread misinformation to new audiences? Testing for the elusive familiarity backfire effect. Cogn. Res Princ. Implic. 5 , 41 (2020).

Kappel, K. & Holmen, S. J. Why science communication, and does it work? A taxonomy of science communication aims and a survey of the empirical evidence. Front. Commun. 4 , 55 (2019).

Fischhoff, B. The sciences of science communication. Proc. Natl Acad. Sci. USA 110 , 14033–14039 (2013).

Winters, M. et al. Debunking highly prevalent health misinformation using audio dramas delivered by WhatsApp: evidence from a randomised controlled trial in Sierra Leone. BMJ Glob. Health 6 , 6954 (2021).

Registered replication reports. Association for Psychological Science http://www.psychologicalscience.org/publications/replication (2017).

Vraga, E. K., Kim, S. C. & Cook, J. Testing logic-based and humor-based corrections for science, health, and political misinformation on social media. J. Broadcast Electron. Media 63 , 393–414 (2019).

Vijaykumar, S. et al. How shades of truth and age affect responses to COVID-19 (mis)information: randomized survey experiment among WhatsApp users in UK and Brazil. Humanit. Soc. Sci. Commun. 8 , 1–12 (2021).

Anderson, C. A., Lepper, M. R. & Ross, L. Perseverance of social theories: the role of explanation in the persistence of discredited information. J. Pers. Soc. Psychol. 39 , 1037–1049 (1980).

Sirlin, N., Epstein, Z., Arechar, A. A. & Rand, D. G. Digital literacy is associated with more discerning accuracy judgments but not sharing intentions. Harv. Kennedy Sch. Misinformation Rev. , https://doi.org/10.37016/mr-2020-83 (2021).

Arechar, A. A. et al. Understanding and reducing online misinformation across 16 countries on six continents. Preprint at PsyArXiv https://psyarxiv.com/a9frz/ (2022).

Pennycook, G., McPhetres, J., Zhang, Y., Lu, J. G. & Rand, D. G. Fighting COVID-19 misinformation on social media: experimental evidence for a scalable accuracy-nudge intervention. Psychol. Sci. 31 , 770–780 (2020).

Jahanbakhsh, F. et al. Exploring lightweight interventions at posting time to reduce the sharing of misinformation on social media. in Proc. ACM on Human–Computer Interaction vol. 5, 1–-42 (Association for Computing Machinery, 2021); https://doi.org/10.1145/3449092 (2021).

Pennycook, G. & Rand, D. G. Fighting misinformation on social media using crowdsourced judgments of news source quality. Proc. Natl Acad. Sci. USA 116, 2521–2526 (2019).

Gesser-Edelsburg, A., Diamant, A., Hijazi, R. & Mesch, G. S. Correcting misinformation by health organizations during measles outbreaks: a controlled experiment. PLoS ONE 13, e0209505 (2018).

Mosleh, M., Martel, C., Eckles, D. & Rand, D. Promoting engagement with social fact-checks online. Preprint at OSF https://osf.io/rckfy/ (2022).

Andrews, E. A. Combating COVID-19 Vaccine Conspiracy Theories: Debunking Misinformation about Vaccines, Bill Gates, 5G, and Microchips Using Enhanced Correctives. MSc thesis, State Univ. New York at Buffalo (2021).

Koller, M. Rebutting accusations: when does it work, when does it fail? Eur. J. Soc. Psychol. 23, 373–389 (1993).

Greitemeyer, T. & Sagioglou, C. Does exonerating an accused researcher restore the researcher’s credibility? PLoS ONE 10, e0126316 (2015).

Hedges, L. V. & Olkin, I. Statistical Methods for Meta-analysis (Academic, 1985).

Hedges, L. V. Distribution theory for Glass’s estimator of effect size and related estimators. J. Educ. Stat. 6, 107 (1981).

Borenstein, M., Hedges, L., Higgins, J. & Rothstein, H. Introduction to Meta-analysis (Wiley, 2009).

Lakens, D. Calculating and reporting effect sizes to facilitate cumulative science: a practical primer for t-tests and ANOVAs. Front. Psychol. 4, 863 (2013).

Morris, S. B. Distribution of the standardized mean change effect size for meta-analysis on repeated measures. Br. J. Math. Stat. Psychol. 53, 17–29 (2000).

Hart, W. et al. Feeling validated versus being correct: a meta-analysis of selective exposure to information. Psychol. Bull. 135, 555–588 (2009).

Lord, C. G., Ross, L. & Lepper, M. R. Biased assimilation and attitude polarization: the effects of prior theories on subsequently considered evidence. J. Pers. Soc. Psychol. 37, 2098–2109 (1979).

Seifert, C. M. The continued influence of misinformation in memory: what makes a correction effective? Psychol. Learn. Motiv. 41, 265–292 (2002).

van der Linden, S., Leiserowitz, A., Rosenthal, S. & Maibach, E. Inoculating the public against misinformation about climate change. Glob. Chall. 1, 1600008 (2017).

Ecker, U. K. H. et al. The psychological drivers of misinformation belief and its resistance to correction. Nat. Rev. Psychol. 1, 13–29 (2022).

Ecker, U., Sharkey, C. X. M. & Swire-Thompson, B. Correcting vaccine misinformation: a failure to replicate familiarity or fear-driven backfire effects. PLoS ONE 18, e0281140 (2023).

Gawronski, B., Brannon, S. M. & Ng, N. L. Debunking misinformation about a causal link between vaccines and autism: two preregistered tests of dual-process versus single-process predictions (with conflicting results). Soc. Cogn. 40, 580–599 (2022).

Guenther, C. L. & Alicke, M. D. Self-enhancement and belief perseverance. J. Exp. Soc. Psychol. 44, 706–712 (2008).

Misra, S. Is conventional debriefing adequate? An ethical issue in consumer research. J. Acad. Mark. Sci. 20, 269–273 (1992).

Green, M. C. & Donahue, J. K. Persistence of belief change in the face of deception: the effect of factual stories revealed to be false. Media Psychol. 14, 312–331 (2011).

Ecker, U. K. H. & Ang, L. C. Political attitudes and the processing of misinformation corrections. Polit. Psychol. 40, 241–260 (2019).

Sherman, D. K. & Kim, H. S. Affective perseverance: the resistance of affect to cognitive invalidation. Pers. Soc. Psychol. Bull. 28, 224–237 (2002).

Golding, J. M., Fowler, S. B., Long, D. L. & Latta, H. Instructions to disregard potentially useful information: the effects of pragmatics on evaluative judgments and recall. J. Mem. Lang. 29, 212–227 (1990).

Viechtbauer, W. & Cheung, M. W.-L. Outlier and influence diagnostics for meta-analysis. Res. Synth. Methods 1, 112–125 (2010).

Borenstein, M. in Publication Bias in Meta-analysis: Prevention, Assessment, and Adjustments (eds Rothstein, H. R., Sutton, A. J. & Borenstein, M.) 194–220 (John Wiley & Sons, 2005).

Duval, S. in Publication Bias in Meta-analysis: Prevention, Assessment, and Adjustments (eds Rothstein, H. R., Sutton, A. J. & Borenstein, M.) 127–144 (John Wiley & Sons, 2005).

Peters, J. L., Sutton, A. J., Jones, D. R., Abrams, K. R. & Rushton, L. Contour-enhanced meta-analysis funnel plots help distinguish publication bias from other causes of asymmetry. J. Clin. Epidemiol. 61, 991–996 (2008).

Stanley, T. D. & Doucouliagos, H. Meta-regression approximations to reduce publication selection bias. Res. Synth. Methods 5, 60–78 (2014).

van Assen, M. A. L. M., van Aert, R. C. M. & Wicherts, J. M. Meta-analysis using effect size distributions of only statistically significant studies. Psychol. Methods 20, 293–309 (2015).

Pustejovsky, J. E. & Rodgers, M. A. Testing for funnel plot asymmetry of standardized mean differences. Res. Synth. Methods 10, 57–71 (2019).

Maier, M., Bartoš, F. & Wagenmakers, E. J. Robust Bayesian meta-analysis: addressing publication bias with model-averaging. Psychol. Methods https://doi.org/10.1037/met0000405 (2022).


Acknowledgements

We thank D. O’Keefe, who assisted in the inter-rater reliability. Research reported in this publication was supported by the National Institute of Mental Health of the National Institutes of Health under Award Number R01MH114847 (D.A.), the National Institute on Drug Abuse of the National Institutes of Health under Award Number DP1 DA048570 (D.A.) and the National Institute of Allergy and Infectious Diseases of the National Institutes of Health under award numbers R01AI147487 (D.A. and M.S.C.) and P30AI045008 (Penn Center for AIDS Research [Penn CFAR] subaward; M.S.C.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. This research was supported by the Science of Science Communication Endowment from the Annenberg Public Policy Center at the University of Pennsylvania. The funders had no role in study design, data collection and analysis, decision to publish or preparation of the manuscript.

Author information

Authors and affiliations

Annenberg School for Communication, University of Pennsylvania, Philadelphia, PA, USA

Man-pui Sally Chan

Annenberg School for Communication, Annenberg Public Policy Center, School of Arts and Sciences, School of Nursing, Wharton School, University of Pennsylvania, Philadelphia, PA, USA

Dolores Albarracín


Contributions

D.A. initiated the project, and M.S.C. supervised the project. Both M.S.C. and D.A. contributed to the theoretical formalism, developed the coding scheme and performed the coding reliability. M.S.C. took the lead in the data curation, preparing the analytical plan and performing the analytic calculations. Both M.S.C. and D.A. discussed the results and contributed to the final version of the manuscript.

Corresponding author

Correspondence to Man-pui Sally Chan.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Human Behaviour thanks Jon Roozenbeek, Sander van der Linden and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information.

Supplementary analyses and results, Tables 1–5 and Fig. 1.

Reporting Summary.

Supplementary table.

PRISMA 2020 Checklist.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Cite this article

Chan, Mp.S., Albarracín, D. A meta-analysis of correction effects in science-relevant misinformation. Nat Hum Behav 7 , 1514–1525 (2023). https://doi.org/10.1038/s41562-023-01623-8


Received : 09 May 2022

Accepted : 09 May 2023

Published : 15 June 2023

Issue Date : September 2023

DOI : https://doi.org/10.1038/s41562-023-01623-8




Corrections, retractions and updates after publication

Taylor & Francis journal article correction and retraction policy

Sometimes after an article has been published it may be necessary to make a change to the Version of Record . This change will be made after careful consideration by the journal’s editorial team, with support from Taylor & Francis staff to make sure any necessary changes are done in accordance with both Taylor & Francis policies and guidance from the Committee on Publication Ethics (COPE).

Except where only a minor error is involved, any necessary change will be accompanied by a post-publication notice, which will be permanently linked to the original article. These changes can take the form of a Correction notice, an Expression of Concern, a Retraction or, in rare circumstances, a Removal.

The purpose of linking post-publication notices to the original article is to provide transparency around any changes and to ensure the integrity of the scholarly record. Note that all post-publication notices are free to access from the point of publication.

Read on for our full policy on corrections, retractions, and updates to published articles.

Version of Record

Each article published by Taylor & Francis journals, or journals published by us on behalf of a scholarly society, either in the print issue or online, constitutes the Version of Record (VoR): the final, definitive, and citable version in the scholarly record.

The VoR includes:

The article revised and accepted following peer review, in its final form, including the abstract, text, references, bibliography, and all accompanying tables, illustrations, data.

Any supplemental material.

Recognizing a published article as the VoR helps to provide further assurance that it is accurate, complete, and citable. Wherever possible it is our policy to maintain the integrity of the VoR in accordance with STM Association guidelines:


STM Guidelines on Preservation of the Objective Record of Science

What should I do if my article contains an error?

Authors should notify us as soon as possible if they find errors in their published article, especially errors that could affect the interpretation of data or reliability of information presented. It is the responsibility of the corresponding author to ensure consensus has been reached between all listed co-authors prior to requesting any corrections to an article.

If, after reading the guidance, you believe a correction is necessary for your article, please contact the journal’s Production Editor, or contact us.

Post-publication notices to ensure the accuracy of the scholarly record

Correction notice

A Correction notice will be issued when it is necessary to correct an error or omission where the interpretation of the article may be affected but the scholarly integrity or original findings remain intact.

A correction notice, where possible, should always be written and approved by all authors of the original article. On very rare occasions where there is a need to correct an error made in the publication process, the journal may be required to issue a correction without the authors’ direct input. However, should this occur, the journal will make best efforts to notify the authors.

Please note that correction requests may be subject to full review, and if queries are raised, you may be expected to supply further information before the correction is approved.

Taylor & Francis distinguishes between major and minor errors. For correction notices, major errors or omissions are considered changes that impact the interpretation of the article, but the overall scholarly integrity remains intact. Minor errors are considered errors or omissions that do not impact the reliability of, or the readers’ understanding of, the interpretation of the article.

Major errors are always accompanied by a separate correction notice. The correction notice should provide clear details of the error and the changes that have been made to the Version of Record. Under these circumstances, Taylor & Francis will:

Correct the online article.

Issue a separate correction notice electronically linked back to the corrected version.

Add a footnote to the article displaying the electronic link to the correction notice.

Paginate and make available the correction notice in the online issue of the journal.

Make the correction notice free to view.

Minor errors may not be accompanied by a separate correction notice. Instead, a footnote will be added to the article informing the reader that the article has been corrected.

Concerns regarding the integrity of a published article should be raised via email to the Editor or via the Publisher.

Read our reference guide to the type of changes Taylor & Francis will correct using a correction notice.

Retractions

A Retraction will be issued where a major error (e.g., in the methods or analysis) invalidates the conclusions in the article, or where it appears research or publication misconduct has taken place (e.g., research without required ethical approvals, fabricated data, manipulated images, plagiarism, duplicate publication, etc.).

The decision to retract an article will be made in accordance with both Taylor & Francis policies and COPE guidelines. The decision will follow a full investigation by Taylor & Francis editorial staff in collaboration with the journal’s editorial team. Authors and institutions may request a retraction of their articles if they believe their reasons meet the criteria for retraction.

Retractions are issued to correct the scholarly record and should not be interpreted as punishments for the authors.

The COPE guidance can be found here.

Retraction will be considered in cases where:

There is clear evidence that the findings are unreliable, either as a result of misconduct (e.g., data fabrication or image manipulation) or honest error (e.g., miscalculation or experimental error).

The findings have previously been published elsewhere without proper referencing, permission, or justification (e.g., cases of redundant or duplicate publication).

The research constitutes plagiarism.

The Editor no longer has confidence in the validity or integrity of the article.

There is evidence of, or there are concerns about, authorship for sale.

Citation manipulation is evident within the published paper.

There is evidence of compromised peer review or systematic manipulation.

There is evidence of unethical research, or there is evidence of a breach of editorial policies.

The authors have deliberately submitted fraudulent or inaccurate information, or breached a warranty provided in the Author Publishing Agreement (APA).

Where the decision has been taken to retract an article, Taylor & Francis will:

Add a “retracted” watermark to the published Version of Record of the article.

Issue a separate retraction statement, titled ‘Retraction: [article title]’, that will be linked to the retracted article on Taylor & Francis Online.

Paginate and make available the retraction statement in the online issue of the journal.

Expressions of concern

In some cases, an Expression of Concern may be considered where concerns of a serious nature have been raised (e.g., research or publication misconduct), but where the outcome of the investigation is inconclusive or where due to various complexities, the investigation will not be completed for a considerable time. This could be due to ongoing institutional investigations or other circumstances outside of the journal’s control.

When the investigation has been completed, a Retraction or Correction notice may follow the Expression of Concern alongside the original article. All will remain part of the permanent publication record.

Expression of Concern notices will be considered in cases where:

There is inconclusive evidence of research or publication misconduct by the authors, but the nature of the concerns warrants notifying the readers.

There are well-founded concerns that the findings are unreliable or that misconduct may have occurred, but there is limited cooperation from the authors’ institution(s) in investigating the concerns raised.

There is an investigation into alleged misconduct related to the publication that has not been, or would not be, fair and impartial or conclusive.

An investigation is underway, but a resolution will not be available for a considerable time, and the nature of the concerns warrants notifying the readers.

The Expression of Concern will be linked back to the published article it relates to.

Article removal

An Article Removal will be issued in rare circumstances where the problems cannot be addressed through a Retraction or Correction notice. Taylor & Francis will consider removal of a published article in very limited circumstances where:

The article contains content that could pose a serious risk of harm if acted upon or followed.

The article contains content that violates a study participant’s right to privacy.

The article is defamatory or infringes other legal rights.

An article is subject to a court order.

In the case of an article being removed from Taylor & Francis Online, a removal notice will be issued in its place.

Updates and scholarly discussion on published articles

An addendum is a notification of an addition of information to an article.

Addenda do not contradict the original publication and are not used to fix errors (for which a Correction notice will be published); however, if the authors need to update or add key information, this can be published as an addendum.

Addenda may be peer reviewed, according to journal policy, and are normally subject to oversight by the editors of the journal.

All addenda are electronically linked to the published article to which they relate.

Comment (including response and rejoinder correspondence)

Comments are short articles which outline an observation on a published article. In cases where a comment on a published article is submitted to the journal editor, it may be subject to peer review. The comment will be shared with the authors of the published article, who are invited to submit a response.

The author response may also be subject to peer review, and will be shared with the commentator, who may be invited to submit a rejoinder. The rejoinder may be subject to peer review and shared with the authors of the published article. No further correspondence will be considered for publication. The editor may decide to reject correspondence at any point before the comment, response and rejoinder are finalized.

All published comments, responses, and rejoinders are linked to the published article to which they relate.

Pop-up notifications

If deemed necessary by the Publishing Ethics & Integrity team, a pop-up notification may be temporarily added to the online version of an article to inform readers that the article is under investigation. Unlike an Expression of Concern, Correction, or Retraction notice, this is not a permanent note; it only indicates that an investigation is in progress. Please note that these notifications are not added to every article under investigation.

Updating and retracting articles on F1000Research

On F1000Research, authors can revise, change, and update their articles by publishing new versions, which are added to the original article’s history on the platform. The versioning system is user-friendly and intuitive, with new versions (and their peer reviews) clearly linked and easily navigable from earlier versions. Authors can summarize changes in the ‘Amendments’ section at the start of a new version.

As stated in the F1000Research Retraction policy , articles may be retracted from F1000Research for several reasons, including research misconduct and duplicate publication, but the retracted article will usually remain on the site. Retracted articles are not ‘unpublished’ or ‘withdrawn’ so that they can be published elsewhere; usually the reasons for the retraction are so serious that the whole study, or large parts of it, are not appropriate for inclusion in the scientific literature anywhere.



17 - Correcting Student Errors and Misconceptions

from Part IV - General Learning Strategies

Published online by Cambridge University Press: 08 February 2019


- Correcting Student Errors and Misconceptions

- By Elizabeth J. Marsh, Emmaline Drew Eliseev

- Edited by John Dunlosky, Kent State University, Ohio, Katherine A. Rawson, Kent State University, Ohio

- Book: The Cambridge Handbook of Cognition and Education

- Online publication: 08 February 2019

- Chapter DOI: https://doi.org/10.1017/9781108235631.018



Putting the Self in Self-Correction: Findings From the Loss-of-Confidence Project

Julia M. Rohrer

1 International Max Planck Research School on the Life Course, Max Planck Institute for Human Development, Berlin

2 Department of Psychology, University of Leipzig

Warren Tierney

3 Department of Organizational Behavior, INSEAD, Singapore

Eric L. Uhlmann

Lisa M. DeBruine

4 Institute of Neuroscience and Psychology, University of Glasgow

5 Laboratory of Experimental Psychology, KU Leuven

6 Institute of Psychology, Leiden University

Benedict Jones

Stefan C. Schmukle

Raphael Silberzahn

7 Sussex Business School, University of Sussex

Rebecca M. Willén

8 Institute for Globally Distributed Open Research and Education (IGDORE)

Rickard Carlsson

9 Department of Psychology, Linnaeus University

Richard E. Lucas

10 Department of Psychology, Michigan State University

Julia Strand

11 Department of Psychology, Carleton College

Simine Vazire

12 Melbourne School of Psychological Sciences, University of Melbourne

Jessica K. Witt

13 Department of Psychology, Colorado State University

Thomas R. Zentall

14 Department of Psychology, University of Kentucky

Christopher F. Chabris

15 Autism and Developmental Medicine Institute, Geisinger Health System, Danville, Pennsylvania

Tal Yarkoni

16 Department of Psychology, University of Texas at Austin

Science is often perceived to be a self-correcting enterprise. In principle, the assessment of scientific claims is supposed to proceed in a cumulative fashion, with the reigning theories of the day progressively approximating truth more accurately over time. In practice, however, cumulative self-correction tends to proceed less efficiently than one might naively suppose. Far from evaluating new evidence dispassionately and infallibly, individual scientists often cling stubbornly to prior findings. Here we explore the dynamics of scientific self-correction at an individual rather than collective level. In 13 written statements, researchers from diverse branches of psychology share why and how they have lost confidence in one of their own published findings. We qualitatively characterize these disclosures and explore their implications. A cross-disciplinary survey suggests that such loss-of-confidence sentiments are surprisingly common among members of the broader scientific population yet rarely become part of the public record. We argue that removing barriers to self-correction at the individual level is imperative if the scientific community as a whole is to achieve the ideal of efficient self-correction.

Science is often hailed as a self-correcting enterprise. In the popular perception, scientific knowledge is cumulative and progressively approximates truth more accurately over time ( Sismondo, 2010 ). However, the degree to which science is genuinely self-correcting is a matter of considerable debate. The truth may or may not be revealed eventually, but errors can persist for decades, corrections sometimes reflect lucky accidents rather than systematic investigation and can themselves be erroneous, and initial mistakes might give rise to subsequent errors before they get caught ( Allchin, 2015 ). Furthermore, even in a self-correcting scientific system, it remains unclear how much of the knowledge base is credible at any given point in time ( Ioannidis, 2012 ) given that the pace of scientific self-correction may be far from optimal.

Usually, self-correction is construed as an outcome of the activities of the scientific community as a whole (i.e., collective self-correction): Watchful reviewers and editors catch errors before studies get published, critical readers write commentaries when they spot flaws in somebody else’s reasoning, and replications by impartial groups of researchers allow the scientific community to update their beliefs about the likelihood that a scientific claim is true. Far less common are cases in which researchers publicly point out errors in their own studies and question conclusions they have drawn before (i.e., individual self-correction). The perceived unlikeliness of such an event is facetiously captured in Max Planck’s famous statement that new scientific truths become established not because their enemies see the light but because those enemies eventually die ( Planck, 1948 ). However, even if individual self-correction is not necessary for a scientific community as a whole to be self-correcting in the long run ( Mayo-Wilson et al., 2011 ), we argue that it can increase the overall efficiency of the self-corrective process and thus contribute to a more accurate scientific record.

The Value of Individual Self-Correction

The authors of a study have privileged access to details about how the study was planned and conducted, how the data were processed or preprocessed, and which analyses were performed. Thus, the authors remain in a special position to identify or confirm a variety of procedural, theoretical, and methodological problems that are less visible to other researchers. 1 Even when the relevant information can in principle be accessed from the outside, correction by the original authors might still be associated with considerably lower costs. For an externally instigated correction to take place, skeptical “outsiders” who were not involved in the research effort might have to carefully reconstruct methodological details from a scant methods section (for evidence that the authors’ assistance is often required to reproduce analyses, see e.g., Chang & Li, 2018 ; Hardwicke et al., 2018 ), write persuasive e-mails to get the original authors to share the underlying data (often to no avail; Wicherts et al., 2011 ), recalculate statistics because reported values are not always accurate (e.g., Nuijten et al., 2016 ), or apply advanced statistical methods to assess evidence in the presence of distortions such as publication bias ( Carter et al., 2019 ).

Eventually, external investigators might resort to an empirical replication study to clarify the matter. A replication study can be a very costly or even impossible endeavor. Certainly, it is inefficient when a simple self-corrective effort by the original authors might have sufficed. Widespread individual self-correction would obviously not eliminate the need for replication, but it would enable researchers to make better informed choices about whether and how to attempt replication—more than 30 million scientific articles have been published since 1965 ( Pan et al., 2018 ), and limited research resources should not be expended mindlessly on attempts to replicate everything (see also Coles et al., 2018 ). In some cases, individual self-correction could render an empirical replication study unnecessary. In other cases, additionally disclosed information might render an empirical replication attempt even more interesting. And in any case, full information about the research process, including details that make the original authors doubt their claims, would help external investigators maximize the informativeness of their replication or follow-up study.

Finally, in many areas of science, scientific correction has become a sensitive issue often discussed with highly charged language ( Bohannon, 2014 ). Self-correction could help defuse some of this conflict. A research culture in which individual self-corrections are the default reaction to errors or misinterpretations could raise awareness that mistakes are a routine part of science and help separate researchers’ identities from specific findings.

The Loss-of-Confidence Project

To what extent does our research culture resemble the self-correcting ideal, and how can we facilitate such behavior? To address these questions and to gauge the potential impacts of individual self-corrections, we conducted the Loss-of-Confidence Project. The effort was born out of a discussion in the Facebook group PsychMAP following the online publication of Dana Carney’s statement “My Position on Power Poses” ( Carney, 2016 ). Carney revealed new methodological details regarding one of her previous publications and stated that she no longer believed in the originally reported effects. Inspired by her open disclosure, we conducted a project consisting of two parts: an open call for loss-of-confidence statements and an anonymous online survey.

First, in our open call, we invited psychological researchers to submit statements describing findings that they had published and in which they had subsequently lost confidence. 2 The idea behind the initiative was to help normalize and destigmatize individual self-correction while also, we hoped, rewarding authors who exposed themselves in this way with a publication. We invited authors in any area of psychology to contribute statements expressing a loss of confidence in previous findings, subject to the following requirements:

- The study in question was an empirical report of a novel finding.

- The submitting author has lost confidence in the primary/central result of the article.

- The loss of confidence occurred primarily as a result of theoretical or methodological problems with the study design or data analysis.

- The submitting author takes responsibility for the errors in question.

The goal was to restrict submissions to cases in which the stigma of disclosing a loss of confidence in previous findings would be particularly high; we therefore did not accept cases in which an author had lost faith in a previous finding for reasons that did not involve his or her own mistakes (e.g., because of a series of failed replications by other researchers).

Second, to understand whether the statements received in the first part of the project are outliers or reflect a broader phenomenon that goes largely unreported, we carried out an online survey and asked respondents about their experience with losses of confidence. The full list of questions asked can be found at https://osf.io/bv48h/. The link to the survey was posted on Facebook pages and mailing lists oriented toward scientists (PsychMAP, Psychological Methods Discussion Group, International Social Cognition Network, Society for Judgment and Decision Making (SJDM), SJDM mailing list) and further promoted on Twitter. Survey materials and anonymized data are made available on the project’s Open Science Framework repository (https://osf.io/bv48h).

Results: Loss-of-Confidence Statements

The project was disseminated widely on social media (resulting in around 4,700 page views of the project website), and public commentary was overwhelmingly positive, highlighting how individual self-correction is aligned with perceived norms of scientific best practices. By the time we stopped the initial collection of submissions (December 2017–July 2018), we had received loss-of-confidence statements pertaining to six different studies. After posting a preprint of an earlier version of this manuscript, we reopened the collection of statements and received seven more submissions, some of them while finalizing the manuscript. Table 1 provides an overview of the statements we received. 3

Table 1. Overview of the Loss-of-Confidence Statements

| Title | JIF | Citations |
|---|---|---|
| Implicit stereotype content: Mixed stereotypes can be measured with the implicit association test | 1.36 | 74 |
| Hemispheric specialization for skilled perceptual organization by chessmasters | 2.87 | 28 |
| Women’s preference for attractive makeup tracks changes in their salivary testosterone | 4.90 | 9 |
| The influence of working memory load on semantic priming | 2.67 | 51 |
| Understanding extraverts’ enjoyment of social situations: The importance of pleasantness | 5.92 | 220 |
| Second to fourth digit ratios and the implicit gender self-concept | 2.00 | 20 |
| It pays to be Herr Kaiser: Germans with noble-sounding surnames more often work as managers than as employees | 4.90 | 28 |
| Suboptimal choice in pigeons: Choice is primarily based on the value of the conditioned reinforcer rather than overall reinforcement rate | 2.03 | 64 |
| Talking points: A modulating circle reduces listening effort without improving speech recognition | 3.70 | 9 |
| Who knows what about a person? The self-other knowledge asymmetry (SOKA) model | 5.92 | 740 |
| Offenders’ lies and truths: An evaluation of the supreme court of Sweden’s criteria for credibility assessment | 1.46 | 19 |
| Action-specific influences on distance perception: A role for motor simulation | 2.94 | 252 |
| Prefrontal brain activity predicts temporally extended decision-making behavior | 2.15 | 45 |

Note: JIF = 2018 journal impact factor according to InCites Journal Citation Reports. Citations are according to Google Scholar on April 27, 2019.

Below, we list all statements in alphabetical order of the first author of the original study to which they pertain. Some of the statements have been abbreviated; the long versions are available at OSF (https://osf.io/bv48h/).

Statement on Carlsson and Björklund (2010) by Rickard Carlsson

In this study, we developed a new way to measure mixed stereotypes (in terms of warmth and competence) with the help of the implicit association test (IAT). In two studies, respondents took two IATs, and results supported the predictions: Lawyers were implicitly stereotyped as competent (positive) and cold (negative) relative to preschool teachers. In retrospect, there are a number of issues with the reported findings. First, there was considerable flexibility in what counted as support for the theoretical predictions. In particular, the statistical analysis in Study 2 tested a different hypothesis than Study 1. This analysis was added after the second round of peer review and thus was definitely not predicted a priori. Later, when trying to replicate the reported analysis from Study 1 on the data from Study 2, I found that only one of the two effects reported in Study 1 could be successfully replicated. Second, when we tried to establish the convergent and discriminant validity of the IATs by correlating them with explicit measures, we committed the fallacy of taking a nonsignificant effect in an underpowered test as evidence for the null hypothesis, which in this case implied discriminant validity. Third, in Study 1, participants actually took a third IAT that measured general attitudes toward the groups. This IAT was not disclosed in the manuscript and was highly correlated with both the competence and the warmth IATs. Hence, it would have complicated our narrative and undermined the claim that we had developed a completely new measure. Fourth, data from an undisclosed behavioral measure were collected but never entered into the data set or analyzed because, based on debriefing of the participants, I judged the measure to be invalid. In conclusion, in this 2010 article, I claimed to have developed a way to measure mixed stereotypes of warmth and competence with the IAT. I am no longer confident in this finding.
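The second issue in this statement turns on statistical power. As a rough sketch that is not part of the original study, the snippet below uses the standard Fisher z approximation to estimate the power of a two-sided test of a Pearson correlation. The true correlation of 0.2 and the sample sizes are hypothetical values chosen for illustration, not figures from Carlsson and Björklund (2010), and the helper name `correlation_power` is likewise illustrative.

```python
import math
from statistics import NormalDist


def correlation_power(rho, n, alpha=0.05):
    """Approximate power of a two-sided test of H0: rho = 0.

    Uses the Fisher z approximation, which assumes roughly bivariate normal
    data and n > 3. rho and n are hypothetical inputs for illustration.
    """
    std = NormalDist()
    z_crit = std.inv_cdf(1 - alpha / 2)
    # Expected test statistic under the alternative: atanh(rho) * sqrt(n - 3).
    shift = math.atanh(rho) * math.sqrt(n - 3)
    # Probability of landing in either rejection region.
    return std.cdf(shift - z_crit) + std.cdf(-shift - z_crit)


for n in (40, 100, 200):
    print(n, round(correlation_power(0.2, n), 2))
```

Under these assumptions, power at n = 40 is only about 0.23, so a study of that size would miss a true correlation of 0.2 more than three times out of four. A nonsignificant correlation from such a test is an expected outcome even when the association is real, which is why it cannot by itself establish discriminant validity.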

Statement on Chabris and Hamilton (1992) by Christopher F. Chabris

This article reported a divided-visual-field (DVF) experiment showing that the skilled pattern recognition that chess masters perform when seeing a chess game situation was performed faster and more accurately when the stimuli were presented briefly in the left visual field, and thus first reached the right hemisphere of the brain, than when the stimuli were presented in the right field. The sample was large for a study of highly skilled performers (16 chess masters), but we analyzed the data in many different ways and reported the result that was most favorable. Most critically, we tried different rules for removing outlier trials and picked one that was uncommon but led to results consistent with our hypothesis. Nowadays, I would analyze these types of data using more justifiable rules and preregister the rules I was planning to use (among other things) to avoid this problem. For these reasons, I no longer think that the results provide sufficient support for the claims that the right hemisphere is more important than the left for chess expertise and for skilled visual pattern recognition. These claims may be true, but not because of our experiment.
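The problem described here, trying several outlier rules and keeping the one that favors the hypothesis, can be illustrated with a small simulation. This is a hypothetical setup, not a reanalysis of the chess data. Reaction times in two visual-field conditions are drawn from the same normal distribution, so there is no true effect. The SD cutoffs in `RULES` are arbitrary choices, and a large-sample z test stands in for whatever test the original study used. The sketch compares a rule fixed in advance with a best-of-four strategy.

```python
import random
import statistics
from statistics import NormalDist

RULES = [None, 3.0, 2.5, 2.0]  # hypothetical outlier cutoffs in SDs; None keeps every trial


def z_test_p(a, b):
    """Two-sided p value from a large-sample z test on a difference in means."""
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    z = (statistics.fmean(a) - statistics.fmean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def trim(xs, k):
    """Drop values more than k sample SDs from the mean (k=None keeps all values)."""
    if k is None:
        return xs
    m, s = statistics.fmean(xs), statistics.stdev(xs)
    return [x for x in xs if abs(x - m) <= k * s]


def false_positive_rates(n_sims=1000, n_trials=100, alpha=0.05, seed=1):
    rng = random.Random(seed)
    fixed_hits = flexible_hits = 0
    for _ in range(n_sims):
        # Both conditions share one distribution, so any "effect" is a false positive.
        left = [rng.gauss(600, 100) for _ in range(n_trials)]
        right = [rng.gauss(600, 100) for _ in range(n_trials)]
        p_values = [z_test_p(trim(left, k), trim(right, k)) for k in RULES]
        fixed_hits += p_values[0] < alpha  # exclusion rule chosen before seeing the data
        flexible_hits += min(p_values) < alpha  # report whichever rule "works"
    return fixed_hits / n_sims, flexible_hits / n_sims


fixed, flexible = false_positive_rates()
print(f"fixed rule: {fixed:.3f}, best of {len(RULES)} rules: {flexible:.3f}")
```

Because the flexible strategy counts a hit whenever any rule, including the prespecified one, reaches p < .05, its false positive rate can never fall below that of the fixed rule. How far above it lands depends on the data and on how many rules are tried; preregistering the exclusion rule removes this source of inflation.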

Two other relevant things happened with this article. First, we submitted a manuscript describing two related experiments. We were asked to remove the original Experiment 1 because the p value for the critical hypothesis test was below .10 but not below .05. We complied with this request. We were also asked by one reviewer to run approximately 10 additional analyses of the data. We did not comply with this—instead, we wrote to the editor and explained that doing so many different analyses of the same data set would invalidate the p values. The editor agreed. This is evidence that the dangers of multiple testing were not exactly unknown as far back as the early 1990s. The sacrificed Experiment 1 became a chapter of my PhD thesis. I tried to replicate it several years later, but I could not recruit enough chess master participants. Having also lost some faith in the DVF methodology, I put that data in the “file drawer” for good.
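The editor's agreement can be made concrete with the standard family-wise error calculation. As a simplifying assumption, treat the roughly 10 extra analyses as independent tests of true null hypotheses, each at $\alpha = .05$. The probability that at least one reaches significance by chance is then:

```latex
\[
\Pr(\text{at least one } p < \alpha) \;=\; 1 - (1 - \alpha)^{k}
\;=\; 1 - 0.95^{10} \;\approx\; 0.40 .
\]
```

Analyses of the same data set are usually correlated, so the real inflation would typically be smaller than this independent-tests figure. It would still exceed the nominal .05, and the Bonferroni bound $k\alpha = 0.5$ caps it regardless of the dependence between tests.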

Statement on Fisher et al. (2015) by Ben Jones and Lisa M. DeBruine

The article reported that women’s preferences for wearing makeup that was rated by other people as being particularly attractive were stronger in test sessions in which salivary testosterone was high than in test sessions in which salivary testosterone was relatively low. Not long after publication, we were contacted by a colleague who had planned to use the open data and analysis code from our article for a workshop on mixed effect models. They expressed some concerns about how our main analysis had been set up. Their main concern was that our model did not include random slopes for key within-subjects variables (makeup attractiveness and testosterone). Having looked into this issue over a couple of days, we agreed that not including random slopes typically increases false positive rates and that, in our study, the key effect for our interpretation was no longer significant once they were included. To minimize misleading other researchers, we contacted the journal immediately and asked to retract the article. Although this was clearly an unfortunate situation, it highlights the importance of open data and analysis code for allowing mistakes to be quickly recognized and the scientific record corrected accordingly.
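Why omitting random slopes inflates false positives can be sketched with a simulation. In the hypothetical setup below, each participant has their own slope relating a within-subject predictor (standing in for testosterone) to the outcome, but the average slope across participants is zero. A naive regression that pools all sessions as if they were independent ignores the between-participant variability in slopes. A full mixed-effects model would need a dedicated library, so the sketch substitutes a simple two-stage analysis: estimate one slope per participant, then test whether their mean differs from zero. The sample sizes, slope SD, z approximation, and helper names are all illustrative assumptions; this is not a reanalysis of Fisher et al. (2015).

```python
import random
import statistics
from statistics import NormalDist

Z_CRIT = NormalDist().inv_cdf(0.975)  # two-sided 5% critical value, about 1.96


def slope_and_sxx(xs, ys):
    """OLS slope of ys on xs, plus the centered sum of squares of xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx, sxx


def slope_test_error_rates(n_subj=40, n_sessions=10, slope_sd=0.5, n_sims=1000, seed=2):
    rng = random.Random(seed)
    naive_hits = two_stage_hits = 0
    for _ in range(n_sims):
        all_x, all_y, subject_slopes = [], [], []
        for _ in range(n_subj):
            b = rng.gauss(0.0, slope_sd)  # participant's own slope; population mean slope is 0
            xs = [rng.gauss(0.0, 1.0) for _ in range(n_sessions)]
            ys = [b * x + rng.gauss(0.0, 1.0) for x in xs]
            subject_slopes.append(slope_and_sxx(xs, ys)[0])
            all_x.extend(xs)
            all_y.extend(ys)
        # Naive model: every session treated as independent (no random slopes).
        slope, sxx = slope_and_sxx(all_x, all_y)
        mx, my = statistics.fmean(all_x), statistics.fmean(all_y)
        sse = sum((y - my - slope * (x - mx)) ** 2 for x, y in zip(all_x, all_y))
        naive_se = (sse / (len(all_x) - 2) / sxx) ** 0.5
        naive_hits += abs(slope / naive_se) > Z_CRIT
        # Two-stage model: test the mean of the per-participant slopes.
        two_stage_se = statistics.stdev(subject_slopes) / n_subj ** 0.5
        two_stage_hits += abs(statistics.fmean(subject_slopes) / two_stage_se) > Z_CRIT
    return naive_hits / n_sims, two_stage_hits / n_sims


naive, two_stage = slope_test_error_rates()
print(f"naive pooled: {naive:.3f}, two-stage: {two_stage:.3f}")
```

With these settings, the naive pooled test rejects the true null far more often than 5%, because its standard error reflects only within-session noise, while the two-stage test stays close to the nominal rate. This mirrors the concern the colleague raised about the missing random slopes.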

Statement on Heyman et al. (2015) by Tom Heyman

The goal of the study was to assess whether the processes that presumably underlie semantic priming effects are automatic in the sense that they are capacity free. For instance, one of the most well-known mechanisms is spreading activation, which entails that the prime (e.g., cat) preactivates related concepts (e.g., dog), thus resulting in a head start. To disentangle prospective processes (those initiated on presentation of the prime, such as spreading activation) from retrospective processes (those initiated on presentation of the target), three different types of stimuli were selected. On the basis of previously gathered word association data, we used symmetrically associated word pairs (e.g., cat–dog; both prime and target elicit one another) as well as asymmetrically associated pairs in the forward direction (e.g., panda–bear; the prime elicits the target but not vice versa) and in the backward direction (e.g., bear–panda; the target elicits the prime but not vice versa). However, I now believe that this manipulation was not successful in teasing apart prospective and retrospective processes. Critically, the three types of stimuli do not differ solely in terms of their presumed prime–target association. That is, I overlooked a number of confounding variables, partly because our a priori matching attempts did not take regression effects into account (for more details, see the supplementary statement at https://osf.io/bv48h/). Unfortunately, this undercuts the validity of the study’s central claim.
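The regression effect mentioned here can be illustrated with a toy simulation. Suppose two hypothetical item sets differ in the true population mean of some matching variable (for example, a norming score), and each item's norming value is measured with error. Selecting items from both sets whose first measurement falls in the same narrow window makes the sets look matched, but on a fresh measurement each selection regresses toward its own population mean. All numbers (means of 0 and 1, unit true and error variance, the 0.4 to 0.6 window) and the function name are assumptions for illustration only; they do not describe the actual stimuli.

```python
import random
import statistics


def matched_then_remeasured(n_items=40000, low=0.4, high=0.6, seed=3):
    """Return {set: (mean first measurement, mean re-measurement)} for items
    selected from two hypothetical sets on a noisy first measurement."""
    rng = random.Random(seed)
    set_means = {"A": 0.0, "B": 1.0}  # hypothetical true population means
    results = {}
    for name, mu in set_means.items():
        first_vals, second_vals = [], []
        for _ in range(n_items):
            true_value = rng.gauss(mu, 1.0)
            first = true_value + rng.gauss(0.0, 1.0)  # noisy norming measurement
            if low <= first <= high:  # "matched" on the observed value only
                first_vals.append(first)
                # Independent re-measurement of the same item.
                second_vals.append(true_value + rng.gauss(0.0, 1.0))
        results[name] = (statistics.fmean(first_vals), statistics.fmean(second_vals))
    return results


for name, (first, second) in matched_then_remeasured().items():
    print(f"set {name}: first = {first:.2f}, re-measured = {second:.2f}")
```

Under these assumptions the first measurement has a reliability of 0.5, so the selected items' expected true values are pulled about halfway back toward their own group means (roughly 0.25 for set A and 0.75 for set B), even though both selections were matched at about 0.5.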

Statement on Lucas and Diener (2001) by Richard E. Lucas