
Hypothesis in Machine Learning


The concept of a hypothesis is fundamental to machine learning and data science. In machine learning, a hypothesis is an initial assumption that data scientists and ML practitioners make when approaching a problem. Machine learning involves running experiments informed by past experience, and hypotheses are central to formulating candidate solutions.

It’s important to note that in machine learning discussions, the terms “hypothesis” and “model” are sometimes used interchangeably. However, a hypothesis represents an assumption, while a model is a mathematical representation employed to test that hypothesis. This section on “Hypothesis in Machine Learning” explores key aspects related to hypotheses in machine learning and their significance.


How does a Hypothesis work?


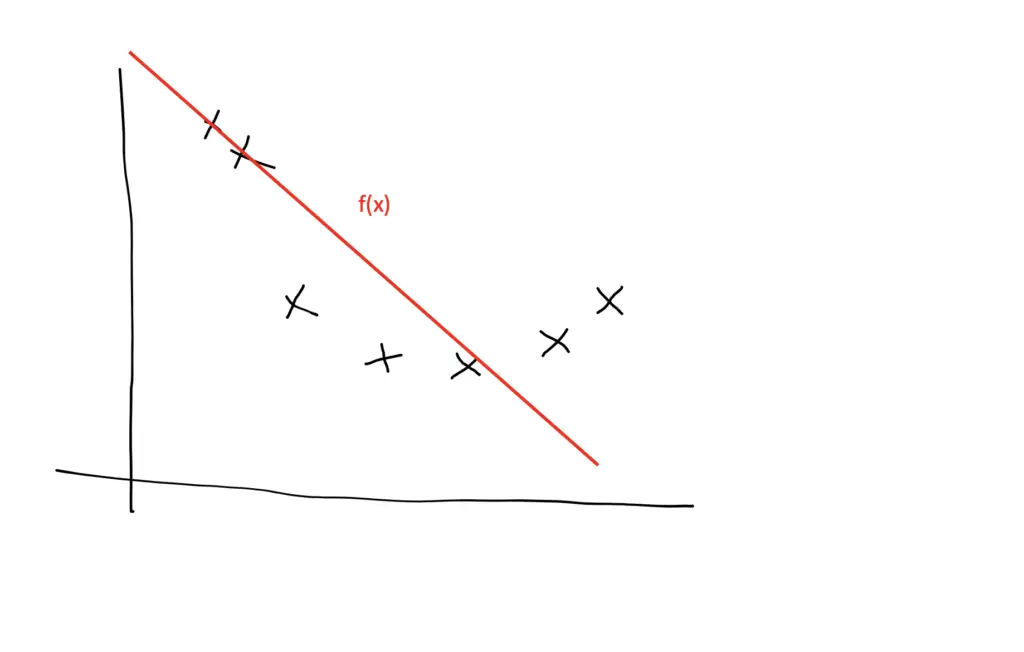

A hypothesis in machine learning is the model’s assumption about the relationship between the input features and the output. It represents the mapping function that the algorithm is trying to learn from the training set. Learning consists of adjusting the weights that parameterize the hypothesis so as to minimize the discrepancy between predicted and actual outputs, as measured by a cost function. The objective is to optimize these parameters to achieve the best predictive performance on new, unseen data.
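As an illustration of this loop, here is a minimal Python sketch, assuming a single-feature linear hypothesis h(x) = θ0 + θ1·x and a mean-squared-error cost. The function names (`predict`, `mse_cost`, `fit_linear_gd`), the learning rate, and the noise-free toy data are hypothetical choices for this sketch, not something the article specifies.

```python
import numpy as np


def predict(theta0, theta1, x):
    """Linear hypothesis h(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


def mse_cost(theta0, theta1, x, y):
    """Mean squared error between the hypothesis outputs and the targets."""
    return float(np.mean((predict(theta0, theta1, x) - y) ** 2))


def fit_linear_gd(x, y, lr=0.1, epochs=2000):
    """Adjust both parameters by batch gradient descent on the MSE cost."""
    theta0, theta1 = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        error = predict(theta0, theta1, x) - y
        # Partial derivatives of the MSE cost with respect to each parameter
        grad0 = 2.0 / n * np.sum(error)
        grad1 = 2.0 / n * np.sum(error * x)
        theta0 -= lr * grad0
        theta1 -= lr * grad1
    return theta0, theta1


# Toy data generated from y = 1 + 2x without noise (an assumption for illustration)
x = np.linspace(0.0, 1.0, 20)
y = 1.0 + 2.0 * x
theta0, theta1 = fit_linear_gd(x, y)
print(round(theta0, 3), round(theta1, 3), mse_cost(theta0, theta1, x, y))
```

Batch gradient descent is only one way to adjust the parameters; for a linear hypothesis a closed-form least-squares solution also exists.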

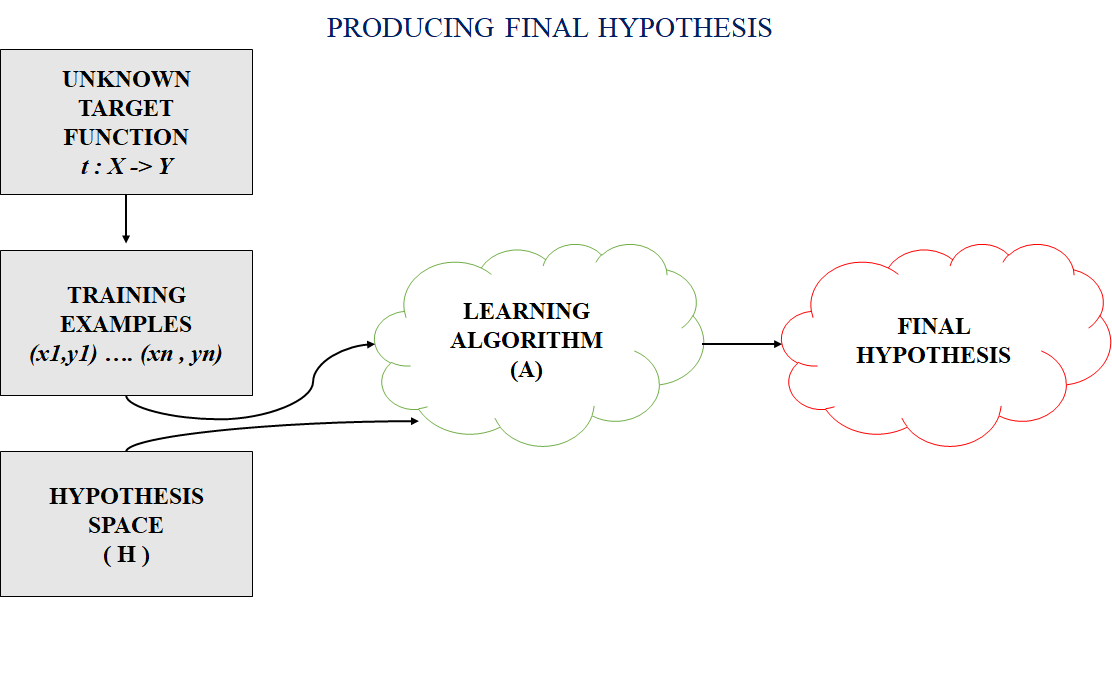

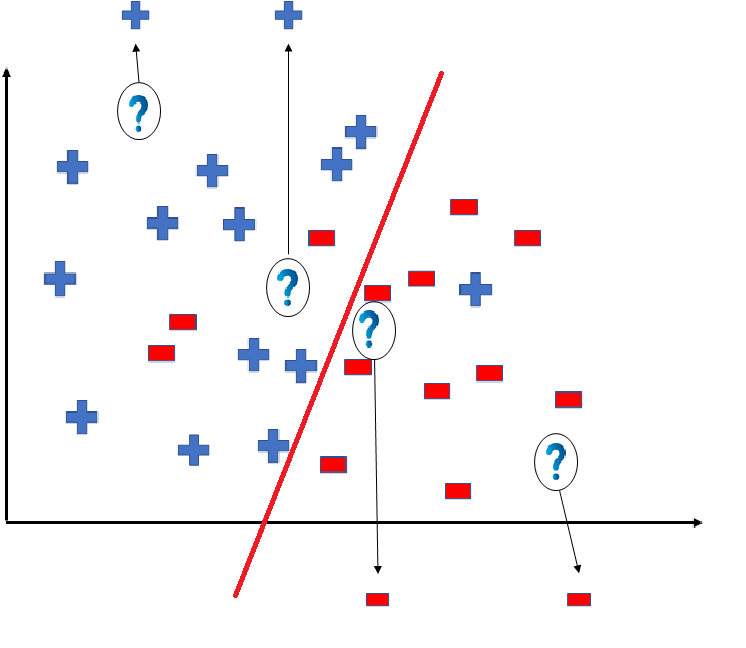

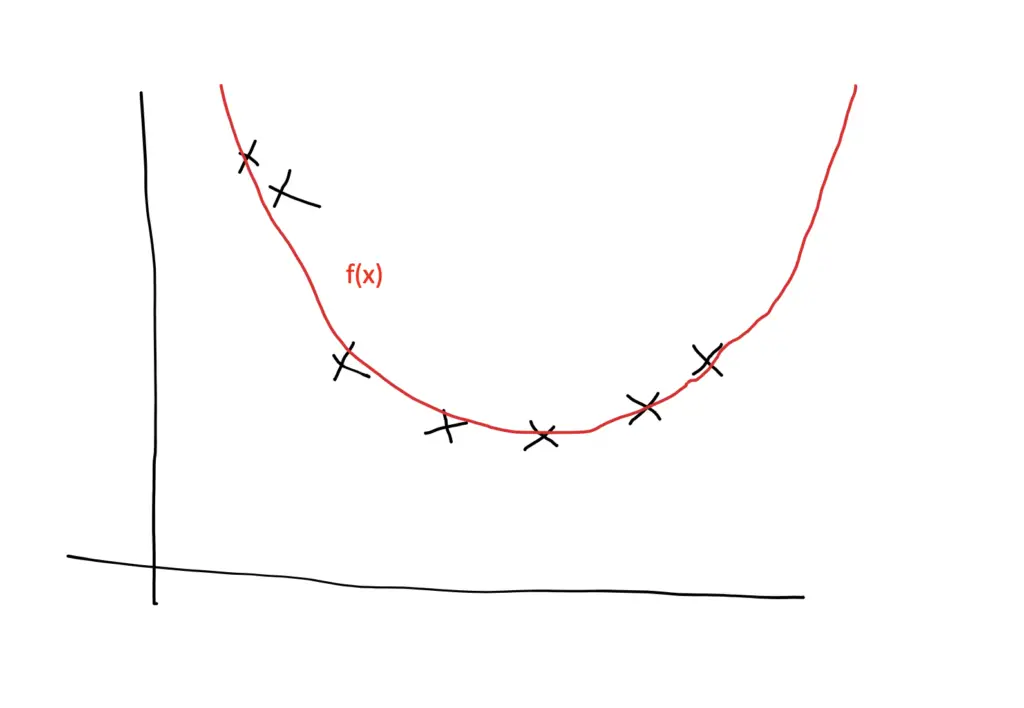

In most supervised machine learning algorithms, our main goal is to find a hypothesis in the hypothesis space that maps the inputs to the correct outputs. The following figure shows the common approach to finding a suitable hypothesis in the hypothesis space:

Hypothesis Space (H)

The hypothesis space is the set of all possible legal hypotheses. It is the set from which the machine learning algorithm selects the single hypothesis that best describes the target function or the outputs.

Hypothesis (h)

In supervised machine learning, a hypothesis is a function that best describes the target. The hypothesis an algorithm comes up with depends on the data and on the restrictions and bias we impose on the hypothesis space.

For a simple linear model with one input, the hypothesis can be written as:

[Tex]y = mx + b[/Tex]

- m = slope of the line

- b = intercept
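For this one-feature case, m and b can be estimated directly with ordinary least squares. The sketch below assumes squared error as the fitting criterion; `fit_line`, `hypothesis`, and the four data points are illustrative, not from the article.

```python
def fit_line(xs, ys):
    """Ordinary least-squares estimates of slope m and intercept b for y = m*x + b."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-style sums around the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


def hypothesis(m, b):
    """Return the fitted hypothesis h(x) = m*x + b as a callable."""
    return lambda x: m * x + b


xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.1, 4.9, 7.2, 8.8]
m, b = fit_line(xs, ys)  # m = 1.94, b = 1.15 (up to rounding)
h = hypothesis(m, b)
print(m, b, h(5.0))
```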

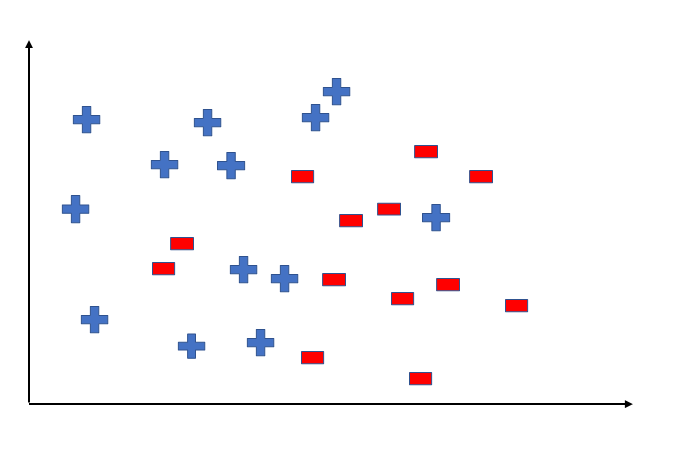

To better understand the hypothesis space and hypotheses, consider the following coordinate plane, which shows the distribution of some data:

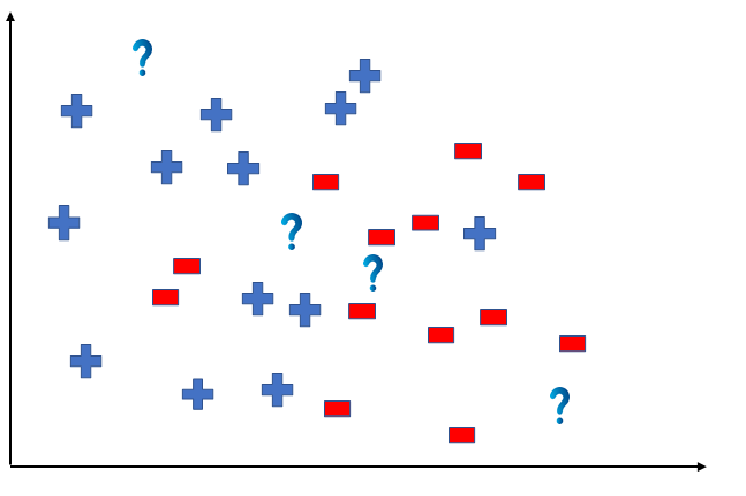

Suppose we have test data for which we must determine the outputs. The test data is shown below:

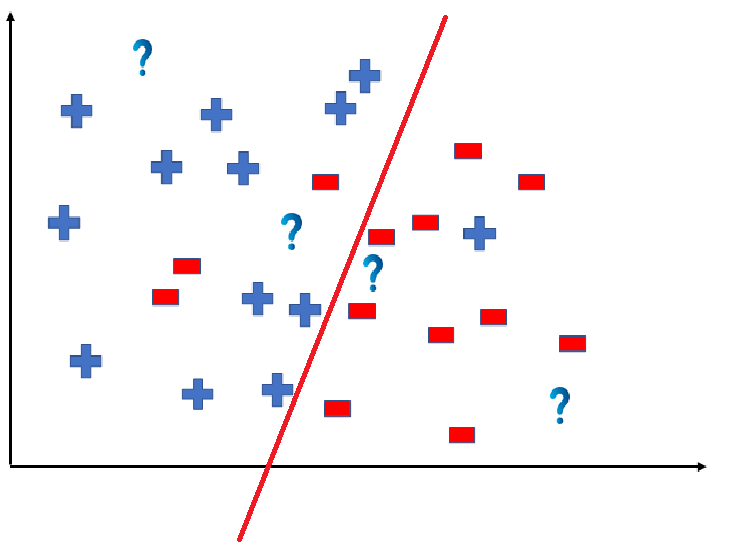

We can predict the outcomes by dividing the coordinate plane as shown below:

So the test data would yield the following result:

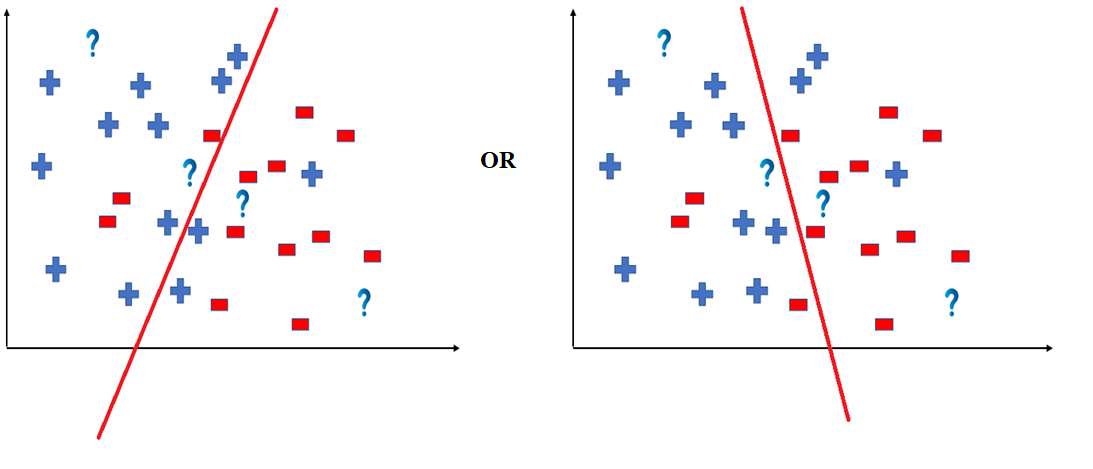

Note, however, that we could also have divided the coordinate plane differently:

The way in which the coordinate would be divided depends on the data, algorithm and constraints.

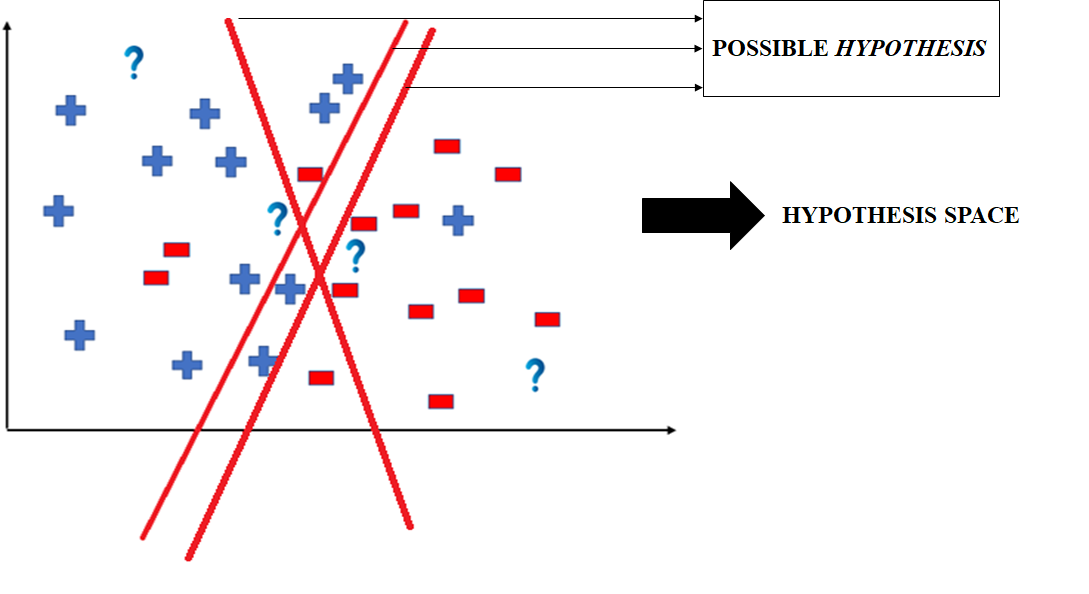

- All the legal ways in which we can divide the coordinate plane to predict the outcome of the test data together make up the hypothesis space.

- Each individual way is known as a hypothesis.

Hence, in this example, the hypothesis space consists of all such possible divisions of the plane.

The hypothesis space comprises all possible legal hypotheses that a machine learning algorithm can consider. Hypotheses are formulated based on various algorithms and techniques, including linear regression, decision trees, and neural networks. These hypotheses capture the mapping function transforming input data into predictions.
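The same idea can be made concrete in code. The figures above divide a two-dimensional plane; for brevity, this sketch assumes a one-dimensional input and a hypothesis space of simple threshold rules, enumerates every candidate, and keeps the one with the lowest training error. All names and data here are hypothetical.

```python
from typing import Callable, List, Tuple

# A hypothesis maps an input value to a predicted class label (0 or 1).
Hypothesis = Callable[[float], int]


def make_threshold(t: float, positive_above: bool) -> Hypothesis:
    """Build one hypothesis: label 1 on one side of threshold t, 0 on the other."""
    if positive_above:
        return lambda x: 1 if x >= t else 0
    return lambda x: 1 if x < t else 0


def hypothesis_space(xs: List[float]) -> List[Tuple[float, bool]]:
    """Enumerate a finite hypothesis space of (threshold, direction) pairs.

    Candidate thresholds lie below all points, between neighbouring points,
    and above all points; every threshold is tried in both directions.
    """
    pts = sorted(set(xs))
    thresholds = [pts[0] - 1.0]
    thresholds += [(a + b) / 2 for a, b in zip(pts, pts[1:])]
    thresholds.append(pts[-1] + 1.0)
    return [(t, d) for t in thresholds for d in (True, False)]


def training_error(h: Hypothesis, xs: List[float], ys: List[int]) -> float:
    """Fraction of training points that the hypothesis misclassifies."""
    return sum(h(x) != y for x, y in zip(xs, ys)) / len(xs)


def select_hypothesis(xs: List[float], ys: List[int]) -> Tuple[float, bool]:
    """Pick the member of the hypothesis space with the lowest training error."""
    return min(hypothesis_space(xs),
               key=lambda p: training_error(make_threshold(*p), xs, ys))


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [0, 0, 0, 1, 1, 1]
space = hypothesis_space(xs)
best_t, best_dir = select_hypothesis(xs, ys)
h = make_threshold(best_t, best_dir)
print(len(space), best_t, best_dir)  # 14 3.5 True
print([h(x) for x in (2.2, 4.8)])    # [0, 1]
```

Choosing by training error alone is only the first step; a hypothesis also has to be judged on how well it handles unseen data.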

Hypothesis Formulation and Representation in Machine Learning

Hypotheses in machine learning are formulated based on various algorithms and techniques, each with its representation. For example:

- Linear Regression: [Tex]h(X) = \theta_0 + \theta_1 X_1 + \theta_2 X_2 + \dots + \theta_n X_n[/Tex]

- Decision Trees: [Tex]h(X) = \text{Tree}(X)[/Tex]

- Neural Networks: [Tex]h(X) = \text{NN}(X)[/Tex]

In the case of complex models like neural networks, the hypothesis may involve multiple layers of interconnected nodes, each performing a specific computation.
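To show that each of these representations is simply a function from inputs to predictions, here is a sketch of all three as Python functions. The coefficients, the split values of the tree, and the randomly initialized network weights are arbitrary assumptions; nothing here is trained.

```python
import numpy as np


def linear_hypothesis(theta: np.ndarray, X: np.ndarray) -> np.ndarray:
    """h(X) = theta_0 + theta_1*X_1 + ... + theta_n*X_n for each row of X."""
    return theta[0] + X @ theta[1:]


def tree_hypothesis(X: np.ndarray) -> np.ndarray:
    """A fixed, hand-written depth-2 decision tree (split values are illustrative)."""
    out = np.empty(len(X))
    for i, row in enumerate(X):
        if row[0] <= 0.5:
            out[i] = 1.0 if row[1] <= 0.3 else 2.0
        else:
            out[i] = 3.0
    return out


def nn_hypothesis(params, X: np.ndarray) -> np.ndarray:
    """One hidden ReLU layer followed by a linear output layer."""
    W1, b1, W2, b2 = params
    hidden = np.maximum(0.0, X @ W1 + b1)  # ReLU activation
    return hidden @ W2 + b2


X = np.array([[0.2, 0.1], [0.4, 0.9], [0.8, 0.5]])
theta = np.array([1.0, 2.0, -1.0])
rng = np.random.default_rng(0)
params = (rng.normal(size=(2, 4)), np.zeros(4), rng.normal(size=4), 0.0)

print(linear_hypothesis(theta, X))  # [1.3 0.9 2.1]
print(tree_hypothesis(X))           # [1. 2. 3.]
print(nn_hypothesis(params, X))     # three untrained outputs
```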

Hypothesis Evaluation:

The process of machine learning involves not only formulating hypotheses but also evaluating their performance. This evaluation is typically done using a loss function or an evaluation metric that quantifies the disparity between predicted outputs and ground truth labels. Common evaluation metrics include mean squared error (MSE), accuracy, precision, recall, F1-score, and others. By comparing the predictions of the hypothesis with the actual outcomes on a validation or test dataset, one can assess the effectiveness of the model.
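A sketch of how the metrics named above can be computed from scratch, assuming binary labels with 1 as the positive class and returning 0.0 when a ratio is undefined (a convention chosen for this sketch; libraries differ in how they handle it). Function names and example values are illustrative.

```python
def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions (regression)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


mse = mean_squared_error([3.0, -0.5, 2.0, 7.0], [2.5, 0.0, 2.0, 8.0])
metrics = classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print(mse)      # 0.375
print(metrics)  # accuracy, precision, recall and F1 are all 2/3 here
```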

Hypothesis Testing and Generalization:

Once a hypothesis is formulated and evaluated, the next step is to test its generalization capabilities. Generalization refers to the ability of a model to make accurate predictions on unseen data. A hypothesis that performs well on the training dataset but fails to generalize to new instances is said to suffer from overfitting. Conversely, a hypothesis that generalizes well to unseen data is deemed robust and reliable.

The process of hypothesis formulation, evaluation, testing, and generalization is often iterative in nature. It involves refining the hypothesis based on insights gained from model performance, feature importance, and domain knowledge. Techniques such as hyperparameter tuning, feature engineering, and model selection play a crucial role in this iterative refinement process.
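One common way to carry out model selection and hyperparameter tuning is k-fold cross-validation, which the article does not name explicitly. The sketch below assumes synthetic quadratic data, treats the polynomial degree as the hyperparameter, and picks the degree with the lowest average validation error; `cv_mse` and the data-generating formula are hypothetical.

```python
import numpy as np


def cv_mse(x, y, degree, k=5, seed=0):
    """Average validation MSE of a polynomial fit over k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), k)
    errors = []
    for i, val_idx in enumerate(folds):
        # Train on every fold except the i-th, validate on the i-th
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        coeffs = np.polyfit(x[train_idx], y[train_idx], degree)
        pred = np.polyval(coeffs, x[val_idx])
        errors.append(np.mean((pred - y[val_idx]) ** 2))
    return float(np.mean(errors))


rng = np.random.default_rng(42)
x = np.linspace(-1.0, 1.0, 30)
y = 1.0 - 2.0 * x + 3.0 * x**2 + rng.normal(scale=0.1, size=x.size)

scores = {d: cv_mse(x, y, d) for d in range(1, 7)}
best_degree = min(scores, key=scores.get)
print(best_degree, {d: round(s, 4) for d, s in scores.items()})
```

Reusing the same seed for the fold split means every degree is scored on identical folds, so the comparison is like-for-like.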

In statistics, a hypothesis is a statement or assumption about a population parameter. It is a proposition or educated guess that helps guide statistical analyses. Hypothesis testing involves two competing hypotheses: the null hypothesis (H0) and the alternative hypothesis (H1 or Ha).

- Null Hypothesis (H0): This hypothesis states that there is no significant difference or effect, and that any observed results are due to chance. It often represents the status quo or a baseline assumption.

- Alternative Hypothesis (H1 or Ha): This hypothesis contradicts the null hypothesis, proposing that there is a significant difference or effect in the population. It is what researchers aim to support with evidence.
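As an illustration of testing H0 against H1, here is a sketch of a two-sided permutation test for a difference in means, a swapped-in example rather than a method the article prescribes. The sample values (which could stand for, say, scores of two models over repeated runs) are made up.

```python
import random


def _mean(xs):
    return sum(xs) / len(xs)


def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    H0: the group labels are exchangeable (no difference between the groups).
    Returns an estimated p-value.
    """
    rng = random.Random(seed)
    observed = abs(_mean(a) - _mean(b))
    pooled = list(a) + list(b)
    count = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # reassign the group labels at random
        diff = abs(_mean(pooled[:len(a)]) - _mean(pooled[len(a):]))
        if diff >= observed:
            count += 1
    # Adding one to both counts avoids reporting a p-value of exactly zero
    return (count + 1) / (n_permutations + 1)


group_a = [0.81, 0.83, 0.80, 0.82, 0.84]
group_b = [0.90, 0.91, 0.89, 0.92, 0.90]
p = permutation_test(group_a, group_b)
print(f"p = {p:.4f}")
```

A small p-value (conventionally below 0.05) is taken as evidence against H0; a large one means the data are consistent with H0, not that H0 has been proven.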

Q. How does the training process use the hypothesis?

The learning algorithm uses the hypothesis as a guide to minimise the discrepancy between expected and actual outputs by adjusting its parameters during training.

Q. How is the hypothesis’s accuracy assessed?

Usually, a cost function that calculates the difference between expected and actual values is used to assess accuracy. The aim is to optimise the model so as to reduce this cost.

Q. What is Hypothesis testing?

Hypothesis testing is a statistical method for deciding whether sample data provide enough evidence to reject a null hypothesis in support of an alternative hypothesis. The hypothesis can concern the relationship between two variables in a dataset, a difference between two groups, or some other claim about a situation.

Q. What distinguishes the null hypothesis from the alternative hypothesis in machine learning experiments?

The null hypothesis (H0) assumes no significant effect, while the alternative hypothesis (H1 or Ha) contradicts H0, suggesting a meaningful impact. Statistical testing is employed to decide between these hypotheses.


Programmathically

Introduction to the hypothesis space and the bias-variance tradeoff in machine learning.

In this post, we introduce the hypothesis space and discuss how machine learning models function as hypotheses. Furthermore, we discuss the challenges encountered when choosing an appropriate machine learning hypothesis and building a model, such as overfitting, underfitting, and the bias-variance tradeoff.

The hypothesis space in machine learning is a set of all possible models that can be used to explain a data distribution given the limitations of that space. A linear hypothesis space is limited to the set of all linear models. If the data distribution follows a non-linear distribution, the linear hypothesis space might not contain a model that is appropriate for our needs.

To understand the concept of a hypothesis space, we need to learn to think of machine learning models as hypotheses.

The Machine Learning Model as Hypothesis

Generally speaking, a hypothesis is a potential explanation for an outcome or a phenomenon. In scientific inquiry, we test hypotheses to find out whether, and how well, they explain an outcome. In supervised machine learning, we are concerned with finding a function that maps from inputs to outputs.

But machine learning is inherently probabilistic. It is the art and science of deriving useful hypotheses from limited or incomplete data. Our functions are not axioms that explain the data perfectly, and for most real-life problems, we will never have all the data that exists. Accordingly, we will not find the one true function that perfectly describes the data. Instead, we find a function through training a model to map from known training input to known training output. This way, the model gradually approximates the assumed true function that describes the distribution of the data. So we treat our model as a hypothesis that needs to be tested as to how well it explains the output from a given input. We do this using a test or validation data set.

The Hypothesis Space

During the training process, we select a model from a hypothesis space that is subject to our constraints. For example, a linear hypothesis space only provides linear models. We can approximate data that follows a quadratic distribution using a model from the linear hypothesis space.

Of course, on quadratic data a linear model will generally not match the predictive performance of a quadratic model, so we can expand our hypothesis space to also include non-linear models, or at least quadratic ones.
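The effect of widening the hypothesis space can be seen by fitting data drawn from an assumed quadratic function with a model from the linear space and one from the quadratic space. This sketch uses NumPy's `polyfit`; the coefficients, noise level, and seed are arbitrary choices, and it reports training error only.

```python
import numpy as np

# Synthetic data from an assumed quadratic function plus Gaussian noise
rng = np.random.default_rng(0)
x = np.linspace(-2.0, 2.0, 50)
y = 0.5 * x**2 - x + 1.0 + rng.normal(scale=0.2, size=x.size)


def training_mse(degree):
    """Fit a polynomial of the given degree by least squares; return its training MSE."""
    coeffs = np.polyfit(x, y, degree)
    residuals = y - np.polyval(coeffs, x)
    return float(np.mean(residuals ** 2))


mse_linear = training_mse(1)     # best model in the linear hypothesis space
mse_quadratic = training_mse(2)  # best model in the quadratic hypothesis space
print(f"linear: {mse_linear:.3f}, quadratic: {mse_quadratic:.3f}")
```

Because the linear models are a subset of the quadratic ones, the quadratic fit's training error can never be higher; whether it also generalizes better has to be checked on held-out data.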

The Data Generating Process

The data generating process describes a hypothetical process subject to some assumptions that make training a machine learning model possible. We need to assume that the data points are from the same distribution but are independent of each other. When these requirements are met, we say that the data is independent and identically distributed (i.i.d.).

Independent and Identically Distributed Data

How can we assume that a model trained on a training set will perform better than random guessing on new, previously unseen data? First, the training data needs to come from the same, or at least a similar, problem domain. If you want your model to predict stock prices, you need to train it on stock price data or on data that is similarly distributed. It wouldn’t make much sense to train it on weather data. Statistically, this means the data is identically distributed. But if the data comes from the same problem, training data and test data might not be completely independent. To account for this, we need to make sure that the test data is not in any way influenced by the training data, or vice versa. If you use a subset of the training data as your test set, the test data is evidently not independent of the training data. Statistically, we say the data must be independent.
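A minimal way to keep the test set separate from the training set is to shuffle record indices once and cut them into two disjoint groups. This is a sketch under the assumption that each record is an independent example; `split_disjoint` is a hypothetical helper, not a specific library's function.

```python
import random


def split_disjoint(records, test_fraction=0.2, seed=0):
    """Shuffle indices once and cut them into disjoint train and test sets."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    indices = list(range(len(records)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(records) * test_fraction))
    test_idx = set(indices[:n_test])
    # Each index lands in exactly one of the two sets
    train = [r for i, r in enumerate(records) if i not in test_idx]
    test = [r for i, r in enumerate(records) if i in test_idx]
    return train, test


records = list(range(100))
train, test = split_disjoint(records)
print(len(train), len(test))  # 80 20
```

Disjoint indices are necessary but not sufficient: if the raw data contain duplicate records, or several records from the same source (for example, the same day of stock prices), the copies can still end up on both sides, so related records may need to be grouped before splitting.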

Overfitting and Underfitting

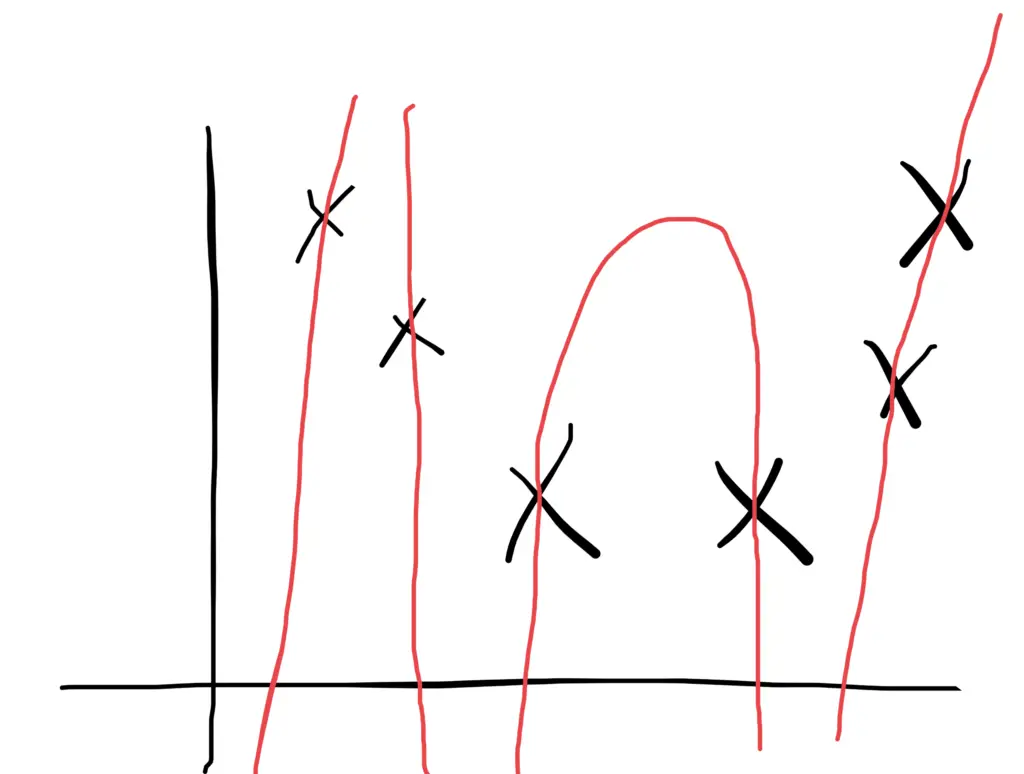

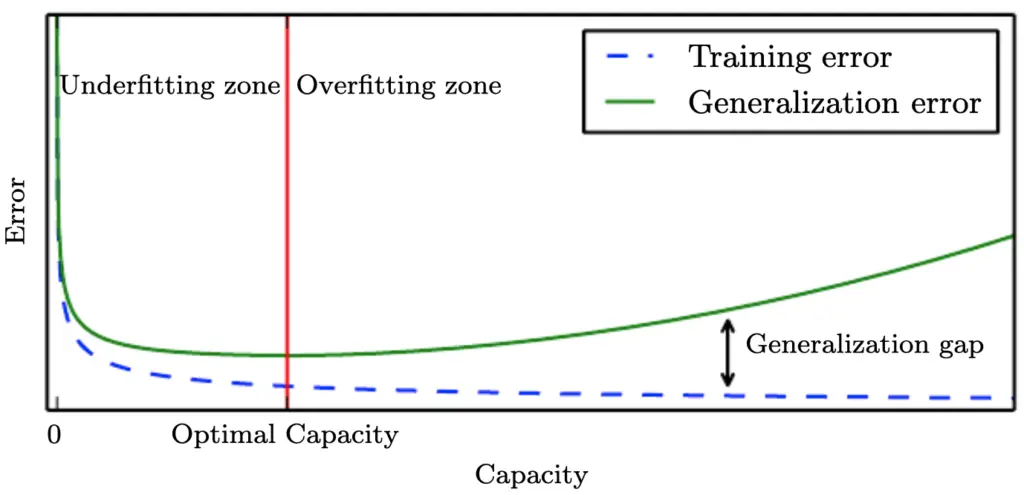

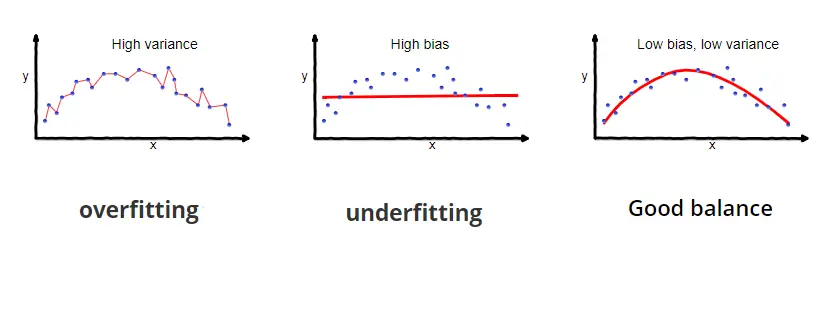

We want to select a model from the hypothesis space that explains the data sufficiently well. During training, we can make a model so complex that it perfectly fits every data point in the training dataset. But ultimately, the model should be able to predict outputs on previously unseen input data. The ability to do well when predicting outputs on previously unseen data is also known as generalization. There is an inherent conflict between those two requirements.

If we make the model so complex that it fits every point in the training data, it will pick up lots of noise and random variation specific to the training set, which might obscure the larger underlying patterns. As a result, it will be more sensitive to random fluctuations in new data and predict values that are far off. A model with this problem is said to overfit the training data and, as a result, to suffer from high variance.

To avoid the problem of overfitting, we can choose a simpler model or use regularization techniques to prevent the model from fitting the training data too closely. The model should then be less influenced by random fluctuations and instead focus on the larger underlying patterns in the data. These patterns are expected to be present in any dataset drawn from the same distribution. As a consequence, the model should generalize better to previously unseen data.
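Ridge (L2) regularization is one such technique; the article does not name a specific one. The sketch below, which assumes noisy samples of a sine curve and a degree-9 polynomial hypothesis, solves the ridge problem in closed form and records how the size of the coefficient vector shrinks as the penalty strength `lam` grows. Names, data, and penalty values are illustrative.

```python
import numpy as np


def polynomial_features(x, degree):
    """Columns x, x^2, ..., x^degree (the intercept is handled by centring)."""
    return np.column_stack([x ** d for d in range(1, degree + 1)])


def ridge_fit(X, y, lam):
    """Closed-form ridge regression; the intercept is left unpenalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    # Solve (Xc^T Xc + lam * I) w = Xc^T yc
    w = np.linalg.solve(Xc.T @ Xc + lam * np.eye(X.shape[1]), Xc.T @ yc)
    intercept = y_mean - x_mean @ w
    return w, intercept


rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 15)
y = np.sin(2 * np.pi * x) + rng.normal(scale=0.2, size=x.size)
X = polynomial_features(x, 9)

norms = {}
for lam in (1e-6, 1e-3, 1e-1):
    w, b = ridge_fit(X, y, lam)
    norms[lam] = float(np.linalg.norm(w))
    print(f"lam={lam:g}: ||w|| = {norms[lam]:.2f}")
```

Larger `lam` pulls the coefficients toward zero, giving a smoother, less flexible curve; too large a value leads to underfitting. In practice `lam` is a hyperparameter chosen on validation data.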

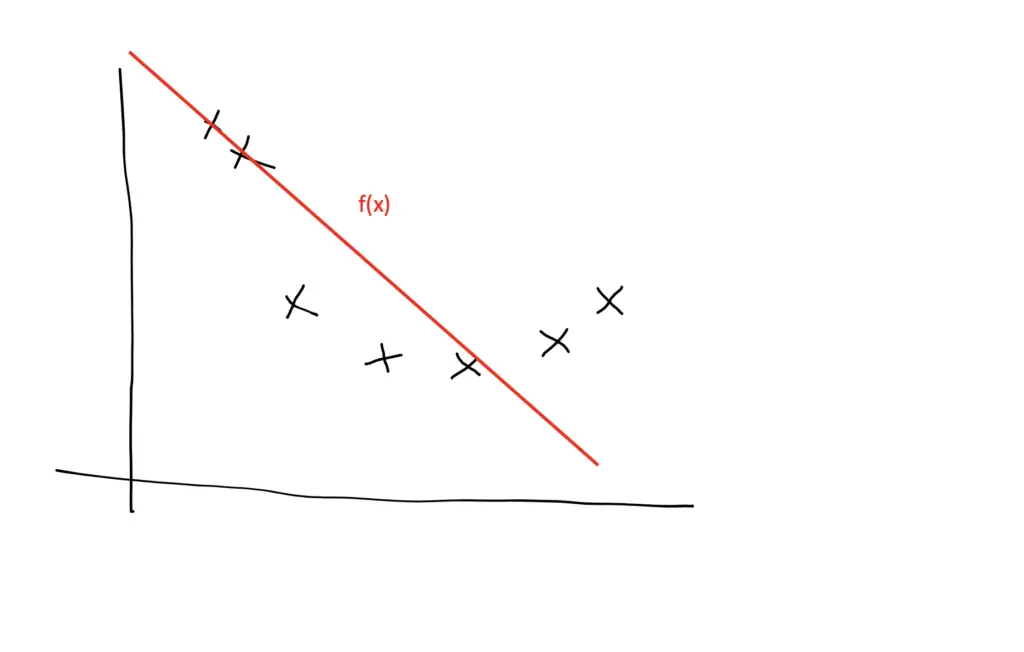

But if we go too far, the model might become too simple or too constrained by regularization to accurately capture the patterns in the data. Then the model will neither generalize well nor fit the training data well. A model that exhibits this problem is said to underfit the data and to suffer from high bias. If the model is too simple to accurately capture the patterns in the data (for example, when using a linear model to fit non-linear data), its capacity is insufficient for the task at hand.

When training neural networks, for example, we go through multiple iterations of training in which the model learns to fit an increasingly complex function to the data. Typically, the training error decreases as training progresses and the model fits the data more closely. At first, the training error decreases rapidly; in later iterations, it usually flattens out as it approaches its minimum. Your test or generalization error should initially decrease as well, though likely more slowly than the training error. As long as the generalization error is decreasing, your model is underfitting because it has not yet reached its full capacity. After a number of training iterations, the generalization error will likely reach a trough and start to increase again. Once it starts to increase, your model is overfitting, and it is time to stop training.
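Stopping at the trough of the generalization error is usually implemented as early stopping with a patience window. In this sketch, `early_stopping_epoch` and the validation-error values are hypothetical; in a real training loop you would compute the validation error after each epoch and keep a copy of the best parameters.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(val_errors: Sequence[float], patience: int = 3) -> Tuple[int, float]:
    """Return (best_epoch, best_error), stopping once the validation error
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_error = -1, float("inf")
    epochs_without_improvement = 0
    for epoch, err in enumerate(val_errors):
        if err < best_error:
            best_epoch, best_error = epoch, err
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # generalization error has stopped improving
    return best_epoch, best_error


# Hypothetical validation errors, one per epoch
val = [0.90, 0.70, 0.55, 0.48, 0.45, 0.46, 0.47, 0.50, 0.44]
print(early_stopping_epoch(val, patience=3))  # (4, 0.45)
print(early_stopping_epoch(val, patience=5))  # (8, 0.44)
```

With `patience=3`, training stops before the later dip to 0.44 is seen; a larger patience finds it at the cost of more training, so choosing the window is itself a trade-off.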

Ideally, you should stop training once your model reaches the lowest point of the generalization error. Even then, some error remains. Part of it is irreducible: the Bayes error, the lowest error any model could achieve on the problem, which we won’t be able to get rid of completely in a probabilistic setting. But if the remaining error seems too large, you might be able to reduce it further by collecting more data, tuning your model’s hyperparameters, or picking a different model altogether.

Bias Variance Tradeoff

We’ve talked about bias and variance in the previous section. Now it is time to clarify what we actually mean by these terms.

Understanding Bias and Variance

In a nutshell, bias measures whether there is a systematic deviation from the correct value in a specific direction. If we could repeat the process of constructing a model several times, and the model’s predictions always deviated in a certain direction, we would call the result biased.

Variance measures how much the results vary between model predictions. If you repeat the modeling process several times over and the results are scattered all across the board, the model exhibits high variance.

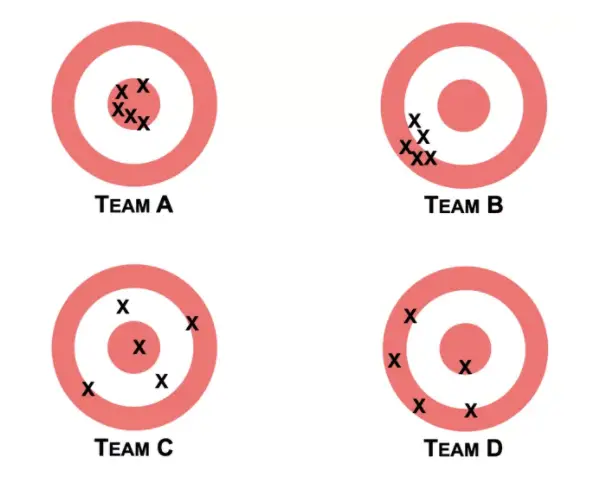

In their book “Noise,” Daniel Kahneman and his co-authors provide an intuitive example that helps explain the concepts of bias and variance. Imagine four teams at a shooting range.

Team B is biased because the shots of its team members all deviate in a certain direction from the center. Team B also exhibits low variance because the shots of all the team members are relatively concentrated in one location. Team C has the opposite problem. The shots are scattered across the target with no discernible bias in a certain direction. Team D is both biased and has high variance. Team A would be the equivalent of a good model. The shots are in the center with little bias in one direction and little variance between the team members.

Generally speaking, linear models such as linear regression exhibit high bias and low variance. Nonlinear algorithms such as decision trees are more prone to overfitting the training data and thus exhibit high variance and low bias.

A linear model applied to non-linear data would be biased toward predicting data points along a straight line instead of accommodating the curves. However, it is not as susceptible to random fluctuations in the data. A nonlinear algorithm trained on noisy data with many deviations would be better able to avoid bias but more prone to incorporating the noise into its predictions. As a result, a small deviation in the test data might lead to very different predictions.

To get our model to learn the patterns in data, we need to reduce the training error while at the same time reducing the gap between the training and the testing error. In other words, we want to reduce both bias and variance. To a certain extent, we can reduce both by picking an appropriate model, collecting enough training data, selecting appropriate training features and hyperparameter values. At some point, we have to trade-off between minimizing bias and minimizing variance. How you balance this trade-off is up to you.

The Bias Variance Decomposition

Mathematically, the total expected error can be decomposed into the squared bias, the variance, and an irreducible error term according to the following formula.
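The formula itself did not survive in the text. For reference, the standard decomposition for squared-error loss is given below, assuming Y = f(X) + ε with noise ε independent of the training data, E[ε] = 0 and Var(ε) = σ², and with expectations taken over random training sets and noise at a fixed input X. Under squared loss, σ² plays the role of the irreducible (Bayes) error.

```latex
Y = f(X) + \varepsilon, \qquad \mathbb{E}[\varepsilon] = 0, \qquad \operatorname{Var}(\varepsilon) = \sigma^2

\mathbb{E}\big[(Y - \hat{f}(X))^2\big]
  = \underbrace{\big(\mathbb{E}[\hat{f}(X)] - f(X)\big)^2}_{\text{Bias}^2}
  + \underbrace{\mathbb{E}\big[(\hat{f}(X) - \mathbb{E}[\hat{f}(X)])^2\big]}_{\text{Variance}}
  + \underbrace{\sigma^2}_{\text{irreducible error}}
```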

Remember that Bayes’ error is an error that cannot be eliminated.

Our machine learning model represents an estimating function \hat f(X) for the true data-generating function f(X), where X represents the predictors and Y the output values.

Now the mean squared error of our model is the expected value of the squared difference of the output produced by the estimating function \hat f(X) and the true output Y.

The bias is a systematic deviation from the true value. We can measure it as the squared difference between the expected value produced by the estimating function (the model) and the values produced by the true data-generating function.

Of course, we don’t know the true data-generating function, but we do know the observed outputs Y, which correspond to the values generated by f(X) plus an error term.

The variance of the model is the expected squared difference between the model’s predictions and their expected value.

Now that we have the bias and the variance, we can add them up along with the irreducible error to get the total error.
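The decomposition can be checked numerically by repeating the modelling process many times, as in the shooting-range analogy. This sketch assumes sin(x) as the true data-generating function, Gaussian noise, and polynomial models fitted with NumPy; `simulate_bias_variance` and all parameter values are hypothetical. For each trial it draws a new training set, fits the model, predicts at a fixed point x0, and compares the prediction to a fresh noisy observation there.

```python
import numpy as np


def simulate_bias_variance(degree, x0=1.0, n_train=20, noise_sd=0.3,
                           n_trials=2000, seed=0):
    """Estimate squared bias, variance and expected squared error at x0."""
    rng = np.random.default_rng(seed)
    f = np.sin  # assumed true data-generating function
    preds = np.empty(n_trials)
    sq_errors = np.empty(n_trials)
    for t in range(n_trials):
        # A fresh training set for every repetition of the modelling process
        x = rng.uniform(-np.pi, np.pi, n_train)
        y = f(x) + rng.normal(scale=noise_sd, size=n_train)
        coeffs = np.polyfit(x, y, degree)
        preds[t] = np.polyval(coeffs, x0)
        # A fresh noisy observation at x0 to measure the prediction error
        y0 = f(x0) + rng.normal(scale=noise_sd)
        sq_errors[t] = (y0 - preds[t]) ** 2
    return {
        "mse": float(sq_errors.mean()),
        "bias_sq": float((preds.mean() - f(x0)) ** 2),
        "variance": float(preds.var()),  # spread of predictions across training sets
        "noise": noise_sd ** 2,           # irreducible error
    }


for degree in (1, 3):
    r = simulate_bias_variance(degree)
    total = r["bias_sq"] + r["variance"] + r["noise"]
    print(degree, round(r["mse"], 3), round(total, 3),
          round(r["bias_sq"], 3), round(r["variance"], 3))
```

Up to Monte Carlo error, the estimated expected squared error should match bias² + variance + noise. The cubic model should show a much smaller bias at x0 than the straight line, illustrating how a richer hypothesis space shifts the balance between bias and variance.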

A machine learning model represents an approximation to the hypothesized function that generated the data. The chosen model is a hypothesis since we hypothesize that this model represents the true data generating function.

We choose the hypothesis from a hypothesis space that may be subject to certain constraints. For example, we can constrain the hypothesis space to the set of linear models.

When choosing a model, we aim to reduce the bias and the variance to prevent our model from either overfitting or underfitting the data. In the real world, we cannot completely eliminate bias and variance, and we have to trade-off between them. The total error produced by a model can be decomposed into the bias, the variance, and irreducible (Bayes) error.


Title: Hypothesis Spaces for Deep Learning

Abstract: This paper introduces a hypothesis space for deep learning that employs deep neural networks (DNNs). By treating a DNN as a function of two variables, the physical variable and parameter variable, we consider the primitive set of the DNNs for the parameter variable located in a set of the weight matrices and biases determined by a prescribed depth and widths of the DNNs. We then complete the linear span of the primitive DNN set in a weak* topology to construct a Banach space of functions of the physical variable. We prove that the Banach space so constructed is a reproducing kernel Banach space (RKBS) and construct its reproducing kernel. We investigate two learning models, regularized learning and minimum interpolation problem in the resulting RKBS, by establishing representer theorems for solutions of the learning models. The representer theorems unfold that solutions of these learning models can be expressed as linear combination of a finite number of kernel sessions determined by given data and the reproducing kernel.


- Reference work entry

- First Online: 01 January 2023


- Martin W. Bauer


The word “hypothesis” is of ancient Greek origin and composed of two parts: “hypo” for “under,” and “thesis” for “to put there”; in Latin, this was translated as “to suppose” or “supposition,” made up of “sub” [under] and “positum” [put there]. It refers to something that we put there, maybe to start with, maybe to stay with us as an installation. Hence in modern English we say “let us hypothesize, suppose,” or “let us put it that …,” and then we start the argument by developing implications and reaching conclusions. The term “hypothesis” marks a space of possibilities in several ways. Firstly, it is the uncertain starting point from which firmer conclusions might be drawn. Public reasoning examines how, from uncertain hypotheses, neither true nor false, we can nevertheless reach useful conclusions. Secondly, the hypothesis is the end point of a logical process of firming up on reality through scientific enquiry. Scientific methodology makes hypothesis testing the gold standard...



Author information

Authors and affiliations.

Department of Psychological and Behavioural Science, London School of Economics and Political Science, London, UK

Martin W. Bauer


Corresponding author

Correspondence to Martin W. Bauer.

Editor information

Editors and affiliations.

Dublin City University, Dublin, Ireland

Vlad Petre Glăveanu

Section Editor information

Department of Life Sciences, University of Trieste, Trieste, Italy

Sergio Agnoli

Marconi Institute for Creativity, Sasso Marconi, Italy


Copyright information

© 2022 Springer Nature Switzerland AG

About this entry

Cite this entry.

Bauer, M.W. (2022). Hypothesis. In: Glăveanu, V.P. (eds) The Palgrave Encyclopedia of the Possible. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-90913-0_193


DOI : https://doi.org/10.1007/978-3-030-90913-0_193

Published : 26 January 2023

Publisher Name : Palgrave Macmillan, Cham

Print ISBN : 978-3-030-90912-3

Online ISBN : 978-3-030-90913-0

eBook Packages : Behavioral Science and Psychology Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences


Fish swimming together fare better in turbulent waters

By Laura Baisas

Posted on Jun 6, 2024 2:00 PM EDT


Schooling fish, including zebrafish, rainbowfish, and opahs (moonfish), like to stick together in the big blue. Scientists believe that traveling in schools has numerous evolutionary benefits, and schooling could also benefit the fish’s general movements. Schooling fish may also have an easier time swimming through the ocean’s more turbulent waters than those that go it alone. The findings are described in a study published June 6 in the open-access journal PLOS Biology.

Do the locomotion

Locomotion, the way animals move from one place to another, is critical to several aspects of their behavior. Animals need different kinds of movement when migrating, reproducing, and feeding, and many species have adaptations that make moving around more efficient. Fish have sleek and streamlined bodies that create little resistance in the water, scales that allow for flexible movements and physical protection, and gills that extract oxygen.

“Generating movement through the environment is one of the defining features of animals. Yet the environment can be very challenging with obstacles and animals often face difficult conditions that increase the cost of movement,” study co-authors and evolutionary biologists Yangfan Zhang and George Lauder of Harvard University tell Popular Science.


Scientists in different fields have proposed several hypotheses for why the collective movements of animals are beneficial. It could enhance mating success, help them avoid predators, or make it easier for animals to communicate when finding food.

In this study, the team proposes a new hypothesis for navigating more challenging waters. Their turbulence sheltering hypothesis suggests that traveling in schools allows fish to shield each other from more disruptive water currents.

“The turbulence sheltering hypothesis has not been previously proposed so this study is the first to both propose and test it,” says Zhang. “For many years now, there have not been any substantially new ideas on why fish in particular might school and move as a collective group. The turbulence sheltering hypothesis provides a new idea for why fishes might gain an energetic advantage by moving as a school.”

Testing the turbulence sheltering hypothesis

To put this hypothesis to the test, the team ran trials with giant danios (Devario aequipinnatus). These members of the carp family regularly swim in schools and are only about one to two inches long, despite the superlative in their name. The team observed the danios swimming alone or in groups of eight in both turbulent and more steadily flowing water. High-speed cameras captured the animals’ movements as they swam, and the team simultaneously measured the fishes’ respiration rate and energy expenditure.


They found that the schooling fish spent up to 79 percent less energy when swimming in turbulent water compared to swimming alone. Schooling fish also clustered more closely together in turbulent water than in steadier streams. Solitary fish had to beat their tails more vigorously to maintain the same speed in more turbulent currents.

This activity is somewhat similar to competitive cyclists drafting off of one another during a triathlon to reduce drag.

“A significant difference between humans in a triathlon, or cyclists moving behind each other and benefiting from the reduced flow (called a drag wake) is that fish are accelerating the flow behind them,” says Zhang. “Previous research has shown that fish can even benefit from thrust wake (an increased fluid velocity). Fish move a bit more elegantly through the fluid than humans.”

‘Dramatic benefits’

The results add some support to the turbulence sheltering hypothesis, indicating that locomotion efficiency might be a driving factor behind why schooling behavior evolved.

“The most surprising part of the study is the dramatic benefits that moving in a group confers when fish swim in turbulence,” says Zhang. “It’s much, much, better to swim in a school if the environmental flows are turbulent and challenging than it is to swim as a solitary individual.” In the future, the team plans to conduct experiments to understand what specific mechanisms enable individual animals to save energy when they move within a group. This data is valuable in general for understanding fish ecology and the fundamentals of hydrodynamics. It could also potentially be applied to the design and maintenance of habitats that are meant to harbor protected fish species or to hinder more invasive ones.


PhotoGeometry

Measuring the World With Images

What does CLIP “Read”? Towards Understanding CLIP’s Text Embedding Space

TL;DR: We want to predict CLIP's zero-shot ability by only seeing its text embedding space. We made two hypotheses:

- CLIP’s zero-shot ability is related to its understanding of ornithological domain knowledge, such that the text embedding of a simple prompt (e.g., "a photo of a Heermann Gull") aligns closely with that of a detailed descriptive prompt of the same bird. (This hypothesis was not supported by our findings.)

- CLIP’s zero-shot ability is related to how well it separates one class's text embedding from the nearest text embedding of a different class. (This hypothesis showed moderate support.)

Hypothesis 1

How would a bird expert tell the difference between a California Gull and a Heermann's Gull?

A California Gull has a yellow bill with a black ring and red spot, gray back and wings with white underparts, and yellow legs, whereas a Heermann's Gull has a bright red bill with a black tip, dark gray body, and black legs.

Experts utilize domain knowledge/unique appearance characteristics to classify species.

Thus, we hypothesize that, if the multimodal training of CLIP gives it the same domain knowledge that experts have, the text embedding of "a photo of a Heermann Gull" (let's denote it as plain_prompt(Heermann Gull)) should be close (and vice versa) to the text embedding of "a photo of a bird with Gray body and wings, white head during breeding season plumage, Bright red with black tip bill, Black legs, Medium size. Note that it has a Bright red bill with a black tip, gray body, wings, and white head during the breeding season." (let's denote it as descriptive_prompt(Heermann Gull)).

For example, the cosine similarity between the two prompts of the Chuck-will's-widow is 0.44 (lowest value across the CUB dataset), and the zero-shot accuracy on this species is precisely 0.

Then, we can formulate our hypothesis as follows

We tested our hypothesis in the CUB dataset.
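The similarity score in this hypothesis is the cosine similarity between two text embeddings. Below is a minimal pure-Python sketch, assuming the embeddings of plain_prompt(c) and descriptive_prompt(c) have already been produced by CLIP's text encoder; the three-dimensional vectors are made-up placeholders, not real CLIP outputs (which have hundreds of dimensions).

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Return u.v / (|u| |v|), the cosine of the angle between two embeddings."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Hypothetical placeholder embeddings for one species c.
plain = [0.2, 0.9, 0.1]        # stands in for plain_prompt(c)
descriptive = [0.3, 0.8, 0.4]  # stands in for descriptive_prompt(c)
print(f"similarity = {cosine_similarity(plain, descriptive):.2f}")
```

CLIP's reference implementation L2-normalizes image and text features before comparing them, in which case the cosine similarity is simply the dot product of the normalized vectors.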

Qualitative and Quantitative Results

The cosine similarity between "a photo of Yellow breasted Chat" and "a photo of a bird with Olive green back, bright yellow breast plumage" is 0.82, the highest value across the whole CUB dataset. However, the zero-shot accuracy on this species is only 10% (the average accuracy is 51%).

We got the Pearson correlation coefficient and the Spearman correlation coefficient between accuracy and the text embedding similarity as follows:

- Pearson correlation coefficient = -0.14, p-value: 0.05

- Spearman correlation coefficient = -0.14, p-value: 0.05

The coefficients suggest a very weak negative correlation, which runs opposite to the positive relationship the hypothesis predicts.
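Both coefficients can be computed directly: Pearson's r measures linear association, while Spearman's rho is Pearson's r applied to ranks and therefore measures monotonic association. In practice one would typically call scipy.stats.pearsonr and scipy.stats.spearmanr, which also return p-values; the dependency-free sketch below computes only the coefficients, and its per-species numbers are invented for illustration, not the CUB results reported above.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's r: covariance over the product of standard deviations (1/n factors cancel)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    ss_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if ss_x == 0.0 or ss_y == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (ss_x * ss_y)


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson's r computed on the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical per-species values, NOT the CUB measurements.
similarity = [0.44, 0.55, 0.61, 0.70, 0.82]
accuracy = [0.00, 0.62, 0.40, 0.55, 0.10]
print(f"Pearson r    = {pearson(similarity, accuracy):.2f}")
print(f"Spearman rho = {spearman(similarity, accuracy):.2f}")
```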

We also made a line plot of accuracy vs. text embedding similarity, which shows no meaningful trend (at most, we might say the zero-shot accuracy tends toward zero when the text embedding similarity is below 0.50).

Thus, we conclude that the hypothesis is not supported.

I think there are two possible reasons:

- The lack of correlation might be due to the nature of CLIP's training data, where captions are often not descriptive.

- CLIP does not utilize domain knowledge in the same way humans do.

Hypothesis 2

We examine the species with nearly zero CLIP accuracy:

We can see that they are close in appearance. Therefore, we wonder if their text embeddings are close as well.

More formally, we want to examine the cosine similarity between each species' text embedding and the nearest text embedding of a different species, to see whether CLIP's inability to distinguish them at the semantic level may cause the classification to fail.
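The quantity described here is, for each species, the largest cosine similarity between its text embedding and the text embedding of any other species. A minimal sketch, assuming a dict that maps class names to precomputed CLIP text embeddings; the vectors below are toy placeholders chosen so that the two gulls are near-duplicates.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nearest_other_class_similarity(
    text_embs: Dict[str, Sequence[float]],
) -> Dict[str, float]:
    """Map each class to its highest cosine similarity with any *other* class."""
    if len(text_embs) < 2:
        raise ValueError("need at least two classes")
    return {
        name: max(
            cosine_similarity(emb, other_emb)
            for other_name, other_emb in text_embs.items()
            if other_name != name
        )
        for name, emb in text_embs.items()
    }


# Toy embeddings: the two gulls are close, the banana is far from both.
text_embs = {
    "California Gull": [0.9, 0.4, 0.1],
    "Heermann's Gull": [0.85, 0.45, 0.15],
    "Banana": [0.1, 0.2, 0.95],
}
for name, sim in nearest_other_class_similarity(text_embs).items():
    print(f"{name}: {sim:.3f}")
```

Under the second hypothesis, a high nearest-neighbor similarity (as for the two gulls here) would be expected to go with low zero-shot accuracy.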

We also got the Pearson correlation coefficient and the Spearman correlation coefficient:

- Pearson correlation coefficient = -0.43, p-value: 1.36e-10

- Spearman correlation coefficient = -0.43, p-value: 1.33e-10

which suggests a significant but moderate negative correlation.

The corresponding plot, however, is very noisy.

Wait, what if we smooth the line plot by averaging every 20 points into 1 point?

The trend looks clearer, although there is still an "outlier."
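The smoothing step can be sketched as block averaging: sort the (similarity, accuracy) points by similarity, then replace each run of 20 consecutive points with its mean. This is a minimal sketch under two assumptions the post does not state: the points are sorted by their x-value before grouping, and a final group with fewer than 20 points is still averaged rather than dropped.

```python
from typing import List, Sequence, Tuple


def block_average(
    xs: Sequence[float], ys: Sequence[float], block: int = 20
) -> List[Tuple[float, float]]:
    """Sort points by x, then collapse each run of `block` points to its mean (x, y)."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    if block < 1:
        raise ValueError("block must be positive")
    points = sorted(zip(xs, ys))
    smoothed = []
    for start in range(0, len(points), block):
        chunk = points[start:start + block]  # the last chunk may be shorter
        mean_x = sum(p[0] for p in chunk) / len(chunk)
        mean_y = sum(p[1] for p in chunk) / len(chunk)
        smoothed.append((mean_x, mean_y))
    return smoothed


# Hypothetical data: 45 points collapse to 3 smoothed points (20 + 20 + 5).
xs = [i / 45 for i in range(45)]
ys = [1 - x for x in xs]
print(len(block_average(xs, ys)))  # 3
```

Averaging trades resolution for readability: each plotted point summarizes 20 species, which is why a single remaining "outlier" can still stand out.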

In conclusion, I think we can't determine CLIP's zero-shot ability on a class without the information/context of the other classes. For example, CLIP completely failed to classify a California Gull vs. a Heermann's Gull, while it perfectly distinguishes, say, a banana from a Heermann's Gull.
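The point about class context can be illustrated with a toy CLIP-style classifier: zero-shot prediction picks the candidate label whose text embedding is most similar to the image embedding, so the same image embedding can be misclassified or correctly classified depending on which other labels are offered. CLIP itself applies a softmax to scaled similarities, which does not change which label scores highest. All vectors below are hypothetical placeholders chosen to mimic the gull example, not measured embeddings.

```python
import math
from typing import Dict, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def zero_shot_predict(image_emb: Sequence[float], candidates: Dict[str, Sequence[float]]) -> str:
    """Return the candidate label whose text embedding best matches the image."""
    return max(candidates, key=lambda label: cosine_similarity(image_emb, candidates[label]))


california = [0.9, 0.4, 0.1]
heermann = [0.85, 0.45, 0.15]
banana = [0.1, 0.2, 0.95]
# Hypothetical embedding of a Heermann's Gull photo that happens to sit
# slightly closer to the California Gull text embedding.
heermann_photo = [0.92, 0.38, 0.08]

print(zero_shot_predict(heermann_photo, {"California Gull": california, "Heermann's Gull": heermann}))
# -> California Gull (wrong)
print(zero_shot_predict(heermann_photo, {"Banana": banana, "Heermann's Gull": heermann}))
# -> Heermann's Gull (right)
```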

As a next step, I want to investigate:

- Are there some special local geometry properties that are related to the zero-shot ability?

- When and why does CLIP's zero-shot prediction fail? Is it because the image encoder misses detailed high-resolution features, or because the text encoder fails to encode the most "unique" semantic information? Or is it simply that we need a larger model to align the image embedding space and the text embedding space?


TESS finds intriguing world sized between Earth and Venus

Using observations by NASA's TESS (Transiting Exoplanet Survey Satellite) and many other facilities, two international teams of astronomers have discovered a planet between the sizes of Earth and Venus only 40 light-years away. Multiple factors make it a candidate well-suited for further study using NASA's James Webb Space Telescope.

TESS stares at a large swath of the sky for about a month at a time, tracking the brightness changes of tens of thousands of stars at intervals ranging from 20 seconds to 30 minutes. Capturing transits—brief, regular dimmings of stars caused by the passage of orbiting worlds—is one of the mission's primary goals.

"We've found the nearest, transiting, temperate, Earth-size world located to date," said Masayuki Kuzuhara, a project assistant professor at the Astrobiology Center in Tokyo, who co-led one research team with Akihiko Fukui, a project assistant professor at the University of Tokyo. "Although we don't yet know whether it possesses an atmosphere, we've been thinking of it as an exo-Venus, with similar size and energy received from its star as our planetary neighbor in the solar system."

The host star, called Gliese 12, is a cool red dwarf located almost 40 light-years away in the constellation Pisces. The star is only about 27% of the sun's size, with about 60% of the sun's surface temperature. The newly discovered world, named Gliese 12 b, orbits every 12.8 days and is Earth's size or slightly smaller—comparable to Venus. Assuming it has no atmosphere, the planet has a surface temperature estimated at around 107 degrees Fahrenheit (42 degrees Celsius).

Astronomers say that the diminutive sizes and masses of red dwarf stars make them ideal for finding Earth-size planets. A smaller star means greater dimming for each transit, and a lower mass means an orbiting planet can produce a greater wobble, known as "reflex motion," of the star. These effects make smaller planets easier to detect.
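The first effect can be made concrete with the standard geometric approximation that a transit dims a star by roughly (R_planet / R_star)², ignoring limb darkening and grazing geometries. The sketch below compares an Earth-size planet crossing a Sun-size star with one crossing a star 27% of the Sun's size, the figure quoted above for Gliese 12; the Earth and Sun radii are standard reference values, not numbers from the article.

```python
EARTH_RADIUS_KM = 6_371.0   # mean Earth radius (reference value)
SUN_RADIUS_KM = 695_700.0   # nominal solar radius (reference value)


def transit_depth(planet_radius_km: float, star_radius_km: float) -> float:
    """Fractional drop in brightness, approximated as (R_planet / R_star) ** 2."""
    return (planet_radius_km / star_radius_km) ** 2


sun_like = transit_depth(EARTH_RADIUS_KM, SUN_RADIUS_KM)
red_dwarf = transit_depth(EARTH_RADIUS_KM, 0.27 * SUN_RADIUS_KM)
print(f"Sun-size star:   {sun_like * 1e6:.0f} ppm")
print(f"0.27 R_sun star: {red_dwarf * 1e6:.0f} ppm")
print(f"deeper by a factor of {red_dwarf / sun_like:.1f}")
```

Because the depth scales with the inverse square of the stellar radius, shrinking the star to 27% of the Sun's size makes the same planet's transit about 1/0.27² ≈ 13.7 times deeper.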

The lower luminosities of red dwarf stars also mean their habitable zones (the range of orbital distances where liquid water could exist on a planet's surface) lie closer to them. This makes it easier to detect transiting planets within habitable zones around red dwarfs than those around stars emitting more energy.

The distance separating Gliese 12 and the new planet is just 7% of the distance between Earth and the sun. The planet receives 1.6 times more energy from its star than Earth does from the sun, and about 85% of what Venus experiences.

"Gliese 12 b represents one of the best targets to study whether Earth-size planets orbiting cool stars can retain their atmospheres, a crucial step to advance our understanding of habitability on planets across our galaxy," said Shishir Dholakia, a doctoral student at the Centre for Astrophysics at the University of Southern Queensland in Australia. He co-led a different research team with Larissa Palethorpe, a doctoral student at the University of Edinburgh and University College London.

Both teams suggest that studying Gliese 12 b may help unlock some aspects of our own solar system's evolution.

"It is thought that Earth's and Venus's first atmospheres were stripped away and then replenished by volcanic outgassing and bombardments from residual material in the solar system," Palethorpe explained. "The Earth is habitable, but Venus is not due to its complete loss of water. Because Gliese 12 b is between Earth and Venus in temperature, its atmosphere could teach us a lot about the habitability pathways planets take as they develop."

One important factor in retaining an atmosphere is the storminess of its star. Red dwarfs tend to be magnetically active, resulting in frequent, powerful X-ray flares. However, analyses by both teams conclude that Gliese 12 shows no signs of extreme behavior.

A paper led by Kuzuhara and Fukui appears in The Astrophysical Journal Letters. The Dholakia and Palethorpe findings were published in Monthly Notices of the Royal Astronomical Society on the same day.

During a transit, the host star's light passes through any atmosphere. Different gas molecules absorb different colors, so the transit provides a set of chemical fingerprints that can be detected by telescopes like Webb.

"We know of only a handful of temperate planets similar to Earth that are both close enough to us and meet other criteria needed for this kind of study, called transmission spectroscopy, using current facilities," said Michael McElwain, a research astrophysicist at NASA's Goddard Space Flight Center in Greenbelt, Maryland, and a co-author of the Kuzuhara and Fukui paper. "To better understand the diversity of atmospheres and evolutionary outcomes for these planets, we need more examples like Gliese 12 b."

TESS is a NASA Astrophysics Explorer mission managed by NASA Goddard and operated by MIT in Cambridge, Massachusetts. Additional partners include Northrop Grumman, based in Falls Church, Virginia; NASA's Ames Research Center in California's Silicon Valley; the Center for Astrophysics | Harvard & Smithsonian in Cambridge, Massachusetts; MIT's Lincoln Laboratory; and the Space Telescope Science Institute in Baltimore. More than a dozen universities, research institutes, and observatories worldwide are participants in the mission.

More information: Masayuki Kuzuhara et al, Gliese 12 b: A Temperate Earth-sized Planet at 12 pc Ideal for Atmospheric Transmission Spectroscopy, The Astrophysical Journal Letters (2024). DOI: 10.3847/2041-8213/ad3642

Shishir Dholakia et al, Gliese 12 b: A Temperate Earth-sized Planet at 12 Parsecs Discovered with TESS and CHEOPS, Monthly Notices of the Royal Astronomical Society (2024). DOI: 10.1093/mnras/stae1152

Provided by NASA's Goddard Space Flight Center


Hubble will change how it points, but NASA says 'great science' will continue

Nell Greenfieldboyce

The Hubble Space Telescope in orbit in 1999, just after a servicing mission by astronauts. (Photo: NASA)

The Hubble Space Telescope is suffering the kinds of aches and pains that can come with being old, and NASA officials say they’re shifting into a new way of pointing the telescope in order to work around a piece of hardware that’s become intolerably glitchy.

Officials also announced that, for now, they’ve decided not to pursue a plan put forward by a wealthy private astronaut who wanted to go to Hubble in a SpaceX capsule, in a mission aimed at extending the telescope’s lifespan by boosting it up into a higher orbit and perhaps even adding new technology to enhance its operations.

“Even without that reboost, we still expect to continue producing science through the rest of this decade and into the next,” Mark Clampin, director of the astrophysics division in NASA’s science mission directorate, told reporters in a teleconference on Tuesday.

Because of atmospheric drag, the bus-sized telescope is slowly drifting down towards Earth. If nothing is eventually done to raise it up, it will likely plunge down into the atmosphere and mostly burn up in the mid-2030s.


That’s one reason why NASA was so interested when Jared Isaacman, who has previously gone to orbit in a SpaceX capsule, suggested mounting a mission to Hubble as part of a series of technology demonstration spaceflights he has planned.

NASA and SpaceX jointly worked on a feasibility study to see what might be possible for Hubble. The telescope has been in orbit since 1990 and was last repaired 15 years ago, by astronauts who went up in NASA’s space shuttles, which are now museum exhibits.

NASA’s Clampin told reporters that “after exploring the current commercial capabilities, we are not going to pursue a reboost right now.”

He said the assessment of Isaacman’s proposal raised a number of considerations, including potential risks such as “premature loss of science” if Hubble accidentally got damaged.

NASA officials stressed that Hubble’s instruments are healthy and the telescope remains incredibly productive.

“We do not see Hubble as being on its last legs,” said Patrick Crouse, project manager for the Hubble Space Telescope at NASA’s Goddard Space Flight Center in Greenbelt, Maryland. “We do think it's a very capable observatory and poised to do exciting things.”

But it will have to do those exciting things with a new way of operating the system it uses for pointing at celestial objects.

That’s because officials have abandoned their efforts to use a glitchy gyroscope that has repeatedly forced the telescope to suspend science and go into “safe” mode in recent months.

Hubble’s pointing system is so precise that NASA says it is the equivalent of keeping a laser shining on a dime over 200 miles away for as long as Hubble takes a picture, up to 24 hours. This system has long relied on using three gyroscopes at a time.

Now, though, to avoid having to use the sketchy gyro, NASA says Hubble will shift into a one-gyroscope mode of operation, a contingency plan that’s been around for years.

“After completing a series of tests and carefully considering our options, we have made the decision that we will transition Hubble to operate using only one of its three remaining gyros,” Clampin said. “Operationally, we believe this is our best approach to support Hubble science through this decade and into the next.”

The scattered stars of the globular cluster NGC 6355, which resides in our Milky Way, seen in this image from the Hubble Space Telescope. (Image: ESA/Hubble & NASA, E. Noyola, R. Cohen)

Using only one healthy gyroscope, and keeping one in reserve as a backup, will let the telescope continue to return gorgeous images of the universe, with some limitations. Hubble will be less efficient, for example, and it won’t be able to track moving objects that are close to Earth, within the orbit of Mars.

But Clampin said that “most of the observations it takes will be completely unaffected by this change.”

Astronomers still clamor to use Hubble, with proposals for what to observe far exceeding the available telescope time.

The launch of the James Webb Space Telescope in 2021 did not render Hubble obsolete, as the two telescopes capture different kinds of light.


Eventually, NASA will have to decide what to do about Hubble, given that some of its large components would survive re-entering the Earth’s atmosphere. The space agency has long considered sending up some kind of mission that would control its descent and ensure that any Hubble rubble would safely fall into an ocean.

Adding such a propulsion unit would mean that NASA could also boost Hubble’s orbit, enabling it to live longer and take advantage of whatever instruments continued to work. But NASA’s Clampin suggested that there is time to consider options.

“Our latest prediction is that the earliest Hubble would re-enter the Earth's atmosphere is the mid-2030s,” he said. “So we are not going to be seeing it come down in the next couple of years.”


The case for space

The universe isn’t the only thing expanding. Governments have invested in space technology for decades, and now business is accelerating its growth. On this episode of The McKinsey Podcast, McKinsey senior partner Ryan Brukardt speaks with global editorial director Lucia Rahilly about the global space economy, where innovation in space-based technology is generating a range of public- and private-sector opportunities that could reach $1.8 trillion by 2035 (see “Space: The $1.8 trillion opportunity for global economic growth,” McKinsey, April 8, 2024), as well as the imperative to shape space responsibly.

In our second segment, author Moshik Temkin talks about his book Warriors, Rebels, and Saints: The Art of Leadership from Machiavelli to Malcolm X (PublicAffairs/Hachette Book Group, November 2023) and what history can tell us about the types of leaders we need today. This comes from our Author Talks series.

This transcript has been edited for clarity and length.

The McKinsey Podcast is cohosted by Roberta Fusaro and Lucia Rahilly.

The allure of space

Lucia Rahilly: Tell us about your background and the origins of your passion for space as a profession.

Ryan Brukardt: This might be a bit corny, but as a child, I would look up at space and see the stars. And I just got caught up in that. That’s where I started. I grew up in the ’80s with a space shuttle launching periodically here in the US, seeing those astronauts. I went to a space camp. I got a degree in physics and went into the Air Force. Space has always been a passion for me. And it’s great to be part of the thinking behind what’s going to happen in the industry and how it’s going to affect every person on Earth.

Lucia Rahilly: What has happened in recent years to make space more accessible than in the past?

Ryan Brukardt: Most people probably don’t realize just how much their daily lives interact with space. Every day, people get in cars assisted with navigation by GPS. Every day, people order food online, assisted by both communications and navigation provided by space assets. The presence of space in our lives is probably more ubiquitous than people think.

However, what has really changed in the last several years is access to space: the cost of getting there has come down dramatically. As you might imagine, putting assets into orbit can be very expensive. Through a variety of technological advancements, as well as entrepreneurship and private investment in particular, we’ve seen those costs come down.

Lucia Rahilly: We’re talking about more than space tourism here, correct?

Ryan Brukardt: That’s right. Most of the capabilities we have from space are provided by satellites and other unmanned platforms here in Earth’s orbit. Space tourism is part of the space economy, and people go to space for a variety of reasons—not just for scientific purposes but also for fun. That will increase. However, it is a smaller part of the economy today.


Spaceonomics.

Lucia Rahilly: Let’s turn to the research, starting with the definition of what we’re calling the space economy. What does that mean exactly?

Ryan Brukardt: In the past, it has been a bit nebulous. Trying to define what the space economy means was why we set out to do this report to begin with. The way we think about it, there are two large halves. One half is what we call “the backbone”: launch vehicles, rockets, and satellites and all the ground equipment that makes those things work. The other half is what we call “the reach”: the applications that use that backbone to provide goods and services to people on Earth.

Lucia Rahilly: Before we get into the specifics on applications, talk to us a bit about the capabilities that industries will be able to develop via investment in space.

Ryan Brukardt: We think about them in three buckets. The first is connectivity. We have the ability to communicate with basically anywhere in the world using satellite communications technology. We can now do that with what’s called high bandwidth and low latency. That means we can send a lot of data very quickly. And the types of use cases that allows for are things like video conferencing, et cetera.

The second bucket is mobility: understanding where you are on Earth. We already do that with cell phones, but increasingly, we’ll do that with even smaller devices to know where anything is in the world.

The third bucket is deriving data that only space-based applications can provide. You will see this in Google Maps—electro-optical imagery or imagery that allows you to see what’s happening on Earth. It used to be that a company or a government would take a picture of Earth once a day or once a week, but now it’s able to take that picture very often.

So you’re able to use not only visual data but also other types of data on what we call a high return aspect to see what changes. Think about crops, for example, and understanding how much water there is to feed those crops, what you may need to do about that, and what actions you may want to take.

Tracking mobility—for better and worse

Lucia Rahilly: Let’s pick up the mobility example. Is this improved, more accurate positioning data—or is it something fundamentally different from GPS? And what will we use it for?

Ryan Brukardt: First, this data has gotten much more accurate, which allows certain use cases to emerge. Second, the ability to detect those signals used to require big antennas and receivers. Now, you can do it with your cell phone. But pretty soon you’ll be able to do it with even a very, very small low-power sticker that you could put on an asset.

For example, now you can get in your car and take out your phone, and it will help you navigate to where you want to go. But in the future, we’ll be able to track containers on ships to understand where they are, understand when they’ll arrive somewhere else, and be very discreet in tracking them. Both the proliferation of old use cases to new geographies, as well as the appearance of use cases that didn’t exist before, are going to propel growth.

Lucia Rahilly: I live in New York City. We’ve got a constant dinner table discussion about wearables and tracking, because my kids, terrifyingly, are beginning to be at large. The research talks about personal-tracking services through wearables. What are the applications here? Is privacy a quaint anachronism in this satellite era?

Ryan Brukardt: The regulatory environment for space is still maturing. There is an idea that we need the appropriate amount of regulation to be able to manage things like privacy. What’s the air traffic control “system” for that? We talk about ways of responsibly managing bandwidth, to enable communications, whether through satellite or otherwise. This whole idea of privacy needs to come through an effective regulatory environment. That’s still catching up. The rate of technological innovation, in some ways, has outpaced that.


Lucia Rahilly: Talk to us about disaster warnings and management. What role does space-based technology play? And what’s the business opportunity here?

Ryan Brukardt: There are a couple aspects to disaster response. One is being able to give response agencies and governments real-time information about what’s happening in a very clear and concise way. The second is communications. For emergency responders and governments, the ability to effectively communicate is extremely important, and communications often go down during disasters.

Also, what information can we derive from Earth? We talked, for example, about mobile signals to understand where people are. People keep their cell phones on in an emergency. There are commercial technologies out there that can detect those mobile signals, so the government can understand where people may be in trouble.

What happens to satellites—and trash?

Lucia Rahilly: Another opportunity the research highlights is in-orbit servicing. Is the expectation that existing satellites can be upgraded and repaired or that they are replaced? And in the latter case, what happens to legacy equipment? Does it come down? Or is it up in space interminably?

Ryan Brukardt: There is tension right now in the industry between upgrading and repairing satellites on orbit and putting up new satellites to replace the old ones. There are advantages and disadvantages to both, and there’s a role for both. Going back to the regulatory question, it’s getting crowded up there, and there’s a real concern about these satellites running into each other. How do we manage that?

Lucia Rahilly: Related, whose responsibility is it to pick up the garbage accumulating in space?

Ryan Brukardt: That is a hotly debated topic. Many satellites in what we call low Earth orbit or lower orbits are a little bit self-cleaning. So they’ll degrade in orbit; they’ll burn up in our atmosphere. And then they’re just gone. There are satellites far away from Earth for which, to your question on in-orbit servicing, it makes sense in some cases to repair, upgrade, and put more fuel into them.

When you ask who’s responsible, large Western governments and other governments are struggling with that question right now. What requirements do we put on people who build and launch satellites, to ensure space is free and open for the future? And what does that mean? What cost comes with that? What are the regulatory requirements?

Global spacefaring

Lucia Rahilly: You talk in the research about backbone investments that include satellites, launchers, and services like broadcast television or GPS. Do you see investment continuing to be largely state sponsored? Or is there an obvious commercial opportunity in that area?

Ryan Brukardt: Over the last ten years, we’ve gone from launching a few times a year globally to launching every other day or so with all the spacefaring countries we have now. This is a phenomenal change due to several factors.

One is technology and technological improvements in the launch itself. Another is reusability. Some is the commercial scale and scope of certain technologies. And the other is private investment. This has been a huge difference in the last several years. Governments will always be very important and for many space companies, their anchor customer; however, private capital coming in and starting to explore more commercial use cases is a big change.

How space affects life on Earth

Lucia Rahilly: A criticism we sometimes hear about investment in space, or at least certain segments of the space economy, is that there is a genuinely urgent need to address challenges right here on Earth. Talk to us about ways space might help advance progress toward sustainability goals.

Ryan Brukardt: When it comes to, “How can we affect life on Earth? Why would we invest there versus in other places?” I’d point to some of the use cases we talked about. The ability to provide essential access and then basically full access to the global knowledge base to places in the world that are underserved or unconnected is huge.

That allows for education. It allows for an understanding of what’s happening in local communities, as well as more broadly. To understand what’s happening, and in some cases hold others accountable for what’s happening on Earth, is something that really only space and some space assets can provide.

And there are a whole bunch of new technologies allowing us to better understand things like carbon, methane, et cetera, where it was hard to pinpoint or address some of the sources. We’re able to provide information and analytics, not only for private industry but also for governments to take action.

Think about a utility in Europe that has to make a choice every day: Do they turn on some kind of power plant that relies on fossil fuels? Or do they rely on wind? With space-based assets, they can better predict how good the wind will be. And if they don’t turn on that fossil fuel generator that day, that’s carbon that doesn’t get released.

Lucia Rahilly: Related, one of the issues that makes headlines is mining in space, lunar resource extraction, which seems potentially complex ethically, geopolitically, et cetera. Any considerations for businesses to keep in mind in that area, as space becomes increasingly commercialized?

Ryan Brukardt: Our ability to extract certain minerals from either asteroids or other planetary bodies like the moon, and some of the decisions regarding responsible extraction, responsible use, and how that all works, are pretty far away.

What I do think is near-term, though, is that many more nations, many more private companies even, are going into space. And they will start to explore, for example, on the moon. The question is, how do we make sure that even in those early steps that we’re all being responsible? Are we cleaning up after ourselves and working well with others and taking care of all our natural resources, whether here or in other places?

The new space race

Lucia Rahilly: We’ve obviously seen geopolitical risk intensify across the globe in recent years. Is geopolitics a factor in the economy of space? And if so, how?

Ryan Brukardt: The short answer is yes. And it always has been. If you look back at the ’60s and the ’50s, there were a couple of countries in a space race. That generated a massive industrial base in those countries. As that race went on, it generated a lot of economic activity and a lot of technological advancement and, at the end of the day, some pretty spectacular achievements during that time period.

If you look today, we’re in another geopolitical environment where living, working, and using space for national-security purposes will continue to increase. There are maybe more players that are going to be involved in space.

The number of space agencies has grown. It used to be a very, very small number. And now you see many in the Middle East, in Asia, even appearing in Europe. So the interest in space, the use of space, and in some cases, unfortunately, the exploitation of space is going to be an integral part of many countries’ national-security constructs.

Job creation

Lucia Rahilly: Any other benefits you want to highlight that the space economy might confer?

Ryan Brukardt: One that it is really helping with in many countries is job creation—and in particular, science- and engineering-type jobs, where there weren’t any. Even if a country can’t launch astronauts from its own soil, for example, it might develop its space economy by providing high-tech components for satellites, for launch vehicles, et cetera.

We’ve been working with some clients to think through this: if they were to do that in their country, what would that mean for their own economy and for the development of their own universities and whatnot?

If you want to engineer and design high-tech components to be launched into space, you need to have engineers and scientists who understand that technology. You need to have universities that can produce those and a secondary-education system that gets people interested in it. Many countries thinking through this emerging space economy come all the way back to how they think about their education systems and where, over the next 20 years, they’re going to generate economic returns for their GDP.

Challenges to consider

Lucia Rahilly: What are the biggest challenges to the space industry at the moment?

Ryan Brukardt: Regulation is definitely one. There is tension between how much innovation versus regulation we want as a global economy. The second is tension regarding how many players are involved, whether they’re private companies or governments. We don’t necessarily want a domain to tighten. We want free and open use. How is that going to evolve?

The third is how rapidly some of these technologies will be applied to different types of business problems. And it’s on this third one where we spend a lot of our time with client work. We are working with clients in the mining industry and the agriculture industry, for example, to think through: how can they use those capabilities we talked about before—communications, mobility, and different types of analytics—to solve their own business problems?

At the end of the day, if you’re an executive working in a particular industry, it doesn’t really matter how you fix your business problems: in space, on the ground, with some other technology, or with generative AI. What they really want is better outcomes for their customers and employees.

Starting on your space strategy

Lucia Rahilly: You’ve said in the research that the space economy is at an inflection point. What do you expect to happen in terms of the dynamics of growth in coming years?

Ryan Brukardt: The short answer is we’re going to see significant growth in the space economy over the next ten years. When we set out to think about what’s going to happen in the economy, we didn’t want to use some top-down number. We wanted to think through all the use cases, both government and private-sector use cases, and build this projection from the bottom up. We think that’s important because the space economy is likely to almost triple in the next ten years, from $630.0 billion to $1.8 trillion.

And we talked about this whole concept of backbone and reach. Frankly, as people who work in the space industry, we have a hard time talking about reach. We like to talk about satellites and launch vehicles. And it is really reach that’s going to propel the space economy from that $630.0 billion to $1.8 trillion. It’s an exciting time, which is why we say we’re at this inflection point, as more and more folks on Earth benefit from space and figure out how to use those capabilities.

Lucia Rahilly: Should private-sector leaders put space on their agendas? And if so, how should they think about incorporating space into their strategies?

Ryan Brukardt: Well, space is the final frontier, right? Everybody needs to have it in their strategy. What we’ve been working on with our clients in this period of rapid change, not just in space but across many industries, is using a bit of that excitement about the capabilities provided in and through space, a bit of that technological change, and a bit of that passion to say that space can really change the world even more than it already has.

See how it can work in your business, in your government, and for your people, whether they’re employees or residents. It can and will continue to touch the lives of everybody throughout Earth. I know it sounds lofty, but we believe it is true.

The types of leaders who change the world

Roberta Fusaro: Next up, what can CEOs learn from dynamic leaders in history? Here’s author and Harvard fellow Moshik Temkin.

Moshik Temkin: We can’t understand transformative, important leaders without understanding the history that shaped them and made them, the world in which they came up, and the crises that they faced. It’s more of a question that never has a clear answer. Because for every important leader that you look at, you’ll find that that leader is forged by history and the circumstances that that leader faces. But also, really important leaders then change things, and they create the transformation that makes the world different. So they make history.

If you consider the types of leaders who are in the book, you really find three kinds. The first is people who have power. You can talk about presidents. You can talk about people who have institutional or formal power.

Then, there’s a second group: for example, the suffragists who were fighting for the right of women to vote or Martin Luther King Jr. or Malcolm X, who were fighting for the African American struggle in the 1950s and 1960s. They’re not heads of state. They don’t have formal power, but they do have some power and they find alternative sources of power to achieve the kind of change that they want.

And finally, there’s another category that is very interesting, and that’s where the opposition comes from. Because opposition means sometimes that you’re quite literally in danger. So think about, let’s say, the French resistance during World War II or people who were escaping slavery in the 19th century in the United States. These are people for whom the stakes couldn’t be higher. It’s life or death, right? And their opposition to power is quite literally putting their own lives in danger. And sometimes the lives of others are at risk. How do you lead when you have no power?

If I try to translate this to the business world, the really interesting question is this: let’s say you’re part of an organization or a corporation. You’re not at the top. You have some power. You might decide that you want to replace the person who holds power. That’s a very tricky thing to do inside an organization, because that can cost you your own career, your own success. But by creating alliances, by being strategic, by thinking about the strengths and weaknesses of the person above you—sometimes the person at the top—you can find ways to eventually realize your own goals and ambitions within that hierarchy.

The list of problems and challenges that we have is a long one. The question is, are we going to be able to find the leaders and the leadership we need? I see those leaders. They’re not known yet, and they’re not famous. But when we identify such people, we need to encourage them, we need to rally around them, and we need to help and support them. If people who are listening to this view themselves as such leaders, then they shouldn’t be shy about trying to step up and do the things that need to be done. Because ultimately for me, leadership, true leadership, is a form of public service.

Ryan Brukardt is a senior partner in McKinsey’s Miami office. Lucia Rahilly is the global editorial director of McKinsey Global Publishing and is based in the New York office, and Roberta Fusaro is an editorial director in the Boston office.

Our goal is to find a model that classifies objects as positive or negative. Applying logistic regression, we can get models of the form

$$h(\mathbf{x}) = \frac{1}{1 + e^{-(\mathbf{w}^\top \mathbf{x} + b)}} \tag{1}$$

which estimate the probability that the object at hand is positive. Each such model is called a hypothesis, while the set of all the hypotheses an algorithm can learn is known as its hypothesis space ...
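Below is a minimal Python sketch of the logistic-regression hypothesis described above, assuming two input features. The weights and bias are made-up illustrative values, not learned ones. The point is that every choice of $(\mathbf{w}, b)$ selects a different hypothesis from the same hypothesis space, and each one returns an estimated probability that the object is positive. The helper names `sigmoid` and `logistic_hypothesis` are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return e / (1.0 + e)

def logistic_hypothesis(w, b):
    """Return h(x) = sigmoid(w . x + b), the estimated P(positive | x)."""
    def h(x):
        z = sum(wi * xi for wi, xi in zip(w, x)) + b
        return sigmoid(z)
    return h

# Two hypotheses from the same space: same functional form, different parameters.
# These parameter values are illustrative assumptions, not trained weights.
h1 = logistic_hypothesis(w=[1.0, -1.0], b=0.0)
h2 = logistic_hypothesis(w=[2.0, 0.5], b=-1.0)

x = [1.0, 2.0]
for name, h in [("h1", h1), ("h2", h2)]:
    p = h(x)
    label = "positive" if p >= 0.5 else "negative"
    print(f"{name}: P(positive | x) = {p:.3f} -> {label}")
```

On this input the two hypotheses disagree: h1 gives about 0.269 (negative) and h2 about 0.881 (positive). A learning algorithm therefore needs a cost function to decide which hypothesis in the space fits the training data best.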

"There is a tradeoff between the expressiveness of a hypothesis space and the complexity of finding a good hypothesis within that space." (Artificial Intelligence: A Modern Approach, Second Edition, 2009, page 697)

A hypothesis in machine learning is a candidate model that approximates a target function for mapping examples of inputs to outputs.

Suppose the input consists of four binary features. There are then $2^4 = 16$ distinct inputs, and a hypothesis may assign either outcome (0 or 1) to each of them, so the hypothesis space contains $2^{2^4} = 65536$ functions. The ML algorithm helps us find one function, sometimes also referred to as a hypothesis, from this relatively large hypothesis space.
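To make the count concrete, the sketch below enumerates the whole hypothesis space by brute force. It assumes four binary input features, which is what the $2^4$ in the count implies. Each hypothesis is represented as a tuple of 16 output bits, one for each possible input.

```python
from itertools import product

N_FEATURES = 4  # assumption: four binary input features

# Every distinct input vector: 2**4 = 16 of them.
inputs = list(product([0, 1], repeat=N_FEATURES))

# A hypothesis assigns an output (0 or 1) to every input, so each tuple of
# 16 output bits is one hypothesis; listing all tuples lists the whole space.
hypothesis_space = list(product([0, 1], repeat=len(inputs)))

print(len(inputs))            # 16
print(len(hypothesis_space))  # 65536

# Any single hypothesis can be read as a lookup table from input to output.
h = dict(zip(inputs, hypothesis_space[12345]))
print(h[(1, 0, 1, 1)])
```

Brute-force enumeration is only feasible because the example is tiny. With $n$ binary features the space has $2^{2^n}$ members, which is why practical learners restrict and search the space instead of listing it.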

A hypothesis is a function that best describes the target in supervised machine learning. The hypothesis an algorithm comes up with depends on the data and on the restrictions and bias that we impose on the learning process. For simple linear regression, the hypothesis can be written as $y = mx + b$, where $y$ is the output (range), $x$ is the input (domain), $m$ is the slope of the line, and $b$ is the intercept.
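The next sketch shows one way a learning algorithm might pick a single hypothesis of the form $y = mx + b$: closed-form ordinary least squares on a made-up dataset. Squared error is assumed here as the cost to minimize because it is a common choice, not the only one. The function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares: the (m, b) minimizing squared error of y = m*x + b."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired (x, y) points")
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    m = sxy / sxx              # slope
    b = y_mean - m * x_mean    # intercept
    return m, b

def mean_squared_error(m, b, xs, ys):
    """Cost of the hypothesis y = m*x + b on the data."""
    return sum((m * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Toy data that happens to lie exactly on y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]

m, b = fit_line(xs, ys)
print(f"hypothesis: y = {m}x + {b}")
print("training MSE:", mean_squared_error(m, b, xs, ys))
print("prediction at x = 10:", m * 10 + b)
```

The fitted hypothesis is y = 2.0x + 1.0 with zero training error. Any other (m, b) pair, such as y = x, is also a member of the hypothesis space but has a higher cost on this data.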

The hypothesis space (H) is the set of all legal ways to divide the coordinate plane so that inputs are mapped to the proper outputs. Each individual way of dividing the plane is called a hypothesis (h). The hypothesis and the hypothesis space can be pictured as follows:

[Figure: a hypothesis (h) within the hypothesis space (H)]
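The sketch below makes that picture concrete under some simplifying assumptions. Each hypothesis is a straight line $w_1 x_1 + w_2 x_2 + b = 0$ that labels points on its positive side 1 and everything else 0. Purely for illustration, the coefficients are restricted to {-1, 0, 1} so the hypothesis space is small enough to list, and the training points are made up.

```python
from itertools import product

# Hypothetical finite hypothesis space: every line w1*x1 + w2*x2 + b = 0 with
# coefficients in {-1, 0, 1}, excluding w1 = w2 = 0 (not a line).
GRID = (-1, 0, 1)
H = [(w1, w2, b) for w1, w2, b in product(GRID, repeat=3) if (w1, w2) != (0, 0)]

def predict(h, point):
    """A hypothesis labels a point 1 if it lies on the positive side of its line."""
    w1, w2, b = h
    x1, x2 = point
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0

# Toy training data (made up): positives upper right, negatives lower left.
data = [((2, 2), 1), ((1, 3), 1), ((-1, -2), 0), ((-2, 0), 0)]

# Keep only the hypotheses (ways of dividing the plane) that fit every example.
consistent = [h for h in H if all(predict(h, x) == y for x, y in data)]
print(len(H), "hypotheses in H")
print(len(consistent), "consistent with the training data:", consistent)
```

Of the 24 candidate lines, 7 divide the plane so that every training point lands on the correct side. A learning algorithm still needs some further preference to choose one of them.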

A hypothesis space/class is the set of functions that the learning algorithm considers when picking one function to minimize some risk/loss functional. The capacity of a hypothesis space is a number or bound that quantifies the size (or richness) of the hypothesis space, i.e., the number (and type) of functions that can be represented by the hypothesis space.
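The sketch below illustrates both ideas with a deliberately simple hypothesis class: one-dimensional thresholds $h_t(x) = 1$ if $x \ge t$, else 0, with $t$ restricted to a grid spaced 0.5 apart. The learner picks the hypothesis with the lowest average 0-1 loss on a made-up sample, which is empirical risk minimization. As one rough proxy for capacity (an illustrative choice, not the only measure), the code counts how many distinct labelings of the sample the class can produce.

```python
def threshold_hypothesis(t):
    """h_t(x) = 1 if x >= t else 0: one member of the class of 1-D thresholds."""
    return lambda x: 1 if x >= t else 0

def empirical_risk(h, data):
    """Average 0-1 loss of hypothesis h on labeled (x, y) pairs."""
    return sum(h(x) != y for x, y in data) / len(data)

# Toy labeled sample (made up); the point (4.5, 0) is deliberately noisy.
data = [(1.0, 0), (2.0, 0), (3.0, 0), (4.0, 1), (4.5, 0), (5.0, 1), (6.0, 1)]

# Hypothetical finite hypothesis space: thresholds 0.0, 0.5, ..., 7.0.
thresholds = [i * 0.5 for i in range(15)]
H = {t: threshold_hypothesis(t) for t in thresholds}

# Empirical risk minimization: choose the threshold with the lowest training loss
# (min returns the first minimizer in iteration order if several tie).
best_t = min(H, key=lambda t: empirical_risk(H[t], data))
print("best threshold:", best_t, "risk:", empirical_risk(H[best_t], data))

# Capacity proxy: distinct labelings of the sample that the class can realize.
xs = sorted({x for x, _ in data})
labelings = {tuple(h(x) for x in xs) for h in H.values()}
print(len(H), "hypotheses,", len(labelings), "distinct labelings of", len(xs), "points")
```

With 7 distinct points, the 15 thresholds realize only 8 of the $2^7 = 128$ possible labelings, which reflects this class's low capacity. Because of the noisy point, no threshold reaches zero training error, and the best one misclassifies 1 of 7 examples. A richer class could realize more labelings, which is the expressiveness side of the tradeoff quoted earlier.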