MUET Writing Malaysia – Definitive Guide with Essay Example

MUET is a test that Malaysian students must take to apply for degree courses at local public universities. It assesses four skills: listening, speaking, reading and writing. The writing section tests a student’s command of written English through tasks such as report or email writing and essay writing. This blog post aims to help you score well in this section by giving an example essay topic along with guidelines on how it should be written.

What is MUET writing in Malaysia?

MUET, the Malaysian University English Test, measures the English proficiency of candidates who plan to pursue tertiary studies at universities in Malaysia.

The MUET tests four language skills: listening, speaking, reading and writing. Some people may be stronger in one or two of these than in the others, but all four are worth developing for anyone planning to pursue tertiary education.

The MUET writing test is challenging: students have to transfer information from a non-linear source, such as a chart or table, and write an essay of at least 350 words on a given topic.

MUET Essay Task 1: Report writing (40 marks)

- You are given 40 minutes to complete the task.

- Study and analyze the information.

- Describe the information or the process in a report format.

- Write between 150 and 200 words.

MUET Essay Task 2: Extended writing (60 marks)

- The essay must be at least 350 words long.

- You are given 50 minutes to complete the task.

- Your essay can be analytical, descriptive, persuasive or argumentative.

Topics for MUET Essay Writing

The following are examples of MUET essay topics. All of them deal with general-knowledge issues that any university or college student should be able to discuss.

- Why do people commit suicide for selfish reasons? Discuss.

- The most valuable thing in life is friendship. Do you agree?

- Some people prefer street food, while others prefer home-cooked food. Which do you think is better? Support your answer in 350 words.

- Education must be free for every child. Do you agree?

- The impact of social media on people

- Some people prefer to live in small towns, while others prefer to live in cities. Why? Which do you prefer?

MUET Essay Writing Format

MUET is challenging partly because it requires writing in an academic format. Many students find this format unfamiliar and difficult to apply under test conditions, so they do not score well in the writing section.

This difficulty often comes from not knowing how academic writing differs from other forms, such as creative or personal essays, which are more common outside the MUET.

1. Introduction paragraph:

Introduce your topic to the reader.

- Open with a hook: a catchy line, a question, a brief definition, etc.

- Include a thesis statement: a statement of the main ideas of the essay.

- Avoid the words “research” or “study”. Use phrases such as “based on my reading” or “in my opinion” instead, as this is an essay, not a research paper.

- Avoid “can be defined as”. Use phrases such as “In my opinion” or “I believe that” instead.

2. Body paragraph:

Write three body paragraphs. In each one:

- State the main idea, usually in the first sentence of the paragraph.

- Add supporting details and ideas, such as examples, elaborations, explanations and descriptions.

- Make sure every supporting detail relates to the main idea of that particular paragraph.

- Use linkers, connectors and transition words.

Concluding sentence of each body paragraph:

- Restate the main idea of the paragraph.

- Use words like hence, therefore, thus and as a result.

- Place it as the last sentence of the paragraph.

3. Concluding paragraph:

- Bring together the points you discussed earlier.

- Restate your thesis statement.

- The conclusion must have four sentences.

- You may make suggestions, give opinions or reaffirm your stand.

- Use phrases such as “To conclude”, “To summarize”, “In conclusion”, “Finally” and “To encapsulate”.

Tips for Writing a MUET Essay for Malaysian Students

To write a good MUET essay, state a succinct thesis in the introduction and pay attention to the transitions between one point and the next.

It is also important to know your audience when you are writing an essay for the MUET. One point that students often overlook is the need for real knowledge of the subject they are writing about.

- Transfer information

- Use good grammar

- Carefully analyze the graphical information

- Give your opinion

- Think critically. If you are aiming for Band 5 or higher, critical thinking, rigor and reflection are a must.

Use these tools to help with critical thinking:

Short-term, mid-term and long-term effects (example: alcohol)

- Short term: yellow teeth/bad breath

- Mid-term: waste of money

- Long term: health problems such as cancer

Individual, society and government (example: alcohol)

- Individual: set goals and prepare for change.

- Society: must not encourage people to consume alcohol.

- Government: must stop fundraising.

Types of MUET Essays

MUET essays are usually expository, but they can also be argumentative or persuasive.

- A descriptive essay focuses on what is being described.

- A narrative essay (a “story”) focuses on how something was done, what it felt like to do it and how it turned out.

- An informative-explanatory (inform + explain) essay treats or analyzes existing data, facts, practices, etc.

- A persuasive essay requires you to take a position on an issue and persuade the reader of your viewpoint based on evidence, such as anecdotes, facts and statistics.

- An expository essay differs from the other types in that it does not require persuasion. Instead, it simply presents evidence supporting or disproving the statement or question you have been given.

Importance of MUET essay

You will be required to take the MUET if you plan to pursue a degree at a local public university.

This requirement does not apply if you choose to study at a private or international institution instead (in that case, MUET results are only necessary when applying for scholarships).

Remember also that different universities may have different English proficiency requirements, so check what they need before sending off an application.

The grading system for MUET

When students get their MUET results, they can see the mark for each paper as well as an overall score. A student with Band 1 will have to take two extra English courses during the holidays, while a Band 3 or 4 means one extra course. If you are in Band 5 or 6, excellent! You do not need any additional lessons during the holidays.

MUET Band 6: Very good user (aggregated score: 260-300)

The candidate has a very strong command of the language and is highly fluent. They are highly expressive and use words accurately, conveying both nuance and precision where needed. Their understanding of context is very good, and they function extremely well in both spoken and written English.

MUET Band 5: Good user (aggregated score: 220-259)


MUET Writing Malaysia – Definitive Guide with Essay Example

The Malaysian University English Test (MUET) is a standardized assessment that measures the English language proficiency of Malaysian students. It is a key requirement for admission to tertiary institutions in Malaysia and is recognized by employers as a benchmark of English proficiency in the job market. The MUET Writing component carries significant weight, as it evaluates candidates’ ability to express ideas, organize thoughts and communicate effectively in writing. This guide provides an in-depth look at the MUET Writing test format, scoring criteria and tips for success, and presents a sample essay to illustrate how these guidelines are applied.


MUET Writing Test Format

The MUET Writing test consists of two tasks: Task A and Task B. Task A requires candidates to write an interpretive essay based on a set of input texts, while Task B assesses their ability to produce a discursive essay on a given topic. Each task has a specific time allocation:

Task A: Interpretive Essay

Time: 30 minutes

Word Limit: 150-200 words

Task B: Discursive Essay

Time: 60 minutes

Word Limit: 350-500 words

It is essential to manage your time wisely to complete both tasks within the allocated time frame.

Scoring Criteria

MUET Writing is scored based on four key areas:

Task Fulfillment

How well the candidate addresses the given task and demonstrates an understanding of the requirements.

Language Accuracy

The ability to use appropriate grammar, vocabulary, and sentence structures effectively.

Cohesion and Coherence

How well ideas are organized and connected, resulting in a logical and cohesive essay.

Appropriateness of Style

The candidate’s ability to adopt an appropriate tone, register, and style for the given task.

Each area is assigned a band score from 0 to 6, and the final score is the average of the four band scores. It is important to attend to every area to maximize your score.

Tips for MUET Writing Success

Understand the Task Requirements

Carefully read the instructions and ensure you fully comprehend what is expected of you in each task.

Plan and Organize

Take a few minutes to plan your essay structure before writing. Create an outline with a clear introduction, body paragraphs, and conclusion.

Time Management

Allocate appropriate time to each task. Prioritize Task B, since it carries more marks, but make sure you also complete Task A within its allotted time.

Language Use

Demonstrate a wide range of vocabulary, sentence structures, and grammar. Avoid repetitive language and aim for precision and clarity in your writing.

Cohesion and Coherence

Use linking words and phrases to connect ideas and create a smooth flow. Check the logical progression of your arguments and make sure they support your thesis statement.

Introduction and Conclusion

Craft an engaging introduction that provides context and a clear thesis statement. Conclude your essay by summarizing your main points and offering a thoughtful closing statement.

Practice Writing

Regularly practice writing essays within the word limit to enhance your speed and proficiency. Seek feedback from teachers or peers to identify areas for improvement.

Sample Essay: Task B

Topic: “Social media has had a significant impact on interpersonal communication. Discuss the advantages and disadvantages.”

Essay Example

In today’s digital age, social media platforms have transformed the way we communicate. While they offer several advantages, there are also disadvantages to consider. This essay will examine the advantages and disadvantages of social media’s effect on interpersonal communication.

Body Paragraph 1

Benefits: One of the main benefits of social media is its ability to connect people across distances. Platforms like Facebook and Twitter enable people to keep in touch with loved ones regardless of geographical barriers. Moreover, social media gives people a platform to share their views, encouraging healthy discussion and broadening perspectives.

Body Paragraph 2

Disadvantages: On the other hand, social media can adversely affect interpersonal communication. Excessive reliance on digital platforms can lead to a decline in face-to-face communication, reducing the depth of personal connections. In addition, constant exposure to curated images and idealized versions of people’s lives on platforms like Instagram can lead to insecurity and low self-esteem.

In conclusion, social media’s effect on interpersonal communication cuts both ways. While it allows us to connect and engage with others more easily, it can also hinder meaningful face-to-face interaction. It is therefore essential to strike a balance between the benefits and drawbacks of social media to ensure that it has a positive impact on our lives.

MUET Report: Assessing English Language Proficiency among Malaysian Students

The Malaysian University English Test (MUET) plays a vital role in assessing the English language proficiency of Malaysian students. This report aims to analyze MUET performance among students in Malaysia and to provide recommendations for enhancing English language proficiency. By examining test scores, identifying trends, exploring challenges and proposing strategies, it seeks to contribute to the improvement of English language education in Malaysia.

MUET Speaking Topics

Here are some MUET Speaking topics that are commonly used in the test:

- Technology and its Impact on Society

- Environmental Issues and Conservation

- Education System Reforms

- Youth Empowerment and Volunteerism

- Globalization and Its Effects on Culture

- Health and Fitness in Modern Society

- Social Media and Its Influence on Relationships

- Economic Development and Income Inequality

- Tourism and Its Impact on Local Communities

- The Role of Government in Promoting Entrepreneurship

These topics cover a range of contemporary issues that require candidates to offer their viewpoints, take part in discussions and present arguments. It is essential to stay up to date on current affairs and to have a well-rounded understanding of each topic.

MUET Preparation and Essay Writing

If you’re looking to hire experts for both MUET test preparation and essay writing assistance, here are some steps you can follow:

Determine Your Needs

Assess whether you need assistance with overall test preparation or specific sections such as writing, speaking, listening, or reading. Additionally, consider if you require help specifically with essay writing or need a comprehensive approach.

Research Online Tutoring Platforms

Explore reputable online tutoring platforms that offer expert assistance in language proficiency exams like MUET. Platforms like Preply, iTalki, Verbling, and Tutor.com provide a wide range of qualified tutors who specialize in language exams. You can filter tutors based on their expertise in essay writing and test preparation.

Check Tutors’ Profiles

Review the profiles of potential tutors. Look for individuals with experience teaching or tutoring MUET and a demonstrated proficiency in essay writing. Pay attention to their qualifications, educational background, teaching style, and reviews from previous students to assess their suitability.

Schedule Consultations

Arrange consultations or trial sessions with shortlisted tutors. Use this opportunity to discuss your needs, goals, and expectations. Inquire about their approach to essay writing, test preparation strategies, and their ability to tailor their guidance to your specific requirements.

Seek Writing Assistance

If you specifically require essay writing support, consider engaging an expert academic writer or editor. Platforms like Upwork and Freelancer, as well as essay-writing services, provide access to professional writers with expertise in various subjects, including essay writing. Review their profiles, portfolios and client feedback to confirm their proficiency.

Discuss Expectations and Goals

Clearly communicate your expectations, goals, and areas of focus to the tutors or writers. Make sure they understand your requirements for MUET test preparation and essay writing. Provide any specific essay prompts or topics you need assistance with, and discuss the desired outcome for your essays.

Collaboration and Practice

Collaborate with the tutors or writers to create a study plan and a schedule that incorporates both test preparation and essay writing. Regularly practice writing essays under their guidance and utilize their feedback to improve your writing skills. Additionally, work with them to develop effective strategies for the different sections of the MUET test.

MUET Group Discussion

MUET (Malaysian University English Test) includes a Group Discussion component, which assesses your ability to engage in a discussion and express your ideas effectively in English. Here are some tips to excel in the MUET Group Discussion:

Understand the Format:

Familiarize yourself with the format and requirements of the MUET Group Discussion. Typically, a group of candidates will be given a topic and asked to discuss it for a specific duration. You will be assessed based on your ability to communicate fluently, express opinions, listen to others, and collaborate in a group setting.

Improve English Language Skills

Improve your English by reading widely, practicing speaking and listening, and expanding your vocabulary. Focus on grammar, sentence construction and pronunciation.

Practice Active Listening

During the discussion, actively listen to what others are saying. Show interest, maintain eye contact, and respond appropriately. Engage in the conversation by building upon others’ points, asking relevant questions, and seeking clarification when needed.

Respect Others’ Views

Show respect for the opinions of other participants, even if you disagree. Be open-minded and consider different perspectives. Practice active and respectful listening by acknowledging others’ points and responding constructively.

Manage Time Effectively

Time management is crucial in Group Discussions. Keep track of the time allotted for the discussion and ensure that all participants have a chance to contribute. If the discussion gets off track, steer it back to the main topic and encourage others to stay focused.

Practice with Mock Group Discussions

Organize practice sessions with classmates or friends. Choose relevant topics, set time limits, and simulate the actual MUET Group Discussion. Practice maintaining a balance between listening and speaking, and work on improving your communication skills within a group setting.

The MUET Writing component is an important part of the Malaysian University English Test that evaluates candidates’ ability to express ideas and communicate effectively in written form. This definitive guide has provided an overview of the test format, scoring criteria, and valuable tips for success. Additionally, a sample essay exemplified the application of these guidelines. To excel in MUET Writing, it is crucial to understand the task requirements, plan and organize your essays, manage time effectively, utilize language accurately, ensure cohesion and coherence, and practice regularly. By following these recommendations, you can enhance your performance and achieve success in the MUET Writing test.


MUET essay writing tips

To achieve success in the MUET writing test you need to understand the tasks, questions and answer techniques. We take you through how to approach these for the best results with top tips.

The best preparation for any test is practice, and the same is true of the MUET writing test, which has two questions designed to test your written English. It’s a good idea to practice both of these questions and the different components they are made up of. We guide you through the kinds of questions you can expect in the MUET writing test, how to answer them and some tips for this section of the exam.


MUET essay writing question one example

For the MUET writing test question one, you are required to write a reply to a letter or email. Your reply must be at least 100 words long and should make use of all of the information in the email or letter provided. Let’s take a closer look at an example:

Your classmate, Elliott, was unable to visit your English teacher who was injured because he was ill. Read the email from him asking about the visit that he had missed.

From: Elliott

Subject: How is Mrs. Alina doing?

How are you? How is Mrs. Alina doing since her injury? I am really sad that I could not join all of you to visit her as I was down with a fever and cough.

I heard that Mrs. Alina hurt her arm quite seriously. Am I right? How did it happen?

Please update me on her situation and when she will be back at school.

I hope I can visit her soon. Would you like to come with me then?

Write soon.

When approaching this task you can break down the email into distinct sections so that you can reply effectively. You can use the paragraph structure for this purpose. For example:

Let Elliott know how you are and explain what the current situation with Mrs. Alina is.

Describe what happened to Mrs. Alina and how severe the accident was.

Give an update on when Mrs. Alina will be returning to school.

Tell Elliott whether you would like to accompany him on a visit to Mrs. Alina.

Here is a basic model answer reply for question one:

To: Elliott

RE: Update on Mrs. Alina

I am well, thank you. I hope you have recovered from your fever and cough. Mrs. Alina was in the emergency ward for a few hours and was discharged on the same day. She has been resting at home since then.

Yes, you are right. Mrs. Alina injured her arm quite seriously. She was cooking in the kitchen when she slipped and fell. It was quite a bad fall, and her husband had to take her to hospital.

She will be on bed rest for about two weeks and should be back in school immediately after. She hopes not to be away for too long as our exams are drawing near.

Yes, definitely! I will visit her with you when you are ready. That would really cheer her up.

In the meantime, take care and see you soon.

Tips for MUET essay writing question one

Our top tips for success in the MUET writing test question one are:

Stick to the time allocated for this question, which is about 25 minutes.

Read the instructions and questions carefully.

Make notes and highlight keywords and phrases.

Practice writing in a shorter format and more concisely.

Practice identifying questions and ideas.

Know the level of English required for good marks. In this case, the target is a CEFR level ranging from A2 (elementary) to C1 (advanced).

MUET essay writing question two example

For the MUET writing test question two, you are required to write a short essay based on a statement or question that is provided. This should be 250 words or more and you need to be able to express an opinion, form an argument and provide justification. Your MUET essay should relate directly to the statement or question you have been asked, so make sure to stay on topic.

We can now turn our attention to the type of question you may come across for the MUET writing test part two.

You attended a talk by a youth speaker. During the talk, the speaker suggests that to reduce crime in the long term, courts should significantly reduce prison sentences and focus on education and community work to help criminals not re-offend. Prison is not a cure for crime.

To what extent do you agree or disagree with the opinion?

Remember that your MUET essay should have an introduction, followed by three points, each a paragraph long, and then a conclusion. Let’s take a look at a possible introductory paragraph for this task.

Everyone thinks that reducing our crime rate is very important. Some people think that reducing prison sentences and replacing this with education and community work is a solution instead. On the other hand, there are those who believe that keeping prison sentences long is still the most suitable way to deal with crime. In my opinion, the method chosen would depend on the type and seriousness of the crime committed.

Ensure that when you are writing your subsequent paragraphs you make your point, give an explanation and then an example to back this up. Let’s look at a possible example:

Some think that an individual with a higher level of knowledge and education is less likely to commit a crime. It has been proven that crime occurs less often in countries with higher levels of education. In addition, community service is another great way of preventing reoffending. For example, in Singapore, when offenders are caught littering, they are sentenced to 100 hours of community service picking up litter.

Tips for MUET essay writing question two

Read the question carefully to see what type of essay you need to write: discursive, argumentative or descriptive.

Test yourself under pressure by sticking to a time limit. In the exam, you will only have 50 minutes for this task.

Make vocabulary lists while studying so that you can use these words effectively when writing.

Try to engage in English discussions and talks. You can even watch discussions online. This can help you learn to identify opinions and arguments.

Sketch out a plan for your MUET essay with the main ideas and structure. This will help when you write.



MUET Writing 101: How to Tackle Email Writing Question

Addressing an email appropriately can pose a challenge for students. The question arises: should one merely answer the provided questions, or is it advisable to include additional information? What are your thoughts on this dilemma?

When tasked with writing a minimum of 100 words, what should we include? What do we aim to communicate?

As a starting point, what steps can we take?

To begin with, let’s establish the foundational steps…

Step 1: Familiarize yourself with the task.

How? Examine the email (stimulus) and pinpoint the following:

a. Recognize the keyword(s) in the question or instruction.

b. Who is the sender of the email?

c. Who is the intended recipient of the email?

d. What is the primary subject matter of the email?

Formal or casual language?

In essence, the keywords and language style in the stimulus will indicate the register students should adopt when composing their email replies.

STOP RIGHT THERE! Need to know how to reply to a letter for the MUET? Click https://ezuddin.com/2024/05/mastering-the-art-of-reply-why-reply-letters-matter-in-the-muet-exam/

OK. Moving on…

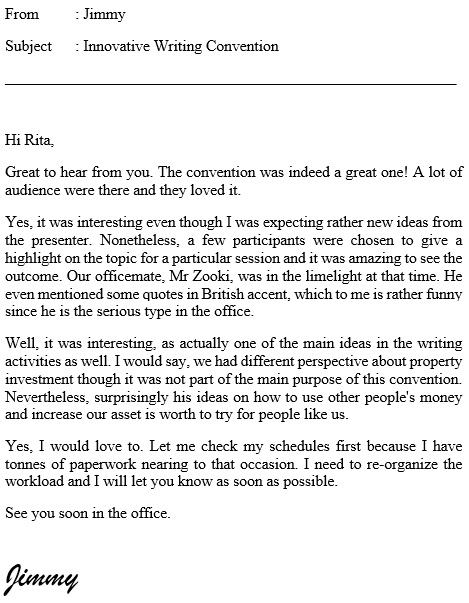

Here is an example of an email question.

Your colleague, Rita, was absent from work because she had to attend her sister’s wedding. Read the email from her asking about the Innovative Writing Convention that she missed.

Using all the notes given, write a reply of at least 100 words in an appropriate style.

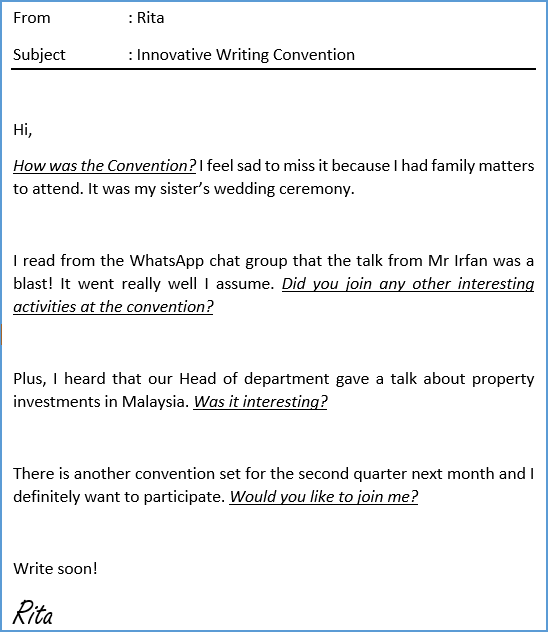

Step 2: Incorporate all the notes, keywords, and details from the email into your response.

Now, carefully review the instructions and the email to identify the specific notes and keywords to utilize when drafting your email reply. Highlight or underline them as you read through each paragraph.

Your colleague, Rita, was absent from work because she had to attend her sister’s wedding. Read the email from her asking about the Innovative Writing Convention that she missed.

What is our next course of action?

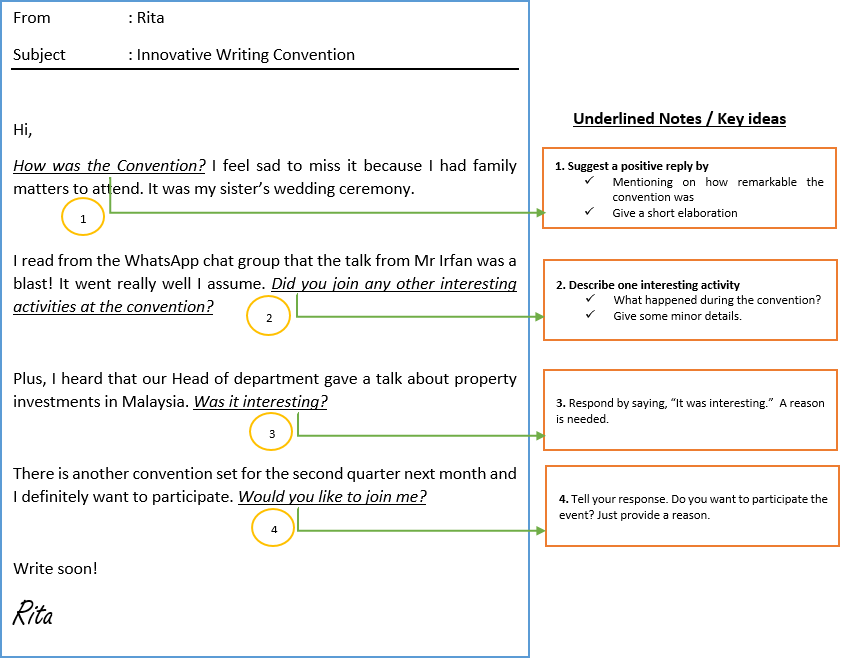

Perhaps this will aid in clarifying the writing process.

- After identifying the notes or key ideas, observe the possible responses (refer to nos. 1, 2, 3 and 4).

“Using all the notes given, write a reply of at least 100 words in an appropriate style.”

Based on the information above, here’s what you should consider:

- Begin your email with a proper salutation, such as “Hi John” or “Hello buddy.”

- Start by identifying the key points or “notes” within the instructions and each paragraph. You might underline these for clarity.

- Once you’ve highlighted the notes, craft a response for each paragraph, ensuring that you address the specific points mentioned. It’s crucial to include relevant keywords from the instructions and elaborate on them in your response.

- When composing your replies, make sure to “AGREE” with any questions posed. For instance, for point no. 2, if asked “Did you join…?”, your response should affirmatively state “Yes” before providing further details. Similarly, for point no. 3, “Was it interesting?”, respond positively and expand upon your answer.

- Given the email format, you have the flexibility to use an informal or casual language style in your responses.

Step 3: Write the response.

As always, when we are set to write the response, we should write in paragraphs. We can refer to each of the notes above to write each paragraph.

Sample responses

Note 1 (for paragraph 1)

The convention was indeed a great one! There was a large audience and they loved it.

Note 2 (for paragraph 2)

Yes, it was interesting, even though I was expecting newer ideas from the presenter. Nonetheless, a few participants were chosen to present the highlights of the topic for a particular session, and it was amazing to see the outcome. Our officemate, Mr Zooki, was in the limelight at that time. He even recited some quotes in a British accent, which I found rather funny since he is the serious type in the office.

Note 3 (for paragraph 3)

Well, it was interesting and was actually one of the main ideas in the writing activities as well. I would say we gained a different perspective on property investment, though it was not part of the main purpose of this convention. Surprisingly, his ideas on how to use other people’s money to increase your assets are worth trying for people like us.

Note 4 (for paragraph 4)

Yes, I would love to. Let me check my schedule first because I have tonnes of paperwork due around that time. I need to reorganize my workload, and I will let you know as soon as possible.

So, the email would look like this.

Alright, that’s all for now. Here are some tips to keep in mind:

- When responding to inquiries about a previous event, ensure your reply includes precise details such as the venue, date, organizer, and other relevant information mentioned in the email.

- If you need to describe the event, employ appropriate adjectives and sensory details. Consider what you heard, saw, or felt during the event to enhance your description.

- Use suitable expressions to convey various purposes, such as expressing preferences, reactions, disagreements, or declining requests.

- Aim to provide detailed information by elaborating on your main points. This “advanced mode” of communication can offer a more comprehensive understanding of your response.

OK. Good luck and adieu!

Again, need to know how to reply to a letter? Click https://ezuddin.com/2024/05/mastering-the-art-of-reply-why-reply-letters-matter-in-the-muet-exam/

Oh, BTW, if you need more samples and exercises, you may get this handy book by clicking the image below.

12 Replies to “MUET Writing 101: How to Tackle Email Writing Question”

Why aren’t you coming back? It’s 2021.

I am back 🙂

Thank youuuu ,I really love ittt

Hi Ezuddin, Thanks for sharing your notes here. I am preparing my son to take MUET this year (he just finished his form 5/SPM) and I find your site to be very helpful. I can’t thank you enough. Permit me to use your samples in slides, acknowledging you and the source, of course. Thanks once again! (From Veronica: A teacher-mom)

Hi madam, sure. Go ahead.

TQVM for sharing the tips on how to write an email. May I use your notes for my students?

no biggie. Go ahead.

Hi, I have a question. When writing the response, do we also need to write the “From: Jimmy” and “Subject: Innovative” lines, or can we go straight to the first paragraph?

Follow the format. And the answer is yes.


A blogspot to offer ideas & support especially for the writing of MUET Task 2 essay (Extended Writing); & also for the teaching of English for MUET (if the mood appears!)

Sunday 17 July 2022

MUET Session 2/2022 - 800/4 Writing Task 2 (Extended Writing) - "Lack of appreciation for our culture..." - my take

REMINDER:

PLEASE DO NOT PLAGIARISE THIS ESSAY.

You attended a talk at school in conjunction with Malaysia's Independence Day. During the talk, the guest speaker made the following statement:

"The lack of appreciation for our culture has caused Malaysians to lose their true identity.”

Write an essay expressing your opinion on the statement. Write at least 250 words. [60]

--&&&---

Culture is one of the most important aspects of our lives. Culture is said to have the ability to help mould our true identities. Yet, is it true that the lack of appreciation for our culture has made Malaysians lose their true identity? I agree with the statement because the Malaysian multiracial culture is diminishing, Malaysians are embracing an international culture, and a true Malaysian identity has never existed.

First and foremost, the Malaysian multiracial culture is diminishing, and at a fast rate too. Malaysia has been known as a multiracial and multicultural country for centuries. Its people – namely the Malays, Chinese, Indians and several other indigenous races like the Ibans – have been sharing and co-existing in this small, prosperous country for so long. All of these people have been mixing with each other, respecting one another’s beliefs and celebrating everyone’s different festivals, perhaps since Independence was declared in 1957. This is the true multicultural and multiracial Malaysia. Yet, with the advancement of technology, this culture of Malaysia is diminishing at a very fast rate. Technological advancement, which led to the creation of non-face-to-face communication applications such as WhatsApp, Skype, FaceTime, video calls and emails, has brought about fewer connections and less communication in the real world, with real people. Many of us are opting for this kind of communication and interaction due to its simplicity and time-saving traits. Consequently, there is less mixing, socialising and interacting with the general Malaysian public of different backgrounds, ethnicities, cultures and beliefs, causing a lack of understanding, appreciation and respect for other Malaysians and, ultimately, for the multicultural, multiracial Malaysia. Many of us now tend to respect only our own race, culture and beliefs, and could be said to have developed a dislike and hatred towards cultures and beliefs that are different from ours. As a result, the appreciation for our multicultural and multiracial Malaysian culture is slowly being eroded, causing each Malaysian to slowly lose connection with his own country while becoming more connected to his historical country of origin. For example, some Malays of Middle Eastern descent are now looking back to the Middle East for a cultural connection. Some have even started giving their children Middle Eastern names, using more Arabic words at home, and reviving, cooking and serving recipes from “back home”. They often feel proud of, and boast about, their heritage instead of their Malaysian identity. In this way, Malaysians could lose their identity as citizens of Malaysia, feeling more connected to their original countries, history, and racial and cultural backgrounds, and hence would prefer this identity to being Malaysian. Thus, it appears true to say that the lack of appreciation for our culture, due to the greater value placed on one’s own ancestral culture, has made Malaysians lose their true identity.

Next, Malaysians are embracing an international identity. The world is now a global village, thanks to the process of globalisation. Far and remote areas are joining the united “big world” to become a complete federation of the countries of the world. Perhaps, in the years to come, no country will be known by its original name, as the world strengthens its unity and comes to be known only by a single name representing all. As this wave of "global identity" extends its fingers to every nook and corner of the world, Malaysia is not spared. Many of our young people are embracing the international look, style, lifestyle and even names. Hearing Malaysians addressed by Western or Korean names is no longer strange. Businesses are also metamorphosing into “global” icons or images, making us feel as if we are in a foreign business district rather than in Malaysia. Names that resemble international places, such as Fahrenheit88, Design Village, Crescent Dew or Starhill Gallery, are now common in Malaysia. Therefore, as Malaysians look outwards to foreign countries in their vision to be part of the global village, the absorption of foreign cultures and customs is inevitable. More and more Malaysians are claiming that they are part of the world community and thus are more appreciative of the world or global culture than of their original country. We can see this when Malaysians are quicker to celebrate international festivals like Bon Odori or Songkran, joining in those festivities rather than the Thaipusam congregation or the Penang International Boat Race. Some Malaysians are even integrating this new culture into their personal lives by opting for “international” names like Mikhail, Anastasia or Aidan – names that neither are nor sound Malaysian. As they embrace the identity of world citizens, celebrating and appreciating perhaps all cultures and their festivals, their appreciation of our own culture inevitably diminishes; and as they label or claim themselves more as citizens of the world or people of the global village, they lose their identity as Malaysians. It is in this way that the given statement is found to be true, and so it could be said that the Malaysian identity is lost due to the lack of appreciation for our culture and the growing appreciation for world culture.

Finally, a true Malaysian identity has never existed. Being multiracial and multicultural since its inception in 1957, and after its rebirth in 1963, Malaysia has always been regarded as represented by its three main races, namely the Malays, Chinese and Indians. There simply could not be one race representing this country without the other two. As such, there has never been one single identity for the citizens of Malaysia. Whenever the name “Malaysia” is spoken, whether on a local or international platform, these three races or ethnicities automatically come to mind. In terms of food and festivals, it is those of these three races that are highlighted, promoted and accepted as representative of Malaysia, like Hari Raya, Chinese New Year and Deepavali, and food such as Nasi Lemak, Mooncakes and Thosai. There has never been one single cultural festival or one common traditional food for all Malaysians to associate with. Due to this emphasis on the three main races of Malaysia, their food and festivals, one true single identity of Malaysians has never existed. As a result, citizens of Malaysia frequently celebrate these festivals and appreciate the corresponding cultures separately according to their ethnicities, religious beliefs and connections to the respective racial groups. In other words, as an example, only the Muslims and Malays would celebrate Hari Raya with their extended or nuclear families, and likewise for the other races. Malaysia has no festival which every Malaysian celebrates together as a way to relish its culture and heritage, like Thanksgiving Day in the USA. Given this situation, the Malaysian identity could be said to have always been “fragmented”. Malaysians would mainly associate themselves with these three main races, or with their own racial and cultural background should they not belong to one of these groups. When there is no single identity of Malaysians, there is also no single culture of Malaysia. Almost everyone in Malaysia is just celebrating their own cultural heritage but renaming it as Malaysian culture. Ultimately, the actual Malaysian culture, which consists of that of the three main races, lacks appreciation, and Malaysians are left without one single identity that everyone could feel they belong to. Thus, as explained here, it is undeniable that Malaysians have lost their true identity due to the lack of appreciation for our culture, given the non-existence of a single true identity for Malaysians and, of course, of a single culture for Malaysia to be appreciated.

In a nutshell, the claim that the lack of appreciation for our culture has caused Malaysians to lose their true identity has been shown to be true for several reasons: Malaysia’s multiracial culture is diminishing fast, Malaysians are embracing the international culture and identity, and there has never been one single culture and identity for Malaysia and Malaysians. It is hoped that, despite their differences, Malaysians will always find one single cause that unites all of them regardless of their cultural and historical heritage, the importance of which was implied by the late Kofi Annan: “We may have different religions, different languages, different coloured skin, but we belong to one human race.”

---&&&---

A Personal Comment: I find this question rather tough, even as a MUET teacher myself. I wrote this essay in about 3 days. I wonder how the candidates could or would do it in just 50 minutes. Furthermore, this question requires vast knowledge of the aspects of "identity", "Malaysia and Malaysian identity" and also those of other countries in order to provide comparisons, etc. To candidates, I hope you realise the need to be knowledgeable in order to keep up with the difficulty level of this task - MuetMzsa

2 comments:


Hi, is it OK if I use the article for an annotation assignment?

MUET my way...

Sunday, July 24, 2022

- Hello New MUET Batch of 2022/23 Students

Hi to both teachers and students of MUET!

As a new academic year dawns, we try to brush up and figure out what we should be doing, whether it is to become a better teacher or a better student.

Here's a to-do list.

A) Students:

1. Buy my MUETMYWAY Textbook published by Pelangi.

2. Complete the grammar module in the first chapter of the book. The first part analyses your grammar competency and fossilised errors. Then it allows you to fix your errors step by step. The last section lets you see how the formulas are used in actual writing and how to edit by yourselves. I guarantee you that this is the most important skill that will push you up a band or two. It is what differentiates a Band 3 from Bands 4 and 5. The new book will have a section on sentence-writing skills. I put so much effort into this because writing grammatically good sentences brings home the message accurately and succinctly.

3. Next, use various highlighters and highlight all the phrases in the vocab section that you have never heard of before and will consciously use in the near future for all 4 skills. To build your vocab, you need more low-frequency words, first at word level, then at phrase, sentence and paragraph level. Add proverbs and sayings, and tune up your critical thinking. The new book will have additional vocab, including current issues and main concepts that F6 students need to master in order to write or speak with flair. You need to cross-reference with a dictionary, Google or Wikipedia. For example, if you don't know what euthanasia is, or if you cannot give a single reason why prostitution should be legalised, then you definitely have a lot of surfing and reading up to do. Remember, the more you read, the more you internalise good-quality vocab. The revised textbook (2022 version) will also have a list of the most commonly mispronounced words. Use Google and click on the audio icon to hear the correct pronunciation. Do you know the real pronunciation of 'tuition'? I bet you will get it 99.9% wrong.

4. Go through all the notes in the 4 chapters on Listening, Speaking, Reading and Writing. I have put my heart and soul into all the notes, especially in the Speaking and Writing chapters, where students can learn how to construct a great academic essay. Learn about hooks, stands, thesis statements, topic sentences, concluding sentences and the 3Rs in conclusions: Recap, Reiterate, Recommend.

5. Try out the Model Test twice. The first time, do it exam style. Time yourself for your speaking responses: 2 minutes for the individual speech. Cross-check your points with the suggested answers. Try out the group discussion with some friends, if they don't mind helping. Use the QR codes to download the listening tracks and answer within the time frame given. Time yourself for the Reading and Writing sections too. Check your answers. Then, after a month, do the same test all over again and see if you get higher marks. Logically, it should be higher, of course!

6. You can then try out the Model Test Book I published, also with Pelangi. Same style: the 1st time exam style, the 2nd time with reference to dictionaries, Google, etc. Actually, there are two books: the 1st set was published in 2020/21 and the new one is out in 2022. In total, you have 8 model tests to drill yourselves with.

7. You can then send me sample essays for me to mark, or sample videos of individual presentations that you self-recorded. I charge RM10 per essay/video. Email them to [email protected]. You can BOOST me AFTER I send you my feedback. :)

B) Teachers

1. Follow steps 1-7 from the point of view of a teacher. I have laid out, step by step, everything that needs to be covered in the textbook. Fix their grammar first, then fix their vocab, move on to fix each of the 4 skills, then drill with model tests. You may email me your queries on how to be a better teacher. You may also look for the MUET Teachers Telegram group, but you will need to show the admins your timetable to prove you really are a MUET teacher. Once in the group, you will have access to lively discussions and the official MUET Repository. There you will find past exam papers, more worksheets, important documents like official MPM MUET downloadable materials, suggested Schemes of Work, etc. This is the best MUET support group in Malaysia.

2. Read up on the last chapter of the MUETMYWAY textbook. I wrote a whole chapter outlining teaching skills and ideas; all the techniques are beneficial to both new and seasoned teachers. Seasoned teachers may not be receptive to my 'spin' on teaching MUET, as it involves a lot of Project Based Learning and hands-on experiential learning: a bottom-up approach is better than a top-down one. Again, if you have questions and need teaching guidance, feel free to email me at [email protected], and we can also chat on WhatsApp.

I never meant to fall into the business of selling books. The opportunity presented itself. Maybe this is the best way God has planned for me to share my knowledge and help my brethren. Together we are brothers and sisters in the pursuit of knowledge and lifelong learning. Huiyoooo!

Friday, July 15, 2022

- Suggested Answers MUET Session 2, 2022

Bah. I'm sure you're dying to know. Without further ado, here are my answers. Feel free to discuss politely in the comments. Rude ones will be deleted. You may debate till the cows come home, but I will stick to these answers.

MUET S2 2022

Remember these are NOT official MPM answers.

Post what you got in the comments. Here is a prediction of your band:

X times 7.5, where X is your raw score out of 40

For instance,

if you got 25 out of 40, do the math: 25 x 7.5 = 187.5

That makes you a Band 3.5 for Reading. Get it?


MUET NEW FORMAT

Tuesday, August 23, 2022

Introduction to MUET Writing

MUET Paper 4: Writing (800/4)

Objective of the MUET Writing test:

-To assess the ability of test takers to communicate in writing in the context of higher education, covering both more formal and less formal writing genres

MUET Writing Test Specification

Max score: 90 marks

Duration: 75 minutes

Number of tasks: 2

Number of questions: 2

Task format

Task 1: Guided Writing (at least 100 words)

Task 2: Extended Writing (at least 250 words)

Language functions

• Giving precise information

• Describing experiences, feelings and events

• Providing advice, reasons and opinions

MUET Writing Test Structure

Task 1: Guided Writing

Stimulus: Letter or email (100−135 words). Each note is no longer than 4 words.

Response: Letter or email of at least 100 words

Time allowed: 25 minutes

Text type: -Email

-Letter (Formal letter / Informal letter)

Task 2: Extended Writing

Stimulus: Statement setting out an idea or a problem in 40−80 words

Response: Essay (discursive, argumentative, or a problem-solution) of at least 250 words

Time allowed: 50 minutes

Text type: -Discursive Essay

-Argumentative Essay

-Problem Solution Essay

Monday, August 22, 2022

Introduction to MUET Reading

Objective of the MUET Reading test:

To assess the ability of test takers to understand reading texts in the higher education context, covering both more formal and less formal text types.

MUET Reading Test Specification

Number of texts: 10

Number of parts: 7

Number of questions: 40

Question types: MCQ

Possible genres

MUET Reading Test Structure

Part 1

Text length: three short texts of the same text type, thematically linked, amounting to a total of 100 to 150 words

Question type: multiple matching (three texts preceded by 4 multiple-matching questions)

Number of questions: 4

Parts 2 and 3

Text length: two texts, each of 300 to 450 words

Question type: MCQs, 3 options

Number of questions: 10

Part 4

Text length: two independent texts based on the same theme (not necessarily of the same text type), amounting to a total of 700 to 800 words

Question type: two MCQs based on Text 1, two MCQs based on Text 2, and two MCQs comparing the two texts (3 options)

Number of questions: 6

Part 5

Text length: one text of 500 to 600 words

Question type: a gapped text with 6 missing sentences (7 options)

Number of questions: 6

Parts 6 and 7

Text length: two texts of 700 to 900 words each

Question type: MCQs, 4 options

Number of questions: 14

Introduction to MUET Speaking

MUET Paper 2: Speaking (800/2)

-To assess the ability of test takers to give an oral presentation of ideas individually

-To interact in small groups in both more formal and less formal academic contexts.

MUET Speaking Test Specification

Duration: Approximately 30 minutes

Number of parts: 2

Part 1: Individual Presentation

Part 2: Group Discussion

MUET Speaking Test Structure

Part 1 (Individual Presentation)

Task Type: Individual presentation based on a written prompt

-2 minutes to prepare

-2 minutes to present

Language Functions

Each task should elicit some of the following functions:

• expressing opinions

• giving reasons

• elaborating

• justifying

• summarising

• concluding

Part 2 (Group Discussion)

Task Type: Group discussion based on a written question and five prompts in the form of a mind map

-3 minutes to prepare

-8 to 12 minutes to discuss

The task should elicit some of the following functions:

• inferring

• evaluating

• initiating

• prompting

• negotiating

• turn-taking

• interrupting

Sunday, August 21, 2022

Introduction to MUET Listening

MUET Listening Test Specification

MUET Listening Test Structure

Saturday, August 20, 2022

What is MUET?

MUET stands for Malaysian University English Test.

The Malaysian Examinations Council (MEC) is responsible for the Malaysian University English Test (MUET).

Objective of MUET

-To measure the English language proficiency of candidates who intend to pursue first degree studies

-To help institutions make better decisions about the readiness of prospective students for academic coursework and their ability to use and understand English in different contexts in higher education.

MUET Test Dates

MUET is administered 3 times a year. The sessions are named:

- MUET Session 1

- MUET Session 2

- MUET Session 3

* The Listening, Reading and Writing tests are taken on the same day, but the Speaking test is taken at times spread over a duration of two weeks.

The Malaysian Examinations Council (MEC) also administers MUET on Demand (MoD).

*All four components are taken on the same day, depending on the number of candidates and subject to the availability of rooms for the Speaking test.

MUET on Demand (MoD) has two types

-Computer Based Test

-Paper Based Test

SPEAKING TEST OPTION (MoD)

-Candidates may now sit for the Speaking Test face-to-face or online.

Note: The online Speaking Test is held one week before the written test.

Reference (MoD Speaking Online)

Video

https://www.mpm.edu.my/en/mod/guide-for-muet-speaking-online-test

https://www.mpm.edu.my/en/mod/zoom-handbook-for-muet-candidates

Speaking Test Date (Online)

https://www.mpm.edu.my/mod/jadual-waktu-mod-speaking-online

MUET TEST COMPONENTS

MUET Aggregated Scores

Note: Candidates are required to attempt all four components to obtain an overall band score. No certificate will be awarded to candidates who fail to attempt all four components.


- Original article

- Open access

- Published: 08 July 2024

Can you spot the bot? Identifying AI-generated writing in college essays

- Tal Waltzer (ORCID: orcid.org/0000-0003-4464-0336)

- Celeste Pilegard

- Gail D. Heyman

International Journal for Educational Integrity, volume 20, Article number: 11 (2024)


The release of ChatGPT in 2022 has generated extensive speculation about how Artificial Intelligence (AI) will impact the capacity of institutions for higher learning to achieve their central missions of promoting learning and certifying knowledge. Our main questions were whether people could identify AI-generated text and whether factors such as expertise or confidence would predict this ability. The present research provides empirical data to inform these speculations through an assessment given to a convenience sample of 140 college instructors and 145 college students (Study 1) as well as to ChatGPT itself (Study 2). The assessment was administered in an online survey and included an AI Identification Test which presented pairs of essays: In each case, one was written by a college student during an in-class exam and the other was generated by ChatGPT. Analyses with binomial tests and linear modeling suggested that the AI Identification Test was challenging: On average, instructors were able to guess which one was written by ChatGPT only 70% of the time (compared to 60% for students and 63% for ChatGPT). Neither experience with ChatGPT nor content expertise improved performance. Even people who were confident in their abilities struggled with the test. ChatGPT responses reflected much more confidence than human participants despite performing just as poorly. ChatGPT responses on an AI Attitude Assessment measure were similar to those reported by instructors and students except that ChatGPT rated several AI uses more favorably and indicated substantially more optimism about the positive educational benefits of AI. The findings highlight challenges for scholars and practitioners to consider as they navigate the integration of AI in education.

Introduction

Artificial intelligence (AI) is becoming ubiquitous in daily life. It has the potential to help solve many of society’s most complex and important problems, such as improving the detection, diagnosis, and treatment of chronic disease (Jiang et al. 2017), and informing public policy regarding climate change (Biswas 2023). However, AI also comes with potential pitfalls, such as threatening widely-held values like fairness and the right to privacy (Borenstein and Howard 2021; Weidinger et al. 2021; Zhuo et al. 2023). Although the specific ways in which the promises and pitfalls of AI will play out remain to be seen, it is clear that AI will change human societies in significant ways.

In late November of 2022, the generative large-language model ChatGPT (GPT-3, Brown et al. 2020) was released to the public. It soon became clear that talk about the consequences of AI was much more than futuristic speculation, and that we are now watching its consequences unfold before our eyes in real time. This is not only because the technology is now easily accessible to the general public, but also because of its advanced capacities, including a sophisticated ability to use context to generate appropriate responses to a wide range of prompts (Devlin et al. 2018; Gilson et al. 2022; Susnjak 2022; Vaswani et al. 2017).

How AI-generated content poses challenges for educational assessment

Because AI technologies such as ChatGPT can flexibly produce human-like content, students may use the technology to complete their academic work for them, and instructors may not be able to tell when students turn in such AI-assisted work. This possibility has led some to argue that we may be seeing the end of essay assignments in education (Mitchell 2022; Stokel-Walker 2022). Even some advocates of AI in the classroom have expressed concerns about its potential to undermine academic integrity (Cotton et al. 2023; Eke 2023). For example, as Kasneci et al. (2023) noted, the technology might “amplify laziness and counteract the learners’ interest to conduct their own investigations and come to their own conclusions or solutions” (p. 5). In response to these concerns, some educational institutions have already tried to ban ChatGPT (Johnson 2023; Rosenzweig-Ziff 2023; Schulten 2023).

These discussions build on extensive scholarship on academic integrity, which is fundamental to ethics in higher education (Bertram Gallant 2011; Bretag 2016; Rettinger and Bertram Gallant 2022). Challenges to academic integrity are not new: Students have long found and used tools to circumvent the work their teachers assign, and research on these behaviors spans nearly a century (Cizek 1999; Hartshorne and May 1928; McCabe et al. 2012). One recent example is contract cheating, in which students pay other people to do their schoolwork, such as writing an essay (Bretag et al. 2019; Curtis and Clare 2017). Although few students (fewer than 5% by most estimates) engage in contract cheating, AI has the potential to make cheating more accessible and affordable, and it raises many new questions about the relationship between technology, academic integrity, and ethics in education (Cotton et al. 2023; Eke 2023; Susnjak 2022).

To date, there is very little empirical evidence to inform debates about the likely impact of ChatGPT on education or about what best practices for its use might look like (Dwivedi et al. 2023; Lo 2023). The primary goal of the present research is to provide such evidence with reference to college-essay writing. One critical question is whether college students can pass off work generated by ChatGPT as their own. If so, large numbers of students may simply paste ChatGPT responses into the essays they are asked to write, without the kind of active engagement with the material that leads to deep learning (Chi and Wylie 2014). This problem is likely to be exacerbated when students brag about doing so and earning high scores, which can encourage other students to follow suit. Indeed, this kind of bragging motivated the present work: the last author learned of a college student who bragged about using ChatGPT to write all of her final papers in her college classes and getting A’s on all of them.

In support of the possibility that instructors may have trouble identifying ChatGPT-generated text, some previous research suggests that ChatGPT is capable of generating college- or graduate-school-level writing. Yeadon et al. (2023) used AI to generate essay responses to a set of prompts from a physics module that was currently in use and asked graders to evaluate the responses. An example prompt they used was: “How did natural philosophers’ understanding of electricity change during the 18th and 19th centuries?” The researchers found that the AI-generated responses earned scores comparable to those of most students taking the module and concluded that current AI large-language models pose “a significant threat to the fidelity of short-form essays as an assessment method in Physics courses.” Terwiesch (2023) found that ChatGPT scored at a B or B- level on the final exam of an Operations Management course in an MBA program, and Katz et al. (2023) found that ChatGPT has the necessary legal knowledge, reading comprehension, and writing ability to pass the Bar exam in nearly all jurisdictions in the United States. This evidence makes it clear that ChatGPT can generate well-written content in response to a wide range of prompts.

Distinguishing AI-generated from human-generated work

What remains unclear is how well instructors can distinguish between ChatGPT-generated writing and writing by college students, given that it is at least possible for ChatGPT-generated writing to be both high in quality and distinctly different from what people generally write (e.g., because it has particular features). To our knowledge, this question has not yet been addressed, but a few prior studies have examined related questions. In the first such study, Gunser et al. (2021) used writing generated by a ChatGPT predecessor, GPT-2 (see Radford et al. 2019). They tested nine participants with a professional background in literature. These participants both generated content (i.e., wrote continuations after receiving the first few lines of unfamiliar poems or stories) and judged how other pieces of writing had been generated. Gunser et al. (2021) found that misclassifications were relatively common. For example, in 18% of cases participants judged AI-assisted writing to be human-generated. This suggests that even AI technology substantially less advanced than ChatGPT can generate writing that is hard to distinguish from human writing.

Köbis and Mossink (2021) also examined participants’ ability to distinguish between poetry written by GPT-2 and poetry written by humans. Their participants were given pairs of poems. They were told that one poem in each pair was written by a human and the other by GPT-2, and they were asked to determine which was which. In one of their studies, the human-written poems were by professional poets. The researchers generated multiple poems in response to prompts, and they found that when the comparison GPT-2 poems were the ones they had selected as the best of the AI-generated set, participants could not distinguish between the GPT-2 and human writing. However, when researchers randomly selected poems generated by GPT-2, participants were better than chance at detecting which ones were generated by the AI.

In a third relevant study, Waltzer et al. (2023a) tested high school teachers and students. All participants were presented with pairs of English essays, such as one on why literature matters. In each case, one essay was written by a high school student and the other was generated by ChatGPT, and participants were asked which essay in each pair had been generated by ChatGPT. Waltzer et al. (2023a) found that teachers were correct only 70% of the time, and that students’ performance was even worse (62%). They also found that well-written essays were harder to distinguish from those generated by ChatGPT than poorly written ones. However, it is unclear to what extent these findings are specific to the high school context. It should also be noted that there were no clear right or wrong answers in the types of essays used in Waltzer et al. (2023a), so the results may not generalize to essays that ask for factual information based on specific class content.

AI detection skills, attitudes, and perceptions

If college instructors find it challenging to distinguish between writing generated by ChatGPT and writing by college students, the question arises of what factors might be correlated with the ability to make this discrimination. One possible correlate is experience with ChatGPT, which may allow people to recognize patterns in the writing style it generates, such as a tendency to formally summarize previous content. Content-relevant knowledge is another possible predictor. Individuals with such knowledge will presumably be better at spotting errors in answers; moreover, instructors may know that AI tools are likely to get the content of introductory-level college courses correct, and may therefore assume that essays containing errors were written by students.

Another possible predictor is confidence in one’s ability to discriminate on the task or on particular items of the task (Erickson and Heit 2015; Fischer and Budescu 2005; Wixted and Wells 2017). In other words, are AI discriminations made with a high degree of confidence more likely to be accurate than low-confidence discriminations? In some cases, confidence judgments are a good predictor of accuracy, such as on many perceptual decision tasks (e.g., detecting contrast between light and dark bars; Fleming et al. 2010). However, in other cases correlations between confidence and accuracy are small or non-existent, such as on some deductive reasoning tasks (e.g., Shynkaruk and Thompson 2006). Links to confidence can also depend on how confidence is measured: Gigerenzer et al. (1991) found overconfidence on individual items, but good calibration when participants were asked, after seeing many items, how many they had gotten right.

Beyond gathering empirical data on how well instructors can distinguish ChatGPT-generated writing from college student writing, it is important to examine how college instructors and students perceive AI in education, given that such attitudes may affect behavior (Al Darayseh 2023; Chocarro et al. 2023; Joo et al. 2018; Tlili et al. 2023). For example, instructors may try to develop precautions against AI cheating only if they view it as a significant concern. Similarly, students’ confusion about what counts as cheating can play an important role in their cheating decisions (Waltzer and Dahl 2023; Waltzer et al. 2023b).

The present research

In the present research, we developed an assessment that we gave to college instructors and students (Study 1) and to ChatGPT itself (Study 2). The central feature of the assessment was an AI Identification Test, which included 6 pairs of essays. As indicated in the instructions, one essay in each pair was generated by ChatGPT and the other was written by a college student. The task was to determine which essay was written by the chatbot. The essay pairs were drawn from larger pools of essays of each type.

The student essays were written by students as part of a graded exam in a psychology class, and the ChatGPT essays were generated in response to the same essay prompts. We were interested in overall performance and in potential correlates of performance. The performance of college instructors was of particular interest because they are typically responsible for grading, but the performance of students and of ChatGPT was also of interest for comparison. ChatGPT was additionally of interest given anecdotal evidence that college instructors are asking ChatGPT to tell them whether pieces of work were AI-generated. For example, the academic integrity office at one major university sent out an announcement asking instructors not to report students for cheating if their evidence was based solely on using ChatGPT to detect AI-generated writing (UCSD Academic Integrity Office 2023).

We also administered an AI Attitude Assessment (Waltzer et al. 2023a), which included questions about overall levels of optimism and pessimism about the use of AI in education and about the appropriateness of specific uses of AI in academic settings, such as a student submitting an edited version of a ChatGPT-generated essay for a writing assignment.

Study 1: College instructors and students

Participants were given an online assessment that included an AI Identification Test, an AI Attitude Assessment, and some demographic questions. The AI Identification Test was developed for the present research, as described below (see Materials and Procedure). The test presented six pairs of essays, with instructions to identify which essay in each pair was written by ChatGPT. Participants also rated their confidence before the task and after responding to each item, and reported at the end how many items they thought they had gotten right. The AI Attitude Assessment was drawn from Waltzer et al. (2023a) to assess participants’ views of the use of AI in education.

Participants